Some examples of technology timelines

post by NunoSempere (Radamantis) · 2020-03-27T18:13:19.834Z

"Weather forecasts were comedy material. Now they're just the way things are. I can't figure out when the change happened."

Introduction

I briefly look into the timelines of several technologies, with the hope of becoming marginally less confused about potential A(G)I developments.

Having concrete examples makes some scenarios crisper:

- (AGI will be like perpetual motion machines: proven to be impossible)

- AGI will be like flying cars: possible in principle but never in practice.

- AI will overall be like contact lenses, weather forecasts or OCR; developed in public, and constantly getting better, until one day they have already become extremely good.

- AI will overall be like speech recognition or machine translation: Constant improvement for a long time (like contact lenses, weather forecasts or OCR), except that the difference between 55% and 75% is just different varieties of comedy material, and the difference between 75% and 95% is between "not being usable" and "being everywhere", and the change feels extremely sudden.

- AGI will be like the iPhone: Developed in secret, and so much better than previous capabilities that it will blow people away. Or like nuclear bombs: Developed in secret, and so much better than previous capabilities that it will blow cities away.

- (AGI development will be like some of the above, but faster)

- (AGI development will take an altogether different trajectory)

I did not use any particular method to come up with the technologies to look at, but I did classify them afterwards as:

- After the event horizon: Already in mass production or distribution.

- In the event horizon: Technologies which are seeing some progress right now, but which aren't mainstream; they may only exist as toys for the very rich.

- Before the event horizon: Mentioned in stories by Jules Verne, Heinlein, Asimov, etc., but not yet existing. Small demonstrations might exist in laboratory settings or by the DIY community.

I then give a summary table, and some highlights on weather forecasting and nuclear proliferation which I found particularly interesting.

After the event horizon

Weather forecasting

A brief summary: Antiquity's weather forecasting methods lacked predictive power in the short term, though they could capture, e.g., seasonal rains. Advances were made in understanding meteorological phenomena, like rainbows, and in estimating the size of the stratosphere, but not much in the way of prediction. With the advent of the telegraph, information about storms could be relayed faster than the storms themselves traveled, and this was the beginning of actual prediction, which was, at first, very spotty. Weather records had existed before, but from then on they seem to have been taken more seriously, and weather satellites were later a great boon to forecasting. With time, mathematical advances and Moore's law meant that weather forecasting services just became better and better.

Overall, I get the impression of a primitive scholarship existing before the advent of the telegraph, followed by continuous improvement in weather forecasting afterwards, such that it's really difficult to know when weather forecasting "became good".

A brief timeline:

- Invention of the electric telegraph: Information could travel faster than the wind.

- 1854 – The French astronomer Leverrier showed that a storm in the Black Sea could be followed across Europe and would have been predictable if the telegraph had been used. A service of storm forecasts was established a year later by the Paris Observatory.

- The first daily weather forecasts were published in The Times in 1861; weather forecasting was spurred by the British Navy after losing ships and men to the Great storm of 1859.

- 1900s. Advances in numerical methods. Better models of cyclones (1919) and hurricanes (1921); global warming from carbon emissions is first postulated as a hypothesis (1938); hurricanes are caught on radar (1944); first correct tornado prediction (1948). Weather observing stations now abound.

- D-Day (Allied invasion of Normandy during WW2) postponed because of a weather forecast.

- 1950s and onwards. The US starts a weather satellite program. Chaos theory is discovered (1961). Numerical methods are implemented in computers, but these are not yet fast enough. From there on, further theoretical advances and Moore's law slowly make automated weather forecasting more feasible, as well as advance warning of hurricanes and other great storms.

Sources:

- Link to Wikipedia: Timeline. Exhaustive source.

- Link to Wikipedia: Weather forecasting

- The birth of the weather forecast - BBC. Somewhat misleading/incomplete; leaves out non-British initiatives and accomplishments

- Why Weather Forecasting Keeps Getting Better - The New Yorker. Also incomplete.

Nuclear proliferation

What happens when governments ban or restrict certain kinds of technological development? What happens when a certain kind of technological development is banned or restricted in one country but not in other countries where technological development sees heavy investment? Source

A brief summary: Of the 34 countries which have attempted to obtain a nuclear bomb, 9 have succeeded, whereas 25 have failed, for a base rate of ~26% (9/34). Of the latter 25, there is uncertainty as to the history of 6 (Iran, Algeria, Burma/Myanmar, Saudi Arabia, Canada and Spain). Excluding those 6, those who succeeded did so, on average, in 14 years, with a standard deviation of 13 years. Those who failed took, on average, 20 years to do so, with a standard deviation of 11 years. Three summary tables are available here.
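As a quick sanity check on the 9-of-34 figure, here is a minimal Python sketch. It recomputes the base rate, shows how it moves if the 6 disputed cases are dropped from the denominator, and puts a rough uncertainty band around it. The function and constant names are mine, and the Wilson score interval is my own addition for illustration (it is not part of the analysis in this post, and it assumes the 34 attempts can be treated as independent draws, which is a strong assumption).

```python
import math

# Counts taken from the summary above.
ATTEMPTED = 34   # countries that tried to obtain a bomb
SUCCEEDED = 9    # countries that succeeded
UNCERTAIN = 6    # failed attempts whose history is disputed


def base_rate(successes: int, attempts: int) -> float:
    """Plain success frequency."""
    return successes / attempts


def wilson_interval(successes: int, attempts: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion.

    z = 1.96 corresponds to roughly 95% coverage. This is an
    illustrative assumption, not something the post computes.
    """
    p = successes / attempts
    denom = 1 + z**2 / attempts
    centre = (p + z**2 / (2 * attempts)) / denom
    half_width = (
        z * math.sqrt(p * (1 - p) / attempts + z**2 / (4 * attempts**2)) / denom
    )
    return centre - half_width, centre + half_width


all_cases = base_rate(SUCCEEDED, ATTEMPTED)
clear_cases = base_rate(SUCCEEDED, ATTEMPTED - UNCERTAIN)
low, high = wilson_interval(SUCCEEDED, ATTEMPTED)

print(f"All 34 attempts:           {all_cases:.1%}")
print(f"Excluding 6 disputed ones: {clear_cases:.1%}")
print(f"Rough 95% band (Wilson):   {low:.1%} to {high:.1%}")
```

With so few cases the band is wide, so the base rate is better read as "somewhere between one in seven and two in five" than as a precise number.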

Caveats apply. South Africa willingly gave up nuclear weapons, and many other countries have judged a nuclear program to not be in their interests, after all. Further, many other countries have access to nuclear bombs or might be able to take possession of them in the event of war, per NATO's Nuclear sharing agreement. Additionally, other countries, such as Japan, Germany or South Korea, would have the industrial and technological capabilities to produce nuclear weapons if they were willing to.

Overall, although of course the discoveries of, e.g., the Curies were foundational, I get the impression that the discovery of the possibility of nuclear fission, followed by "let's reveal this huge infohazard to our politicians" and the beginning of a nuclear program in the US was relatively rapid.

I also get the impression of very large variation in how badly countries wanted nuclear weapons. For example, Israel or North Korea actually got nuclear weapons, whereas Switzerland or Yugoslavia were vaguely gesturing in the direction of attempting it; the Wikipedia page on Switzerland and weapons of mass destruction is almost comical in the number of bureaucratic steps and committees and reports, recommendations and statements, which never get anywhere.

A brief timeline:

- 1898: Pierre and Marie Curie commence the study of radioactivity

- ...

- 1934: Leó Szilárd patents the idea of a nuclear chain reaction via neutrons.

- 1938: First fission reaction.

- 1939: The idea of using fission as a weapon is floating around

- 1942: The Manhattan Project starts.

- 1945: First nuclear bomb.

- 1952: First hydrogen bomb.

Cryptocurrencies

A brief summary: Wikipedia's history of cryptocurrencies doesn't mention any cypherpunk influences, and mentions a 1983 ecash antecedent. I have the recollection of PayPal trying to solve the double-spending problem but failing, but couldn't find a source. In any case, by 2009 the double-spending problem, which had previously been considered pretty unsolvable, was solved by Bitcoin. Ethereum (proposed in 2013, released in 2015) and Ethereum 2.0 (2021?) were improvements, but haven't seen widespread adoption yet. Other alt-coins seem basically irrelevant to me.

A brief timeline:

- 1983: The idea exists within the cypherpunk community, but the double-spending problem can't be solved, and the World Wide Web doesn't exist yet.

- 2009: Bitcoin is released; the double-spending problem is solved.

- 2015: Ethereum is released

- 2020-2021: Ethereum 2.0 is scheduled to be released.

Mobile phones

A brief summary: "Mobile telephony" was telephones installed on trains, and then on cars. Because the idea of mobile phones was interesting, people kept working on it, and we went from a 1kg beast (1973) to the first iPhone (2007) in under thirty-five years. Before that, there was a brief period where Nokia phones all looked the same.

A brief timeline:

- 1918: Telephones in trains

- 1946: Telephones in cars.

- 1950s-1960s: Interesting advances are made in the Soviet empire, but these don't get anywhere. Bell Labs is working on the topic.

- 1973: First handheld phone. Weight: 1kg

- 1980s: The lithium-ion battery, invented by John Goodenough.

- 1983: "the DynaTAC 8000X mobile phone launched on the first US 1G network by Ameritech. It cost $100M to develop and took over a decade to reach the market. The phone had a talk time of just thirty minutes and took ten hours to charge. Consumer demand was strong despite the battery life, weight, and low talk time, and waiting lists were in the thousands"

- 1989: Motorola Microtac. A phone that doesn't weigh a ton.

- 1992/1996/1998: Nokia 1011; first brick recognizable as a Nokia phone. Mass-produced. / Nokia 8110; the mobile phone used in The Matrix. / Nokia 7110; a mobile with a browser. In the next years, mobile phones become lighter, and features are added one by one: GPS, MP3 music, storage increases, calendars, radio, Bluetooth, colour screens, cameras, really unaesthetic touchscreens, better batteries, minigames, etc.

- 2007: The iPhone is released. Nokia will die but doesn't know it yet. Motorola/Sony had some sleek designs, but the iPhone seems to have been better than any other competitor along many dimensions.

- Onwards: Moore's law continues and phones look sleeker after the iPhone. Cameras and internet get better, and so on.

Link to Wikipedia

List of models

Optical Character Recognition

A brief summary: I had thought that OCR had only gotten good enough in the 2010s, but apparently it was already pretty good by the mid-1970s, and was initially used as an aid for blind people, rather than for convenience. Recognizing different fonts was a problem until it wasn't anymore.

A brief timeline:

- 1870: First steps. The first OCR inventions were generally conceived as an aid for the blind.

- 1931: "Israeli physicist and inventor Emanuel Goldberg is granted a patent for his "Statistical machine" (US Patent 1838389), which was later acquired by IBM. It was described as capable of reading characters and converting them into standard telegraph code". Like many inventions of the time, it is unclear to me how good they were.

- 1962: "Using the Optacon, Candy graduated from Stanford and received a PhD". "It opens up a whole new world to blind people. They aren't restricted anymore to reading material set in braille."

- 1974: American inventor Ray Kurzweil creates Kurzweil Computer Products Inc., which develops the first omni-font OCR software, able to recognize text printed in virtually any font. Kurzweil goes on the Today Show, sells a machine to Stevie Wonder.

- 1980s: Passport scanner, price tag scanner.

- 2008: Adobe Acrobat starts including OCR for any PDF document

- 2011: Google Ngram. Charts historic word frequency.

Link to Wikipedia

Kurzweil OCR

Speech recognition

A brief summary: Initial progress was slow; a system could understand 16 spoken words (1962), then a thousand words (1976). Hidden Markov models (1980s) proved to be an important theoretical advance, and commercial systems soon existed (1990s), but they were different degrees of clunky. Cortana, Siri, Echo and Google Voice Search seem to be the first systems in which voice recognition was actually good.

A brief timeline:

- 1877: Thomas Edison's phonograph.

- 1952: A team at Bell Labs designs the Audrey, a machine capable of understanding spoken digits

- 1962: Progress is slow. IBM demonstrates the Shoebox, a machine that can understand up to 16 spoken words in English, at the 1962 Seattle World's Fair.

- 1976: DARPA funds five years of speech recognition research with the goal of ending up with a machine capable of understanding a minimum of 1,000 words. The program led to the creation of the Harpy by Carnegie Mellon, a machine capable of understanding 1,011 words

- 1980s: Hidden Markov models!

- 1990: Dragon Dictate. Used discrete speech where the user must pause between speaking each word.

- 1996: IBM launches the MedSpeak, the first commercial product capable of recognizing continuous speech.

- 2006: The National Security Agency begins using speech recognition to isolate keywords when analyzing recorded conversations.

- 2008: Google announces Voice Search.

- 2011: Apple announces Siri.

- 2014: Microsoft announces Cortana / Amazon announces the Echo.

Link to Wikipedia

A short history of speech recognition

Machine translation

"The Georgetown experiment in 1954 involved fully automatic translation of more than sixty Russian sentences into English. The authors claimed that within three or five years, machine translation would be a solved problem".

A brief summary: Since the 50s, various statistical, almost hand-programmed methods were tried, and they didn't get qualitatively better, though they could eventually translate e.g. weather forecasts. Though in my youth Google Translate was sometimes a laughing stock, with the move towards neural network methods, it has become exceedingly good in the last years.

Socially, although translators are in denial and still maintain that their four years of education are necessary, and maintain a monopoly through bureaucratic certification, in my experience it's easy to automate the localization of a commercial product and then just edit the output.

A brief timeline:

- 1924: Concept is proposed.

- 1954: "The Georgetown-IBM experiment, held at the IBM head office in New York City in the United States, offers the first public demonstration of machine translation. The system itself, however, is no more than what today would be called a "toy" system, having just 250 words and translating just 49 carefully selected Russian sentences into English — mainly in the field of chemistry. Nevertheless, it encouraged the view that machine translation was imminent — and in particular stimulates the financing of the research, not just in the US but worldwide". Hype ends in 1966.

- 1977: The METEO System, developed at the Université de Montréal, is installed in Canada to translate weather forecasts from English to French, and is translating close to 80,000 words per day or 30 million words per year.

- 1997: babelfish.altavista.com is launched.

- Google Translate is launched; it uses statistical methods.

- Onwards. Google Translate and other tools get better. They are helped by the gigantic corpus produced by the European Union and the United Nations, which release all of their documents in each of their official languages. This is a boon for modern machine learning methods, which require large datasets for training; in 2016, Google Translate moves to an engine based on neural networks.

Contact lenses

A brief summary:

Leonardo da Vinci speculated on it in 1508, and some heroic glassmakers experimented on their own eyes. I'll take 1888 to be the starting date.

He [Fick] experimented with fitting the lenses initially on rabbits, then on himself, and lastly on a small group of volunteers, publishing his work, "Contactbrille", in the March 1888 edition of Archiv für Augenheilkunde. Large and unwieldy, Fick's lens could be worn only for a couple of hours at a time. August Müller of Kiel, Germany, corrected his own severe myopia with a more convenient blown-glass scleral contact lens of his own manufacture in 1888.

Many distinct improvements followed during the next century, each making contact lenses less terrible. Today, they're pretty good. Again, there wasn't any point at which contact lenses had "become good".

A brief timeline:

- 1508: Codex of the Eye. Leonardo da Vinci introduces a related idea.

- 1801: Thomas Young heroically experiments with wax lenses.

- 1888: First glass lenses. German ophthalmologist experiments on rabbits, then on himself, then on volunteers.

- 1939: First plastic contact lens. Polymethyl methacrylate (PMMA) and other plastics will be used from now onwards.

- 1949: Corneal lenses; much smaller than the original scleral lenses, as they sat only on the cornea rather than across all of the visible ocular surface, and could be worn up to 16 hours a day

- 1964: Lyndon Johnson became the first President in the history of the United States to appear in public wearing contact lenses

- 1970s-1980s: Oxygen-permeable lens materials are developed, chiefly by chemist Norman Gaylord. Soft lenses are also developed.

- More developments are made so that lenses become more comfortable and more disposable. From this point onwards, it's difficult for me to differentiate between lens companies wanting to hype up their marginal improvements and new significant discoveries being made.

In the event horizon

Technologies which are seeing some progress right now, but which aren't mainstream:

- Virtual Reality. First (nondigital) prototype in 1962, first digital prototype in 1968. Moderate though limited success as of yet, though VR images made a deep impression on me and may have contributed to me becoming vegetarian.

- Text generation. ELIZA in 1966. 2019's GPT-2 is impressive but not perfect.

- Solar sails. First mention in 1864-1899. Current experiments, like IKAROS (2010), provide proof of concept. Anders Sandberg has a bunch of cool ideas about space colonization, some of which include solar sails.

- Autonomous vehicles. "In 1925, Houdina Radio Control demonstrated the radio-controlled "American Wonder" on New York City streets, traveling up Broadway and down Fifth Avenue through the thick of a traffic jam. The American Wonder was a 1926 Chandler that was equipped with a transmitting antenna on the tonneau and was operated by a person in another car that followed it and sent out radio impulses which were caught by the transmitting antenna." It is my impression that self-driving cars are very decent right now, if perhaps not mainstream.

- Space tourism. Precursors were the Soviet Union's INTERKOSMOS flights, starting as early as 1978. Dennis Tito was the first space tourist in 2001, for $20 million; SpaceX hopes to make it cheaper in the coming decades.

- Prediction systems. Systems exist such as PredictionBook, Betfair, Metaculus, PredictIt, Augur, Foretold, the Good Judgment Project, etc., but they haven't found widespread adoption yet.

Before the event horizon

For the sake of completeness, I came up with some technologies which are "before the event horizon". These are technologies mentioned in stories by Jules Verne, Heinlein, Asimov, etc., but which haven't been implemented yet. Small demonstrations might exist in laboratory settings or by the DIY community.

- Flying cars. Though prototypes have existed since at least 1926, fuel efficiency, bureaucratic regulations, noise concerns, etc., have prevented flying cars from being successful. Companies exist which will sell you a flying car if you have $100,000 and a pilot's license, though.

- Teleportation. "Perhaps the earliest science fiction story to depict human beings achieving the ability of teleportation in science fiction is Edward Page Mitchell's 1877 story The Man Without a Body, which details the efforts of a scientist who discovers a method to disassemble a cat's atoms, transmit them over a telegraph wire, and then reassemble them. When he tries this on himself...". "An actual teleportation of matter has never been realized by modern science (which is based entirely on mechanistic methods). It is questionable if it can ever be achieved because any transfer of matter from one point to another without traversing the physical space between them violates Newton's laws, a cornerstone of physics." No success as of yet.

- Space colonization. "The first known work on space colonization was The Brick Moon, a work of fiction published in 1869 by Edward Everett Hale, about an inhabited artificial satellite". No rendezvous at L5; no success as of yet.

- Automated data analysis. Unclear origins. As of 2020, IBM's Watson is clunky.

Other technological processes I only briefly looked into

- Alternating current.

- Mass tourism.

- Video conferences.

- Algorithms that play checkers/chess/go.

- Sound quality / Image quality / Video quality / Film quality.

- Improvements in vehicles.

- Air conditioning.

It also seems to me that you could get a prior on the time it takes to develop a technology using the following Wikipedia pages: Category: History of Technology, Category: List of timelines; Category: Technology timelines.

Previous work:

- Algorithmic Progress

- Continuity of progress; some discussion of the last batch in the EA Forum

- Kurzweil, The Singularity is Near

- Scott Alexander, 1960: The Year The Singularity Was Cancelled

- Some other examples of discontinuous progress

Conclusions

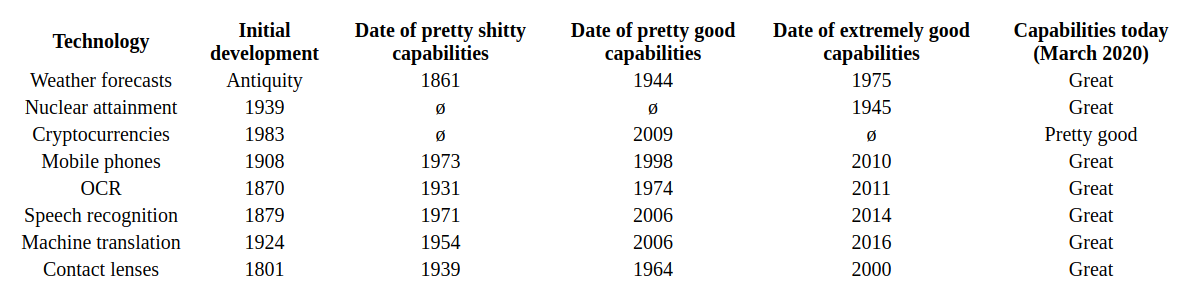

There are no sweeping conclusions to be had. What follows is a summary table; below it are some quotes on the history of weather forecasting and nuclear proliferation which I found particularly interesting.

Highlights from the history of weather forecasting

There is some evidence that Democritus predicted changes in the weather, and that he used this ability to convince people that he could predict other future events

Several years after Aristotle's book (350 BC), his pupil Theophrastus puts together a book on weather forecasting called The Book of Signs. Various indicators such as solar and lunar halos formed by high clouds are presented as ways to forecast the weather. The combined works of Aristotle and Theophrastus have such authority they become the main influence in the study of clouds, weather and weather forecasting for nearly 2000 years

During his second voyage Christopher Columbus experiences a tropical cyclone in the Atlantic Ocean, which leads to the first written European account of a hurricane

It was not until the invention of the electric telegraph in 1835 that the modern age of weather forecasting began. Before that, the fastest that distant weather reports could travel was around 160 kilometres per day (100 mi/d), but was more typically 60–120 kilometres per day (40–75 mi/day) (whether by land or by sea). By the late 1840s, the telegraph allowed reports of weather conditions from a wide area to be received almost instantaneously, allowing forecasts to be made from knowledge of weather conditions further upwind.

But calculated by hand on threadbare data, the forecasts were often awry. In April 1862 the newspapers reported: "Admiral FitzRoy's weather prophecies in the Times have been creating considerable amusement during these recent April days, as a set off to the drenchings we've had to endure. April has been playing with him roughly, to show that she at least can flout the calculations of science, whatever the other months might do."

It was not until the 20th century that advances in the understanding of atmospheric physics led to the foundation of modern numerical weather prediction. In 1922, English scientist Lewis Fry Richardson published "Weather Prediction By Numerical Process", after finding notes and derivations he worked on as an ambulance driver in World War I. He described therein how small terms in the prognostic fluid dynamics equations governing atmospheric flow could be neglected, and a finite differencing scheme in time and space could be devised, to allow numerical prediction solutions to be found.

Richardson envisioned a large auditorium of thousands of people performing the calculations and passing them to others. However, the sheer number of calculations required was too large to be completed without the use of computers, and the size of the grid and time steps led to unrealistic results in deepening systems. It was later found, through numerical analysis, that this was due to numerical instability. The first computerised weather forecast was performed by a team composed of American meteorologists Jule Charney, Philip Thompson, Larry Gates, and Norwegian meteorologist Ragnar Fjørtoft, applied mathematician John von Neumann, and ENIAC programmer Klara Dan von Neumann. Practical use of numerical weather prediction began in 1955, spurred by the development of programmable electronic computers.

Sadly, Richardson’s forecast factory never came to pass. But twenty-eight years later, in 1950, the first modern electrical computer, ENIAC, made use of his methods and generated a weather forecast. The Richardsonian method proved to be remarkably accurate. The only downside: the twenty-four-hour forecast took about twenty-four hours to produce. The math, even when aided by an electronic brain, could only just keep pace with the weather.

Highlights from the history of nuclear proliferation

The webpage of the Institute for Science and International Security has this compendium on the history of nuclear capabilities. Although the organization as such remains influential, the resource above is annoying to navigate, and may contain factual inaccuracies (e.g., Spain's nuclear ambitions both during the dictatorship and during the democratic period). Improving that online resource might be a small project for an aspiring EA.

On the origins:

In 1934, Tohoku University professor Hikosaka Tadayoshi's "atomic physics theory" was released. Hikosaka pointed out the huge energy contained by nuclei and the possibility that both nuclear power generation and weapons could be created. In December 1938, the German chemists Otto Hahn and Fritz Strassmann sent a manuscript to Naturwissenschaften reporting that they had detected the element barium after bombarding uranium with neutrons; simultaneously, they communicated these results to Lise Meitner. Meitner, and her nephew Otto Robert Frisch, correctly interpreted these results as being nuclear fission and Frisch confirmed this experimentally on 13 January 1939. Physicists around the world immediately realized that chain reactions could be produced and notified their governments of the possibility of developing nuclear weapons.

Modern country capabilities:

Like other countries of its size and wealth, Germany has the skills and resources to create its own nuclear weapons quite quickly if desired.

South Korea has the raw materials and equipment to produce a nuclear weapon but has not opted to make one.

Today, Japan's nuclear energy infrastructure makes it capable of constructing nuclear weapons at will. The de-militarization of Japan and the protection of the United States' nuclear umbrella have led to a strong policy of non-weaponization of nuclear technology, but in the face of nuclear weapons testing by North Korea, some politicians and former military officials in Japan are calling for a reversal of this policy.

In general, the projects of Switzerland and Yugoslavia were characterized by bureaucratic fuckaroundism, and the Wikipedia pages on their nuclear efforts are actually amusing because of the abundance of committees which never got anywhere:

The secret Study Commission for the Possible Acquisition of Own Nuclear Arms was instituted by Chief of General Staff Louis de Montmollin with a meeting on 29 March 1957. The aim of the commission was to give the Swiss Federal Council an orientation towards "the possibility of the acquisition of nuclear arms in Switzerland." The recommendations of the commission were ultimately favorable.

The authors complained that the weapons effort had been hindered by Yugoslav bureaucracy and the concealment from the scientific leadership of key information regarding the organization of research efforts. It offers a number of specific illustrations about how this policy of concealment, “immeasurably sharper than that of any country, except in the Soviet bloc,” might hamper the timely purchase of 10 tons of heavy water from Norway.

On the other hand, the heterogeneity is worrying. Some countries (Israel) displayed ruthlessness and competency, whereas others got dragged down by bureaucracy. I suspect that this heterogeneity would also be the case for new technologies.

Cooperation between small countries:

Some scholars believe the Romanian military nuclear program to have started in 1984, however, others have found evidence that the Romanian leadership may have been pursuing nuclear hedger status earlier than this, in 1967 (see, for example, the statements made toward Israel, paired with the minutes of the conversation between the Romanian and North Korean dictators, where Ceauşescu said, "if we wish to build an atomic bomb, we should collaborate in this area as well")

The same report revealed that Brazil's military regime secretly exported eight tons of uranium to Iraq in 1981

In accord with three presidential decrees of 1960, 1962 and 1963, Argentina supplied about 90 tons of unsafeguarded yellowcake (uranium oxide) to Israel to fuel the Dimona reactor, reportedly creating the fissile material for Israel's first nuclear weapons.

Syria was accused of pursuing a military nuclear program with a reported nuclear facility in a desert Syrian region of Deir ez-Zor. The reactor's components were believed to have been designed and manufactured in North Korea, with the reactor's striking similarity in shape and size to the North Korean Yongbyon Nuclear Scientific Research Center. The nuclear reactor was still under construction.

Veto powers:

These five states are known to have detonated a nuclear explosive before 1 January 1967 and are thus nuclear weapons states under the Treaty on the Non-Proliferation of Nuclear Weapons. They also happen to be the UN Security Council's permanent members with veto power on UNSC resolutions.

China:

China became the first nation to propose and pledge NFU policy when it first gained nuclear capabilities in 1964, stating "not to be the first to use nuclear weapons at any time or under any circumstances." During the Cold War, China decided to keep the size of its nuclear arsenal small, rather than compete in an international arms race with the United States and the Soviet Union. China has repeatedly reaffirmed its no-first-use policy in recent years, doing so in 2005, 2008, 2009 and again in 2011. China has also consistently called on the United States to adopt a no-first-use policy, to reach an NFU agreement bilaterally with China, and to conclude an NFU agreement among the five nuclear weapon states. The United States has repeatedly refused these calls.
