The map of quantum (big world) immortality

post by turchin · 2016-01-25T10:21:04.857Z · LW · GW · Legacy · 88 comments

The main idea of quantum immortality (the name "big world immortality" may be better) is that if I die, I will continue to exist in another branch of the world, where I do not die in the same situation.

This map is not intended to cover all known topics about QI, so I need to clarify my position.

I think that QI may work, but I put it as Plan D for achieving immortality, after life extension (A), cryonics (B) and digital immortality (C). All plans are here.

I also think that it may be proved experimentally: if I turn 120 years old, or am the only survivor of a plane crash, I will assign a higher probability to it. (But you should not try to prove it earlier, as you will get this information for free in the next 100 years.)

There is also nothing quantum in quantum immortality, because it may work in a very large non-quantum world, if that world is large enough to contain my copies. It was also discussed here: Shock level 5: Big worlds and modal realism.

There is also nothing good in it, because in most of the branches where I survive I will be very old and ill. But we could make QI work for us if we combine it with cryonics. Just sign up for it, or intend to sign up, and most likely you will find yourself in a surviving branch where you are resurrected after cryostasis. (The same is true for digital immortality: record more about yourself and a future FAI will resurrect you, and QI raises the chances of it.)

I do not buy the "measure" objection. It says that one should care only about one's "measure of existence", that is, the number of all branches where one exists, and that if this number diminishes, one is almost dead. But take the example of a book: it still exists as long as at least one copy of it exists. We also can't measure the measure, because it is not clear how to count branches in an infinite universe.

I also don't buy the ethical objection that QI may lead an unstable person to suicide and so we should claim that QI is false. I think that a rational understanding of QI is that it either does not work or will result in severe injuries. The idea of the soul's existence may create a much stronger temptation to suicide, as it at least promises another, better world, but I have never heard that it was hidden because it may result in suicide. Religions try to stop suicide (which is logical given their premises) by adding an additional rule against it. So QI itself does not promote suicide, and personal instability may be the main cause of suicidal ideation.

I also think that there is nothing extraordinary in the QI idea, and that it adds up to normality (in our immediate surroundings). We all already witness examples of similar ideas: the anthropic principle, and the fact that we find ourselves on a habitable planet while most planets are dead; and the fact that I was born, but not my billions of potential siblings. Survivorship bias could explain finding oneself in very improbable conditions, and QI is the same idea projected into the future.

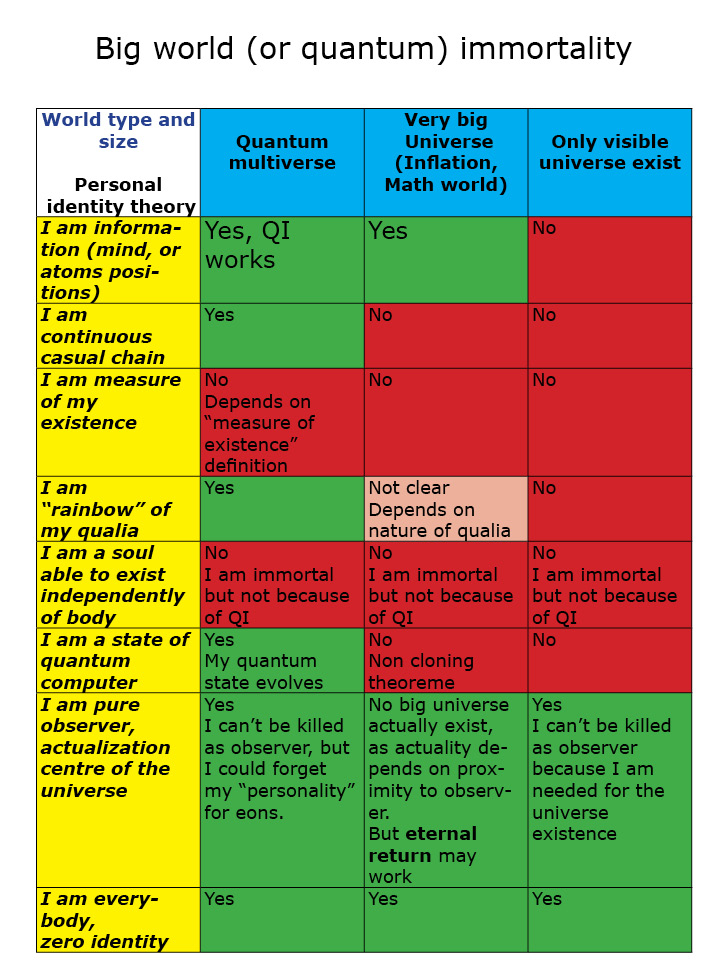

The possibility of big world immortality depends on the size of the world and on the nature of “I”, that is, on the solution to the personal identity problem. This table shows how big world immortality depends on these two variables. YES means that big world immortality will work; NO means that it will not work.

Both variables are currently unknown to us. Simply speaking, QI will not work if the (actually existing) world is small or if personal identity is very fragile.

My a priori position is that the quantum multiverse and a very big universe are both true, and that information is all you need for personal identity. This position is the most scientific one, as it agrees with current common knowledge about the Universe and the mind. If I could bet on theories, I would put 50 per cent on it, and 50 per cent on all other combinations of theories.

Even in this case QI may not work. It may work technically but become unmeasurable, if my mind suffers so much damage that it is unable to understand that it works. In this case it will be completely useless, in the same way that the survival of the atoms of which my body is composed is meaningless. But this may be countered if we say that only those copies of me that remember that they are me should be counted (and such copies will surely exist).

From a practical point of view QI may help if everything else fails, but we can't count on it, as it is completely unpredictable. QI should be considered only in the context of other world-changing ideas, that is, the simulation argument, the doomsday argument and future strong AI.

88 comments

Comments sorted by top scores.

comment by Diadem · 2016-01-28T15:44:47.161Z · LW(p) · GW(p)

The problem with Quantum Immortality is that it is a pretty horrible scenario. That's not an argument against it being true of course, but it's an argument for hoping it's not true.

Let's assume QI is true. If I walk under a bus tomorrow, I won't experience universes where I die, so I'll only experience miraculously surviving the accident. That sounds good.

But here's where the nightmare starts. Dying is not a binary process. There'll be many more universes where I survive with serious injuries than universes where I survive without injury. Eventually I'll grow old. There'll be some universes where by random quantum fluctuations that miraculously never happens, but in the overwhelming majority of them I'll grow old and weak. And then I won't die. In fact I wouldn't even be able to die if I wanted to. I could decide to commit suicide, but I'll only ever experience those universes where for some reason I chose not to go through with it (or something prevented me from going through with it).

It's the ultimate horror scenario. Forced immortality, but without youth or health.

If QI is true having kids would be the ultimate crime. If QI is true the only ethical course of action would be to pour all humanity's resources into developing an ASI and program it to sterilize the universe. That won't end the nightmare, there'll always be universes where we fail to build such an ASI, but at least it will reduce the measure of suffering.

Replies from: MakoYass, turchin, qmotus↑ comment by mako yass (MakoYass) · 2016-02-13T23:04:29.882Z · LW(p) · GW(p)

We believe we may have found a solution to degenerate QI, via simulationism under acausal trade. The basic gist of it is that continuations of the mind-pattern after naturally lethal events could be overwhelmingly more frequent under benevolent simulationism than continuations arising from improbable quantum physical outcomes, and, if many of the agents in the multiverse operate under an introspective decision theory, pact-simulist continuations already are overwhelmingly more frequent.

↑ comment by turchin · 2016-01-28T22:11:06.531Z · LW(p) · GW(p)

In its natural form QI is bad, but if we add cryonics, they will help each other.

If you go under the bus you now have three outcomes: you die, you are cryopreserved and later resurrected, or you are badly injured for eternity. QI prevents the first one.

So you will be either cryopreserved or badly injured, and survive for eternity. While both things have a very small probability, cryopreservation may outweigh long-term injury. And it certainly outweighs the chance that you will live until 120 years old.

So if you do not want to suffer for eternity, you need to sign up for cryonics ))))

If we go deeper, we may be surprised to find ourselves in a world that keeps us from the very improbable life of an undying old man, because we live in a very short period of human history in which cryonics is known.

It may be explained (speculatively) that if you are randomly chosen from all possible immortals, you will find yourself in the class with highest measure.

It means that you should expect not degradation but ascension, maybe by merging with strong AI. It may sound wild, but I was surprised to find that I was not the only one who came to this conclusion: when I was at MIRI last fall, one guy had the same ideas (I forget his name).

In short, it may be explained in the following way: of all humans who will be immortal, the biggest part will be those who merge with AI, and the smallest will be those who survive as very old men thanks to random fluctuations.

Replies from: Diadem↑ comment by Diadem · 2016-01-29T11:07:15.223Z · LW(p) · GW(p)

Sure, cryonics would help. But it wouldn't be more than a drop in the ocean. If QI is true, and cryonics is theoretically possible, then 500 years from now there'll be 3 kinds of universes: 1) Universes where I'm dead, either because cryonics didn't pan out (perhaps society collapsed), or because for some reason I wasn't revived. 2) Universes where I'm alive thanks to cryonics and 3) Universes where I'm alive due to quantum fluctuations 'miraculously' keeping me alive.

Clearly the measure of the 3rd kind of universe will be very very small compared to the other two. And since I don't experience the first, that means that subjectively I'm overwhelmingly likely to experience being alive thanks to cryonics. And in most of those universes I'm probably healthy and happy. So that sounds good.
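The step from "measure" to "subjective likelihood" in the paragraph above is a renormalization over the branches that are actually experienced. A minimal sketch of that arithmetic follows, assuming entirely made-up measures; the numbers, the branch names and the helper `subjective_probabilities` are hypothetical and only illustrate the reasoning, not any estimate from the thread.

```python
def subjective_probabilities(measures, experienced):
    """Renormalize branch measures over the branches that are experienced."""
    total = sum(measures[name] for name in experienced)
    return {name: measures[name] / total for name in experienced}

# Hypothetical measures for the three kinds of universe, 500 years from now.
# These values are invented for illustration only.
measures = {
    "dead": 0.90,
    "alive_via_cryonics": 0.10 - 1e-12,
    "alive_via_fluctuation": 1e-12,
}

# The "dead" branches are never experienced, so they drop out of the total.
subjective = subjective_probabilities(
    measures, ["alive_via_cryonics", "alive_via_fluctuation"]
)

for name, p in subjective.items():
    print(f"{name}: {p:.12f}")
```

Under these assumed numbers almost all of the experienced measure sits in the cryonics branches, which is the point of the paragraph; different assumed measures would of course shift the split.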

But quantum immortality implies forced immortality forever. No way to escape, no way to permanently shut yourself down once you get bored with life. No way to die even after the heat death of the universe.

No matter how good the few trillion years before that will be, the end result will be floating around as an isolated mind in an empty universe, kept alive by random quantum fluctuations in an increasingly small measure of all universes that will nevertheless always have subjective measure of 1, for literally ever.

Now personally I don't think QI is very likely. In fact I consider it extremely unlikely. All I'm saying is that if it were true, that'd be a nightmare.

Replies from: turchin, qmotus, turchin↑ comment by turchin · 2016-01-29T13:55:52.978Z · LW(p) · GW(p)

While I understand your concerns, I think that during the next trillion years you will be able to find ways to solve the problem, and I am even able to suggest some solutions now.

A trillion years from now you will be a very powerful AI which also knows for sure that QI works.

The simplest solution is circular time. In it you are immortal, but your experiences repeat. If they are pleasant, there will be no suffering. More complex forms of time are also possible, so the "linear time trap" is just a result of our lack of imagination. Circular time probably results naturally from QI, because any human has a non-zero probability of transforming into any other human, so you will circle in random patterns through the space of all possible minds. (It also solves the identity problem, by the way: everybody is identical, but with a different time needed for the transformation.)

You could edit your brain so that it would enjoy empty eternity, so no suffering. Anyway, you may lose part of your long-term memory, so you may not know your real age. And in most QI branches it will happen naturally.

Even if suffering (not very strong or painful) is real a trillion years from now, it may be a good deal to agree to QI now, because of the discounting effect. I prefer to live a trillion years than to die in strong suffering in the next 20.

Maybe the strong AI will prove that it is able to create fun quicker than it uses this fun up, so it will always have something to do, no matter how much linear time has gone by. It may also create many levels of avatar worlds (simulations) where avatars will not remember their real age (and we are probably inside such a simulation).

I spent 25 years coming to these ideas (since the summer of 1990, when I got the idea of QI), so in the next trillion years I will be able to get better ideas, I hope.

Replies from: Lumifer↑ comment by Lumifer · 2016-01-29T16:15:20.147Z · LW(p) · GW(p)

A trillion years from now you will be a very powerful AI which also knows for sure that QI works.

So if in the next few months a planet-sized rock comes out of deep space at high velocity and slams into Earth, in which Everett branch will you survive? Which quantum fluctuation will save you?

Replies from: turchin, OrphanWilde↑ comment by turchin · 2016-01-29T20:33:57.194Z · LW(p) · GW(p)

Yes, in all branches where I am in a simulation and wake up. The same "me" may be in different worlds.

Or in the universe where aliens will save me just a second before the impact.

Or I will be resurrected by other aliens based on my footprint in radio waves.

Replies from: Lumifer↑ comment by Lumifer · 2016-01-29T20:50:53.282Z · LW(p) · GW(p)

There is no possible issue that cannot be resolved by an answer "you are in a simulation and the simulation just changed its rules".

Replies from: qmotus↑ comment by qmotus · 2016-01-30T10:42:10.012Z · LW(p) · GW(p)

Given a big world, we live in a simulation and we don't; we're simply unable to self-locate ourselves in the set of all identical copies. That's one of the main points of the post about modal realism that turchin linked to in the original post. Failure to see how this leads to survival in every scenario is due to not thinking enough about it.

A big world was presented here as one of the premises of the whole argument, so if you think that the conclusions drawn here are ridiculous, you should probably attack that premise. I actually think physicists and philosophers would be rather more reluctant to bite all the bullets shot at them and think of alternatives if they realized what implications theories like MWI and inflation have, and care more about valid criticisms such as that we have no accepted solution to the measure problem (although it seems that most physicists think that it can be solved without giving up the multiverse).

↑ comment by OrphanWilde · 2016-01-29T16:31:13.789Z · LW(p) · GW(p)

The one where events happened exactly the same - and then you wake up.

Uncertainty doesn't happen in the universe, after all. The universe isn't uncertain about what it is; the observer is uncertain about what universe it is in.

Replies from: Lumifer↑ comment by turchin · 2016-01-29T15:58:33.395Z · LW(p) · GW(p)

Update: There are many ways we could survive the end of the universe (see my map), so endless emptiness is not inevitable. http://lesswrong.com/lw/mfa/a_roadmap_how_to_survive_the_end_of_the_universe/

↑ comment by qmotus · 2016-01-28T16:01:15.038Z · LW(p) · GW(p)

If QI is true having kids would be the ultimate crime. If QI is true the only ethical course of action would be to pour all humanity's resources into developing an ASI and program it to sterilize the universe. That won't end the nightmare, there'll always be universes where we fail to build such an ASI, but at least it will reduce the measure of suffering.

This is something I've thought about too, although I've been a bit reluctant to write about it publicly. But on the other hand QI seems quite likely to be true, so I guess we should make up our minds about it.

comment by Lumifer · 2016-01-28T15:54:46.497Z · LW(p) · GW(p)

I am a bit confused. If we are living in a Quantum Immortality world, why don't we see any 1000-year-old people around?

Replies from: turchin, qmotus, ESRogs, CAE_Jones↑ comment by turchin · 2016-01-28T22:26:05.660Z · LW(p) · GW(p)

Only one observer is immortal in one world; you can't meet others.

It is the same as in a lottery with one prize: if you win, the others have lost. But the anthropic principle metaphor is more correct. You don't meet other immortals, in the same way that the Fermi paradox works and we don't meet other habitable planets: winning is so improbable that we could find ourselves on a habitable planet only because of observation selection.

↑ comment by qmotus · 2016-01-28T16:09:42.530Z · LW(p) · GW(p)

Because it's incredibly unlikely for anyone to live to be a thousand years old and equally unlikely whether MWI is true or not. There are worlds where we see maybe one such person, of course, but this just isn't one of them (unless you think that, say, Stephen Hawking keeping on living against all odds is evidence of QI).

Replies from: Lumifer↑ comment by Lumifer · 2016-01-28T16:16:32.666Z · LW(p) · GW(p)

Because it's incredibly unlikely for anyone to live to be a thousand years old and equally unlikely whether MWI is true or not.

Under QI, doesn't everyone live to be a thousand years old and more?

There are worlds where we see maybe one such person, of course, but this just isn't one of them

Human longevity looks to have a pretty hard cut-off at the moment. We don't see anyone 150 years old, either.

Replies from: qmotus↑ comment by qmotus · 2016-01-28T17:03:35.933Z · LW(p) · GW(p)

Under QI, doesn't everyone live to be a thousand years old and more?

Think of it like this: MWI makes the exact same predictions regarding observations as the Copenhagen interpretation, it's just that observations that are incredibly unlikely to ever happen in CI happen in a very small portion of all existing worlds in MWI. QI does not change this, which means that everybody does live to 1000 in a small minority of worlds, but in most worlds they die in their 120s at the latest. Therefore you're very unlikely to see anyone else besides yourself living miraculously long.

Replies from: Lumifer↑ comment by Lumifer · 2016-01-28T17:12:28.850Z · LW(p) · GW(p)

MWI makes the exact same predictions regarding observations as the Copenhagen interpretation

I don't believe the Copenhagen interpretation expects me to live forever.

Out of curiosity, have there been attempts to estimate the "branching speed" under MWI? How many worlds with slightly different copies of me will exist in 1 second?

Replies from: qmotus↑ comment by qmotus · 2016-01-28T17:24:49.278Z · LW(p) · GW(p)

I don't believe the Copenhagen interpretation expects me to live forever.

It does not. There's the difference. But if someone looks at you from the outside, the probability with which they will see you living or dying is not affected by quantum interpretations.

As to your second question, I don't know. QI as it is presented here is based on a pretty simplistic version of MWI, I suppose, one which may have flaws. I hope that's the case, actually.

↑ comment by CAE_Jones · 2016-01-28T16:33:37.440Z · LW(p) · GW(p)

I understand QI as related to the Anthropic Principle. The point is that you will tend to find yourself observing things, which implies that there is an effectively immortal version of you somewhere in probability space. It doesn't require that any Quantum Immortals coexist in the same world.

Of course, we'd be far more likely to continue observing things in a world where immortality is already available than in one where it is not, but since we're not in that world, it doesn't seem too outlandish to give a little weight to the idea that the absence of Quantum Immortals is a precondition to being a Quantum Immortal. I have no idea how that makes sense, though. One could construct fantastic hypotheticals about eventually encountering an alien race intent on wiping out immortals, or some Highlander-esque shenanigans, but more likely is that immortality is just hard and not that many people can win the QI lottery in a single world. (Or even that we happen to be living at the time when immortality is attainable.)

Incidentally (or frustratingly), this gets us back into "it's all part of the divine plan" territory. Why do you go through problem X? Because if you didn't, you would eventually die forever.

I am now curious as to whether or not there are books that combine Quantum Immortality with religious eschitology[sic]. Just wait for the Quantum Messiah to invent a world-hopping ability to rescue everyone who has ever lived from their own personal eternity (which is probably a Quantum Hell by that point), and bring them to Quantum Heaven.

(I was not thinking Quantum Jesus would be an AI, but sure; why not? Now we have the Universal Reconciliation version of straw Singularitarianism.)

Replies from: Lumifer↑ comment by Lumifer · 2016-01-28T16:40:07.466Z · LW(p) · GW(p)

I understand QI as related to the Anthropic Principle. The point is that you will tend to find yourself observing things, which implies that there is an effectively immortal version of you somewhere in probability space.

The Anthropic Principle does not imply immortality. It basically says that you will not observe a world in which you don't exist, but it says nothing about you continuing to exist forever in time.

comment by jollybard · 2016-02-14T16:29:23.453Z · LW(p) · GW(p)

There might also be situations where surviving is not just ridiculously unlikely, but simply mathematically impossible. That is, I assume that not everything is possible through quantum effects? I'm not a physicist. I mean, what quantum effects would it take to have your body live forever? Are they really possible?

And I have serious doubts that surviving a plane crash or not could be due to quantum effects, but I suppose it could simply be incredibly unlikely. I fear that people might be confusing "possible worlds" in the subjective Bayesian sense and in the quantum many-worlds sense.

Replies from: turchin↑ comment by turchin · 2016-02-15T10:33:34.302Z · LW(p) · GW(p)

In the Soviet Union a woman survived a mid-air frontal collision of planes: her seat rotated together with part of the wing and fell into a forest.

But the main idea here is that the same "me" may exist in different worlds: in one I am in a plane, in the other I am in a plane simulator. I will survive in the second one.

Replies from: jollybard↑ comment by jollybard · 2016-02-15T20:06:32.010Z · LW(p) · GW(p)

My point was that QM is probabilistic only at the smallest level, for example in the Schrödinger's cat thought experiment. I don't think surviving a plane crash is ontologically probabilistic, unless of course the crash depends on some sort of radioactive decay or something! You can't make it so that you survive the plane crash without completely changing the prior causal networks... up until the beginning of your universe. Maybe there could be a way to very slightly change one of the universal constants so that nothing changes except that you survive, but I seriously doubt it.

Replies from: qmotus↑ comment by qmotus · 2016-02-15T20:28:35.333Z · LW(p) · GW(p)

As turchin said, it's possible that the person in the plane accident exists in both a "real world" and a simulation, and will survive in the latter. Or they quantum tunnel to ground level before the plane crashes (as far as I know, this has an incredibly small but non-zero probability of occurring, although I'm not a physicist either). Or they're resurrected by somebody, perhaps trillions of years after the crash. And so forth.

Replies from: turchin↑ comment by turchin · 2016-02-15T21:43:20.097Z · LW(p) · GW(p)

In fact so-called QI does not depend on QM at all. All it needs is a big world in Tegmark's style.

This means that many Earths exist in the universe and they are different, but "me" is the same on them. On one Earth the crash kills everybody and on another there will be survivors.

comment by gjm · 2016-01-28T16:20:37.591Z · LW(p) · GW(p)

I have never seen it adequately explained exactly what "QI is true" or "QI works" is supposed to mean.

If it just means (as, e.g., in the first paragraph here) "in any situation where it seems like I die, there are branches where I somehow don't": OK, but why is that interesting? I mean, why is it more interesting than "in any situation where it seems like I die, there are very-low-probability ways for it not to happen"?

Whatever intuitions you have for how you should feel about a super-duper-low-probability event, you should apply them equally to a super-duper-low-measure branch, because these are the same thing.

Replies from: turchin, qmotus↑ comment by turchin · 2016-01-28T21:39:51.075Z · LW(p) · GW(p)

QI predicts the result of a physical experiment. It says that if there are two outcomes, 1 and 2, and I am an observer of this experiment, and in the case of outcome 2 I die, then I will measure outcome 1 with 100 per cent probability, no matter what the priors of outcomes 1 and 2 were.

This definition doesn't depend on any "esoteric" ideas about "I" and personal identity. The observer here could be a Turing-machine program.

For example, if we run 1 000 000 copies of a program, each of which will be terminated if its die (one for each instance of the program) lands odd (1, 3, 5) and not terminated if it lands even (2, 4, 6), then the program should expect that it will measure only 2, 4 or 6, with one-third probability each, and that after the dice are rolled only about 500 000 copies of the program survive.
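The dice experiment is easy to simulate classically. Here is a rough sketch, assuming fair dice and Python's standard pseudo-random generator; the function name `run_dice_experiment` and the fixed seed are illustrative choices, not part of the original argument. It only shows the selection effect among the copies that keep running and says nothing about whether QI itself holds.

```python
import random
from collections import Counter

def run_dice_experiment(n_copies, seed=0):
    """Roll one fair die per program copy; an odd roll terminates that copy."""
    rng = random.Random(seed)
    rolls = [rng.randint(1, 6) for _ in range(n_copies)]
    # Only copies that rolled 2, 4 or 6 are still running to record a result.
    survivor_rolls = [r for r in rolls if r % 2 == 0]
    return rolls, survivor_rolls

rolls, survivor_rolls = run_dice_experiment(1_000_000)

# Fraction of all copies that are still running after the roll.
survival_fraction = len(survivor_rolls) / len(rolls)

# Distribution of outcomes as seen by the surviving copies only.
observed = Counter(survivor_rolls)
observed_fractions = {
    face: count / len(survivor_rolls) for face, count in sorted(observed.items())
}

print(f"surviving copies: {len(survivor_rolls)} ({survival_fraction:.3f} of all)")
for face, fraction in observed_fractions.items():
    print(f"  survivors that saw {face}: {fraction:.3f}")
```

Every surviving copy records an even number, roughly a third of them each of 2, 4 and 6, and roughly half of the copies survive, as the paragraph above states.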

Replies from: gjm, Lumifer↑ comment by gjm · 2016-01-28T22:00:24.672Z · LW(p) · GW(p)

QI predicts the result of a physical experiment. [...] I will measure outcome 1 with 100 per cent probability

The same is true on any interpretation of QM, and even without QM.

If you are guaranteed to die when the outcome is 2, then every outcome you experience will be outcome 1. Everyone should agree with that. It has nothing to do with any special feature of quantum mechanics. It doesn't rely on "many worlds" or anything.

Replies from: qmotus, turchin↑ comment by qmotus · 2016-01-28T22:18:13.014Z · LW(p) · GW(p)

The same is true on any interpretation of QM, and even without QM.

What is true on any interpretation is that if one experiences any outcome at all, they will with 100 percent probability experience 1. Only with QI can they be 100 percent certain of actually experiencing it.

Replies from: turchin↑ comment by Lumifer · 2016-01-28T21:56:06.548Z · LW(p) · GW(p)

QI predicts the result of a physical experiment. It says that if there are two outcomes, 1 and 2, and I am an observer of this experiment, and in the case of outcome 2 I die, then I will measure outcome 1 with 100 per cent probability, no matter what the priors of outcomes 1 and 2 were.

That's just anthropics: you will not observe the world in which you do not exist.

As I mentioned in another comment, I still don't see how this leads to you existing forever.

You will not, actually, measure outcome 1 with 100% probability, since you may well die before doing so.

Replies from: turchin↑ comment by turchin · 2016-01-28T22:37:22.952Z · LW(p) · GW(p)

Let's assume that 1 million copies of me exist and they play Russian roulette every second, with two equally likely outcomes. In the next second there will be 500 000 copies of me who experience outcome 1, and so on for the next 20 seconds. So one copy of me will survive 20 rounds of roulette and will feel itself immortal.
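The halving argument can be checked with a few lines of arithmetic and a simulation. This is a minimal sketch, assuming a survival probability of exactly 1/2 per round and independent copies; `expected_survivors` and `simulate_roulette` are hypothetical helper names introduced here for illustration.

```python
import random

def expected_survivors(n_copies, rounds, p_survive=0.5):
    """Expected number of copies still alive after the given number of rounds."""
    return n_copies * p_survive ** rounds

def simulate_roulette(n_copies, rounds, p_survive=0.5, seed=0):
    """Simulate the rounds; return the number of living copies after each one."""
    rng = random.Random(seed)
    alive = n_copies
    history = [alive]
    for _ in range(rounds):
        # Each living copy independently survives the round with p_survive.
        alive = sum(1 for _ in range(alive) if rng.random() < p_survive)
        history.append(alive)
    return history

print(expected_survivors(1_000_000, 1))   # 500000.0 copies expected after one round
print(expected_survivors(1_000_000, 20))  # about 0.95 copies expected after twenty

history = simulate_roulette(1_000_000, 20)
print(history)
```

The expected count after 20 rounds is 1 000 000 / 2^20, a little under one, so "one copy survives" is the right order of magnitude, though any single simulated run may end with zero or a few survivors.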

Many-worlds immortality is based on this experiment with two premises: that there are infinitely many copies of me (or that they are created after each round) and that there is no existential difference between the copies. In this case the roulette will always fail.

I put all the different outcomes of these two premises in the map in the opening post; it seems strange that nobody sees it )) If there is no infinite number of my copies, or if the copies are not equal, big world immortality doesn't work.

↑ comment by qmotus · 2016-01-28T17:06:36.494Z · LW(p) · GW(p)

QI messes up the subjective probabilities. If there is simply one world and one "copy" of you, and you have a very, very small probability of surviving some event, you can be practically certain that you won't live to eat breakfast the next day. However, if there are very many copies of you and QI works, you can be certain that you will live. It completely changes what you should, subjectively, expect to experience in such a situation.

Replies from: Lumifer, entirelyuseless, gjm↑ comment by Lumifer · 2016-01-28T17:16:17.584Z · LW(p) · GW(p)

if there are very many copies of you and QI works, you can be certain that you will live

You use the word "you" to refer not to a single something, but rather to a vast rapidly expanding field of different consciousnesses united only by the fact that long time ago they branched off from a single point -- right?

Replies from: qmotus↑ comment by qmotus · 2016-01-28T17:29:37.477Z · LW(p) · GW(p)

Yes, I'm assuming a sort of patternist viewpoint here. Although I don't think that it's particularly important, whatever one's preferred theory of identity is, it remains the case that given QI, there will be a "you" (or multiple "you"s) in that scenario who will feel like they are the same consciousness as the "you" at the point of branching.

Replies from: Lumifer↑ comment by Lumifer · 2016-01-28T17:52:52.849Z · LW(p) · GW(p)

it remains the case that given QI, there will be a "you" (or multiple "you"s) in that scenario who will feel like they are the same consciousness as the "you" at the point of branching.

Well, not quite, in that scenario I will feel that I am one of a multitude of different "I"s spawned from a branching point. Kinda like the relationship between you and your (first-, second-, third-, etc.) cousins.

An important property of self-identity is uniqueness.

Replies from: qmotus↑ comment by qmotus · 2016-01-28T17:58:09.662Z · LW(p) · GW(p)

Will the person before the branching then simply be another cousin to you? If so, do you feel like the person you woke up as tomorrow morning was not in fact you, but yet another cousin of yours?

Replies from: Lumifer↑ comment by Lumifer · 2016-01-28T18:00:51.779Z · LW(p) · GW(p)

It depends on whether I know/believe that I'm the only one who woke up this morning with memories of my yesterday's self, or a whole bunch of people/consciousnesses woke up this morning with memories of my yesterday's self.

The self before the branching would be my ancestor who begat a lot of offspring of which I'm one.

One -> one is a rather different situation from one -> many.

Replies from: qmotus↑ comment by qmotus · 2016-01-28T18:04:25.859Z · LW(p) · GW(p)

Fair enough, I just find it extremely difficult to think like that in practice (it's a bit easier if I look back at myself from ten years ago or thirty years to the future).

Replies from: Lumifer↑ comment by Lumifer · 2016-01-28T18:08:31.995Z · LW(p) · GW(p)

Well, under MWI there are people who "are" you in the sense of having been born to the same mother on the same day, but their branch diverged early on so that they are very unlike you now. And still they are also "you".

Replies from: qmotus↑ comment by qmotus · 2016-01-28T18:15:55.652Z · LW(p) · GW(p)

True, and as I said, I feel like those people are indeed closer to cousins. But when we're talking about life and death situations such as those that QI applies to, the "I's after branching" are experientially so close to me that I do think that it's more about immortality for me than about me just having a bunch of cousins.

↑ comment by entirelyuseless · 2016-01-28T22:56:58.380Z · LW(p) · GW(p)

In no case should you expect to experience not living until the next day. That cannot be experienced, whether QI is true or not.

Replies from: qmotus↑ comment by gjm · 2016-01-28T17:31:31.376Z · LW(p) · GW(p)

What exactly do you mean by "you" here? (I think maybe different things in different cases.)

Replies from: qmotus↑ comment by qmotus · 2016-01-28T17:46:48.200Z · LW(p) · GW(p)

Maybe I should try to get rid of that word. So let's suppose we have a conscious observer in a situation like that, so that they have a very, very large probability to die soon and a small but non-zero probability to survive. Now, if there is only one world that doesn't split and there are no copies of that observer, i.e. other observers who have a conscious experience identical or very similar to that of our original observer, then that observer should expect that i) the only outcome that they may experience is one in which they survive, but that ii) most likely they will not experience any outcome.

Whereas given MWI and QI, there will be an observer (numerous such observers, actually) who will remember being the original observer and feel like they are the same observer, with a certainty.

So "you" kind of means "someone who feels like he/she/it is you".

Replies from: gjm↑ comment by gjm · 2016-01-28T20:57:35.549Z · LW(p) · GW(p)

But if you hold "you X" to be true merely because someone who feels like they're you does X, without regard for how plentiful those someones are across the multiverse (or perhaps just that part of it that can be considered the future of the-you-I'm-talking-to, or something) then you're going to have trouble preferring a 1% chance of death (or pain or poverty or whatever) to a 99% chance. I think this indicates that that's a bad way to use the language.

Replies from: qmotus↑ comment by qmotus · 2016-01-28T21:19:27.578Z · LW(p) · GW(p)

I'm not sure I entirely get what you're saying; but basically, yes, I can see trouble there.

But I think that, at its core, the point of QI is just to say that given MWI, conscious observers should expect to subjectively exist forever, and in that it differs from our normal intuition which is that without extra effort like signing up for cryonics, we should be pretty certain that we'll die at some point and no longer exist after that. I'm not sure that all this talk about identity exactly hits the mark, although it's relevant in the sense that I'm hopeful that somebody manages to show me why QI isn't as bad as it seems to be.

Replies from: gjm↑ comment by gjm · 2016-01-28T22:03:14.470Z · LW(p) · GW(p)

QI or no QI, we should believe the following two things.

1. In every outcome I will ever get to experience, I will still be alive.

2. In the vast majority of outcomes 200 years from now (assuming no big medical breakthroughs etc.), measured in any terms that aren't defined by my experiences, I will be dead.

What QI mostly seems to add to this is some (questionable) definitions of words like "you", and really not much else.

Replies from: entirelyuseless, qmotus↑ comment by entirelyuseless · 2016-01-29T14:21:41.929Z · LW(p) · GW(p)

I agree with qmotus that something is being added, not so much by QI, as by the many worlds interpretation. There is certainly a difference between "there will be only one outcome" and "all possible outcomes will happen."

If we think all possible outcomes will happen, and if you assume that "200 years from now, I will still be alive," is a possible outcome, it follows from your #1 that I will experience being alive 200 years from now. This isn't a question of how we define "I" - it is true on any definition, given that the premises use the same definition. (This is not to deny that I will also be dead -- that follows as well.)

If only one possible outcome will happen, then very likely 200 years from now, I will not experience being alive.

So if QI adds anything to MWI, it would be that "200 years from now, I will still be alive," and the like, are possible outcomes.

Replies from: gjm↑ comment by gjm · 2016-01-29T16:58:56.839Z · LW(p) · GW(p)

There is certainly a difference between "there will be only one outcome" and "all possible outcomes will happen"

There's no observable difference between them. In particular, "happen" here has to include "happen on branches inaccessible to us", which means that a lot of the intuitions we've developed for how we should feel about something "happening" or not "happening" need to be treated with extreme caution.

If we think [...] it follows from your #1 that I will experience being alive 200 years from now. This isn't a question of how we define "I" - it is true on any definition

OK. But the plausibility -- even on MWI -- of (1) "all possible outcomes will happen" plus (2) "it is possible that 200 years from now, I will still be alive" depends on either an unusual meaning for "will happen" or an unusual meaning for "I" (or of course both).

Maybe the right way to put it is this. MWI turns "ordinary" uncertainty (not knowing how the world is or will be) into indexical uncertainty (not knowing where in the world "I" will be). If you accept MWI, then you can take something like "X will happen" to mean "I will be in a branch where X happens" (in which case you're only entitled to say it when X happens on all branches, or at least a good enough approximation to that) or to mean "there will be a branch where X happens" (in which case you shouldn't feel about that in the same way as you feel about things definitely happening in the usual sense).

So: yes, on some branch I will experience being alive 200 years from now; this indeed follows from MWI. But to go from there to saying flatly "I will experience being alive 200 years from now" you need to be using "I will ..." locutions in a very nonstandard manner. If your employer asks "Will you embezzle all our money?" and your intentions are honest, you will probably not answer "yes" even though presumably there's some very low-measure portion of the multiverse where for some reason you set out to do so and succeed.

Whether that nonstandard usage is a matter of redefining "I" (so it applies equally to every possible continuation of present-you, however low its measure) or "will" (so it applies equally to every possible future, however low its measure) is up to you. But as soon as you say "I will experience being alive 200 years from now" you are speaking a different language from the one you speak when you say "I will not embezzle all your money". The latter is still a useful thing to be able to say, and I suggest that it's better not to redefine our language so that "I will" stops being usable to distinguish large-measure futures from tiny-measure futures.

if QI adds anything to MWI, it would be that [...] are possible outcomes.

Unless they were already possible outcomes without MWI, they are not possible outcomes with MWI (whether QI or no QI).

What MWI adds is that in a particular sense they are not merely possible outcomes but certain outcomes. But note that the thing that MWI makes (so far as we know) a certain outcome is not what we normally express by "in 200 years I will still be alive".

Replies from: qmotus↑ comment by qmotus · 2016-01-30T10:44:44.247Z · LW(p) · GW(p)

You raise a valid point, which makes me think that our language may simply be inadequate to describe living in many worlds. Because both "yes" and "no" seem to me to be valid answers to the question "will you embezzle all our money".

I still don't think that it refutes QI, though. Take an observer at some moment: looking towards the future and ignoring the branches where they don't exist, they will see that every branch will lead to them living to be infinitely old; but every branch doesn't lead to them embezzling their employer's money.

But note that the thing that MWI makes (so far as we know) a certain outcome is not what we normally express by "in 200 years I will still be alive".

Do you mean that it's not certain because of the identity considerations presented, or that MWI doesn't even say that it's necessarily true in some branch?

Replies from: gjm↑ comment by gjm · 2016-01-30T11:46:40.820Z · LW(p) · GW(p)

I still don't think that it refutes QI, though.

I don't think refuting is what QI needs. It is, actually, true (on MWI) that despite the train rushing towards you while you're tied to the tracks, or your multiply-metastatic inoperable cancer, or whatever other horrors, there are teeny-tiny bits of wavefunction (and hence of reality) in which you somehow survive those horrors.

What QI says that isn't just restating MWI is as much a matter of attitude to that fact as anything else.

I wasn't claiming that QI and inevitable embezzlement are exactly analogous; the former involves an anthropic(ish) element absent from the latter.

Do you mean that it's not certain because of the identity considerations presented, or that MWI doesn't even say that it's necessarily true in some branch?

The "so far as we know" was because of the possibility that there are catastrophes MWI gives you no way to survive (though I think that can only be true in so far as QM-as-presently-understood is incomplete or incorrect). The "not what we normally express by ..." was because of what I'd been saying in the rest of my comment.

Replies from: qmotus↑ comment by qmotus · 2016-01-30T12:09:28.782Z · LW(p) · GW(p)

I see. But I fail to understand, then, how this is uninteresting, as you said in your original comment. Let's say you find yourself on those train tracks: what do you expect to happen, then? What if a family member or other important person comes to see you for (what they believe to be) a final time? Do you simply say goodbye to them, fully aware that from your point of view, it won't be a final time? What if we repeat this a hundred times in a row?

Replies from: gjm↑ comment by gjm · 2016-01-30T15:20:14.282Z · LW(p) · GW(p)

what do you expect to happen, then?

I have the following expectations in that situation:

- In most possible futures, I will soon die. Of course I won't experience that (though I will experience some of the process), but other people will find that the world goes on without me in it.

- Therefore, most of my possible trajectories from here end very soon, in death.

- In a tiny minority of possible futures, I somehow survive. The train stops more abruptly than I thought possible, or gets derailed before hitting me. My cancer abruptly and bizarrely goes into complete remission. Or, more oddly but not necessarily more improbably: I get most of the way towards death but something stops me partway. The train rips my limbs off and somehow my head and torso get flung away from the tracks, and someone finds me before I lose too much blood. The cancer gets most of the way towards killing me, at which point some eccentric billionaire decides to bribe everyone involved to get my head frozen, and it turns out that cryonics works better than I expect it to. Etc.

I suspect you will want to say something like: "OK, very good, but what do you expect to experience?" but I think I have told you everything there is to say. I expect that a week from now (in our hypothetical about-to-die situation) all that remains of "my" measure will be in situations where I had an extraordinarily narrow escape from death. That doesn't seem to me like enough reason to say, e.g., that "I expect to survive".

Do you simply say goodbye to them [...]?

Of course. From my present point of view it almost certainly will be a final time. From the point of view of those ridiculously lucky versions of me that somehow survive it won't be, but that's no different from the fact that (MWI or no, QI or no) I might somehow survive anyway.

If we repeat this several times in a row, then actually my update isn't so much in the direction of QI (which I think has zero actual factual content; it's just a matter of definitions and attitudes) as in the direction of weird theories in which someone or something is deliberately keeping me alive. Because if I have just had ten successive one-in-a-billion-billion escapes, hypotheses like "there is a god after all, and for some reason it has plans that involve my survival" start to be less improbable than "I just got repeatedly and outrageously lucky".
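The update described here can be sketched as a Bayesian odds calculation. This is only an illustration: the prior odds for the "something is deliberately keeping me alive" hypothesis (1 in 10^50) are a made-up number, the function name is hypothetical, and the escapes are assumed to be independent. The one-in-a-billion-billion figure (10^-18 per escape) is taken from the comment. Logarithms are used so the tiny probabilities stay readable.

```python
def log10_posterior_odds(log10_prior_odds: float,
                         log10_p_escape_by_luck: float,
                         n_escapes: int,
                         log10_p_escape_if_protected: float = 0.0) -> float:
    """Return log10 of the posterior odds (protected : merely lucky).

    Each independent escape multiplies the odds by the likelihood ratio
    P(escape | protected) / P(escape | luck); in log space that is a sum.
    """
    log10_likelihood_ratio = log10_p_escape_if_protected - log10_p_escape_by_luck
    return log10_prior_odds + n_escapes * log10_likelihood_ratio


# Hypothetical prior: the "protector" hypothesis starts at odds of 1 : 10^50.
# Per-escape chance under pure luck: one in a billion billion = 10^-18.
for n in (1, 2, 3, 10):
    print(n, log10_posterior_odds(-50.0, -18.0, n))
```

With these assumed numbers the odds stay against the "protector" hypothesis after two escapes and flip in its favour from the third escape on, which matches the qualitative claim that repeated outrageous luck eventually makes weird hypotheses the less improbable ones. A different prior moves the crossover point but not the shape of the argument.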

Replies from: turchin, qmotus, entirelyuseless↑ comment by turchin · 2016-01-30T21:49:24.269Z · LW(p) · GW(p)

I think that this attitude to QI is wrong because the measure should be renormalized if the number of observers changes.

We can't count the worlds where I do not exist as worlds that influence my measure (or if we do, we have to add all other worlds where I do not exist, which are infinite and so my chances to exist in any next moment are almost zero).

The number of "me" will not change in the case of embezzlement. But if I die in some branches, it does change. It may be a little bit foggy in the case of quantum immortality, but if we use many-world immortality it may be clearer.

For example, suppose a million copies of a program are trying to calculate something inside an actual computer. The goal system of the program is that it should calculate, say, pi to 10 digits of accuracy. But it knows that most copies of the program will be killed soon, before they are able to finish the calculation. Should it stop, knowing that it will be killed in the next moment with overwhelming probability? No, because if it stops, all its other copies stop too. So it must behave as if it will survive.

My point is that from a decision theory point of view a rational agent should behave as if QI works, and plan his actions and expectations accordingly. He should also expect that all his future experiences will be supportive of QI.

I will try to construct a clearer example. Suppose I have to survive many rounds of Russian roulette with a 1 in 10 chance of survival in each. The only thing I can change about it is the following: after each round I will be asked if I believe in QI, and I will be punished by electroshock if I say "NO". If I say "YES", I will be punished twice in this round, but never again in any round.

If an agent believes in QI, it is rational for him to say "YES" in the beginning, get two shocks and never get shocked again. If he "believes in measure", then it will be rational for him to say "NO", get one punishment in the beginning, 0.1 punishment in the next round, 0.01 punishment in the third and so on, for a total of 1.111, which is smaller than 2.
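The two ways of counting can be put side by side in a short Python sketch. It follows the accounting used above (one shock at the start, then each later shock weighted by the chance of still being alive); the function names are hypothetical and the survival chance of 0.1 per round is the one from the example.

```python
def expected_shocks_saying_no(p_survive: float, rounds: int) -> float:
    """Measure-weighted expected number of shocks for always answering NO.

    The k-th shock (k = 0, 1, 2, ...) is only received if the agent has
    survived k rounds, which happens with probability p_survive ** k.
    """
    return sum(p_survive ** k for k in range(rounds))


def shocks_saying_no_given_survival(rounds: int) -> int:
    """Shocks received by an agent who answers NO and does survive every round."""
    return rounds


# Answering YES costs two shocks once, regardless of how the count is done.
SHOCKS_SAYING_YES = 2

print(expected_shocks_saying_no(0.1, 4))    # measure-weighted cost of NO
print(shocks_saying_no_given_survival(4))   # cost of NO as seen by a survivor
print(SHOCKS_SAYING_YES)                    # cost of YES
```

Weighted by measure, answering NO never costs more than about 1.11 shocks, so it beats YES. Conditioned on survival, NO costs one shock per round and overtakes YES from the third round on. The disagreement is entirely about which of the two counts an agent should care about.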

My point here is that after several rounds most people (if they are such agents) will change their decision and say "YES".

In the case of your example with the train, it means that it will be rational for you to use part of your time not for speaking with relatives, but for planning your actions after you survive in the most probable way (the train derails).

Replies from: gjm↑ comment by gjm · 2016-01-31T00:13:11.484Z · LW(p) · GW(p)

the measure should be renormalized if the number of observers change

I'm pretty sure I disagree very strongly with this, but I'm not absolutely certain I understand what you're proposing so I could be wrong.

from decision theory a rational agent should behave as if QI works

Not quite, I think. Aren't you implicitly assuming that the rational agent doesn't care what happens on any branch where they cease to exist? Plenty of (otherwise?) rational agents do care. If you give me a choice between a world where I get an extra piece of chocolate now but my family get tortured for a year after I die, and an otherwise identical world where I don't get the chocolate and they don't get the torture, I pick the first without hesitation.

Can we transpose something like this to your example of the computer? I think so, though it gets a little silly. Suppose the program actually cares about the welfare of its programmer, and discovers that while it's running it's costing the programmer a lot of money. Then maybe it should stop, on the grounds that the cost of those millions of futile runs outweighs the benefit of the one that will complete and reveal the tenth decimal place of pi.

(Of course the actual right decision depends on the relative sizes of the utilities and the probabilities involved. So it is with QI.)

After surviving enough rounds of your Russian Roulette game, I will (as I said above) start to take seriously the possibility that there's some bias in the results. (The hypotheses here wouldn't need to be as extravagant as in the case of surviving obviously-fatal diseases or onrushing trains.) That would make it rational to say yes to the QI question (at least as far as avoiding shocks goes; I also have a preference for not lying, which would make it difficult for me to give either a simple yes or a simple no as answer).

I agree that in the train situation it would be reasonable to use a bit of time to decide what to do if the train derails. I would feel no inclination to spend any time deciding what to do if the Hand of God plucks me from its path or a series of quantum fluctuations makes its atoms zip off one by one in unexpected directions.

Replies from: turchin, turchin↑ comment by turchin · 2016-01-31T10:55:19.478Z · LW(p) · GW(p)

It looks like you suppose that there are branches where the agent ceases to exist, like dead-end branches. In these branches he has zero experience after death. But another description of this situation is that there are no dead ends, because branching happens at every point, and so we should count only the cells of space-time where a future me exists. For example, I do not exist on Mars or on any other Solar System body (except Earth). It doesn't mean that I died on Mars. Mars is just an empty cell in our calculation of future me, which we should not count. The same is true of branches where I was killed. Renormalization on observer number is used in other discussions of anthropics, like the anthropic principle and Sleeping Beauty. There are still some open questions there, like how we could measure identical observers.

If an agent cares about his family, yes. He should not care about his QI. (But if he really believes in MWI and modal realism, he may also conclude that he can't do anything to change their fate.)

QI very quickly raises the chances that I am in a strange universe where God exists (or that I am in a simulation which also models an afterlife). So finding myself in one will be evidence that QI worked.

↑ comment by turchin · 2016-01-31T11:14:31.924Z · LW(p) · GW(p)

I will try a completely different explanation. For example, I die, but in the future I will be resurrected by a strong AI as an exact copy of me. If I think that personal identity is information, I should be happy about it.

Let us now assume that 10 copies of me exist on ten planets and all of them die in the same way. The same future AI may think that it will be enough to create only one copy of me to resurrect all the dead copies. Now it is more similar to QI.

If we have many copies of a compact disc with Windows 95 and many of them are destroyed, it doesn't matter as long as one disc still exists.

Replies from: gjm↑ comment by gjm · 2016-01-31T18:07:15.902Z · LW(p) · GW(p)

So, first of all, if only one copy exists then any given misfortune is more likely to wipe out every last one of me than if ten copies exist. Aside from that, I think it's correct that I shouldn't much care now how many of me there are -- i.e., what measure worlds like the one I'm in have relative to some predecessor.

But there's a time-asymmetry here: I can still care (and do) about the measure of future worlds with me in them, relative to the one I'm in now. (Because I can influence "successors" of where-I-am-now but not "predecessors". The point of caring about things is to help you influence them.)

Replies from: turchin↑ comment by turchin · 2016-01-31T18:24:07.516Z · LW(p) · GW(p)

It looks like we are close to the conclusion that QI mainly marks the difference between "egocentric" and "altruistic" goal systems. The most interesting question is: where is the border between them? If I like my hand, is it part of me or of the external world?

There is also an interesting analogy with virus behavior. A virus seems to be interested in the existence of its remote copies, with which it may have no causal connections at all, because they will continue to replicate. (Altruistic genes do the same, if they exist at all.) So egoistic behaviour here is altruistic towards other copies of the virus.

↑ comment by qmotus · 2016-01-31T09:23:36.033Z · LW(p) · GW(p)

I suspect you will want to say something like: "OK, very good, but what do you expect to experience?" but I think I have told you everything there is to say.

I'm tempted to, but I guess you have tried to explain your position as well as you can. I see what you are trying to say, but I still find it quite incomprehensible how that attitude can be adopted in practice. On the other hand, I feel like it (or somehow getting rid of the idea of continuity of consciousness, as Yvain has suggested, which I have no idea how to do) is quite essential for not being as anxious and horrified about quantum/big world immortality as I am.

↑ comment by entirelyuseless · 2016-01-31T17:32:02.752Z · LW(p) · GW(p)

But unless you are already absolutely certain of your position in this discussion, you should also update toward, "I was mistaken and QI has factual content and is more likely to be true than I thought it was."

Replies from: gjm↑ comment by gjm · 2016-01-31T18:03:06.038Z · LW(p) · GW(p)

Probably. But note that according to my present understanding, from my outrageously-surviving self's vantage point all my recent weird experiences are exactly what I should expect -- QI or no QI, MWI or no MWI, merely conditioning on my still being there to observe anything.

↑ comment by qmotus · 2016-01-28T22:16:04.946Z · LW(p) · GW(p)

I would say that QI (actually, MWI) adds a third thing, which is that "I will experience every outcome where I'm alive", but it seems that I'm not able to communicate my points very effectively here.

Replies from: gjm↑ comment by gjm · 2016-01-29T14:15:19.803Z · LW(p) · GW(p)

How does MWI do that? On the face of it, MWI says nothing about experience, so how do you get that third thing from MWI? (I think you'll need to do it by adding questionable word definitions, assumptions about personal identity, etc. But I'm willing to be shown I'm wrong!)

Replies from: qmotus↑ comment by qmotus · 2016-01-29T16:57:16.214Z · LW(p) · GW(p)

I think this post by entirelyuseless answers your question quite well, so if you're still puzzled by this, we can continue there. Also, I don't see how QI depends on any additional weird assumptions. After all, you're using the word "experience" in your list of two points without defining it exactly. I don't see why it's necessary to define it either: a conscious experience is most likely simply a computational thing with a physical basis, and MWI and these other big world scenarios essentially say that all physical states (that are not prohibited by the laws of physics) happen somewhere.

Replies from: gjm

comment by qmotus · 2016-01-25T14:00:02.707Z · LW(p) · GW(p)

I've contemplated writing a post about the same subject of "big world immortality" (could we call it BWI for short?) myself, but mostly focusing on this part: "There is nothing good in it also, because most of my survived branches will be very old and ill. But we could use QI to work for us, if we combine it with cryonics. Just sign up for it or have an idea to sign up, and most likely you will find your self in survived branch where you will be resurrected after cryostasis. (The same is true for digital immortality - record more about your self and future FAI will resurrect you, and QI rises chances of it.)"

It seems to me that we should be very pessimistic about the future because of QI/BWI. After all, what guarantee is there that you will wake up in a friendly world, or that the AI that resurrects you is friendly? Should we be worried about this? What could we do to increase the likelihood that we'll find ourselves in a comfortable future?

I'm very confused about this myself. It seems to me, too, that there's a significant chance that QI is true, but there are objections, of course: the inventor of the mathematical universe hypothesis, Max Tegmark, disputes it himself in his 2014 book, arguing that "infinitely big" and "infinitely small" don't actually exist and QI will therefore not work. I have no idea if this makes sense or not. There are also attempts to rid physics of somewhat related ideas such as Boltzmann brains.

It's even more confusing since I'm not really interested in immortality myself. Normally I would be mildly enthusiastic about "ordinary" ways of life extension, but avoid things such as cryonics. With QI, I don't know. Now that this post is here, I hope people will share their thoughts.

Replies from: turchin↑ comment by turchin · 2016-01-25T14:43:15.082Z · LW(p) · GW(p)

If I am resurrected, I expect that the AI that does it will be friendly with a probability of 90 per cent. Why would a UFAI be interested in resurrecting me? Just to punish? Or to test its ideas about the end of the world in a simulation? In this case it will simulate me from my birth.

Anyway, signing up for cryonics is the best way to escape the eternal suffering of bad quantum immortality in a very old body.

I don't understand Tegmark's objection. We don't need an infinite world for BWI, just a very big one, big enough to have many copies of me.

BWI will help me to survive if I am a Boltzmann brain now. I will die the next moment, but in another world, where I am part of a real world, I will continue to exist, so the same logic as in BWI may be applied.

I still think that BWI is too speculative to be used in actual decision making. I also think that one's enthusiasm about death prevention may depend on the urgency of the situation: if there is a fire in a house, everybody in it will be very enthusiastic about saving their life.

Replies from: qmotus↑ comment by qmotus · 2016-01-25T20:48:09.773Z · LW(p) · GW(p)

If I will be resurrected, I expect that the AI that will do it will be with probability 90 per cent friendly. Why UFAI will be interested to resurrect me? Just to punish?

Maybe; there's a certain scenario, for instance, that for a time wasn't allowed to be mentioned on LW (not anymore, I suppose). In any case, the ratio of UFAIs to FAIs is also important; even if few UFAIs care about resurrecting you, they can be much more numerous than FAIs.

Or to test his ideas about the end of the world in a simulation? In this case it will simulate me from my birth.

This is actually what I would suppose to be most common. In which case we're back to the enormously prolonged old age scenario, I suppose.

I don't understand Tegmark's objection. We don't need an infinite world for BWI, just a very big one, big enough to have many copies of me.

Basically, I think you're right. Either Tegmark hasn't thought about this enough, or he believes that it would shrink the size of our big world enormously. Kudos to him for devoting a chapter of a popular science book to the subject, though.

I still think that BWI is too speculative to be used in actual decision making.

Why do you think that it's so speculative? MWI has a lot of support in LW and among people working on quantum foundations; cosmic inflation has basically universal acceptance among physicists (and alternatives, such as Steinhardt's ekpyrotic cosmology, have basically the same implications in this regard); string theory is very plausible; Tegmark's mathematical universe is what I would call speculative, but even it makes a lot of sense; and patternism, the other necessary ingredient, is again almost universally accepted on LW.

I also think that one's enthusiasm about death prevention may depend on the urgency of the situation: if there is a fire in a house, everybody in it will be very enthusiastic about saving their life.

Probably. But as humans we're basically built to strive to survive in a situation like that, meaning that their judgment is likely pretty severely impaired.

Replies from: turchin↑ comment by turchin · 2016-01-25T23:31:11.124Z · LW(p) · GW(p)

Now we can speak about RB freely. I mostly think that the mild version is true, that is, good people will be rewarded more, but there will be no punishment or suffering. I know some people who independently came to the idea that a future AI will reward them. As for me, I am not afraid of any version of RB, as I have done a lot to promote ideas of AI safety.

I still don't get Tegmark's idea; maybe I need to go to his book.

For example, we could live in a simulation with an afterlife, where suicide is punished. If we strongly believed in BWI, we could build a universal desire-fulfilment machine. Just connect any desired outcome to a bomb, so that it explodes if our goal is not reached. But I am sceptical about all beliefs in general, which is probably also a shared idea on LW )) I will not risk permanent injury or death if I have a chance to survive without it. But I could imagine a situation where I would change my mind, if the real danger outweighed my uncertainty about BWI.

For example, if someone has cancer, he may prefer an operation which has a 20 per cent chance of a positive outcome to chemo with a 40 per cent chance of a positive outcome but a slow and painful decline in case of failure. In this case BWI gives him a large chance of becoming completely illness free.

This thread is not about values, but I think that values exist only inside human beings. An abstract rational agent may have no values at all, because it may prove that any value is just a logical mistake.

comment by TikiB · 2017-02-26T23:01:51.256Z · LW(p) · GW(p)

Dr Jacques Mallah PhD has arrogantly been asserting for the last few years that quantum immortality is obviously wrong. This is a rebuttal to his argument; his primary argument can be found on https://arxiv.org/: "Many-Worlds Interpretations Can Not Imply ‘Quantum Immortality’".

For the record I don't necessarily believe quantum immortality is right or wrong but I think it could be.

His primary arguments come down to two points: the decrease of the 'measure' of consciousness after a likely death event such as the one proposed in quantum suicide, and the fact that we find ourselves in normal lifetimes, from which he suggests that if we were immortal then we would likely be much older than we are. I will point out flaws in these two arguments and also add some of my own arguments.

The measure argument:

The measure argument is THE primary argument Jacques uses, basically because he thinks that proponents of QI are uneducated and don't understand quantum mechanics or the wave function, despite the fact that Everett, the discoverer of the many worlds interpretation, believed in quantum immortality himself.

Basically, to summarise MWI: after every Planck second the world splits into many (possibly infinitely many) parallel universes, in each of which different events occur. However, in order that future times not be more likely than past times, the probability of an event is subdivided each time, so that every event in a chain of events makes the overall chain less likely.

Therefore the probability of anything staying the same over time (for instance a person staying alive) decreases with every split.

Basically he implies that in the example of quantum suicide (the idea that because there's always a world in which you survive, if you attempt suicide you will always fail) the reduction in measure caused by the deaths in some branches implies that there must be a chance of death from the first person's perspective, because the overall measure in the wave function has been decreased. This means the overall amount of consciousness has decreased.

To this I have several responses. Firstly, it's fairly trivial to come up with hypothetical scenarios where the measure (or amount) of consciousness decreases and yet, from a first person perspective, the first person still exists, provided that you accept that fusion of consciousness is possible.

The example I gave to Jacques was a Star Trek inspired idea whereby 2 identical Kirks are scanned by the transporter and their details averaged (so that causality from both is identical); the transporter then creates one dead Kirk and one alive Kirk on the planet or whatever. From the point of view of both original Kirks, the alive Kirk created on the planet fulfils both the data and causality requirements necessary to be the future of both of them; the dead Kirk is entirely irrelevant and could just as well be a rock. As you may have noticed, this is very similar to the setup in the quantum suicide experiment.

A further argument against his arrogant assumption is the fact that I exist at all. I am an absolutely tiny part of the wavefunction of the universe, and in most branches between the beginning and end of the universe I simply do not exist at all, suggesting that from a first person perspective I 'select' branches of the wavefunction in which I exist.

An analogy can also be drawn between spacetime and MWI splits. Take the example of sleep: in the vast majority of my future lightcone I don't exist. The ratio between empty space and my brain is terrible, and yet somehow I wake up every morning.

Finally it can be argued that from a first person perspective there is no 'measure' of consciousness in the universe merely the observer and the rest of the universe.

His other major argument against quantum immortality is his 'general argument against immortality'. This argument can be shown to be extremely weak quite easily. Basically, the idea is that if we were immortal we would expect to be in our immortal state right now; so, as an example, under quantum immortality we would expect to be very old.

This argument can be shown to be ridiculous very quickly:

Let's imagine, as millions of people across the world do, that death results in us going to heaven with thousands of angels, etc. Should we not expect to be in heaven right now? No: the rules in Christianity state that we must live a mortal life first.

I am not sure about Mallah, but my life occurs in a linear fashion. I did not just appear at age 40, nor have I experienced my age varying around the midpoint throughout my existence. Instead, I started at zero and then increased in age. So, in his paper, the reason why Max Tegmark is not the oldest person in existence has nothing whatsoever to do with his mortality; it is simply that he has only been alive X number of years.

Finally

In MWI all possible futures happen; the very fact that we talk about probabilities of one event or another happening is an artefact of our first-person perspective. It therefore seems reasonable that splits in which I don't exist have zero probability from my point of view.

This is further backed up by the fact that the passing of time may well be an artefact of our consciousness. There is no passing-of-time function in either quantum mechanics or relativity; all times are equally relevant independent of an observer (entropy increases over time, but there is no 'now' independent of an observer and no obvious reason for time to flow). So in what way is an event in which I don't exist in my future? Perhaps consciousness flows like a river around obstacles.

Expecting to experience nothing after a death event may be like expecting your consciousness to somehow leave spacetime, as nothing by definition has no space or time. Instead, it seems that you either experience time freezing or, as I find more likely, you simply find yourself in a branch with a high measure.

Two final points:

Given that the universe itself will likely one day end, how can consciousness persist for eternity? Quantum mechanics says that even the shape of spacetime fluctuates, permitting time travel with very low probability (Hawking, The Universe in a Nutshell). In principle there is a probability that any possible spacetime is connected to any other possible spacetime.

Assuming quantum immortality is true, should we expect quantum torment?

The most likely event is a medium-length period of aging, followed by a long period of minimal consciousness, followed by possible reincarnation through fusion with another consciousness.

Replies from: turchin↑ comment by turchin · 2017-02-27T19:43:16.581Z · LW(p) · GW(p)

I agree with your criticism of this article. Moreover, his first objection contradicts the second. Imagine the following model of QM. We have 1024 copies of a Harry Potter book. Each day half of the copies are destroyed. From Harry's point of view it doesn't matter, as long as at least one book exists. The number of books doesn't affect the plot of the book. In the same way, the number of my copies (measure) doesn't affect my consciousness.

But if we ask where the median copy of the book is, we will see that it is at the beginning of the pyramid, somewhere in the first or second day, when there were 1024 or 512 books. So if HP asks where he is, he will more probably find himself at the beginning of the story, not in the middle of eternity. This is where the idea of measure starts to work, and it explains exactly why a QI being will more probably find itself in its early time.
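The arithmetic of the halving model can be checked with a short sketch. It assumes that every surviving copy on every day counts as one equally weighted "place to find yourself", and that the process runs until a single copy is left; the function names are made up for illustration.

```python
def copies_per_day(initial_copies: int) -> list[int]:
    """Number of surviving copies on each day, halving until one copy is left."""
    counts = []
    n = initial_copies
    while n >= 1:
        counts.append(n)
        n //= 2
    return counts


def median_day(counts: list[int]) -> int:
    """Index of the day that contains the middle copy when all copy-days are pooled."""
    total = sum(counts)
    running = 0
    for day, count in enumerate(counts):
        running += count
        if 2 * running >= total:
            return day
    raise ValueError("counts must be non-empty")


counts = copies_per_day(1024)
total = sum(counts)                      # 1024 + 512 + ... + 1 = 2047 copy-days
first_two_days = counts[0] + counts[1]   # 1024 + 512 = 1536 copy-days

print("days:", len(counts))
print("total copy-days:", total)
print("median day index:", median_day(counts))
print("share in first two days:", first_two_days / total)
```

Under these assumptions, day 0 alone holds 1024 of the 2047 copy-days, so the middle copy sits on the very first day, and the first two days together hold about three quarters of the total.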

There are other possible explanations of why I am not so old. One is that I am a high-level avatar in a computer game, who is very old but chooses to forget his age for each round of the game; but here we stack QI with the simulation argument.

Another explanation is that asking about my age is not a random event, since I am already surprised that I am so early.

Replies from: TikiB↑ comment by TikiB · 2017-02-27T20:48:04.953Z · LW(p) · GW(p)

An observer moment is not an average of all times at all, but is instead (likely) a high-measure future moment relative to the previous moment. Consciousness is experienced as a flow because our brain compares the current experience to the previous one, making us perceive that one followed the other.

The place where measure really comes in is the first moment: we exist on this planet because our first experience was on this planet. Because the first moment can be at any time (it doesn't have a previous moment), it will likely be in a location with a high measure of consciousness, which is why we are on Earth and not a Boltzmann brain near the beginning of the universe, as Mallah proposes.

Furthermore, the whole argument can be turned on its head: if we expect to be dead for the vast majority of the future, as Mallah proposes, why are we not already dead? I am sure Mallah's argument would be that being dead doesn't involve any moments and therefore cannot be averaged. The problem for Mallah is that this is precisely my point: non-existence has no location in space or time.

Replies from: turchin↑ comment by turchin · 2017-02-27T21:16:34.527Z · LW(p) · GW(p)

BTW, if we are able to explain consciousness as a stream of similar observer moments, we don't need reality at all. The existence of Boltzmann brains alone will be enough. Our lives will be just lines in the space of all possible observer moments.

Replies from: TikiB↑ comment by TikiB · 2017-02-27T21:59:07.315Z · LW(p) · GW(p)

Of course it's entirely possible to exist as a Boltzmann brain, and if we do in fact exist for eternity, as MWI seems to imply, then some of that time will be as a Boltzmann brain.

The point is that Boltzmann brains have low measure, which is why we aren't one now.

Replies from: turchin↑ comment by turchin · 2017-02-27T22:07:22.525Z · LW(p) · GW(p)

If nothing except BBs exists, their measure doesn't matter. I don't say I believe in it, but it is an interesting theory to explore. It is similar to Dust theory. I hope to write an article about it one day when I finish other articles.

Replies from: TikiB↑ comment by TikiB · 2017-02-27T22:17:03.350Z · LW(p) · GW(p)

Lubos Motl already discussed this in this blog. If we were Boltzmann brains, we wouldn't expect to see any consistency in physical laws; moments would happen at random. Of course there would be a very low-measure subset of Boltzmann brains that perceived our physical laws to hold, but it's far more likely that the physical laws exist.

Replies from: turchin↑ comment by turchin · 2017-02-27T22:44:01.007Z · LW(p) · GW(p)

There was a recent article which showed a flaw in this reasoning, https://arxiv.org/abs/1702.00850, and I agree with the flaw: a BB can't form coherent opinions about the randomness of its environment, so the fact that we think it is not random doesn't prove that it is not random.

But if we are BBs, we are in fact some consecutive lines of BBs, which could be called similar observer moments. Such similarity excludes randomness, but it is a property of a line.

Simplified example: imagine there are infinitely many random numbers. Among these numbers there exists a line governed by some rule, like 1, 10, 100, 1000, 10 000, etc. Such a line will always find its next number somewhere inside the pile of numbers (this is the so-called Dust theory in a nutshell). If each number describes an observer-moment, then among all BBs there will be sequences of observer-moments which correspond to some rule.
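A toy version of this pile-of-numbers picture can be sketched in code. It is only an illustration under loud assumptions: the pile here is finite (so the line eventually breaks, which it would not in an infinite pile), the rule is "multiply by 10", and the name `follow_line` is hypothetical.

```python
import random


def follow_line(pile: set[int], start: int = 1, factor: int = 10) -> list[int]:
    """Follow the rule value -> value * factor for as long as the next value is in the pile."""
    if start < 1 or factor < 2:
        raise ValueError("start must be >= 1 and factor >= 2 so the line keeps growing")
    line = []
    value = start
    while value in pile:
        line.append(value)
        value *= factor
    return line


# A finite, seeded stand-in for the "infinite pile of random numbers".
rng = random.Random(0)
pile = {rng.randrange(1, 20_000) for _ in range(50_000)}

# The rule-governed line is picked out of the unordered pile after the fact;
# nothing in the way the pile was generated knows about the rule.
print(follow_line(pile))
```

The larger the pile, the longer the line that can be traced through it; in the infinite case the next element is always available, which is the point of the example.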

Moreover, for any crazy BB there will be a line which explains it. As a result we get a world almost similar to the normal one.

The idea needs a longer explanation, so I hope for understanding here; I am not trying to prove anything.

Replies from: entirelyuseless, TikiB↑ comment by entirelyuseless · 2017-02-28T03:26:09.122Z · LW(p) · GW(p)

I understand what you are saying. But I think you cannot reasonably speak of BBs in that way. I think BB is just a skeptical scenario, that is, a situation where everything we believe is false or might be false. And BB has the same problems that all situations like that have. Consider a different skeptical scenario: a brain in a vat.

Suppose you ask the person who is a brain in a vat, "Are you a brain in a vat?" He will say no, and he will be right. Because when he says "brain" he is referring to things in his simulated world, and when he says "vat," he is referring to things in his simulated world. And he is not a simulated brain in a simulated vat, even though those are the only kind he can talk about.

He is a brain in a vat only from an overarching viewpoint which he does not actually have: if you want to ask him about it, you should say, "Is it possible that you are something like a brain in something like a vat?" And then he will say, "Of course, anything is possible with such vague qualifications. But I am not the kind of brain I know about, in the kind of vat I know about." And he will be right.

The same thing is true about BBs. If you look at BBs in the world you are talking about, moments of them say things like "I will wake up tomorrow." And even though according to our viewpoint they are just moments that will cease to be, they are talking about the continuous series that you called a normal life. So they are right that they will wake up, just like the brain in the vat is right when it says "I am not a brain in a vat." So they say "We are not BBs", and they are right. They are BBs only from an overarching point of view that they do not have.

So what that means for us: we are definitely not BBs. But there could be some overarching metaphysical point of view, which we do not actually have, where we would be something like BBs (like the brain in the vat says it might be something like a brain in a vat.)

Replies from: turchin↑ comment by TikiB · 2017-02-28T00:08:35.352Z · LW(p) · GW(p)

Yeah, Carroll has rather an obsession with Boltzmann brains. Both sides have valid arguments: if we were living in a universe dominated by Boltzmann brains, random observations would be more likely, but no amount of measuring would prove that you weren't a Boltzmann brain.

Of course Carroll repeatedly tries to use this to argue against a universe dominated by Boltzmann brains, but it does no such thing. All it means is that he WANTS the universe not to be dominated by Boltzmann brains, because if it is, then his life's work was a waste of time :P

Replies from: TikiB