
Editability and findability --> higher quality over time

Editability

Code being easier to find and easier to edit, for example,

if it's in the same live environment where you're working, or if it's a simple hotkey away, or an alt-tab away to a config file which updates your settings without having to restart,

makes it more likely to be edited, more subject to feedback loop dynamics.
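A minimal sketch of the "config file that updates without a restart" case, assuming a JSON settings file. The class name `ReloadingConfig` and the file layout are made up for illustration, not taken from any particular tool:

```python
import json
import os


class ReloadingConfig:
    """Re-reads a JSON config file whenever its modification time changes."""

    def __init__(self, path):
        self.path = path
        self._mtime = None  # modification time seen at the last read
        self._data = {}

    def get(self, key, default=None):
        mtime = os.stat(self.path).st_mtime_ns
        if mtime != self._mtime:  # file was edited since the last read
            with open(self.path, encoding="utf-8") as f:
                self._data = json.load(f)
            self._mtime = mtime
        return self._data.get(key, default)
```

Every `get` picks up whatever was last saved, so the edit loop is just: alt-tab, change a value, save, alt-tab back.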

Same applies to writing, or anything where you have connected objects that influence each other, where the "influencer node" is editable and visible.

configs : program layout / behavior

informal rules of your relationship with a friend : the dynamics of the relationship

layout of your desk : the way you work

underlying philosophy of ideas : writing ideas

ideas "going viral" in social media : people discussing them (think about Luigi Mangione triggering notions of killing people you don't like (this is bad!! and it's done under the memetic shield of "big corpo bad"), or Elon x Trump's doge thing having all sorts of people discussing efficiency of organizations and bureaucracy (this is amazing) )

(not sure if the last one is a good example)

Imagine if when writing this Quick Take:tm:, I had a side panel that on every keystroke, pulled up related paragraphs from all my existing writings!

I can see past writings, which is cool, but I can also edit them way more easily (assuming a "jump to" feature). In the long term this yields many more edits, and a more polished and readable total volume of work.
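A rough sketch of what that side panel could compute on each keystroke, assuming plain word overlap is a good enough stand-in for "related" (a real version would probably want embeddings or proper search). The function names are hypothetical:

```python
import re


def words(text):
    """Lowercased set of words longer than three characters."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if len(w) > 3}


def related_paragraphs(draft, archive, top_n=3):
    """Rank archived paragraphs by how many words they share with the draft."""
    draft_words = words(draft)
    scored = []
    for index, paragraph in enumerate(archive):
        overlap = len(draft_words & words(paragraph))
        if overlap:
            scored.append((overlap, index, paragraph))
    # Most shared words first; earlier paragraphs win ties.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(index, paragraph) for _, index, paragraph in scored[:top_n]]
```

Returning the index alongside the text is what would make a "jump to" feature cheap to add.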

Findability

If you can easily see the contents of something and go, "wait this is dumb", then even if it's "far away" (like, you have to find it in the browser, do mouse clicks and scrolls), you'll still do it.

What actually determined that you edited it is that the threshold for loading its contents into your mind had been lowered. When you load it, the opinion is instantly triggered.

It seems easier to fit an analogy to an image than to generate an image starting with a precise analogy (meaning a detailed prompt).

Maybe because an image is in a higher-dimensional space and you're projecting onto the lower-dimensional space of words.

(take this analogy loosely idk any linear algebra)

Claude: "It's similar to how it's easier to recognize a face than to draw one from memory!"

(what led to this:)

I've been trying to create visual analogies of concepts/ideas, in order to compress them and I noticed how hard it is. It's hard to describe images, and image generators have trouble sticking to descriptions (unless you pay a lot per image).

Then I started instead, to just caption existing images, to create sensible analogies. I found this way easier to do, and it gives good enough representations of the ideas.

(some people's solution to this is "just train a bigger model" and I'd say "lol")

(Note, images are high-dimensional not in terms of pixels but in concept space.

You have color, relationships of shared/contrasting colors between the objects (and replace "color" with any other trait that imply relationships among objects), the way things are positioned, lighting, textures, etc. etc.

Sure you can treat them all as configurations of pixel space but I just don't think this is a correct framing (too low of an abstraction))

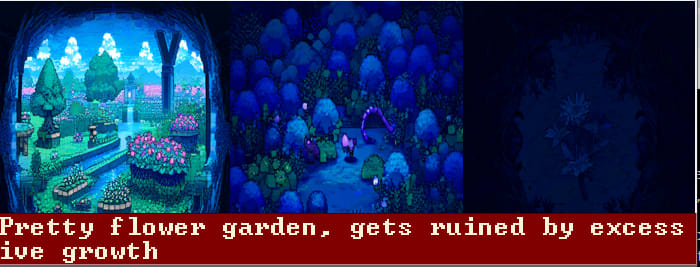

(specific example that triggered this idea and this post:)

pictured: "algorithmic carcinogenesis" idea, talked about in this post https://www.conjecture.dev/research/conjecture-a-roadmap-for-cognitive-software-and-a-humanist-future-of-ai

(generated with NovelAI)

I was initially trying to do more precise and detailed analogies, like, sloppy computers/digital things spreading too fast like cancer, killing and overcrowding existing beauty, where the existing beauty is of the same "type" -- meaning also computers/digital things -- but just more organized or less "cancerous" (somehow). It wasn't working.

On our "interfaces" to the world...

Prelude

This is a very exploratory post.

It underlies my thinking in this post: https://www.lesswrong.com/posts/wQ3bBgE8LZEmedFWo/root-node-of-my-posts and it's hard to put into words, but I'll make a first attempt here. I also expressed some of it here, https://www.lesswrong.com/posts/MCBQ5B5TnDn9edAEa/atillayasar-s-shortform?commentId=YNGb5QNYcXzDPMc5z, in the part about deeper mental models / information bottlenecks.

It's also why I've been spending over a year making a GUI that can encompass as much of my activities on the computer as possible -- which is most of my entire life.

(The beauty of coding personal tooling is that you get to construct your own slice of reality --- which can be trivial or extremely profound depending on your abilities and on whether controlling your slice of reality even matters to begin with -- and the digital world is so much more malleable than the physical world.)

Start

Our interface to the world and our minds is basically our memory, which is not a static lookup, it changes as you spend time "remembering" (or reconstructing your memories and ideas, which maybe shouldn't be seen as "remembering" exactly).

Tools extend the available "surface area" of our interface, meaning our memory, because the tool is still one "item" in your mind but it stores a lot more knowledge (and/or references to knowledge) than if you had to store the unpacked version.

When you replace "all programming knowledge known to man" with "skill at Googling", you pay for storage space with runtime compute.

(And sometimes you actually want to buy runtime compute by spending storage, meaning, you practice with some topic so that you can immediately recall things and actually think about things directly instead of every thought being behind a search query, which would be like 100x slower or something and totally impractical)
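The same trade shows up in code as caching. A small sketch, where `slow_lookup` is a made-up stand-in for "search for it every time" and `practiced_lookup` is the "I practiced this, so I just remember it" version:

```python
from functools import lru_cache


def slow_lookup(topic):
    """Stand-in for a search query: nothing stored, paid for at runtime."""
    return f"notes on {topic}"


@lru_cache(maxsize=None)
def practiced_lookup(topic):
    """Same answer, but kept in memory after the first call (storage buys speed)."""
    return slow_lookup(topic)
```

`practiced_lookup.cache_info()` reports hits and misses, which is a cheap way to see how often the stored copy actually gets used.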

Holistic worldview means fewer root nodes

A very holistic worldview basically means you have fewer items of that initial interface, like, anything you wanna think about passes through a smaller number of initial nodes. This probably makes it easier to think about things, and if this worldview actually works and is consistent, you can iterate on it and refine it over years and years, which is much harder if you have like 100 scattered thoughts and ways of thinking, because they won't be revisited as often.

(I wonder if this is the holy grail of philosophy)

(But of course it only works if it's not costing you too much "runtime compute", like, if you have a worldview that is arguably holistic and consistent, but every time you think about some topic you have to spend 5 minutes explaining how exactly it maps to your worldview, and it's better to just directly think about that topic instead, then it would be worthless.)

Examples

Having a longtime best friend/wife/husband/partner who you always talk to about whatever you're thinking of, is kind of like having a node that encompasses most of your worldview. So you can iterate on it.

Something like asking "what would Jesus do" is like that too, or any religious figure or role model. (Every time you do this, you actually understand Jesus better -- at least your model and other people's model of him -- but you also get better at usefully using this framing to inform your decisions (or your heart), it's not literally about doing what Jesus would do, I think.)

Maybe any general habit someone has that they consistently use to deal with problems, is like this. A thing which the majority of one's thoughts pass through. ( <-- wait that's weird lol )

This post got weird

Somehow I got a different definition than the "interface" idea I started out with.

Maybe the "node that most thoughts pass through" definition makes more sense than interfaces anyway, because we're talking about a cybernetic-like system, with constant feedback loops and no clear start or end. And the node can be anywhere within the system and have any arbitrary "visit frequency", like maybe your deep conversation with your best friend or mentor is only once every 2 months.

(I guess the "initial interface" framing is a special case of this more general version)

Anyway this is a good time to end it.

Just because X describes Y in a high level abstract way, doesn't mean studying X is the best way of understanding Y.

Often, the best way is to simply study Y, and studying X just makes you sound smarter when talking about Y.

pointless abstractions: cybernetics and OODA loop

This is based on my experience trying to learn stuff about cybernetics, in order to understand GUI tool design for personal use, and to understand the feedback loop that roughly looks like, build -> use -> rethink -> let it help you rethink -> rebuild, where me and any LLM instance I talk to (via the GUI) are part of the cybernetic system. Whenever I "loaded cybernetics concepts" into my mind and tried to view GUI design from that perspective, I was just spending a bunch of effort mapping the abstract ideas to concrete things, and then being like, "ok but so what?".

A similar thing happened while looking into the OODA loop, though at least its Wiki page has a nice little flowchart, and it's much more concrete than cybernetics. And you can draw more concrete inspiration about GUI design by thinking about fighter pilot interfaces.

It's also because I often see people using abstract reasoning, and whenever I dig into what they're actually saying, it doesn't make that much sense. Also because of personal experience, where things become way clearer and easier to think about after phrasing them in very concrete and basic ways.

Jocko Willink talking about OODA loops, paraphrase

The F-86 wasn't as good at individual actions, but it could transition between them faster than the MiG-15.

This is analogous to how end-to-end algorithms, LLM agents, and things optimized for the tech demo are "impressive single actions", but not as good for long term tasks.

Two tools is a lot more complex than one, not just +1 or *2

When you have two tools, you have to think about their differences, or about specifically what they each let you do, and then pattern match to your current problem, before using one. With one tool, you don't have to understand the shape of its contents at all, because it's the only tool and you already know you want to use it.

Concrete example, doing groceries

Let's compare the amount of information you need to remember with 1 vs 2 tools. You want food (task), you're going to get it from a supermarket (tool).

With 1 available supermarket:

You want food. Supermarket has food. Done. The tool only requires 1 trait: "has food".

With 2 available supermarkets:

Market A has <list of products> (or a "vibe", which is a proxy for a list of products), market B has <list of products/vibe>, you compare the specific food you want to the products of each market, then make a decision.

Each tool's complexity grew from "has food" to "list of product types", and your own "food" requirement grew to "specific food". So the total goes from 1 to 2 * (complexity of a single comparison, meaning tool complexity + requirement complexity) + the difficulty of comparing multiple tools.

And after two, the complexity increase gets smaller and smaller, for the obvious reason that now you're just adding 1 tool straightforwardly to the existing list of tools. But also because you're already comparing multiple tools to each other, which you weren't doing with one.

Twitter doesn't incentivize truth-seeking

Twitter is designed for writing things off the top of your head, and things that others will share or reply to. There are almost no mechanisms to reward good ideas, to punish bad ones, nor for incentivizing the consistency of your views, nor any mechanism for even seeing whether someone updates their beliefs, or whether a comment pointed out that they're wrong.

(The fact that there are comments is really really good, and it's part of what makes Twitter so much better than mainstream media. Community Notes is great too.)

The solution to Twitter sucking, is not to follow different people, and DEFINITELY not to correct every wrong statement (oops), it's to just leave. Even smart people, people who are way smarter and more interesting and knowledgeable and funny than me, simply don't care that much about their posts. If a post is thought-provoking, you can't even do anything with that fact, because nothing about the website is designed for deeper conversations. Though I've had a couple of nice moments where I went deep into a topic with someone in the replies.

Shortforms are better

The above thing is also a danger with Shortforms, but to a lesser extent, because things are easier to find, and it's much more likely that I'll see something I've written, see that I'm wrong, and delete it or edit it. Posts on Twitter are not editable, they're harder to find, there's no preview-on-hover, and there is no hyperlinked text.

When chatting with an LLM, do you wonder what its purpose is in the responses it gives? I'm pretty sure it's "predict a plausible next token", but I don't know what would make me change that belief.

I think "it has a simulated purpose, but whether it has an actual purpose is not relevant for interacting with it".

My intuition is that the token predictor doesn't have a purpose, that it's just answering, "what would this chatbot that I am simulating, respond with?"

For the chatbot character (the Simulacrum) it's, "What would a helpful chatbot want in this situation?" It behaves as if its purpose is to be helpful and harmless (or whatever personality it was instilled with).

(I'm assuming that as part of making a prediction, it is building (and/or using) models of things, which is a strong statement and idk how to argue for it)

I think framing it as "predicting the next token" is similar to explaining a rock's behavior when rolling as, "obeying the laws of physics". Like, it's a lower-than-useful level of abstraction. It's easier to predict the rock's behavior via things it's bouncing off of, its direction, speed, mass, etc.

Or put another way, "sure it's predicting the next token, but how is it actually doing that? what does that mean?". A straightforward way to predict the next token is to actually understand what it means to be a helpful chatbot in this conversation (which includes understanding the world, human psychology, etc.) and completing whatever sentence is currently being written, given that understanding.

(There's another angle that makes this very confusing: whether RLHF fundamentally changes the model or not. Does it turn it from a single token predictor to a multi-token response predictor? Also is it possible that the base model already has goals beyond predicting 1 token? Maybe the way it's trained somehow makes it useful for it to have goals.)

There have been a number of debates (which I can't easily search on, which is sad) about whether speech is an action (intended to bring about a consequence) or a truth-communication or truth-seeking (both imperfect, of course) mechanism.

The immediate reason is, we just enjoy talking to people. Similar to "we eat because we enjoy food/dislike being hungry". Biologically, hunger developed for many low-level processes like our muscles needing glycogen, but subjectively, the cause of eating is some emotion.

I think asking what speech really is at some deeper level doesn't make sense. Or at least it should recognize that why individual people speak, and why speech developed in humans, are separate topics, with (I'm guessing) very small intersections.

personality( ground_truth ) --> stated_truth

Or, what someone says is a subset of what they're really thinking, transformed by their personality.

(personality plus intentions, context, emotions, etc.)

(You can tell I'm not a mathematician because I didn't express this in latex xD but I feel like there's a very elegant Linear Algebra description where, ground truth is a high dimensional object, personality transforms to make it low-dimensional enough to communicate, and changes its "angle" (?) / vibe so it fits their goals better)

So, if you know someone's personality, meaning, what kind of way they reshape a given ground truth, you can infer things about the ground truth.
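One loose way to write this down, purely as an illustration. The symbols $g$, $P$, $s$, $n$ and $k$ are assumptions introduced here, and treating personality as a single linear map is a big simplification:

```latex
s = P\,g, \qquad g \in \mathbb{R}^{n}, \quad P \in \mathbb{R}^{k \times n}, \quad k \ll n
```

Here $g$ is the ground truth, $P$ is the personality, and $s$ is the stated truth. Because $k < n$, knowing $P$ and $s$ doesn't pin down $g$ exactly, it only narrows it to the set $\{\, g : P g = s \,\}$. That matches the claim above: you can infer things about the ground truth, not recover it.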

Anecdote

I feel like everyone does this implicitly, it's one of those social skills I struggle with. I always thought it was a flaw of other people, to not simply take things at face value, but to think at the level of "why do they say this", "this is just because they want <x>", while I'm always thinking about "no but what did they literally say? is it true or not?".

It's frustrating because usually, the underlying social/personality context completely dominates what someone says, like, 95% of the time it literally doesn't fucking matter one bit what someone is saying at the object level. And maybe, the fact that humans have evolved to think in this way, is evidence that in fact, 95% of what people say doesn't matter and shouldn't matter object-level, that the only thing that matters is implications about underlying personality and intentions.

My recollection of sitting through "adult conversations" of family and relative visits for probably many hundreds of hours is, "I remember almost zero things that anybody has ever talked about". I guess the point is that they're talking, not what it's about.

Practical

This reframing makes it easier to handle social situations. I can ask myself questions like, "what is the point of this social interaction?", "why is this person saying this to me?"

Often the answer is, "they just wanna vibe, and this is an excuse to do so".

this shortform: https://www.lesswrong.com/posts/MCBQ5B5TnDn9edAEa/atillayasar-s-shortform?commentId=QCmaJxDHz2fygbLgj was spawned from what was initially a side-note in the above one.

Feature-level thinking is natural, yet deeply wrong

In this Shortform I talked about why interactive systems that include AI are more powerful than end-to-end AI-powered algorithms. But it misses something big, a concept where actually most of my surprising ideas about UI design have come from.

The raw data underneath any given UI, and the ability to define functions that "do things at certain points" to that data, is very elementary relative to the richness of that UI, and the things that it lets you do.

Something mundane like "article -> list of (index, string)-pairs representing paragraphs" can become, "menu of clickable chapters sitting beside the main editor window, that take you to that section". But (depending on your language/utils/environment), with only 1-2 lines of code you can add a "right click to regenerate this section".
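A sketch of how small the underlying data and functions can be, assuming paragraphs are separated by blank lines. All three function names are hypothetical, and `rewrite` is whatever you'd hook up to the right click (an AI call, say):

```python
def split_paragraphs(article):
    """Turn an article into (index, paragraph) pairs, split on blank lines."""
    chunks = [chunk.strip() for chunk in article.split("\n\n")]
    return list(enumerate(chunk for chunk in chunks if chunk))


def chapter_menu(pairs, width=30):
    """One menu entry per paragraph: its index plus the start of its first line."""
    return [(index, text.splitlines()[0][:width]) for index, text in pairs]


def regenerate_section(pairs, index, rewrite):
    """Replace one paragraph using `rewrite`, a caller-supplied function."""
    return [(i, rewrite(text) if i == index else text) for i, text in pairs]
```

The menu and the regenerate action both fall out of the same list of pairs, which is why the second feature costs so little once the first exists.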

The fact that adding a "right click to regenerate this section" is merely 1% of the codebase, but like a doubling or 1.5x increase in the amount of things you can qualitatively do in the UI, is not a coincidence, it happens over and over again.

In the correct perspective, an AI that can kind of almost do everything is still a very large distance from an AI that can actually do everything. This perspective is not natural, it is not the feature-level perspective.

Ctrl-f transforms Notepad++

It turns each substring of a given thing you typed, into a potential index, with your memory acting as an interface to that index. Substring search is a very simple feature in terms of pure code, and a little more complex in terms of UI but not that complex, not complex relative to how deeply it transforms a Notepad app.

It creates emergent things, like, you can use your own tags and keywords inside notes that you know you'll be able to ctrl-f to easily. Not only is the tagging system not explicitly programmed, it's not even a good idea to program it, because it would make the tool more clunky and bloated.
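To show how little code sits underneath, here is a minimal sketch of the search itself (not Notepad++'s actual implementation, just case-insensitive substring matching per line):

```python
def find_all(notes, query):
    """Return (line_number, line) for every line containing `query`, ignoring case."""
    needle = query.lower()
    return [
        (number, line)
        for number, line in enumerate(notes.splitlines(), start=1)
        if needle in line.lower()
    ]
```

Nothing in it knows about tags. If you happen to prefix some lines with `#idea`, then `find_all(notes, "#idea")` is your tagging system.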

Cognitive workspaces, not end-to-end algorithms

It's summer 2020 and gpt3 is superhuman in many ways and probably could take over the world but only when its alien goo flows through the right substrate, channeled into the right alchemical chambers, then forked and rejoined and reshaped in the right way, but somehow nobody can build this.

Robustness via pauseability

Normal agent-systems (like what you design with LangChain) are brittle in that you're never sure if they'll do the job. A more robust system is one where you can stop execution when it's going wrong, and edit a previous output, or edit the code that generated it, and retry.
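A minimal sketch of what "pauseable" could mean in code, assuming each step is just a function from the previous output to the next one. `PausablePipeline` is a made-up name, not something from LangChain or any other library:

```python
class PausablePipeline:
    """Runs steps one at a time and keeps every intermediate output editable."""

    def __init__(self, steps, initial):
        self.steps = list(steps)  # each step: function(previous_output) -> output
        self.outputs = [initial]  # outputs[i] is the input to steps[i]

    def step(self):
        """Run the next step; return False when there is nothing left to run."""
        position = len(self.outputs) - 1
        if position >= len(self.steps):
            return False
        self.outputs.append(self.steps[position](self.outputs[-1]))
        return True

    def edit(self, position, value):
        """Overwrite an earlier output and discard everything computed after it."""
        self.outputs = self.outputs[:position] + [value]

    def run(self):
        """Run all remaining steps and return the final output."""
        while self.step():
            pass
        return self.outputs[-1]
```

You call `step()` until something looks wrong, fix it with `edit(...)` (or swap out an entry in `steps`), then `run()` the rest.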

Neat things like the $do command of Conjecture's Tactics don't solve this. They let the system they're implemented in speed up any given cognitive task, simply by making it less likely that you have to edit and retry the steps along the way. $do turns the task of "prompt + object shape --> object" into a single "step" that runs flawlessly (except rn since it's bugged), rather than a bunch of things you have to do manually.

The same goes for how strong of a model you use. Increasing model strength should be (given a good surrounding system), a trade of token cost for retry count and tinker time and number of intermediate steps, as opposed to enabling qualitatively different tasks.

(At least not at every model upgrade step. There are (probably) some things that you can't do with GPT-2, that you can do with GPT-4, no matter how powerful your UI and workflows are.)

A cool way of seeing it is that you turn the time dimension, which is a single point (0-D) in end-to-end algorithms, into a 1-D line, where each point along the path contains a pocket dimension you can go into (meaning inspect, pause, edit, retry).

Practical example -- feel free to skip -- messily written somewhat obvious point

I'll start with a simple task and a naive solution, then show the problems that come up, and what they imply about the engineering challenges involved in making a "cognitive workspace".

I personally learned these ideas by doing exactly this, by trying to build apps that help me, because *surely*, a general intelligence that is basically superhuman (I thought this even with gpt3) can completely transform my life and the world! Right?! No.

I believe that such problems are fundamental to tasks where you're trying to get AI to help you, that that's why an interactive UI-based approach is the right one, as opposed to an end-to-end like approach.

Task: you have an article but none of your paragraphs have titles (shoutout Paul Graham) and you want AI to give it titles.

1)

Strong model solves it one shot, returning the full article.

1 -- problem)

Okay, fine, that can work.

But what if it's a really long article and you don't want the AI wasting 95% of its tokens on regurgitating paragraphs, or you're very particular about your titles and you don't wanna retry more than 5 times.

2)

You build a simple script that splits it into paragraphs, prompts each instance of gpt3 to give a title, recombines everything and outputs your full article, and even allows a variable where you can put paragraph indices that the gpt3-caller will ignore.

It solves paragraph regurgitation, you selectively regenerate titles you don't like yet, so iteration cost is much lower, and with 2-3 lines of code you can even have custom prompts for certain paragraphs.
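A sketch of that script, with the model call swapped out for a stub so it runs on its own. `stub_title` is a hypothetical placeholder (it just takes the first three words); in practice you'd pass your own `make_title` that calls whatever model you use:

```python
def stub_title(paragraph, prompt):
    """Hypothetical stand-in for a model call: uses the first three words."""
    return " ".join(paragraph.split()[:3]).rstrip(".,;:")


def title_article(article, make_title=stub_title, ignore=(), custom_prompts=None,
                  default_prompt="Give this paragraph a short title."):
    """Title each paragraph separately, skipping indices listed in `ignore`."""
    custom_prompts = custom_prompts or {}
    paragraphs = [p.strip() for p in article.split("\n\n") if p.strip()]
    titled = []
    for index, paragraph in enumerate(paragraphs):
        if index in ignore:
            titled.append(paragraph)  # left exactly as written
            continue
        prompt = custom_prompts.get(index, default_prompt)
        titled.append(f"{make_title(paragraph, prompt)}\n{paragraph}")
    return "\n\n".join(titled)
```

Regenerating only the titles you don't like is then a matter of putting every other index in `ignore`.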

2 -- problem)

You actually had to write the script, for one, you've already created a scaffold for the alien superintelligence. (Even iteratively sampling from the token distribution and appending it to the old context is a scaffold around an alien mind -- but anyway)

And every time you load up a new article you need to manually write indices of paragraphs to ignore, and any other little parameters you've added to the script. Maybe you want a whole UI for it that contains the text, where you can toggle which ones are ignored with a mouse or keyboard event, and takes you to paragraph-specific custom prompts. Or you create a mini scripting language where you can maybe add an $ignore$ line, and a $custom prompt| <prompt>$ line which the parser stores, uses for AI calls, and deletes from the final article when it outputs it with the new titles.
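The mini scripting language could start out as small as this. A sketch, assuming each directive sits on its own line inside the paragraph it applies to (that placement rule and the name `parse_paragraph` are assumptions for illustration):

```python
import re

# Matches "$ignore$" or "$custom prompt| <prompt>$" when it is the whole line.
DIRECTIVE = re.compile(r"^\$(ignore|custom prompt)(?:\|\s*(.*?))?\$$")


def parse_paragraph(paragraph):
    """Split a paragraph into (clean_text, settings) by pulling out directive lines."""
    settings = {"ignore": False, "prompt": None}
    kept = []
    for line in paragraph.splitlines():
        match = DIRECTIVE.match(line.strip())
        if not match:
            kept.append(line)  # ordinary text stays in the article
        elif match.group(1) == "ignore":
            settings["ignore"] = True
        else:
            settings["prompt"] = match.group(2)
    return "\n".join(kept), settings
```

The clean text is what goes back into the final article, and the settings are what the AI-calling code looks at.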

And then maybe you want to analyze the effects of certain prompts, or store outputs across retries -- etc.

3 and beyond)

This just goes on and on, as the scope of the task expands.

Say you're not titling paragraphs, but doing something of the shape, "I write something, code turns it into something, AI helps with some of the parts, code turns back into the format it was originally in" -- which a lot of tasks are described by, including writing code. Now you have a way broader thing that a script may not be enough for, and that even a paragraph-titler-UI is not enough for.

And why shouldn't the task scope expand? We want AI to solve all problems and create utopia, why can't they? They're smarter than me and almost everyone I know (most of the time anyway) they should be like 1000x more useful. Even if they're not *that* smart, they're still 100x faster at reading and writing, and ~~1 million x more knowledgeable.

The task scope implied by AIs that are about as smart as humans (roughly true of gpt3, definitely true of Claude 3.5), is vastly smaller than their actual task scope. I think it's because we have skill issues. Not because we need to build 100 billion dollar compute clusters powered by nuclear reactors. (some say it's because AIs don't have "real" intelligence, but I don't wanna get into that -- because it's just dumb)

programmable UI solves UI design

The space of cognitive task types is really really big, and so is the space of possible UIs that you can build to help you do those tasks.

You can't figure out a-priori what things should look like. However, if you have something that's easily reconfigurable (programmatically reconfigurable -- because you have way more power as a code-writer than as a clicker-of-UI-elements), you can iterate on its design while using it.

This relates to gradient descent, and to decentralized computation, to the thing that gives free markets their power.

It's roughly that instead of solving something directly, you can create a system that is reconfigurable, which then reaches a solution over time as it gets used and edited bit by bit across many iterations.

A given UI tool that lets AI help you do tasks, is a solution which we are unable to find. A meta-program that lets you experiment with UIs, is the system that lets you find a solution over time.

(In cybernetic terms, the system consists of the user, the program itself, any UIs inside of it that you create, and any AI instances that you consult to help you think -- and now LessWrong is involved too because this is helping me think)

This "meta-UI" being easy to program and understand is not a given at all. In my experience almost all the effort goes into making functions findable and easy to understand, making data possible to analyze (since, for anything where str(data) is larger than the terminal window (+ some manual scrolling), you can't simply print(data) to see what it looks like), and into making behaviors (like hotkey bindings) and function parameters editable on-the-fly.
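Two tiny sketches of what that effort looks like at its most basic: a `preview` that describes data instead of dumping it, and a hotkey table you can rebind while the program is running. Both are hypothetical illustrations, not the actual tool described here:

```python
def preview(data, max_chars=200, max_items=5):
    """Short description of `data` for when str(data) would flood the terminal."""
    if isinstance(data, dict):
        head = list(data.items())[:max_items]
        body = ", ".join(f"{key!r}: {type(value).__name__}" for key, value in head)
        return f"dict with {len(data)} keys {{{body}}}"
    if isinstance(data, (list, tuple)):
        kinds = sorted({type(item).__name__ for item in data})
        return f"{type(data).__name__} of {len(data)} items, types: {kinds}"
    text = str(data)
    return text if len(text) <= max_chars else text[:max_chars] + f"... ({len(text)} chars)"


hotkeys = {}  # key combo -> function, editable while the program runs


def bind(combo, function):
    """Attach (or re-attach) a function to a key combo."""
    hotkeys[combo] = function


def press(combo, *args):
    """Simulate a key press by calling whatever is currently bound."""
    return hotkeys[combo](*args)
```

Because `hotkeys` is just a dict, moving a behavior to a different key or replacing it is one `bind` call, with no restart.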

Because idk how I'm gonna use something when I start writing it, and I don't wanna think about it that hard to begin with. But IF it turns out to be neat, I want to be able to iterate on it or put it on a different hotkey or combine it with some existing event. Or if I don't need it yet, I need to be able to find it later on. And then the more broad the tool gets, the more things I'm adding to it, the messier it gets, so internal search and inspection tools became more and more important -- which I also don't know a-priori how to design.

When is philosophy useful?

Meta

This post is useful to me because 1) it helped me think more clearly about whether and how exactly philosophy is useful, 2) I can read it later and get those benefits again.

The problem

Doing philosophy and reading/writing is often so impractical, people do it just for the sake of doing it. When you write or read a bunch of stuff about X, get categories and lists and definitions, it feels like you're making progress on X, but are you really?

Joseph Goldstein (meditation teacher) at the beginning of his lecture about Mindfulness, jokes that they'll do an hour and a half of meditation, then after pausing for laughter, points out that that would actually be more useful than anything he could say on the subject.

Criteria

The way to tell if philosophy is useful is, if it actually influences the future, if you:

- directly use the information for an action or decision

- use the material OR the wordless intuitions gained from the material in your future thinking (times the probability that you'll use it for a future action or decision)

- refute the bad ideas that you read or write (pruning is progress too!)

(slight caveat: reading and writing and thinking, makes you better at those things and it creates positive habits, even if it's not "object level useful". But still! I want to train my skills while attempting to be productive -- I don't take my mental energy for granted.)

Lost in time / deeper mental models / information bottlenecks

One really simplified way to measure utility, is whether you can remember philosophy that you did. But there's something way deeper to this. If you force yourself to do useful philosophy, given that you can't remember a lot, a solution that arises naturally is that you create deeper, or higher level, representations of things that you know.

The more abstract they get, the more information they can capture.

A way to view it (basically compression and indexing lol):

you simply replace the "main table" that was previously storing the information, with an index that points to the information (because the "main table" runs out of space), and then later an index to the indices, etc., where the main table gets increasingly abstract as the number of "leaves" at the end of the node (meaning explicit ideas and pieces of content and pieces of reasoning) keeps increasing. But the "main table" is what you're mostly working with, in terms of what you perceive as your thoughts. So from your perspective, as you learn, you are simply getting increasingly abstract representations, and it takes longer to retrieve information or to put into words what you think you know, because you are spending more cycles on traversing and indexing the graph and translating between forms of representation. (Memory being reconstructive is loosely related to this but I haven't dug into that topic at all. Also isn't this analogous to how auto-encoders work?)

(Balaji Srinivasan about memory management, paraphrase: "if you just have a giant mental tree that you attach concepts to over time, you can have compounding learning and you can remember everything because it is all connected" -- similar to memory palace)

Format / readability / retrievability

This is subtle and hard to put into words but is in practice very impactful and keeps surprising me, which is that, how easy things are to find and how easy it is to read them once you find them, matters a lot. If you have a diary in Notepad++, it's essentially a flat list, which is super annoying to retrieve things from, since you can only scroll or do ctrl-f. Fancier systems can make retrieval easier and they allow formatting, but require more initial energy to start writing notes in.

Youtube comments contain almost zero back-and-forth, Twitter has more, Reddit has a lot more, 4chan has longer chains than Reddit but is weaker in terms of structure.

LessWrong is actually really good for this, because you can read a preview on-hover, and do it recursively for preview panels -- Gwern-style. It has great formatting and is pleasant to edit. Ease of discussion via comments is better than all the above, except Reddit.

About retrieval on LW: this is my first time seeing it, but this is actually pretty cool -- though it's still not super great. Because retrieval is very much a UI/UX problem that is super limited by this existing on the browser. Notice how you can't use the keyboard to affect how results are presented, affect filter/sort or change page -- this goes for any search engine I've ever seen.

It's hard to make dynamically changing and editable UIs. You can design nice UIs, but you can't really design a superset of UIs that is traversable with hotkeys. Because it has to work on, like, 9000 different browsers and devices and screen sizes.

Retrievability transformed programming

I believe that Stack Overflow + Google deeply transformed what it means to be a programmer. Think of the expectation-vs-reality meme about programmers spending almost all their time Googling things. It's because the search engine is so powerful that it outsourced almost all of the memorization requirement to Googling skills + ability to parse Stack Overflow posts + trying out suggestions + figuring out whether a suggestion will work for you and how to reshape it to your codebase.

Posts as nodes -- what's beautiful about LessWrong

I'm new to this site as a writer (and a writer in general), and I read LW's user guide, to think more clearly about what kind of articles are expected and about why people are here. Direct quote:

LessWrong is a good place for someone who:

- values curiosity, learning, self-improvement, figuring out what's actually true (rather than just what you want to be true or just winning arguments)

- will change their mind or admit they're wrong in response to compelling evidence or argument

- wants to work collaboratively with others to figure out what's true

- likes acknowledging and quantifying uncertainty and applying lessons from probability, statistics, and decision theory to your reasoning

- is nerdy and interested in all questions of how the world works and who is not afraid to reach weird conclusions if the arguments seem valid

- likes to be pedantic and precise, and likes to bet on their beliefs

- doesn't mind reading a lot

There is a style of communication and thought, that summarizes the spirit of most of these. It's when your presentation is structured like a graph of dependencies.

"I believe x because of y" is much better than "I believe x"

Changing your mind is built into this type of writing: it says that the writer believes x because they believe that y => x, and/or that they believe x to the degree that y is true. It allows collaboration, because someone else can point out other implications of y, or that y isn't true, or that y => x doesn't hold, etc., and then the writer has to change their mind. Or put another way:

When you make the graph explicit, including the edges, the audience can judge the way things are connected, in addition to the conclusion.

It's like open-sourcing the code (where your conclusion is like the "app").

(and you can arrive at weird conclusions for free, since you're simply following the graph)
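Here's a minimal sketch of what "making the graph explicit" buys you, under some loud assumptions: the claims `x`, `y`, `z`, `w` are placeholders, the graph is assumed to have no cycles, and a claim is treated as all-or-nothing (no "to the degree that y is true"). The helper names are made up for this example.

```python
# Hypothetical claims; each one maps to the premises it depends on (the edges).
supports = {
    "x": ["y"],        # "I believe x because of y"
    "y": [],           # taken as given
    "z": ["x", "w"],   # a further conclusion built on x and w
    "w": [],           # taken as given
}


def still_held(claim, rejected, supports):
    """A claim survives only if it isn't rejected and all of its premises survive."""
    if claim in rejected:
        return False
    return all(still_held(premise, rejected, supports) for premise in supports[claim])


def affected_by(premise, supports):
    """Every other claim that stops holding once `premise` is rejected."""
    return sorted(
        claim
        for claim in supports
        if claim != premise and not still_held(claim, {premise}, supports)
    )


print(affected_by("y", supports))  # claims that fall if someone shows y isn't true
print(affected_by("w", supports))  # claims that fall if someone shows w isn't true
```

If a reader knocks out `y`, both `x` and `z` go with it, while knocking out `w` only takes `z`. With a bare "I believe x" there is nothing for the reader to push on.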

But what about exploratory thinking?

You simply take your exploratory post, identify the parts that are solid, refactor the post into self-contained things with explicit paths of reasoning, and you take those posts as nodes to reason about and speculate about!

(maybe a better way to put it is that it encourages factoring out solid elements of an idea or conclusion, and that you have the Quick Takes feature for doing exploration?? idk this second part is way less solid lol)

I agree that morality and consensus are in principle not the same -- Nazis or any evil society is an easy counterexample.

(One could argue Nazis did not have the consensus of the entire world, but you can then just imagine a fully evil population.)

But for one, simply rejecting civilization and consensus based on "you have no rigorous definition of them and also look at Genghis Khan/Nazis this proves that governments are evil" is like, basically, putting the burden of proof on the side that is arguing for civilization and common sense morality, which is suspicious.

I'm open to alternatives, but just saying "governments can be evil therefore I reject it, full stop" is not that helpful for discourse. Like what do you wanna do, just abolish civilization?

So consider a handwavy view of morality and of what a "good civilization" looks like. Let's assume common sense morality is correct, and that mostly everyone understands what this means: "don't steal, don't hurt people, leave people alone unless they're doing bad things, don't be a sex pervert, etc.". And assume most people agree with this and want to live by this. Then when you have consensus, meaning most people are observing and agreeing that civilization (or practically speaking, the area or community over which they have a decent amount of influence), is abiding by "common sense morality", then everything is basically moral and fine.

(I also want to point out that caring too much about pinpointing what morality means exactly and how to put it into words distracts from solving practical problems where it's extremely obvious what is morally going wrong, but where you have to sort out the "implementation details".)

Despite being "into" AI safety for a while, I haven't picked a side. I do believe it's extremely important and deserves more attention, and I believe that AI actually could kill everyone in less than 5 years.

But any effort spent on pinning down one's "p(doom)" is not spent usefully on things like: how to actually make AI safe, how AI works, how to approach this problem as a civilization/community, how to think about this problem. And, as was my intention with this article, "how to think about things in general, and how to make philosophical progress".

Memetics and iteration of ideas

Meta

Publish or keep as draft?

I kept changing the title and moving between draft and public. After reading the description of Shortforms, it seemed super obvious that this is the best format.

Shipping chapters as self-contained posts

Ideally, this will be like an annotated map of many many posts. The more ideas exist as links, the more condensed and holistic this post can be.

It also lets me treat ideas as discrete discussable objects, like this: "I believe X as I argued here <link>, therefore Y <link>, which maybe means that [...] ".

Also, things existing as links makes this post more flexible and easier to iterate on.

Todo

Turn the TikTok case study and Bifurcation chapters into their own posts.

Collection of effects and phenomena.

Bifurcation of communities

1) increases total energy, because some people will enjoy talking more in the niche community

extreme case: imagine a party of only introverts where everyone is in 1 big group, versus everyone talking exclusively in pairs <-- massive difference in total conversation-energy expended (leading to more "idea-evolution/memetics/coordination/whatever tf im supposed to call it")

2) fewer agents == individual outputs reach more of the community with less loss of resolution, and higher iteration speed (or "applies bigger relative update to the global state") --> faster evolution of ideas

(and the ideas that *are* good in a broader way, can still be shipped to the main community, which is cool)
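The two effects above as back-of-the-envelope arithmetic. This is only a sketch under crude assumptions that I'm making up for illustration: exactly one person speaks at a time per group, and every member's contribution weighs equally in the community's "global state". The function names and the numbers are hypothetical.

```python
def simultaneous_speakers(people, group_size):
    """How many people can be talking at once, assuming one speaker per group."""
    return people // group_size


def relative_update(community_size):
    """Share of the global state that a single contribution represents,
    assuming every member's output carries equal weight."""
    return 1 / community_size


# Effect 1: a party of 20, as one big group versus exclusively in pairs.
one_big_group = simultaneous_speakers(20, 20)
all_in_pairs = simultaneous_speakers(20, 2)

# Effect 2: one post in a niche community of 50 versus a main community of 5000.
niche_share = relative_update(50)
main_share = relative_update(5000)
```

Under these assumptions the paired party has 10 conversations running in parallel instead of 1, and a single contribution moves the niche community's state 100 times more than it moves the main community's.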

TikTok case study: Less agency --> more alien memetic processes?

TikTok scrolling basically has 1 action: "how long until you scroll?", it's a single integer value with a pretty small range (video length) and often it's actually a boolean: "did you immediately scroll further?".

(There are a bunch of other actions too, but they're negligible, both in how much they matter to the algorithm and in how many of your decisions they account for: like, comment, input search term, click channel, share, close app)

Compare this to a conversation, where you have word choice, intonation, topic choice, social cues about what you find interesting, it's a set of very high-dimensional pieces of information that you share repeatedly and they all have a big effect, crazy amounts of agency.

You can make a similar analysis on the "supersets" of both of these.

the entirety of TikTok and how viral videos and hashtags spread and how they influence humans, compared to, when people start getting annoyed by how their friendship is going, they can explicitly talk about it and steer it or abort mission.

Conversations very much *do not* feel like "runaway memetic phenomena", they feel like 2 people converging on their values (finding out they dislike each other is included, advancing a dialogue while subtly learning about each other is included).

A dialogue is ruled by human will, TikTok scrolling is ruled by the recommendation algorithm and more broadly, some alien process of meme spread that we don't understand or control, it's not even purely explained by the recommendation algorithm. I doubt that even the recommendation algorithm plus the decisions of the engineers of the algorithm and the entire TikTok codebase would explain it very well.

Blurry terminology I want clarity on

These 3 things are all different, but they feel very similar, so I want to describe them in more detail at some point:

- memetics

- coordination

- increasingly clear definitions and concepts

For one, coordination is about how agents in a system relate to one another, whereas "clarity of concepts" and "memetics" are more like names of topics.

But I'm curious what you think is a better word or term for referring to "iteration on ideas", as this is one of the things I'm actively trying to sort out by writing this post.

It's just a pointer to a concept, I'm not relying on the analogy to genes.

So I've been thinking more about this...

I think you completely missed the angle of, civilizational coordination via people updating on the state of the world and on what others are up to.

(To be fair I literally wrote in The Gist, "speed up memetic evolution", lol that's really dumb, also explicitly advocated for "memetic evolution" multiple times throughout)

Communication is not exactly "sharing information"

Communication is about making sure you know where you each stand and that you resolve to some equilibrium, not that you tell each other your life story and all the object level knowledge in your head.

Isn't this exactly what you're doing when going around telling people "hey guys big labs are literally building gods they don't understand nor control, this is bad and you should know it" ?

I should still dig into what that looks like exactly and when it's done well vs badly (for example you don't tell people how exactly OpenAI is building gods, just that they are).

I'd argue that if YouTube had a chatbot window embedded in the UI which could talk about the contents of a video, this would be a very positive thing, because generally it would increase people's clarity about, and ability to parse, the contents of videos.

Clarity of ideas is not just "pure memetic evolution"

Think of the type of activity that could be described as "doing good philosophy" and "being a good reader". This process is iterative too: absorb info from world -> share insight/clarified version of info -> get feedback -> iterate again -> affect world state -> repeat. It's still in the class of "unpredictable memetic phenomena", but it's very very different from what happens on the substrate of mindless humans scrolling TikTok, guided by the tentacles of a recommendation algorithm.

Even a guy typing something into a comment box, constantly re-reading and re-editing and re-considering, will land on (evolve towards) unpredictable ideas (memes). That's the point!

TLDR:

Here's all the ways in which you're right, and thanks for pointing these things out!

At a meta-level, I'm *really* excited by just how much I didn't see your criticism coming. I thought I was thinking carefully, and that iterating on my post with Claude (though it didn't write a single word of it!) was taking out the obvious mistakes, but I missed so much. I have to rethink a lot about my process of writing this.

I strongly agree that I need a *way* more detailed model of what "memetic evolution" looks like, when it's good vs bad, and why, whether there's a better way of phrasing and viewing it, dig into historical examples, etc.

I'm curious if social media is actually bad beyond the surface -- but again I should've anticipated "social media kinda seems bad in a lot of ways" being such an obvious problem in my thinking, and attended to it.

Reading it back, it totally reads as an argument for "more information more Gooder", which I didn't see at all. (generally viewing the post as "more X is always more good" is also cool as in, a categorization trick that brings clarity)

I think a good way to summarize my mistake is that I didn't "go all the way" in my (pretty scattered) lines of thinking.

You're on your way to thinking critically about morality, coordination and epistemology, which is great!

Thanks :) A big part of why I got into writing ideas explicitly and in big posts (vs off-hand Tweets/personal notes), is because you've talked about this being a coordination mechanism on Discord.

I've written a post about my thoughts related to this, but I haven't gone specifically into whether UI tools help alignment or capabilities more. It kind of touches on "sharing vs keeping secret" in a general way, but not head-on such that I can just write a tldr here, and not along the threads we started here. Except maybe "broader discussion/sharing/enhanced cognition gives more coordination but risks world-ending discoveries being found before coordination saves us" -- not a direct quote.

But I found it too difficult to think about, and it (feeling like I have to reply here first) was blocking me from digging into other subjects and developing my ideas, so I just went on with it.

https://www.lesswrong.com/posts/GtZ5NM9nvnddnCGGr/ai-alignment-via-civilizational-cognitive-updates

Things I learned/changed my mind about thanks to your reply:

1) Good tools allow experimentation which yields insights that can (unpredictably) lead to big advancements in AI research.

o1 is an example, where basically an insight discovered by someone playing around (Chain Of Thought) made its way into a model's weights 4 (ish?) years later by informing its training.

2) Capabilities overhang getting resolved, being seen as a type of bad event that is preventable.

This is a crux in my opinion:

It is bad for cyborg tools to be broadly available because that'll help {people trying to build the kind of AI that'd kill everyone} more than they'll {help people trying to save the world}.

I need to look more into the specifics of AI research and of alignment work and what kind of help a powerful UI actually provides, and hopefully write a post some day.

(But my intuition is, the fact that cyborg tools help both capabilities and alignment, is bad, and whether I open source code or not shouldn't hinge on narrowing down this ratio, it should overwhelmingly favor alignment research)

Cheers.

(edit: thank you for your comment! I genuinely appreciate it.)

"""I think (not sure!) the damage from people/orgs/states going "wow, AI is powerful, I will try to build some" is larger than the upside of people/orgs/states going "wow, AI is powerful, I should be scared of it"."""

^^ Why wouldn't people seeing a cool cyborg tool just lead to more cyborg tools? As opposed to the black boxes that big tech has been building?

I agree that in general, cyborg tools increase hype about the black boxes and will accelerate timelines. But it still reduces discourse lag. And part of what's bad about accelerating timelines is that you don't have time to talk to people and build institutions --- and, reducing discourse lag would help with that.

"""By not informing the public that AI is indeed powerful, awareness of that fact is disproportionately allocated to people who will choose to think hard about it on their own, and thus that knowledge is more likely to be in reasonabler hands (for example they'd also be more likely to think "hmm maybe I shouldn't build unaligned powerful AI")."""

^^ You make 3 assumptions that I disagree with:

1) Only reasonable people who think hard about AI safety will understand the power of cyborgs

2) You imply a cyborg tool is a "powerful unaligned AI", it's not, it's a tool to improve bandwidth and throughput between any existing AI (which remains untouched by cyborg research) and the human

3) That people won't eventually find out. One obvious way is that a weak superintelligence will just build it for them. (I should've made this explicit, that I believe that capabilities overhang is temporary, that inevitably "the dam will burst", that then humanity will face a level of power they're unaware of and didn't get a chance to coordinate against. (And again, why assume it would be in the hands of the good guys?))

I laughed, I thought, I learned, I was inspired :) fun article!

How to do a meditation practice called "metta", which is usually translated as "loving kindness".

```md

# The main-thing-you-do:

- kindle emotions of metta, which are in 3 categories:

+ compassion (wishing lack of suffering)

+ wishing well-being (wanting them to be happy for its own sake)

+ empathetic joy (feeling their joy as your own)

- notice those emotions as they arise, and just *watch them* (in a mindfulness-meditation kinda way)

- repeat

# How to do that:

- think of someone *for whom it is easy to feel such emotions*

+ so a pet might be more suited than a romantic partner, because emotions for the latter are more complex. it's about how readily or easily you can feel such emotions

- kindle emotions by using these 2 methods (whatever works):

+ imagine them being happy, not being sick, succeeding in life, etc.

- can do more esoteric imaginations too, like, having a pink cord of love or something connecting to that person's heart from your own, idk i just made this up just now

+ repeat phrases like, "may you be happy", "may you succeed in life", "i hope you get lots of grandkids who love you", "i hope you never get sick or break your arm", etc. :)

```

here are guided meditation recordings for doing this practice: https://annakaharris.com/friendly-wishes/

they're 5-7 minutes and designed for children. so easy to follow, but it works for me too, it's not dumbed-down, which maybe is unlikely to begin with since emotions are just emotions

My summary:

What they want:

Build human-like AI (in terms of our minds), as opposed to the black-boxy alien AI that we have today.

Why they want it:

Then our systems, ideas, intuitions, etc., of how to deal with humans and what kind of behaviors to expect, will hold for such AI, and nothing insane and dangerous will happen.

Using that, we can explore and study these systems (and we have a lot to learn from systems at even this level of capability), and then leverage them to solve the harder problems that come with aligning superintelligence.

(in case you want to copy-paste and share this)

Article by Conjecture, from February 25th, 2023.

Title: `Cognitive Emulation: A Naive AI Safety Proposal`

Link: <https://www.lesswrong.com/posts/ngEvKav9w57XrGQnb/cognitive-emulation-a-naive-ai-safety-proposal>

(Note on this comment: I posted (something like) the above on Discord, and am copying it to here because I think it could be useful. Though I don't know if this kind of non-interactive comment is okay.)

Is retargetable enough to be deployed to solve many useful problems and not deviate into dangerous behavior, along as it is used by a careful user.

Contains a typo.

along as it is ==> as long as it is

I compressed this article for myself while reading it, by copying bits of text and highlighting parts with colors, and I uploaded screenshots of the result in case it's useful to anyone.

Disagreement.

I disagree with the assumption that AI is "narrow". In a way GPT is more generally intelligent than humans, because of the breadth of knowledge and type of outputs, and it's actually humans who outperform AI (by a lot) at certain narrow tasks.

And assistance can include more than asking a question and receiving an answer. It can be exploratory with the right interface to a language model.

(Actually my stories are almost always exploratory, where I try random stuff, change the prompt a little, and recursively play around like that, to see what the AI will come up with)

"Specific"

Related to the above: in my opinion thinking of specific tools is the wrong framing. Like how a gun is not a tool to kill a specific person, it kills whoever you point it at. And a language model completes whichever thought or idea you start, effectively reducing the time you need to think.

So the most specific I can get is I'd make it help me build tooling (and I already have). And the better the tooling the more "power" the AI can give you (as George Hotz might put it).

For example I've built a simple webpage with ChatGPT despite knowing almost no JavaScript. What does this mean? It means my scope as a programmer just changed from Python to every language on earth (sort of), and it's the same for any piece of knowledge, since ChatGPT can explain things pretty well. So I can access any concept or piece of understanding much more quickly, and there are lots of things Google searches simply don't work for.

The form at this link <https://docs.google.com/forms/d/e/1FAIpQLSdU5IXFCUlVfwACGKAmoO2DAbh24IQuaRIgd9vgd1X8x5f3EQ/closedform> says "The form Refine Incubator Application is no longer accepting responses.

Try contacting the owner of the form if you think this is a mistake."

so I suggested changing the parts where it says to sign up, to a note about applications not being accepted anymore.

How can I apply?

Unfortunately, applications are closed at the moment.

I'm opening an incubator called Refine for conceptual alignment research in London, which will be hosted by Conjecture. The program is a three-month fully-paid fellowship for helping aspiring independent researchers find, formulate, and get funding for new conceptual alignment research bets, ideas that are promising enough to try out for a few months to see if they have more potential.

(note: applications are currently closed)