Posts

Comments

Downvoted as I find this comment uncharitable and rude.

(The link for the bluetooth keyboard from your blog is broken / or the keyboard is missing)

Maybe the V1 dopamine receptors are simply useless evolutionary leftovers (perhaps it's easier from a developmental perspective)

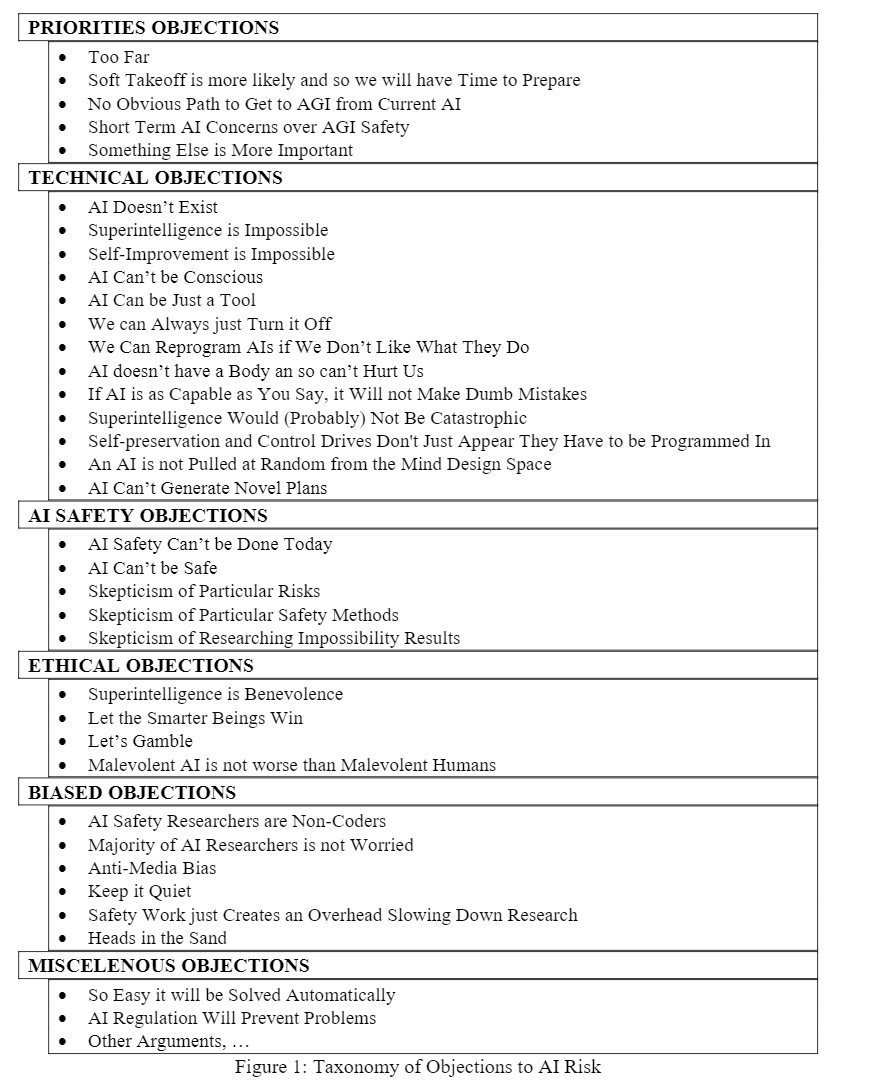

A taxonomy of objections to AI Risk from the paper:

What sort of epistemic infrastructure do you think is importantly missing for the alignment research community?

What's your take on Elicit?

What are the best examples of progress in AI Safety research that we think have actually reduced x-risk?

(Instead of operationalizing this explicitly, I'll note that the motivation is to understand whether doing more work toward technical AI Safety research is directly beneficial as opposed to mostly irrelevant or having second-order effects. )

The (meta-)field of Digital Humanities is fairly new. TODO: Estimating its success and its challenges would help me form a stronger opinion on this matter.

One project which implements something like this is 'Circles'. I remember it was on hold several years ago but seems to be running now - link

I think that generally, skills (including metacognitive skills) don't transfer that well between different domains and it's best to practice directly. However, games also give one better feedback loops and easier access to mentoring, so the room for improvement might be larger.

A meta-analysis on transfer from video games to cognitive abilities saw small or null gains:

The lack of skill generalization from one domain to different ones—that is, far transfer—has been documented in various fields of research such as working memory training, music, brain training, and chess. Video game training is another activity that has been claimed by many researchers to foster a broad range of cognitive abilities such as visual processing, attention, spatial ability, and cognitive control. We tested these claims with three random-effects meta-analytic models. The first meta-analysis (k = 310) examined the correlation between video game skill and cognitive ability. The second meta-analysis (k = 315) dealt with the differences between video game players and nonplayers in cognitive ability. The third meta-analysis (k = 359) investigated the effects of video game training on participants’ cognitive ability. Small or null overall effect sizes were found in all three models. These outcomes show that overall cognitive ability and video game skill are only weakly related. Importantly, we found no evidence of a causal relationship between playing video games and enhanced cognitive ability. Video game training thus represents no exception to the general difficulty of obtaining far transfer.

However, A review on study of chess does see some gains, and gains that seem to improve with more time of instruction, but it's a smaller survey of inadequately designed studies.

👍

Thanks for the concrete examples! Do you have relevant references for these at hand? I could imagine that there might be better ways to solve these issues, or that they somehow mostly cancel out or relatively low problems, so I'm interested to see relevant arguments and case studies.

I don't think that operationalizing exactly what I mean by a consensus would help a lot. My goal here is to really understand how certain I should be about whether rent control is a bad policy (and what are the important cases where it might not be a good policy, such as the examples ChristianKl gave below).

That's right, and a poor framing on my part 😊

I am interested in a consensus among academic economists, or in economic arguments for rent control. Specifically because I'm mostly interested in utilitarian reasoning, but I'd also be curious about what other disciplines have to say.

This sounds like an amazing project and I find it very motivating. Especially the questions around how we'd like future epistemics to be and prioritizing different tools/training.

As I'm sure you are aware, there is a wide academic literature around many related aspects including the formalization of rationality, descriptive analysis of personal and group epistemics, and building training programs. If I understand you correctly, a GPI analog here would be something like an interdisciplinary research center that attempts to find general frameworks with which it would be possible later on to better compare between interventions that aim at improving epistemics and to standardize a goal of "epistemic progress", with a focus on the most initially promising subdomains?

I think that M only prints something after converging with Adv, and that Adv does not print anything directly to H

Abram, did you reply to that crux somewhere?

I agree that hierarchy can be used only sparingly and still be very helpful. Perhaps just nesting under the core tags, or something similar.

On special posts where that does not seem to be the case that the hierarchy holds, people can still downvote the parent tag. That is annoying, but may reduce work overall.

Also, navigating up/down with arrow keys and pressing enter should allow choice of tags with keyboard only.

Some thoughts:

1. More people would probably rank tags if it could be done directly through the tag icon instead of using the pop-up window.

2. When searching for new tags, I'd like them sorted probably by relevance (say, some preference for: being a prefix, being a popular tag, alphabetical ordering).

3. When browsing through all posts tagged with some tag, I'd maybe prefer to see higher karma posts first, or to have it factored in the ordering.

4. Perhaps it might be easier to have a hierarchy of tags - so that voting for Value learning also votes for AI Alignment say

If you wouldn't think that AI researchers care that much about destroying the world, what else makes you optimistic that there will be enough incentives to ensure alignment? Does it all go back to people in relevant power generally caring about safety and taking it seriously?

I think that the debate around the incentives to make aligned systems is very interesting, and I'm curious if Buck and Rohin formalize a bet around it afterwards.

I feel like Rohin point of view compared to Buck is that people and companies are in general more responsible, in that they are willing to pay extra costs to ensure safety - not necessarily out of a solution to a race-to-the-bottom situation. Is there another source of disagreement, conditional on convergence on the above?

Is GPI / forethought foundation missing?

No, I was simply mistaken. Thanks for correcting my intuitions on the topic!

If this is the case, this seems more like a difference in exploration/exploitation strategies.

We do have positively valenced heuristics for exploration - say curiosity and excitement

I think that the intuition for this argument comes from something like a gradient ascent under an approximate utility function. The agent will spend most of it's time near what it perceives to be a local(ish) maximum.

So I suspect the argument here is that Optimistic Errors have a better chance of locking into a single local maximum or strategy, which get's reinforced enough (or not punished enough), even though it is bad in total.

Pessimistic Errors are ones in which the agent strategically avoids locking into maxima, perhaps by Hedonic Adaptation as Dagon suggested. This may miss big opportunities if there are actual, territorial, big maxima, but that may not be as bad (from a satisficer point of view at least).

And kudos for the neat explanation and an interesting theoretical framework :)

I'd expect the preference at each point to mostly go in the direction of either axis.

However, this analysis should be interesting in non-cooperative games where the vector might represent a mixed strategy, with amplitude the expected payoff perhaps.

I may be mistaken. I tried reversing your argument, and I bold the part that doesn't feel right.

Optimistic errors are no big deal. The agent will randomly seek behaviours that get rewarded, but as long as these behaviours are reasonably rare (and are not that bad) then that’s not too costly.

But pessimistic errors are catastrophic. The agent will systematically make sure not to fall into behaviors that avoid high punishment, and will use loopholes to avoid penalties even if that results in the loss of something really good. So even if these errors are extremely rare initially, they can totally mess up my agent.

So I think that maybe there is inherently an asymmetry between reward and punishment when dealing with maximizers.

But my intuition comes from somewhere else. If the difference between pessimism and optimism is given by a shift by a constant then it ought not matter for a utility maximizer. But your definition goes at errors conditional on the actual outcome, which should perhaps behave differently.

Pessimistic errors are no big deal. The agent will randomly avoid behaviors that get penalized, but as long as those behaviors are reasonably rare (and aren’t the only way to get a good outcome) then that’s not too costly.

But optimistic errors are catastrophic. The agent will systematically seek out the behaviors that receive the high reward, and will use loopholes to avoid penalties when something actually bad happens. So even if these errors are extremely rare initially, they can totally mess up my agent.

I'd love to see someone analyze this thoroughly (or I'll do it if there will be an interest). I don't think it's that simple, and it seems like this is the main analytical argument.

For example, if the world is symmetric in the appropriate sense in terms of what actions get you rewarded or penalized, and you maximize expected utility instead of satisficing in some way, then the argument is wrong. I'm sure there is good literature on how to model evolution as a player, and the modeling of the environment shouldn't be difficult.

I find the classification of the elements of robust agency to be helpful, thanks for the write up and the recent edit.

I have some issues with Coherence and Consistency:

First, I'm not sure what you mean by that so I'll take my best guess which in its idealized form is something like: Coherence is being free of self contradictions and Consistency is having the tool to commit oneself to future actions. This is going by the last paragraph of that section-

There are benefits to reliably being able to make trades with your future-self, and with other agents. This is easier if your preferences aren’t contradictory, and easier if your preferences are either consistent over time, or at least predictable over time.

Second, the only case for Coherence is that reasons that coherence helps you make trade with your future self. My reasons for it are more strongly related to avoiding compartmentalization and solving confusions, and making clever choices in real time given my limited rationality.

Similarly, I do not view trades with future self as the most important reason for Consistency. It seems that the main motivator here for me is some sort of trade between various parts of me. Or more accurately, hacking away at my motivation schemes and conscious focus, so that some parts of me will have more votes than others.

Third, there are other mechanisms for Consistency. Accountability is a major one. Also, reducing noise in the environment externally and building actual external constraints can be helpful.

Forth, Coherence can be generalized to a skill that allows you to use your gear lever understanding of yourself and your agency to update your gears to what would be the most useful. This makes me wonder if the scope here is too large, and that gears level understanding and deliberate agency aren't related to the main points as much. These may all help one to be trustworthy, in that one's reasoning can judged to be adequate - including for oneself - which is the main thing I'm taking out from here.

Fifth (sorta), I have reread the last section, and I think that I understand now that your main motivation for Coherence and Consistency is that the conversation between rationalists can be made much more effective in that they can more easily understand each other's point of view. This I view related to Game Theoretic Soundness, more than the internal benefits of Coherence and Consistency which are probably more meaningful overall.

Non-Bayesian Utilitarian that are ambiguity averse sometimes need to sacrifice "expected utility" to gain more certainty (in quotes because that need not be well defined).

Thank you very much! Excited to read it :)

If it's simple, is it possible to publish also a kindle version?

Thinking of stocks, I find it hard to articulate how this pyramid might correspond to predicting market value of a company. To give it a try:

Traders predict the value of a stock.

The stock is evaluated at all times by the market buy\sell prices. But that is self referential and does not encompass "real" data. The value of a stock is "really evaluated" when a company distributes dividends, goes bankrupt, or anything that collapses a stock to actual money.

The ontology is the methods by which stocks get actual money.

Foundational understanding is the economic theory involved.

[After writing this down, this feels more natural to need a pyramid in this case also (even though I initially guessed that I would find the lower layers unnecessary), or more precisely - it is very useful to think about this pyramid to see how we can improve the system.]

Emotions and Effective Altruism

I remember reading Nate Soares' Replacing Guilt Series and identifying strongly with the feeling of Cold Resolve described there. I since tried a bit to give it some other words and describe it using familiar-er emotions, but nothing really good.

I think that Liget , an emotion found in an isolated tribe at the philippines, might describe a similar emotion (except the head-throwing part). I'm not sure that I can explain that better than the linked article.

after posting, I have tried to change a link post to a text post. It seemed to be possible when editing the original post, but I have discovered later that the changes were not kept and that the post is still in the link format.

When posting a link post, instead of a text post, it is not clear what would be the result. There is still an option to write text, which appears strictly as text right after submitting, but when the post is viewed (from the search bar) only some portion of the text is visible and there is no indication that this is a link post.

It would be much more comfortable if editing of a post could be done only using the keyboard. For example, when adding a link, apart from defining a keyboard shortcut, it should also be possible to press enter to submit the link. I also think it would be more comfortable to add HTML support.

Also, do you have MathJax? or something similar to write math?