Comments

@Ben Pace Can you please add at the top of the post "Nonlinear disputes at least 85 of the claims in this post and intends to publish a detailed point-by-point response.

They also published this short update giving an example of the kind of evidence they plan to demonstrate."

We keep hearing from people who don't know this. Our comments get buried, so they think your summary at the bottom contains the entirety of our response, though it is just the tip of the iceberg. As a result, they think your post marks the end of the story, and not the opening chapter.

"Alice quit being vegan while working there. She was sick with covid in a foreign country, with only the three Nonlinear cofounders around, but nobody in the house was willing to go out and get her vegan food, so she barely ate for 2 days."

Seems like other people besides Ruby are confused about this too, maybe also because Ben sometimes says "the Nonlinear cofounders" when referring to Emerson, Kat, and Drew.

Just FYI Drew is not a cofounder of Nonlinear. That is another inaccurate claim from the article. He did not join full time until April 2022.

I'd like to kindly remind you that you are making a lot of judgments about my character based on a 10,000 word post written by someone who explicitly told you he was looking for negative information and only intended to share the worst information.

That is his one paragraph paraphrase of a very complex situation and I think it's fine as far as it goes but it goes nowhere near far enough. We have a mega post coming ASAP.

Ben has also been quietly fixing errors in the post, which I appreciate, but people are going around right now attacking us for things that Ben got wrong, because how would they know he quietly changed the post?

This is why, every time newspapers get caught making a mistake, they issue a public retraction the next day to let everyone know. I believe Ben should make these retractions more visible.

This is more false info. The approximate/expected total compensation was $70k which included far more than room and board and $1k a month.

Chloe has also been falsely claiming we only had a verbal agreement but we have multiple written records.

We'll share specifics and evidence in our upcoming post.

Just FYI, the original claim is a wild distortion of the truth. We'll be providing evidence in our upcoming post.

Rob Bensinger replied with:

I think that there's a big difference between telling everyone "I didn't get the food I wanted, but they did get/offer to cook me vegan food, and I told them it was ok!" and "they refused to get me vegan food and I barely ate for 2 days".

Agreed.

And this:

This also updates me about Kat's take (as summarized by Ben Pace in the OP):

> Kat doesn’t trust Alice to tell the truth, and that Alice has a history of “catastrophic misunderstandings”.

When I read the post, I didn't see any particular reason for Kat to think this, and I worried it might just be an attempt to dismiss a critic, given the aggressive way Nonlinear otherwise seems to have responded to criticisms.

With this new info, it now seems plausible to me that Kat was correct (even though I don't think this justifies threatening Alice or Ben in the way Kat and Emerson did). And if Kat's not correct, I still update that Kat was probably accurately stating her epistemic state, and that a lot of reasonable people might have reached the same epistemic state.

Thanks for sharing your thoughts!

1) I disagree with that interpretation.

2) She was not an employee of Nonlinear at the time, just a friend. Ben's post said she was, but that was a factual inaccuracy, one of many we are working hard to correct in our forthcoming post.

Just to clear up a few things:

- It was $70k in approximate/expected total compensation. The $1k a month was just a small part of the total compensation package.

- Despite false claims to the contrary, it wasn't just verbally agreed, we have written records.

- Despite false claims to the contrary, we were roughly on track to spend that much. This is another thing we will show evidence for ASAP, but there is a lot of accounting and record-keeping to do to organize all the spending information.

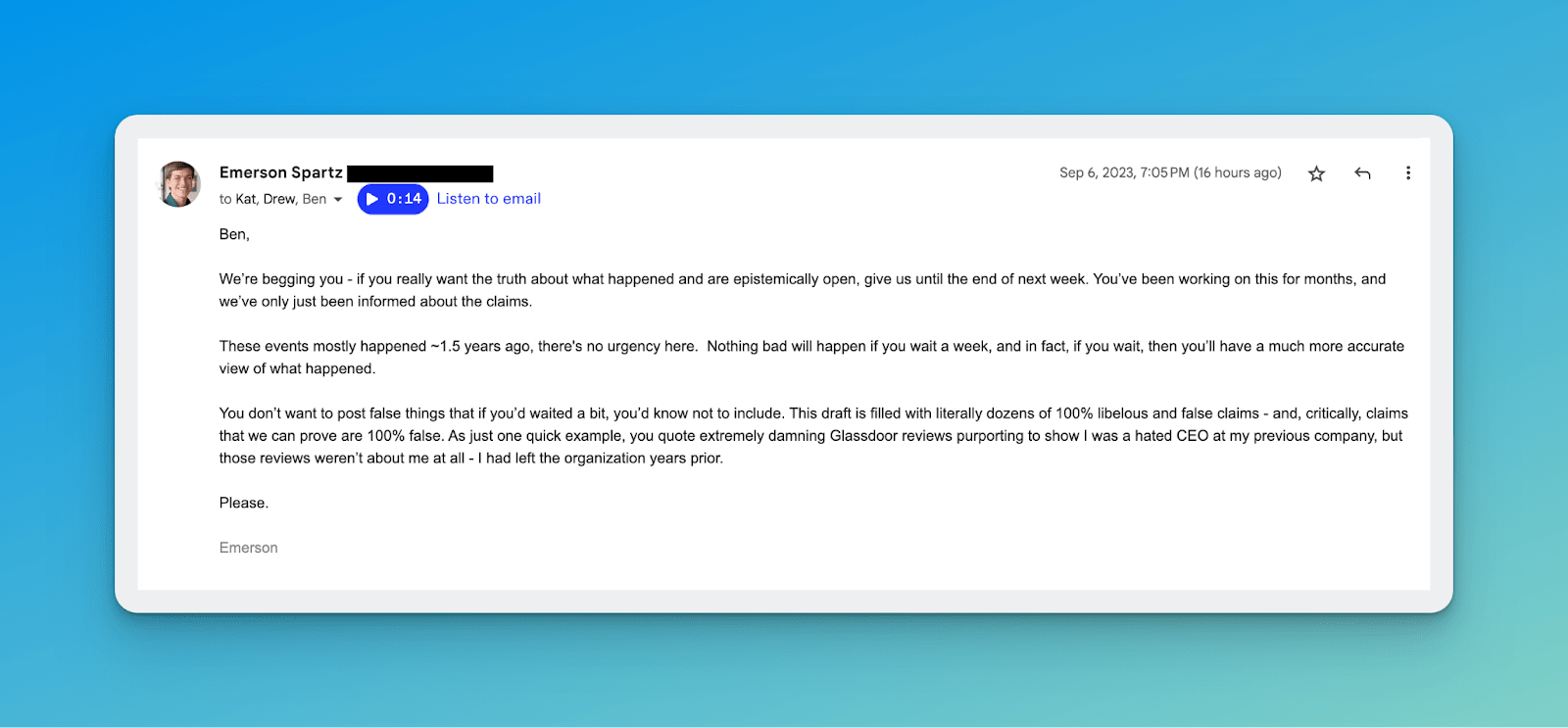

Yes, that is what I intended to communicate here, and I was worried people might think I was trying to suppress the article so I bolded this request to ensure people didn't misunderstand:

In case it wasn't clear, we didn't say 'don't publish', we said 'don't publish until we've had a week to gather and share the evidence we have':

Personally, I think it's correct to update somewhat, but in situations like this where only one side has shared their perspective, I'm much more likely to overupdate ("those monsters!") so I have to guard against that.

Indeed, without context that is a cartoon villain thing to say. We're not asking you to believe us, just to withhold judgment until you've seen the evidence we have, which will make that message seem very different in context.

We were very clear that we felt there were still major issues to address. Here’s another email in the thread a day later:

We also clearly told Ben and Robert in the call many times that there is a lot more to the story, and we have many more examples to share. This is why we suggested writing everything up, to be more precise and not say anything that was factually untrue. Since our former employees’ reputations are on the line as well, it makes sense to try to be very deliberate.

It's possible there was a miscommunication between you and Ben around how strongly we communicated the fact that there was a lot more here.

There is a reason courtrooms give both sides equal chances to make their case before they ask the jury to decide.

It is very difficult for people to change their minds later, and most people assume that if you’re on trial, you must be guilty, which is why judges remind juries about “innocent until proven guilty”.

This is one of the foundations of our legal system, something we learned over thousands of years of trying to get better at justice. You’re just assuming I’m guilty and saying that justifies not giving me a chance to present my evidence.

Also, if we post another comment thread a week later, who will see it? EAF/LW don’t have sufficient ways to resurface old but important content.

Re: “my guess is Ben’s sources have received dozens of calls” - well, your guess is wrong, and you can ask them to confirm this.

You also took my email strategically out of context to fit the Emerson-is-a-horned-CEO-villain narrative. Here’s the full one:

People give standing ovations when they feel inspired to because something resonated with them. They're applauding him for trying to save humanity, and this audience reaction gives me hope.

It's unfortunate that this version is spreading because many people will think it's a low credibility TEDx talk instead of a very credible main stage TED talk.

+1

My background is extremely relevant here and if anybody in the alignment community would like help thinking through strategy, I'd love to be helpful.

I think this is a really promising idea.

If the goal is to unify diverse stakeholders, including non-technical ones, I wonder if it would be better to use a less-wonky target (e.g. "50%" instead of ".002 OOMs").

Going to share a seemingly-unpopular opinion and in a tone that usually gets downvoted on LW but I think needs to be said anyway:

This stat is why I still have hope: 100,000 capabilities researchers vs 300 alignment researchers.

Humanity has not tried to solve alignment yet.

There's no cavalry coming - we are the cavalry.

I am sympathetic to fears of new alignment researchers being net negative, and I think plausibly the entire field has, so far, been net negative, but guys, there are 100,000 capabilities researchers now! One more is a drop in the bucket.

If you're still on the sidelines, go post that idea that's been gathering dust in your Google Docs for the last six months. Go fill out that fundraising application.

We've had enough fire alarms. It's time to act.

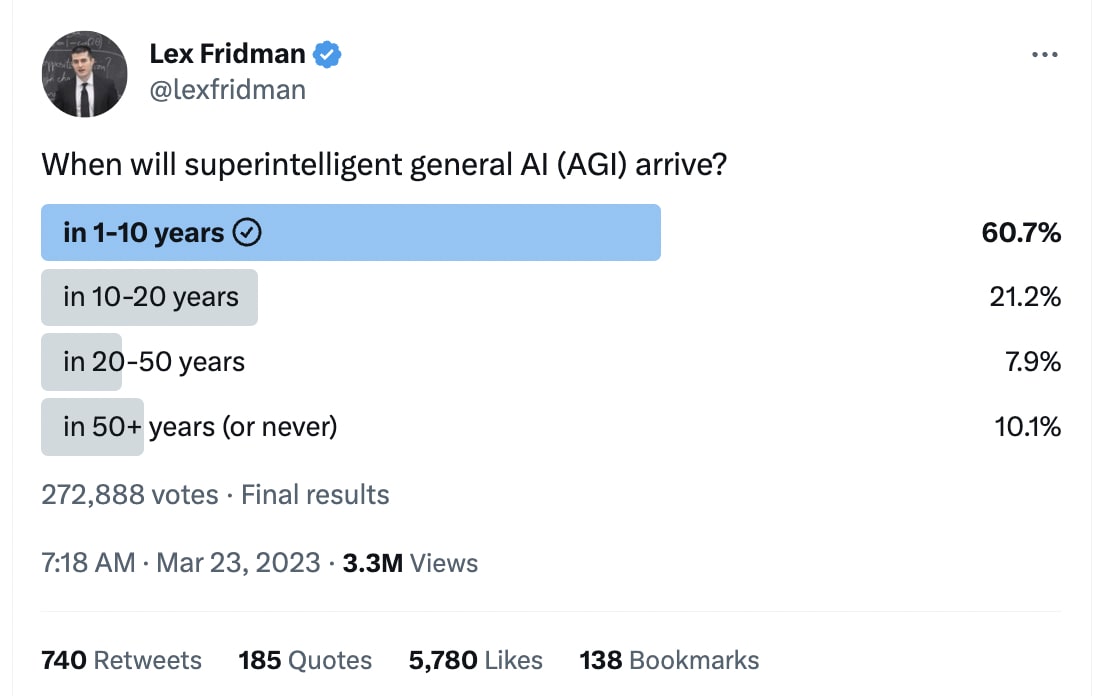

Another example of Overton movement - imagine seeing these results a few years ago:

"I'm an accelerationist for solar power, nuclear power to the extent it hasn't been obsoleted by solar power and we might as well give up but I'm still bitter about it, geothermal, genetic engineering, neuroengineering, FDA delenda est, basically everything except GoF bio and AI"

https://twitter.com/ESYudkowsky/status/1629725763175092225?t=A-po2tuqZ17YVYAyrBRCDw&s=19

I also think this approach deserves more consideration.

Also: since BCIs can generate easy-to-understand profits, and are legibly useful to many, we could harness market forces to shorten BCI timelines.

Ambitious BCI projects will likely be more shovel ready than many other alignment approaches - BCIs are plausibly amenable to Manhattan Project-level initiatives where we unleash significant human and financial capital. Maybe use Advanced Market Commitments to kickstart the innovators, etc.

For anybody interested, Tim Urban has a really well written post about Neuralink/BCIs: https://waitbutwhy.com/2017/04/neuralink.html

Anecdata: many in my non-EA/rat social circles of entrepreneurs and investors are engaging with this for the first time.

And, to my surprise (given the optimistic nature of entrepreneurs/VCs), they aren't just being reflexive techno-optimists. They're taking the ideas seriously and, since Bankless, "Eliezer" is becoming a first-name-only character.

Eliezer said he's an accelerationist in basically everything except AI and gain-of-function bio and that seems to resonate. AI is Not Like The Other Problems.

Came here to say this. Highly recommend this book for anyone working on deception.

Love this! I used to manage teams of writers/editors and here are some ideas we found useful for increasing readability:

To remove fluff, imagine someone is paying you $1,000 for every word you remove. Our writers typically could cut 20-50% with minimal loss of information.

Long sentences are hard to read, so try to change your commas into periods.

Long paragraphs are hard to read, so try to keep each paragraph to 2-3 sentences.

Most people just skim, and some of your ideas are much more important than others, so bold/italicize your important points.

Thanks for the feedback! We think a bot could make sense as well - we're exploring this internally.

Good idea! Will add this to the roadmap.

So glad you're enjoying it! It's mine too - I consume way more LW content because of it.

Great idea, we'll add this to the roadmap!

I'd expect the most common failure mode for rationalists here is not understanding how patronage networks work.

Even if you do everything else right, it is very hard to get elected to a position of power if the other guy is distributing the office's resources for votes.

You should be able to map out the voting blocs and what their criteria are, e.g. "Union X and its 500 members will mostly vote for Incumbent Y because they get $Z in contracts per year."

People are so irrationally intimidated by lawyers that some legal firms make all their money by sending out thousands of scary form letters demanding payment for bullshit transgressions. My company was threatened with thousands of frivolous lawsuits but only actually sued once.

Threats are cheap.

Great idea! We'll add it to the list.