Posts

Comments

if I was sufficiently sick I would want it.

The problem is that in the first few days of an infection you won't know whether you'll be sick enough to be hospitalised on, say, day 10. We don't really know who will deteriorate on day 7 and beyond, and who will recover. And if you take the drug later it won't be as effective.

I suspect this drug will only be used for high risk groups, due to risk of side-effects and the high price (US is paying $700 per course).

I'm a bit surprised by this. The entire logic behind booster doses for the immunocompromised (which several countries are already doing, e.g. US/UK) is based on a lack of (or low) immune response to the vaccine, which can be confirmed by an antibody test (measuring antibodies to the spike protein). There are studies with large numbers of people where you can see the median levels of antibodies in healthy adults and compare results (e.g. here and here). These tests are quantitative and give you a number, not just a positive or negative result; some people can have orders of magnitude more antibodies than others as a result of vaccination.

Also, my impression is that antibody levels (post-vaccination) are possibly correlated with, or even predict, who can be infected, and that they correlate with vaccine effectiveness. Is that not likely to be the case?

This is a bit anecdotal but I've read about people in the UK on immunosuppressive therapy getting 4 vaccine doses (as advised by their doctor) in order to generate enough immune response to have detectable antibodies.

2.5 times deadlier than existing strains

Where does this number come from?

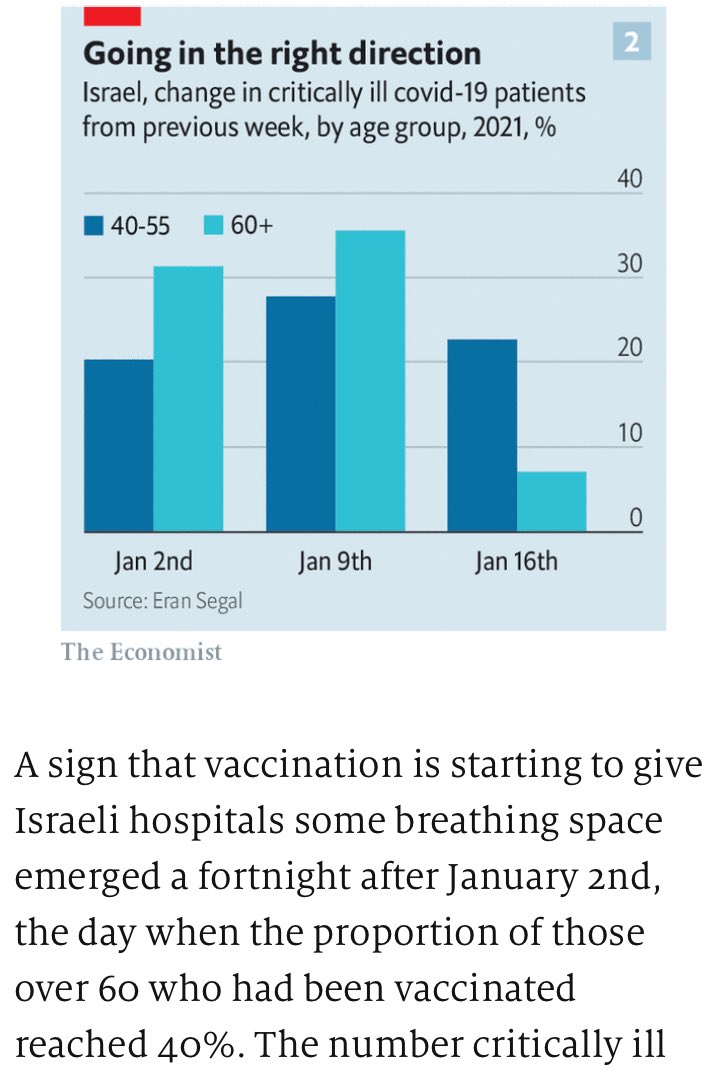

I'm confused by this graph. If ~30% of critically ill patients are 60+ and ~20% are 40-55, that adds up to 50%. What are the other 50%? Only the 55-60 and 0-39 age groups remain, but surely they can't be 50% of critically ill patients (the 55-60 group is too small and the 0-39s should have like 2 orders of magnitude fewer critically ill people than the 60+ group)?

The site is lacking breadcrumbs so it's hard to orient oneself. It's hard to follow what section of the website you're in as you dig deeper into the content. Any plans to add breadcrumbs (or some alternative)?

A black is also more likely to commit a violent crime than a white person.

Isn't it more relevant whether a black person is more likely to commit a violent crime against a police officer (during a search, etc)? After all the argument is that the police are responding to some perceived threat. The typical mostly black-on-black violent crime isn't the most relevant statistic that should be used. Where are the statistics about how blacks respond to the police?

Funny you should mention that..

AI risk is one of the 2 main focus areas this year for the Open Philanthropy Project, which GiveWell is part of. You can read Holden Karnofsky's Potential Risks from Advanced Artificial Intelligence: The Philanthropic Opportunity.

They consider that AI risk is high enough on importance, neglectedness, and tractability (their 3 main criteria for choosing what to focus on) to be worth prioritizing.

things that I am very confident are false

Could you give any example?

This is also interesting: Barack Obama on Artificial Intelligence, Autonomous Cars, and the Future of Humanity

This is the article: Barack Obama on Artificial Intelligence, Autonomous Cars, and the Future of Humanity

He's mentioned it on his podcast. It won't be out for another 1.5-2 years I think.

Also, Sam Harris recently did a TED talk on AI; it's now up.

He's writing an AI book together with Eliezer, so I assume he's on board with it.

Can't we just add a new 'link' post type to the current LW? Links and local posts would both have comment threads (here on LW), the only difference is the title of the linked post would link to an outside website/resource.

Should we try to promote the most valuable/important (maybe older?) Less Wrong content on the front page? Currently the front page features a bunch of links and featured articles that don't seem to be organized in any systematic way. Maybe Less Wrong would be more attractive/useful to new people if they could access the best the site has to offer directly from the front page (or at least more of it, and in a systematic way)?

Target: a good post every day for a year.

Why specifically 1/day? It seems a bit too much. Why not e.g. ~3/week?

Your sensory system is still running

There are brain subsystems that are still running, but they are not necessarily ones "you" identify with. If you replaced the parts/networks of the brain that control your heart and lungs (through some molecular nanotechnology), would "you" still be you? My intuition says yes. The fact that "something is running" doesn't mean that something is you.

I know the computer metaphor doesn't work well for the brain, but imagine that the system in the brain that wakes you up when you hear some sound is sort of like a sleeping computer that gets woken up by a signal on the network.

Also as others have mentioned, I'm pretty sure during anesthesia/coma there can be periods where you are completely lacking any experience.

Would this help?

empirical literature on what makes websites effective (which we've done a lot of now)

Can you share some of your sources?

It only takes a small extension of the logic to show that the Just World Hypothesis is a useful heuristic.

I don't see it, how is it useful?

Hey, is there a write-up of the UK-specific stuff for people who weren't able to attend?

Sleep might be a Lovecraftian horror.

Going even further, some philosophers suggest that consciousness isn't even continuous, e.g. as you refocus your attention, as you blink, there are gaps that we don't notice. Just like how there are gaps in your vision when you move your eyes from one place to another, but to you it appears as a continuous experience.

The error rate in replication experiments in the natural sciences is expected to be much, much lower than in the social sciences. Humans and human environments are noisy and complicated. Look at nutrition/medicine - it's taking us decades to figure out whether some substance/food is good or bad for you and under what circumstances. Why would you expect it to be easier to analyze human psychology and behavior?

The trailer for the movie Transcendence is out.

to value breadth of perspective and flexibility of viewpoint significantly more than internal consistency

As humans we can't change/modify ourselves too much anyway, but what if we're able to in the future? What if you can pick and choose your values? It seems to me that, for such an entity, not valuing consistency is like not valuing logic. And then there's the argument that it leaves you open to Dutch booking / blackmail.

Well, whether it's a "real" change may be beside the point if you put it this way. Our situation and our knowledge are also changing, and maybe our behavior should also change. If personal identity and/or consciousness are not fundamental, how should we value those in a world where any mind-configurations can be created and copied at will?

we value what we value, we don't value what we don't value, what more is there to say?

I'm confused about what you mean by this. If there wasn't anything more to say, then nobody would/should ever change what they value? But people's values change over time, and that's a good thing. For example, in medieval/ancient times people didn't value animals' lives and well-being (as much) as we do today. If a medieval person tells you "well we value what we value, I don't value animals, what more is there to say?", would you agree with him and let him go on burning cats for entertainment, or would you try to convince him that he should actually care about animals' well-being?

You are of course using some of your values to instruct other values. But they need to be at least consistent and it's not really clear which are the "more-terminal" ones. It seems to me byrnema is saying that privileging your own consciousness/identity above others is just not warranted, and if we could, we really should self-modify to not care more about one particular instance, but rather about how much well-being/eudaimonia (for example) there is in the world in general. It seems like this change would make your value system more consistent and less arbitrary and I'm sympathetic to this view.

By the same logic, eating your favorite food because it tastes good is also wireheading.

Better, instrumentally, to learn to handle the truth.

It really depends on your goals/goal system. I think the wiki definition is supposed to encompass possible non-human minds that may have some uncommon goals/drives, like a wireheaded clippy that produces virtual paperclips and doesn't care whether they are in the real or virtual world, so it doesn't want/need to distinguish between them.

You can use the "can be good at everything" definition to suggest quantification as well. For example, you could take these same agents and make them produce other things, not just paperclips, like microchips, or spaceships, or whatever, and then the agents that are better at making those are the more intelligent ones. So it's just using more technical terms to mean the same thing.

I looked through some of them. There's a lot of theory and discussion, but I'm really just interested in a basic step-by-step guide on what to do.

So I'm interested in taking up meditation, but I don't know how/where to start. Is there a practical guide for beginners somewhere that you would recommend?

"Regression to the mean" as used above is basically using a technical term to call someone stupid.

Well I definitely wasn't implying that. I actually wanted to discuss the statistics.

Why? I couldn't think of a way to make this comment without it sounding somewhat negative towards the OP, so I added this as a disclaimer, meaning that I want to discuss the statistics, not to insult the poster.

I look forward to reading your future posts.

I hate to sound negative, but I wouldn't count on it.

They probably would have flown off had he twisted it faster.

Or maybe you do, but it's not meaningfully different from deciding to care about some other person (or group of people) to the exclusion of yourself if you believe in personal identity

I think the point is actually similar to this discussion, which also somewhat confuses me.

figure out how to make everyone sitting around on a higher level credibly precommit to not messing with the power plug

That's MFAI's job. Living on the "highest level" also has the same problem: you have to protect your region of the universe from anything that could "de-optimize" it, and FAI will (attempt to) make sure this doesn't happen.

I, on the other hand, (suspect) I don't mind being simulated and living in a virtual environment. So can I get my MFAI before attempts to build true FAI kill the rest of you?

Not really. You can focus your utility function on one particular optimization process and its potential future execution, which may be appropriate given that the utility function defines the preference over outcomes of that optimization process.

Well you could focus your utility function on anything you like anyway, the question is why, under utilitarianism, would it be justified to value this particular optimization process? If personal identity was fundamental, then you'd have no choice, conscious existence would be tied to some particular identity. But if it's not fundamental, then why prefer this particular grouping of conscious-experience-moments, rather than any other? If I have the choice, I might as well choose some other set of these moments, because as you said, "why not"?

Well, shit. Now I feel bad, I liked your recent posts.

I'm now quite skeptical that my urge to correct reflects an actual opportunity to win by improving someone's thinking,

Shouldn't you be applying this logic to your own motivations to be a rationalist as well? "Oh, so you've found this blog on the internet and now you know the real truth? Now you can think better than other people?" You can see how it can look from the outside. What would the implication for yourself be?

On the other hand, given that humans (especially on LW) do analyze things on several meta levels, it seems possible to program an AI to do the same, and in fact many discussions of AI assume this (e.g. discussing whether the AI will suspect it's trapped in some simulation). It's an interesting question how intelligent an AI can get without having the need (or ability) to go meta.

Given that a parliament of humans (where they vote on values) is not accepted as a (final) solution to the interpersonal value / well-being comparison problem, why would a parliament be acceptable for intrapersonal comparisons?

It seems like people sort of turn into utility monsters - if people around you have a strong opinion on a certain topic, you better have a strong opinion too, or else it won't carry as much "force".

What about "decided on"?

With regard to the singularity, and given that we haven't solved 'morality' yet, one might just value "human well-being" or "human flourishing" without referring to a long-term self concept. I.e. you might just care about a future 'you', even if that person is actually a different person. As a side effect you might also care equally about everyone else in the future too.

Right, but I want to use a closer to real life situation or example that reduces to the wason selection task (and people fail at it) and use that as the demonstration, so that people can see themselves fail in a real life situation, rather than in a logical puzzle. People already realize they might not be very good at generalized logic/math, I'm trying to demonstrate that the general logic applies to real life as well.

Well the thing is that people actually get this right in real life (e.g. with the rule 'to drink you must be over 18'). I need something that occurs in real life and people fail at it.

I'm planning on doing a presentation on cognitive biases and/or behavioral economics (Kahneman et al) in front of a group of university students (20-30 people). I want to start with a short experiment / demonstration (or two) that will demonstrate to the students that they are, in fact, subject to some bias or failure in decision making. I'm looking for suggestions on what experiment I can perform within 30 minutes (can be longer if it's an interesting and engaging task, e.g. a game). The important thing is that whatever is being demonstrated has to be relevant to most people's everyday lives. Any ideas?

I also want to mention that I can get assistants for the experiment if needed.

Edit: Has anyone at CFAR or at rationality minicamps done something similar? Who can I contact to inquire about this?

I don't think this deserves its own top level discussion post and I suspect most of the downvotes are for this reason. Maybe use the open thread next time?