Posts

Comments

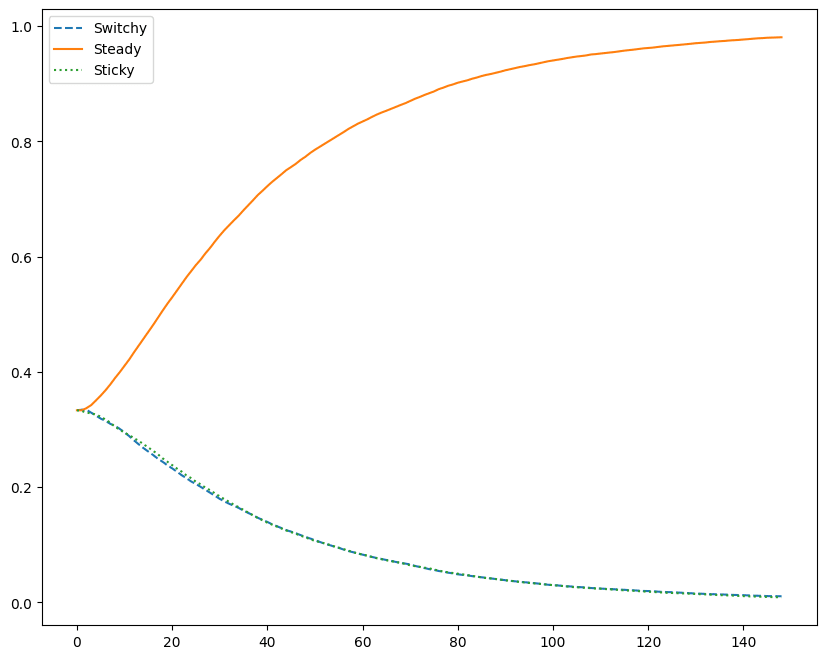

I agree with @James Camacho, at least intuitively, that stickiness in the space of observations is equivalent to switchiness in the space of their prefix XORs and vice versa. Also, I tried to replicate, and didn't observe the mentioned effect, so maybe one of us has a bug in the simulation.

I agree that if a DT is trained on trajectories which sometimes contain unoptimal actions, but the effect size of this mistake is small compared to the inherent randomness of the return, the learned policy will also take such unoptimal action, though with a bit less frequency. (By inherent randomness I mean, in this case, the fact that Player 2 pushes the button randomly and independently of the actions of Player 1)

But where do these trajectories come from? If you take them from another RL algorithm, then the optimization is already there. And if you start from random trajectories, it seems to me that one iteration of DT will not be enough, because due to this randomness, the learned policy will also be quite random. And when the transformers starts producing reasonable actions, it will probably have fixed the mistake as well.

Another way to see it is to consider DT as doing behaviour cloning from the certain fraction of best trajectories (they call it %BC in the paper and compare DT with it on many benchmarks). It ignores generalization, of course, but that shouldn't be relevant for this case. Now, what happens if you take a lot of random trajectories in an environment with a nondeterministic reward and select those which happened to get high rewards? They will contain some mix of good and lucky moves. To remove the influence of luck, you run each of them again, and filter by new rewards (i.e. training a DT on the trajectories generated by previous DT). And so on until the remaining trajectories are all getting a high average reward. I think that in your example at this point the n-th iteration of DT will learn to disconnect the button with high probability on the first move, and that this probability was gradually rising during the previous iterations (because each time the agents who disconnect the button were slightly more likely to perform well, and so they were slightly overrepresented in the population of winners).

That example with traders was to show that in the limit these non EU-maximizers actually become EU-maximizers, now with linear utility instead of logaritmic. And in other sections I tried to demonstrate that they are not EU-maximizers for a finite number of agents.

First, in the expression for their utility based on the outcome distribution, you integrate something of the form, a quadratic form, instead of as you do to compute expected utility. By itself it doesn't prove that there is no utility function, because there might be some easy cases like , and I didn't rigorously proof that this utility function can't be split, though it feels very unlikely to me that something can be done with such non-linearity.

Second, in the example about Independence axiom we have , which should have been equal if was equivalent to expectation of some utility function.