Comments

This looks very useful. That said, I think recent improvements in smaller, quantized open-weight models (such as Gemma-2, Qwen-2.5, or Phi-3.5) have made it much more reasonable to run a model locally for this purpose instead of calling a remote API. Many people find the idea of sending data about the webpages they visit to OpenAI repulsive. A local model would also be cheaper than huge models like GPT-4, although compared with budget proprietary models like Gemini-2.0-Flash, the gain in benefit/cost ratio would be negligible.
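
A minimal sketch of what the local option could look like. It assumes the model is served by Ollama on its default port (11434) and pulled under a tag such as `qwen2.5:7b`. The helper names `build_local_request` / `summarize_locally` and the summarization prompt are hypothetical, not part of this project. With this setup the page text never leaves the machine:

```python
import json
import urllib.request

# Assumption: an Ollama server is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_local_request(page_text: str, model: str = "qwen2.5:7b") -> urllib.request.Request:
    """Build (but do not send) a request asking a local model to summarize page text.

    The helper name, prompt, and default model tag are illustrative assumptions.
    """
    payload = {
        "model": model,
        "prompt": f"Summarize this webpage:\n\n{page_text}",
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize_locally(page_text: str, model: str = "qwen2.5:7b") -> str:
    """Send the request; with stream=False, Ollama puts the completion in "response"."""
    with urllib.request.urlopen(build_local_request(page_text, model)) as resp:
        return json.loads(resp.read())["response"]
```

Swapping between Gemma-2, Qwen-2.5, or Phi-3.5 would then only mean changing the `model` tag, since the request shape stays the same.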

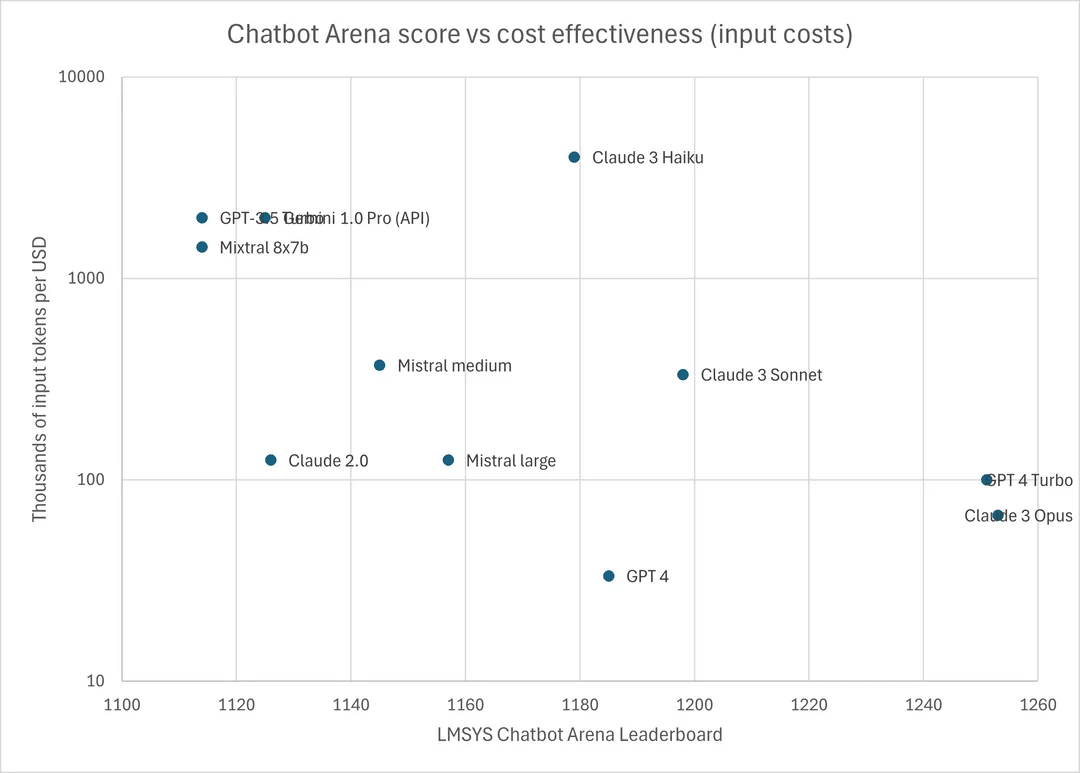

Claude API support would be great, since Claude 3 models are highly competitive: Claude 3 Haiku performs similarly to GPT-4 at a fraction of the cost, and Claude 3 Opus outperforms GPT-4 Turbo on many tasks.
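
A rough sketch of what a Claude backend might send. It assumes the public Anthropic Messages API (`POST /v1/messages` with the `anthropic-version: 2023-06-01` header) and the `claude-3-haiku-20240307` model ID. The helper names, prompt, and `max_tokens` value are hypothetical. Compared with OpenAI-style requests, the main differences are the `x-api-key` auth header, the required version header, and the mandatory `max_tokens` field:

```python
import json
import os
import urllib.request

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"


def build_claude_request(
    page_text: str, api_key: str, model: str = "claude-3-haiku-20240307"
) -> urllib.request.Request:
    """Build (but do not send) a Messages API request; names and prompt are illustrative."""
    payload = {
        "model": model,
        "max_tokens": 512,  # required by the Messages API; the value is an arbitrary choice
        "messages": [
            {"role": "user", "content": f"Summarize this webpage:\n\n{page_text}"}
        ],
    }
    return urllib.request.Request(
        ANTHROPIC_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def summarize_with_claude(page_text: str) -> str:
    """Send the request using a key from ANTHROPIC_API_KEY and join the text blocks."""
    req = build_claude_request(page_text, os.environ["ANTHROPIC_API_KEY"])
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read())
    # The reply's "content" is a list of blocks; text blocks carry a "text" field.
    return "".join(b["text"] for b in body["content"] if b["type"] == "text")
```

Switching to Opus would only require passing a different `model` ID.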