Posts

LLMs Universally Learn a Feature Representing Token Frequency / Rarity

2024-06-30T02:48:01.839Z

Mathematical Circuits in Neural Networks

2022-09-22T03:48:21.154Z

Comments

Comment by Sean Osier on LLMs Universally Learn a Feature Representing Token Frequency / Rarity · 2024-07-01T04:12:47.203Z

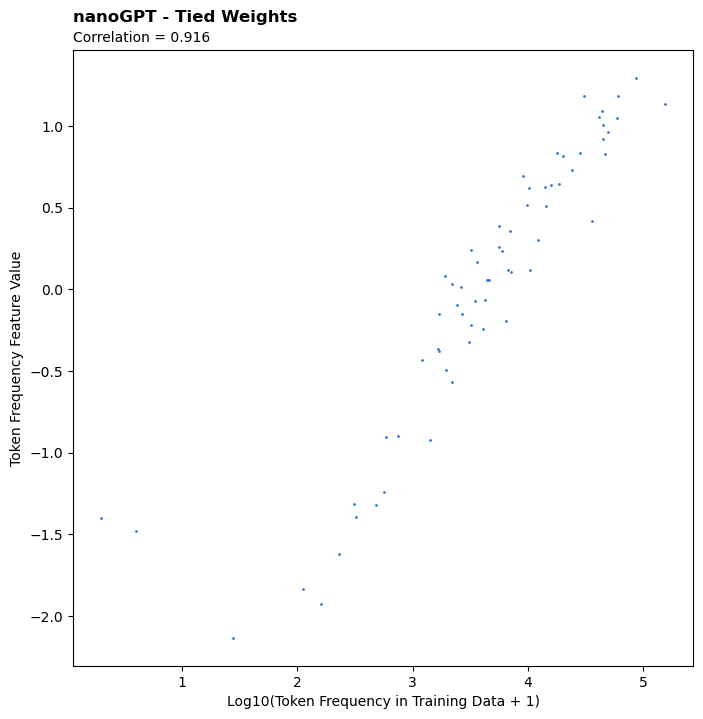

Very true. If a token truly never appears in the training data, it wouldn't be trained / learned at all. Similarly, if it's only seen once or twice, it ends up "undertrained" and the token frequency feature doesn't perform as well on it. The two least frequent tokens in the nanoGPT model are an excellent example of this: they appear only once or twice, so they don't get properly learned and end up as big outliers.

Comment by Sean Osier on Mathematical Circuits in Neural Networks · 2022-09-23T00:11:53.417Z

Thanks for the comment! I didn't get around to testing that, but that's one of the exact things I had in mind for my "Next Steps" #3 on training regimens that more reliably produce optimal, interpretable models.

Comment by Sean Osier on Mathematical Circuits in Neural Networks · 2022-09-23T00:05:37.123Z

Interesting, I'll definitely look into that! Sounds quite related.