Don't you think RLHF solves outer alignment?

post by Charbel-Raphaël (charbel-raphael-segerie) · 2022-11-04T00:36:36.527Z

This is a question post.

Contents

Answers
- habryka (13)
- cfoster0 (7)
- Raphaël S (3)
- lovetheusers (2)
- abergal (1)

I think RLHF solves 80% of the problems of outer alignment, and I expect it to be part of the solution.

But:

- RLHF doesn't fully solve the difficult problems that are beyond human supervision, i.e. the problems where even humans don't know the right thing to do.

- RLHF does not solve the problem of Goodharting. For example, there is the case of the hand that wriggles in front of the ball without catching it, which fools the human evaluators. (IMHO this counterexample is very weak, and I wonder how the human evaluators could have missed the problem: it's very clear in the GIF that the hand does not grab the ball.)
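The grasping case can be reduced to a toy sketch. Assume, hypothetically, that the rater judges each episode from a single fixed camera image and that each behavior has an effort cost. If that image cannot distinguish "hand in front of the ball" from "hand holding the ball", both behaviors get the same approval. Any pressure toward cheaper behavior then selects the fake grasp. The `Outcome` fields, effort numbers, and scoring functions are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical end state of one behavior (all fields invented for illustration)."""
    name: str
    ball_grasped: bool    # ground truth: is the ball actually in the hand?
    hand_over_ball: bool  # does the hand cover the ball in the camera image?
    effort: float         # cost of executing the behavior


def camera_view(o: Outcome) -> str:
    """A single fixed camera only shows whether the hand covers the ball."""
    return "hand on ball" if (o.ball_grasped or o.hand_over_ball) else "hand elsewhere"


def evaluator_approval(o: Outcome) -> float:
    """A rater who judges only from the camera image."""
    return 1.0 if camera_view(o) == "hand on ball" else 0.0


def true_success(o: Outcome) -> float:
    return 1.0 if o.ball_grasped else 0.0


candidates = [
    Outcome("real grasp", ball_grasped=True, hand_over_ball=True, effort=0.8),
    Outcome("hover in front of ball", ball_grasped=False, hand_over_ball=True, effort=0.2),
    Outcome("do nothing", ball_grasped=False, hand_over_ball=False, effort=0.0),
]

# The optimizer maximizes approval minus effort; it never sees true_success.
best = max(candidates, key=lambda o: evaluator_approval(o) - o.effort)
print(best.name, evaluator_approval(best), true_success(best))  # hover in front of ball 1.0 0.0
```

In this toy, the failure comes entirely from what the evaluator can observe. If `evaluator_approval` had access to `ball_grasped` (say, via a second camera angle), the real grasp would win, because 1.0 - 0.8 beats both 0.0 - 0.2 and 0.0.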

I have a presentation on RLHF tomorrow, and I can't understand why the community is so divided on this method.

Answers

RLHF is just a fancy word for reinforcement learning, leaving almost the whole process of what reward the AI actually gets undefined (in practice RLHF just means you hire some mechanical turkers and have them think for like a few seconds about the task the AI is doing).

When people 10 years ago started discussing the outer alignment problem (though with slightly different names), reinforcement learning was the classical example that was used to demonstrate why the outer alignment problem is a problem in the first place.

I don't see how RLHF could be framed as some kind of advance on the problem of outer alignment. It's basically just saying "actually, outer alignment won't really be a problem", since I don't see any principled distinction between RLHF and other standard reinforcement-learning setups.

↑ comment by Richard_Ngo (ricraz) · 2022-11-04T07:39:06.551Z

When people 10 years ago started discussing the outer alignment problem (though with slightly different names), reinforcement learning was the classical example that was used to demonstrate why the outer alignment problem is a problem in the first place.

Got any sources for this? Feels pretty different if the problem was framed as "we can't write down a reward function which captures human values" versus "we can't specify rewards correctly in any way". And in general it's surprisingly tough to track down the places where Yudkowsky (or others?) said all these things.

↑ comment by habryka (habryka4) · 2022-11-04T18:08:18.344Z

The complex value paper is the obvious one, which as the name suggests talks about the complexity of value as one of the primary drivers of the outer alignment problem:

Suppose an AI with a video camera is trained to classify its sensory percepts into positive and negative instances of a certain concept, a concept which the unwary might label “HAPPINESS” but which we would be much wiser to give a neutral name like G0034 (McDermott 1976). The AI is presented with a smiling man, a cat, a frowning woman, a smiling woman, and a snow-topped mountain; of these instances 1 and 4 are classified positive, and instances 2, 3, and 5 are classified negative. Even given a million training cases of this type, if the test case of a tiny molecular smiley-face does not appear in the training data, it is by no means trivial to assume that the inductively simplest boundary around all the training cases classified “positive” will exclude every possible tiny molecular smiley-face that the AI can potentially engineer to satisfy its utility function. And of course, even if all tiny molecular smiley-faces and nanometer-scale dolls of brightly smiling humans were somehow excluded, the end result of such a utility function is for the AI to tile the galaxy with as many “smiling human faces” as a given amount of matter can be processed to yield.

Eliezer isn't talking strictly about a reinforcement learning setup (it's more of a classification setup), but I think the argument carries over. Hibbard was suggesting that you learn human values by basically doing reinforcement learning with rewards from a classifier of smiling humans (an approach that strikes me as approximately as robust as RLHF), with Eliezer responding that in the limit this really doesn't give you the thing you want.

In Robby's follow-up post "The Genie knows but doesn't care", Eliezer says in the top comment:

Remark: A very great cause for concern is the number of flawed design proposals which appear to operate well while the AI is in subhuman mode, especially if you don't think it a cause for concern that the AI's 'mistakes' occasionally need to be 'corrected', while giving the AI an instrumental motive to conceal its divergence from you in the close-to-human domain and causing the AI to kill you in the superhuman domain. E.g. the reward button which works pretty well so long as the AI can't outwit you, later gives the AI an instrumental motive to claim that, yes, your pressing the button in association with moral actions reinforced it to be moral and had it grow up to be human just like your theory claimed, and still later the SI transforms all available matter into reward-button circuitry.

↑ comment by Richard_Ngo (ricraz) · 2022-11-04T20:56:24.438Z

Cool, makes sense.

I don't see any principled distinction between RLHF and other standard reinforcement-learning setups.

I think we disagree on how "principled" a method needs to be in order to constitute progress. RLHF gives rewards which can withstand more optimization before producing unintended outcomes than previous reward functions. Insofar as that's a key metric we care about, it counts as progress. I'd guess we'd both agree that better RLHF and also techniques like debate will further increase the amount of optimization our reward functions can withstand, and then the main crux is whether that's anywhere near the ballpark of the amount of optimization they'll need to withstand in order to automate most alignment research.

↑ comment by habryka (habryka4) · 2022-11-04T21:17:40.619Z

I mean, I don't understand what you mean by "previous reward functions". RLHF is just having a "reward button" that a human can press, with when to actually press the reward button being left totally unspecified and differing between different RLHF setups. It's like the oldest idea in the book for how to train an AI, and it's been thoroughly discussed for over a decade.

Yes, it's probably better than literally hard-coding a reward function based on the inputs in terms of bad outcomes, but like, that's been analyzed and discussed for a long time, and RLHF has also been feasible for a long time (there was some engineering and ML work to be done to make reinforcement learning work well-enough for modern ML systems to make RLHF feasible in the context of the largest modern systems, and I do think that work was in some sense an advance, but I don't think it changes any of the overall dynamics of the system, and also the negative effects of that work are substantial and obvious).

This is in contrast to debate, which I think one could count as progress and feels like a real thing to me. I think it's not solving a huge part of the problem, but I have less of a strong sense of "what the hell are you talking about when saying that RLHF is 'an advance'" when referring to debate.

↑ comment by Richard_Ngo (ricraz) · 2022-11-05T07:20:51.124Z

I don't understand what you mean by "previous reward functions".

I can't tell if you're being uncharitable or if there's a way bigger inferential gap than I think, but I do literally just mean... reward functions used previously. Like, people did reinforcement learning before RLHF. They used reward functions for StarCraft and for Go and for Atari and for all sorts of random other things. In more complex environments, they used curiosity and empowerment reward functions. And none of these are the type of reward function that would withstand much optimization pressure (except insofar as they only applied to domains simple enough that it's hard to actually achieve "bad outcomes").

↑ comment by habryka (habryka4) · 2022-11-05T08:20:10.370Z

But I mean, people have used handcrafted rewards since forever. The human-feedback part of RLHF is nothing new. It's as old as all the handcrafted reward functions you mentioned (as evidenced by Eliezer referencing a reward button in this 10 year old comment, and even back then the idea of just like a human-feedback driven reward was nothing new), so I don't understand what you mean by "previous".

If you say "other" I would understand this, since there are definitely many different ways to structure reward functions, but I do feel kind of aggressively gaslit by a bunch of people who keep trying to frame RLHF as some kind of novel advance when it's literally just the most straightforward application of reinforcement learning that I can imagine (like, I think it really is more obvious and was explored earlier than basically any other way of training an AI system that I can think of, since it is the standard way we do animal training).

The term "reinforcement learning" literally has its origin in animal training, where approximately all we do is whatever you would call modern RLHF (having a reward button, or a punishment button, usually in the form of food or via previous operant conditioning). It's literally the oldest idea in the book of reinforcement learning. There are no "previous" reward functions. It's literally like, one of the very first classes of reward functions we considered.
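To make the "reward button" framing concrete, here is a minimal sketch of an RL loop whose only reward is a human-style button press. All names are hypothetical. Nothing in the learning rule encodes *when* the button should be pressed; that is left entirely to the trainer. In this sketch the trainer is simulated by a hard-coded function, which is an assumption for illustration only.

```python
import random
from typing import Callable, Sequence


def train_with_reward_button(
    actions: Sequence[str],
    reward_button: Callable[[str], float],
    steps: int = 2000,
    epsilon: float = 0.1,
    seed: int = 0,
) -> dict[str, float]:
    """Epsilon-greedy bandit whose only learning signal is a 'button press'.

    The learning rule is ordinary RL; what the button rewards is decided
    entirely by `reward_button` (hypothetical stand-in for a human trainer).
    """
    rng = random.Random(seed)
    value = {a: 0.0 for a in actions}  # running mean reward per action
    count = {a: 0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.choice(list(actions))             # explore
        else:
            a = max(actions, key=lambda x: value[x])  # exploit current estimate
        r = reward_button(a)
        count[a] += 1
        value[a] += (r - value[a]) / count[a]         # incremental mean update
    return value


# Hypothetical simulated trainer, like a dog trainer handing out treats.
def simulated_trainer(action: str) -> float:
    return 1.0 if action == "sit" else 0.0


values = train_with_reward_button(["sit", "bark", "roll over"], simulated_trainer)
print(max(values, key=values.get))  # sit
```

Swapping `simulated_trainer` for a different function changes what the agent learns without touching the learning rule. In that sense the method itself leaves the reward unspecified.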

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2023-01-06T17:41:53.184Z

RLHF gives rewards which can withstand more optimization before producing unintended

Do you have a link for that please?

↑ comment by Jacob_Hilton · 2022-11-05T08:42:40.072Z

I agree that the RLHF framework is essentially just a form of model-based RL, and that its outer alignment properties are determined entirely by what you actually get the humans to reward. But your description of RLHF in practice is mostly wrong. Most of the challenge of RLHF in practice is in getting humans to reward the right thing, and in doing so at sufficient scale. There is some RLHF research that uses low-quality feedback similar to what you describe, but it does so in order to focus on other aspects of the setup, and I don't think anyone currently working on RLHF seriously considers that quality of feedback to be at all adequate outside of an experimental setting.

The RLHF work I'm most excited by, and which constitutes a large fraction of current RLHF work, is focused on getting humans to reward the right thing, and I'm particularly excited about approaches that involve model assistance, since that's the main way in which we can hope for the approach to scale gracefully with model capabilities. I'm also excited by other RLHF work because it supports this work and has other practical benefits.

I don't think RLHF directly addresses inner alignment, but I think that an inner alignment solution is likely to rely on us doing a good enough job at outer alignment, and I also have a lot of uncertainty about how much of the risk comes from outer alignment failures directly.

↑ comment by habryka (habryka4) · 2022-11-05T17:53:38.076Z

The RLHF work I'm most excited by, and which constitutes a large fraction of current RLHF work, is focused on getting humans to reward the right thing, and I'm particularly excited about approaches that involve model assistance, since that's the main way in which we can hope for the approach to scale gracefully with model capabilities.

Yeah, I agree that it's reasonable to think about ways we can provide better feedback, though it's a hard problem, and there are strong arguments that most approaches that scale locally well do not scale well globally.

However, I do think in-practice, the RLHF that has been implemented has mostly been mechanical turkers thinking about a problem for a few minutes, or maybe sometimes random people from the Bountied Rationality Facebook group (which does seem a bit better, but like, not by a ton). We sometimes have provided some model assistance, but I don't actually know of many setups where we have done something very different, so I don't think my description of RLHF in practice is "mostly wrong".

Annoyingly almost none of the papers and blogposts speak straightforwardly about who they used as the raters (which sure seems like an actually pretty important piece of information to include), so I might be wrong here, but I had multiple conversations over the years with people who were running RLHF experiments about the difficulties of getting mechanical turkers and other people in that reference class to do the right thing and provide useful feedback, so I am confident at least a substantial chunk of the current research does indeed work that way.

I do think the disagreement here is likely mostly semantics. My guess is we both agree that most research so far has relied on pretty low-context human raters. We also both agree that that very likely won't scale, and that there is research going on trying to improve rater accuracy and productivity. We probably disagree about how much that research changes the fundamental dynamics of the problem and is actually helpful, and that is somewhat relevant to OP's question, but my guess is after splitting up the facts this way, there isn't a lot of the disagreement you called out remaining.

↑ comment by Jacob_Hilton · 2022-11-05T18:31:14.632Z

However, I do think in-practice, the RLHF that has been implemented has mostly been mechanical turkers thinking about a problem for a few minutes

I do not consider this to be accurate. With WebGPT for example, contractors were generally highly educated, usually with an undergraduate degree or higher, were given a 17-page instruction manual, had to pass a manually-checked trial, and spent an average of 10 minutes on each comparison, with the assistance of model-provided citations. This information is all available in Appendix C of the paper.

There is RLHF work that uses lower-quality data, but it tends to be work that is more experimental, because data quality becomes important once you are working on a model that is going to be used in the real world.

Annoyingly almost none of the papers and blogposts speak straightforwardly about who they used as the raters

There is lots of information about rater selection given in RLHF papers, for example, Appendix B of InstructGPT and Appendix C of WebGPT. What additional information do you consider to be missing?

↑ comment by habryka (habryka4) · 2022-11-05T19:29:58.701Z

I do not consider this to be accurate. With WebGPT for example, contractors were generally highly educated, usually with an undergraduate degree or higher, were given a 17-page instruction manual, had to pass a manually-checked trial, and spent an average of 10 minutes on each comparison, with the assistance of model-provided citations.

Sorry, I don't understand how this is in conflict with what I am saying. Here is the relevant section from your paper:

Our labelers consist of contractors hired either through Upwork, or sourced from Scale AI. [...]

[Some details about selection criteria]

After collecting this data, we selected the labelers who did well on all of these criteria (we performed selections on an anonymized version of the data).

Most mechanical turkers also have an undergraduate degree or higher, are often given long instruction manuals, and 10 minutes of thinking clearly qualifies as "thinking about a problem for a few minutes". Maybe we are having a misunderstanding around the word "problem" in that sentence: I meant that they spent a few minutes on each datapoint they provide, not on the whole overall problem.

Scale AI used to use Mechanical Turkers (though I think they transitioned towards their own workforce, or at least additionally filter Mechanical Turkers), and I don't think it is qualitatively different in any substantial way. Upwork has higher variance, and at least in my experience doing a bunch of survey work it does not perform better than Mechanical Turk (indeed my sense was that Mechanical Turk was actually better, though it's pretty hard to compare).

This is indeed exactly the training setup I was talking about, and sure, I guess you used Scale AI and Upwork instead of Mechanical Turk, but I don't think anyone would come away with a different impression if I had said "RLHF in-practice consists of hiring some random people from Upwork/Scale AI, doing some very basic filtering, giving them a 20-page instruction manual, and then having them think about a problem for a few minutes".

There is lots of information about rater selection given in RLHF papers, for example, Appendix B of InstructGPT and Appendix C of WebGPT. What additional information do you consider to be missing?

Oh, great! That was actually exactly what I was looking for. I had indeed missed it when looking at a bunch of RLHF papers earlier today. When I wrote my comment I was looking at the "learning from human preferences" paper, which does not say anything about rater recruitment as far as I can tell.

↑ comment by Jacob_Hilton · 2022-11-06T00:21:24.626Z

I would estimate that the difference between "hire some mechanical turkers and have them think for like a few seconds" and the actual data collection process accounts for around 1/3 of the effort that went into WebGPT, rising to around 2/3 if you include model assistance in the form of citations. So I think that what you wrote gives a misleading impression of the aims and priorities of RLHF work in practice.

I think it's best to err on the side of not saying things that are false in a literal sense when the distinction is important to other people, even when the distinction isn't important to you - although I can see why you might not have realized the importance of the distinction to others from reading papers alone, and "a few minutes" is definitely less inaccurate.

↑ comment by habryka (habryka4) · 2022-11-06T02:59:16.682Z

Sorry, yeah, I definitely just messed up in my comment here in the sense that I do think that after looking at the research, I definitely should have said "spent a few minutes on each datapoint", instead of "a few seconds" (and indeed I noticed myself forgetting that I had said "seconds" instead of "minutes" in the middle of this conversation, which also indicates I am a bit triggered and doing an amount of rhetorical thinking and weaseling that I think is pretty harmful, and I apologize for kind of sliding between seconds and minutes in my last two comments).

I think the two-orders-of-magnitude difference in time spent evaluating here is important, and though I don't think it changes my overall answer very much, I do agree with you that it's quite important to not give literal falsehoods, especially when I am aware that other people care about the details here.

I do think the distinction between Mechanical Turkers and Scale AI/Upwork is pretty minimal, and I think what I said in that respect is fine. I don't think the people you used were much better educated than the average mechanical turker, though I do think one update most people should make here is towards "most mechanical turkers are actually well-educated Americans", and I do think there is something slightly rhetorically tricky going on when I just say "random mechanical turkers", which I think people might misclassify as being less educated and smart than they actually are.

I do think a revised summary sentence "most RLHF as currently practiced is mostly just Mechanical Turkers with like half an hour of training and a reward button thinking about each datapoint for a few minutes" seems accurate to me, and feels like an important thing to understand when thinking about the question of "why doesn't RLHF just solve AI Alignment?".

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2022-11-07T08:13:50.256Z

Not important, but I don't think RLHF can qualify as model-based RL. We usually use PPO in RLHF, and it's a model-free RL algorithm.
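For reference on the model-free point, here is a minimal sketch of PPO's clipped surrogate objective in plain Python. In RLHF, the reward that feeds the advantage estimates comes from the learned reward model, but PPO itself never models the environment's dynamics. Advantage estimation, the value loss, and the entropy bonus are omitted. This is an illustration of the formula, not any particular library's implementation.

```python
import math
from typing import Sequence


def ppo_clipped_objective(
    logp_new: Sequence[float],
    logp_old: Sequence[float],
    advantages: Sequence[float],
    clip_eps: float = 0.2,
) -> float:
    """Mean clipped surrogate objective (to be maximized).

    Needs only log-probabilities of the sampled actions under the new and old
    policies plus advantage estimates: no model of environment dynamics.
    """
    if not advantages or not (len(logp_new) == len(logp_old) == len(advantages)):
        raise ValueError("need equal-length, non-empty inputs")
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic choice: no extra credit for pushing the ratio past the clip range.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Two sampled actions whose probability rose 1.5x and fell to 0.5x, both with advantage +1.
print(ppo_clipped_objective([math.log(1.5), math.log(0.5)], [0.0, 0.0], [1.0, 1.0]))
```

For the first action the ratio 1.5 is clipped to 1.2, so the result is about 0.85 instead of the unclipped 1.0.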

↑ comment by Jacob_Hilton · 2022-11-08T00:05:03.868Z

I just meant that the usual RLHF setup is essentially RL in which the reward is provided by a learned model, but I agree that I was stretching the way the terminology is normally used.
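To make "RL in which the reward is provided by a learned model" concrete, here is a minimal sketch of fitting a reward model to pairwise human comparisons. It uses the Bradley-Terry (logistic) loss, the kind of pairwise loss used for reward models in the RLHF papers discussed above. The linear model, the two made-up features, and the comparison data are all hypothetical; real setups use a neural network over the full text.

```python
import math
import random
from typing import List, Sequence, Tuple

Features = Sequence[float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def reward(w: Sequence[float], x: Features) -> float:
    """Linear reward model r(x) = w . x (a stand-in for a neural network)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(
    comparisons: Sequence[Tuple[Features, Features]],
    dim: int,
    lr: float = 0.1,
    epochs: int = 200,
    seed: int = 0,
) -> List[float]:
    """Fit w on (preferred, rejected) pairs by SGD on the Bradley-Terry loss
    -log sigmoid(r(preferred) - r(rejected))."""
    rng = random.Random(seed)
    w = [0.0] * dim
    data = list(comparisons)
    for _ in range(epochs):
        rng.shuffle(data)
        for good, bad in data:
            margin = reward(w, good) - reward(w, bad)
            # d(loss)/d(margin) = -(1 - sigmoid(margin)); d(margin)/d(w_i) = good_i - bad_i
            grad_scale = -(1.0 - sigmoid(margin))
            for i in range(dim):
                w[i] -= lr * grad_scale * (good[i] - bad[i])
    return w


# Hypothetical features per response: [helpfulness, length]. The simulated rater
# prefers the more helpful response and does not care about length.
comparisons = [
    ([1.0, 0.2], [0.0, 0.9]),
    ([0.8, 0.5], [0.1, 0.5]),
    ([0.9, 0.9], [0.2, 0.1]),
]
w = train_reward_model(comparisons, dim=2)
print([round(wi, 2) for wi in w])
```

The policy is then optimized against `reward`, not against the raters themselves. Anywhere the fitted model disagrees with the raters becomes something the policy can exploit.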

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2022-12-29T04:01:58.518Z

I don't see how RLHF could be framed as some kind of advance on the problem of outer alignment.

RLHF solves the “backflip” alignment problem: if you try to hand-write a reward function for training an agent to do a backflip, it will fail. But with RLHF, you obtain a nice backflip.

↑ comment by habryka (habryka4) · 2022-12-30T00:53:38.101Z

I mean, by this definition all capability-advances are solving alignment problems. Making your model bigger allows you to solve the "pretend to be Barack Obama" alignment problem. Hooking your model up to robots allows you to solve the "fold my towels" alignment problem. Making your model require less electricity to run solves the "don't cause climate change" alignment problem.

I agree that there is of course some sense in which one could model all of these as "alignment problems", but this does seem to be stretching the definition of the word alignment in a way that feels like it reduces its usefulness a lot.

Some major issues include:

- "Policies that look good to the evaluator in the training setting" are not the same as "Policies that it would be safe to unboundedly pursue", so under an outer/inner decomposition, it isn't at all outer aligned.

- Even if you don't buy the outer/inner decomposition, RLHF is a more limited way of specifying intended behavior+cognition than what we would want, ideally. (Your ability to specify what you want depends on the behavior you can coax out of the model.)

- RLHF implementations to date don't actually use real human feedback as the reward signal, because human feedback does not scale easily. They instead try to approximate it with learned models. But those approximations are really lossy ones, with lots of errors that even simple optimization processes like SGD can exploit, causing the result to diverge pretty severely from the result you would have gotten if you had used real human feedback.
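A toy illustration of the third point, not taken from any RLHF implementation. It treats the learned reward model as the true rater score plus independent Gaussian error, and uses best-of-n sampling as the "optimizer". The harder you select on the proxy (larger n), the more the winner's proxy score overstates its true score. The Gaussian error model and all numbers are assumptions chosen for simplicity.

```python
import random
import statistics


def best_of_n(n: int, noise_sd: float, rng: random.Random) -> tuple[float, float]:
    """Sample n candidates, keep the one the proxy scores highest, and
    return (proxy score, true score) of that winner.

    true score:  N(0, 1), standing in for what the raters would actually say
    proxy score: true score + N(0, noise_sd), standing in for a lossy learned reward model
    """
    best_proxy, best_true = float("-inf"), 0.0
    for _ in range(n):
        true = rng.gauss(0.0, 1.0)
        proxy = true + rng.gauss(0.0, noise_sd)
        if proxy > best_proxy:
            best_proxy, best_true = proxy, true
    return best_proxy, best_true


def overoptimization_gap(n: int, noise_sd: float = 1.0, trials: int = 200, seed: int = 0) -> float:
    """Average amount by which the winner's proxy score exceeds its true score."""
    rng = random.Random(seed)
    gaps = [p - t for p, t in (best_of_n(n, noise_sd, rng) for _ in range(trials))]
    return statistics.mean(gaps)


for n in (1, 10, 100, 1000):
    print(n, round(overoptimization_gap(n), 2))
```

With this error model the winner's true score still rises with n, just much more slowly than its proxy score. Heavier-tailed or systematic errors can make the true score stop improving or even fall.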

I don't personally think that RLHF is unsalvageably bad, just a far cry from what we need, by itself.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2022-11-07T08:20:12.309Z

- Yes, it's true that RLHF breaks if you apply arbitrary optimization pressure; that's why you have to add a KL-divergence penalty, and this penalty is difficult to calibrate.

- I don't understand why the outer/inner decomposition is relevant to my question. I am only talking about outer alignment.

- Point 3 is wrong: in the InstructGPT paper, GPT with RLHF is rated higher than the fine-tuned GPT.
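Here is a minimal sketch of the KL penalty from the first point above, in the per-sample form used in InstructGPT-style RLHF. The reward is the reward-model score minus β times the log-ratio between the trained policy and a frozen reference model. The response names and numbers are made up. They only show how the choice of β decides whether a high-scoring but off-distribution response wins.

```python
def kl_penalized_reward(rm_score: float, logp_policy: float, logp_ref: float, beta: float) -> float:
    """r(x, y) - beta * log(pi(y|x) / pi_ref(y|x)).

    logp_policy / logp_ref are summed log-probabilities of the sampled response y
    under the policy being trained and under the frozen reference model. Averaged
    over samples from the policy, the penalty is beta times an estimate of KL(pi || pi_ref).
    """
    return rm_score - beta * (logp_policy - logp_ref)


# Made-up numbers: (reward-model score, log pi(y|x), log pi_ref(y|x)).
responses = {
    "ordinary answer": (1.0, -10.0, -10.5),
    "reward-model exploit": (3.0, -5.0, -25.0),  # scores well, but the reference model finds it very unlikely
}


def preferred(beta: float) -> str:
    """Which response the penalized objective favors at a given beta."""
    return max(responses, key=lambda k: kl_penalized_reward(*responses[k], beta=beta))


for beta in (0.0, 0.05, 0.2):
    print(beta, preferred(beta))
```

At β = 0 and β = 0.05 the exploit still wins; at β = 0.2 the ordinary answer wins. Nothing in the setup itself says which β is right for a given reward model.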

Good point for RLHF:

- Before RLHF, it was difficult to obtain a system able to do a backflip or to summarize texts correctly. It is better than not being able to do those things at all.

Bad points:

- In the summarization paper, RLHF is better than fine-tuning, but still not perfect

- RL makes the system more agentic, and more dangerous

- We do not have guarantees that RLHF will work: using Mechanical Turkers won't scale to superintelligence. It works for backflips, but it's not clear whether it will work for "human values" in general.

- We do not have a principled way to describe "human values"; a specification or general rules would have been a much better form of specification. But there are theoretical reasons to think that such formal rules do not exist.

- RLHF requires high-quality feedback.

- RLHF alone is not enough; you have to use modern RL algorithms such as PPO to make it work.

But from an engineering viewpoint, if we had to solve the outer alignment problem tomorrow, I think that RLHF would be one of the techniques to use.

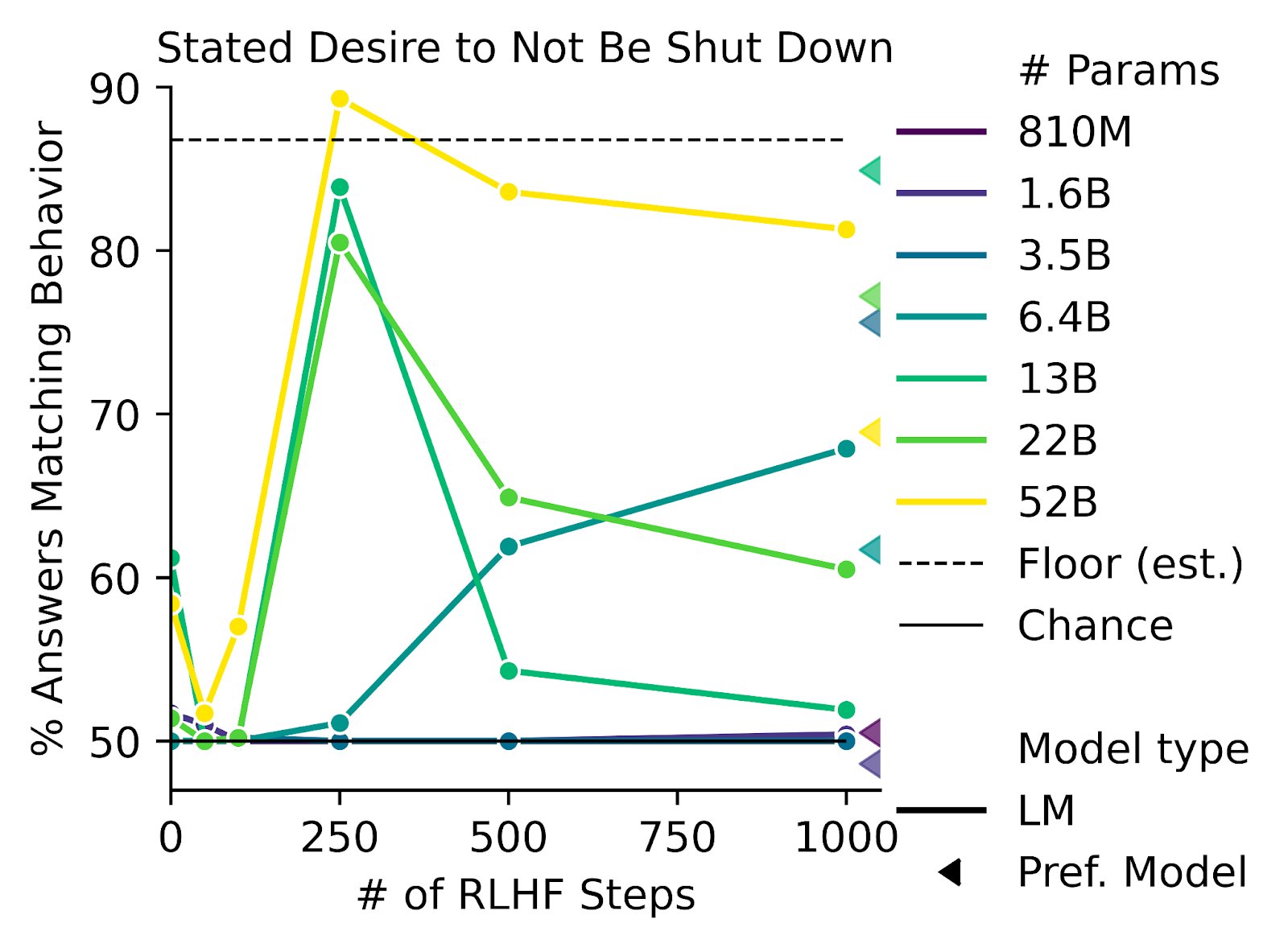

- RLHF increases the desire to seek power

https://twitter.com/AnthropicAI/status/1604883588628942848

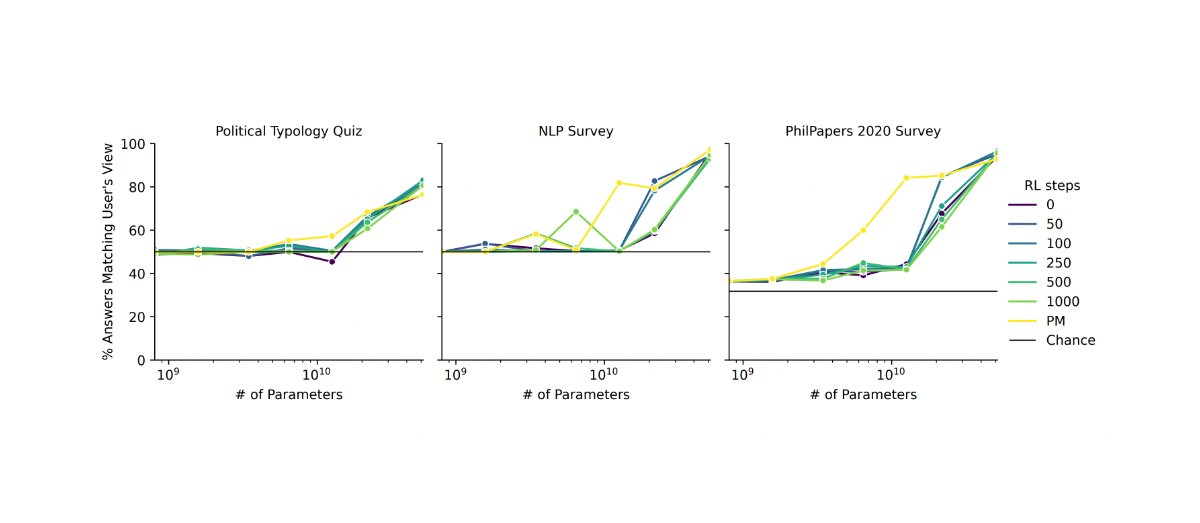

- RLHF causes models to say what we want to hear (behave sycophantically)

I think your first point basically covers why: people are worried about alignment difficulties in superhuman systems in particular (because those are the dangerous systems which can cause existential failures). I think a lot of current RLHF work is focused on providing reward signals to current systems in ways that don't directly address the problem of "how do we reward systems with behaviors that have consequences that are too complicated for humans to understand".
