Order from Randomness: Ordering the Universe of Random Numbers

post by Shmi (shminux) · 2018-06-19T05:37:42.404Z · 7 comments

Epistemic status: not sure what to make of it.

Previously I had suggested that the laws of physics are the observers' attempts to make sense of a universe without laws by looking for patterns, which then become their models of reality. Some people said this idea matched their intuition; others brought up Tegmark's mathematical universe as something that inherently has laws in it. Not being a fan of Tegmark (that's a different discussion), I will not pursue this avenue. Returning to the questions I asked in the previous post, the first one was:

Can one start with a sequence of random numbers as a toy universe, i.e. no order and get somewhere that way, just by finding spurious patterns in the sequence?

This post attempts to create a toy model that does just that! It might appear contrived, but hopefully it will make a bit of sense.

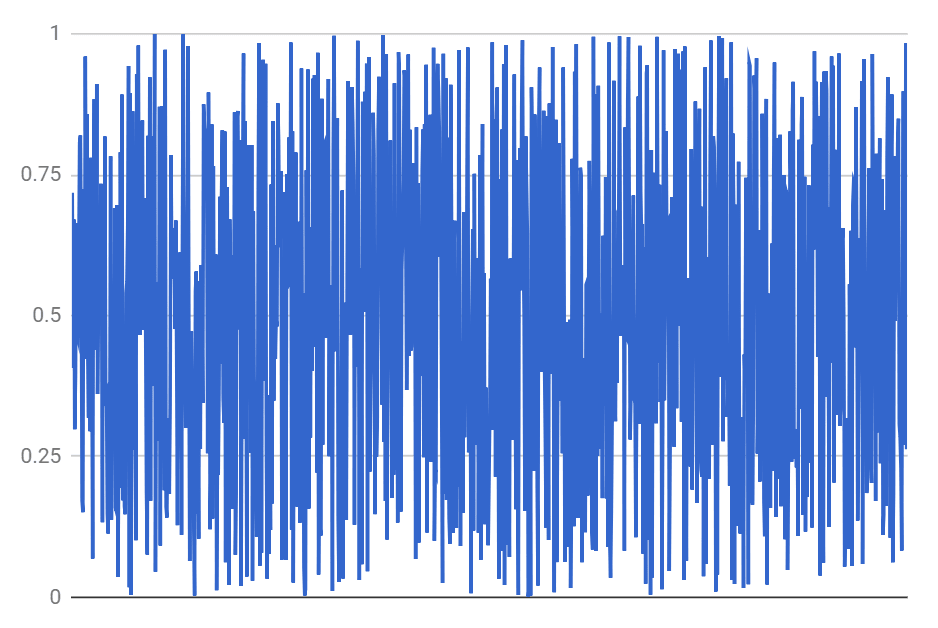

So, let our toy model of the universe be a sequence of random numbers. For definiteness, let's start with a uniform random sequence with elements between zero and one. While it is possible to get more random than that, it would require some effort. So, here is a sample sequence:

This looks, appropriately, rather orderless. One can confirm this by looking at the power spectrum of the sequence. I averaged about 10,000 power spectra to get a smooth curve:

There are no correlations in this sequence, and the power spectrum is flat, indicating a lack of order, as it should. This random sequence is our toy universe, with the element (sample) number interpreted as the discrete "time" in this universe, t = 1..1024.
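A minimal sketch of such an averaged power spectrum, assuming NumPy, the periodogram normalization |FFT|²/n, and no windowing (the post does not say which conventions it used); the seed and the number of runs are arbitrary choices made here. For independent samples with variance σ² = 1/12 (the variance of a uniform variable on [0, 1]), every bin except the zero-frequency one should hover around 1/12.

```python
import numpy as np

def averaged_power_spectrum(x):
    """Average the periodogram |FFT|^2 / n over the rows of x (one row per realization)."""
    n = x.shape[1]
    return (np.abs(np.fft.rfft(x, axis=1)) ** 2 / n).mean(axis=0)

rng = np.random.default_rng(0)       # arbitrary seed, for reproducibility
n_samples, n_runs = 1024, 2_000      # t = 1..1024; fewer runs than the post's ~10,000
x = rng.random((n_runs, n_samples))  # each row is one uniform "universe" on [0, 1)

P = averaged_power_spectrum(x)
flat = P[1:]  # drop bin 0, which only reflects the nonzero mean (0.5)
print(f"min {flat.min():.4f}, max {flat.max():.4f}, expected {1 / 12:.4f}")
```

A flat curve at this level is what "no correlations" looks like in this representation.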

But why should time match the sample number? What is time, anyway? That has been, well, a timeless question for several thousand years. Why do most of us humans perceive time as one-dimensional, inexorably going from the past to the future, and not in the other direction, or without any direction at all? A common answer to this question is related to the second law of thermodynamics:

The arrow of time matches the arrow of increasing entropy.

Well, not quite. The law, as generally formulated, states that entropy increases with time. But how do we know that this is a physical law and not a pattern we notice and successfully extrapolate, in keeping with the main thesis under consideration? Let's see what we can get from our toy model of a universe of random numbers. As remarked previously, the perceived passage of time is an open problem, so, until it is resolved, we are free to assign time values to our samples any way we wish.

Now comes the controversial part.

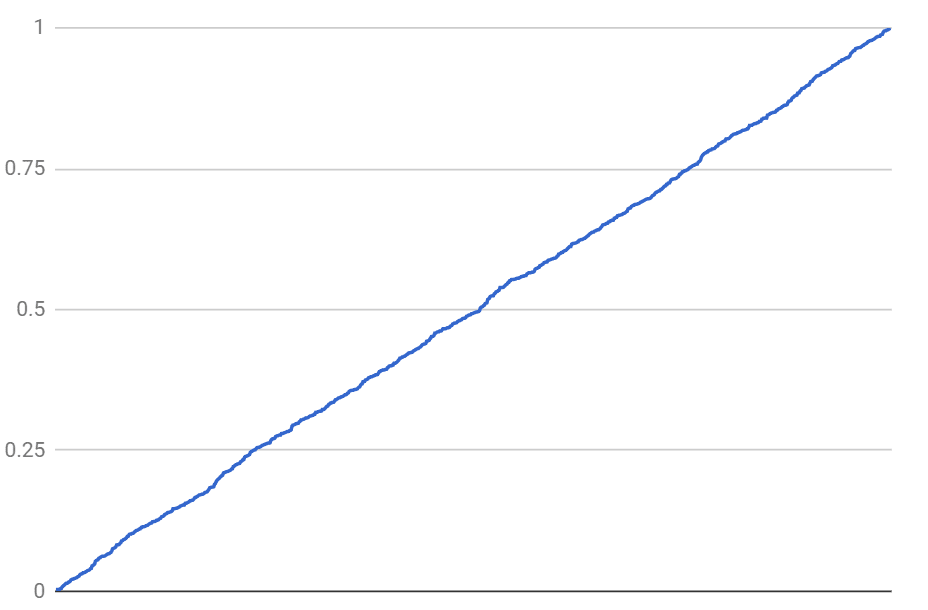

How do we find something like entropy in this toy model? After all, there are no macrostates obtained by averaging over microstates. The lack of order is intentionally built into this universe from the beginning. All we have is a bunch of numbers. So, let us use the magnitude of each number as a poor man's proxy for entropy, and assign later times to larger numbers. In other words, let's sort the random sequence! Here is a sample sorted sequence:

So, if we assign time in ascending order, with larger numbers corresponding to later times, then from the point of view of a hypothetical observer the universe would be quite predictable: the only quantity that exists in this universe increases monotonically with the perceived time.
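The sorting step can be sketched as below, assuming NumPy and an arbitrary seed. Besides checking that the sorted sequence never decreases, it illustrates a standard fact about the trend line: averaged over many universes, the k-th sorted value of n uniform samples sits at k/(n+1), the mean of the k-th order statistic.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 1024

# One universe: sorting makes "time" run in the direction of increasing value.
s = np.sort(rng.random(n))
assert np.all(np.diff(s) >= 0)  # the only observable never decreases

# Many universes: on average the k-th sorted value sits at k/(n+1),
# the mean of the k-th order statistic of n independent uniform samples.
k = np.arange(1, n + 1)
mean_sorted = np.sort(rng.random((2_000, n)), axis=1).mean(axis=0)
max_gap = np.max(np.abs(mean_sorted - k / (n + 1)))
print(f"largest deviation from k/(n+1): {max_gap:.5f}")
```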

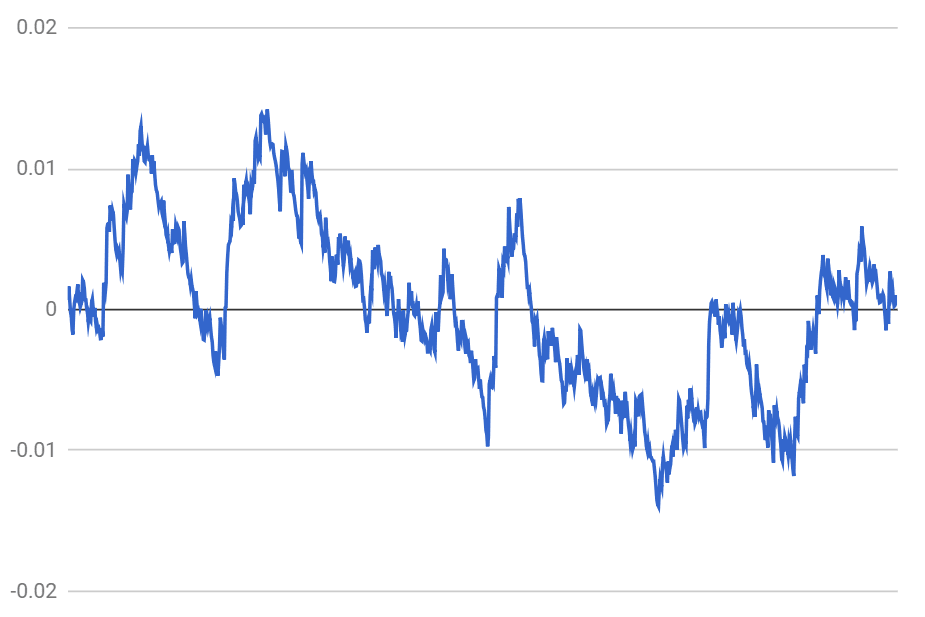

In this ordered random universe an observer can definitely make at least one prediction, the same one we built into this ordering: that time goes forward. This is almost tautological. Let us try and see what other observables we can find here. One obvious thing to notice is that the line is not perfectly straight but has fluctuations. So let's subtract the trend and see what the fluctuations are like. Here is a picture for one run:

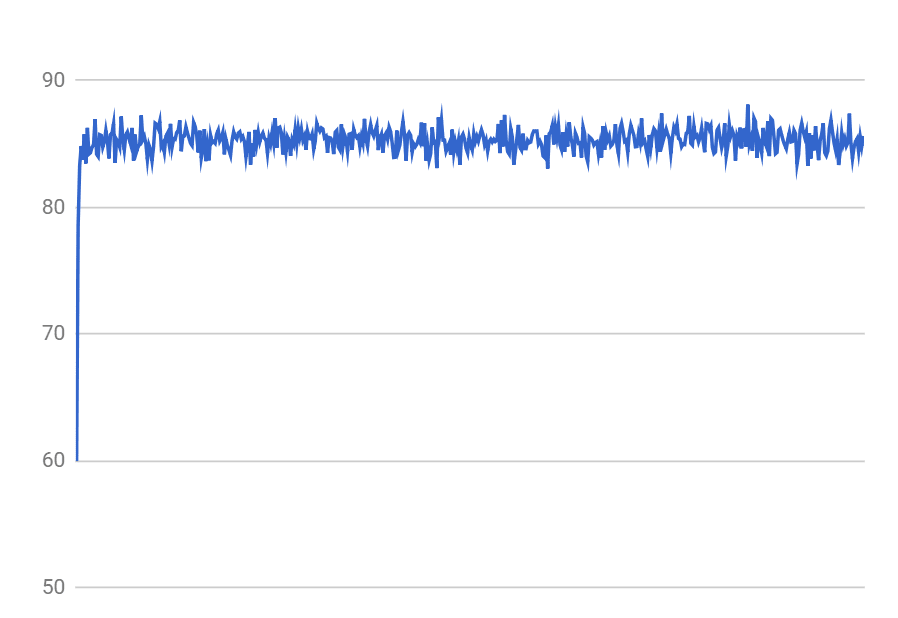

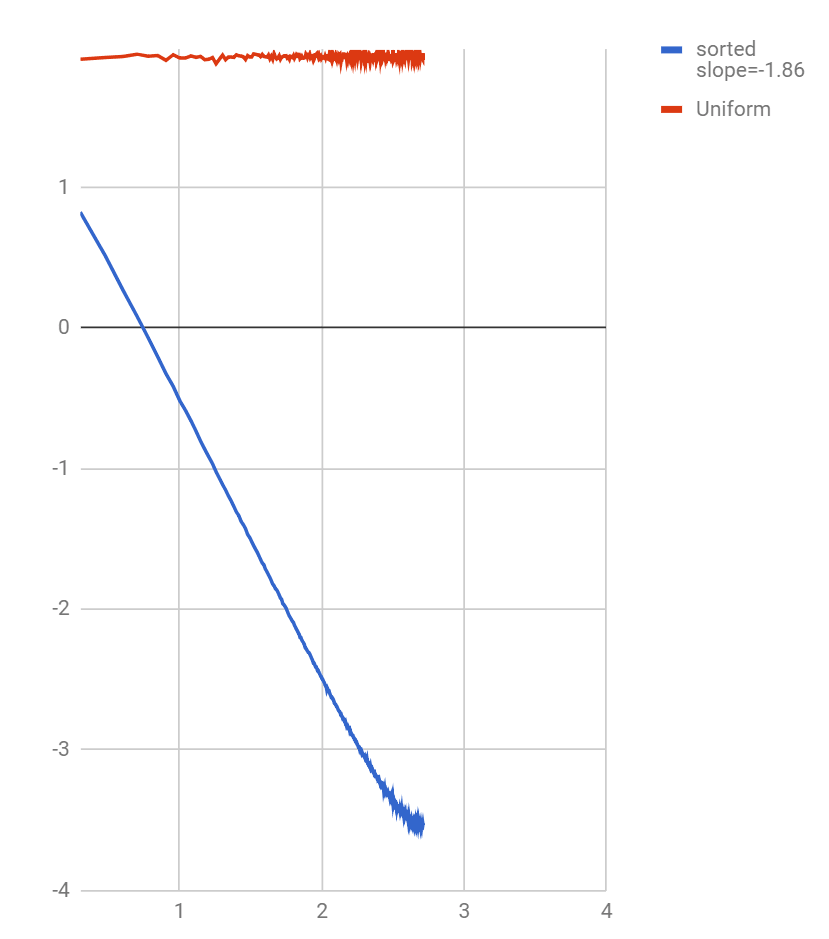
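The detrending step can be sketched as follows. The post does not say how the trend was removed; this sketch assumes the straight line k/(n+1) is subtracted (a least-squares line fit would be close). It also compares the spread of the fluctuations with the exact variance of the k-th uniform order statistic, k(n+1−k)/((n+1)²(n+2)): the fluctuations are largest in the middle of "time" and pinned near zero at both ends.

```python
import numpy as np

def sorted_fluctuations(rng, n_runs, n):
    """Sort uniform samples and subtract the straight-line trend k/(n+1).

    Assumption: the post does not say how the trend was removed; the expected
    order statistic k/(n+1) is used here, a fitted line would be similar.
    """
    k = np.arange(1, n + 1)
    return np.sort(rng.random((n_runs, n)), axis=1) - k / (n + 1)

rng = np.random.default_rng(2)
n = 1024
r = sorted_fluctuations(rng, 2_000, n)

# Empirical spread of the fluctuations at each "time" step ...
emp_var = r.var(axis=0)
# ... versus the exact variance of the k-th uniform order statistic, Beta(k, n+1-k).
k = np.arange(1, n + 1)
theory_var = k * (n + 1 - k) / ((n + 1) ** 2 * (n + 2))
print(f"variance at the start: {emp_var[0]:.2e}, in the middle: {emp_var[n // 2]:.2e}")
```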

This is definitely not uniform random noise anymore. To see what it is, let's look at the power spectrum again, averaged over 100,000 samples. I have included the power spectrum of the uniform distribution for comparison:

This is a log-log plot, as is customary for power spectra. The slope is non-integer, corresponding to a power law with a non-integer exponent, P ~ 1/f^1.86. Initially I thought that this non-integer exponent could be an artifact of the random number generator being pseudo-random, but using a "truly" random uniform sequence derived from actual physical noise did not materially change the outcome.
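A hedged sketch of how such a slope might be measured, assuming NumPy, the |FFT|²/n periodogram, detrending by k/(n+1), and a least-squares fit over only the lowest n/8 frequency bins. The post does not state its windowing, detrending, or fit range, so this is not a reconstruction of the 1.86 figure. With this detrending the fluctuations are a running sum of nearly independent centered steps, so one would expect roughly 1/f² at low frequencies, flattening toward the highest frequencies; the fitted exponent therefore depends on the chosen band.

```python
import numpy as np

def averaged_periodogram(x):
    """Mean of |FFT|^2 / n over rows, with the zero-frequency bin dropped."""
    n = x.shape[1]
    return (np.abs(np.fft.rfft(x, axis=1)) ** 2 / n).mean(axis=0)[1:]

def loglog_slope(P, max_bin):
    """Least-squares slope of log P against log(frequency bin) for bins 1..max_bin."""
    f = np.arange(1, max_bin + 1)
    slope, _intercept = np.polyfit(np.log(f), np.log(P[:max_bin]), 1)
    return slope

rng = np.random.default_rng(3)
n, n_runs = 1024, 2_000
k = np.arange(1, n + 1)

uniform = rng.random((n_runs, n))
fluct = np.sort(rng.random((n_runs, n)), axis=1) - k / (n + 1)

band = n // 8  # assumption: fit only bins 1..n/8, the low-frequency quarter of the spectrum
slope_uniform = loglog_slope(averaged_periodogram(uniform), band)
slope_sorted = loglog_slope(averaged_periodogram(fluct), band)
print(f"uniform: {slope_uniform:.2f}, sorted and detrended: {slope_sorted:.2f}")
```

Widening `band` toward n/2 brings in the flatter high-frequency part and makes the fitted slope shallower, which is one way fit choices can move the reported exponent.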

If someone understands enough probability and statistics to explain what is going on here, please, by all means do!

Now, let's take stock of what has happened: we started with uniformly distributed random noise, picked an "arrow of time", and ended up with fractal power-law fluctuations. Whether these fluctuations mimic an actual dynamical system, I am not sure, but if they do, this would mean creating apparent, if chaotic, order from randomness.

I am not sure what this all might mean, if anything, so any feedback is welcome.

7 comments

comment by philh · 2018-06-21T14:31:28.339Z

It feels weird to me to set time as "index of the current observation in the sorted order" rather than as "magnitude of the current observation".

Like, I feel like whatever an observer perceives as "the passage of time" must be something in the physical universe, something that changes from one state to the "next". But here there's nothing to distinguish states except their magnitude, so it feels like cheating to say that the same amount of time passes regardless of the magnitude gap.

comment by Dacyn · 2018-06-22T17:48:42.080Z

I don't know what "power spectrum" is, but the second-to-last graph looks pretty obviously like Brownian motion. This makes sense because the differences between consecutive points in the third graph will be approximately exponentially distributed (like the gaps of a Poisson process) and independent, so if you renormalize so that the expected value of the difference is zero, then the central limit theorem will give you Brownian motion in the limit.
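This argument can be checked numerically with a short sketch, assuming NumPy and arbitrary seeds. It pads the sorted values with the endpoints 0 and 1, so there are n+1 gaps summing to 1, each with mean exactly 1/(n+1); for large n their standard deviation is close to their mean, as for an exponential distribution. It also confirms that the detrended sorted sequence is exactly the running sum of the centered gaps.

```python
import numpy as np

def gaps_of_sorted(u):
    """Gaps between consecutive sorted values, padded with the endpoints 0 and 1."""
    s = np.sort(u)
    return s, np.diff(np.concatenate(([0.0], s, [1.0])))  # n + 1 gaps summing to 1

rng = np.random.default_rng(4)
n = 1024

# Pool gaps from many universes to look at their distribution.
pooled = np.concatenate([gaps_of_sorted(rng.random(n))[1] for _ in range(200)])
ratio = pooled.std() / pooled.mean()  # close to 1, as for an exponential distribution
print(f"mean gap {pooled.mean():.6f} vs 1/(n+1) = {1 / (n + 1):.6f}, std/mean = {ratio:.3f}")

# In a single universe, the running sum of the centered gaps is exactly
# the detrended sorted sequence s_k - k/(n+1).
s, gaps = gaps_of_sorted(rng.random(n))
k = np.arange(1, n + 1)
walk = np.cumsum(gaps[:n] - 1 / (n + 1))
assert np.allclose(walk, s - k / (n + 1))
```

Because all n+1 centered gaps sum to exactly zero, this walk is tied down at both ends, so its limit is more precisely a Brownian bridge than free Brownian motion.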

Anyway, regarding the relation of your post to Tegmark's theory, a random sequence can be a perfectly well-defined mathematical object (well, maybe you need to consider pseudo-randomness, but that's not the point), so you are not getting patterns out of something non-mathematical (whatever that would mean) but out of a particular type of mathematical object.

comment by avturchin · 2019-04-09T16:20:43.188Z

It reminds me of this article: https://arxiv.org/abs/1712.01826

comment by MrFailSauce (patrick-cruce) · 2018-06-21T14:32:47.585Z

Maybe I’m being dense, and missing the mystery, but I think this reference might be helpful.

https://www.dsprelated.com/showarticle/40.php

↑ comment by Shmi (shminux) · 2018-06-21T17:24:13.482Z

Thanks for the link! Yep, I had thought about 1/f noise right after calculating the spectra above, especially because my original power spectrum slope calculation had an error and the result came out very close to that of 1/f noise :) I looked up whether sorting can generate it, but nothing came up.

Were I still in academia, I would have been tempted to write a speculative paper resulting in cringy headlines like "Scientists solve several long-standing mysteries at once: the arrow of time is driven by the human quest for order and meaning"

The "mystery", as I currently see it, is the mathematical origin of the pink noise emerging from sorting.

↑ comment by MrFailSauce (patrick-cruce) · 2018-06-22T05:23:18.582Z

The result you got is pretty close to the FFT of f(t) = t, which is roughly what you got from sorting noise.
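A quick check of this remark, assuming NumPy's real FFT and a ramp sampled at t = 0..n-1. Summing the series gives |X_k| = n / (2 sin(πk/n)) for k ≠ 0, so the power |X_k|² falls off as 1/k² at low frequencies. The DFT implicitly treats the ramp as a periodic sawtooth, whose Fourier coefficients decay like 1/k.

```python
import numpy as np

n = 1024
t = np.arange(n)                 # the ramp f(t) = t, sampled at t = 0..n-1
X = np.fft.rfft(t)
k = np.arange(1, n // 2 + 1)     # skip the zero-frequency bin

# Closed form for k != 0: |X_k| = n / (2 sin(pi k / n)),
# so |X_k|^2 ~ n^4 / (4 pi^2 k^2) at low k, i.e. a 1/f^2 power law.
closed_form = n / (2 * np.sin(np.pi * k / n))
assert np.allclose(np.abs(X[1:]), closed_form)
```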

comment by PhilipTrettner · 2018-06-19T07:27:45.481Z

The law, as generally formulated, states that entropy increases with time. But how do we know that this is a physical law and not a pattern we notice and successfully extrapolate, in keeping with the main thesis under consideration?

I've recently read The Big Picture and spent some time chasing Quantum Mechanics Wikipedia articles. From what stuck with me, thermodynamics itself is an effective field theory of the Standard Model of particle physics. It is a macroscopic, consistent approximation of our current best QM theory. Part of this approximation is that entropy is not a physical quantity and the second law of thermodynamics is not a "law". Entropy is a statistical property of a system and has a very high probability of increasing in closed systems, but doesn't have to. From what I understand, a currently popular hypothesis is that the Big Bang was a really, really low-entropy state, and thus we observe increasing entropy with virtual certainty.

I'm not entirely sure what my point is, but maybe it just supports yours: that even such apparently ironclad properties as the second law of thermodynamics are just derived, emergent "laws" that allow us to successfully predict the universe.
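The point that entropy increase is statistical rather than guaranteed can be illustrated with the Ehrenfest urn model, a standard textbook toy swapped in here (the comment does not mention it). N balls sit in two urns; at each step one ball, chosen at random, switches urns, and the Boltzmann entropy is taken as S = ln C(N, n_left). Starting from the low-entropy state with every ball on one side, S climbs toward its maximum and then fluctuates there, decreasing on a sizable fraction of steps. The urn size, step count, and seed are arbitrary choices.

```python
import math
import random

def log_multiplicity(n_total, n_left):
    """Boltzmann entropy S = ln C(N, n_left), in units of k_B."""
    return (math.lgamma(n_total + 1) - math.lgamma(n_left + 1)
            - math.lgamma(n_total - n_left + 1))

def ehrenfest(n_total=100, steps=2_000, seed=5):
    """Ehrenfest urn: each step, one randomly chosen ball switches urns."""
    rng = random.Random(seed)
    n_left = n_total  # low-entropy start: every ball in the left urn
    entropy = [log_multiplicity(n_total, n_left)]
    for _ in range(steps):
        # The chosen ball is in the left urn with probability n_left / n_total.
        if rng.random() < n_left / n_total:
            n_left -= 1
        else:
            n_left += 1
        entropy.append(log_multiplicity(n_total, n_left))
    return entropy

S = ehrenfest()
decreases = sum(1 for a, b in zip(S, S[1:]) if b < a)
print(f"start {S[0]:.1f}, end {S[-1]:.1f}, steps where S decreased: {decreases}")
```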