Beware over-use of the agent model

post by Alex Flint (alexflint) · 2021-04-25T22:19:06.132Z · LW · GW · 10 comments


This is independent research. To make it possible for me to continue writing posts like this, please consider supporting me.

Thank you to Shekinah Alegra for reviewing a draft of this essay.

Outline

- A short essay intended to elucidate the boundary between the agent model as a way of seeing, and the phenomena out there in the world that we use it to see.

- I argue that we emphasize the agent model as a way of seeing the real-world phenomenon of entities that exert influence over the future to such an extent that we exclude other ways of seeing this phenomenon.

- I suggest that this is dangerous, not because of any particular shortcomings in the agent model, but because using a single way of seeing makes it difficult to distinguish features of the way of seeing from features of the phenomenon that we are using it to look at.

The phenomenon under investigation

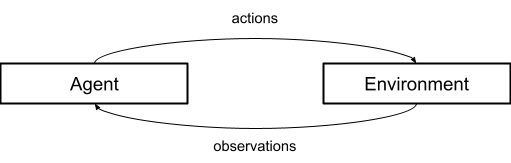

Yesterday I wrote about the pitfalls of over-reliance on probability theory as a sole lens for looking at the real-world phenomenon of machines that quantify the uncertainty in their beliefs. Today I want to look at a similar situation with respect to over-reliance on the agent model as a sole lens for looking at the real-world phenomenon of entities that exert influence over the future. Under the agent model, the agent receives sense data from the environment, and sends actions out into the environment, but agent and environment are fundamentally separate, and this separation forms the top-level organizing principle of the model.

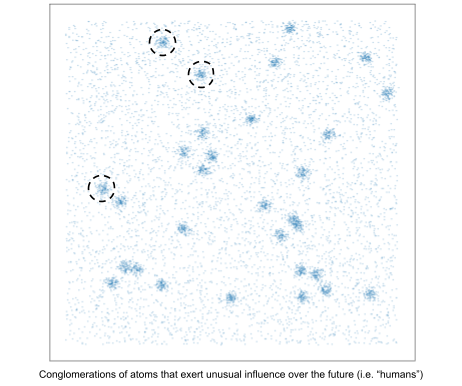

And what is it that we are using the agent model to see? Well, let’s start at the beginning. There is something actually out there in the world. We might say that it’s a bunch of atoms bouncing around, or we might say that it’s a quantum wavefunction evolving according to the Schrödinger equation, or we might say that it is God. This post isn’t about the true nature of reality, it’s about the lenses we use to look at reality. And one of the things we see when we look out at the world is that there are certain parts of the world that seem to exert an unusual amount of influence over the future. For example, on Earth there are these eight billion parts of the world that we call humans, and each of those parts has a sub-part called a brain. If you want to understand the overall evolution of the cosmos in this part of the world, then you can get a long way, just in terms of pure predictive power, by placing all your attention on these eight billion parts of the world that we call humans.

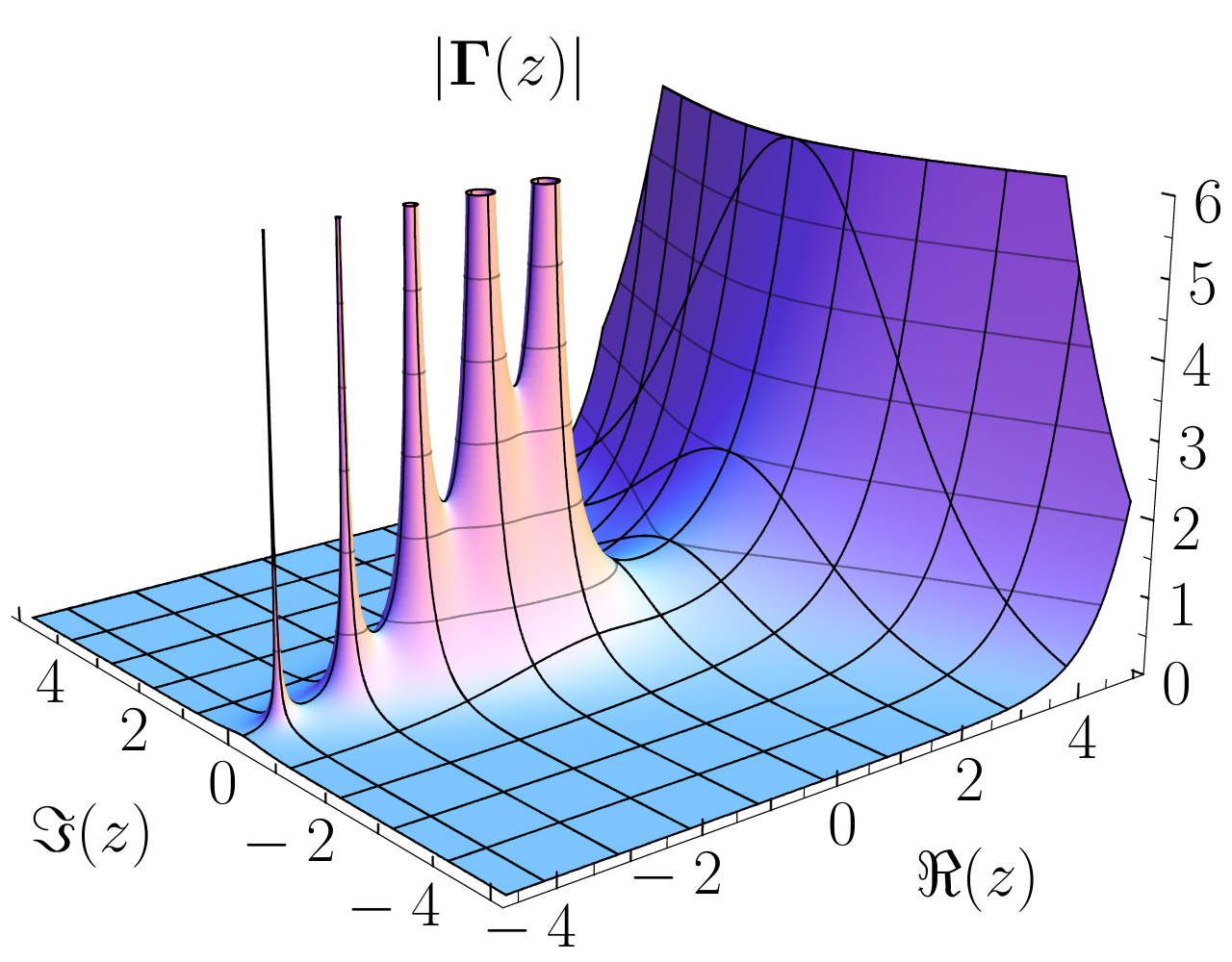

It’s a bit like in complex analysis, where if you want to compute the integral of a function around a closed curve, and the function is well-behaved everywhere except at a finite number of points inside the curve where it goes to infinity, it turns out that you can just compute a quantity called the "residue" at each of those points, and then the whole integral is just the sum of those residues (times a constant factor of 2πi).

It’s quite remarkable. You can understand the whole function just by understanding what’s happening in the vicinity of a few points. It’s not that we decide that these singularity points are more worthy of our attention, it simply is the case, for better or worse, that the behavior of the entire function turns upon the behavior of these singularity points.
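To make the residue theorem concrete, here is a minimal numerical sketch. The function `f` is a hypothetical example chosen for illustration (it does not come from anywhere else in this essay): it has two simple poles inside the unit circle, with residues 1 and 3. The sketch integrates `f` around the circle by brute force and compares the result with 2πi times the sum of the residues.

```python
import cmath
import math


def f(z: complex) -> complex:
    # Hypothetical example: simple poles at 0.5 and -0.25j,
    # with residues 1 and 3 respectively.
    return 1 / (z - 0.5) + 3 / (z + 0.25j)


def contour_integral(func, radius: float = 1.0, steps: int = 2000) -> complex:
    """Approximate the integral of func counter-clockwise around |z| = radius."""
    total = 0j
    d_theta = 2 * math.pi / steps
    for k in range(steps):
        z = radius * cmath.exp(1j * k * d_theta)
        dz = 1j * z * d_theta  # dz = i z d(theta) on a circle
        total += func(z) * dz
    return total


residue_sum = 1 + 3                       # known from how f was constructed
integral = contour_integral(f)            # looks at every point on the curve
expected = 2j * math.pi * residue_sum     # looks only at the two poles

print(integral, expected)
```

The brute-force sum visits two thousand points on the curve, while the residue calculation only needs to know what happens at the two poles, and the two agree to within numerical error.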

Now, understanding the evolution of the whole cosmos in our local region of space by understanding the evolution of the conglomerations of atoms or regions of the wavefunction or faces of God that we identify as humans has no precise connection whatsoever to the residue theorem of complex analysis! It is just an illustrative example! Human minds do not represent singularities in the quantum wavefunction! Understanding the future of life on Earth is not like computing the integral of a holomorphic function! Put any such thoughts out of your mind completely. It is just an example of the phenomenon in which a whole system can be understood by examining some small number of critical points, and not because we make some parochial choice to attend more closely to these critical points than to other points, but because it just is the case, for better or worse, that the evolution of the whole system turns upon the evolution of this finite number of points.

And that does seem to be the situation here on Earth. For better or worse, the fate of all the atoms in and close to the Earth now appears to turn upon the evolution of the eight billion little conglomerations of atoms that we identify as humans. This just seems to be the case.

We are interested in examining these conglomerations of atoms that we identify as humans, so that we might understand the likely future of this region of the cosmos, and so that we might empower ourselves to take appropriate action. Because of this interest, we develop abstractions for understanding what is going on: a human consists of a very large number of atoms / a very complex wavefunction / a very difficult-to-understand aspect of God’s grace, and we need abstractions in order to make sense of things. And one such abstraction is the agent model.

The agent model

Under the agent model, the agent receives sense data from the environment, and sends actions out into the environment, but agent and environment are fundamentally separate, and this separation forms the top-level organizing principle of the model.

The agent model abstracts away many details of the underlying reality, as all good models should. It abstracts away the physical details of the sensors -- how "observations" get transmitted from the "environment" to the "agent". It abstracts away the physical details of the actuators -- how "actions" get transmitted from the "agent" to the "environment". Very importantly, it abstracts away the physical details of the computing infrastructure used to run the agent algorithm.

The agent model is a good abstraction. It has proven useful in many domains. It was developed, I understand, although I have not looked into it, within economics. It is used extensively today within computer science as a lens through which we think about building intelligent systems. For example, in partially observable Markov decision processes (POMDPs), which are the basic model underlying reinforcement learning, there is an explicit exchange of actions and observations with the environment.
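As a rough illustration of that exchange, here is a minimal sketch of an agent-environment loop in the style of a POMDP. Everything in it is a hypothetical toy invented for this example (the `Environment` and `Agent` classes, the one-dimensional world, the noise level); it is not taken from any particular reinforcement learning library.

```python
import random
from dataclasses import dataclass


@dataclass
class Environment:
    """Toy world with hidden state that the agent never reads directly."""
    position: int = 0
    goal: int = 5

    def observe(self, rng: random.Random) -> int:
        # Noisy sensor: signed distance to the goal, off by one 20% of the time.
        noise = rng.choice([-1, 1]) if rng.random() < 0.2 else 0
        return (self.goal - self.position) + noise

    def step(self, action: int) -> float:
        # Actuator: the action moves the position; reward is negative distance.
        self.position += action
        return -float(abs(self.goal - self.position))


class Agent:
    """A fixed policy mapping each observation to an action in {-1, 0, 1}."""

    def act(self, observation: int) -> int:
        if observation > 0:
            return 1
        if observation < 0:
            return -1
        return 0


def run_episode(steps: int = 20, seed: int = 0):
    rng = random.Random(seed)
    env, agent = Environment(), Agent()
    rewards = []
    for _ in range(steps):
        observation = env.observe(rng)   # environment -> agent
        action = agent.act(observation)  # agent -> environment
        rewards.append(env.step(action))
    return env, rewards


env, rewards = run_episode()
print(env.position, sum(rewards))
```

Notice what the code structure takes for granted: the two classes touch only through `observe`, `act`, and `step`, and nothing in the model says where or on what hardware `Agent.act` actually runs. Those are exactly the abstractions described above, built in as the top-level organizing principle.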

But these eight billion little conglomerations of atoms upon which the whole future of the cosmos appears to turn are a real phenomenon out there in the world, and the agent model is just one lens through which we might understand that phenomenon. It is a powerful lens, but precisely because it is so powerful I fear that we currently use it to the exclusion of all other lenses. As a result we wind up losing track of what is the lens and what is the phenomenon.

It is the same basic situation that we discussed yesterday with respect to over-use of probability theory as a lens for looking at the real-world phenomenon of machines with quantified uncertainty in their beliefs.

It is not that the agent model is a bad model, nor that we should discard all models and insist on gazing directly upon the atoms / wavefunction / God. It is that when we have only one lens, it is extremely difficult to discern in what ways it is helping us to see the world and in what ways we are seeing impurities in the lens itself.

A good general way to overcome this is to move between different lenses. Even if one lens seems to be the most powerful one available, it is still helpful to spend some time looking through other lenses if for no other reason than to distinguish that which the powerful lens is revealing to us about reality from that which is merely an artifact of the lens itself.

But what is a good second lens for looking at these conglomerations of atoms that exert power over the future? This is a question that I would very much like to begin a conversation about.

10 comments


comment by Matt Goldenberg (mr-hire) · 2021-04-26T15:01:56.246Z · LW(p) · GW(p)

But what is a good second lens for looking at these conglomerations of atoms that exert power over the future?

One interesting alternative I've been learning about recently is the Buddhist idea of "dependent origination". I'll give a brief summary of some thoughts I've had based on it, although these should definitely not be taken as an accurate representation of the actual dependent origination teaching.

The basic idea is that the delusion of agency (or in Buddhist terms, self) comes from the conglomeration of sensations (or sensors) and desires. This leads to a clinging on to things that fulfill those desires, which leads to a need to pretend there is an agent that can fulfill those desires. This then leads to the creation of more things that desire and sense (babies, AIs, whatever), to whom we pass on the same delusions. We can view each of these as individual agents, or we can simply view the whole process as one thing, a perpetual cycle of cause and effect the Buddhists call dependent origination.

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-09T19:04:30.330Z · LW(p) · GW(p)

Hey Matt - long time!

Yeah, the shortcomings of the agent model do seem similar to the shortcomings of "self" as a primary concept, and the Buddhist critiques of "self" have informed my thinking on the agent model.

I had not considered dependent origination as an alternative to the agent model. I understand dependent origination as being the Buddha's account of the causal chain that leads to suffering.

Do you have any rough sense for how we might use dependent origination to understand this phenomenon of entities in the world that exert influence over the future? I would be very interested in any rough thoughts you might have.

comment by Alex Flint (alexflint) · 2022-12-08T14:34:12.609Z · LW(p) · GW(p)

This post attempts to separate a certain phenomenon from a certain very common model that we use to understand that phenomenon. The model is the "agent model" in which intelligent systems operate according to an unchanging algorithm. In order to make sense of there being an unchanging algorithm at the heart of each "agent", we suppose that this algorithm exchanges inputs and outputs with the environment via communication channels known as "observations" and "actions".

This post really is my central critique of contemporary artificial intelligence discourse. That critique is: any unexamined views that we use to understand ourselves are likely to enter the design of AI systems that we build. This is because if we think that deep down we really are "agents", then we naturally conclude that any similar intelligent entity would have that same basic nature. In this way we take what was once an approximate description ("humans are somewhat roughly like agents in certain cases") and make it a reality (by building AI systems that actually are designed as agents, and which take over the world).

In fact the agent model is a very effective abstraction. It is precisely because it is so effective that we have forgotten the distinction between the model and the reality. It is as if we had so much success in modelling our refrigerator as an ideal heat pump that we forgot that there even is a distinction between real-world refrigerators and the abstraction of an ideal heat pump.

I have the sense that a great deal of follow-up work is needed on this idea. I would like to write detailed critiques of many of the popular approaches to AI design, exploring ways in which over-use of the agent model is a stumbling block for those approaches. I would also like to explore the notion of goals and beliefs in a similar light to this post: what exactly is the model we're using when we talk about goals and beliefs, and what is the phenomenon we're trying to explain with those models?

comment by Gordon Seidoh Worley (gworley) · 2021-05-09T17:32:28.152Z · LW(p) · GW(p)

I think this is right and underappreciated. However I struggle myself to make a clear case of what to do about it. There's something here, but I think it mostly shows up in not getting confused that the agent model just is how reality is, which underwhelms people who perhaps most fail to deeply grok what that means because they have a surface understanding of it.

comment by Chloe Thompson (Jalen Lyle-Holmes) · 2021-04-27T14:09:02.317Z · LW(p) · GW(p)

I assume you've read the Cartesian Frames sequence? What do you think about that as an alternative to the traditional agent model?

Replies from: Jalen Lyle-Holmes↑ comment by Chloe Thompson (Jalen Lyle-Holmes) · 2021-04-27T14:09:28.349Z · LW(p) · GW(p)

Side question: After I read some of the Cartesian Frames sequence, I wondered if something cool could come out of combining its ideas with your ideas from your Ground of Optimisation post. Because: Ground of Optimisation 1. formalises optimisation but 2. doesn't split the system into agent and environment, whereas Cartesian Frames 1. gives a way of 'imposing' an agent-environment frame on a system (without pretending that frame is a 'top level' 'fundamental' property of the system), but 2. doesn't really deal with optimisation. So I've wondered if there might be something fruitful in trying to combine them in some way, but am not sure if this would actually make sense/be useful (I'm not a researcher!). What do you think?

Replies from: alexflint↑ comment by Alex Flint (alexflint) · 2021-05-09T19:00:39.425Z · LW(p) · GW(p)

Thanks for this note Jalen. Yeah I think this makes sense. I'd be really interested in what question might be answered by the combination of ideas from Cartesian Frames and The Ground of Optimization. I have the strong sense that there is a very real question to be asked but I haven't managed to enunciate it yet. Do you have any sense of what that question might be?

Replies from: Jalen Lyle-Holmes↑ comment by Chloe Thompson (Jalen Lyle-Holmes) · 2021-05-18T12:44:01.089Z · LW(p) · GW(p)

Oh cool, I'm happy you think it makes sense!

I mean, could the question even be as simple as "What is an optimiser?", or "what is an optimising agent?"?

With the answer maybe being something roughly to do with

1. being able to give a particular Cartesian frame over possible world histories, such that there exists an agent 'strategy' ('way that the agent can be') $a \in A$ such that for some 'large' subset of possible environments $E' \subseteq E$, and some target set of possible worlds $T$, we have $a \cdot e \in T$ for all $e \in E'$

and 2. that the agent 'strategy'/'way of being' is in fact 'chosen' by the agent

?

(1) is just a weaker version of the 'ensurable' concept in Cartesian frames, where the property only has to hold for a subset $E'$ of $E$ rather than all of it. I think $E'$ would correspond to both 'the basin of attraction' and 'perturbations', as a set of ways the environment can 'be' (thinking of $A$ and $E$ as sets of possible sort of 'strategies' for agent and environment respectively across time). (Though I guess $E'$ is a bit different to basin of attraction + perturbations because the Ground of Optimization notion of 'basin of attraction' includes the whole system, not just the 'environment' part, and likewise perturbations can be to the agent as well... I feel like I'm a bit confused here.) $T$ would correspond to the target configuration set (or actually, the set of world histories in which the world 'evolves' towards a configuration in the target configuration set).

Something along those lines maybe? I'm sure you could incorporate the time aspect better by using some of the ideas from the 'Time in Cartesian Frames' post, which I haven't done here.
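A minimal sketch of what checking condition (1) could look like for a small finite frame. All names here are hypothetical, the thermostat-style frame is invented purely for illustration, and reading a 'large' subset as "at least some fraction of the environments" is an assumption rather than anything defined in the Cartesian Frames sequence.

```python
def weakly_ensurable(agents, envs, evaluate, target, min_fraction=0.5):
    """Map each agent option a to the set of environments e for which
    evaluate(a, e) lands in target, keeping only options where that set
    covers at least min_fraction of all environments.

    With min_fraction=1.0 this reduces to ordinary ensurability:
    some a works for every e.
    """
    result = {}
    for a in agents:
        good_envs = {e for e in envs if evaluate(a, e) in target}
        if len(good_envs) >= min_fraction * len(envs):
            result[a] = good_envs
    return result


# Hypothetical toy frame: two agent options, three environments.
AGENTS = {"heat", "idle"}
ENVS = {"cold", "mild", "hot"}
WORLD = {
    ("heat", "cold"): "comfortable",
    ("heat", "mild"): "comfortable",
    ("heat", "hot"): "too_hot",
    ("idle", "cold"): "too_cold",
    ("idle", "mild"): "comfortable",
    ("idle", "hot"): "too_hot",
}
TARGET = {"comfortable"}


def evaluate(a, e):
    return WORLD[(a, e)]


# "heat" reaches the target in 2 of 3 environments; "idle" in only 1 of 3.
print(weakly_ensurable(AGENTS, ENVS, evaluate, TARGET, min_fraction=0.6))
# No option reaches the target in every environment, so strict ensurability fails.
print(weakly_ensurable(AGENTS, ENVS, evaluate, TARGET, min_fraction=1.0))
```

In this toy frame the target is not ensurable in the strict sense, but it is weakly ensurable via "heat", with the returned set of environments playing the role of the 'large' subset.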

↑ comment by Alex Flint (alexflint) · 2021-05-25T04:22:05.561Z · LW(p) · GW(p)

Jalen, I would love to support you to turn this into a standalone post, if that is of interest to you. Perhaps we could jump on a call and discuss the ideas you are pointing at here.

Replies from: Jalen Lyle-Holmes↑ comment by Chloe Thompson (Jalen Lyle-Holmes) · 2021-05-29T01:47:28.370Z · LW(p) · GW(p)

Thank you Alex! Just sent you a PM :)