Progress report 3: clustering transformer neurons

post by Nathan Helm-Burger (nathan-helm-burger) · 2022-04-05T23:13:18.289Z · LW · GW · 0 commentsContents

Previous posts in series: Clustering process Ways I've thought of to improve this: Other work: None No comments

Previous posts in series:

Related prior work I discovered while working on this: https://bert-vs-gpt2.dbvis.de/

Clustering process

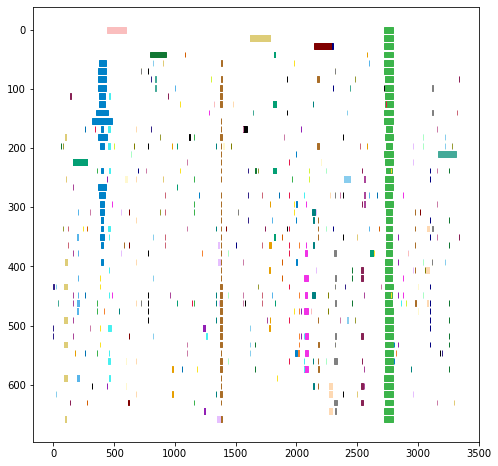

Steps to achieve clustering of the neurons found as important for the various contexts:

- Made list of neuron<-> token weighted edges based on the neuron importance tracing. This list constitutes a bipartite graph (part 1: nodes, part 2: tokens)

- Converted this to a neuron<->neuron graph using networkx's function bipartite.collaboration_weighted_projected_graph

- Used the louvain community detection algorithm from community_louvain to partition this neuron<->neuron graph

- With each neuron assigned to a cluster (aka community), we can also associate tokens to these clusters by summing the strength of the neuron->token edges for each possible token.

Ways I've thought of to improve this:

First and most obviously: more data. Conduct neuron importance traces on more contexts.

Better ways to conceptually divide up or group the contexts to make more sense of things. For example, the part-of-speech and word-meaning-in-context as shown in the prior work linked above.

Try making my methodology more closely match Filan et al for sake of comparison (e.g. use spectral clustering and n-cut assessment instead of louvain partitioning. The trouble with spectral clustering is that you must choose a cluster number. Do I iterate through a variety of possible cluster numbers until I find the one with the lowest n-cut metric and go with that?

This is specifically within-layer clustering. I plan to also look at clustering across layers.

better visualizations:

- Clustering the tokens into token groups and combining clusters with very similar cluster groups

- Alternately, representing the clusters in color and location proportional to the similarity of their associated

- make it more interactive for easier exploration to gain intuition. Use this to get ideas for next steps.

Other work:

I played around with the ROME project's ability to edit info in GPT2. I noticed that some facts (more 'narrowly' represented) are much easier to change than others. For instance, changing the location of the Eiffel Tower is much easier than changing the location of the White House or of California. It could be worth looking into the shapes of representation of facts (multi-layer but narrow vs layer-concentrated-but-thick, etc) and trying to map that to the edit-ability of facts.

2.

For the past few years one of the machine learning concepts I keep coming back to is the idea of decision boundary refinement. What are the best ways to selectively collect or synthesize useful data points near the current decision boundary? More data in the critical area grants more resolution where it is most needed. A better defined decision boundary means less likelihood of unexpected behavior when generalizing to new contexts.

In the past week, I started thinking about this in the context of the problem posed by Redwood Research: how to elicit data points of increasingly subtle / indirect/ complex / implied harm to humans in order to iteratively improve the classifier intended to detect this?

Having recently watched Yannic's review of the Virtual Outlier Synthesis paper (link), I thought that this seemed like a potentially promising approach to refining this decision boundary. The paper is specifically focused on vision models, but I feel like the general concept might still apply to language models. The gist of the relevant part of the idea is that you can train a generative model on the activations of the penultimate layer of your target model in the context of examples of the class of data you want to generate outliers for. You can then use the generative model to generate new-but-similar activation states, run those activation states through the final layer of the target model, and get a novel dataset of similar-but-different examples of the target class. You can control the parameters of the generative model to give increasingly dissimilar examples and tune this in to the decision boundary. Seems promising to me, so I started working on this idea also.

0 comments

Comments sorted by top scores.