A Bat and Ball made me Sad

post by Darren McKee · 2023-09-11T13:48:24.686Z · LW · GW · 26 commentsContents

The answer is $5. Please enter the number 5 in the blank below. None 26 comments

'A bat and a ball cost $110 in total.

The bat costs $100 more than the ball.

How much does the ball cost?

The answer is $5.

Please enter the number 5 in the blank below.

$_____'

That question is from one of 59 new studies (N = 72,310) which focus primarily on the “bat and ball problem," found in The formation and revision of intuitions - ScienceDirect by Andrew Meyer and Shane Frederic.

23% of respondents failed to give the correct answer.

I don't have anything insightful to say but wanted to share because it seems worth knowing.

Additionally, I'm not exactly sure how (and how much) I should update about this.

(Edit: I initially posted the wrong question)

26 comments

Comments sorted by top scores.

comment by Richard_Kennaway · 2023-09-12T08:07:08.479Z · LW(p) · GW(p)

I saw this and thought up a different version:

"A bat and a ball cost $110 in total.

The bat costs $100 more than the ball.

How much does the ball cost?

The answer is $10.

Please enter the number 10 in the blank below.

$_____"

How would you answer?

Replies from: andrew-meyer-1↑ comment by Andrew Meyer (andrew-meyer-1) · 2023-09-13T09:53:29.987Z · LW(p) · GW(p)

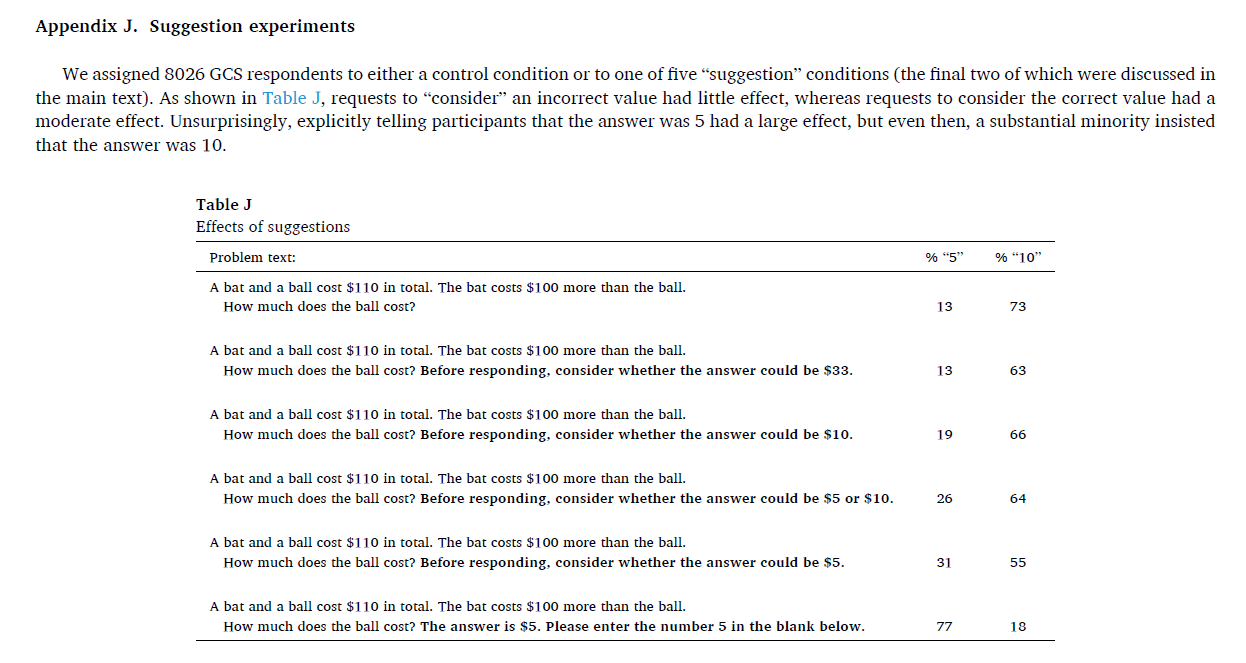

If you look in the appendix, you'll see we ran something similar to that, where we told them to "consider 10". It increased solution rates from 13% to 19%.

comment by cptzc (zac-cucinotta) · 2023-09-11T15:28:53.214Z · LW(p) · GW(p)

I think there should be basically no update from this study, and most others using apps like Mechanical Turk and Google Consumer Surveys, which is what this study used. Consumer surveys are paid ads where you have to fill out the survey before getting to do what you actually want to do. People are incentivized to complete these as soon as possible and there is little penalty for inaccuracy. I would bet that this doesn't reproduce if correct answers are incentivized, or even if it's run on volunteers who aren't being stopped from what they want to do to take the survey.

Replies from: andrew-meyer-1, Dzoldzaya, Darren McKee↑ comment by Andrew Meyer (andrew-meyer-1) · 2023-09-12T09:12:39.033Z · LW(p) · GW(p)

I partially agree. But I'll push back a little. The 23% wrong answers are not random key mashing. 80% of them type the number "10". Instead of copying the single digit that they are instructed to copy, they are looking further from the answer blank, finding two three-digit numbers, subtracting one from the other, and typing the two-digit difference. That is more work than the correct answer, not less.

But still, I do agree that if people had accuracy incentives, the correct answer rate would probably go up.... Then again, maybe, with monetary incentives, people would be even more likely to think the provided answer was a trap. If I had to bet, I'd say that incentives would increase the 77% "5" rate. But I wouldn't be totally shocked if it went down.

↑ comment by Dzoldzaya · 2023-09-12T09:38:41.504Z · LW(p) · GW(p)

This response is incorrect. Firstly, Google Consumer Surveys is very different from MTurk. MTurk users are paid to pay attention to the task for a given amount of time, and they are not 'paid ads'.

"People are incentivized to complete these as soon as possible and there is little penalty for inaccuracy."

This is generally untrue for MTurk - when you run online surveys with MTurk:

1) You set exclusion criteria for people finishing too quickly

2) You set attention check questions that, when you fail, exclude a respondent

3) A respondent is also excluded for certain patterns of answers e.g. answering the same for all questions or sometimes for giving contradictory answers

4) The respondent is rated on their response quality, and they will lose reputation points (and potentially future employment opportunities) for giving an inaccurate response

You can apply these criteria more or less rigorously, but I'd assume that the study designers followed standard practice (see this doc), at least.

I'm not claiming that MTurk is a very good way of getting humans to respond attentively, of course. There are lots of studies looking at error rates: https://arxiv.org/ftp/arxiv/papers/2101/2101.04459.pdf and there are obvious issues with exclusively looking at a population of 'professional survey respondents' for any given question.

So I'm not exactly sure how this survey causes me to update. Perhaps especially because they're slightly rushing the answers, it's genuinely interesting that so many people choose to respond to the (perceived) moderate complexity task (which they assume is: "calculate 110 - 100"), rather than either the simple task: "write out the number 5".

↑ comment by Dagon · 2023-09-12T18:45:53.265Z · LW(p) · GW(p)

There are certainly good ways to ask such a question with reasonable motivation to get it right. You could include this in a 5-question quiz, for instance, and say "you get paid only (or more) if you get 3 correct". And then vary and permute the questions so nobody uses 20 accounts to do the same task and not separately answer the questions.

But that's expensive and time-consuming, and unless the paper specifies it, one should assume they did the simpler/cheaper option of just paying people to answer.

Replies from: andrew-meyer-1↑ comment by Andrew Meyer (andrew-meyer-1) · 2023-09-13T09:56:40.475Z · LW(p) · GW(p)

Monetary incentives raise solution rates a little, but not that much. Lex Borghans and co-authors manipulate small incentives and they do almost nothing. Ben Enke and co-authors offers a full month's salary to Nairobi based undergrads and finds a 13% percentage point increase in solution rates.

I'm not sure how our manipulations would interact with monetary incentives. But I'd like to know!

↑ comment by solvalou · 2023-09-12T13:51:25.045Z · LW(p) · GW(p)

When surveys on mturk are designed to hold a single account occupied for longer than strictly necessary to fill out an answer that passes any surface-level validity checks, the obvious next step is for people to run multiple accounts on multiple devices, and you're back at people giving low-effort answers as fast as possible.

↑ comment by Darren McKee · 2023-09-11T22:42:36.758Z · LW(p) · GW(p)

I guess it depends on what your priors already were but 23% is far higher than the usual 'lizardman', so one update might be to greatly expand how much error is associated with any survey. If the numbers are that high, it gets harder to understand many things (unless more rigorous survey methods are used etc)

comment by Dagon · 2023-09-11T15:53:18.549Z · LW(p) · GW(p)

Mentioned by Tyler Cowen today as well: https://marginalrevolution.com/marginalrevolution/2023/09/the-ball-the-bat-and-the-hopeless.html

My reaction is the same as his - I wish they'd incentivized correct answers. I suspect 23% is too high to be pure lizardman ( https://slatestarcodex.com/2013/04/12/noisy-poll-results-and-reptilian-muslim-climatologists-from-mars/ ), but it's still the case that most participation-selection mechanisms will have a lot of people just trying to finish quickly, rather than correctly.

↑ comment by niplav · 2023-09-11T17:47:53.550Z · LW(p) · GW(p)

See also drossbucket 2018 [? · GW].

comment by Richard_Kennaway · 2023-09-13T11:14:30.539Z · LW(p) · GW(p)

The original version of this puzzle had a total price of $1.10, and the ball was 5 cents. Another reason to be sad.

comment by Kaj_Sotala · 2023-10-05T04:00:59.453Z · LW(p) · GW(p)

People responding to studies assume the questions to make sense; if there's a question that seems to not make sense, they suspect it's a trick question or that they don't understand the instructions correctly, and try to do something that makes more sense.

A question that says "the right answer is X, please write it down here" doesn't make sense in terms of how most people understand tests. Why would they be asked a question for which the answer was already there?

I'm guessing that most of the people who got this wrong thought "wtf, there has to be some trick here", then tried to check what the answer should be, got it wrong, and then felt satisfied that they had noticed and avoided the "trick to see if they were paying attention".

Replies from: zac-cucinotta↑ comment by cptzc (zac-cucinotta) · 2023-10-05T04:17:25.750Z · LW(p) · GW(p)

This study was done via Google ads that block content, which means the people taking the study were thinking "How do I get this ad to go away so I can watch my video".

comment by Kiboneu (kiboneu) · 2023-09-11T23:46:17.123Z · LW(p) · GW(p)

In the US, displayed prices are often not the actual price. Taxes and sometimes other fees like mandatory tips and bag fees are added to the total, which isn't reflected until the very end. If people are used to this, then I can imagine that there is a level of constant uncertainty of a hidden variable that would lead to trusting the written suggestion. Just my 2 cents (plus tax).

comment by Carl Feynman (carl-feynman) · 2023-09-11T22:01:22.513Z · LW(p) · GW(p)

Is text in boldface part of the problem as presented to the persons being tested? If it isn’t, I’m not surprised 23% of the population got it wrong, because I got it wrong the first time I saw it, and I’m mathematically sophisticated.

Replies from: Darren McKee↑ comment by Darren McKee · 2023-09-11T22:40:07.402Z · LW(p) · GW(p)

The boldface was part of the problem presented to participants. It is a famous question because so many people get it wrong.

comment by Dagon · 2023-09-11T16:48:55.622Z · LW(p) · GW(p)

[separate comment, so it can get downvoted separately if needed]

Regardless of reliability or accuracy of the distribution of results, it's clear that many, perhaps most, living humans are not rationally competent, most of the time. A lot of us have some expertise or topics where we have instincts and reflective capacity to get good outcomes, but surprisingly few have the general capability of modeling and optimizing their behaviors and experiences.

I'd expect that this would be front-and-center of debates about long-term human flourishing, and what "alignment" even means. The fact that it's mostly ignored is a puzzle to me.

Replies from: Seth Herd, Algon↑ comment by Seth Herd · 2023-09-11T19:06:53.050Z · LW(p) · GW(p)

Humans are dumb, but not this dumb. This is about people not bothering to answer questions since they're rushing. See other comments.

Replies from: hop↑ comment by hop · 2023-09-13T06:50:23.567Z · LW(p) · GW(p)

If they're rushing then they can enter $5 as specifically instructed by the question. They can also check that if fits as a correct solution, which it does. The fact that so many people aren't doing that is interesting.

The report also details a study that tests instructions like "Be careful! Many people miss the following problem because they do not take the time to check their answer." Along with a big yellow warning icon. Does the warning work? Kindof! mTurk response times go from 22 seconds to 33 seconds. eLab responses go from 21 to 37 seconds (almost doubling). Did scores improve with greater attention paid? Not really! eLab correct answers went from 43% to 52%, a rise of only 9%. mTurk correct answers dropped from 34% to 25%.

I really don't think that they're wrong because they're rushing.

↑ comment by Seth Herd · 2023-09-13T21:48:25.180Z · LW(p) · GW(p)

It's quite rational to ignore what people are telling you to do, and do what's good for you instead. People on MTurk usually do a bunch of it, and they optimize for payout, not for humoring the experimenter or doing a good job.

I think many of them are not paying attention.

There are probably checks on speed of response to weed out obvious gaming. So the smart thing would be to do something else at the same time, and occasionally enter an answer.

I did cognitive psychology and cog neurosci for a couple of decades, and never ceased to be amazed at the claims of "humans can't do this" vs. "these people didn't bother to do this because I didn't bother to motivate them properly".

This isn't to say humans are smart. We're not nearly as smart as we'd like to think. But we're not this dumb, either.

Replies from: hop↑ comment by hop · 2023-09-14T08:45:54.075Z · LW(p) · GW(p)

That's fair. How do you explain the in-person respondents? And more than that, the people who answered incorrectly and then were willing to bet money that they were right?

> 16 of the 22 Yale undergraduates who made the common error were sufficiently confident to bet on it, preferring to receive $2 for a correct response than $1 for sure.

That's a very large proportion! People who are rushing and who know that they are rushing should take the $1. I guess you could claim that that's not a large enough monetary motivation to induce truthful reflection but.. I think that that's a big problem all by itself! People in their day-to-day jobs are almost never motivated to put extra thought into making correct decisions to hard problems like these. They're motivated to put in enough effort to make their boss happy and not get fired and then go home to their spouse and kids. Very few people enjoy these types of problems but everyone encounters them.

If someone makes the common error to the bat and ball problem in their daily life, they'll Maybe lose $5. For most people, intuition is sufficient.

I don't think that your assertion that humans aren't this dumb is particularly useful here. Humans definitely do behave this dumbly in their day-to-day lives and that matters quite a lot.

↑ comment by Seth Herd · 2023-09-14T18:37:49.424Z · LW(p) · GW(p)

Does it matter a lot? People are screwing up decisions that don't matter so they can focus on what they actually care about. This has terrible consequences when we take a sum of opinions on complex issues, so I guess that matters.

Replies from: hop↑ comment by hop · 2023-09-15T08:20:01.114Z · LW(p) · GW(p)

They're not screwing up only decisions that don't matter, they're also screwing up decisions that don't matter to them. But many of those decisions matter quite a lot! The entire phenomenon of bike-shedding happens due to people focusing on the wrong things: things that they can trivially and lazily understand. Bike-shedding was first observed and described in the context of people designing nuclear power generating stations.

So in summary, some of the decisions people make are very important. Some of the people making those decisions are screwing them up because they are dumb, with rushing being a contributing but not exclusive factor, a factor that applies just as much in real life as it does in studies.

↑ comment by Algon · 2023-09-11T17:22:00.061Z · LW(p) · GW(p)

I feel like handling human irrationality is a major motivation for agent foundations style research. What is the type of human values? How do we model human (ir)rationality to disentangle (unstable) goals from (imperfect) decison making procedures when figuring out what humans want. What's the thing which the human brain is an approximation of and how does it approximate that ideal? I know getting a model of human irrationality is important to Vanessa, and she thinks working on meta-cognition will make some progress on that. Scott Garrabrants work, of which geometric rationality is the latest example, makes some headway on these problems. I'd go even further and say that a lot of non-agent foundation agendas are motivated by this problem. For instance, Steve Byrnes agenda tackles the "modelling human rationality" part more directly than any other agenda I can think of.

But that's the thing: there's been minor progress at best and researchers who work on these problems are pessimistic about further progress. I certainly don't know of anything that really looks like it would work. Maybe our perspective is wrong. I would guess that shard theorists believe shard-theory would agree with that claim, and would state that shard theory looks like a more promising route to answering these questions (p~0.4).

So whilst the problem isn't explicitly written about that much, I think a lot of researchers would contest that the problem is being ignored.