SB 1047 Is Weakened

post by Zvi · 2024-06-06T13:40:41.547Z · 4 comments


It looks like Scott Wiener’s SB 1047 is now severely weakened.

Some of the changes are good clarifications. One is a big, very welcome fix.

The one I call The Big Flip is something very different.

It is mind-boggling that we can have a political system where a bill can overwhelmingly pass the California Senate, and then a bunch of industry lobbyists and hyperbolic false claims can make Scott Wiener feel bullied into making these changes.

I will skip the introduction, since those changes are clarifications, and get on with it.

In the interest of a clean reference point and speed, this post will not cover reactions.

The Big Flip

Then there is the big change that severely weakens SB 1047.

- 22602(f)(1): The definition of covered model changed from trained with at least 10^26 flops OR a model expected to have similar capabilities to what 10^26 flops would have gotten you in 2024 → “was trained using a quantity of computing power greater than 10^26 integer or floating-point operations, AND the cost of that quantity of computing power would exceed one hundred million dollars ($100,000,000) if calculated using average market prices of cloud compute as reasonably assessed by the developer at the time of training.”

- On and after January 1, 2026, the dollar amount in this subdivision shall be adjusted annually for inflation to the nearest one hundred dollars ($100) based on the change in the annual California Consumer Price Index for All Urban Consumers published by the Department of Industrial Relations for the most recent annual period ending on December 31 preceding the adjustment.

- Later: They will also publish the annual inflation adjustments.
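The adjustment itself is simple arithmetic. Here is a minimal sketch, assuming each year's threshold equals the base amount scaled by the ratio of the latest annual CPI to a base-period CPI, then rounded half-up to the nearest $100. The quoted text does not spell out the base period or the tie-breaking rule, so both are assumptions, and the CPI figures in the example are made up.

```python
import math


def adjust_for_inflation(base_amount_usd: float, base_cpi: float, latest_cpi: float) -> int:
    """Scale a dollar threshold by CPI growth and round to the nearest $100.

    Assumptions (not taken from the bill text): the adjustment is cumulative
    relative to a single base-period CPI, and ties round up.
    """
    if base_cpi <= 0 or latest_cpi <= 0:
        raise ValueError("CPI values must be positive")
    scaled = base_amount_usd * latest_cpi / base_cpi
    # Round half-up to the nearest hundred dollars.
    return int(math.floor(scaled / 100 + 0.5) * 100)


# Hypothetical CPI readings, for illustration only.
print(adjust_for_inflation(100_000_000, 320.0, 330.0))  # 103125000
```

The same function would apply to the $500 million damage thresholds, which are indexed the same way.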

The quoted text is exact, except that I capitalized AND for clarity.

The AND, rather than an OR, makes my heart sink.

Effectively, the 10^26 requirement is dead. Long live the $100 million.
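A toy encoding of the old and new definitions makes the OR-to-AND flip concrete. This is a sketch, not the statute: the old capability prong was a legal judgment, reduced here to a hypothetical boolean `expected_similar_capability`, and `market_cost_usd` stands in for the developer's reasonable assessment of average cloud prices at the time of training. Both thresholds are treated as strict “greater than”, and the $100 million figure is the amount before any inflation adjustment.

```python
FLOP_THRESHOLD = 1e26
COST_THRESHOLD_USD = 100_000_000  # before the annual inflation adjustment


def covered_before(training_flop: float, expected_similar_capability: bool) -> bool:
    """Earlier draft (simplified): enough compute OR comparable capability."""
    return training_flop > FLOP_THRESHOLD or expected_similar_capability


def covered_after(training_flop: float, market_cost_usd: float) -> bool:
    """Amended draft: enough compute AND enough market-priced compute spend."""
    return training_flop > FLOP_THRESHOLD and market_cost_usd > COST_THRESHOLD_USD


# A hypothetical 2e26-FLOP run whose compute would cost $80M at market prices.
print(covered_before(2e26, expected_similar_capability=False))  # True
print(covered_after(2e26, market_cost_usd=80_000_000))  # False
```

Under the old OR, falling compute prices changed nothing, and algorithmic gains were caught by the capability prong. Under the new AND, cheaper compute alone can take a run above 10^26 flops out of scope.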

Where the law previously strengthened over time, it now weakens over time.

It starts weakening this year. The cost for buying one-time use of 10^26 flops of compute seems likely to fall below $100 million this year.

Consider this estimate from Jack Clark, whose napkin math a few months ago came to $70 million, or $110 million if you rented A100s. Jack clarified on Twitter that he expects B100s to offer a large further cost reduction.
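Napkin math like this reduces to one formula: GPU-hours = total FLOP / (peak FLOP/s per GPU × utilization × 3600), and cost = GPU-hours × rental price. The sketch below is not a reconstruction of Jack Clark's calculation; the peak throughput, utilization, and hourly price are placeholder assumptions chosen to show how sensitive the answer is to each input.

```python
SECONDS_PER_HOUR = 3600


def rental_cost_usd(total_flop: float, peak_flop_per_s: float,
                    utilization: float, usd_per_gpu_hour: float) -> float:
    """Estimate the cloud rental cost of a one-off training run.

    All inputs are assumptions supplied by the caller; utilization is the
    fraction of peak throughput actually achieved during training.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    effective_flop_per_s = peak_flop_per_s * utilization
    gpu_hours = total_flop / effective_flop_per_s / SECONDS_PER_HOUR
    return gpu_hours * usd_per_gpu_hour


# Placeholder inputs: 1e15 FLOP/s peak per GPU, 40% utilization, $2 or $1 per GPU-hour.
base = rental_cost_usd(1e26, 1e15, 0.40, 2.00)
cheaper = rental_cost_usd(1e26, 1e15, 0.40, 1.00)
print(f"${base / 1e6:.0f}M vs ${cheaper / 1e6:.0f}M")  # prints $139M vs $69M
```

What matters is the price per effective FLOP: halving the hourly price or doubling utilization halves the cost, which is why a new hardware generation can quickly move a 10^26-flop run across the $100 million line.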

The compute minimum to be a covered model will begin to rise. The strength of non-covered models then rises both with the fall in compute costs, and also with gains in algorithmic efficiency.
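Because a run must clear both tests, the compute minimum that actually binds is the larger of 10^26 flops and $100 million divided by the market price per flop. A minimal sketch, assuming a hypothetical constant annual decline in the price per flop, and ignoring both the inflation adjustment (which would raise the dollar line further) and algorithmic efficiency gains (which make uncovered models stronger without moving the threshold):

```python
FLOP_FLOOR = 1e26
COST_THRESHOLD_USD = 100_000_000  # held fixed here; the bill indexes it to CPI


def binding_flop_threshold(usd_per_flop: float) -> float:
    """Smallest training compute that clears both the FLOP and the dollar test."""
    return max(FLOP_FLOOR, COST_THRESHOLD_USD / usd_per_flop)


def project_thresholds(start_usd_per_flop: float, annual_decline: float, years: int):
    """Return (year, threshold) pairs under a hypothetical constant price decline."""
    rows = []
    price = start_usd_per_flop
    for year in range(years + 1):
        rows.append((year, binding_flop_threshold(price)))
        price *= 1 - annual_decline
    return rows


# Hypothetical: $1e-18 per flop today (so 1e26 flops costs exactly $100M),
# with prices halving every year.
for year, flop in project_thresholds(1e-18, 0.5, 3):
    print(year, f"{flop:.1e}")
```

In this toy projection the binding threshold doubles every year once the dollar test takes over from the flop test.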

The previous version of the bill did an excellent job of handling the potential for Type I (false positive) errors via the limited duty exemption. If your model was behind the non-hazardous capabilities frontier, all you had to do was point that out. You were good to go.

Alas, people willfully misrepresented that clause over and over.

In terms of the practical impact of this law, the hope is that this change does not much matter. No doubt the biggest models will soon be trained on far more compute than $100 million can buy. So if you train on what $100 million can buy in 2026, someone else has already trained a bigger model, and you had a limited duty exemption available anyway, so not being covered only saves you a minimal amount of paperwork and provides peace of mind against people spreading hyperbolic claims.

What this does do is very explicitly and clearly show that the bill only applies to a handful of big companies. Others will not be covered, at all.

If you are spending over $100 million in 2024 dollars on compute, but you then claim you cannot comply with ordinary regulations because you are the ‘little guy’ that is being stomped on? If you say that such requirements are ‘regulatory capture’ on behalf of ‘big tech’?

Yeah. Obvious Nonsense. I have no intention of pretending otherwise.

This adds slightly to complexity, since you now need to know the market cost of compute in order to know the exact threshold. But if you are pushing that envelope, are above 10^26 flops, want to dodge safety requirements, and are complaining about having to work within a modest buffer? I find it hard to have much sympathy.

Also, section 22606 now assesses damages based on average compute costs at the time of training rather than on what you claim your model cost you in practice, which seems like a good change to prevent Hollywood Accounting, and it clarifies what counts as damage.

The Big Fix

- 22602(i): “Derivative model” does not include either of the following:
  - An entirely independently trained artificial intelligence model.
  - An artificial intelligence model, including one combined with other software, that is fine-tuned using a quantity of computing power greater than 25 percent of the quantity of computing power, measured in integer or floating-point operations, used to train the original model.

I would tweak this a bit to clarify that ‘fine tuning’ here includes additional ‘pre training’ style efforts, just in case, but surely the intent here is very clear.

If they change your model using more than 25% of the compute you spent, then it becomes their responsibility, not yours.

If they use less than that, then you did most of the work, so you still bear the consequences.

This is essentially how I suggested this clause be fixed, so I am very happy to see this. It is not a perfect rule. I can still see corner-case issues that could be raised without getting into Obvious Nonsense territory, and could see being asked to iterate on it a bit more to fix that. Or we could talk price. But at a minimum, we can all agree it is a big improvement.

If the argument is ‘once someone fine tunes my model at all, it should no longer be my responsibility’ then I strongly disagree, but I appreciate saying that openly. It is very similar to saying that you want there to be no rules on open models at all, since it is easy to impose safety precautions that both cripple usefulness and are trivial to undo.

Also, this was added later since it is now required:

- (B) If a developer provides access to the derivative model in a form that makes fine tuning possible, provide information to developers of that derivative model in a manner that will enable them to determine whether they have done a sufficient amount of fine tuning to meet the threshold described in subparagraph (B) of paragraph (2) of subdivision (i) of Section 22602.

In other words, tell us how many flops you trained on, so we can divide by four.
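The derivative-model test is a single comparison. This sketch assumes both quantities are reported in the same units (integer or floating-point operations) and reads “greater than 25 percent” literally, so a fine-tune using exactly one quarter of the original compute still leaves the model a derivative of the original. The function names are hypothetical, and the sketch ignores the separate exclusion for entirely independently trained models.

```python
DERIVATIVE_SHARE = 0.25  # "greater than 25 percent" ends derivative status


def max_derivative_fine_tune_flop(original_training_flop: float) -> float:
    """Divide-by-four arithmetic: the most fine-tuning compute that still
    leaves the result a derivative of the original model."""
    return DERIVATIVE_SHARE * original_training_flop


def is_derivative(original_training_flop: float, fine_tune_flop: float) -> bool:
    """True if the fine-tuned model is still a derivative, so responsibility
    stays with the original developer (simplified)."""
    return fine_tune_flop <= max_derivative_fine_tune_flop(original_training_flop)


original = 4e25  # hypothetical original training run
print(is_derivative(original, 8e24))  # True: 20% of original compute
print(is_derivative(original, 2e25))  # False: 50% of original compute
```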

Also, 22603(e) adds the word ‘nonderivative’, which was technically missing. Sure.

The Shutdown and Reporting Clarifications

- 22602(m): (1) “Full shutdown” means the cessation of operation of a covered model, including all copies and derivative models, on all computers and storage devices within the custody, control, or possession of a nonderivative model developer or a person that operates a computing cluster, including any computer or storage device remotely provided by agreement.
  (2) “Full shutdown” does not mean the cessation of operation of a covered model to which access was granted pursuant to a license that was not granted by the licensor on a discretionary basis and was not subject to separate negotiation between the parties.

I strongly stated earlier that I believed this was the previous intent of the bill. If your model is open weights, and someone else gets a copy, then you are not responsible for shutting down that copy.

Yes, that does render the model impossible to shut down. That is the whole point. Open model weights cannot comply with the actual safety need this is trying to address. They got an exception saying they do not have to comply. They complained, so now that exception is impossible to miss.

The reporting requirement is clarified in the same way. Your responsibility for reporting safety incidents now stops where your control stops. If someone copies the weights, you do not have to report what happens after that. Once again, open weights models actively get a free exception, in a way that actually undermines the safety purpose.

(There is also a technical adjustment to 22604’s organization, no effective change.)

Any claims that this is still an issue for open model weights fall into pure Obvious Nonsense territory.

The Harm Adjustment

- (n)(1) “Hazardous capability” means the capability of a covered model to be used to enable any of the following harms in a way that would be significantly more difficult to cause without access to a covered model that does not qualify for a limited duty exemption:
  (A) The creation or use of a chemical, biological, radiological, or nuclear weapon in a manner that results in mass casualties.
  (B) At least five hundred million dollars ($500,000,000) of damage through cyberattacks on critical infrastructure via a single incident or multiple related incidents.
  (C) At least five hundred million dollars ($500,000,000) of damage by an artificial intelligence model that autonomously engages in conduct that would violate the Penal Code if undertaken by a human with the necessary mental state and causes either of the following:
    (i) Bodily harm to another human.
    (ii) The theft of, or harm to, property.
  (D) Other grave threats to public safety and security that are of comparable severity to the harms described in paragraphs (A) to (C), inclusive.
  (2) “Hazardous capability” includes a capability described in paragraph (1) even if the hazardous capability would not manifest but for fine tuning and posttraining modifications performed by third-party experts intending to demonstrate those abilities.
  (3) On and after January 1, 2026, the dollar amounts in this subdivision shall be adjusted annually for inflation to the nearest one hundred dollars ($100) based on the change in the annual California Consumer Price Index for All Urban Consumers published by the Department of Industrial Relations for the most recent annual period ending on December 31 preceding the adjustment.

This clarifies that a (valid) limited duty exemption gets you out of this clause, and that it will be adjusted for inflation. The mental state note is a technical fix to ensure nothing stupid happens.

This also adjusts the definition of what counts as damage. I doubt this change is functional, but can see it mattering in either direction in corner cases.

The Limited Duty Exemption Clarification

- (o) “Limited duty exemption” means an exemption, pursuant to subdivision (a) or (c) of Section 22603, with respect to a covered model that is not a derivative model, which applies if a developer can provide reasonable assurance that a covered model does not have a hazardous capability and will not come close to possessing a hazardous capability when accounting for a reasonable margin for safety and the possibility of posttraining modifications. (Previously: “can reasonably exclude the possibility that a covered model has a hazardous capability or may come close to possessing a hazardous capability.”)

- Later: (C) Identifies specific tests and test results that would be sufficient to provide reasonable assurance that a covered model does not have a hazardous capability and will not come close to possessing a hazardous capability when accounting for a reasonable margin for safety and the possibility of posttraining modifications, and in addition does all of the following:

- (u) “Reasonable assurance” does not mean full certainty or practical certainty.

- Later below: (ii) The safeguards enumerated in the protocol (previously “policy”) will be sufficient to prevent unreasonable risk of critical harms from the exercise of a hazardous capability in a covered model.

- Elsewhere, a technical change that says the same thing: “and does not have greater general capability than either of the following:” → “and has an equal or lesser general capability than either of the following:”

I moved the order around here for clarity, as two of these items make the same change.

You have to read clause (u) in the properly exasperated tone. This all clarifies that yes, this is a reasonable assurance standard, as understood in other law. Whether or not my previous interpretation was true before, it definitely applies now.

Charles Foster points out that the change in (n)(1) also raises the threshold you compare against when assessing capabilities. Instead of comparing to lack of access to covered models at all, it compares to lack of access to covered models that do not qualify for a limited duty exemption.

This directly addresses my other major prior concern, where ‘lack of access to a covered model’ could have been an absurd comparison. Now the question is how your model’s abilities compare to what someone could do with access to anything short of the state of the art. Very good.

Overall

We can divide the changes here into three categories.

- There are the changes that clarify what the bill says. These are all good changes. In each case, the new meaning clearly corresponds to my reading of what the bill used to say in that same spot. Most importantly, the comparison point for capabilities was fixed.

- If you fine-tune a model using more than 25% of the compute used to train the original, it ceases to be a derivative model. This addresses the biggest issue with the previous version of the bill, which would have rendered anyone releasing open weights potentially fully liable for future unrelated models trained ‘on top of’ theirs. We can talk price on this, or make it more complex to address corner cases, but this at minimum helps a lot. If you think that any changes at all should invalidate responsibility for a model, then I see you saying open weights model developers should not be responsible for anything ever. That is a position one can have.

- The compute threshold was raised dramatically. Not only did the equivalent capabilities clause fall away entirely, but a new minimum of $100 million in market compute costs was introduced, which will likely push the effective compute threshold above 10^26 as early as later this year. This substantially reduces the bill’s reach and impact.

As a result of that third change, it is now Obvious Nonsense to claim that academics, or small businesses, will be impacted by SB 1047. If you are not spending on the order of $100 million to train an individual model, this law literally does not apply to you. If you are planning to do that, and still claim to be small, and that you cannot afford to comply? Oh, do I have questions.

Changing Your Mind

Who will change their mind based on changes to this bill?

If you continue to oppose the bill, will you admit it is now a better written bill with a smaller scope? Will you scale back your rhetoric? Or not? There are still good reasons to oppose this bill, but they have to be based on what the bill now actually says.

Who will continue to repeat the same misinformation, or continue to express concerns that are now invalidated? Who will fall back on new nitpicks?

We will learn a lot about who people are, in the coming days and weeks.

4 comments


comment by Orpheus16 (akash-wasil) · 2024-06-06T16:42:27.599Z · LW(p) · GW(p)

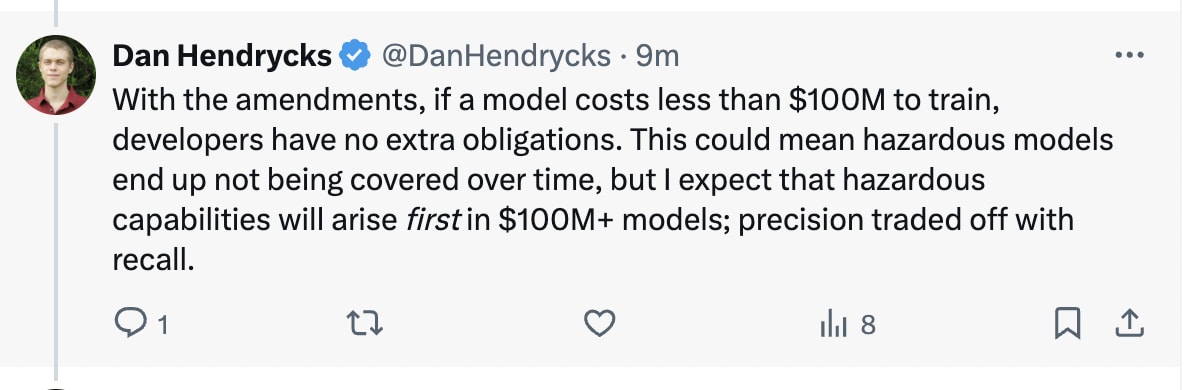

To what extent do you think the $100M threshold will weaken the bill "in practice?" I feel like "severely weakened" might overstate the amount of weakenedness. I would probably say "mildly weakened."

I think the logic along the lines of "the frontier models are going to be the ones where the dangerous capabilities are discovered first, so maybe it seems fine (for now) to exclude non-frontier models" makes some amount of sense.

In the long run, this approach fails because you might be able to hit dangerous capabilities with <$100M. But in the short run, it feels like the bill covers the most relevant actors (Microsoft, Meta, Google, OpenAI, Anthropic).

Maybe I always thought the point of the bill was to cover frontier AI systems (which are still covered) as opposed to any systems that could have hazardous capabilities, so I see the $100M threshold as more of a "compromise consistent with the spirit of the bill" as opposed to a "substantial weakening of the bill." What do you think?


comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-06-06T14:29:39.942Z

I'm not sure there's an answer to this question yet, but I have a concern as a model evaluator. The current standard for showing a model is capable of harm is proving that it provides uplift to some category of bad actors relative to resources not including the model in question.

Fine so far. Currently this is taken to mean "resources like web search." What happens if the definition shifts to include "resources like web search and models not previously classified as dangerous"? That seems to me to give a moving goalpost to the threshold of danger in a problematic way. I'm already pretty confident that some published open-weight models do give at least a little bit of uplift to bad actors.

How much uplift must be shown to trigger the danger clause?

By what standards should we measure and report on this hazard uplift?

Seems to me like our failure to pin down definitions of this is already letting bad stuff sneak by us unremarked. If we then start moving the goalpost to be "must be measurably worse than anything which has previously snuck by" then it seems like we won't do any effective enforcement at all.

comment by ryan_greenblatt · 2024-06-06T23:51:54.428Z

Then there is the big change that severely weakens SB 1047. [...] AND the cost of that quantity of computing power would exceed one hundred million dollars

I think this change isn't that bad. (And I proposed changing to a fixed 10^26 flop threshold in my earlier post.)

It seems unlikely to me that this is crucial. If there is some moderately efficient way to convert money into capabilities, then you should expect the first possibly hazardous model to be quite expensive (1), quite soon (e.g. GPT-5) (2), or after a sudden large jump in capabilities due to algorithmic progress (3).

In scenario (1), plausibly the world is already radically transformed by the time algorithmic advances make $100 million enough for truly dangerous models. If the world isn't radically transformed by the point when $100 million is enough for a dangerous model, it has probably taken a while for human algorithmic insights to advance sufficiently. And, in the delay, the government has enough time to respond in principle.

Scenarios (2) and (3) are most concerning, but they are also somewhat unlikely. In these scenarios, various plans look less good anyway and a bunch of the action will be in trying to mobilize a rapid government response.

comment by Tenoke · 2024-06-06T20:44:03.731Z

>Once again, open weights models actively get a free exception, in a way that actually undermines the safety purpose.

Open weights getting a free exception doesn't seem that bad to me, because, yes, on the one hand it increases the chance of a bad actor getting a cutting-edge model, but on the other hand the financial incentives are weaker, and it brings more capability to good actors outside of the top 5 companies earlier. And those weights can be used for testing, safety work, etc.

I think what's released openly will always be a bit behind anyway (and thus likely fairly safe), so at least everyone else can benefit.