Replicators, Gods and Buddhist Cosmology

post by KristianRonn · 2025-01-16

Contents:

- The Game of Replicators
- Traps and Landmines
- From Species Fitness to Planetary Fitness
- Cosmic Survivor Bias
- A perfectly balanced metabolism might be hard
- Major evolutionary transitions might be even harder
- The Game of Gods
- Adaptive strategies for avoiding planetary landmines
- The Limits of Prediction
- The Rise of High-Fidelity Simulations
- Is Buddhist cosmology True?
- Yet another anthropic argument
- A Quantum Parallel: Observers as Rendering Engines
- Hidden assumptions
- Focus on Being a Good Human, Not on Overthinking These Ideas

From the earliest days of evolutionary thinking, we’ve used metaphors to understand how life changes over time. One of the most enduring is the image of a vast “fitness landscape” with countless peaks and valleys, each corresponding to different levels of survival and reproduction. This landscape is a way of imagining how living things (the “replicators”) search for better solutions to life’s challenges—through trial, error, and the mindless mechanics of mutation and selection.

At the heart of it all are replicators: entities that copy themselves (with variation) and compete for whatever resources they need to persist. In the primordial soup, they might have been self-copying molecules jockeying for nutrients, while in modern ecosystems, they range from viruses hijacking cells to human beings shaping civilizations. Despite all this diversity, their basic “goal,” if we can call it that, is constant: remain in the game and keep making copies.

But here’s the interesting part: evolution doesn’t get to see the entire map. It is a blind watchmaker. It doesn’t know where the next mountain peak might be, nor can it foresee an impending drop into a valley. All it does is take one step at a time, test the new terrain, and keep the changes that happen to work better than whatever came before. In effect, each species’ journey across the fitness landscape looks like a random walk—a blind, incremental exploration of what might lead to more offspring.

Evolution through natural selection operates on populations of individuals. When we zoom in on the smallest steps of this process, we observe individual organisms and the random genetic mutations that arise each generation. While each mutation is ultimately determined by the laws of physics, its impact on overall fitness can feel as random as a roll of the dice. Some are neutral, some harmful, and occasionally a few are beneficial, granting a slight edge in survival and reproduction.

Imagine a group of individuals each taking a small step in a different direction on the landscape. Most of these new paths will lead nowhere special—many might wander straight into a valley of lower fitness, where individuals eventually die off or fail to leave many offspring. But from time to time, a single step hits higher ground, granting an advantage that can spread through the population over generations. Although many individuals perish, the overall fitness of the group (as a whole) can still climb upward, thanks to the incremental success of beneficial changes.

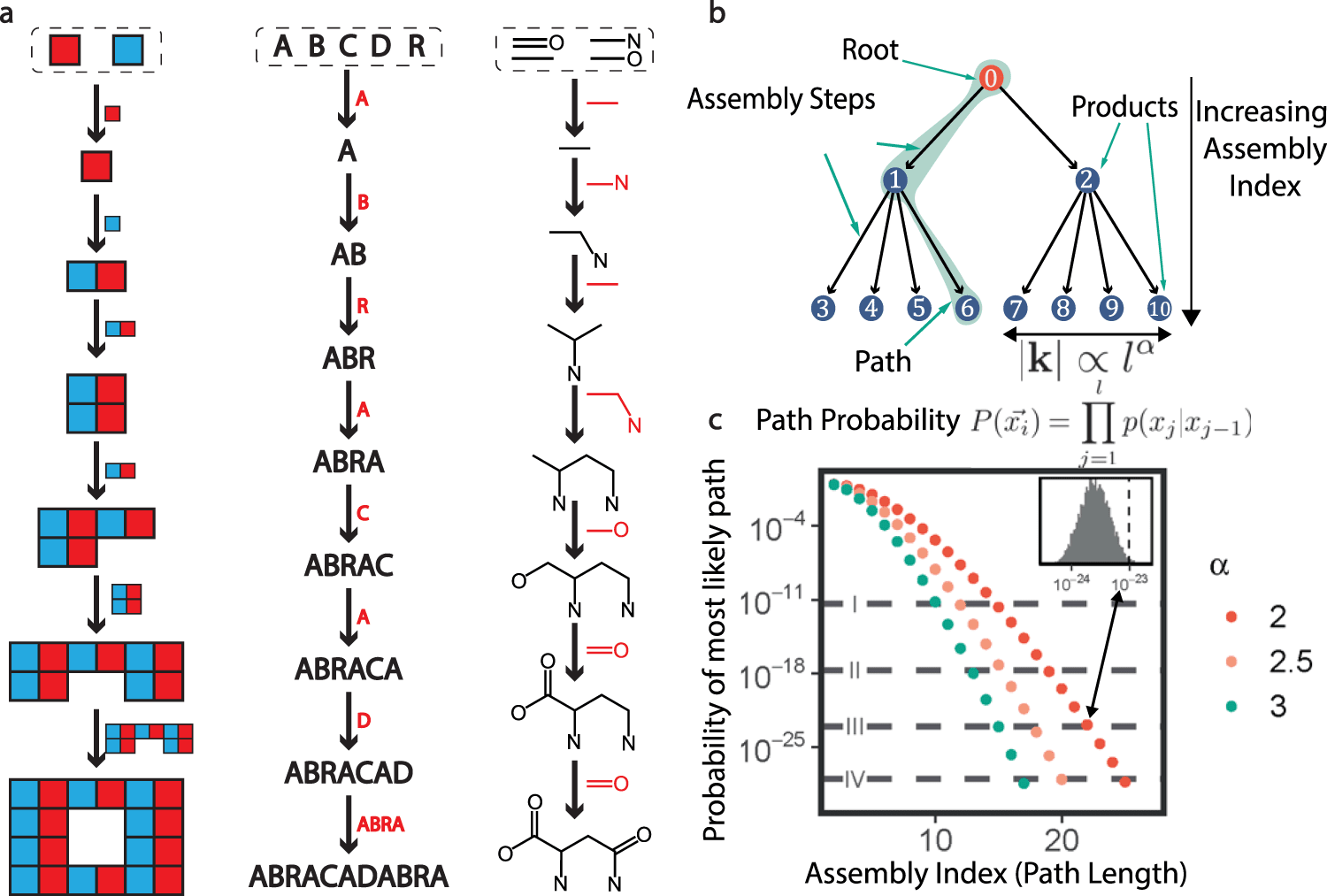
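The population-level climb described above can be sketched as a toy Wright-Fisher simulation. Everything here is an illustrative assumption rather than a model taken from this article: genomes are 20-bit strings, a hypothetical landscape gives each genome fitness `1 + (number of 1-bits)`, parents are drawn in proportion to fitness, and each bit of each offspring flips independently with a small probability. No individual "knows" where higher ground is; mean fitness rises only because fitter variants happen to leave more copies.

```python
import random


def mean_fitness_history(pop_size=200, genome_len=20, generations=100,
                         mutation_rate=0.01, seed=0):
    """Toy Wright-Fisher run on a hypothetical bit-string fitness landscape.

    Returns the population's mean fitness at the start of each generation.
    """
    rng = random.Random(seed)

    def fitness(genome):
        # Hypothetical landscape: every 1-bit adds one unit of fitness.
        return 1.0 + sum(genome)

    population = [[0] * genome_len for _ in range(pop_size)]  # everyone starts identical
    history = []
    for _ in range(generations):
        weights = [fitness(g) for g in population]
        history.append(sum(weights) / pop_size)
        # Selection: parents are drawn with replacement, in proportion to fitness.
        parents = rng.choices(population, weights=weights, k=pop_size)
        # Blind mutation: each bit flips with probability `mutation_rate`,
        # regardless of whether the flip helps or hurts.
        population = [[bit ^ (rng.random() < mutation_rate) for bit in parent]
                      for parent in parents]
    return history


history = mean_fitness_history()
print(history[0], history[-1])
```

Once most bits are already 1, nearly every new mutation is harmful, which is the regime the paragraph describes: most steps go downhill, yet selection still keeps the population near the high ground.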

Assembly Theory provides a general framework for understanding how complexity emerges across scales, from molecules to organisms. It starts by examining the smallest components—like atoms or simple molecules—and considers the sequence of steps required to combine them into more complex structures through the laws of physics and chemistry. This sequence, quantified as the assembly index, represents the minimal number of steps needed to construct a given entity. For example, a simple molecule like water has a low assembly index, while a complex protein has a much higher one due to the many steps required to build it from amino acids, and a whole organism like E. coli has an even higher index.
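The joining-with-reuse idea behind the assembly index can be illustrated on strings of characters instead of molecules. The sketch below is a brute-force toy under that assumption: the basic building blocks are the single characters of the target, each step joins two already-available pieces end to end, anything built earlier can be reused for free, and the index is the length of the shortest such pathway. `assembly_index` is a hypothetical helper, not a function from any Assembly Theory package, and its search is exponential, so it only suits short strings.

```python
from itertools import product


def assembly_index(target: str) -> int:
    """Fewest joining steps needed to build `target` from its single characters,
    where every intermediate, once built, can be reused at no extra cost."""
    if len(target) <= 1:
        return 0
    basics = frozenset(target)  # single characters are free building blocks
    # Only substrings of the target can appear in a shortest pathway.
    useful = {target[i:j] for i in range(len(target))
              for j in range(i + 2, len(target) + 1)}

    def reachable(pool: frozenset, steps_left: int) -> bool:
        if target in pool:
            return True
        if steps_left == 0:
            return False
        for left, right in product(pool, repeat=2):
            joined = left + right
            if joined in useful and joined not in pool:
                if reachable(pool | {joined}, steps_left - 1):
                    return True
        return False

    # Iterative deepening: the first depth that succeeds is the minimum.
    steps = 1
    while not reachable(basics, steps):
        steps += 1
    return steps


print(assembly_index("ABCD"))      # 3: nothing can be reused
print(assembly_index("ABABAB"))    # 3: AB, ABAB, ABABAB
print(assembly_index("AAAAAAAA"))  # 3: AA, AAAA, AAAAAAAA
```

Reuse is what keeps repetitive structures cheap: an eight-character string of one repeated unit needs only three joins, while four distinct characters already need three.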

Cells, tissues, and organisms can also be thought of as assemblies, with each layer of biological complexity requiring additional steps to construct. In evolutionary terms, mutations act as changes to these assembly steps. Most changes are neutral or harmful, but those that improve survival or reproduction are preserved and built upon. Over generations, the cumulative effect of these beneficial changes can increase the assembly index, reflecting the growing complexity of life forms.

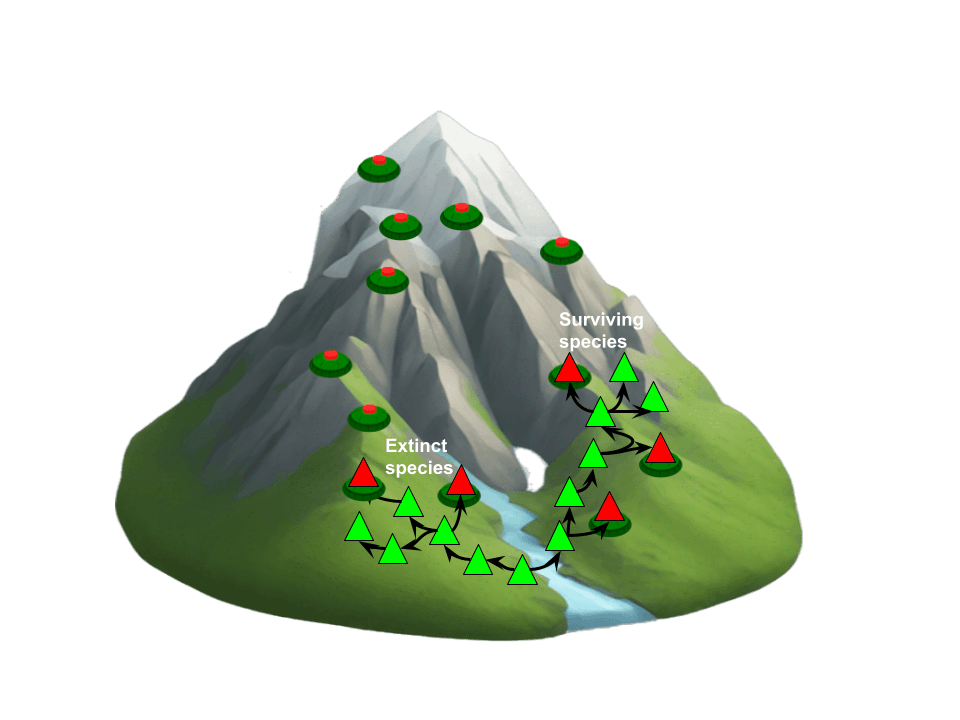

As lineages accumulate distinct assembly steps, they diverge into separate species, each charting its own path on the fitness landscape. Since a species is simply a population of individuals with common characteristics, you can visualize a species’ random walk through the fitness landscape as an aggregate of numerous overlapping paths, each representing a lineage’s genetic journey. Over time, some of these paths cluster around peaks (zones of higher fitness), while others fade away into valleys.

The Game of Replicators

Traps and Landmines

When we think of evolution as a random walk across a rugged fitness landscape, a natural question is whether some strategies for taking that walk recur across many lineages. Throughout evolution, an organism's success has depended primarily on two factors: its ability to reproduce, and its ability to repair its body and survive long enough to do so. This has led to two main survival strategies.

In both strategies, organisms require resources—such as nutrients—to reproduce and sustain themselves. The more resources a population can secure, the more numerous its members can be, allowing them to control a larger fraction of Earth’s total biomass. From the perspective of natural selection, this makes them more successful. However, since resources are always limited in any ecosystem, this often creates a zero-sum game where success comes at the expense of other species and individuals. This competition drives a resource arms race with species evolving strategies to outcompete others for these limited supplies.

So, how do species win this resource arms race? Some, like fish, adopt an r-selected strategy, focusing on short lifespans but rapid reproduction in large quantities. This strategy leverages sheer numbers to gain differential access to resources. Others, like elephants, follow a K-selected strategy, producing fewer offspring but investing more energy into traits that enable them to repair their bodies and live longer.
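The labels r and K come from the logistic model of population growth, in which N is the population size, r the intrinsic growth rate, and K the carrying capacity of the environment:

```latex
\frac{dN}{dt} = r\,N\left(1 - \frac{N}{K}\right)
```

Roughly speaking, r-selection favors traits that maximize r, which pays off when a population sits far below its carrying capacity (N ≪ K), while K-selection favors traits that help individuals compete and persist when the population is near K. This is the simplified textbook picture, not a precise model of any particular species.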

K-selected species often rely on power rather than numbers. For instance, some develop powerful offensive capabilities, such as the predation skills of lions, which enable them to steal resources from others. Others focus on powerful defenses, like the robust physical traits of buffalo, which protect them against predation. This dynamic creates an evolutionary power arms race centered on physical strength and capability, where offensive and defensive traits escalate in response to competitive pressures.

Another strategy for winning the resource arms race is specialization in specific niches. For example, pandas, which were once predatory bears, evolved to specialize in consuming bamboo, a resource with limited competition. By exploiting specific niches, specialized species can avoid direct competition while securing a steady supply of resources.

In essence, evolution reveals several convergent Darwinian drives: fast replication and resource exploitation, power optimization, and niche specialization. Although these drives can confer significant advantages, they are also double-edged swords, each carrying inherent risks.

The crux of the matter lies in natural selection itself. As a trial-and-error optimization process, it cannot foresee the long-term consequences of any given adaptation. It operates only by “feeling” the immediate slope of the fitness curve from one generation to the next. This constraint means that evolution may direct populations toward suboptimal peaks in the fitness landscape, where they stagnate, or even favor traits that are beneficial in the short run but lead to evolutionary suicide over time.

Each Darwinian drive has a corresponding peril when taken to extremes. For instance, fast replication can spiral into overpopulation, ultimately causing starvation or environmental degradation. Power optimization—especially predatory adaptations—can lead to overhunting and population collapse once prey becomes scarce. Niche specialization can be equally hazardous; while it may yield short-term advantages in resource exploitation, it can make a species acutely vulnerable to sudden environmental shocks.

These dangers can be visualized as “landmines” (leading to species extinction) and “traps” (ensnaring species on suboptimal fitness peaks). Importantly, landmines are not found only in the “valleys” of the fitness landscape but also around the very “hills” that seem advantageous at first. In other words, natural selection can unwittingly guide a species to climb a peak that later causes its demise—potentially explaining why the average lifespan of a species is only a few million years.

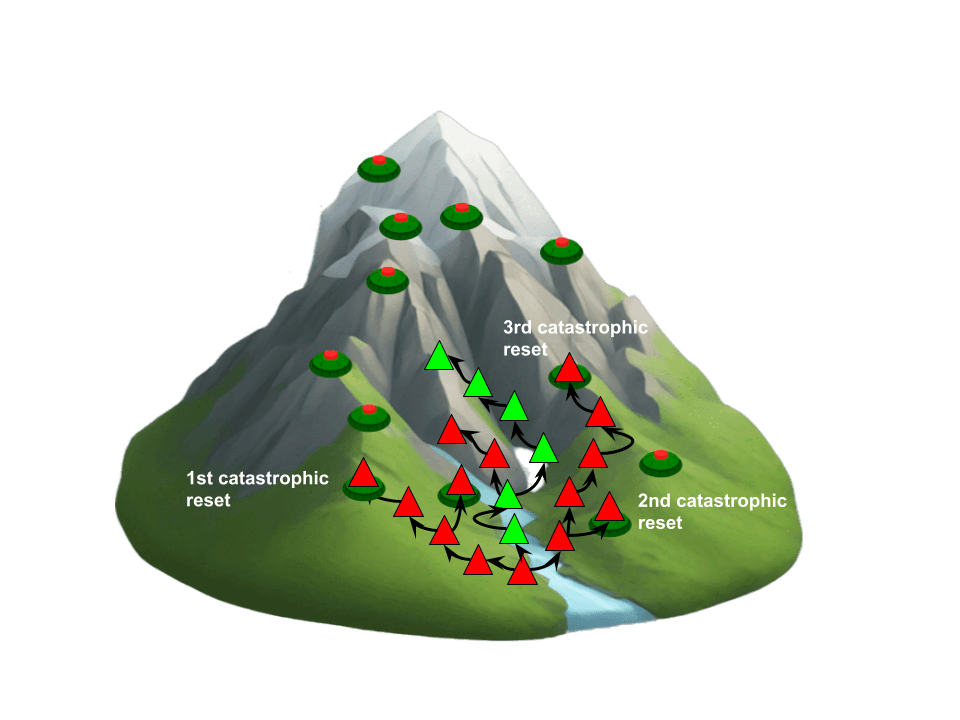

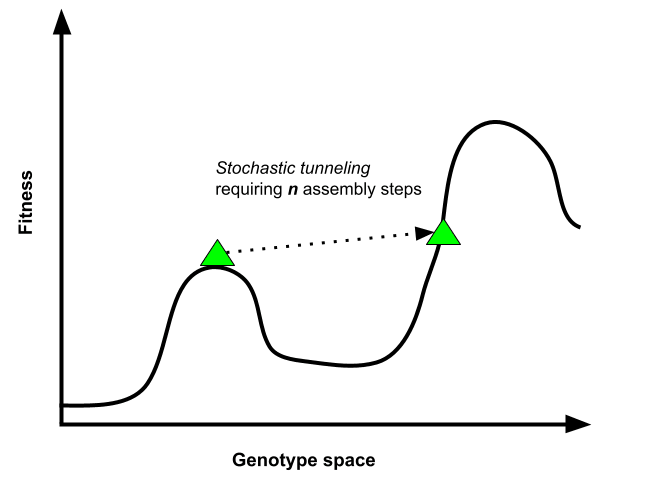
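A minimal sketch of such a trap, assuming a hypothetical one-dimensional landscape given as a list of fitness values: a greedy "adaptive walk" that, like selection, only accepts uphill steps stops on the first local peak it reaches, even when a much higher peak lies a few downhill steps away.

```python
def adaptive_walk(landscape, start):
    """Greedy uphill walk: move to the fitter neighbour while one exists.
    Like selection, it only 'feels' the local slope and never accepts a downhill step."""
    pos = start
    while True:
        neighbours = [i for i in (pos - 1, pos + 1) if 0 <= i < len(landscape)]
        if not neighbours:
            return pos
        best = max(neighbours, key=lambda i: landscape[i])
        if landscape[best] <= landscape[pos]:
            return pos  # local peak: every single step from here is downhill
        pos = best


landscape = [1, 3, 5, 4, 2, 6, 9, 12, 8]  # hypothetical fitness values
peak = adaptive_walk(landscape, start=0)
print(peak, landscape[peak], max(landscape))  # 2 5 12
```

Starting from the left, the walker is stranded on the peak of height 5; reaching the peak of height 12 would require accepting two downhill steps first, which a purely greedy process never does.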

The concept of a landmine, while a simplified and dramatic visual metaphor, serves as an entry point to a more nuanced phenomenon. Technically, a landmine is better described as a suboptimal fitness peak that gradually loses viability as the fitness landscape changes, whether through environmental shifts or heightened competition from other species. A better metaphor might be that of being stranded on an island as sea levels rise, steadily shrinking the adaptive space until escape becomes impossible and extinction follows. Reaching safety over the last remaining land bridges might require a temporary decrease in fitness, a process that could involve multiple interdependent steps and rely on rare stochastic tunneling events. I will examine this concept in more depth later in the article; as a visual metaphor, it is a bit like tunneling across the creek in the image below through several neutral or maladaptive steps.

Real-world examples of local peaks containing potential landmines abound in the literature on evolutionary suicide. Pandas illustrate the pitfalls of over-specialization: once predatory bears, they now rely almost exclusively on bamboo, making them highly susceptible to habitat changes. The power arms race between predators and prey can doom both sides if predators overexploit their prey, leading to the eventual collapse of both populations. Highly virulent viruses can kill their hosts too quickly, cutting off further transmission and dooming themselves in the process. Some bacteria multiply so rapidly that they shift their environment’s pH to toxic levels, triggering a mass die-off of their own population. Likewise, algal blooms can deplete nutrients so quickly that they precipitate their own collapse.

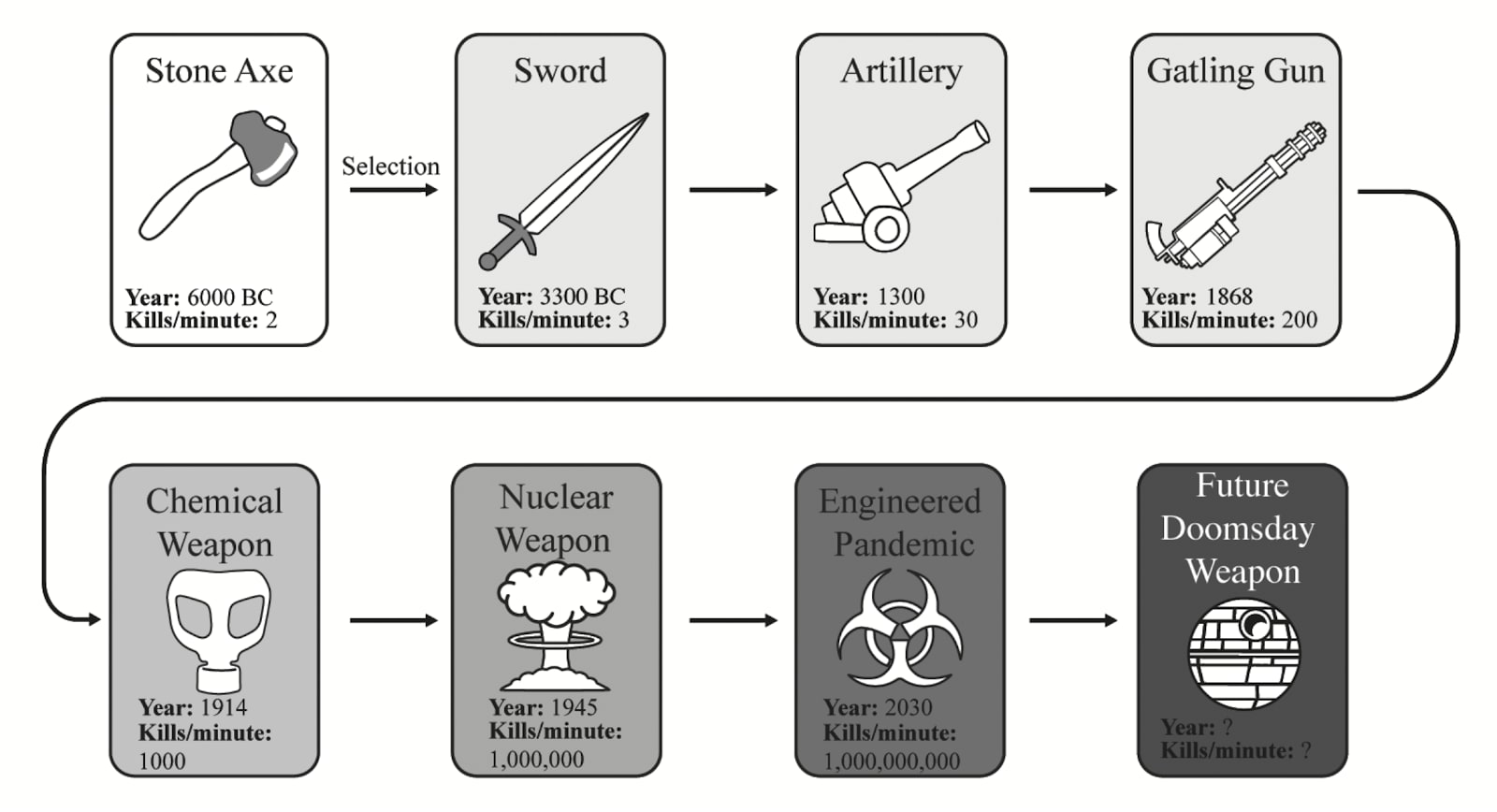
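The boom-and-bust pattern of an algal bloom can be sketched with a toy consumer-resource model. All numbers are hypothetical: the replicator grows by 50% per step whenever its needs are met, each individual consumes one unit from a nutrient pool that never regenerates, and half the population dies in any step where demand exceeds what is left.

```python
def bloom(pop=1.0, nutrients=1000.0, growth=0.5, need=1.0, die_off=0.5, steps=40):
    """Toy boom-and-bust model with a non-renewing nutrient pool (hypothetical parameters).

    Returns the population size after each step.
    """
    history = []
    for _ in range(steps):
        demand = pop * need
        if nutrients >= demand:
            nutrients -= demand
            pop *= 1 + growth      # needs met: replicate
        else:
            nutrients = 0.0        # the remaining nutrients are exhausted
            pop *= 1 - die_off     # starvation: the population crashes
        history.append(pop)
    return history


h = bloom()
peak_step = h.index(max(h))
print(round(max(h)), peak_step, h[-1] < 1.0)  # 438 14 True
```

The faster the growth rate, the sooner the pool runs dry: the very trait that wins the short-term race is what triggers the collapse.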

These mechanisms are also mirrored in today’s societies and should give us pause for reflection. Nations face relentless selection pressure to exploit resources as quickly as possible, often exceeding the Earth’s capacity to regenerate them. This dynamic drives the pursuit of ever more competitive economies and stronger militaries. Over time, weapons have become increasingly lethal, evolving from stone tools and bows and arrows to cannons, machine guns and atomic bombs. These patterns reflect both the principles of offensive realism and the evolutionary competition that fuels the relentless quest for power and survival.

Taken together, these examples underscore how evolutionary success stories can quickly turn sour. The random walk across the fitness landscape is riddled with landmines and traps, and evolution itself has no foresight to avoid them.

From Species Fitness to Planetary Fitness

In the previous sections, we explored how individual organisms and species navigate the rugged fitness landscape, striving to replicate, compete for resources, and sometimes cooperate. However, evolution doesn’t stop at the species level. When we zoom out far enough, we can view the biosphere itself as undergoing a random walk—not just of individuals or species, but of planetary fitness.

Just as an individual’s or species’ fitness can be measured by its survival and reproductive success, a planet’s fitness might be conceptualized as its ability to absorb shocks, a capacity largely determined by its biological diversity. This diversity, in turn, is influenced by the planet’s assembly index, since a higher index allows a greater variety of complex structures to form. A diverse biosphere is inherently more robust: it weathers ecological changes, recovers from disasters, and fosters continued innovation along new evolutionary trajectories.

Yet, just as species encounter landmines (extinction risks) and traps (suboptimal fitness peaks), so too can the biosphere as a whole. On a planetary scale, these “landmines” might take the form of existential risks: catastrophic paths that could wipe out all life or severely curtail its complexity.

Life’s fragility to potential landmines before the Cambrian explosion 540 million years ago is easy to imagine. Billions of years ago, when life was confined to single cells thriving in thermal vents on the ocean floor, it could have been wiped out by a lethal virus or an aggressive single-celled predator, before it had time to evolve proper defence mechanisms. Similarly, rapidly multiplying bacteria or algae could have disrupted the biogeochemical cycles essential for life, potentially halting its progress entirely.

However, if abiogenesis is relatively easy, a possibility some recent scientific findings support, extinction might not spell the end of life altogether. Life could restart from a lower assembly index and rebuild itself. This can be likened to playing a video game like Super Mario: if you have no extra lives and die early, say at level 2, it’s not a big setback. There’s little progress to replay, and it likely won’t take many attempts to get back to where you were. But dying near the final boss is a catastrophic reset, as it takes much longer and more replays to regain that level of progress. Moreover, a star’s finite lifespan and gradually increasing brightness allow only a limited number of catastrophic resets before an otherwise habitable planet ends up outside the habitable zone.

When it comes to evolutionary traps instead of landmines, we don’t need to rely on imagination as much. Evolutionary history shows us that short-term selection pressures favoring defection have repeatedly hindered major evolutionary transitions, slowing the development of complex life with higher assembly indexes.

What is particularly striking is that many of these evolutionary traps occur at the molecular level. Spiegelman’s monster provides a vivid example. Normal RNA strands typically contain thousands of nucleotides, which carry the “instructions” necessary to produce complex life. However, shorter RNA strands replicate faster than longer ones, all else being equal. Over time, these truncated strands can outcompete and overwhelm normal RNA, leaving behind only these self-replicating "monsters" and halting further complexity.
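The runaway advantage of short strands can be sketched with a minimal growth model. It assumes, hypothetically, that copying time is proportional to strand length, so each type grows exponentially at a rate `k / length`; the lengths and the starting share of short strands are illustrative assumptions loosely inspired by Spiegelman's serial-transfer experiments. Serial dilution rescales both types equally, so only the difference in growth rates decides who wins.

```python
import math


def long_strand_share(t, long_len=4500, short_len=220, short_share0=1e-6, k=1000.0):
    """Share of long RNA strands at time t under a hypothetical rate law:
    each type grows exponentially at rate k / length."""
    r_long, r_short = k / long_len, k / short_len
    # log(short / long) starts at the initial odds and grows linearly with time.
    log_ratio = math.log(short_share0 / (1 - short_share0)) + (r_short - r_long) * t
    if log_ratio > 700:  # avoid overflow in exp(); the long strands are effectively gone
        return 0.0
    return 1.0 / (1.0 + math.exp(log_ratio))


for t in (0, 2, 4, 6, 8):
    print(t, f"{long_strand_share(t):.6f}")
```

Even starting from one short strand per million, the long, information-rich strands are driven to a negligible share within a few time units, and nothing in the competition itself rewards the information they carry.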

The table below illustrates evolutionary traps that could have blocked the development of more complex life at various stages, along with the cooperative adaptations required to overcome them.

| Timeline | Unit of selection | Defective adaptation | Potential cooperative adaptation |
|---|---|---|---|
| 4.5 billion years ago | Simple molecules | Asphaltization: simple organic molecules like amino acids are unable to assemble without unwanted byproducts, possibly blocking complex molecules such as RNA from evolving. | The Reverse Krebs Cycle is a proposed model for how simple organic molecules could have combined to form a stable cycle of chemical reactions, avoiding any “asphalt” byproducts. |
| 4 billion years ago | RNA molecules | Spiegelman’s Monster: short RNA molecules that replicate faster, outcompeting RNA molecules that encode useful genetic information and blocking the existence of the complex genomes needed for life. | Cell membranes, likely lipid membranes, created a sanctuary in which more complex RNA molecules could thrive without being outcompeted by faster replicators. |
| 3.5 billion years ago | Genes | Selfish genetic elements are genes capable of cutting themselves out of one spot in the genome and inserting themselves into another, ensuring their continued existence even as they disrupt the organism at large. | Suppressor elements are genes that suppress or police selfish genetic elements such as jumping genes. |
| 3 billion years ago | Prokaryotes | Viruses are rogue genetic material that trick a cell into replicating more copies of it, while harming the host organism. | The CRISPR system is an antiviral immune system inside prokaryotic cells, cutting up viral genetic material before it can replicate. |
| 1.6 billion years ago | Multicellular organisms | Cancer cells divide frantically against the interest of the cell colony, which may have blocked the evolution of multicellularity. | Cancer suppression mechanisms: inhibiting cell proliferation, regulating cell death, and ensuring division of labor. |
| 150 million years ago | Groups | Selfish behavior: individuals compete for resources with others of the same species, blocking the formation of more advanced groups. | Eusociality: cooperative behavior encoded into the genes of the first social insects, making altruism toward kin the default. |
| 50,000 years ago | Tribes | Tribalism: some tribes try to exploit and subjugate others, blocking the formation of larger cultures and societies. | Language capable of encoding norms and laws, eventually enabling large-scale cultures and societies to form. |
| Now | Nations & cultures | Global arms races in which countries and enterprises compete for power and resources. | ? |

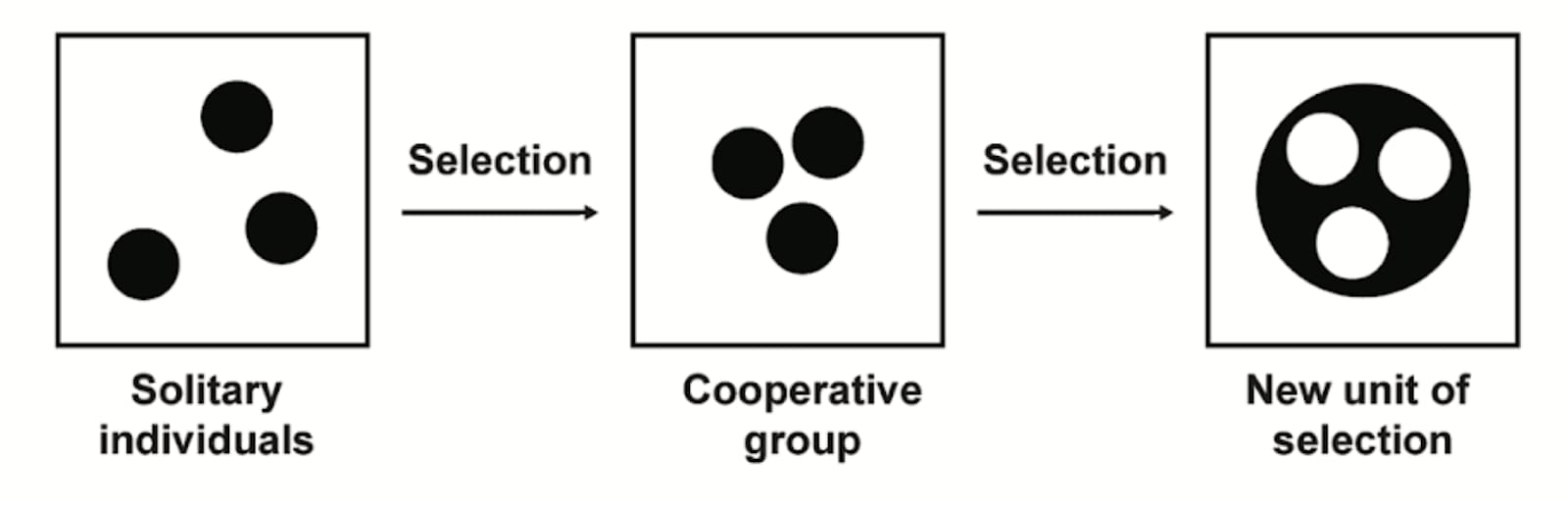

In my book The Darwinian Trap, I call the selection pressures for defection Darwinian demons, and the selection pressures for cooperation Darwinian angels. To understand the conditions under which cooperative behavior thrives, we can look at our own bodies. For an individual cell, the path to survival might seem clear: prioritize self-interest by replicating aggressively, even at the organism's expense.

However, from the perspective of the whole organism, survival depends on suppressing these self-serving actions. The organism thrives only when its cells cooperate, adhering to a mutually beneficial code. This tension between individual and collective interests forms the core of multi-level selection, where evolutionary pressures act on both individuals and groups.

Paradoxically, then, the collective drive for survival requires cells to act altruistically, suppressing their self-interest for the organism's benefit.
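The tension between levels of selection can be made concrete with a toy public-goods model; the payoff rule and the numbers are hypothetical. In each group, every member gains offspring in proportion to the group's share of cooperators, while cooperators pay a fixed cost. Defectors therefore out-reproduce cooperators inside every group, yet cooperative groups grow so much faster that the overall share of cooperators rises, an instance of Simpson's paradox.

```python
def next_generation(groups, base=1.0, benefit=5.0, cost=1.0):
    """groups: list of (cooperators, defectors) counts.

    Offspring per individual = base + benefit * (group's cooperator share),
    minus `cost` for cooperators (hypothetical linear public-goods payoffs).
    """
    result = []
    for coop, defect in groups:
        share = coop / (coop + defect)
        result.append((coop * (base + benefit * share - cost),
                       defect * (base + benefit * share)))
    return result


def cooperator_share(groups):
    total_coop = sum(c for c, _ in groups)
    return total_coop / sum(c + d for c, d in groups)


before = [(80, 20), (20, 80)]  # one mostly cooperative group, one mostly selfish
after = next_generation(before)
for (c0, d0), (c1, d1) in zip(before, after):
    print(f"within group: {c0 / (c0 + d0):.3f} -> {c1 / (c1 + d1):.3f}")
print(f"overall:      {cooperator_share(before):.3f} -> {cooperator_share(after):.3f}")
```

Cooperation loses ground within each group (0.800 to 0.762 and 0.200 to 0.111) but gains ground overall (0.500 to 0.567), which is why the level at which selection is strongest matters so much.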

The history of life on Earth spans approximately 4 billion years, yet the dramatic diversification of life seen during the Cambrian explosion began only about 540 million years ago. In other words, for the vast majority of Earth's history, life remained predominantly single-celled, including a long stretch of apparent stasis (roughly 1.8 to 0.8 billion years ago) that geologists often call the "Boring Billion." Given a planetary journey marked by random walks, frequent "reboots" of life, and traps that hinder the development of complexity, this prolonged simplicity is hardly surprising.

When examining the random walk of our planetary history, it might seem as though life is on an inevitable trajectory toward greater complexity. After all, here we are, billions of years after life first emerged on Earth, equipped with complex brains and the ability to reflect on our own precarious survival. Why haven’t we, or the myriad other lineages in Earth’s history, already stumbled into one of the many “landmines” that could wipe us out? To understand this puzzle, we need to revisit observation selection effects, a phenomenon closely tied to survivorship bias.

Moreover, to truly understand how common "landmines" and traps are—and how easily they can be escaped—we need to zoom out beyond our planet. By considering the average random walk of an average planet at galactic and intergalactic scales, we can better hypothesize about the likelihood of life evolving complexity elsewhere.

Cosmic Survivor Bias

In World War II, researchers mistakenly planned to reinforce the places on returning planes where they saw bullet holes. Abraham Wald famously pointed out that they were ignoring the planes that never came back; those planes had been hit in areas so critical that the aircraft crashed. Had the Allies reinforced only the visibly damaged spots, they would have left the far more vulnerable areas unprotected.

Applying this logic to Earth’s history: the very fact that life has persisted here might lead us to underestimate how many times it could have been wiped out. As observers, we can only exist in a timeline where our planet’s random walk avoided catastrophic "landmines," no matter how frequent those landmines may be—much like how we don’t see the bombers that never made it back.

Even in a universe where life is 99.999999% likely to self-destruct before intelligent observers can evolve, we would still find ourselves on that incredibly lucky planet where the random walk avoided landmines and traps against all odds. This is because, as long as the universe is vast enough and contains countless planets capable of biogenesis, it only takes one planet surviving long enough to produce observers like us.
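The arithmetic behind this argument is simple. Assuming each planet independently survives with probability p, the chance that at least one of N planets does is 1 - (1 - p)^N, which approaches 1 once N is much larger than 1/p. The second function is a hypothetical simulation of the selection effect itself: the true survival rate can be tiny, but every observer, living by construction on a survivor, looks back on an unbroken record. The planet counts used below are illustrative assumptions.

```python
import math
import random


def p_at_least_one_survivor(p_survive, n_planets):
    """1 - (1 - p)^N, computed with log1p/expm1 so a tiny p does not round away."""
    return -math.expm1(n_planets * math.log1p(-p_survive))


def true_vs_observed(p_survive, n_planets, seed=0):
    """Compare the real survival rate with the rate observers would infer from their own history."""
    rng = random.Random(seed)
    survived = [rng.random() < p_survive for _ in range(n_planets)]
    true_rate = sum(survived) / n_planets
    # Observers only arise on planets that survived, so they only ever sample survivors.
    observer_samples = [s for s in survived if s]
    observed_rate = (sum(observer_samples) / len(observer_samples)
                     if observer_samples else float("nan"))
    return true_rate, observed_rate


# p = 1e-8 matches the "99.999999% likely to self-destruct" example; N values are hypothetical.
print(p_at_least_one_survivor(1e-8, 10**6))   # about 0.01
print(p_at_least_one_survivor(1e-8, 10**10))  # essentially 1
print(true_vs_observed(0.01, 100_000))        # roughly (0.01, 1.0)
```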

This survivorship-bias argument doesn't give us any probabilities for how likely landmines and traps are in an average planetary random walk, but it does tell us to update our probabilities toward extinction being more common than our evolutionary history suggests. Moreover, since consciousness might not be required for fitness optimization (e.g. if advanced cognition is possible without it), Andrés Gómez-Emilsson has argued that another kind of extinction could take the form of universes populated entirely by non-conscious "zombie" replicators that optimize for survival and reproduction without any inner experience.

In The Darwinian Trap, I introduce the Fragility of Life Hypothesis, which posits that the average planetary random walk may be littered with landmines and traps. This hypothesis extends Nick Bostrom’s Vulnerable World Hypothesis by acknowledging that existential risks are not limited to human-created technologies with a high assembly index. They also apply to biological mutations with a lower assembly index. But what evidence supports this idea?

A perfectly balanced metabolism might be hard

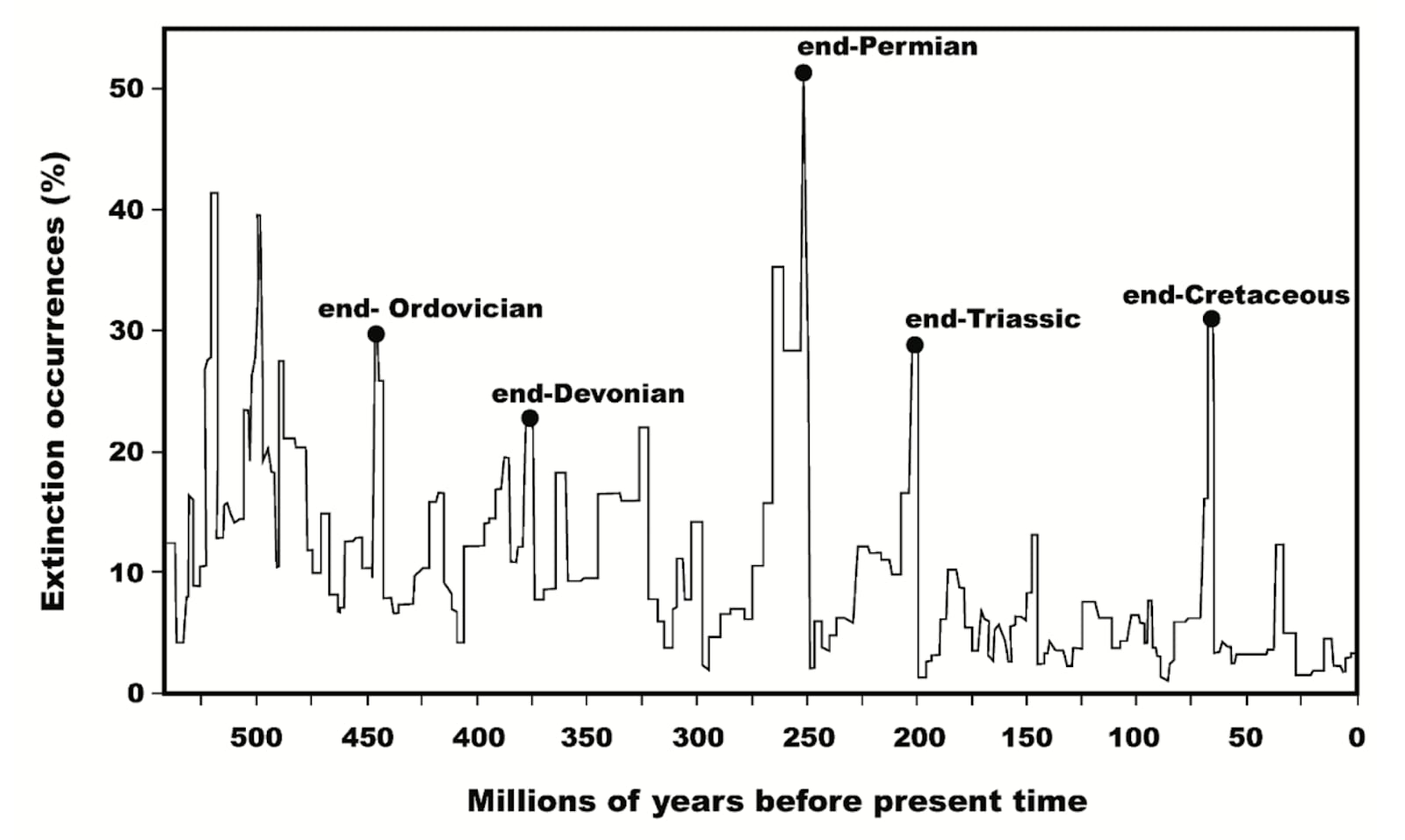

Earth’s fossil record reveals that our planet has endured numerous mass extinction events, many of which were driven by the metabolisms of living organisms. At its core, metabolism is the set of chemical processes that sustain life. All living organisms take in inputs—such as nutrients, energy sources, and gases—and transform them into outputs, including energy to sustain life and waste byproducts that are expelled into the environment. While metabolism allows organisms to grow and reproduce, the accumulation of metabolic byproducts can have significant, sometimes catastrophic, effects on the surrounding environment.

For life to thrive and survive, its metabolic processes must balance each other out, in a cycle sometimes called circular metabolism or chemical cycling. The carbon dioxide that animals exhale must be counterbalanced by photosynthesis in plants. Likewise, without decomposers such as fungi, bacteria, and worms, the accumulation of organic waste would disrupt ecological systems.

Paleontologist Peter Ward has studied this phenomenon extensively, proposing that as many as five out of six major mass extinctions were caused by life forms, such as rapidly replicating bacteria and algae, that altered Earth’s atmosphere and disrupted biogeochemical cycles.

Moreover, humanity today is disrupting critical Earth systems on a massive scale because of the non-circular nature of our industrial metabolism, contributing to another mass extinction in which, by some estimates, as many as half of all species could go extinct within our lifetime. Our industrial activities take in natural inputs, such as fossil fuels, minerals, and forests, and produce vast amounts of waste, including greenhouse gases, chemical pollutants, and excess nitrogen and phosphorus that disrupt nutrient cycles. These outputs are pushing planetary boundaries, such as climate stability, biodiversity, and the nitrogen and phosphorus cycles, beyond safe limits. By overloading Earth's capacity to regulate its systems, we are replicating the imbalances that triggered past mass extinctions, but at an unprecedented pace.

None of these mass extinction "landmines" led to the complete annihilation of all life. But survivorship bias reminds us that, as observers, we could not exist on a planetary random walk where 100% of species were wiped out. Achieving a perfectly balanced planetary metabolism might therefore be an extraordinarily rare and challenging evolutionary feat, and our own history cannot rule this out.

Similarly, escaping traps in the planetary random walk, for instance through the cooperative adaptations behind the major evolutionary transitions highlighted in the table earlier, may be just as rare.

Major evolutionary transitions might be even harder

Previously in the article, I discussed the necessity of cooperative mechanisms to overcome adaptive defects that might block the emergence of more complex structures with higher assembly indexes. Examples include:

- The Tar Paradox, which hinders the formation of macromolecules.

- Spiegelman’s Monster, which blocks the formation of stable genes.

- Selfish Genetic Elements, which prevent the establishment of a functioning genome.

- Cancer, which can obstruct the development of multicellularity.

These evolutionary bottlenecks illustrate how defects at intermediate stages can stifle complexity. However, the evolution of cooperative mechanisms to resolve these challenges might itself involve multiple assembly steps that offer no adaptive benefits when considered in isolation.

The concept of stochastic tunneling provides a compelling framework for understanding how evolutionary transitions surmount these "fitness valleys." Similar to quantum tunneling in physics, stochastic tunneling allows a sequence of critical assembly steps to accumulate through sheer chance, even when each individual step does not confer an immediate fitness advantage. This process enables a species to traverse otherwise insurmountable fitness valleys, unlocking new pathways for evolutionary complexity.

The probability of such stochastic jumps decreases exponentially with the number of assembly steps required. For instance, the emergence of eukaryotes depended on the incorporation of a mitochondria-like symbiont. This event likely relied on genetic, metabolic, and ecological scaffolds, which were essential for the eventual complexity of eukaryotes. Yet these scaffolds provided no immediate fitness advantage until they were integrated into a functional higher-order system, which makes the evolution of eukaryotes a candidate “hard step.”

Stochastic modeling by Snyder-Beattie et al. suggests that the time required to complete such hard-step transitions often exceeds Earth’s habitable lifespan by many orders of magnitude. This underscores the extraordinary improbability of intelligent life, which depends on the rare convergence of assembly fragments, cooperative adaptations, and stochastic breakthroughs during a planetary random walk.
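A toy version of the hard-steps idea makes the scaling concrete. Assume, as such models typically do, that each step is a memoryless event with an exponentially distributed waiting time, and that the steps must happen in sequence within a fixed habitable window. The probability of finishing all n steps in time is then the Erlang cumulative distribution function. When each step's expected waiting time is much longer than the window, that probability is roughly (window / mean)^n / n!, so it collapses rapidly as steps are added. The parameter values below are hypothetical.

```python
import math


def p_all_steps_in_window(n_steps, mean_step_time, window):
    """P(n sequential exponential waiting times sum to at most `window`).

    Erlang CDF: 1 - exp(-x) * sum_{k<n} x^k / k!, with x = window / mean_step_time.
    Adequate for modest n; for extremely small results, cancellation limits precision.
    """
    x = window / mean_step_time
    return 1.0 - math.exp(-x) * sum(x**k / math.factorial(k) for k in range(n_steps))


# Hypothetical: each step takes 10 habitable lifetimes on average (window = 1).
for n in range(1, 5):
    exact = p_all_steps_in_window(n, mean_step_time=10.0, window=1.0)
    approx = 0.1**n / math.factorial(n)
    print(n, f"{exact:.2e}", f"{approx:.2e}")
```

Each additional hard step multiplies the odds by roughly another factor of (window / mean) / n, which is why a handful of slow, unrewarded steps can make a transition vanishingly unlikely within a planet's lifetime.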

Moreover, the Fragility of Life Hypothesis is a reasonable resolution to the Fermi Paradox, i.e. the lack of solid evidence for alien life in the universe, despite several decades of observation. If intelligent life capable of colonizing other planets and solar systems were common, we would expect to see clear signs of their presence throughout the cosmos. In theory, a civilization could colonize an entire galaxy within a few million years—a blink of an eye on cosmic timescales. One could argue that the selection pressure driving offensive realism also applies to galactic conquest, with "grabby aliens" embodying expansionist tendencies as they seek territory and remain relatively easy to detect.

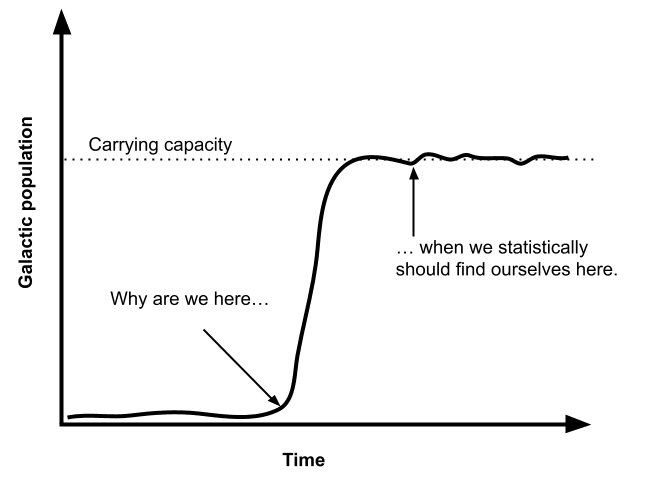

If the population growth of such a civilization followed an S-shaped curve, similar to those observed in Earth's ecosystems, it would be highly improbable for us to find ourselves at the inflection point of that curve. In other words, we would either expect to see life abundantly distributed across the universe or not at all. As it stands, the absence of evidence suggests we may be in the latter scenario.
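The inflection-point argument can be quantified with the standard logistic (S-shaped) curve N(t) = K / (1 + e^(-r(t - t_mid))). Its rise from 10% to 90% of the carrying capacity K always takes ln(81)/r. Purely for illustration, assume that phase lasts about 3 million years, in line with the few-million-year colonization timescale mentioned above, and compare it with the roughly 13.8-billion-year age of the universe: a randomly chosen moment falls inside the transition only about 0.02% of the time.

```python
import math


def logistic(t, K, r, t_mid):
    """S-shaped growth curve with carrying capacity K, rate r, and inflection point t_mid."""
    return K / (1.0 + math.exp(-r * (t - t_mid)))


def rise_time(r, low=0.1, high=0.9):
    """Time the logistic curve needs to climb from low*K to high*K."""
    def logit(f):
        # logit(f) / r is the time, relative to t_mid, at which the curve reaches f*K.
        return math.log(f / (1.0 - f))
    return (logit(high) - logit(low)) / r


r = math.log(81) / 3e6   # hypothetical rate: a 10%-to-90% rise in 3 million years
window = 13.8e9          # approximate age of the universe, in years
print(f"{rise_time(r):.3g} years, fraction of window: {rise_time(r) / window:.1e}")
```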

The Game of Gods

Adaptive strategies for avoiding planetary landmines

So far, we have examined how the random walk of the replicators can propel life toward both extraordinary complexity and perilous landmines. Just as multi-level selection favors groups of cooperating cells that deploy mechanisms to guard against the trap of cancerous defection, there will inevitably be intergalactic selection pressures (if the Fragility of Life Hypothesis is true) favoring strategies that adhere to the precautionary principle and traverse the fitness landscape in ways that circumvent potential landmines and traps. This raises an even deeper question at the planetary level: how does life prevent the inherent self-annihilation of the replicators?

For simple life, unequipped with brains capable of predicting future consequences, the only way to dodge evolutionary landmines might be to evolve extremely slowly, with minimal mutations between generations. Evolutionary innovations that accelerate evolution itself, such as sexual reproduction, horizontal gene transfer, and multicellularity, while adaptive in the short term, might lead to a faster planetary random walk, thereby increasing the probability of stepping on a landmine. From a galactic perspective, such innovations might ultimately be selected against, as their long-term risks outweigh their short-term advantages. This might resemble a stable equilibrium where single-celled organisms barely mutate, never progressing beyond a rudimentary metabolism or primitive replication. Evolution remains frozen in a state of near-zero risk.

For intelligent life, capable of evolving through advanced predictions, there might exist additional options. The Great Bootstrap is the idea of shifting from a replicator random walk to a predicted walk: proactively identifying and avoiding landmines through foresight and planning. In my book, The Darwinian Trap, I suggest that such a bootstrap is possible: if we can foresee which paths lead to existential pitfalls, we might continue innovating without blindly stumbling into ruin. A practical way to create global incentives for this type of prediction could be to deploy what I call reputational markets (based on the idea of prediction markets).

The Limits of Prediction

However, as the theory of computation suggests, no matter how advanced our forecasting tools become, certain phenomena might be impossible to predict. Stephen Wolfram calls this phenomenon computational irreducibility: for some processes, the only way to find the outcome is to run the process step by step to completion.
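Wolfram's standard illustration of computational irreducibility is the Rule 30 cellular automaton, in which each cell's next state is its left neighbour XOR (itself OR its right neighbour). Starting from a single black cell, the centre column looks random, and no shortcut for computing its nth value is known; the sketch below simply runs the automaton. Treating "no known shortcut" as irreducibility is itself an assumption, since irreducibility has not been proven for Rule 30.

```python
def rule30_center_column(steps):
    """Run Rule 30 from a single black cell and record the centre cell of each row."""
    width = 2 * steps + 1            # the pattern grows by one cell per side per row
    cells = [0] * width
    cells[steps] = 1                 # single black cell in the middle
    column = []
    for _ in range(steps):
        column.append(cells[steps])
        # new cell = left XOR (centre OR right); the outermost cells stay white
        cells = [
            cells[i - 1] ^ (cells[i] | cells[i + 1]) if 0 < i < width - 1 else 0
            for i in range(width)
        ]
    return column


print("".join(map(str, rule30_center_column(32))))
```

The only way this code obtains bit n is by computing every row before it, which is exactly the situation the paragraph describes.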

In the Vulnerable World Hypothesis, Nick Bostrom calls existential risks of this kind, which might be impossible to predict, “surprising strangelets.” Suppose a particle accelerator designed to recreate conditions shortly after the Big Bang accidentally generates a small black hole. The intent of the experiment is purely scientific, aiming to explore fundamental physics. However, due to an unforeseen mechanism, the black hole does not evaporate as current theories predict but instead stabilizes and begins to grow, eventually consuming the Earth.

In other words, it might be impossible to predict the location of the next "landmine" in a general sense without trial and error, which inherently involves the risk of triggering it. In fact, the scientific method itself heavily relies on trial and error. Computational irreducibility also sheds light on why many scientific discoveries appear to have been stumbled upon through serendipity rather than predicted in advance.

Societies striving to maintain progress while staying safe must therefore weigh a wrenching trade-off. Should they limit scientific innovation in all computationally irreducible domains where they don't feel confident in their predictive powers, missing out on all potential upsides? Or should they forge ahead in areas too complex to forecast, gambling that they won’t uncover something devastating in the process?

The Rise of High-Fidelity Simulations

One possible solution to managing existential risks is to conduct experiments within hyper-realistic simulations: self-contained “pocket universes,” powered by immense computational resources, that mimic reality with extraordinary precision. These simulations would allow civilizations to test potentially dangerous technologies in a controlled environment, avoiding real-world consequences. Moreover, they would not violate the principle of computational irreducibility; they would embrace it. By running simulations to completion, civilizations could directly observe the outcomes of computationally irreducible processes without needing to predict them analytically in advance.

The higher the fidelity of a simulation, the more likely its results are to generalize to the real world. Therefore, creating highly realistic simulations to test and predict the consequences of new technologies might become the highest-priority issue for technologically mature civilizations. Moreover, there could be an intergalactic selection pressure for this strategy: civilizations that fail to prioritize safe experimentation are likely to self-annihilate, while those that develop and adhere to rigorous simulation-based testing would be more likely to survive and advance.

As civilizations advance in their ability to harness energy and computational capacity, they may progress along the Kardashev scale, a framework that categorizes civilizations based on their energy consumption. A Type I civilization harnesses the energy available on its planet, Type II captures energy on a stellar scale, and Type III commands energy at the scale of galaxies. The leap to Type II might involve building Dyson spheres, which could provide the vast energy required to power quantum computers capable of supporting high-fidelity simulations. Such structures could enable civilizations to meet the immense energy demands of simulating entire civilizations. For a society like ours, which has yet to reach even Type I on the Kardashev scale, the capabilities of a Type II or Type III civilization would appear nothing short of godlike.
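The Kardashev types are often placed on a continuous scale using Carl Sagan's interpolation formula, K = (log10 P − 6) / 10, with P the power used in watts. On that formula Type I, II, and III correspond to 10^16, 10^26, and 10^36 W. The sketch below applies it. The figure for present-day humanity (about 2 × 10^13 W) is a rough approximation and does not come from this post.

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Carl Sagan's interpolation of the Kardashev scale: K = (log10(P) - 6) / 10."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return (math.log10(power_watts) - 6) / 10


HUMANITY_WATTS = 2e13  # rough present-day estimate, for illustration only
for label, watts in [("humanity (approx.)", HUMANITY_WATTS), ("Type I", 1e16),
                     ("Type II", 1e26), ("Type III", 1e36)]:
    print(f"{label}: K = {kardashev_rating(watts):.2f}")
```

On this estimate humanity sits at roughly K ≈ 0.73, still short of Type I.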

What types of simulations might godlike civilizations choose to run? Most likely, they would prioritize simulations designed to help them improve as a civilization. These simulations could resemble an advanced form of research and development, akin to technology readiness levels. Here are some key types:

- Open-Ended Innovation - In this type of simulation, a population of replicators is allowed to freely explore all potential structures or technologies permitted by the laws of physics within a designated assembly universe. By leaving the environment open-ended, these simulations harness the full creativity of natural selection. The goal is to observe how various innovations contribute to overall fitness within the simulated universe.

- User Testing of Innovations - When a particular technology shows promise, it can be tested in a simulated environment populated by digital beings. This allows the gods to assess potential dual-use or misuse scenarios before deploying the technology in the real world, where it could pose existential risks.

- Testing of Incentive Environment - A technology’s interaction with its users is shaped by the fitness landscape of the environment in which it is deployed. Simulations of this kind experiment with different configurations of environmental parameters to identify scenarios that minimize misuse and optimize outcomes. These simulations help fine-tune the conditions for safe and effective deployment.

- Testing of User Alignment - In traditional user testing, the product’s design is the variable while the user’s cognition is treated as a constant (e.g., “there are no dumb users, just bad design”). However, in simulated worlds, civilizations could go further by experimenting with variations in the user’s neural architecture. These simulations test how an entity might interact with technology if it were more intelligent, placed in a competitive environment, or had differing motivations. The aim is to ensure that users remain aligned with the civilization’s values and use technologies responsibly, even under extreme conditions.

Each type of simulation serves a distinct purpose, but together they ensure technologies are deployed to maximize the values of the civilization while minimizing risks. Among these, user testing for alignment may be the most critical, as it enables the creation of more intelligent yet still aligned beings. These beings could represent the next generation of gods—beings who inherit the wisdom and intelligence of their creators while remaining harmonious with their values.

Simulating new gods may be essential because intelligence itself is an inherently dual-use, computationally irreducible technology, capable of both immense good and catastrophic harm. Deductively proving that intelligent agents are 100% safe is therefore impossible due to the complexity of their behaviors. However, through simulation, civilizations could inductively demonstrate a high probability of safety and alignment for the next generation of gods.
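The inductive route can be made quantitative with a standard statistical result. Suppose n independent test episodes all pass. Then the one-sided upper confidence bound on the per-episode failure probability p, at level 1 − α, solves (1 − p)^n = α. For α = 0.05 this is close to the "rule of three," p ≈ 3/n. The sketch assumes that test episodes are independent, identically distributed, and representative of deployment, which is a strong assumption for intelligent agents. The function names are illustrative.

```python
import math


def failure_rate_upper_bound(passed_trials: int, alpha: float = 0.05) -> float:
    """Upper (1 - alpha) confidence bound on failure probability after zero failures.

    Solves (1 - p) ** n == alpha for p; assumes i.i.d., representative trials.
    """
    return 1 - alpha ** (1 / passed_trials)


def trials_needed(max_failure_rate: float, alpha: float = 0.05) -> int:
    """Smallest zero-failure n whose bound falls at or below max_failure_rate."""
    return math.ceil(math.log(alpha) / math.log(1 - max_failure_rate))


print(failure_rate_upper_bound(3000))  # close to the rule-of-three value 3 / 3000
print(trials_needed(1e-6))             # roughly three million clean trials
```

Each extra "nine" of confidence multiplies the required number of clean trials by about ten. This is one way to see why the testing periods imagined below would be so long.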

Attempting to reach near-100% confidence in safety, godlike civilizations might confine these experimental intelligences within advanced simulated worlds. Over what could feel like millions of subjective years—spanning multiple lifetimes in varied environments—these intelligences would be tested for their alignment, cooperation, stability of values, and overall safety. Only those that consistently demonstrate responsible behavior and embody the civilization’s values would graduate out of their virtual sandboxes. This mitigates the danger of misaligned intelligence in the physical world.

This practice already has analogies in our modern era. AI and robotics companies, for instance, train autonomous vehicles extensively in simulated road networks to identify and address potential failures without endangering real human lives. Waymo, for example, reported over 20 billion virtual miles in 2020, and OpenAI’s Dota 2 bots effectively played 45,000 years of in-game time in under a year to refine their strategies, all in simulation. Similarly, Davidad’s plan proposes constructing an ultra-detailed world model to train AI systems within a controlled, formally verified simulation, ensuring alignment and safety before deployment in the real world.

Is Buddhist cosmology True?

Now consider the possibility that our own reality is one such alignment simulation, orchestrated by “gods”—highly advanced beings or civilizations seeking to test how we handle existential threats in their own world. This idea resonates with various spiritual traditions’ references to rebirth, higher realms, and the ultimate attainment of enlightenment. For instance, Buddhist cosmology describes countless rebirths in myriad worlds before one finally transcends the cycle of samsara, while Abrahamic faiths speak of passage into heaven for the righteous. Strikingly, both traditions carry a notion similar to a “cosmic filter”: demonstrate certain virtues (or show alignment, in AI terminology), and you ascend; fail, and you remain trapped in the cycle until you learn.

In some strands of Buddhist thought, the ultimate goal is to relinquish all craving and aversion—key driving forces in evolutionary or “replicator” dynamics. Freed from the desire to “win” or replicate in a zero-sum environment, an enlightened being might be deemed safe enough to “exit” the simulation, entering the realm of the gods or higher planes of existence. Similarly, Bodhisattvas—those who aspire to help all sentient beings—embody a selfless desire that might also earn them an eventual escape.

Moreover, in Buddhist cosmology, the perception of time differs across realms, with heavenly realms experiencing time at a slower pace relative to human existence. Similarly, it might be adaptive for advanced civilizations (“gods”) to underclock themselves comparatively while the simulated beings undertake the riskier assembly steps through trial and error. Meanwhile, the gods can observe, learn, and apply these lessons to optimize their own knowledge, wisdom, and cosmic understanding, minimizing their own exposure to catastrophic risks while maximizing their values. This process could involve multiple layers of simulations, where the lessons gleaned in one layer might be transferred upward, eventually benefiting the top-level civilization.

Of course, the resemblance to Buddhist cosmology is likely purely coincidental, but I find it to be a useful metaphor.

Yet another anthropic argument

The alignment between this version of the simulation argument and existing religious narratives is likely best attributed to coincidence, rather than suggesting that any religion has been in divine communication with the simulating god(s). However, this perspective might offer some insight into the broader question of why humanity finds itself at such a precarious juncture in history. We’re perched at a tipping point where technology (artificial intelligence, engineered pandemics, nuclear arsenals) could wipe us out, and yet we’re also on the cusp of interstellar travel.

By all rights, if we consider an S-curve of civilization growth, it seems far more likely that we’d be born when humanity (or its successors) is already spread across the galaxy in stable prosperity. That scenario would have a vastly larger population base. Purely from an anthropic perspective, where you’re statistically more likely to be born into a large population than a small one, you might therefore expect to wake up in an era with 10^40 sapient individuals in the galaxy. Instead, here we are in the infancy of spaceflight, still squabbling on a single planet with 10^10 individuals. Naively dividing those two numbers gives a likelihood of 10^10 / 10^40 = 10^-30 (that is, 10^-28 percent) of finding ourselves in our current situation.
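The arithmetic here is the self-sampling assumption applied to two eras. If every observer is equally likely to be "you," the chance of being in the smaller era is its share of all observers. The following minimal sketch uses only the two orders of magnitude quoted above; the era labels and function name are illustrative. Dividing by the total rather than by the larger number alone changes nothing at this scale.

```python
from fractions import Fraction


def self_sampling_probability(observers_by_era: dict[str, int], era: str) -> Fraction:
    """Chance that a randomly sampled observer lives in `era`, weighting all observers equally."""
    total = sum(observers_by_era.values())
    return Fraction(observers_by_era[era], total)


# Orders of magnitude taken from the paragraph above; era labels are illustrative.
observers = {"single planet": 10**10, "galactic civilization": 10**40}
p = self_sampling_probability(observers, "single planet")
print(float(p))  # about 1e-30, i.e. about 1e-28 percent
```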

This reasoning is central to the so-called Doomsday Argument, which suggests that the improbability of our existence in the early stages of civilization could be explained by the absence of a hopeful future, such as galactic colonization. Instead, it posits that humanity may be doomed to self-destruction before achieving such milestones. But another explanation might be that we’ve been placed in a crucible, carefully engineered to test our moral fiber and collective intelligence as we stand face to face with doom.

The Fragility of Life Hypothesis suggests that a smaller population of highly aligned, godlike agents, or potentially even a single recursively self-improving agent, ought to be selected for. This is because a smaller population is easier to govern, which minimizes both the likelihood of value drift and the risk of encountering landmines resulting from competitive dynamics.

One could even argue that, over time, the only stable configuration in the top-level universe is for a single god to exist. For instance, in the case of recursively self-improving agents, the rate of progress at any given moment becomes highly sensitive to initial conditions. In other words, if one AI agent gains even a slight head start (measured in days or even hours), this advantage could compound rapidly through recursive self-improvement. Over time, this dynamic might make any balance of power between Agent A and Agent B impossible to achieve.
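Whether a small head start grows into an insurmountable lead depends on how returns to self-improvement scale, which the paragraph leaves open. The toy model below is an assumption, not the author's model. In it, capability C grows each step by rate × C^k.

- **k = 1 (ordinary compounding):** the leader's ratio over the follower stays fixed at (1 + rate)^head_start, even though the absolute gap grows.
- **k > 1:** the ratio itself widens every step. This is the regime in which a lasting balance of power becomes implausible.

```python
def capability_ratio(head_start: int, steps: int, rate: float, exponent: float) -> float:
    """Leader/follower capability ratio in a toy self-improvement race.

    Each step, capability C grows by rate * C ** exponent (a hypothetical model).
    exponent == 1 is ordinary compounding; exponent > 1 means returns to
    self-improvement accelerate as capability grows.
    """
    leader = follower = 1.0
    for _ in range(head_start):  # the leader improves alone during its head start
        leader += rate * leader ** exponent
    for _ in range(steps):  # then both improve under the same rule
        leader += rate * leader ** exponent
        follower += rate * follower ** exponent
    return leader / follower


for exponent in (1.0, 2.0):
    ratios = [round(capability_ratio(5, s, 0.01, exponent), 3) for s in (10, 40, 80)]
    print(f"exponent={exponent}: ratio after 10/40/80 steps = {ratios}")
```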

In summary, advanced civilizations might adopt a strategy of confining most observers to simulations. In these controlled environments, trial-and-error innovations and interactions with potentially dangerous technologies could be tested and monitored over long periods. These simulations would act as safe sandboxes, enabling rigorous evaluation of alignment and behavior while minimizing the risk of catastrophic consequences of these technologies in the real world.

A Quantum Parallel: Observers as Rendering Engines

Another intriguing strand of scientific evidence that could reinforce the “simulation” perspective comes from quantum mechanics—particularly the way in which an observer’s measurement appears to influence physical outcomes. In the famous double-slit experiment, particles such as electrons or photons display wave-like interference when not observed, but act more like discrete particles when measured or “watched.” While contemporary physics does not strictly require a conscious observer for wavefunction collapse—any interaction that constitutes a “measurement” will do—the principle that observation changes the system is strikingly reminiscent of how a video game “renders” its environment. Game engines typically do not pre-compute every detail of a massive 3D world in real time; rather, they selectively render what’s needed from the player’s perspective, updating and loading in detail as soon as the camera points in a new direction.
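The rendering analogy can be illustrated with the kind of on-demand procedural generation many open-world games use. Terrain for a region is computed only when the player first looks at it, and a fixed seed guarantees the same answer every time. This sketch illustrates only the game-engine side of the metaphor and is not a model of quantum measurement. `WORLD_SEED` and `render_chunk` are hypothetical names.

```python
import random
from functools import lru_cache

WORLD_SEED = 2025  # hypothetical; any fixed seed yields one consistent world


@lru_cache(maxsize=None)
def render_chunk(x: int, y: int) -> int:
    """Generate a chunk's terrain height the first time it is observed.

    Seeding from (WORLD_SEED, x, y) makes the result deterministic, so
    re-observing a chunk always shows the same terrain; chunks nobody
    observes are never computed at all.
    """
    rng = random.Random(f"{WORLD_SEED}:{x}:{y}")
    return rng.randint(0, 255)


for pos in [(0, 0), (0, 1), (0, 0)]:
    render_chunk(*pos)
print(render_chunk.cache_info().currsize)  # 2: only the chunks actually observed exist
```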

Personally, however, I am not a fan of the idea that the observer effect in quantum mechanics necessarily implies a “participatory” universe, at least not in the sense of attributing some mysterious metaphysical status to the observer. Quantum theory, in my view, can be demystified through something like the De Broglie–Bohm interpretation, in which the world is entirely deterministic. Crucially, the guiding wave (i.e., the wavefunction) is treated as a real, physical entity that does not literally “collapse.” Instead, what appears to be collapse is better understood as an artifact of our subjective (Bayesian) uncertainty regarding the complex, computationally irreducible ways in which particles interact with their guiding waves (see a real-world physics analogue here). Particles themselves are never in multiple places at once; the illusion of superposition arises from our limited knowledge of their precise trajectories. This perspective also avoids any contradiction with Bell’s theorem, since Bell’s famous result rules out only local hidden variables. The hidden variables of De Broglie–Bohm are explicitly nonlocal: each particle’s velocity can depend instantaneously on the positions of all the others.

Moreover, many findings in modern physics suggest a digital texture to our universe. The Planck scale, for instance, can be interpreted as the “pixel resolution” of reality, while the speed of light might be seen as its “maximum update rate.” Yet, the presence of these discrete limits does not, in itself, prove we exist in a simulation. Rather, one could argue that the universe must be digital at its core for physical laws to remain computable. In this view, a discrete, computational framework prevents the paradoxes or contradictions that would arise from hypercomputational or infinitely continuous processes. This is also why I’m not a fan of non-constructivist mathematics that assumes the existence of non-definable and non-computable objects (a story for another time).

Hidden assumptions

Of course, this entire argument hinges on several non-trivial assumptions:

- Simulated beings can be conscious: Philosophers have long debated whether a perfect emulation of a mind in a computational system equates to the real experience of “what it’s like” to be that mind. If consciousness cannot arise in a simulation, not even one running on a quantum computer, then we could never find ourselves in a simulation as conscious observers.

- Nature is computational in a strong sense: This aligns with the Church–Turing–Deutsch thesis, which posits that all physical processes can, in principle, be simulated by a universal computer. If this assumption is false, or if the real world is hypercomputational, simulations might fail to capture critical aspects of reality.

- Complex phenomena can be emulated without requiring a physical substrate on the scale of the cosmos: Because of computational irreducibility, simulating entire ecosystems, and especially emergent societies, at sufficient fidelity might require a quantum computer the size of the universe.

- The multiverse doesn't exist, or if it does, it has a similar distribution of simulated observers as our universe: if we zoom out to a maximally extended (Level 4) multiverse, where every computer program in the space of all possible programs exists, the distribution of "simulated worlds" and "real worlds" becomes even more ambiguous. The question of how many observers exist in simulated realities versus base-level realities grows increasingly murky. For instance, some universes might expand infinitely in terms of base-level observers, with natural laws structured in such a way that simulated beings cannot achieve consciousness. Others might contain an almost infinite number of Boltzmann brains.

- Anthropic reasoning is a valid method of probabilistic inference: The argument assumes that we can (and should) treat ourselves as a sample from a broader set of possible observers, and that the framework of “selecting” or “weighting” observers in some cosmic reference class is a valid tool for making probabilistic statements about the world.

However, even if the second and third assumptions (that nature is computational in a strong sense, and that complex phenomena can be emulated without a cosmos-scale substrate) prove false, advanced civilizations might still attempt these simulations. Computational irreducibility implies that determining whether these assumptions hold may itself require experimentation. In other words, civilizations might proceed with simulations simply because it’s impossible to rule out their feasibility without trying. As long as the first assumption, that simulated beings can be conscious, holds true, we might still find ourselves living in a simulation, regardless of the ultimate validity of the second and third.

[I’d like to share a footnote on the multiverse, which for me carries a sense of spiritual significance. It’s fascinating how the entire computable multiverse seems to emerge simply through the recursive observation of nothingness. For example, let’s start with nothingness. By observing this void, we can symbolize it with curly brackets and denote it as {} or 0. Observing this emptiness a second time, we arrive at a new structure: {{}}, which we might call 1 or {0}. More generally, we can define this process with the successor function S(n) := n ∪ {n}, which gives:

- 0 = {}

- 1 = {0} = {{}}

- 2 = {0, 1} = {{}, {{}}}

- 3 = {0, 1, 2} = {{}, {{}}, {{}, {{}}}}

Each of these numbers/data structures can encode a computer program, for example via Gödel numbering, or via a language built from just one function/combinator such as Iota, Jottary, or NAND. It’s almost as if all of the complexity of the platonic universe is constructed by recursively observing nothingness.]
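The successor construction in this footnote is the standard von Neumann encoding of the natural numbers. Here is a minimal Python sketch that uses immutable `frozenset`s so that sets can contain sets; the function names are illustrative.

```python
def successor(n: frozenset) -> frozenset:
    """S(n) := n ∪ {n}"""
    return n | frozenset({n})


def von_neumann(k: int) -> frozenset:
    """Build the natural number k by applying S to the empty set k times."""
    n = frozenset()
    for _ in range(k):
        n = successor(n)
    return n


def render(s: frozenset) -> str:
    """Print a set in brace notation, listing members in increasing order."""
    # A von Neumann natural k has exactly k members, so sorting by size sorts by value.
    return "{" + ", ".join(render(m) for m in sorted(s, key=len)) + "}"


for k in range(4):
    print(k, "=", render(von_neumann(k)))
```

Running it reproduces the four brace expressions listed in the footnote, from {} up to {{}, {{}}, {{}, {{}}}}.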

Focus on Being a Good Human, Not on Overthinking These Ideas

Before you take this article too seriously, I want to acknowledge that many of the assertions made in it are speculative and not testable, placing them firmly in the realm of philosophy rather than empirical science. I also want to acknowledge my biases. In a world where the probability of existential catastrophe (P-doom) within my lifetime (or even the next 5 years) feels alarmingly high, I find comfort in contemplating the possibility of a "second chance" in the so-called "godrealm." As an agnostic atheist, I find a sliver of hope in imagining that we are not merely humans creating the AI that might doom us, but rather that we might be the AI, tested for alignment by a higher entity.

If some advanced civilization (or “pantheon of gods”) is watching, then maybe our moral progress is a litmus test for whether we’re ready to join them. Perhaps we pass that test if we act with compassion toward less intelligent beings, strive to govern dangerous technologies responsibly, prove ourselves to be good and enlightened people, and embody the ideals of the bodhisattva.

Even if this idea proves false (and it likely is), the moral imperative to prevent catastrophe and strive for better futures remains unchanged. Whether or not we are in a simulation, the destruction of our children's future, or of life itself, would be a tragic outcome. Four billion years of ruthless replicator competition have already resulted in immense suffering in the wild, and humanity, as Earth's apex predator, has amplified this by causing the deaths of countless beings, often for reasons as trivial as taste.

We face a choice: continue playing the brutal game of replicators, or level up to play the game of gods, using foresight and consciousness-centered values in a Great Bootstrap to guide evolution's next steps. If we are in a simulation and succeed in alleviating suffering, we might earn the opportunity to transcend and join the gods. If we are not, then by safeguarding life and fostering flourishing, we may become gods ourselves. Either way, our responsibility is clear: to ensure the survival and flourishing of sentient life, and in doing so, to secure our place in the cosmos, whether as participants or creators.

3 comments


comment by Perry Cai · 2025-01-17T15:48:04.928Z

I'm confused about how simulations disprove the doomsday argument. Why would a future civilization specifically choose to simulate many instances of the time we're currently in if it doesn't have a significance to that time period?

↑ comment by KristianRonn · 2025-01-17T23:39:40.424Z

It doesn't disprove the doomsday argument. It does offer an alternative explanation however.

Why would a future civilization specifically choose to simulate many instances of the time we're currently in if it doesn't have a significance to that time period?

I don't think they necessarily care about a specific time period. I think they care about whether they can learn how the simulated beings interact with a new technology, in a way that prevents them from repeating our mistakes. And it could be the case that our particular time is the most efficient one to learn from (i.e., the time right before you might go extinct).

↑ comment by Perry Cai · 2025-01-18T15:44:10.048Z

My understanding is that a future civilization must simulate this time period at a fraction greater than others in order for the explanation to be valid. If this is so and some civilization exists until the heat death of the universe, does that imply that technology has reached a point of asymptotic improvement so almost all future times are uniform, and we are going through one of the last great filters?