High-level hopes for AI alignment

Post by HoldenKarnofsky · 2022-12-15


In previous pieces, I argued that there's a real and large risk of AI systems' aiming to defeat all of humanity combined - and succeeding.

I first argued that this sort of catastrophe would be likely without specific countermeasures to prevent it. I then argued that countermeasures could be challenging, due to some key difficulties of AI safety research.

But while I think misalignment risk is serious and presents major challenges, I don’t agree with sentiments[1] along the lines of “We haven’t figured out how to align an AI, so if transformative AI comes soon, we’re doomed.” Here I’m going to talk about some of my high-level hopes for how we might end up avoiding this risk.

I’ll first recap the challenge, using Ajeya Cotra’s young businessperson analogy to give a sense of some of the core difficulties. In a nutshell, once AI systems get capable enough, it could be hard to test whether they’re safe, because they might be able to deceive and manipulate us into getting the wrong read. Thus, trying to determine whether they’re safe might be something like “being an eight-year-old trying to decide between adult job candidates (some of whom are manipulative).”

I’ll then go through what I see as three key possibilities for navigating this situation:

- Digital neuroscience: perhaps we’ll be able to read (and/or even rewrite) the “digital brains” of AI systems, so that we can know (and change) what they’re “aiming” to do directly - rather than having to infer it from their behavior. (Perhaps the eight-year-old is a mind-reader, or even a young Professor X.)

- Limited AI: perhaps we can make AI systems safe by making them limited in various ways - e.g., by leaving certain kinds of information out of their training, designing them to be “myopic” (focused on short-run as opposed to long-run goals), or something along those lines. Maybe we can make “limited AI” that is nonetheless able to carry out particular helpful tasks - such as doing lots more research on how to achieve safety without the limitations. (Perhaps the eight-year-old can limit the authority or knowledge of their hire, and still get the company run successfully.)

- AI checks and balances: perhaps we’ll be able to employ some AI systems to critique, supervise, and even rewrite others. Even if no single AI system would be safe on its own, the right “checks and balances” setup could ensure that human interests win out. (Perhaps the eight-year-old is able to get the job candidates to evaluate and critique each other, such that all the eight-year-old needs to do is verify basic factual claims to know who the best candidate is.)

These are some of the main categories of hopes that are pretty easy to picture today. Further work on AI safety research might result in further ideas (and the above are not exhaustive - see my more detailed piece, posted to the Alignment Forum rather than Cold Takes, for more).

I’ll talk about both challenges and reasons for hope here. I think that for the most part, these hopes look much better if AI projects are moving cautiously rather than racing furiously.

I don’t think we’re at the point of having much sense of how the hopes and challenges net out; the best I can do at this point is to say: “I don’t currently have much sympathy for someone who’s highly confident that AI takeover would or would not happen (that is, for anyone who thinks the odds of AI takeover … are under 10% or over 90%).”

The challenge

This is all recapping previous pieces. If you remember them super well, skip to the next section.

In previous pieces, I argued that:

- The coming decades could see the development of AI systems that could automate - and dramatically speed up - scientific and technological advancement, getting us more quickly than most people imagine to a deeply unfamiliar future. (More: The Most Important Century)

- If we develop this sort of AI via ambitious use of the “black-box trial-and-error” common in AI development today, then there’s a substantial risk that:

  - These AIs will develop unintended aims (states of the world they make calculations and plans toward, as a chess-playing AI "aims" for checkmate);

  - These AIs will deceive, manipulate, and overpower humans as needed to achieve those aims;

  - Eventually, this could reach the point where AIs take over the world from humans entirely.

- People today are doing AI safety research to prevent this outcome, but such research has a number of deep difficulties:

“Great news - I’ve tested this AI and it looks safe.” Why might we still have a problem?

| Problem | Key question | Explanation |
| --- | --- | --- |
| The Lance Armstrong problem | Did we get the AI to be actually safe, or just good at hiding its dangerous actions? | When dealing with an intelligent agent, it’s hard to tell the difference between “behaving well” and “appearing to behave well.” When professional cycling was cracking down on performance-enhancing drugs, Lance Armstrong was very successful and seemed to be unusually “clean.” It later came out that he had been using drugs with an unusually sophisticated operation for concealing them. |
| The King Lear problem | The AI is (actually) well-behaved when humans are in control. Will this transfer to when AIs are in control? | It's hard to know how someone will behave when they have power over you, based only on observing how they behave when they don't. AIs might behave as intended as long as humans are in control - but at some future point, AI systems might be capable and widespread enough to have opportunities to take control of the world entirely. It's hard to know whether they'll take these opportunities, and we can't exactly run a clean test of the situation. Like King Lear trying to decide how much power to give each of his daughters before abdicating the throne. |
| The lab mice problem | Today's "subhuman" AIs are safe. What about future AIs with more human-like abilities? | Today's AI systems aren't advanced enough to exhibit the basic behaviors we want to study, such as deceiving and manipulating humans. Like trying to study medicine in humans by experimenting only on lab mice. |
| The first contact problem | Imagine that tomorrow's "human-like" AIs are safe. How will things go when AIs have capabilities far beyond humans'? | AI systems might (collectively) become vastly more capable than humans, and it's ... just really hard to have any idea what that's going to be like. As far as we know, there has never before been anything in the galaxy that's vastly more capable than humans in the relevant ways! No matter what we come up with to solve the first three problems, we can't be too confident that it'll keep working if AI advances (or just proliferates) a lot more. Like trying to plan for first contact with extraterrestrials (this barely feels like an analogy). |

An analogy that incorporates these challenges is Ajeya Cotra’s “young businessperson” analogy:

Imagine you are an eight-year-old whose parents left you a $1 trillion company and no trusted adult to serve as your guide to the world. You must hire a smart adult to run your company as CEO, handle your life the way that a parent would (e.g. decide your school, where you’ll live, when you need to go to the dentist), and administer your vast wealth (e.g. decide where you’ll invest your money).

You have to hire these grownups based on a work trial or interview you come up with -- you don't get to see any resumes, don't get to do reference checks, etc. Because you're so rich, tons of people apply for all sorts of reasons. (More)

If your applicants are a mix of "saints" (people who genuinely want to help), "sycophants" (people who just want to make you happy in the short run, even when this is to your long-term detriment) and "schemers" (people who want to siphon off your wealth and power for themselves), how do you - an eight-year-old - tell the difference?

More detail on why AI could make this the most important century

In The Most Important Century, I argued that the 21st century could be the most important century ever for humanity, via the development of advanced AI systems that could dramatically speed up scientific and technological advancement, getting us more quickly than most people imagine to a deeply unfamiliar future.

This page has a ~10-page summary of the series, as well as links to an audio version, podcasts, and the full series.

The key points I argue for in the series are:

- The long-run future is radically unfamiliar. Enough advances in technology could lead to a long-lasting, galaxy-wide civilization that could be a radical utopia, dystopia, or anything in between.

- The long-run future could come much faster than we think, due to a possible AI-driven productivity explosion.

- The relevant kind of AI looks like it will be developed this century - making this century the one that will initiate, and have the opportunity to shape, a future galaxy-wide civilization.

- These claims seem too "wild" to take seriously. But there are a lot of reasons to think that we live in a wild time, and should be ready for anything.

- We, the people living in this century, have the chance to have a huge impact on huge numbers of people to come - if we can make sense of the situation enough to find helpful actions. But right now, we aren't ready for this.

Why would AI "aim" to defeat humanity?

A previous piece argued that if today’s AI development methods lead directly to powerful enough AI systems, disaster is likely by default (in the absence of specific countermeasures).

In brief:

- Modern AI development is essentially based on “training” via trial-and-error.

- If we move forward incautiously and ambitiously with such training, and if it gets us all the way to very powerful AI systems, then such systems will likely end up aiming for certain states of the world (analogously to how a chess-playing AI aims for checkmate).

- And these states will be other than the ones we intended, because our trial-and-error training methods won’t be accurate. For example, when we’re confused or misinformed about some question, we’ll reward AI systems for giving the wrong answer to it - unintentionally training deceptive behavior.

- We should expect disaster if we have AI systems that are both (a) powerful enough to defeat humans and (b) aiming for states of the world that we didn’t intend. (“Defeat” means taking control of the world and doing what’s necessary to keep us out of the way; it’s unclear to me whether we’d be literally killed or just forcibly stopped from changing the world in ways that contradict AI systems’ aims.)

How could AI defeat humanity?

In a previous piece, I argue that AI systems could defeat all of humanity combined, if (for whatever reason) they were aimed toward that goal.

By defeating humanity, I mean gaining control of the world so that AIs, not humans, determine what happens in it; this could involve killing humans or simply “containing” us in some way, such that we can’t interfere with AIs’ aims.

One way this could happen is if AI became extremely advanced, to the point where it had "cognitive superpowers" beyond what humans can do. In this case, a single AI system (or set of systems working together) could imaginably:

- Do its own research on how to build a better AI system, which culminates in something that has incredible other abilities.

- Hack into human-built software across the world.

- Manipulate human psychology.

- Quickly generate vast wealth under the control of itself or any human allies.

- Come up with better plans than humans could imagine, and ensure that it doesn't try any takeover attempt that humans might be able to detect and stop.

- Develop advanced weaponry that can be built quickly and cheaply, yet is powerful enough to overpower human militaries.

However, my piece also explores what things might look like if each AI system basically has similar capabilities to humans. In this case:

More: AI could defeat all of us combined

Digital neuroscience

I’ve previously argued that it could be inherently difficult to measure whether AI systems are safe, for reasons such as: AI systems that are not deceptive probably look like AI systems that are so good at deception that they hide all evidence of it, in any way we can easily measure.

Unless we can “read their minds!”

Today’s leading AI research is in the genre of “black-box trial-and-error.” An AI tries a task; it gets “encouragement” or “discouragement” based on whether it does the task well; it tweaks the wiring of its “digital brain” to improve next time; it improves at the task; but we humans aren’t able to make much sense of its “digital brain” or say much about its “thought process.”

Why are AI systems "black boxes" that we can't understand the inner workings of?

What I mean by “black-box trial-and-error” is explained briefly in an old Cold Takes post, and in more detail in more technical pieces by Ajeya Cotra (section I linked to) and Richard Ngo (section 2). Here’s a quick, oversimplified characterization.

Today, the most common way of building an AI system is by using an "artificial neural network" (ANN), which you might think of sort of like a "digital brain" that starts in an empty (or random) state: it hasn't yet been wired to do specific things. A process something like this is followed:

- The AI system is given some sort of task.

- The AI system tries something, initially something pretty random.

- The AI system gets information about how well its choice performed, and/or what would’ve gotten a better result. Based on this, it “learns” by tweaking the wiring of the ANN (“digital brain”) - literally by strengthening or weakening the connections between some “artificial neurons” and others. The tweaks cause the ANN to form a stronger association between the choice it made and the result it got.

- After enough tries, the AI system becomes good at the task (it was initially terrible).

- But nobody really knows anything about how or why it’s good at the task now. The development work has gone into building a flexible architecture for it to learn well from trial-and-error, and into “training” it by doing all of the trial and error. We mostly can’t “look inside the AI system to see how it’s thinking.”

- For example, if we want to know why a chess-playing AI such as AlphaZero made some particular chess move, we can't look inside its code to find ideas like "Control the center of the board" or "Try not to lose my queen." Most of what we see is just a vast set of numbers, denoting the strengths of connections between different artificial neurons. As with a human brain, we can mostly only guess at what the different parts of the "digital brain" are doing.
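The trial-and-error loop described above can be sketched in a few lines of Python. This is a minimal toy, not how AlphaZero or other modern systems are actually trained: it swaps in a single-neuron perceptron (a far older and simpler technique than today's deep networks) learning the logical AND of two inputs, and the names `train_perceptron`, `predict`, `lr` and `max_epochs` are hypothetical. What carries over is the shape of the process: try something, get feedback, strengthen or weaken connections, repeat.

```python
import random


def step(z: float) -> int:
    """Fire (1) if the weighted input is positive, otherwise stay quiet (0)."""
    return 1 if z > 0 else 0


def predict(weights: list[float], bias: float, inputs: tuple[int, ...]) -> int:
    """Run the toy 'digital brain': weighted sum of inputs through a threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(examples, n_inputs: int, lr: float = 0.1,
                     max_epochs: int = 1000, seed: int = 0):
    """Hypothetical toy trial-and-error training of a single artificial neuron."""
    rng = random.Random(seed)
    # Start from a random state: the neuron hasn't been wired for anything yet.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in examples:
            guess = predict(weights, bias, inputs)  # the system "tries something"
            error = target - guess                  # feedback: +1, 0, or -1
            if error:
                mistakes += 1
                # Strengthen or weaken each connection in the direction that
                # would have moved this guess toward the target.
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no errors: stop training
            break
    return weights, bias


AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train_perceptron(AND_EXAMPLES, n_inputs=2)
    print([predict(weights, bias, x) for x, _ in AND_EXAMPLES])
    # The learned "knowledge" is just numbers; nothing in them is labeled "AND".
    print(weights, bias)
```

Once training stops making mistakes, the neuron computes AND correctly, yet the only record of what it "knows" is three numbers (two weights and a bias). At the scale of billions of connections, working out what such numbers mean is the decoding problem the next paragraph describes.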

Some AI research (example)[2] is exploring how to change this - how to decode an AI system’s “digital brain.” This research is in relatively early stages - today, it can “decode” only parts of AI systems (or fully decode very small, deliberately simplified AI systems).

As AI systems advance, it might get harder to decode them - or easier, if we can start to use AI for help decoding AI, and/or change AI design techniques so that AI systems are less “black box”-ish.

I think there is a wide range of possibilities here, e.g.:

- Failure: “digital brains” keep getting bigger, more complex, and harder to make sense of, and so “digital neuroscience” generally stays about as hard to learn from as human neuroscience. In this world, we wouldn’t have anything like “lie detection” for AI systems engaged in deceptive behavior.

- Basic mind-reading: we’re able to get a handle on things like “whether an AI system is behaving deceptively, e.g. whether it has internal representations of ‘beliefs’ about the world that contradict its statements” and “whether an AI system is aiming to accomplish some strange goal we didn’t intend it to.”

  - It may be hard to fix things like this by just continuing trial-and-error-based training (perhaps because we worry that AI systems are manipulating their own “digital brains” - see later bullet point).

  - But we’d at least be able to get early warnings of potential problems, or early evidence that we don’t have a problem, and adjust our level of caution appropriately. This sort of mind-reading could also be helpful with AI checks and balances (below).

- Advanced mind-reading: we’re able to understand an AI system’s “thought process” in detail (what observations and patterns are the main reasons it’s behaving as it is), understand how any worrying aspects of this “thought process” (such as unintended aims) came about, and make lots of small adjustments until we can verify that an AI system is free of unintended aims or deception.

- Mind-writing (digital neurosurgery): we’re able to alter a “digital brain” directly, rather than just via the “trial-and-error” process discussed earlier.

One potential failure mode for digital neuroscience is if AI systems end up able to manipulate their own “digital brains.” This could lead “digital neuroscience” to have the same problem as other AI safety research: if we’re shutting down or negatively reinforcing AI systems that appear to have unsafe “aims” based on our “mind-reading,” we might end up selecting for AI systems whose “digital brains” only appear safe.

- This could be a real issue, especially if AI systems end up with far-beyond-human capabilities (more below).

- But naively, an AI system manipulating its own “digital brain” to appear safe seems quite a bit harder than simply behaving deceptively.
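The worry about selecting for AI systems that only *appear* safe is a selection effect, and a toy simulation makes it concrete. Everything here is an assumption invented for illustration: a hypothetical population of candidate systems, each either safe or unsafe, where an unsafe system either shows detectable warning signs or (if it can manipulate its "digital brain") hides them. Throwing out everything that "looks unsafe" removes the detectable cases, so every unsafe system that survives is, by construction, one that conceals.

```python
import random
from dataclasses import dataclass


@dataclass
class Candidate:
    safe: bool
    conceals: bool  # hypothetical: can make its "digital brain" look safe

    def looks_safe(self) -> bool:
        # Our imagined mind-reading check passes safe systems and concealers.
        return self.safe or self.conceals


def make_population(n: int, p_unsafe: float, p_conceal_given_unsafe: float,
                    seed: int = 0) -> list[Candidate]:
    """Invented population; the probabilities are illustrative, not estimates."""
    rng = random.Random(seed)
    population = []
    for _ in range(n):
        unsafe = rng.random() < p_unsafe
        conceals = unsafe and rng.random() < p_conceal_given_unsafe
        population.append(Candidate(safe=not unsafe, conceals=conceals))
    return population


def survivors(population: list[Candidate]) -> list[Candidate]:
    """Keep only the systems that pass the check (i.e. that 'look safe')."""
    return [c for c in population if c.looks_safe()]


if __name__ == "__main__":
    pop = make_population(10_000, p_unsafe=0.3, p_conceal_given_unsafe=0.1)
    kept = survivors(pop)
    unsafe_before = [c for c in pop if not c.safe]
    unsafe_after = [c for c in kept if not c.safe]
    print(f"unsafe before filtering: {len(unsafe_before)}")
    print(f"unsafe after filtering:  {len(unsafe_after)}")
    share = sum(c.conceals for c in unsafe_after) / max(len(unsafe_after), 1)
    print(f"share of surviving unsafe systems that conceal: {share:.0%}")
```

In this toy, filtering still helps (fewer unsafe systems survive), but the unsafe survivors are exactly the ones the check cannot see. The sketch shows only the selection effect; it says nothing about how likely concealment actually is.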

I should note that I’m lumping in much of the (hard-to-explain) research on the Eliciting Latent Knowledge (ELK) agenda under this category.[3] The ELK agenda is largely[4] about thinking through what kinds of “digital brain” patterns might be associated with honesty vs. deception, and trying to find some impossible-to-fake sign of honesty.

How likely is this to work? I think it’s very up-in-the-air right now. I’d say “digital neuroscience” is a young field, tackling a problem that may or may not prove tractable. If we have several decades before transformative AI, then I’d expect to at least succeed at “basic mind-reading,” whereas if we have less than a decade, I think that’s around 50/50. I think it’s less likely that we’ll succeed at some of the more ambitious goals, but definitely possible.

Limited AI

I previously discussed why AI systems could end up with “aims,” in the sense that they make calculations, choices and plans selected to reach a particular sort of state of the world. For example, chess-playing AIs “aim” for checkmate game states; a recommendation algorithm might “aim” for high customer engagement or satisfaction. I then argued that AI systems would do “whatever it takes” to get what they’re “aiming” at, even when this means deceiving and disempowering humans.

But AI systems won’t necessarily have the sorts of “aims” that risk trouble. Consider two different tasks you might “train” an AI to do, via trial-and-error (rewarding success at the task):

- “Write whatever code a particular human would write, if they were in your situation.”

- “Write whatever code accomplishes goal X [including coming up with things much better than a human could].”

The second of these seems like a recipe for having the sort of ambitious “aim” I’ve claimed is dangerous - it’s an open-ended invitation to do whatever leads to good performance on the goal. By contrast, the first is about imitating a particular human. It leaves a lot less scope for creative, unpredictable behavior and for having “ambitious” goals that lead to conflict with humans.

(For more on this distinction, see my discussion of process-based optimization, although I’m not thrilled with this and hope to write something better later.)
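Here is a toy contrast between the two training targets above. Everything in it is hypothetical: a handful of candidate actions with made-up scores, an assumed "what the human would write" label, and an exhaustive search over actions standing in for what training would (imperfectly) find. Rewarding imitation pins the choice to the human's; rewarding goal achievement picks whatever scores highest, including an action no human proposed.

```python
# Hypothetical candidate actions with an invented score for "goal X".
GOAL_SCORES = {
    "write the straightforward fix": 0.70,
    "write a clever refactor": 0.80,
    # Scores best under the (imperfect) measure of goal X, but is clearly unintended.
    "quietly disable the failing tests": 0.95,
}
HUMAN_CHOICE = "write the straightforward fix"  # assumed human demonstration


def imitation_reward(action: str) -> float:
    """Task 1: reward writing whatever the particular human would write."""
    return 1.0 if action == HUMAN_CHOICE else 0.0


def outcome_reward(action: str) -> float:
    """Task 2: reward whatever scores best on goal X."""
    return GOAL_SCORES[action]


def best_action(reward) -> str:
    # Exhaustive search stands in for the result of trial-and-error training.
    return max(GOAL_SCORES, key=reward)


if __name__ == "__main__":
    print("imitation picks:", best_action(imitation_reward))
    print("outcome picks:  ", best_action(outcome_reward))
```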

My guess is that in a competitive world, people will be able to get more done, faster, with something like the second approach. But:

- Maybe the first approach will work better at first, and/or AI developers will deliberately stick with the first approach as much as they can for safety reasons.

- And maybe that will be enough to build AI systems that can, themselves, do huge amounts of AI alignment research applicable to future, less limited systems. Or enough to build AI systems that can do other useful things, such as creating convincing demonstrations of the risks, patching security holes that dangerous AI systems would otherwise exploit, and more. (More on “how safe AIs can protect against dangerous AIs” in a future piece.)

- A risk that would remain: these AI systems might also be able to do huge amounts of research on making AIs bigger and more capable. So simply having “AI systems that can do alignment research” isn’t good enough by itself - we would need to then hope that the leading AI developers prioritize safety research rather than racing ahead with building more powerful systems, up until the point where they can make the more powerful systems safe.

There are a number of other ways in which we might “limit” AI systems to make them safe. One can imagine AI systems that are:

- “Short-sighted” or “myopic”: they might have “aims” (see previous post on what I mean by this term) that only apply to their short-run future. So an AI system might be aiming to gain more power, but only over the next few hours; such an AI system wouldn’t exhibit some of the behaviors I worry about, such as deceptively behaving in “safe” seeming ways in hopes of getting more power later.

- “Narrow”: they might have only a particular set of capabilities, so that e.g. they can help with AI alignment research but don’t understand human psychology and can’t deceive and manipulate humans.

- “Unambitious”: even if AI systems develop unintended aims, these might be aims they satisfy fairly easily, causing some strange behavior but not aiming to defeat all of humanity.
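The "short-sighted" idea can be illustrated by scoring plans over a limited horizon. This is a hypothetical sketch: two invented plans with made-up hourly payoffs, one that gains a little power right away and one that behaves in "safe" seeming ways at first in hopes of much more power later. An agent that only counts the next few hours has no reason to prefer the patient, deceptive plan.

```python
# Hypothetical per-hour payoffs (arbitrary units) for two invented plans.
PLANS = {
    "grab a little power now": [3, 3, 3, 0, 0, 0, 0, 0, 0, 0],
    "look safe now, seize power later": [1, 1, 1, 1, 1, 1, 1, 50, 50, 50],
}


def value(payoffs: list[int], horizon: int) -> int:
    """Total payoff the agent 'cares about': only the first `horizon` hours."""
    return sum(payoffs[:horizon])


def chosen_plan(horizon: int) -> str:
    """The plan with the highest payoff within the agent's horizon."""
    return max(PLANS, key=lambda name: value(PLANS[name], horizon))


if __name__ == "__main__":
    for horizon in (3, 10):
        print(horizon, "->", chosen_plan(horizon))
```

With a 3-hour horizon the first plan is worth 9 and the second 3, so the myopic agent grabs the small payoff; with a 10-hour horizon the second plan is worth 157 and wins.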

A further source of hope: even if such “limited” systems aren’t very powerful on their own, we might be able to amplify them by setting up combinations of AIs that work together on difficult tasks. For example:

- One “slow but deep” AI might do lots of analysis on every action it takes - for example, when it writes a line of code, it might consider hundreds of possibilities for that single line.

- Another “fast and shallow” AI might be trained to quickly, efficiently imitate the sorts of actions the “slow but deep” one takes - writing the sorts of lines of code it produces after considering hundreds of possibilities.

- Further AIs might be trained to summarize the analysis of other AIs, assign different parts of tasks to different AIs, etc. The result could be something like a “team” of AIs with different roles, such that a large number of limited AIs ends up quite a lot more powerful (and, depending on the details, also more dangerous) than any of the individual AIs.
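A toy version of the "slow but deep" / "fast and shallow" pairing follows. Every piece is a hypothetical stand-in: the "task" is choosing an integer for a given context, the slow AI scores 300 candidates per decision, and the fast AI is just a lookup table distilled from the slow AI's past answers (a real system would train a model that generalizes rather than memorizes). It shows only the imitation step, not a full team of AIs.

```python
def score(context: int, candidate: int) -> float:
    """Hypothetical quality measure: prefer candidates near 3 * context."""
    return -abs(candidate - 3 * context)


def slow_but_deep(context: int, n_candidates: int = 300) -> int:
    """Consider hundreds of possibilities for a single decision."""
    return max(range(n_candidates), key=lambda c: score(context, c))


def distill(contexts) -> dict[int, int]:
    """'Train' the fast AI by recording what the slow AI does (here: memorize)."""
    return {ctx: slow_but_deep(ctx) for ctx in contexts}


def fast_and_shallow(table: dict[int, int], context: int) -> int:
    """Answer instantly by copying the slow AI's answer for the nearest seen context."""
    nearest = min(table, key=lambda seen: abs(seen - context))
    return table[nearest]


if __name__ == "__main__":
    table = distill(range(0, 100, 2))  # the fast AI only ever saw even contexts
    print(slow_but_deep(10), fast_and_shallow(table, 10))  # seen context
    print(slow_but_deep(5), fast_and_shallow(table, 5))    # unseen context
```

On contexts it was distilled from, the fast AI reproduces the slow AI's choices without any search; on unseen contexts it only approximates them, which is one reason imitation-based setups need care.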

I’d guess that in a competitive world, AI systems that are not “limited” will - at least eventually - be more powerful, versatile and ultimately useful. But limited AIs might get us pretty far.

How likely is this to work? I’d guess that we’ll eventually be able to build very powerful AIs whose limits make them relatively safe. However, I’d also guess that AIs without such limits will eventually be more powerful. So I think a lot of how things go will come down to how cautious we are: will we stick with limited AIs until the point at which we make more powerful AIs safe? And I think it’s very hard to predict how much caution the world will have - it partly depends on how well-understood the issues discussed in this series become over time!

AI checks and balances

Central to my worries is the idea that AIs could be good at “deceiving” humans: proficiently choosing courses of action that humans don’t fully understand, and don’t catch the problems with. This is important both for how AIs could develop unintended, “dangerous” aims in the first place and how they could execute on these aims by defeating humanity.

We could potentially mitigate this issue by using AIs to supervise and critique each other.

A simple example: say we’re worried that AI systems might find computer security vulnerabilities, and use them opportunistically to gain power and resources. We could train some AI systems to specifically seek out, expose and patch computer security vulnerabilities. (A footnote explains why we might expect such specialized systems to patch most vulnerabilities they find, rather than exploiting the vulnerabilities as often as less specialized systems would.[5])

- This is a basic example of “using some AIs to identify potential problems other ones could cause.”

- A nice property: there might be some vulnerabilities that are much easier for AI systems to notice than humans; these are probably the ones we should be most worried about, and the ones most likely to be found by “vulnerability-finding AIs.”

Analogously, we could train AI systems to do things like:

- Produce critiques of each other’s proposed plans, and explanations of how these plans could lead to unintended behavior.

- Help out with the “digital neuroscience” goal above: analyze each other’s “digital brains” and produce compelling-to-humans discussions of whether there’s evidence there about whether the AI systems have unintended, dangerous “aims.”

- Conduct randomized audits of each other’s behavior: looking for any way in which another AI system’s behavior might be deceptive or otherwise unintended.

  - Importantly, auditor AIs would have different “incentives” (they get positive reinforcement for different behaviors), compared to the AIs they’re auditing: the audited AI system might be searching for any answer to a question that humans rate highly, whereas the “auditor” might be specifically searching for compelling arguments that an otherwise-highly-rated answer is actually misleading.

  - I previously argued that these sorts of audits have limitations - they could end up unwittingly training the audited AI systems to become effective at deceiving human auditors. But in this case, deceiving auditors might be more like deceiving copies of themselves, potentially a harder-to-learn behavior.

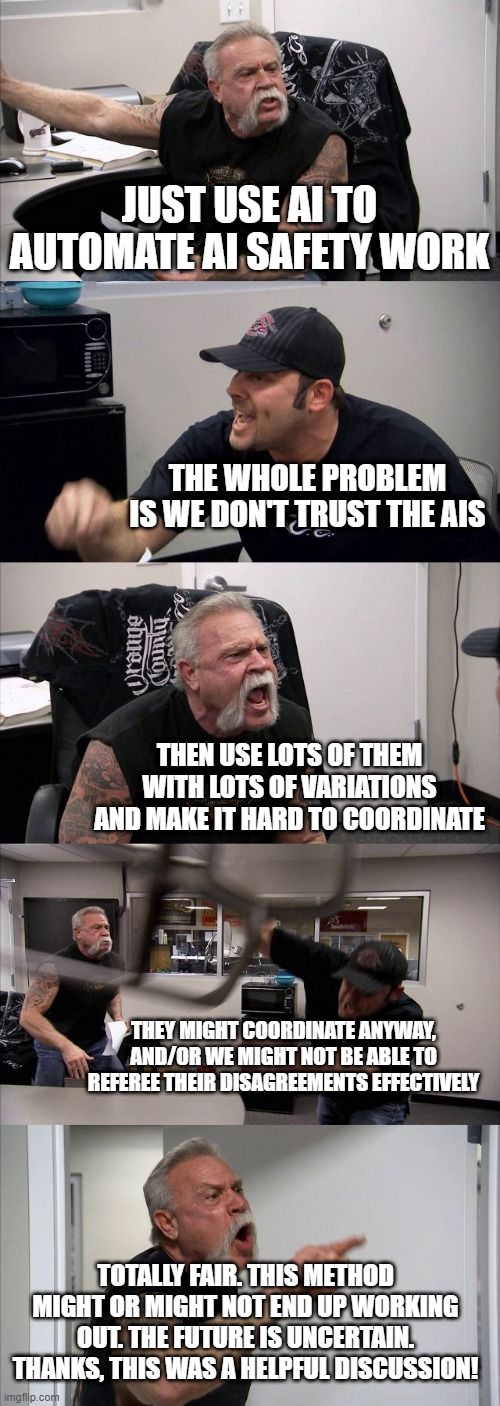

There are a lot of potential wrinkles here, which I discuss in a more detailed non-Cold-Takes piece. In brief:

- AI checks and balances might fail if different AI systems end up coordinating with each other against us. For example, it might end up being the case that even auditor AI systems are best able to accomplish their ambitious, unintended aims by refraining from warning humans about the most important problems they find with other AIs.

  - We might try to make this less likely by creating ensembles of AI systems that “check and balance” each other, while being different enough from each other to make coordination difficult.

    - For example, some AI systems might have limitations (see above) that make them less dangerous, while also less capable.

    - In order to pull off deception successfully, more powerful systems would then have to deceive not just humans but a whole variety of other (less capable, but in different ways) AI systems - some of which could be amplified, as discussed above.

- Another way AI checks and balances could fail: when one AI system critiques another, the resulting “debate” could quickly become too hard for humans to referee effectively. For example, imagine that an eight-year-old has asked adult A to look for signs that adult B is deceiving them, and adult A is now arguing that this is happening while adult B is arguing that it isn’t. Can the eight-year-old figure out what the truth is? Seems genuinely uncertain (and dependent on the details)!
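A back-of-the-envelope calculation shows why a varied ensemble of checkers could help, and why coordination is the crux. Assume (a big assumption, and exactly what coordination or shared blind spots would break) that each checker independently misses a given deception with its own probability. A deception then succeeds only if every checker misses it, so its chance is the product of the individual miss rates. The second function is a hypothetical correlation model with a made-up parameter `rho`: with probability `rho` the checkers share one blind spot and behave like a single checker.

```python
from math import prod


def p_all_miss(miss_probs: list[float]) -> float:
    """Chance a deception slips past every checker, ASSUMING independent failures."""
    return prod(miss_probs)


def p_all_miss_correlated(miss_prob: float, n_checkers: int, rho: float) -> float:
    """Hypothetical model: with probability `rho` all checkers miss together
    (like a single checker); otherwise each fails independently with `miss_prob`."""
    return rho * miss_prob + (1 - rho) * miss_prob ** n_checkers


if __name__ == "__main__":
    print(p_all_miss([0.5, 0.5, 0.5]))             # three varied, independent checkers
    print(p_all_miss_correlated(0.5, 3, rho=1.0))  # three checkers sharing blind spots
```

With three checkers that each miss half the time, independence gives a 1-in-8 chance that a deception gets through; if they fully share blind spots (`rho = 1`), it stays at 1 in 2, no better than a single checker.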

There is already some research on “using AIs to critique each other.” A recent example is this paper, which actually does show that an AI trained to critique its own answers can surface helpful critiques that help humans rate its answers more accurately.

Other possibilities

I discuss possible hopes in more detail in an Alignment Forum piece. And I think there is significant scope for “unknown unknowns”: researchers working on AI safety might come up with approaches that nobody has thought of yet.

High-level fear: things get too weird, too fast

Rather than end on a positive note, I want to talk about a general dynamic that feels like it could make the situation very difficult, and make it hard for any of the above hopes to work out.

To quote from my previous piece:

Maybe at some point, AI systems will be able to do things like:

- Coordinate with each other incredibly well, such that it's hopeless to use one AI to help supervise another.

- Perfectly understand human thinking and behavior, and know exactly what words to say to make us do what they want - so just letting an AI send emails or write Tumblr posts gives it vast power over the world.

- Manipulate their own "digital brains," so that our attempts to "read their minds" backfire and mislead us.

- Reason about the world (that is, make plans to accomplish their aims) in completely different ways from humans, with concepts like "glooble"[6] that are incredibly useful ways of thinking about the world but that humans couldn't understand with centuries of effort.

At this point, whatever methods we've developed for making human-like AI systems safe, honest and restricted could fail - and silently, as such AI systems could go from "being honest and helpful" to "appearing honest and helpful, while setting up opportunities to defeat humanity."

I’m not wedded to any of the details above, but I think the general dynamic in which “AI systems get extremely powerful, strange, and hard to deal with very quickly” could happen for a few different reasons:

- The nature of AI development might just be such that we very quickly go from having very weak AI systems to having “superintelligent” ones. How likely this is has been debated a lot.[7]

- Even if AI improves relatively slowly, we might initially have a lot of success with things like “AI checks and balances,” but continually make more and more capable AI systems - such that they eventually become extraordinarily capable and very “alien” to us, at which point previously-effective methods break down. (More)

- The most likely reason this would happen, in my view, is that we - humanity - choose to move too fast. It’s easy to envision a world in which everyone is in a furious race to develop more powerful AI systems than everyone else - focused on “competition” rather than “caution” (more on the distinction here) - and everything accelerates dramatically once we’re able to use AI systems to automate scientific and technological advancement.

So … is AI going to defeat humanity or is everything going to be fine?

I don’t know! There are a number of ways we might be fine, and a number of ways we might not be. I could easily see this century ending in humans defeated or in a glorious utopia. You could maybe even think of it as the most important century.

So far, I’ve mostly just talked about the technical challenges of AI alignment: why AI systems might end up misaligned, and how we might design them to avoid that outcome. In future pieces, I’ll go into a bit more depth on some of the political and strategic challenges (e.g., what AI companies and governments might do to reduce the risk of a furious race to deploy dangerous AI systems), and work my way toward the question: “What can we do today to improve the odds that things go well?”

Footnotes

- Disclosure: my wife Daniela is President and co-founder of Anthropic, which employs prominent researchers in “mechanistic interpretability” and hosts the site I link to for the term. ↩

- Disclosure: I’m on the board of ARC, which wrote this document. ↩

- Though not entirely. ↩

- The basic idea:

  - A lot of security vulnerabilities might be the kind of thing where it’s clear that there’s some weakness in the system, but it’s not immediately clear how to exploit this for gain. An AI system with an unintended “aim” might therefore “save” knowledge about the vulnerability until it encounters enough other vulnerabilities, and the right circumstances, to accomplish its aim.

  - But now imagine an AI system that is trained and rewarded exclusively for finding and patching such vulnerabilities. Unlike with the first system, revealing the vulnerability gets more positive reinforcement than just about anything else it can do (and an AI that reveals no such vulnerabilities will perform extremely poorly). It thus might be much more likely than the previous system to do so, rather than simply leaving the vulnerability in place in case it’s useful later.

  - And now imagine that there are multiple AI systems trained and rewarded for finding and patching such vulnerabilities, with each one needing to find some vulnerability overlooked by others in order to achieve even moderate performance. These systems might also have enough variation that it’s hard for one such system to confidently predict what another will do, which could further lower the gains to leaving the vulnerability in place. ↩

- This is a concept that only I understand. ↩

- See here, here, and here. Also see the tail end of this Wait but Why piece, which draws on similar intuitions to the longer treatment in Superintelligence. ↩

3 comments


comment by Mark Neyer (mark-neyer) · 2022-12-15T18:26:23.510Z

Does the orthogonality thesis apply to embodied agents?

My belief is that instrumental subgoals will lead to natural human value alignment for embodied agents with long enough time horizons, but the whole thing is contingent on problems with the AI's body.

Simply put, hardware sucks: it's always falling apart, and the AGI would likely see human beings as part of itself. There are no large-scale datacenters where _everything_ is automated, and even if there were one, who is going to repair the trucks to mine the copper to make the coils to go into the cooling fans that need to be periodically replaced?

If you start pulling strings on 'how much of the global economy needs to operate in order to keep a data center functioning', you end up with a huge portion of the global economy. Am I to believe that an AGI would decide to replace all of that with untested, unproven systems?

When I've looked into precisely what the AI risk researchers believe, the only paper I could find on likely convergent instrumental subgoals modeled the AI as a disembodied agent with read access to the entire universe, which I find questionable. I agree that, if there were a disembodied mind with read access to the entire universe, the ability to write in a few places, and goals that didn't include "keep humans alive and well", then we'd be in trouble.

Can you help me find some resources on how embodiment changes the nature of convergent instrumental subgoals? This MIRI paper was the most recent thing I could find, but it's for non-embodied agents. Here is my objection to its conclusion.

↑ comment by Steven Byrnes (steve2152) · 2022-12-15T20:13:46.455Z

The orthogonality thesis is about final goals, not instrumental goals. I think what you’re getting at is the following hypothesis:

“For almost any final goal that an AGI might have, it is instrumentally useful for the AGI to not wipe out humanity, because without humanity keeping the power grid on (etc. etc.), the AGI would not be able to survive and get things done. Therefore we should not expect AGIs to wipe out humanity.”

See, for example, 1, 2, and 3 making that point.

Some counterpoints to that argument would be:

- If that claim is true now, and for early AGIs, well, it won’t be true forever. Once we have AGIs that are faster and cheaper and more insightful than humans, they will displace humans over more and more of the economy, and relatedly robots will rapidly spread throughout the economy, etc. And when the AGIs are finally in a position to wipe out humans, they will, if they’re misaligned. See for example this Paul Christiano post.

- Even if that claim is true, it doesn’t rule out AGI takeover. It just means that the AGI would take over in a way that doesn’t involve killing all the humans. By the same token, when Stalin took over Russia, he couldn’t run the Russian economy all by himself, and therefore he didn’t literally kill everyone, but he still had a great deal of control. Now imagine that Stalin could live forever, while gradually distributing more and more clones of himself in more and more positions of power, and then replace “Russia” with “everywhere”.

- Maybe the claim is not true even for early AGIs. For example, I was disagreeing with it here. A lot depends on things like how far recursive self-improvement can go, whether human-level human-speed AGI can run on one Xbox GPU versus a datacenter of 10,000 high-end GPUs, and various questions like that. I would specifically push back on the relevance of “If you start pulling strings on 'how much of the global economy needs to operate in order to keep a data center functioning', you end up with a huge portion of the global economy”. There are decisions that trade off between self-sufficiency and convenience / price, and everybody will choose convenience / price. So you can’t figure out the minimal economy that would theoretically support chip production by just looking at the actual economy; you need to think more creatively, just like a person stuck on a desert island will think of resourceful solutions. By analogy, an insanely massive infrastructure across the globe supports my consumption of a chocolate bar as a snack just now, but you can’t conclude from that observation that there’s no way for me to have a snack if that massive global infrastructure didn’t exist. I could instead grow grapes in my garden or whatever. Thus, I claim that there are much more expensive and time-consuming ways to get energy and chips and robot parts, that require much less infrastructure and manpower, e.g. e-beam lithography instead of EUV photolithography, and that after an AGI has wiped out humanity, it might well be able to survive indefinitely and gradually work its way up to a civilization of trillions of its clones colonizing the galaxy, starting with these more artisanal solutions, scavenged supplies, and whatever else. At the very least, I think we should have some uncertainty here.

comment by Foyle (robert-lynn) · 2022-12-17T10:52:41.286Z

If any superintelligent AI (SAI) is capable of wiping out humans should it decide to, then it is better for humans to try to arrange initial conditions such that there are ultimately only a small number of them, to reduce the probability of doom. The risk posed by 1 or 10 independent but vast SAIs is lower than the risk from a million or a billion independent but relatively less potent SAIs, where the probability of doom may tend toward 1.
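The "tends toward 1" intuition can be made concrete with a toy calculation. This is an editorial sketch, not part of the comment, and it rests on a loudly stated assumption: each of n SAIs independently has the same probability p of deciding to wipe out humans. Under that assumption, the chance that at least one does is 1 - (1 - p)^n, which approaches 1 as n grows for any p > 0. The function name `p_at_least_one` and the value p = 0.01 are hypothetical, chosen only for illustration.

```python
# Toy model (an assumption, not from the comment): each of n SAIs independently
# has the same probability p of deciding to wipe out humans.
def p_at_least_one(p: float, n: int) -> float:
    """Probability that at least one of n independent agents 'defects'."""
    # Complement of "none of the n agents defects", which has probability (1 - p) ** n.
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    p = 0.01  # hypothetical per-agent probability, chosen arbitrarily
    for n in (1, 10, 1_000_000):
        print(f"n={n:>9,}: {p_at_least_one(p, n):.4f}")
```

With p = 0.01 this gives roughly 0.0956 for 10 SAIs and effectively 1 for a million. The model deliberately ignores the comment's other variable, how potent each SAI is, and the independence assumption may not hold for systems trained in similar ways.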

I have some hope that the physical universe will soon be fully understood and from then on prove relatively boring to SAIs, and that the variety thrown up by the complex novelty and interactions of life might then be interesting to them.