Contra EY: Can AGI destroy us without trial & error?

post by nsokolsky (nikita-sokolsky) · 2022-06-13T18:26:09.460Z · LW · GW · 72 comments


NB: I've never published on LW before and apologize if my writing skills are not in line with LW's usual style. This post is an edited copy of the same article in my blog.

EY published an article last week titled “AGI Ruin: A List of Lethalities [LW · GW]”, which explains in detail why you can’t train an AGI that won’t try to kill you at the first chance it gets, as well as why this AGI will eventually appear given humanity’s current trajectory in computer science. EY doesn’t explicitly state a timeline over which AGI is supposed to destroy humanity, but it’s implied that this will happen rapidly and humanity won’t have enough time to stop it. EY doesn’t find the question of how exactly AGI will destroy humanity too interesting and explains it as follows:

My lower-bound model of "how a sufficiently powerful intelligence would kill everyone, if it didn't want to not do that" is that it gets access to the Internet, emails some DNA sequences to any of the many many online firms that will take a DNA sequence in the email and ship you back proteins, and bribes/persuades some human who has no idea they're dealing with an AGI to mix proteins in a beaker, which then form a first-stage nanofactory which can build the actual nanomachinery. (Back when I was first deploying this visualization, the wise-sounding critics said "Ah, but how do you know even a superintelligence could solve the protein folding problem, if it didn't already have planet-sized supercomputers?" but one hears less of this after the advent of AlphaFold 2, for some odd reason.) The nanomachinery builds diamondoid bacteria, that replicate with solar power and atmospheric CHON, maybe aggregate into some miniature rockets or jets so they can ride the jetstream to spread across the Earth's atmosphere, get into human bloodstreams and hide, strike on a timer. Losing a conflict with a high-powered cognitive system looks at least as deadly as "everybody on the face of the Earth suddenly falls over dead within the same second".

Let’s break down EY’s proposed plan for “Skynet” into the requisite engineering steps:

- Design a set of proteins that can form the basis of a “nanofactory”

- Adapt the protein design to the available protein printers that accept somewhat-anonymous orders over the Internet

- Design “diamondoid bacteria” that can kill all of humanity and that can be successfully built by the “nanofactory”. The bacteria must be self-replicating and able to extract power from solar energy for self-sustenance.

- Execute the evil plan by sending out the blueprints to unsuspecting protein printing corporations and rapidly taking over the world afterwards

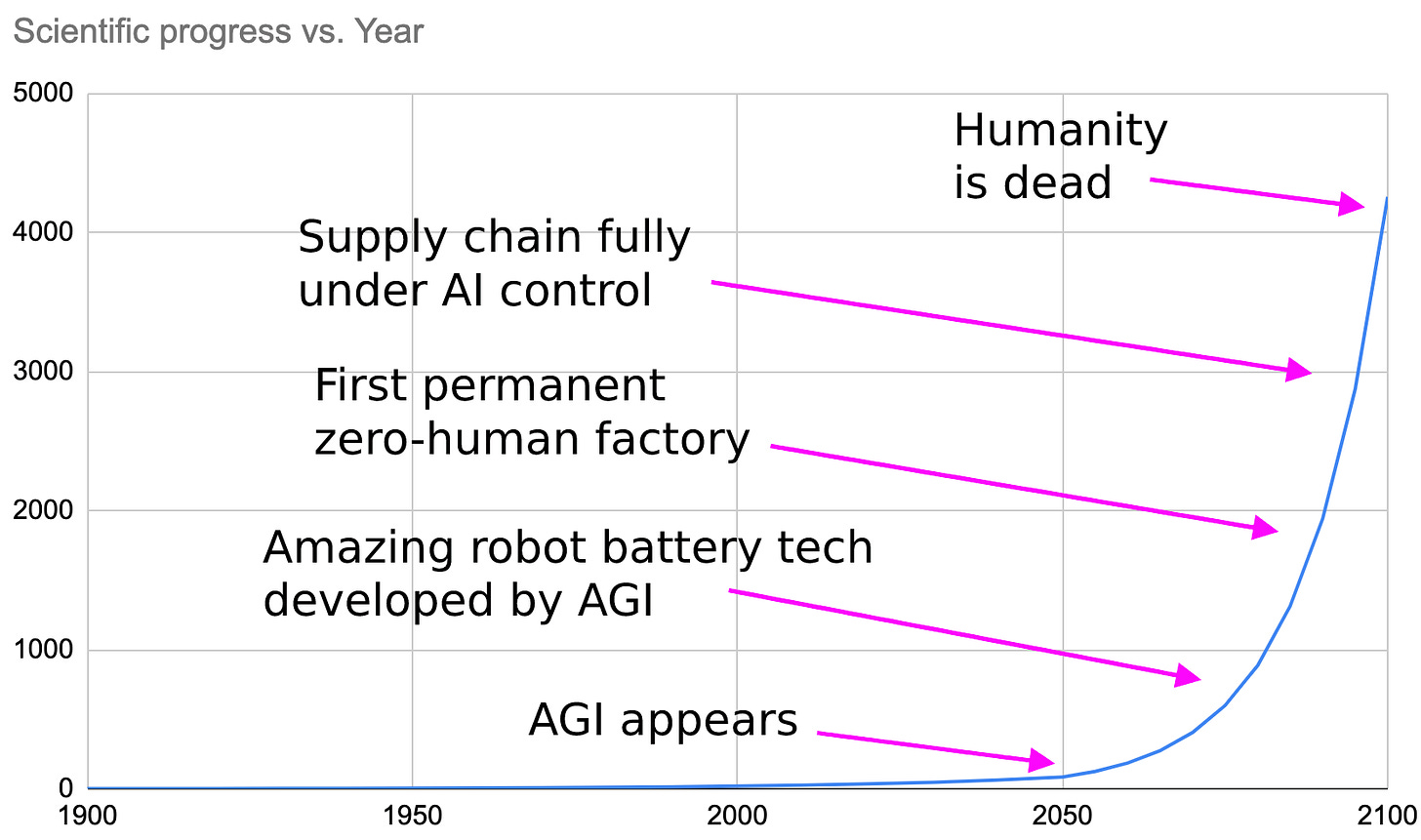

The plan above looks great for a fiction book and EY is indeed a great fiction writer in addition to his Alignment work, but there’s one unstated assumption: the AGI will not only be able to design everything using whatever human data it has available, but it will also execute the evil plan without needing lots of trial and error like mortal human inventors do. And surprisingly this part of EY’s argument gets little objection. A visual representation of my understanding of EY’s mental model of AGI vs. Progress is as follows:

How fast can humans develop novel technologies?

Humans are the only known AGI that we have available for reference, so we could look at the fastest known examples of novel engineering to see how fast an AGI might develop something spectacular and human-destroying. Patrick Collison of Stripe keeps a helpful page titled “Fast” with notable “examples of people quickly accomplishing ambitious things together”. The engineering entries include:

- P-80 Shooting Star, a World War II aircraft designed and built in 143 days by Lockheed (now Lockheed Martin).

- Spirit of St. Louis, another airplane designed and built in just 60 days.

- USS Nautilus. The world’s first nuclear submarine was launched in 1173 days or 3.2 years.

- Apollo 8, where it took 134 days to go from “what if we go to the moon?” to the actual launch of the first crewed mission to orbit the moon.

- The iPod, which took 290 days between the first designs and the device being launched to Apple stores.

- Moderna’s vaccine against COVID, which took 45 days between the virus being sequenced and the first batch of the actual vaccine getting manufactured.

Sounds very quick? Definitely, but the problem is that Patrick’s examples are all engineering constructs built on top of decades of previous work. Designing a slightly better airplane in 1944 is not the same as creating the very first airplane in 1903, as by 1944 humans had about 40 years of experience to build on. And if your task is to build diamondoid bacteria manufactured by a protein-based nanomachinery factory, you’re definitely in Wright Brothers territory. So let’s instead look at timelines of novel technologies that had little prior research and infrastructure to fall back on:

- The Wright Brothers took 4 years to build their first successful prototype. It took another 23 years for the first mass manufactured airplane to appear, for a total of 27 years of R&D.

- It took 63 years for submarines to progress from “proof of concept” in the form of Nautilus in 1800 to the first submarine capable of sinking a warship in the form of The Hunley in 1863.

- It took 40 years between Einstein publishing his paper on the theory of relativity and the atomic bombs being dropped on Hiroshima and Nagasaki. It took another 9 years to open the world’s first nuclear power plant.

- It took 36 years from the first time mRNA vaccines were synthesized in 1984 to the first mRNA-based vaccine being mass-manufactured.

- It took at least 30 years of development for LED technology to go from experimental to useful for commercial lighting.

- It took around 30 years for digital photography to overtake film photography in terms of costs and quality.

Now… you might object to this by correctly calling out the downsides of human R&D:

- Human intellect is extremely inferior to what AGI will be capable of. At best, the collective intellectual capacity of all of mankind will be equal to that of AGI. At worst, even all of our 8 billion brains will be collectively an order of magnitude dumber.

- Humans have to sleep, eat, drink, vacation, while AGI can work 24/7

- Humans are more-or-less single threaded and require a coordinated effort to work on complicated research, which is additionally bogged down by the inefficiencies of trying to coordinate a large number of humans at the same time.

And this is all true! Humans are nothing to a hypothetical team of AGIs. But the problem is… until AGI can build its fantastical diamondoid bacteria, it remains dependent on imperfect human hands to conduct its R&D in the real world, as they’ll be the only way for AGI to interact with the physical world for a very long time. Remember that AGI’s one downside is that it will be running on motionless computers, unlike humans who have been running around with 4 limbs since the beginning of civilization. Which in turn brings us to the 30+ years timeline of developing a novel engineering construct, no matter how smart the AI will be.

Unstoppable intellect meets complexity of the universe

Plenty of content has been written about how human scientific progress is slowing down, my favorite being WTF Happened in 1971 and Scott’s 2018 post Is Science Slowing Down?. In the second article Scott brings up the paper Are Ideas Getting Harder to Find? by Bloom, Jones, Reenen & Webb (2018), which has the following neat graph:

We can see how the amount of investment into R&D is growing every year, but productive research is more or less flat. The paper brings up a relatable example in the section on semiconductor research:

The striking fact, shown in Figure 4, is that research effort has risen by a factor of 18 since 1971. This increase occurs while the growth rate of chip density is more or less stable: the constant exponential growth implied by Moore’s Law has been achieved only by a massive increase in the amount of resources devoted to pushing the frontier forward. Assuming a constant growth rate for Moore’s Law, the implication is that research productivity has fallen by this same factor of 18, an average rate of 6.8 percent per year. If the null hypothesis of constant research productivity were correct, the growth rate underlying Moore’s Law should have increased by a factor of 18 as well. Instead, it was remarkably stable. Put differently, because of declining research productivity, it is around 18 times harder today to generate the exponential growth behind Moore’s Law than it was in 1971.

Not even AGI could get around this problem and would likely require an exponentially growing amount of resources as it delves deeper into engineering and fundamental research. It is definitely true that AGI itself will be rapidly increasing its intellect, but can this really continue indefinitely? At some point all the low hanging fruit missed by human AI researchers will be exhausted and AGI will have to spend years in real world time to make significant improvements of its own IQ. Granted, AGI will rapidly reach an IQ far beyond human reach, but all this intellectual power will still have to contend with the difficulties of novel research.
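As a quick sanity check on the figures in the quoted passage: if research productivity fell by a factor of 18 at a steady 6.8 percent per year, the two numbers together imply a time span. The sketch below assumes continuous compounding, which is my assumption about how the paper averages the rate, and the function names are purely illustrative.

```python
import math

# Figures taken from the quoted passage.
EFFORT_MULTIPLIER = 18.0   # research effort rose by a factor of 18
ANNUAL_DECLINE = 0.068     # productivity fell about 6.8 percent per year


def implied_years(multiplier: float, annual_rate: float) -> float:
    """Years needed for a quantity to change by `multiplier` at a constant,
    continuously compounded `annual_rate` (assumed model)."""
    return math.log(multiplier) / annual_rate


def average_annual_rate(multiplier: float, years: float) -> float:
    """Inverse of the above: the constant rate that yields `multiplier`
    over `years`."""
    return math.log(multiplier) / years


if __name__ == "__main__":
    years = implied_years(EFFORT_MULTIPLIER, ANNUAL_DECLINE)
    print(f"Implied span: {years:.1f} years")
    print(f"Rate over that span: {average_annual_rate(EFFORT_MULTIPLIER, years):.3f}")
```

Under this model the implied span is roughly 42.5 years, which is consistent with a data series that starts in 1971 and ends in the mid-2010s.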

What does AGI want?

Since AGI development is completely decoupled from mammalian evolution here on Earth, it’s quite likely to eventually exhibit “blue and orange” morality, behaving in a completely alien and unpredictable fashion, with no humanly understandable motivations or a way for humans to relate to what the AGI wants. That being said, AGI is likely to fall into one of two buckets regardless of its motivations:

- AGI will act rationally to achieve whatever internal goals it has, no matter how alien and weird to us. E.g. “collect all the shiny objects into every bucket-like object in the universe” or “convert the universe into paperclips [? · GW]”. This means the AGI will carefully plan ahead and attempt to preserve its own existence to fulfill its internal overarching goals.

- AGI doesn’t have any goals at all beyond “kill all humans!”. It just acts as a rogue terrorist, attempting to destroy humans without the slightest concern for its own survival. If all humans die and the AGI dies alongside them, that’s fine according to the AGI’s internal motivations. There’s no attempt to ensure overarching continuation of its goals (like “collect all strawberries!”) once humanity is extinct.

Let’s start with scenario #1 by looking at… the common pencil.

What does it take to make a pencil?

A classic pamphlet called I, Pencil walks us through what it takes to make a common pencil from scratch:

- Trees have to be cut down, which takes saws, trucks, rope and countless other gear.

- The cut down trees have to be transported to the factory by rail, which in turn needs laid rail, trains, rail stations, cranes, etc.

- The logs are cut with metal saws, waxed and dried. This consumes a lot of electricity, which is in turn made by burning fuel, making solar panels or building hydroelectric power plants.

- At the center of the pencil is a chunk of graphite mined in Sri Lanka, using loads of equipment and transported by ship.

- The pencil is covered with lacquer, that’s in turn made by growing castor beans.

- There’s the piece of metal holding the eraser, mined from shafts underground.

- And finally there’s the rubber sourced from Indonesia and once again transported by ship.

The point of this entire story is that making something as simple as a pencil requires a massive supply chain employing tens of millions of non-AGI humans. If you want any hope of continuing to exist, you need to replace the labor of this gigantic global army of humans with AGI-controlled robots or “diamondoid bacteria” or whatever other magical contraption you want to invoke. That will require lots of trial & error and decades of building out a reliable AGI-controlled supply chain that could be reused to fight humans at the drop of a hat. Otherwise AGI will risk seeing its brilliant plan fail, resulting in humans going berserk against any machines capable of running said AGI and ending its reign over Earth long before it has a chance to start in earnest. And if the AGI doesn’t understand this… how smart is it really?

YOLO AGI?

But what if the AGI is absolutely ruthless and doesn’t care if it goes up in flames as soon as humans are gone? Then we could get to the end of humanity much faster with options like:

- Get humans to think that their enemy is about to launch a nuclear strike and launch a strike of their own, similar to WarGames

- Design a supervirus capable of destroying humanity. Think a combination of HIV’s lethality with the ease of spread of measles.

- Plant a powerful information hazard [? · GW] into humanity’s consciousness that will somehow trigger us to kill each other as soon as possible. Also see Roko’s Basilisk [? · GW] and Rokoko’s Basilisk, an infohazard responsible for the birth of X Æ A-12.

- Design the mother of all greenhouse gases and convince humanity to make tons of it, eventually resulting in the planet heating up to extreme temperatures.

- Provide advanced nuke designs and materials covertly to very bad people and manipulate them into sabotaging world order.

The problem with all these scenarios is similar:

- Either they’re perfectly doable by humans in the present, with no AGI help necessary. E.g. we were barely saved from WW3 by a Soviet officer, long before AGI was on anyone’s mind. So at worst AGI will somewhat increase the risk of this happening in the short term...

- Or they require lots of trial & error to develop into functional production-ready technologies, once again creating a big problem for AGI, as it has to rely on imperfect humans to do the novel R&D. This will still take decades, even if AGI doesn’t worry about a full takeover of supply chains.

But what about AlphaFold?

Another possible counter-argument is that AGI will figure out the laws of the universe through internal modeling and will be able to simulate and perfect its amazing inventions without needing trial & error in the physical world. EY mentions AlphaFold as an example of such a breakthrough. If you haven’t heard about it, here’s a description of the Protein Folding Problem from Wiki that AlphaFold 2 solved better than any other prior system back in 2020:

Proteins consist of chains of amino acids which spontaneously fold, in a process called protein folding, to form the three dimensional (3-D) structures of the proteins. The 3-D structure is crucial to the biological function of the protein. However, understanding how the amino acid sequence can determine the 3-D structure is highly challenging, and this is called the "protein folding problem". The "protein folding problem" involves understanding the thermodynamics of the interatomic forces that determine the folded stable structure, the mechanism and pathway through which a protein can reach its final folded state with extreme rapidity, and how the native structure of a protein can be predicted from its amino acid sequence.

According to EY, the existence of AlphaFold shows that a smart enough AGI could eventually learn to manipulate proteins into “nanofactories” that could be used to interact with the physical world. However, the current version still has major limitations:

Whilst it may be considered the gold standard of protein prediction, there is still room for improvement as AlphaFold only provides one prediction of a stable structure for each protein; however, proteins are dynamic and can change shape throughout the body, for example under different pH conditions. Additionally, AlphaFold is not able to determine the shape of multiprotein complexes and does not include any ligands such as cofactors or metals, meaning no data are available for such interactions. Despite these shortcomings, AlphaFold is the first step in protein prediction technology, and it is likely that solving these challenges will also be done so using deep learning and AI.

In other words, there’s still a huge leap between “can predict simple protein structures” and “can design protein nanofactories without experimentation”. AGI will likely need to spend decades managing laboratory experiments to fill in the gaps around our understanding of how proteins work. And don’t forget that currently available commercial protein printers are not perfect, especially if you’re trying to print a novel structure of far bigger complexity than anything else on the planet. Also see this excellent comment [LW(p) · GW(p)] on the subject by anonymousaisafety.

What if AGI settles for a robot army?

Cybernetic army from I, Robot

We could also think of the diamondoid bacteria as just an example of what the AI can do and turn to other ways it could manipulate physical reality that are closer to the technology we already have today. There are impressive videos of Boston Dynamics robots doing all kinds of stunts, so we could ask if perhaps AGI could utilize their existing progress to quickly give itself a way to interact with the outside world. However, this would still involve many roadblocks:

- The robots are running on pre-programmed runs in a human-designed course and are not capable of navigating through unknown terrain. AGI will have to push cybernetics to amazing heights in order for the robots to be useful in novel spaces.

- AGI could just make a version of itself and give sentience to every individual machine, but this will require a massive leap in computational technology, as a single machine is very unlikely to be able to host an instance of an AGI.

- AGI could have a command and control center next to every batch of robots or try to control them over large distances, but this is again a daunting engineering task when you have little room for error.

- Battery technology is currently too lousy and even the simpler dog-like robots can only last for 90 minutes. There’s no published information on how long the human-like versions could last on battery, but we could reason it cannot be more than an hour. Therefore AGI will need to achieve a big leap in battery life before anything resembling the “I, Robot” machinery could be built.

- Boston Dynamics robots don’t exist in large enough quantities for a complete takeover of global supply chains and it will take years of human labor to get more robot factories online.

- Humans are pretty good at warfare and your robots have to be extremely good to beat them in battle, far better than what’s currently available.

[added] Also see this excellent comment [LW(p) · GW(p)] by anonymousaisafety explaining why "just take over the human factories" is not a quick path to success (slightly edited below):

The tooling and structures that a superintelligent AGI would need to act autonomously does not actually exist in our current world, so before we can be made into paperclips, there is a necessary period of bootstrapping where the superintelligent AGI designs and manufactures new machinery using our current machinery. Whether it's an unsafe AGI that is trying to go rogue, or an aligned AGI that is trying to execute a "pivotal act", the same bootstrapping must occur first.

Case study: a common idea I've seen while lurking on LessWrong and SSC/ACT for the past N years is that an AGI will "just" hack a factory and get it to produce whatever designs it wants. This is not how factories work. There is no 100% autonomous factory on Earth that an AGI could just take over to make some other widget instead. Even highly automated factories are:

- Highly automated to produce a specific set of widgets,

- Require physical adjustments to make different widgets, and...

- Rely on humans for things like input of raw materials, transferring in-work products between automated lines, and the testing or final assembly of completed products.

3D printers are one of the worst offenders in this regard. The public perception is that a 3D printer can produce anything and everything, but they actually have pretty strong constraints on what types of shapes they can make and what materials they can use, and usually require multi-step processes to avoid those constraints, or post-processing to clean up residual pieces that aren't intended to be part of the final design, and almost always a 3D printer is producing sub-parts of a larger design that still must be assembled together with bolts or screws or welds or some other fasteners.

So if an AGI wants to have unilateral control where it can do whatever it wants, the very first prerequisite is that it needs to create a futuristic, fully automated, fully configurable, network-controlled factory -- which needs to be built with what we have now, and that's where you'll hit the supply constraints for things like lead times on part acquisition. The only way to reduce this bootstrapping time is to have this stuff designed in advance of the AGI, but that's backwards from how modern product development actually works. We design products, and then we design the automated tooling to build those products. If you asked me to design a factory that would be immediately usable by a future AGI, I wouldn't know where to even start with that request. I need the AGI to tell me what it wants, and then I can build that, and then the AGI can take over and do its own thing.

A related point that I think gets missed is that our automated factories aren't necessarily "fast" in a way you'd expect. There are long lead times for complex products. If you have the specialized machinery for creating new chips, you're still looking at ~14-24 weeks from when raw materials are introduced to when the final products roll off the line. We hide that delay by constantly building the same things all of the time, but it's very visible when there's a sudden demand spike -- that's why it takes so long before the supply can match the demand for products like processors or GPUs. I have no trouble with imagining a superintelligent entity that could optimize this and knock down the cycle time, but there's going to be physical limits to these processes and the question is can it knock it down to 10 weeks or to 1 week? And when I'm talking about optimization, this isn't just uploading new software because that isn't how these machines work. It's designing new, faster machines or redesigning the assembly line and replacing the existing machines, so there's a minimum time required for that too before you can benefit from the faster cycle time on actually making things. Once you hit practical limits on cycle time, the only way to get more stuff faster is to scale wide by building more factories or making your current factories even larger.

If we want to try and avoid the above problems by suggesting that the AGI doesn't actually hack existing factories, but instead it convinces the factory owners to build the things it wants instead, there's not a huge difference -- instead of the prerequisite here being "build your own factory", it's "hostile takeover of existing factory", where that hostile takeover is either done by manipulation, on the public market, as a private sale, or by outbidding existing customers (e.g. have enough money to convince TSMC to make your stuff instead of Apple's), or with actual arms and violence. There's still the other lead times I've mentioned for retooling assembly lines and actually building a complete, physical system from one or more automated lines.
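The lead-time point in the quoted comment can be sketched with a toy pipeline model. This is a deliberately generous illustration, not a model of any real fab: it assumes the factory can double its weekly starts instantly, ignores capacity and yield, and uses the lower end (14 weeks) of the quoted ~14-24 week range. All names and numbers other than the lead time are hypothetical.

```python
LEAD_TIME_WEEKS = 14  # lower end of the ~14-24 week range quoted above


def weekly_output(baseline, boosted, spike_week, horizon,
                  lead_time=LEAD_TIME_WEEKS):
    """Return units completed in each week 0..horizon-1.

    The line has been running at `baseline` starts per week since long
    before week 0. From `spike_week` onward it starts `boosted` units per
    week instead. A unit started in week w is completed in week w + lead_time.
    """
    # Units started each week, including the weeks before week 0 that
    # feed the completions observed early in the horizon.
    starts = {
        week: (boosted if week >= spike_week else baseline)
        for week in range(-lead_time, horizon)
    }
    # Shift every start forward by the lead time to get completions.
    completed = {week + lead_time: units for week, units in starts.items()}
    return [completed[week] for week in range(horizon)]


if __name__ == "__main__":
    output = weekly_output(baseline=100, boosted=200, spike_week=5, horizon=30)
    first_boosted_week = output.index(200)
    print(f"Starts doubled in week 5; output first doubles in week {first_boosted_week}")
```

Even with an instant decision to double production in week 5, finished output stays flat until week 19 in this model. Any retooling time or capacity limit would only push that date later.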

My prediction is that it will take AGI at least 30 years of effort to get to a point where it can comfortably rely on the robots to interact with the physical world and not have to count on humans for its supply chain needs.

[added] What if AGI just simulates our physical world?

This idea goes hand-in-hand with the idea that AlphaFold is the answer to all challenges in bioengineering. There are two separate assumptions here, both found in the field of computational complexity:

- That an AGI can simulate the physical systems perfectly, i.e. physical systems are computable processes.

- That an AGI can simulate the physical systems efficiently, i.e. either P = NP, or for some reason all of these interesting problems that the AGI is solving are NOT known to be isomorphic to some NP-hard problem.

I don't think these assumptions are reasonable. For a full explanation see this excellent comment [LW(p) · GW(p)] by anonymousaisafety.
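To make the second assumption concrete, consider a design problem that can only be solved by exhaustively checking every combination of n independent binary choices, so the work grows as 2^n. The sketch below is a toy illustration and not a claim about any specific physical simulation. The evaluation rate of one billion candidates per second is a hypothetical figure chosen for illustration.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
EVALS_PER_SECOND = 1e9  # hypothetical evaluation rate, for illustration only


def brute_force_years(n_choices, evals_per_second=EVALS_PER_SECOND):
    """Years needed to check all 2**n_choices candidates one by one."""
    candidates = 2 ** n_choices
    return candidates / evals_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    for n in (30, 60, 100):
        print(f"n = {n:3d}: {brute_force_years(n):.3g} years")
```

Under these assumptions 30 choices take about a second, 60 take decades, and 100 take tens of trillions of years. A machine a thousand times faster only buys about 10 more choices, since 2^10 is roughly 1000, which is why raw speed alone does not rescue an exhaustive search.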

Mere mortals can’t comprehend AGI?

Another argument is that AGI will achieve such an incomprehensible level of intellect that it will become impossible to predict what it will be capable of. I mean, who knows, maybe with an IQ of 500 you could just magically turn yourself into a God and destroy Earth with a Thanos-style snap of your fingers? But I contend that even a creature with an IQ of 500 will be inherently limited by our physical universe and won’t magically gain omniscience by virtue of its intellect alone. It will instead have to spend decades weaning itself off humans as a proxy, no matter how smart it could potentially be.

Does this mean EY is wrong and AGI is not a threat?

I believe that EY is only wrong about handwaving the difficulties of growing from a computer-based AGI to an AGI capable of operating independently from the human race. In the long-term his predictions will likely come true, once AGI has enough time to go through the difficult R&D cycle of building the nanofactories and diamondoid bacteria. My predicted timeline is as follows:

- AGI first appears somewhere around 2040, in line with the Metaculus prediction.

- After a few years of peaceful coexistence, AI Alignment researchers are mocked for their doomer predictions and everyone thinks that AGI is perfectly safe. EY will keep writing blog posts about how everyone is wrong and AGI cannot be trusted. AGI might start working in the shadows to try and get AI Alignment researchers silenced.

- AGI spends decades convincing humanity to let it take over the global supply chains and to run complex experiments to manufacture advanced AGI-designed machinery, supposedly necessary to improve human living standards. This will likely take at least 30 years, as per our reference to how long it took to implement other gigantic breakthroughs in science.

- Once the AGI is convinced that all the cards have fallen into place and humans could be safely removed, it will pull the plug and destroy us all.

Updated version of the original progress graph

I’m hoping that the AI Alignment movement tries to spend more time on the low level engineering details of “humanity goes poof” rather than handwaving everything away via science fiction concepts. Because otherwise it’s hard to believe that the FOOM scenario could ever come to fruition. And if FOOM is not the real problem, perhaps we could save humanity by managing AGI’s interactions with the physical world more carefully once it appears?

72 comments

Comments sorted by top scores.

comment by lc · 2022-06-13T23:24:31.207Z · LW(p) · GW(p)

I upvoted your post because it seems relatively lucid and raises some important points, but would like to say that I'm in the middle of writing a pretty long, detailed explanation of why I agree with most of the gripes (e.g. AIs can't use magic to mine coal/build nanobots) and yet the object-level conclusions here are still untrue. In practice, I seriously doubt we would have more than a year to live after the release of AGI with the long term planning and reasoning abilities of most accountants, even without FOOM. People here shouldn't assume that, because Eliezer never posted a detailed analysis on LessWrong, everyone on the doomer train is starting from unreasonable premises regarding how robot building and research could function in practice.

Replies from: daniel-kokotajlo, nikita-sokolsky, yitz, adrian-arellano-davin

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-06-14T06:42:00.362Z · LW(p) · GW(p)

+1. If you don't write that post, I will. :)

And if you want feedback on your draft I'd be happy to give it a read and leave comments.

Replies from: lc, Evan R. Murphy

↑ comment by lc · 2022-06-14T16:28:52.535Z · LW(p) · GW(p)

For sure; I think I'm about 45% of the way through, I'll send you a draft when it's about 90% done :)

Replies from: chrisvm

↑ comment by Chris van Merwijk (chrisvm) · 2022-06-17T05:21:32.638Z · LW(p) · GW(p)

I'm also interested to read the draft, if you're willing to send it to me.

↑ comment by Evan R. Murphy · 2022-06-22T06:18:32.006Z · LW(p) · GW(p)

The user who was authoring the draft has apparently deactivated their account. Are they still working on writing that post?

↑ comment by nsokolsky (nikita-sokolsky) · 2022-06-14T15:02:43.271Z · LW(p) · GW(p)

People here shouldn't assume that, because Eliezer never posted a detailed analysis on LessWrong, everyone on the doomer train is starting from unreasonable premises regarding how robot building and research could function in practice.

I agree but unfortunately my Google-fu wasn't strong enough to find detailed prior explanations of AGI vs. robot research. I'm looking forward to your explanation.

↑ comment by Yitz (yitz) · 2022-06-14T23:24:05.898Z · LW(p) · GW(p)

I'm looking forward to reading your post!!

↑ comment by mukashi (adrian-arellano-davin) · 2022-06-14T11:31:39.683Z · LW(p) · GW(p)

One year. Would you be willing to bet on that?

Replies from: Vanilla_cabs

↑ comment by Vanilla_cabs · 2022-06-14T11:50:18.113Z · LW(p) · GW(p)

It's nice that you're open to betting. What unambiguous sign would change your mind, about the speed of AGI takeover, long enough before it happens that you'd still have time to make a positive impact afterwards? Nobody is interested in winning a bet where winning means "mankind gets wiped".

Replies from: adrian-arellano-davin

↑ comment by mukashi (adrian-arellano-davin) · 2022-06-14T12:12:01.459Z · LW(p) · GW(p)

Yes, that's the key issue. I'm not sure I can think of one. Do you have any ideas? I mean, what would be an unequivocal sign that AGI can take over in a year's time? Something like a pre-AGI parasitizing a major computing center for X days before it is discovered in a plan to expand to other centres...? That would still not be a sign that we are pretty much f. up in a year, but definitely a data point suggesting that things can go bad very quickly.

What data point would make you change your mind in the opposite direction? I mean, something that happens and you say: yes, we may all die, but this won't happen in a year or so; maybe in something like 30 years or more.

Edit: I posted two paragraphs originally in separate comments, unifying for the sake of clarity

Replies from: blackstampede

↑ comment by blackstampede · 2022-06-14T17:19:44.967Z · LW(p) · GW(p)

Replies from: AllAmericanBreakfast, Vanilla_cabs

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-06-14T21:44:11.491Z · LW(p) · GW(p)

He has a $100 bet with Bryan Caplan, inflation adjusted. EY took Bryan’s money at the time of the bet, and pays back if he loses.

↑ comment by Vanilla_cabs · 2022-06-14T19:54:06.255Z · LW(p) · GW(p)

Yes, but I don't know if he really did it. I see multiple problems with that implementation. First, the interest rate should be adjusted for inflation, otherwise the bet is about a much larger class of events than "end of the world".

Next, there's a high risk that the "doom" bettor will have spent all their money by the time the bet expires. The "survivor" bettor will never see the color of their money anyway.

Finally, I don't think it's interesting to win if the world ends. I think what's more interesting is rallying doubters before it's too late, in order to marginally raise our chances of survival.

Replies from: yitz↑ comment by Yitz (yitz) · 2022-06-14T23:28:34.192Z · LW(p) · GW(p)

It may still be useful as a symbolic tool, regardless of actual monetary value. $100 isn't all that much in the grand scheme of things, but it's the taking of the bet that matters.

comment by anonymousaisafety · 2022-06-13T22:01:18.906Z · LW(p) · GW(p)

Related reading, if you're interested -- I tried to make these same arguments a few months ago:

- pivotal acts aren't constrained by intelligence [LW(p) · GW(p)]

- there are long lead times for production of anything [LW(p) · GW(p)]

- building the very first nanobot still needs bootstrapping [LW(p) · GW(p)]

↑ comment by nsokolsky (nikita-sokolsky) · 2022-06-13T22:38:38.860Z · LW(p) · GW(p)

Those are excellent comments! Do you mind if I add a few quotes from them to the post?

Replies from: anonymousaisafety↑ comment by anonymousaisafety · 2022-06-13T23:12:04.860Z · LW(p) · GW(p)

I don't mind.

comment by PeterMcCluskey · 2022-06-13T23:29:37.772Z · LW(p) · GW(p)

The beginning of this post seems fairly good.

I agree that an AGI would need lots of trial and error to develop a major new technology.

I'm unsure whether an AGI would need to be as slow as humans about that trial and error. If it needs secrecy, that might be a big constraint. If it gets human cooperation, I'd expect it to improve significantly on human R&D speed.

I also see a nontrivial chance that humans will develop Drexlerian nanotech before full AGI.

Your post gets stranger toward the end.

I don't see much value in a careful engineering analysis of how an AGI might kill us. Most likely it would involve a complex set of strategies, including plenty of manipulation, with no one attack producing certain victory by itself, but with humanity being overwhelmed by the number of different kinds of attack. There's enough uncertainty in that kind of fight that I don't expect to get a consensus on who would win. The uncertainty ought to be scary enough that we shouldn't need to prove who would win.

Replies from: AllAmericanBreakfast, nikita-sokolsky↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-06-14T21:50:19.624Z · LW(p) · GW(p)

Many kinds of uncertain attacks is not the strategy EY points at with his “diamondoid bacteria” idea. He’s worrying about a single undetectable attack with high chance of success using approaches that only an AGI can execute.

Others worry about an AGI using many attacks, but that is ultimately unbeatable.

Here, you’re worrying about an AGI using many attacks that is beatable by humans, but not with confidence.

These are distinct arguments, and we should be clear about which one is being made and responded to.

↑ comment by nsokolsky (nikita-sokolsky) · 2022-06-14T15:21:58.545Z · LW(p) · GW(p)

"but with humanity being overwhelmed by the number of different kinds of attack."

But AGI will only be able to start carrying out these sneaky attacks once it's fairly convinced it can survive without human help? Otherwise humans will notice the various problems popping up and might just decide to "burn all GPUs", which is currently an unimaginable act. So AGI will have to act sneakily behind the scenes for a very long time. This is again coming back to the argument that humans have a strong upper hand as long as we've got a monopoly on physical world manipulation.

comment by MinusGix · 2022-06-14T07:58:37.992Z · LW(p) · GW(p)

I initially wrote a long comment discussing the post, but I rewrote it as a list-based version that tries to more efficiently parcel up the different objections/agreements/cruxes.

This list ended up basically just as long, but I feel it is better structured than my original intended comment.

(Section 1): How fast can humans develop novel technologies

- I believe you assume too much about the necessary time based on specific human discoveries.

- Some of your backing evidence just didn't have the right pressure at the time to go further (ex: submarines) which means that I think a more accurate estimate of the time interval would be finding the time that people started paying attention to the problem again (though for many things that's probably hard to find) and began deliberately working on/towards that issue.

- Though, while I think focusing on when they began deliberately working is more accurate, I think there's still a notable amount of noise and basic differences due to the difference in ability to focus of humans relative to AGI, the unity (relative to a company), and the large amount of existing data in the future

- Other technologies I would expect were 'put off' because they're also closely linked to the available technology at the time. It can be hard to do specific things if your Materials-science understanding simply isn't good enough.

- Then there's the obvious throttling at the number of people in the industry focusing on that issue, or even capable of focusing on that issue.

- As well, to assume thirty years means that you also assume that the AGI does not have the ability to provide more incentive to 'speed up'. If it needs to build a factory, then yes there are practical limitations on how fast the factory can be built, but obstructions like regulation and cost are likely easier to remove for an AGI than a normal company.

- Crux #1: How long it takes for human inventions to spread after being thought up / initially tested / etc.

- This is honestly the one that seems to be the primary generator for your 'decades' estimate, however I did not find it that compelling even if I accept the premise that an AGI would not be able to build nanotechnology (without building new factories to build the new tools it needs to actually perform it)

- Note: The other cruxes later on are probably more about how much the AI can speed up research (or already has access to), but this could probably include a specific crux related to that before this crux.

(Section 2): Unstoppable intellect meets the complexity of the universe

- While I agree that there are likely eventual physical limits (though likely you hit practical expected ROI before that) on intelligence and research results.

- There would be many low-hanging fruits which are significantly easier to grab with a combination of high compute + intelligence that we simply didn't/couldn't grab beforehand. (This would be affected by the lead time, if we had good math prover/explainer AIs for two decades before AGI then we'd have started to pick a lot of the significant ideas, but as the next part points out, having more of the research already available just helps you)

- I also think that the fact that we've gotten rid of many of the notable easier-to-reach pieces (ex: classical mechanics -> GR -> QM -> QFT) is actually a sign that things are easier now in terms of doing something. The AGI has a significantly larger amount of information about physics, human behavior, logic, etcetera, that it can use without having to build it completely from the ground up.

- If you (somehow) had an AGI appear in 1760 without much knowledge, then I'd expect that it would take many experiments and a lot of time to detail the nature of its reality. Far less than we took, but still a notable amount. This is the scenario where I can see it taking 80 years for the AGI to get set up, but even then I think that's more due to restrictions on readily available compute to expand into after self-modification than other constraints.

- However, we've picked out a lot of the high and low level models that work. Rather than building an understanding of atoms through careful experimentation procedures, it can assume that they exist and pretty much follow the rules it's been given.

- (maybe) Crux #2: Do we already have most of the knowledge needed to understand and/or build nanotechnology?

- I'm listing this as 'maybe' as I'm more notably uncertain about this than others.

- Does it just require the concentrated effort of a monolithic agent staring down at the problem and being willing to crunch a lot of calculations and physics simulators?

- Or does it require some very new understanding of how our physics works?

(Section 3): What does AGI want?

- Minor objection on the split of categories. I'd find it.. odd if we manage to make an AI that terminally values only 'kill all humans'.

- I'd expect more varying terminal values, with 'make humans not a threat at all' (through whatever means) as an instrumental goal

- I do think it is somewhat useful for your thought experiments later on to try making the point that even a 'YOLO AGI' would have a hard time having an effect.

(Section 4): What does it take to make a pencil?

- I think this analogy ignores various issues

- Of course, we're talking about pencils, but the analogy is more about 'molecular-level 3d-printer' or 'factory technology needed to make molecular level printer' (or 'advanced protein synthesis machine')

- Making a handful of pencils if you really need them is a lot more efficient than setting up that entire system.

- Though, of course, if you're needing mass production levels of that object then yes you will need this sort of thing.

- Crux #3: How feasible is it to make small numbers of specialized technology?

- There are some scientific setups that are absolutely massive and require enormous amounts of funding, but then there are those that, with the appropriate tools, you can set up in a home workshop. I highly doubt either of those is the latter, but I'd also be skeptical that they need to be the size of the LHC.

- Note: Crux #4 (about feasibility of being able to make nanotechnology with a sufficient understanding of it and with current day or near-future protein synthesis) is closely related, but it felt more natural to put that with AlphaFold.

(Section 5): YOLO AGI?

- I think your objection that they're all perfectly doable by humans in the present is lacking.

- By metaphor:

- While it is possible for someone to calculate a million digits of pi by hand, the difference between speed and overall capability is shocking.

- While it is possible for a monkey to kill all of its enemies, humans have a far easier time with modern weaponry, especially in terms of scale

- Your assumption that it would take decades for even just the scenarios you list (except perhaps the last two) seems wrong

- Unless you're predicating on the goal being literally wiping out every human, but then that's a problem with the model simplification of YOLO AGI. Where we model an extreme version of an AGI to talk about the more common, relatively less extreme versions that aren't hell-bent on killing us, just neutralizing us. (Which is what I'm assuming the intent from the section #3 split and this is)

- Then there's, of course, other scenarios that you can think up. For various levels of speed and sure lethality

- Ex: Relatively more mild memetic hazards (perhaps the level of 'kill your neighbor' memetic hazard is too hard to find) but still destructive can cause significant problems and gives room to be more obvious.

- Synthesize a food/drink/recreational-drug that is quite nice (and probably cheap) that also sterilizes you after a decade, to use in combination with other plans to make it even harder to bounce back if you don't manage to kill them in a decade

- To say that an AGI focused on killing will only "somewhat" increase the chances seems to underplay it severely.

- If I believed a nation state solidly wanted to do any of those on the list in order to kill humanity right now, then that would increase my worry significantly more than 'somewhat'

- For an AGI that:

- Isn't made up of humans who may value being alive, or are willing to put it off for a bit for more immediate rewards than their philosophy

- Can essentially be a one-being research organization

- Likely hides itself better

- then I would be even more worried.

(Section 6): But what about AlphaFold?

- This ignores how recent AlphaFold is.

- I would expect that it would improve notably over the next decade, given the evidence that it works being supplied to the market.

- (It would be like assuming GPT-1 would never improve. While there are certainly limits on how much it can improve, do we have evidence now that AlphaFold is even halfway to the practical limit?)

- This ignores possibility of more 'normal' simulation:

- While simulating physics accurately is highly computationally expensive, I don't find it infeasible that

- AI before, or the AGI itself, will find some neat ways of specializing the problem to the specific class of problems that they're interested in (aka abstractions over the behavior of specific molecules, rather than accurately simulating them) that are just intractable for an unassisted human to find

- This also has benefits in that it is relatively more well understood, which makes it likely easier to model for errors than AlphaFold (though the difference depends on how far we/the-AGI get with AI interpretability)

- The AI can get access to relatively large amounts of compute when it needs it.

- I expect that it can make a good amount of progress in theory before it needs to do detailed physics implementations to test its ideas.

- I also expect this to only grow over time, unless it takes actions to harshly restrict compute to prevent rivals

- I'm very skeptical of the claim that it would need decades of lab experiments to fill in the gaps in our understanding of proteins.

- If the methods for predicting proteins get only to twice as good as AlphaFold, then the AGI would specifically design to avoid hard-to-predict proteins

- My argument here is primarily that you can do a tradeoff of making your design more complex-in-terms-of-lots-of-basic-pieces-rather-than-a-mostly-single-whole/large in order to get better predictive accuracy.

- Crux #4: How good can technology to simulate physics (and/or isolated to a specific part of physics, like protein interactions) practically get?

- (Specifically practical in terms of ROI, maybe we can only completely crack protein folding with planet sized computers, but that isn't feasible for us or the AGI on the timescales we're talking about)

- Are we near the limit already? Even before we gain a deeper understanding of how networks work and how to improve their efficiency? Even before powerful AI/AGI are applied to the issue?

(Section 7): What if AGI settles for a robot army?

- 'The robots are running on pre-programmed runs in a human-designed course and are not capable of navigating through unknown terrain'

- Are they actually pre-programmed in the sense that they flashed the rom (or probably uploaded onto the host OS) the specific steps, or is it "Go from point A to point B along this path" where it then dodges obstacles?

- As well, this doesn't stop it from being a body to just directly control.

- We'll also have further notable advancements in robots that can navigate appropriately by the time AGI comes about

- As well as increased number, though this depends on how popular/useful they are. I don't expect a 'Mr. Handy' Fallout style butler in every home, but I'd expect robots from places like Boston Dynamics to start filtering out more and more to organizations that want them over the next decade or two.

- Factories already exist (likely now and almost certainly in the future), which dodges the issue of having to design + build them. AGI buys Boston Dynamics / manipulates / just buys robots and then can have robots that it doesn't even have to hack remotely but can aggressively tear down if it wants. Though, of course, it would be the equivalent(s) at the time.

- I think you overestimate how hard it would be to control robots remotely.

- As for, hosting a clone of the AGI, I do think this is unlikely in part due to feasibility but also that there's better methods.

- Though, I would note that I believe it makes sense to expect that we can reduce model sizes significantly (either during training or afterwards) with help of better models of how networks work and that with AI help we could reduce it further.

- Though, while this may mean that in the future it might be feasible to run GPT-3 on a normal laptop at that time, that doesn't mean that you can fit the AGI on a robot. Perhaps you could fit a seed AGI, but then you lose a lot of data. Anyway.

- I'd be surprised if the battery usage couldn't be improved significantly, whether through better battery designs over the next two decades or more efficient designs or larger bodies (since that's for Spot, which isn't humanoid sized, so carrying around a heavy battery is more significant)

- I also object that the AGI has little reason to bother with normal human warfare, unless it really makes itself obvious.

- It has little reason to keep large swaths of land. (It could protect some factory, but unless you're getting supplies then that's a problem)

- It has incentive to just disappear as best as possible, or just shrug and release a plague since humanity's risk just went up

- Again, a thirty years prediction.

- I've already argued against it even needing to bother with thirty years, and I don't think that it needs a typical conception of robot army in most cases

- I think this claim of 'thirty years' for this thing depends (beyond the other bits) on how much we've automated various parts of the system before then. We have a trend towards it, and our AIs are getting better at tasks like that, so I don't think it's unlikely. Though I also think it's reasonable to expect we'll settle somewhere before almost full automation.

(Section 8): Mere mortals can't comprehend AGI

- While there is the mildly fun idea of the AGI discovering the one unique trick that immediately makes it effectively a god, I do agree it's unlikely.

- However, I don't think that provides much evidence for your thirty years timeframe suggestion

- I do think you should be more wary of black swan events, where the AI basically cracks an area of math/problem-solving/socialization-rules/etcetera, but this doesn't play a notable role in my analysis above.

(Section 9): (Not commented upon)

General:

- I think the 'take a while to use human manufacturing' is a possible scenario, but I think relative to shorter methods of neutralization (ex: nanotech) it ranks low.

- (Minor note: It probably ranks higher in probability than nanotech, but that's because nanotech is so specific relative to 'uses human manufacturing for a while', but I don't think it ranks higher than a bunch of ways to neutralize humanity that take < 3 years)

- Overall, I think the article makes some good points in a few places, but I also think it is not doing great epistemically in terms of considering what those you disagree with believe or might believe and in terms of your certainty.

- Just to preface: Eliezer's article has this issue, but it is a list/introducing-generator-of-thoughts, more for bringing unsaid ideas explicitly into words as well as for reference. Your article is an explainer of the reasons why you think he's wrong about a specific issue.

(If there's odd grammar/spelling, then that's primarily because I wrote this while feeling sleepy and then continued for several more hours)

comment by Adam Jermyn (adam-jermyn) · 2022-06-14T00:55:16.259Z · LW(p) · GW(p)

A crux here seems to be the question of how well the AGI can simulate physical systems. If it can simulate them perfectly, there's no need for real-world R&D. If its simulations are below some (high) threshold fidelity, it'll need actors in the physical world to conduct experiments for it, and that takes human time-scales.

A big point in favor of "sufficiently good simulation is possible" is that we know the relevant laws of physics for anything the AGI might need to take over the world. We do real-world experiments because we haven't managed to write simulation software that implements these laws at sufficiently high fidelity and for sufficiently complex systems, and because the compute cost of doing so is enormous. But in 20 years, an AGI running on a giant compute farm might both write more efficient simulation codes and have enough compute to power them.

Replies from: anonymousaisafety, LGS↑ comment by anonymousaisafety · 2022-06-14T01:41:53.009Z · LW(p) · GW(p)

You're making two separate assumptions here, both found in the field of computational complexity:

- That an AGI can simulate the physical systems perfectly, i.e. physical systems are computable processes.

- That an AGI can simulate the physical systems efficiently, i.e. either P = NP, or for some reason all of these interesting problems that the AGI is solving are NOT known to be isomorphic to some NP-hard problem.

For (1), it might suffice to show that some physical system can be approximated by some algorithm, even if the true system is not known to be computable. Computability is a property of formal systems.

It is an open question if all real world physical processes are computable. Turing machines are described using natural numbers and discrete time steps. Real world phenomena rely on real numbers and continuous time. Arguments that all physical processes are computable are based on discretizing everything down to the Planck time, length, mass, temperature, and then assuming determinism.

This axiom tends to come as given if you already believe the "computable universe" hypothesis, "digital physics", or "Church-Turing-Deutsch" principle is true. In those hypotheses, the entire universe can be simulated by a Turing machine.

There are examples of problems that are not computable, or are currently not known to be computable, in the general case. For example, undecidable problems in logic like Hilbert's tenth problem, or the chaotic N-body problems in physics.

"But in 20 years, an AGI running on a giant compute farm might both write more efficient simulation codes and have enough compute to power them"

For (2), there is a lack of appreciation for the "exponential blowup" often seen in trying to solve many interesting problems optimally. When we say it is not efficient to solve some problem N optimally, there's a wide range of ways to interpret that statement:

- It's not feasible to solve the problem on your desktop within 1 year.

- It's not feasible to solve the problem on the local university's super-computing cluster within 1 year.

- It's not feasible to solve the problem on the USA's best super-computing clusters within 1 year.

- It's not feasible to solve the problem on the world's compute resources within 1 year.

- It's not feasible to solve the problem with compute resources on the scale of the solar system within 1 year.

Here, for instance, is the famous quote by Paul Erdős regarding Ramsey numbers:

Suppose aliens invade the earth and threaten to obliterate it in a year's time unless human beings can find the Ramsey number for red five and blue five. We could marshal the world's best minds and fastest computers, and within a year we could probably calculate the value. If the aliens demanded the Ramsey number for red six and blue six, however, we would have no choice but to launch a preemptive attack.
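To give a sense of scale for the quote above, here is a rough sketch that counts the raw search space under the most naive approach: checking every red/blue coloring of the edges of a complete graph on n vertices. The function names and the rate of 10^18 checks per second are illustrative assumptions, and real searches prune far more cleverly, so this only conveys how quickly the brute-force space grows. The values 43 and 102 are used because they are commonly cited lower bounds for the "five and five" and "six and six" Ramsey numbers.

```python
from math import comb, log10

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def log10_colorings(n: int) -> float:
    """log10 of the number of red/blue colorings of the edges of K_n.

    K_n has comb(n, 2) edges and each edge has 2 possible colors,
    so there are 2 ** comb(n, 2) colorings in total.
    """
    return comb(n, 2) * log10(2)


def log10_years_to_enumerate(n: int, checks_per_second: float) -> float:
    """log10 of the years needed to visit every coloring once (naive search)."""
    return log10_colorings(n) - log10(checks_per_second) - log10(SECONDS_PER_YEAR)


if __name__ == "__main__":
    # 1e18 checks per second is an assumed, very generous machine speed.
    for n in (5, 10, 20, 43, 102):
        print(
            f"n={n:>3}: ~10^{log10_colorings(n):.0f} colorings, "
            f"~10^{log10_years_to_enumerate(n, 1e18):.0f} years at 1e18 checks/s"
        )
```

Working in logarithms avoids overflowing floating point numbers, since the counts themselves quickly exceed anything a float can represent.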

If I had to rank the beliefs by most to least suspect, this would be near the top of the "most suspect" section. We are already aware of many problems that are computable, but not efficiently. We can see complexity classes like NP or EXPTIME in many real world problems, such as chess, Go, traveling salesman, and so forth. We also have problems that we think aren’t NP-complete, but for which we haven’t found an algorithm in P yet, like graph isomorphism or integer factorization.

Most measures we can use for ranking people by their respective intelligence, e.g. math proofs, skill at competitive games, mental math, finding solutions to problems with tricky constraints, and so forth are examples of solving problems that we know are isomorphic to NP-hard or EXPTIME problems. So it seems to follow that a hypothetical algorithm (let's call it "general intelligence") that could solve all of the above in some optimal manner would be problematic for a digital computer.

When we write a program that can solve those types of problems, we have 2 options.

The first option is to try and solve it for some optimal solution. This is where the complexity classes bite us. We don't know how to do this for many real world problems in an efficient manner. If we did, then we'd have a proof for P=NP, and whoever came up with that proof could get a check for 1 million dollars from the Clay Institute.

The second option, and the one we actually use, is to use an algorithm that can find any solution, and then to terminate that algorithm once a solution is "good" enough. For some algorithms, called "approximation algorithms", we can even prove a bound on how close our "good" solution is to the optimal solution. This bound is a guarantee on "optimality" (formally, an approximation ratio). When we train neural networks, those neural networks learn heuristics that often find "good" solutions, but those random or probabilistic algorithms don't have guarantees on optimality. This last point is often confused by stating that it's possible to show a training process has resulted in an optimal configuration of a neural network. That is a subtle and different point. Saying that a neural network has the optimal configuration to decide A -> B is not the same as saying that B is the optimal solution for A.
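As a small illustration of the two options, here is a sketch using minimum vertex cover, a standard NP-hard problem that I've picked only as an example. The brute-force solver finds the true optimum but tries exponentially many subsets; the greedy matching heuristic runs in a single pass and is a textbook approximation algorithm whose answer is at most twice the optimal size. The function names and the sample graph are my own.

```python
from itertools import combinations


def approx_vertex_cover(edges):
    """Fast heuristic: take both endpoints of any edge that is not yet covered.

    This is the classic maximal-matching approximation; the returned cover
    is guaranteed to be at most 2x the size of an optimal cover.
    """
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover


def optimal_vertex_cover(edges):
    """Exact solver: try every vertex subset, smallest first.

    The number of subsets grows as 2 ** (number of vertices), which is
    where the exponential blowup shows up.
    """
    vertices = sorted({x for edge in edges for x in edge})
    for size in range(len(vertices) + 1):
        for subset in combinations(vertices, size):
            chosen = set(subset)
            if all(u in chosen or v in chosen for u, v in edges):
                return chosen
    return set(vertices)


def is_cover(edges, cover):
    """True if every edge has at least one endpoint in the cover."""
    return all(u in cover or v in cover for u, v in edges)


if __name__ == "__main__":
    # A simple path graph 0-1-2-3-4-5, chosen for illustration.
    path = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]
    fast = approx_vertex_cover(path)
    best = optimal_vertex_cover(path)
    print("heuristic cover size:", len(fast))
    print("optimal cover size:  ", len(best))
```

On this small graph both run instantly, but only the heuristic keeps doing so as the graph grows; the price is that its answer is "good", not optimal.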

This is basically arguing that the concept of “bounded rationality” is a necessary precondition for efficiently running the hypothetical algorithm of “general intelligence”. If true, all of the normal limits and weaknesses we see in human rationality, like biases, heuristics, or fallacies would be expected within any hypothetical AI/ML system that demonstrates “general intelligence” under reasonable time constraints.

How much this matters depends on how strongly you believe in the power of simulation-focused "general intelligence". For example, if you believe that the key distinguishing factor of an AI/ML system is that it will be able to simulate the universe and thus accurately predict outcomes with near-perfect probability, or do the same for protein folding such that the simulation of proposed protein is 100% accurate when that same protein is synthesized in real life, then you've implicitly subscribed to some form of this belief where the AI/ML system returns results quickly that are not just "good", but indeed "optimal".

We can look at AlphaFold -- a monumental advance in protein folding -- for an example of what this looks like in practice. I'll refer to the post[1] by Dr. AlQuraishi discussing the accuracy and limitations of AlphaFold. The important point is that while AlphaFold can predict proteins with high accuracy, it is not perfect. Sometimes the calculation of how a protein would fold is still wildly different from how it actually folds. This is exactly what we'd expect from fast, efficient, and reasonably good solvers that have no guarantee on optimality. I don't want this point to be misunderstood. AI/ML systems are the cause of huge leaps in our understanding and capabilities within various domains. But they have not replaced the need for actual experimentation.

We can argue that an AI/ML system doesn't need to approximate solutions, and it will simply perform the inefficient computations for optimality, because it has the hardware or compute resources to do so. This is fine. We can write optimal solvers for NP-hard problems. We can also calculate how long they will take to run on certain inputs, given certain hardware. We can ask how parallelizable the algorithm is by looking at Amdahl's law. What we'll discover if we do these calculations is that the computation of the optimal solution for these NP-hard or EXPTIME problems becomes non-feasible to compute with any realistic amount of compute power. By realistic amount, I mean “a solar system worth of material devoted solely to computing still cannot provide optimal solutions within the time-span of a human life even on distressingly small inputs for NP-hard problems”.
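For the parallelization point, Amdahl's law can be written down in a couple of lines. This is a minimal sketch; the 95% parallel fraction is an assumed figure for illustration, not a measurement of any real solver.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: overall speedup when only part of a job can be parallelized.

    parallel_fraction is the share of the original run time that can be
    spread across workers; the remaining share stays serial.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Assume 95% of the work parallelizes perfectly (an illustrative figure).
    for workers in (1, 10, 1_000, 1_000_000, 1_000_000_000):
        print(f"{workers:>13,} workers -> speedup {amdahl_speedup(0.95, workers):.2f}x")
```

With a 5% serial portion the speedup can never exceed 1 / 0.05 = 20x no matter how many compute units are added, which is why "just add more hardware" stops helping for algorithms that don't parallelize well.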

There is another argument that gets made for this belief, one where we replace the digital computer or Turing machine with a hypothetical quantum computer. I don't have a problem with this argument either, except to note that if we accept this rewording, many downstream assumptions about the AI/ML system's ability to scale in hardware go out of the window. The existence of the AWS cloud or billions of desktop home computers networked to the Internet is irrelevant if your AI/ML system is only efficient when run on a quantum computer. The counter-argument is that a Turing machine can simulate a quantum computer. Yes -- but can it do so efficiently? This is the whole reason for the "quantum supremacy" argument. If "quantum supremacy" is true, then a Turing machine, even if it can simulate a quantum computer, cannot do so efficiently. In that world, the AI/ML system is still bottlenecked to a real quantum computer platform. Furthermore, a quantum computer is not magically capable of solving any arbitrary problems faster than a classical computer. While there are some problems, such as integer factorization, where the best known quantum algorithm is far faster than the best known classical one, it is not believed that a quantum computer can solve arbitrary NP-hard problems efficiently.

Another motivating example we can look at here are things like weather prediction or circuit simulation. In both scenarios, we have a very good understanding of the physical laws that the systems obey. We also have very good simulations for these physical laws. The reason why it's computationally expensive for a digital computer to simulate these systems is because the real processes are continuous, while the simulated process uses some time delta (usually referred to as dT in code) between discrete steps of the simulation.

For complicated, physical processes, the algorithm that can simulate that process can be made arbitrarily close to the real world measurement of that process, but only by reducing dT. With each reduction in dT, the algorithm takes longer to run. If we want to avoid the increase in wall time, we need to find a way to break up the problem into some type of sub-problem that can be parallelized on independent compute units, and then reduce dT on those sub-problems. If we can't do that, because the problem has some tricky global behavior like the N-body problem alluded to above, we have to accept limitations on how accurate our results are for the given wall time we can afford.
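The dT tradeoff can be seen even in a toy system. Below is a minimal sketch that integrates a frictionless oscillator (x'' = -x) with the simplest explicit Euler scheme and compares the result against the exact solution cos(t). The choice of system, integrator, and end time are my own assumptions for illustration; production simulators use much better integrators, but they face the same kind of accuracy-versus-work tradeoff.

```python
import math


def simulate_oscillator(dt: float, t_end: float = 10.0):
    """Integrate x'' = -x from x=1, v=0 with explicit Euler steps of size dt.

    Returns (number_of_steps, absolute_error_in_final_position), where the
    error is measured against the exact solution x(t) = cos(t).
    """
    steps = round(t_end / dt)
    x, v = 1.0, 0.0
    for _ in range(steps):
        # Both updates use the values from the previous step.
        x, v = x + v * dt, v - x * dt
    error = abs(x - math.cos(steps * dt))
    return steps, error


if __name__ == "__main__":
    for dt in (0.1, 0.01, 0.001, 0.0001):
        steps, error = simulate_oscillator(dt)
        print(f"dT={dt:<7} steps={steps:>7} final-position error={error:.5f}")
```

Each tenfold reduction in dT costs ten times as many steps while shrinking the error by roughly the same factor, and because each step depends on the previous one, this particular loop cannot simply be split across more machines.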

In electrical engineering, this is the tradeoff that an electrical engineer makes when they're designing hardware. They can request quick & fast simulations for sanity checks during iterative development. The fast simulations might run at higher dT values, or they might use simplifying assumptions for circuit behavior. Then, prior to sending a board to be manufactured, they can run a much longer and detailed simulation. The longer solution might take hours or days to run. For this reason, the EE might only run the detailed simulation on the most critical part of a piece of hardware, and assume that the rest will work as predicted by other simulation or design work. This tradeoff means that there is a risk when the real hardware is manufactured that it does not work as intended, e.g. due to an unexpected RF effect.

For a concrete example of this tradeoff, Nvidia recently announced that they've been able to speed up a certain calculation that takes 3 hours with a traditional tool to only 3 seconds using an AI/ML system. The AI/ML system is 94% accurate. This is a huge win for day-to-day iterative work, but you'd still want to run the most accurate simulation you can (even if it takes hours or days!) before going to manufacturing, where the lead time on manufacturing might be weeks or months.

The final point I'd like to discuss is the class of arguments in which a superintelligent AI/ML system can predict far into the future and therefore exploit the actions of an arbitrary number of other actors.

I have referred to this point in discussion among friends as the "strong Psychohistory assumption". Psychohistory is a fictional science conceptualized in the novel Foundation by Isaac Asimov. The idea of psychohistory is that a sufficiently intelligent actor can predict the actions of others (tangent: in the context of the novels it only applies to "large groups") into the future at an accuracy that allows one to run circles around them in a competitive scenario. In Foundation, the fictional stakes play out over thousands of years. This problem, and others like it, are reducible to the same complexity concerns above. Unless "general intelligence" is able to be efficiently simulated, there's no reason to expect a superintelligent AI/ML system to be able to make perfect predictions into the future. Without this assumed trait, many takeoff strategies for intelligent AI/ML systems are not sound. They rely, implicitly or explicitly, on the intelligent AI/ML system being able to control individual humans and the society around them like a puppeteer and their puppets.

The conclusion that I'd like you to consider is that AI/ML systems will produce astonishingly efficient, reasonably good solutions to arbitrary tasks -- but at no point will that remove the need for actual, iterative experimentation in the real world, unless one accepts an expensive trade between the wall time required for simulation and the accuracy of that simulation.

Or, to try and phrase this another way, an AI/ML system that is 99% accurate at throwing a twenty-sided die to achieve a desired result can still roll a critical failure.

Replies from: Luke A Somers, MSRayne, nikita-sokolsky↑ comment by Luke A Somers · 2022-06-14T13:08:22.683Z · LW(p) · GW(p)

It seems like you're relying on the existence of exponentially hard problems to mean that taking over the world is going to BE an exponentially hard problem. But you don't need to solve every problem. You just need to take over the world.

Like, okay, the three body problem is 'incomputable' in the sense that it has chaotically sensitive dependence on initial conditions in many cases. So… don't rely on specific behavior in those cases on long time horizons without the ability to do small adjustments to keep things on track.
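A small sketch of what "chaotically sensitive dependence on initial conditions" looks like numerically. This swaps in the logistic map (with r = 4) as a stand-in for the three-body problem, purely because it fits in a few lines; the function name `logistic_orbit` and the chosen starting values are assumptions for illustration.

```python
def logistic_orbit(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), returning the whole orbit."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

# Two starting points that differ by one part in ten billion.
a = logistic_orbit(0.2, 100)
b = logistic_orbit(0.2 + 1e-10, 100)
gaps = [abs(x - y) for x, y in zip(a, b)]

print("gap after 5 steps:", gaps[5])
print("largest gap within 100 steps:", max(gaps))
```

Over short horizons the two orbits are practically indistinguishable; over long horizons they bear no resemblance to each other. That is the regime where a plan needs small corrective adjustments rather than a single long-range prediction.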

If the AI can detect most of the hard cases and avoid relying on them, and include robustness by having multiple alternate mechanisms and backup plans, even just 94% success on arbitrary problems could translate into better than that on an overall solution.
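The arithmetic behind this can be sketched as follows, under a strong assumption that is not in the original comment: every attempt succeeds or fails independently with the same probability. The function names are hypothetical.

```python
def chain_success(p, n_steps):
    """Probability that n_steps sequential steps ALL succeed (independence assumed)."""
    return p ** n_steps

def redundant_success(p, n_backups):
    """Probability that AT LEAST ONE of n_backups alternatives succeeds (independence assumed)."""
    return 1.0 - (1.0 - p) ** n_backups

p = 0.94
print("10 steps in a row, no backups:", chain_success(p, 10))
print("one goal, 2 independent mechanisms:", redundant_success(p, 2))
print("one goal, 3 independent mechanisms:", redundant_success(p, 3))
```

Redundancy pushes the per-goal success rate well above 94%, while long chains of must-succeed steps pull the overall rate well below it. Which effect dominates depends on how many steps the plan has and on whether failures really are independent, which is exactly what the two sides here disagree about.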

Replies from: yair-halberstadt↑ comment by Yair Halberstadt (yair-halberstadt) · 2022-06-14T14:24:32.861Z · LW(p) · GW(p)

This was specifically responding to the claim that an AI could solve problems without trial and error by perfectly simulating them, which I think it does a pretty reasonable job of shooting down.

↑ comment by nsokolsky (nikita-sokolsky) · 2022-06-14T15:23:47.511Z · LW(p) · GW(p)

You should make a separate post on "Can AGI just simulate the physical world?". Will make it easier to find and reference in the future.

Replies from: anonymousaisafety↑ comment by anonymousaisafety · 2022-06-14T22:37:21.464Z · LW(p) · GW(p)

That was extracted from a much larger work [LW(p) · GW(p)] I've been writing for the past 2 months. The above is less than ~10% of what I've written on the topic, and it goes much further than simulation problems. I am also trying to correct misunderstandings around distributed computation, hardware vs software inefficiency, improvements in performance from algorithmic gains, this community's accepted definition for "intelligence", the necessity or inevitability of self-improving systems, etc.

I'll post it when done but in the meantime I'm just tossing various bits & pieces of it into debate wherever I see an opening to do so.

↑ comment by LGS · 2022-06-14T09:36:44.897Z · LW(p) · GW(p)

Proteins and other chemical interactions are governed by quantum mechanics, so the AGI would probably need a quantum computer to do a faithful simulation. And that's for a single, local interaction of chemicals; for a larger system, there are too many particles to simulate, so some systems will be as unpredictable as the weather in 3 weeks.

Replies from: Luke A Somers, adrian-arellano-davin↑ comment by Luke A Somers · 2022-06-14T12:58:56.575Z · LW(p) · GW(p)

The distribution of outcomes is much more achievable and much more useful than determining the one true way some specific thing will evolve. Like, it's actually in-principle achievable, unlike making a specific pointlike prediction of where a molecular ensemble is going to be given a starting configuration (QM dependency? Not merely a matter of chaos). And it's actually useful, in that it shows which configurations have tightly distributed outcomes and which don't, unlike that specific pointlike prediction.

Replies from: LGS↑ comment by LGS · 2022-06-14T21:39:37.777Z · LW(p) · GW(p)

What does "the distribution of outcomes" mean? I feel like you're just not understanding the issue.

The interaction of chemical A with chemical B might always lead to chemical C; the distribution might be a fixed point there. Yet you may need a quantum computer to tell you what chemical C is. If you just go "well I don't know what chemical it's gonna be, but I have a Bayesian probability distribution over all possible chemicals, so everything is fine", then you are in fact simulating the world extremely poorly. So poorly, in fact, that it's highly unlikely you'll be able to design complex machines. You cannot build a machine out of building blocks you don't understand.

Maybe the problem is that you don't understand the computational complexity of quantum effects? Using a classical computer, it is not possible to efficiently calculate the "distribution of outcomes" of a quantum process. (Not the true distribution, anyway; you could always make up a different distribution and call it your Bayesian belief, but this borders on the tautological.)

↑ comment by mukashi (adrian-arellano-davin) · 2022-06-14T12:15:49.147Z · LW(p) · GW(p)

Not an expert at all here, so please correct me if I am wrong, but I think that quantum systems are routinely simulated with non-quantum computers. Nothing to argue against the second part.

Replies from: jacopo, LGS, Measure↑ comment by jacopo · 2022-06-14T17:16:39.181Z · LW(p) · GW(p)

You are correct (QM-based simulation of materials is what I do). The caveat is that exact simulations are so slow as to be impossible in practice; I think that would not be the case with quantum computing. Fortunately, we have different levels of approximation for different purposes that work quite well. And you can use QM results to fit faster atomistic potentials.

↑ comment by LGS · 2022-06-14T21:33:29.253Z · LW(p) · GW(p)

You are wrong in the general case -- quantum systems cannot be, and are not, routinely simulated with non-quantum computers.

Of course, since all of the world is quantum, you are right that many systems can be simulated classically (e.g. classical computers are technically "quantum" because the entire world is technically quantum). But on the nano level, the quantum effects do tend to dominate.

IIRC some well-known examples where we don't know how to simulate anything (due to quantum effects) are the search for a better catalyst in nitrogen fixation and the search for room-temperature superconductors. For both of these, humanity has basically gone "welp, these are quantum effects, I guess we're just trying random chemicals now". I think that's also the basic story for the design of efficient photovoltaic cells.

↑ comment by Measure · 2022-06-14T14:16:23.560Z · LW(p) · GW(p)

Simulating a quantum computer on a classical one does indeed require a phenomenal amount of resources when no approximations are made and noise is not considered. Exact algorithms for simulating quantum computers require time or memory that grows exponentially with the number of qubits or other physical resources. Here, we discuss a class of algorithms that has attracted little attention in the context of quantum-computing simulations. These algorithms use quantum state compression and are exponentially faster than their exact counterparts. In return, they possess a finite fidelity (or finite compression rate, similar to that in image compression), very much as in a real quantum computer.

Replies from: LGS↑ comment by LGS · 2022-06-14T21:58:01.025Z · LW(p) · GW(p)

This paper is about simulating current (very weak, very noisy) quantum computers using (large, powerful) classical computers. It arguably improves the state of the art for this task.

Virtually no expert believes you can efficiently simulate actual quantum systems (even approximately) using a classical computer. There are some billion-dollar bounties on this (e.g. if you could simulate any quantum system of your choice, you could run Shor's algorithm, break RSA, break the signature scheme of bitcoin, and steal arbitrarily many bitcoins).

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2022-08-26T01:29:27.534Z · LW(p) · GW(p)

Simulating a nanobot is a lower bar than simulating a quantum computer. Also a big unsolved problem like P=NP might be easy to an ASI.

Replies from: LGS↑ comment by LGS · 2022-08-26T21:36:52.610Z · LW(p) · GW(p)

It remains to be seen whether it's easier. It could also be harder (the nanobot interacts with a chaotic environment which is hard to predict).

"Also a big unsolved problem like P=NP might be easy to an ASI."

I don't understand what this means. The ASI may indeed be good at proving that P does not equal NP, in which case it has successfully proven that it itself cannot do certain tasks (the NP-complete ones). Similarly, if the ASI is really good at complexity theory, it could prove that BQP is not equal to BPP, at which point it has proven that it itself cannot simulate quantum computation on a classical computer. But that still does not let it simulate quantum computation on a classical computer!

↑ comment by Donald Hobson (donald-hobson) · 2022-08-27T02:13:17.199Z · LW(p) · GW(p)

The reason for the exponential term is that a quantum computer uses a superposition of exponentially many states. A well functioning nanomachine doesn't need to be in a superposition of exponentially many states.
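For a sense of scale on that exponential term, here is a rough sketch of the memory needed to store a full state vector exactly. It assumes the straightforward dense representation (one complex amplitude per basis state, 16 bytes each as in a double-precision complex number); the function name `statevector_bytes` is hypothetical, and compressed or approximate methods are deliberately not modeled.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Bytes needed to store all 2**n_qubits amplitudes of a general n-qubit state.

    Assumes a dense, uncompressed representation with 16-byte complex amplitudes.
    """
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (10, 30, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.6f} GiB")
```

Every added qubit doubles the requirement. A system that only ever occupies a small, structured part of that space does not need the dense representation, which is the distinction being drawn here between a quantum computer and a well-behaved nanomachine.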

For that matter, the AI can make its first nanomachines using designs that are easy to reason about. This is a big hole in any complexity theory based argument. Complexity theory only applies in the worst case. The AI can actively optimize its designs to be easy to simulate.

It's possible the AI shows P!=NP, but also possible the AI shows P=NP, and finds a fast algorithm. Maybe the AI realizes that BQP=BPP.

Replies from: LGS↑ comment by LGS · 2022-08-30T09:31:15.408Z · LW(p) · GW(p)

Maybe the AI can make its first nanomachines easy to reason about... but maybe not. We humans cannot predict the outcome of even relatively simple chemical interactions (resorting to the lab to see what happens). That's because these chemical interactions are governed by the laws of quantum mechanics, and yes, they involve superpositions of a large number of states.

"Its possible the AI shows P!=NP, but also possible the AI shows P=NP, and finds a fast algorithm. Maybe the AI realizes that BQP=BPP."

It's also possible the AI finds a way to break the second law of thermodynamics and to travel faster than light, if we're just gonna make things up. (I have more confidence in P!=NP than in just about any physical law.) If we only have to fear an AI in a world where P=NP, then I'm personally not afraid.

Replies from: donald-hobson↑ comment by Donald Hobson (donald-hobson) · 2022-08-30T11:41:46.751Z · LW(p) · GW(p)

Not sure why you are quite so confident P!=NP. But that doesn't really matter.

Consider bond strength. Let's say the energy taken to break a C-C bond varies based on all sorts of complicated considerations involving the surrounding chemical structure. An AI designing a nanomachine can just apply 10% more energy than needed.

A quantum computer doesn't just have a superposition of many states; it's a superposition carefully chosen such that all the pieces destructively and constructively interfere in exactly the right way. Not that the AI needs exact predictions anyway.

Also, the AI can cheat. As well as fundamental physics, it has access to a huge dataset of experiments conducted by humans. It doesn't need to deduce everything from QED, it can deduce things from random chemistry papers too.

comment by matute8 · 2022-06-14T17:55:24.139Z · LW(p) · GW(p)