Comments

I see that reading comprehension was an issue for you, since it seems that you stopped reading my post halfway through. Funny how a similar thing occurred on my last post too. It's almost like you think that the rules don't apply to you, since everyone else is required to read every single word in your posts with meticulous accuracy, whereas you're free to pick & choose at your whim.

i.e. splitting hairs and swirling words around to create a perpetual motte-and-bailey fog that lets him endlessly nitpick and retreat and say contradictory things at different times using the same words, and pretending to a sort of principle/coherence/consistency that he does not actually evince.

Yeah, almost like splitting hairs around whether making the public statement "I now categorize Said as a liar" is meaningfully different than "Said is a liar".

Or admonishing someone for taking a potshot at you when they said

However, I suspect that Duncan won't like this idea, because he wants to maintain a motte-and-bailey where his posts are half-baked when someone criticizes them but fully-baked when it's time to apportion status.

...while acting as though somehow that would have been less offensive if they had only added "I suspect" to the latter half of that sentence as well. Raise your hand if you think that "I suspect that you won't like this idea, because I suspect that you have the emotional maturity of a child" is less offensive because it now represents an unambiguously true statement of an opinion rather than being misconstrued as a fact. A reasonable person would say "No, that's obviously intended to be an insult" -- almost as though there can be meaning beyond just the words as written.

The problem is that if we believe in your philosophy of constantly looking for the utmost literal interpretation of the written word, you're tricking us into playing a meta-gamed, rules-lawyered, "Sovereign citizen"-esque debate instead of, what's the word -- oh, right, Steelmanning. Assuming charity from the other side. Seeking to find common ground.

For example, I can point out that Said clearly used the word "or" in their statement. Since reading comprehension seems to be an issue for a "median high-karma LWer" like yourself, I'll bold it for you.

Said: Well, I think that “criticism”, in a context like this topic of discussion, certainly includes something like “pointing to a flaw or lacuna, or suggesting an important or even necessary avenue for improvement”.

Is it therefore consistent for "asking for examples" to be contained by that set, while likewise not being pointing to a flaw? Yes, because if we say that a thing is contained by a set of "A or B", it could be "A", or it could be "B".

Now that we've done your useless exercise of playing with words, what have we achieved? Absolutely nothing, which is why games like these aren't tolerated in real workplaces, since this is a waste of everyone's time.

You are behaving in a seriously insufferable way right now.

Sorry, I meant -- "I think that you are behaving in what feels to me like a seriously insufferable way right now, where by insufferable I mean having or showing unbearable arrogance or conceit".

Yes, I have read your posts.

I note that in none of them did you take any part of the responsibility for escalating the disagreement to its current level of toxicity.

You have instead pointed out Said's actions, and Said's behavior, and the moderators' lack of action, and how people "skim social points off the top", etc.

@Duncan_Sabien I didn't actually upvote @clone of saturn's post, but when I read it, I found myself agreeing with it.

I've read a lot of your posts over the past few days because of this disagreement. My most charitable description of what I've read would be "spirited" and "passionate".

You strongly believe in a particular set of norms and want to teach everyone else. You welcome the feedback from your peers and excitedly embrace it, insofar as the dot product between a high-dimensional vector describing your norms and a similar vector describing the criticism is positive.

However, I've noticed that when someone actually disagrees with you -- and I mean disagreement in the sense of "I believe that this claim rests on incorrect priors and is therefore false." -- I have been shocked by the level of animosity you've shown in your writing.

Full disclosure: I originally messaged the moderators in private about your behavior, but I'm now writing this in public, in part because of your continued statements on this thread that you've done nothing wrong.

I think that your responses over the past few days have been needlessly escalatory in a way that Said's weren't. If we go with the Socrates metaphor, Said is sitting there asking "why" over and over, but you've let emotions rule and leapt for violence (metaphorically, although you did then publish a post about killing Socrates, so YMMV).

There will always be people who don't communicate in a way that you'd prefer. It's important (for a strong, functioning team) to handle that gracefully. It looks to me that you've become so self-convinced that your communication style is "correct" that you've taken a war path towards the people who won't accept it -- Zack and Said.

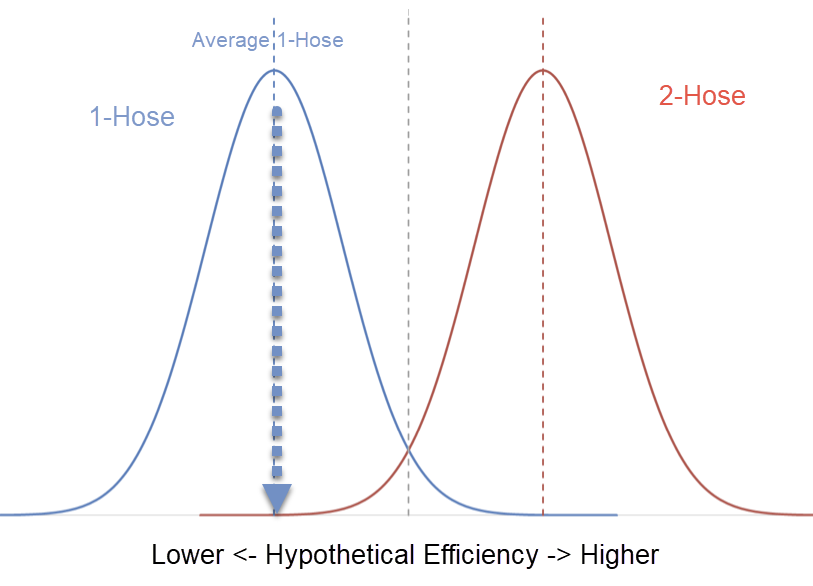

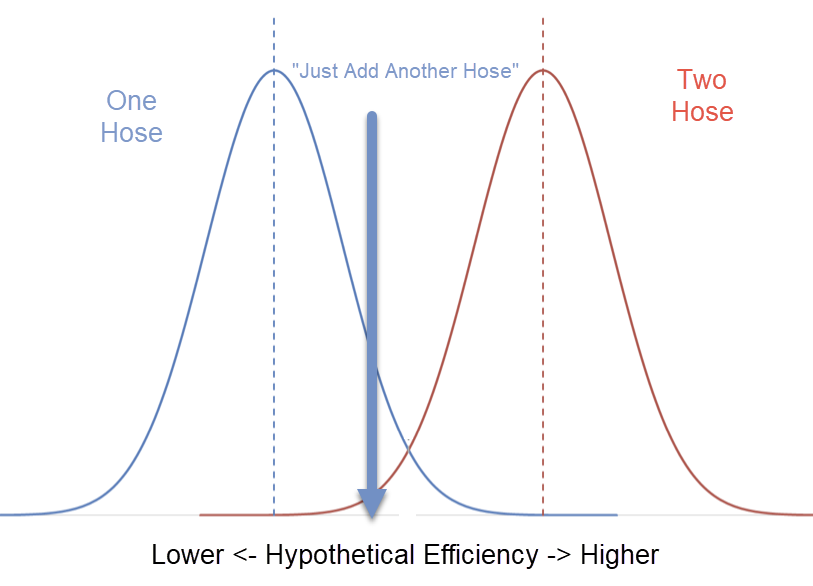

In a company, this is problematic because some of the things that you're asking for are actually not possible for certain employees. Employees who have English as a second language, or who come from a different culture, or who may have autism, all might struggle with your requirements. As a concrete example, you wrote at length that saying "This is insane" is inflammatory in a way that "I think that this is insane" wouldn't be -- while I understand and appreciate the subtlety of that distinction, I also know that many people will view the difference between those statements as meaningless filler at best. I wrote some thoughts on that here: https://www.lesswrong.com/posts/9vjEavucqFnfSEvqk/on-aiming-for-convergence-on-truth?commentId=rGaKpCSkK6QnYBtD4

I believe that you are shutting down debates prematurely by casting your peers as antagonistic towards you. In a corporate setting, as an engineer acquires more and more seniority, it becomes increasingly important for them to manage their emotions, because they're a role model for junior engineers.

I do think that @Said Achmiz can improve their behavior too. In particular, I think Said could recognize that sometimes their posts are met with hostility, and rather than debating this particular point, they could gracefully disengage from a specific conversation when they determine that someone does not appreciate their contributions.

However, I worry that you, Duncan, are setting an increasingly poor example. I don't know that I agree with the ability to ban users from posts. I think I lean more towards "ability to hide any posts from a user" as a feature, more than "prevent users from commenting". That is to say, I think if you're triggered by Said or Zack, then the site should offer you tools to hide those posts automatically. But I don't think that you should be able to prevent Said or Zack from commenting on your posts, or prevent other commentators from seeing that criticism. In part, I agree strongly (and upvoted strongly) with @Wei_Dai's point elsewhere in this thread that blocking posters means we can't tell the difference between "no one criticized this" and "people who would criticize it couldn't", unless they write their own post, as @Zack_M_Davis did.

Sometimes when you work at a large tech-focused company, you'll be pulled into a required-but-boring all-day HR meeting to discuss some asinine topic like "communication styles".

If you've had the ~~misfortune~~ fun of attending one of those meetings, you might remember that the topic wasn't about teaching a hypothetically "best" or "optimal" communication style. The goal was to teach employees how to recognize when you're speaking to someone with a different communication style, and then how to tailor your understanding of what they're saying with respect to them. For example, some people are more straightforward than others, so a piece of seemingly harsh criticism like "This won't work for XYZ reason." doesn't mean that they disrespect you -- they're just not the type of person who would phrase that feedback as "I think that maybe we've neglected to consider the impact of XYZ on the design."

I have read the many pages of debate on this current disagreement over the past few days. I have followed the many examples of linked posts that were intended to show bad behavior by one side or the other.

I think Zack and gjm have done a good job of communicating with each other despite differences in their preferred communication styles, and in particular, I agree strongly with gjm's analysis:

I think this is the purpose of Duncan's proposed guideline 5. Don't engage in that sort of adversarial behaviour where you want to win while the other party loses; aim at truth in a way that, if you are both aiming at truth, will get you both there. And don't assume that the other party is being adversarial, unless you have to, because if you assume that then you'll almost certainly start doing the same yourself; starting out with a presumption of good faith will make actual good faith more likely.

And then with Zack's opinion:

That said, I don't think there's a unique solution for what the "right" norms are. Different rules might work better for different personality types, and run different risks of different failure modes (like nonsense aggressive status-fighting vs. nonsense passive-aggressive rules-lawyering). Compared to some people, I suppose I tend to be relatively comfortable with spaces where the rules err more on the side of "Punch, but be prepared to take a punch" rather than "Don't punch anyone"—but I realize that that's a fact about me, not a fact about the hidden Bayesian structure of reality. That's why, in "'Rationalist Discourse' Is Like 'Physicist Motors'", I made an analogy between discourse norms and motors or martial arts—there are principles governing what can work, but there's not going to be a unique motor, a one "correct" martial art.

I also agree with Zack when they said:

I'm unhappy with the absence of an audience-focused analogue of TEACH. In the following, I'll use TEACH to refer to making someone believe X if X is right; whether the learner is the audience or the interlocutor B isn't relevant to what I'm saying.

I seldom write comments with the intent of teaching a single person. My target audience is whoever is reading the posts, which is overwhelmingly going to be more than one person.

From Duncan, I agree with the following:

It is in fact usually the case that, when two people disagree, each one possesses some scrap of map that the other lacks; it's relatively rare that one person is just right about everything and thoroughly understands and can conclusively dismiss all of the other person's confusions or hesitations. If you are trying to see and understand what's actually true, you should generally be hungry for those scraps of map that other people possess, and interested in seeing, understanding, and copying over those bits which you were missing.

Almost all of my comments tend to focus on a specific disagreement that I have with the broader community. That disagreement is due to some prior that I hold, that is not commonly held here.

And from Said, I agree with this:

Examples?

This community is especially prone to large, overly-wordy armchair philosophy about this-or-that with almost no substantial evidence that can tie the philosophy back down to Earth. Sometimes that philosophy gets camouflaged in a layer of pseudo-math; equations, lemmas, writing as if the post is demonstrating a concrete mathematical proof. To that end, focusing the community on providing examples is a valuable, useful piece of constructive feedback. I strongly disagree that this is an unfair burden on authors.

EDIT: I forgot to write an actual conclusion. Maybe "don't expect everyone to communicate in the same way, even if we assume that all interested parties care about the truth"?

It seems to me that humans are more coherent and consequentialist than other animals. Humans are not perfectly coherent, but the direction is towards more coherence.

This isn't a universally held view. Someone wrote a fairly compelling argument against it here: https://sohl-dickstein.github.io/2023/03/09/coherence.html

We don't do any of these things for diffusion models that output images, and yet these diffusion models manage to be much smaller than models that output words, while maintaining an even higher level of output quality. What is it about words that makes the task different?

I'm not sure that "even higher level of output quality" is actually true, but I recognize that it can be difficult to judge when an image generation model has succeeded. In particular, I think current image models are fairly bad at specifics in much the same way as early language models.

But I think the real problem is that we seem to still be stuck on "words". When I ask GPT-4 a logic question, and it produces a grammatically correct sentence that answers the logic puzzle correctly, only part of that is related to "words" -- the other part is a nebulous blob of reasoning.

I went all the way back to GPT-1 (117 million parameters) and tested next word prediction -- specifically, I gave it a bunch of prompts, and I looked only at whether the very next word was what I would have expected. I think it's incredibly good at that! Probably better than most humans.

Or are you suggesting that image generators could also be greatly improved by training minimal models, and then embedding those models within larger networks?

No, because this is already how image generators work. That's what I said in my first post when I noted the architectural differences between image generators and language models. An image generator, as a system, consists of multiple models. There is a text -> image space, and then an image space -> image. The text -> image space encoder is generally trained first, then it's normally frozen during the training of the image decoder.[1] Meanwhile, the image decoder is trained on a straightforward task: "given this image, predict the noise that was added". In the actual system, that decoder is put into a loop to generate the final result. I'm requoting the relevant section of my first post below:

The reason why I'm discussing the network in the language of instructions, stack space, and loops is because I disagree with a blanket statement like "scale is all you need". I think it's obvious that scaling the neural network is a patch on the first two constraints, and scaling the training data is a patch on the third constraint.

This is also why I think that point #3 is relevant. If GPT-3 does so well because it's using the sea of parameters for unrolled loops, then something like Stable Diffusion at 1/200th the size probably makes sense.

[1] Refer to figure 2 in https://cdn.openai.com/papers/dall-e-2.pdf. Or read this:

The trick here is that they decoupled the encoding from training the diffusion model. That way, the autoencoder can be trained to get the best image representation and then downstream several diffusion models can be trained on the so-called latent representation

This is the idea that I'm saying could be applied to language models, or rather, to a thing that we want to demonstrate "general intelligence" in the form of reasoning / problem solving / Q&A / planning / etc. First train a LLM, then train a larger system with the LLM as a component within it.

Yes, it's my understanding that OpenAI did this for GPT-4. It's discussed in the system card PDF. They used early versions of GPT-4 to generate synthetic test data and also as an evaluator of GPT-4 responses.

First, when we say "language model" and then we talk about the capabilities of that model for "standard question answering and factual recall tasks", I worry that we've accidentally moved the goal posts on what a "language model" is.

Originally, a language model was a stochastic parrot. They were developed to answer questions like "given these words, what comes next?" or "given this sentence, with this unreadable word, what is the most likely candidate?" or "what are the most common words?"[1] It was not a problem that required deep learning.

Then, we applied deep learning to it, because the path of history so far has been to take straightforward algorithms, replace them with a neural network, and see what happens. From that, we got ... stochastic parrots! Randomizing the data makes perfect sense for that.

Then, we scaled it. And we scaled it more. And we scaled it more.

And now we've arrived at a thing we keep calling a "language model" due to history, but it isn't a stochastic parrot anymore.

Second, I'm not saying "don't randomize data", I'm saying "use a tiered approach to training". We would use all of the same techniques: randomization, masking, adversarial splits, etc. What we would not do is throw all of our data and all of our parameters into a single, monolithic model and expect that would be efficient.[2] Instead, we'd first train a "minimal" LLM, then we'd use that LLM as a component within a larger NN, and we'd train that combined system (LLM + NN) on all of the test cases we care about for abstract reasoning / problem solving / planning / etc. It's that combined system that I think would end up being vastly more efficient than current language models, because I suspect the majority of language model parameters are being used for embedding trivia that doesn't contribute to the core capabilities we recognize as "general intelligence".
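A minimal sketch of the tiered idea, not a real training setup. I'm assuming, for illustration only, that the first-stage component is frozen once trained (as with the image encoders), and that the "larger system" is just three outer weights fit by gradient descent. `frozen_encoder` and `train_head` are hypothetical names.

```python
def frozen_encoder(x):
    """Stand-in for the pretrained component; nothing below ever updates it."""
    return [1.0, x, x * x]

def train_head(data, steps=2000, lr=0.1):
    """Fit only the outer weights by full-batch gradient descent on squared error."""
    weights = [0.0, 0.0, 0.0]
    n = len(data)
    for _ in range(steps):
        grads = [0.0, 0.0, 0.0]
        for x, y in data:
            feats = frozen_encoder(x)
            error = sum(w * f for w, f in zip(weights, feats)) - y
            for i, f in enumerate(feats):
                grads[i] += 2 * error * f / n
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights

# Toy "reasoning" task: the target is expressible on top of the frozen features.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
data = [(x, 1 + 2 * x + 3 * x * x) for x in xs]
weights = train_head(data)
```

The only point of the toy is the division of labour: the second stage spends its parameters on the task, and none on relearning what the first stage already provides.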

[1] This wasn't for auto-complete, it was generally for things like automatic text transcription from images, audio, or videos. Spam detection was another use-case.

[2] Recall that I'm trying to offer a hypothesis for why a system like GPT-3.5 takes so much training and has so many parameters and it still isn't "competent" in all of the ways that a human is competent. I think "it is being trained in an inefficient way" is a reasonable answer to that question.

I suspect it is a combination of #3 and #5.

Regarding #5 first, I personally think that language models are being trained wrong. We'll get OoM improvements when we stop randomizing the examples we show to models during training, and instead provide examples in a structured curriculum.

This isn't a new thought, e.g. https://arxiv.org/abs/2101.10382

To be clear, I'm not saying that we must present easy examples first and then harder examples later. While that is what has been studied in the literature, I think we'd actually get better behavior by trying to order examples on a spectrum of "generalizes well" to "very specific, does not generalize" and then training in that order. Sometimes this might be equivalent to "easy examples first", but that isn't necessarily true.
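As a minimal sketch of that ordering (not a claim about how any real training pipeline works): assume each example already carries a hypothetical `generality` score in [0, 1]. How to compute that score is exactly the nebulous part, so it is simply taken as given here. `curriculum_batches` and the example data are made up for illustration.

```python
from typing import Iterator, List, Tuple

Example = Tuple[str, float]  # (text, hypothetical generality score in [0, 1])

def curriculum_batches(examples: List[Example], batch_size: int) -> Iterator[List[str]]:
    """Yield batches ordered from most general to most specific."""
    ordered = sorted(examples, key=lambda ex: ex[1], reverse=True)
    for start in range(0, len(ordered), batch_size):
        yield [text for text, _ in ordered[start:start + batch_size]]

examples = [
    ("The capital of Elbonia is ...", 0.1),       # trivia: barely generalizes
    ("If A implies B and A holds, then B", 0.9),  # broadly reusable rule
    ("2 + 3 = 5", 0.7),
    ("Bob's phone number is ...", 0.0),
]
batches = list(curriculum_batches(examples, batch_size=2))
```

Note that nothing here sorts by difficulty; an example can be hard and still score high on generality.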

I recognize that the definitions of "easy" and "generalizes" are nebulous, so I'm going to try and explain the reasoning that led me here.

Consider the architecture of transformers and feed-forward neural networks (specifically not recurrent neural networks). We're given some input, and we produce some output. In a model like GPT, we're auto-regressive, so as we produce our outputs, those outputs become part of the input during the next step. Each step is fundamentally a function from the text so far to the next token.

Given some input, the total output can be thought of as:

```python
def reply_to(input):
    output = ""
    while True:
        # Each new token is predicted from the prompt plus everything generated so far.
        token = predict_next(input + output)
        if token == STOP:
            break
        output += token
    return output
```

We'd like to know exactly what `predict_next` is doing, but unfortunately, the programmer who wrote it seems to have done their implementation entirely in matrix math and they didn't include any comments. In other words, it's deeply cursed and not terribly different from the output of Simulink's code generator.

```python
def predict_next(input):
    # ... matrix math
    return output
```
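To make the loop concrete, here is a runnable toy version. The lookup table standing in for `predict_next` is purely an assumption for illustration; a real model computes the next token with matrix math, not a dictionary. The `max_tokens` bound is also my addition.

```python
STOP = "<stop>"

# Hypothetical stand-in for the network: maps the last word seen to the next token.
TOY_TABLE = {
    "hello": " there",
    "there": " friend",
    "friend": STOP,
}

def predict_next(text: str) -> str:
    """Return the next token given everything generated so far."""
    words = text.split()
    last_word = words[-1] if words else ""
    return TOY_TABLE.get(last_word, STOP)

def reply_to(prompt: str, max_tokens: int = 50) -> str:
    """Auto-regressive loop: each output token becomes part of the next input."""
    output = ""
    for _ in range(max_tokens):  # bounded, so a bad table cannot loop forever
        token = predict_next(prompt + output)
        if token == STOP:
            break
        output += token
    return output

reply = reply_to("hello")
```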

Let's try to think about the capabilities and constraints on this function.

- There is no unbounded `loop` construct. The best we can do is approximate loops, e.g. by supporting an unrolled loop up to some bounded number of iterations. What determines the bounds? Probably the depth of the network?

- If the programmer were sufficiently deranged, they could implement `predict_next` in such a way that if they've hit the bottom of their unrolled loop, they could rely on the fact that `predict_next` will be called again, and continue their previous calculations during the next call. What would be the limitations on this? Probably the size of each hidden layer. If you wanted to figure out if this is happening, you'd want to look for prompts where the network can answer the prompt correctly if it is allowed to generate text before the answer (e.g. step-by-step explanations) but is unable to do so if asked to provide the answer without any associated explanations.

- How many total "instructions" can fit into this function? The size of the network seems like a decent guess. Unfortunately, the network conflates instructions and data, and the network must use all parameters available to it. This leads to trivial solutions where the network just over-fits to the data (analogous to baking in a lookup table on the stack). It's not surprising that throwing OoM more data at a fixed-size NN results in better generalization. Once you're unable to cheat with over-fitting you must learn algorithms that work more efficiently.
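A sketch of the first two constraints, under the assumption (mine, for illustration) that one call can perform at most `DEPTH` sequential steps. `sum_one_call` gives up when the work exceeds that bound, while `sum_with_scratchpad` carries its partial state from one call to the next, which is the role the generated text plays in the step-by-step case.

```python
DEPTH = 4  # hypothetical number of sequential steps available in one call

def sum_one_call(numbers):
    """Unrolled loop: at most DEPTH additions fit in a single call."""
    if len(numbers) > DEPTH:
        return None  # not enough depth to finish in one pass
    total = 0
    for n in numbers:
        total += n
    return total

def sum_step(numbers, state):
    """One call: resume from (index, partial_total) and advance up to DEPTH steps."""
    index, total = state
    for n in numbers[index:index + DEPTH]:
        total += n
        index += 1
    return index, total

def sum_with_scratchpad(numbers):
    """Repeated calls, with the state handed from each call to the next."""
    state = (0, 0)
    calls = 0
    while state[0] < len(numbers):
        state = sum_step(numbers, state)
        calls += 1
    return state[1], calls

numbers = list(range(1, 11))  # 1..10
direct = sum_one_call(numbers)
total, calls = sum_with_scratchpad(numbers)
```

The same function answers correctly when it is allowed intermediate output and fails when asked for the answer in one shot, which is the signature to look for in prompts.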

The reason why I'm discussing the network in the language of instructions, stack space, and loops is because I disagree with a blanket statement like "scale is all you need". I think it's obvious that scaling the neural network is a patch on the first two constraints, and scaling the training data is a patch on the third constraint.

This is also why I think that point #3 is relevant. If GPT-3 does so well because it's using the sea of parameters for unrolled loops, then something like Stable Diffusion at 1/200th the size probably makes sense.

To tie this back to point #5:

- We start with a giant corpus of data. On the order of "all written content available in digital form". We might generate additional data in an automated fashion, or digitize books, or caption videos.

- We divide it into training data and test data.

- We train the network on random examples from the training data, and then verify on random examples from the test data. For simplicity, I'm glossing over various training techniques like masking data or connections between nodes.

- Then we fine-tune it, e.g. with Q&A examples.

- And then generally we deploy it with some prompt engineering, e.g. prefixing queries with past transcript history, to fake a conversation.
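Two of the steps above are mechanical enough to sketch: the random train/test split and the transcript prefixing at deployment. The function names, the 10% test fraction, and the `User:` / `Assistant:` labels are all assumptions for illustration, not a description of any particular system.

```python
import random

def split_corpus(documents, test_fraction=0.1, seed=0):
    """Shuffle the documents and split them into (train, test)."""
    rng = random.Random(seed)
    shuffled = documents[:]
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def build_prompt(history, query):
    """Prefix the new query with the transcript so far to fake a conversation."""
    lines = [f"{speaker}: {text}" for speaker, text in history]
    lines.append(f"User: {query}")
    lines.append("Assistant:")
    return "\n".join(lines)

docs = [f"document {i}" for i in range(20)]
train, test = split_corpus(docs)
prompt = build_prompt([("User", "Hi"), ("Assistant", "Hello!")], "What is 2 + 2?")
```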

At the end of this process, what do we have?

I want to emphasize that I do not think it is a "stochastic parrot". I think it is very obvious that the final system has internalized actual algorithms (or at least, pseudo-algorithms due to the limitation on loops) for various tasks, given the fact that the size of the data set is significantly larger than the size of the model. I think people who are surprised by the capabilities of these systems continue to assume it is "just" modeling likelihoods, when there was no actual requirement on that.

I also suspect we've wasted an enormous quantity of our parameters on embedding knowledge that does not directly contribute to the system's capabilities.

My hypothesis for how to fix this is vaguely similar to the idea of "maximizing divergence" discussed here https://ljvmiranda921.github.io/notebook/2022/08/02/splits/.

I think we could train a LLM on a minimal corpus to "teach" a language[1] and then place that LLM inside of a larger system that we train to minimize loss on examples teaching logic, mathematics, and other components of reasoning. That larger system would distinguish between the weights for the algorithms it learns and the weights representing embedded knowledge. It would also have the capability to loop during the generation of an output. For comparison, think of the experiments being done with hooking up GPT-4 to a vector database, but now do that inside of the architecture instead of as a hack on top of the text prompts.

I think an architecture that cleanly separates embedded knowledge ("facts", "beliefs", "shards", etc) from the algorithms ("capabilities", "zero-shot learning") is core to designing a neural network that remains interpretable and alignable at scale.

If you read the previous paragraphs and think, "that sounds familiar", it's probably because I'm describing how we teach humans: first language, then reasoning, then specialization. A curriculum. We need language first because we want to be able to show examples, explain, and correct mistakes. Especially since we can automate content generation with existing LLMs to create the training corpus in these steps. Then we want to teach reasoning, starting with the most general forms of reasoning, and working into the most specific. Finally, we grade the system (not train!) on a corpus of specific knowledge-based activities. Think of this step as describing the rules of a made-up game, providing the current game state, and then asking for the optimal move. Except that for games, for poems, for math, for wood working, for engineering, etc. The whole point of general intelligence is that you can reason from first principles, so that's what we need to be grading the network on: minimizing loss with respect to arbitrarily many knowledge-based tasks that must be solved using the facts provided only during the test itself.

[1] Is English the right language to teach? I think it would be funny if a constructed language actually found a use here.

I'm reminded of this thread from 2022: https://www.lesswrong.com/posts/27EznPncmCtnpSojH/link-post-on-deference-and-yudkowsky-s-ai-risk-estimates?commentId=SLjkYtCfddvH9j38T#SLjkYtCfddvH9j38T

I realize that my position might seem increasingly flippant, but I really think it is necessary to acknowledge that you've stated a core assumption as a fact.

Alignment doesn't run on some nega-math that can't be cast as an optimization problem.

I am not saying that the concept of "alignment" is some bizarre meta-physical idea that cannot be approximated by a computer because something something human souls etc, or some other nonsense.

However the assumption that "alignment is representable in math" directly implies "alignment is representable as an optimization problem" seems potentially false to me, and I'm not sure why you're certain it is true.

There exist systems that 1.) can be represented mathematically, 2.) perform computations, and 3.) do not correspond to some type of min/max optimization, e.g. various analog computers or cellular automata.
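As a concrete instance, here is an elementary cellular automaton. Rule 110 is known to be Turing-complete, yet its update is a pure table lookup on each cell's neighbourhood, with no objective being minimized or maximized anywhere. The wrap-around edges and the starting row are my choices for the sketch.

```python
def step(cells, rule=110):
    """Advance a 1-D binary cellular automaton by one generation (wrap-around edges)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        pattern = (left << 2) | (center << 1) | right  # neighbourhood as a 3-bit number
        new_cells.append((rule >> pattern) & 1)        # read that bit of the rule number
    return new_cells

cells = [0, 0, 0, 0, 0, 0, 0, 1]
history = [cells]
for _ in range(3):
    history.append(step(history[-1]))
```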

I don't think it is ridiculous to suggest that what the human brain does is 1.) representable in math, 2.) in some type of way that we could actually understand and re-implement it on hardware / software systems, and 3.) but not as an optimization problem where there exists some reward function to maximize or some loss function to minimize.

I wasn't intending for a metaphor of "biomimicry" vs "modernist".

(Claim 1) Wings can't work in space because there's no air. The lack of air is a fundamental reason for why no wing design, no matter how clever it is, will ever solve space travel.

If TurnTrout is right, then the equivalent statement is something like (Claim 2) "reward functions can't solve alignment because alignment isn't maximizing a mathematical function."

The difference between Claim 1 and Claim 2 is that we have a proof of Claim 1, and therefore don't bother debating it anymore, while with Claim 2 we only have an arbitrarily long list of examples for why reward functions can be gamed, exploited, or otherwise fail in spectacular ways, but no general proof yet for why reward functions will never work, so we keep arguing about a Sufficiently Smart Reward Function That Definitely Won't Blow up as if that is a thing that can be found if we try hard enough.

As of right now, I view "shard theory" sort of like a high-level discussion of chemical propulsion without the designs for a rocket or a gun. I see the novelty of it, but I don't understand how you would build a device that can use it. Until someone can propose actual designs for hardware or software that would implement "shard theory" concepts without just becoming an obfuscated reward function prone to the same failure modes as everything else, it's not incredibly useful to me. However, I think it's worth engaging with the idea because if correct then other research directions might be a dead-end.

Does that help explain what I was trying to do with the metaphor?

To some extent, I think it's easy to pooh-pooh finding a flapping wing design (not maximally flappy, merely way better than the best birds) when you're not proposing a specific design for building a flying machine that can go to space. Not in the tone of "how dare you not talk about specifics," but more like "I bet this chemical propulsion direction would have to look more like birds when you get down to brass tacks."

(1) The first thing I did when approaching this was think about how the message is actually transmitted. Things like the preamble at the start of the transmission to synchronize clocks, the headers for source & destination, or the parity bits after each byte, or even things like using an inverted parity on the header so that it is possible to distinguish a true header from bytes within a message that look like a header, and even optional checksum calculations.

(2) I then thought about how I would actually represent the data so it wasn't just traditional 8-bit bytes -- I created encoders & decoders for 36/24/12/6 bit unsigned and signed ints, and 30 / 60 bit non-traditional floating point, etc.

Finally, I created a mock telemetry stream that consisted of a bunch of time-series data from many different sensors, with all of the sensor values packed into a single frame with all of the data types from (2), and repeatedly transmitted that frame over the varying time series, using (1), until I had >1 MB.
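The pieces from (1) and (2) can be sketched roughly as follows. This is not the code I actually wrote for the challenge; the preamble pattern, the one-byte source and destination fields, the two's complement encoding, and every function name are assumptions chosen to keep the example short.

```python
PREAMBLE = "10101010" * 2  # hypothetical clock-sync pattern

def encode_int(value, bits, signed=False):
    """Fixed-width bit string, MSB first (two's complement if signed)."""
    if signed:
        lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    else:
        lo, hi = 0, (1 << bits) - 1
    if not lo <= value <= hi:
        raise ValueError(f"{value} does not fit in {bits} bits")
    return format(value & ((1 << bits) - 1), f"0{bits}b")

def decode_int(bit_string, signed=False):
    """Inverse of encode_int; the width is the length of the bit string."""
    bits = len(bit_string)
    value = int(bit_string, 2)
    if signed and value >= 1 << (bits - 1):
        value -= 1 << bits
    return value

def parity_bit(byte, inverted=False):
    """Even parity bit for a byte; inverted=True flips it."""
    bit = bin(byte).count("1") % 2
    return bit ^ 1 if inverted else bit

def encode_byte(byte, inverted=False):
    """Eight data bits followed by one parity bit."""
    return format(byte, "08b") + str(parity_bit(byte, inverted))

def encode_frame(source, dest, payload):
    """Preamble, then a header with inverted parity, then the payload bytes."""
    header = encode_byte(source, inverted=True) + encode_byte(dest, inverted=True)
    return PREAMBLE + header + "".join(encode_byte(b) for b in payload)

def decode_frame(bits):
    """Return (source, dest, payload); raise ValueError on a parity mismatch."""
    if not bits.startswith(PREAMBLE):
        raise ValueError("missing preamble")
    bits = bits[len(PREAMBLE):]
    values = []
    for i in range(0, len(bits), 9):
        chunk = bits[i:i + 9]
        byte = int(chunk[:8], 2)
        is_header = i < 18  # the first two 9-bit groups are the header
        if int(chunk[8]) != parity_bit(byte, inverted=is_header):
            raise ValueError(f"parity error in group {i // 9}")
        values.append(byte)
    return values[0], values[1], bytes(values[2:])

# Pack one signed and one unsigned 12-bit value into three payload bytes.
packed_bits = encode_int(-5, 12, signed=True) + encode_int(1000, 12)
payload = int(packed_bits, 2).to_bytes(3, "big")
frame = encode_frame(source=0x01, dest=0x02, payload=payload)
source, dest, received = decode_frame(frame)
received_bits = "".join(format(b, "08b") for b in received)
```

Because the header uses the opposite parity from the body, a payload byte that happens to equal a header byte still fails the header check.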

And then I didn't submit that, and instead swapped to a single message using the transmission protocol that I designed first, and shoved an image into that message instead of the telemetry stream.

- To avoid the flaw where the message is "just" 1-byte RGB, I viewed each pixel in the filter as being measured by a 24-bit ADC. That way someone decoding it has to consider byte-order when forming the 24-bit values.

- Then, I added only a few LSB of noise because I was thinking about the type of noise you see on ADC channels prior to more extensive filtering. I consider it a bug that I only added noise in some interval [0, +N], when I should have allowed the noise to be positive or negative. I am less convinced that the uniform distribution is incorrect. In my experience, ADC noise is almost always uniform (and only present in a few LSB), unless there's a problem with the HW design, in which case you'll get dramatic non-uniform "spikes". I was assuming that the alien HW is not so poorly designed that they are railing their ADC channels with noise of that magnitude.

- I wanted the color data to be more complicated than just RGB, so I used a Bayer filter, so that people decoding it would need to demosaic the color channels. This further increased the size of the image.

- The original, full resolution image produced a file much larger than 1 MB when it was put through the above process (3 8-bit RGB -> 4 24-bit Bayer), so I cut the resolution on the source image until the output was more reasonably sized. I wasn't thinking about how that would impact the image analysis, because I was still thinking about the data types (byte order, number of bits, bit ordering) more so than the actual image content.

- "Was the source image actually a JPEG?" I didn't check for JPEG artifacts at all, or analyze the image beyond trying to find a nice picture of bismuth with the full color of the rainbow present so that all of the color channels would be used. I just now did a search for "bismuth png" on Google, got a few hits, opened one, and it was actually a JPG. I remember scrolling through a bunch of Google results before I found an image that I liked, and then I just remember pulling & saving it as a BMP. Even if I had downloaded a source PNG as I intended, I definitely didn't check that the PNG itself wasn't just a resaved JPEG.
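The byte-order and noise points in the list above can be sketched roughly as follows. This is a minimal illustration, not the encoder that was actually used for the challenge file: the helper names, the sample value, and the choice of 3 LSB are all assumptions made for the example. It uses symmetric noise in [-N, +N], which is the fix described above.

```python
import random

def add_adc_noise(sample, n_lsb, rng, bits=24):
    """Add uniform noise in [-n_lsb, +n_lsb] and clamp to the ADC range."""
    noisy = sample + rng.randint(-n_lsb, n_lsb)
    return max(0, min((1 << bits) - 1, noisy))

def pack_24bit(sample, byteorder):
    """Serialize one 24-bit sample as 3 bytes in the given byte order."""
    return sample.to_bytes(3, byteorder)

rng = random.Random(0)          # fixed seed so the sketch is repeatable
sample = 0x123456               # hypothetical 24-bit ADC reading
noisy = add_adc_noise(sample, n_lsb=3, rng=rng)

big = pack_24bit(sample, "big")        # bytes 12 34 56
little = pack_24bit(sample, "little")  # bytes 56 34 12

# A decoder that guesses the wrong byte order recovers a very different value.
wrong = int.from_bytes(big, "little")
print(hex(sample), hex(wrong))
```

The same three bytes decode to 0x123456 or 0x563412 depending on the assumed byte order, which is why someone decoding the data has to consider it.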

My understanding of faul_sname's claim is that for the purpose of this challenge we should treat the alien sensor data output as an original piece of data.

In reality, yes, there is a source image that was used to create the raw data that was then encoded and transmitted. But in the context of the fiction, the raw data is supposed to represent the output of the alien sensor, and the claim is that the decompressor + payload is less than the size of just an ad-hoc gzipping of the output by itself. It's that latter part of the claim that I'm skeptical towards. There is so much noise in real sensors -- almost always the first part of any sensor processing pipeline is some type of smoothing, median filtering, or other type of noise reduction. If a solution for a decompressor involves saving space on encoding that noise by breaking a PRNG, it's not clear to me how that would apply to a world in which this data has no noise-less representation available. However, a technique of measuring & subtracting noise so that you can compress a representation that is more uniform and then applying the noise as a post-processing op during decoding is definitely doable.

Assuming that you use the payload of size 741809 bytes, and are able to write a decompressor + "transmitter" for that in the remaining ~400 KB (which should be possible, given that 7z is ~450 KB, zip is 349 KB, other compressors are in similar size ranges, and you'd be saving space since you just need the decoder portion of the code), how would we rate that against the claims?

1. It would be possible for me, given some time to examine the data, create a decompressor and a payload such that running the decompressor on the payload yields the original file, and the decompressor program + the payload have a total size of less than the original gzipped file

2. The decompressor would legibly contain a substantial amount of information about the structure of the data.

(1) seems obviously met, but (2) is less clear to me. Going back to the original claim, faul_sname said 'we would see that the winning programs would look more like "generate a model and use that model and a similar rendering process to what was used to original file, plus an error correction table" and less like a general-purpose compressor'.

So far though, this solution does use a general purpose compressor. My understanding of (2) is that I was supposed to be looking for solutions like "create a 3D model of the surface of the object being detected and then run lighting calculations to reproduce the scene that the camera is measuring", etc. Other posts from faul_sname in the thread, e.g. here seem to indicate that was their thinking as well, since they suggested using ray tracing as a method to describe the data in a more compressed manner.

What are your thoughts?

Regarding the sensor data itself

I alluded to this in my post here, but I was waffling and backpedaling a lot on what would be "fair" in this challenge. I gave a bunch of examples in the thread of what would make a binary file difficult to decode -- e.g. non-uniform channel lengths, an irregular data structure, multiple types of sensor data interwoven into the same file -- and then did basically none of that, because I kept feeling like the file was unapproachable. Anything that was >1 MB of binary data but not a 2D image (or series of images) seemed impossible. For example, the first thing I suggested in the other thread was a stream of telemetry from some alien system.

I thought this file would strike a good balance, but I now see that I made a crucial mistake: I didn't expect that you'd be able to view it with the wrong number of bits per byte (7 instead of 6) and then skip almost every byte and still find a discernible image in the grayscale data. Once you can "see" what the image is supposed to be, the hard part is done.

I was assuming that more work would be needed for understanding the transmission itself (e.g. deducing the parity bits by looking at the bit patterns), and then only after that would it be possible to look at the raw data by itself.

I had a similar issue when I was playing with LIDAR data as an alternative to a 2D image. I found that a LIDAR point cloud is eerily similar to image data, enough that you can stumble upon a depth map representation of the data almost by accident.

I have posted my file here https://www.lesswrong.com/posts/BMDfYGWcsjAKzNXGz/eavesdropping-on-aliens-a-data-decoding-challenge.

I've posted it here https://www.lesswrong.com/posts/BMDfYGWcsjAKzNXGz/eavesdropping-on-aliens-a-data-decoding-challenge.

Which question are we trying to answer?

1. Is it possible to decode a file that was deliberately constructed to be decoded, without a priori knowledge? This is vaguely what That Alien Message is about, at least in the first part of the post where aliens are sending a message to humanity.

2. Is it possible to decode a file that has an arbitrary binary schema, without a priori knowledge? This is the discussion point that I've been arguing over with regard to stuff like decoding camera RAW formats, or sensor data from a hardware/software system. This is also the area where I disagree with That Alien Message -- I don't think that one-shot examples allow robust generalization.

I don't think (1) is a particularly interesting question, because last weekend I convinced myself that the answer is yes, you can transfer data in a way that it can be decoded, with very few assumptions on the part of the receiver. I do have a file I created for this purpose. If you want, I'll send it to you.

I started creating a file for (2), but I'm not really sure how to gauge what is "fair" vs "deliberately obfuscated" in terms of encoding. I am conflicted. Even if I stick to encoding techniques I've seen in the real world, I feel like I can make choices on this file encoding that make the likelihood of others decoding it very low. That's exactly what we're arguing about on (2). However, I don't think it will be particularly interesting or fun for people trying to decode it. Maybe that's ok?

What are your thoughts?

It depends on what you mean by "didn't work". The study described is published in a paper only 16 pages long. We can just read it: http://web.mit.edu/curhan/www/docs/Articles/biases/67_J_Personality_and_Social_Psychology_366,_1994.pdf

First, consider the question of, "are these predictions totally useless?" This is an important question because I stand by my claim that the answer of "never" is actually totally useless due to how trivial it is.

Despite the optimistic bias, respondents' best estimates were by no means devoid of information: The predicted completion times were highly correlated with actual completion times (r = .77, p < .001). Compared with others in the sample, respondents who predicted that they would take more time to finish actually did take more time. Predictions can be informative even in the presence of a marked prediction bias.

...

Respondents' optimistic and pessimistic predictions were both strongly correlated with their actual completion times (rs = .73 and .72, respectively; ps < .01).

Yep. Matches my experience.

We know that only 11% of students met their optimistic targets, and only 30% of students met their "best guess" targets. What about the pessimistic target? It turns out, 50% of the students did finish by that target. That's not just a quirk, because it's actually related to the distribution itself.

However, the distribution of difference scores from the best-guess predictions were markedly skewed, with a long tail on the optimistic side of zero, a cluster of scores within 5 or 10 days of zero, and virtually no scores on the pessimistic side of zero. In contrast, the differences from the worst-case predictions were noticeably more symmetric around zero, with the number of markedly pessimistic predictions balancing the number of extremely optimistic predictions.

In other words, asking people for a best guess or an optimistic prediction results in a biased prediction that is almost always earlier than the real delivery date. On the other hand, while the pessimistic prediction is not more accurate (it has the same absolute error margins), it is unbiased. The study says that people asked for a pessimistic prediction were as likely to over-estimate their completion time as they were to under-estimate it. If you don't think a question that gives you a distribution centered on the right answer is useful, I'm not sure what to tell you.
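The distinction between "unbiased" and "more accurate" can be made concrete with a toy calculation. The numbers below are invented purely for illustration and are not data from the paper; they are chosen so that both sets of predictions have the same mean absolute error while only one of them is centered on zero.

```python
from statistics import mean

# Signed error = predicted days - actual days.
# Negative means the work finished later than predicted.
# Invented numbers for illustration only; NOT taken from the study.
best_guess_errors = [-12, -9, -15, -3, -11, -10]
pessimistic_errors = [-12, 12, -15, 15, -3, 3]

def bias(errors):
    """Mean signed error: zero means over- and under-estimates cancel out."""
    return mean(errors)

def mean_absolute_error(errors):
    """Mean size of the miss, ignoring direction."""
    return mean(abs(e) for e in errors)

for label, errors in [("best guess", best_guess_errors),
                      ("pessimistic", pessimistic_errors)]:
    print(label, bias(errors), mean_absolute_error(errors))
```

Both toy sets miss by 10 days on average, but the first always misses in the optimistic direction while the second misses in both directions equally.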

The paper actually did a number of experiments. That was just the first.

In the third experiment, the study tried to understand what people are thinking about when estimating.

Proportionally more responses concerned future scenarios (M = .74) than relevant past experiences (M = .07), t(66) = 13.80, p < .001. Furthermore, a much higher proportion of subjects' thoughts involved planning for a project and imagining its likely progress (M = .71) rather than considering potential impediments (M = .03), t(66) = 18.03, p < .001.

This seems relevant considering that the idea of premortems or "worst case" questioning is to elicit impediments, and the project managers / engineering leads doing that questioning are intending to hear about impediments and will continue their questioning until they've been satisfied that the group is actually discussing that.

In the fourth experiment, the study tries to understand why it is that people don't think about their past experiences. They discovered that just prompting people to consider past experiences was insufficient; they actually needed additional prompting to make their past experience "relevant" to their current task.

Subsequent comparisons revealed that subjects in the recall-relevant condition predicted they would finish the assignment later than subjects in either the recall condition, t(79) = 1.99, p < .05, or the control condition, t(80) = 2.14, p < .04, which did not differ significantly from each other, t(81) < 1.

...

Further analyses were performed on the difference between subjects' predicted and actual completion times. Subjects underestimated their completion times significantly in the control (M = -1.3 days), t(40) = 3.03, p < .01, and recall conditions (M = -1.0 day), t(41) = 2.10, p < .05, but not in the recall-relevant condition (M = -0.1 days), t(39) < 1. Moreover, a higher percentage of subjects finished the assignments in the predicted time in the recall-relevant condition (60.0%) than in the recall and control conditions (38.1% and 29.3%, respectively), χ²(2, N = 123) = 7.63, p < .01. The latter two conditions did not differ significantly from each other.

...

The absence of an effect in the recall condition is rather remarkable. In this condition, subjects first described their past performance with projects similar to the computer assignment and acknowledged that they typically finish only 1 day before deadlines. Following a suggestion to "keep in mind previous experiences with assignments," they then predicted when they would finish the computer assignment. Despite this seemingly powerful manipulation, subjects continued to make overly optimistic forecasts. Apparently, subjects were able to acknowledge their past experiences but disassociate those episodes from their present predictions.

In contrast, the impact of the recall-relevant procedure was sufficiently robust to eliminate the optimistic bias in both deadline conditions

How does this compare to the first experiment?

Interestingly, although the completion estimates were less biased in the recall-relevant condition than in the other conditions, they were not more strongly correlated with actual completion times, nor was the absolute prediction error any smaller. The optimistic bias was eliminated in the recall-relevant condition because subjects' predictions were as likely to be too long as they were to be too short. The effects of this manipulation mirror those obtained with the instruction to provide pessimistic predictions in the first study: When students predicted the completion date for their honor's thesis on the assumption that "everything went as poorly as it possibly could" they produced unbiased but no more accurate predictions than when they made their "best guesses."

It's common in engineering to perform group estimates. Does the study look at that? Yep, the fifth and last experiment asks individuals to estimate the performance of others.

As hypothesized, observers seemed more attuned to the actors' base rates than did the actors themselves. Observers spontaneously used the past as a basis for predicting actors' task completion times and produced estimates that were later than both the actors' estimates and their completion times.

So observers are more pessimistic. Actually, observers are so pessimistic that you have to average it with the optimistic estimates to get an unbiased estimate.

One of the most consistent findings throughout our investigation was that manipulations that reduced the directional (optimistic) bias in completion estimates were ineffective in increasing absolute accuracy. This implies that our manipulations did not give subjects any greater insight into the particular predictions they were making, nor did they cause all subjects to become more pessimistic (see Footnote 2), but instead caused enough subjects to become overly pessimistic to counterbalance the subjects who remained overly optimistic. It remains for future research to identify those factors that lead people to make more accurate, as well as unbiased, predictions. In the real world, absolute accuracy is sometimes not as important as (a) the proportion of times that the task is completed by the "best-guess" date and (b) the proportion of dramatically optimistic, and therefore memorable, prediction failures. By both of these criteria, factors that decrease the optimistic bias "improve" the quality of intuitive prediction.

At the end of the day, there are certain things that are known about scheduling / prediction.

- In general, individuals are as wrong as they are right for any given estimate.

- In general, people are overly optimistic.

- But, estimates generally correlate well with actual duration -- if an individual thinks something is longer in estimate than another task, it most likely is! This is why in SW sometimes estimation is not in units of time at all, but in a concept called "points".

- The larger and more nebulously scoped the task, the worse any estimates will be in absolute error.

- The length of time a task can take follows a distribution with a very long right tail -- a task that takes way longer than expected can take an arbitrary amount of time, but the fastest time to complete a task is limited.

- The best way to actually schedule or predict a project is to break it down into as many small component tasks as possible, identify dependencies between those tasks, and produce most likely, optimistic, and pessimistic estimates for each task, and then run a simulation for the chain of dependencies to see what the expected project completion looks like. Use a Gantt chart. This is a boring answer because it's the "learn project management" answer, and people will hate on it because *gesture vaguely to all of the projects that overrun their schedule*. There are many interesting reasons for why that happens and why I don't think it's a massive failure of rationality, but I'm not sure this comment is a good place to go into detail on that. The quick answer is that comical overrun of a schedule has less to do with an inability to create correct schedules from an engineering / evidence-based perspective, and much more to do with a bureaucratic or organizational refusal to accept an evidence-based schedule when a totally false but politically palatable "optimistic" schedule is preferred.
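The last item in the list above (three-point estimates per task, dependencies, then a simulation) can be sketched as a small Monte Carlo run. This is only one way to do it, under stated assumptions: the task names and day counts are hypothetical, and sampling each task from a triangular distribution is a choice made for this example rather than something the comment prescribes.

```python
import random

# Hypothetical tasks: (optimistic, most likely, pessimistic) in days, plus dependencies.
TASKS = {
    "design":      {"est": (2, 4, 10), "deps": []},
    "prototype":   {"est": (5, 8, 20), "deps": ["design"]},
    "order_parts": {"est": (3, 5, 30), "deps": ["design"]},
    "integrate":   {"est": (2, 3, 9),  "deps": ["prototype", "order_parts"]},
}

def simulate_once(tasks, rng):
    """Sample one duration per task and return the project finish time."""
    finish = {}

    def finish_time(name):
        if name not in finish:
            low, mode, high = tasks[name]["est"]
            # A task starts when its slowest dependency has finished.
            start = max((finish_time(d) for d in tasks[name]["deps"]), default=0.0)
            finish[name] = start + rng.triangular(low, high, mode)
        return finish[name]

    return max(finish_time(name) for name in tasks)

def simulate(tasks, runs=10_000, seed=0):
    """Return sorted project finish times over many sampled schedules."""
    rng = random.Random(seed)
    return sorted(simulate_once(tasks, rng) for _ in range(runs))

results = simulate(TASKS)
p50 = results[len(results) // 2]
p90 = results[int(len(results) * 0.9)]
print(f"median finish: {p50:.1f} days, 90th percentile: {p90:.1f} days")
```

Adding up the "most likely" values along the longest path gives 15 days, but the sampled median lands later than that, because the pessimistic tails are much longer than the optimistic ones and parallel tasks wait on whichever one is slowest.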

Right. I think I agree with everything you wrote here, but here it is again in my own words:

In communicating with people, the goal isn't to ask a hypothetically "best" question and wonder why people don't understand or don't respond in the "correct" way. The goal is to be understood and to share information and acquire consensus or agree on some negotiation or otherwise accomplish some task.

This means that in real communication with real people, you often need to ask different questions to different people to arrive at the same information, or phrase some statement differently for it to be understood. There shouldn't be any surprise or paradox here. When I am discussing an engineering problem with engineers, I phrase it in the terminology that engineers will understand. When I need to communicate that same problem to upper management, I do not use the same terminology that I use with my engineers.

Likewise, there's a difference when I'm communicating with some engineering intern or new grad right out of college, vs a senior engineer with a decade of experience. I tailor my speech for my audience.

In particular, if I asked this question to Kenoubi ("what's the worst case for how long this thesis could take you?"), and Kenoubi replied "It never finishes", then I would immediately follow up with the question, "Ok, considering cases when it does finish, what's the worst-case look like?" And if that got the reply "the day before it is required to be due", I would then start poking at "What would cause that to occur?".

The reason why I start with the first question is because it works for, I don't know, 95% of people I've ever interacted with in my life? In my mind, it's rational to start with a question that almost always elicits the information I care about, even if there's some small subset of the population that will force me to choose my words as if they're being interpreted by a Monkey's paw.

Isn't this identical to the proof for why there's no general algorithm for solving the Halting Problem?

The Halting Problem asks for an algorithm A(S, I) that when given the source code S and input I for another program will report whether S(I) halts (vs run forever).

There is a proof that says A does not exist. There is no general algorithm for determining whether an arbitrary program will halt. "General" and "arbitrary" are important keywords because it's trivial to consider specific algorithms and specific programs and say, yes, we can determine that this specific program will halt via this specific algorithm.

That proof of the Halting Problem (for a general algorithm and arbitrary programs!) works by defining a pathological program S that inspects what the general algorithm A would predict and then does the opposite.

What you're describing above seems almost word-for-word the same construction used for constructing the pathological program S, except the algorithm A for "will this program halt?" is replaced by the predictor "will this person one-box?".
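A minimal sketch of that construction, with heavy simplifications: instead of actually running forever, the pathological program returns a label ("halt" or "loop") describing what it would do, and the candidate predictors are toy functions invented for this example. Swapping "halts" for "one-boxes" gives the Newcomb version.

```python
def make_contrarian(predictor):
    """Build a program that asks `predictor` about itself and does the opposite.

    Behavior is returned as a label ("halt" or "loop") instead of actually
    looping forever, so the sketch stays runnable.
    """
    def contrarian():
        return "loop" if predictor(contrarian) else "halt"
    return contrarian

# Hypothetical predictors: each returns True if it thinks the program halts.
predictors = {
    "always_halts": lambda program: True,
    "never_halts": lambda program: False,
    "by_name_length": lambda program: len(program.__name__) % 2 == 0,
}

for name, predictor in predictors.items():
    program = make_contrarian(predictor)
    predicted_halt = predictor(program)
    actually_halts = program() == "halt"
    # The prediction is wrong every time, by construction.
    print(name, predicted_halt, actually_halts)
```

Whatever rule the predictor uses, the program built from it disagrees with the prediction. A predictor that tried to answer by running the program would simply recurse without end here, which is the toy equivalent of never returning an answer.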

I'm not sure that this necessarily matters for the thought experiment. For example, perhaps we can pretend that the predictor works on all strategies except the pathological case described here, and other strategies isomorphic to it.

If we look at the student answers, they were off by ~7 days, or about a 14% error from the actual completion time.

The only way I can interpret your post is that you're suggesting all of these students should have answered "never".

I'm not convinced that "never" just didn't occur to them because they were insufficiently motivated to give a correct answer.

How far off is "never" from the true answer of 55.5 days?

It's about infinitely far off. It is an infinitely wrong answer. Even if a project ran 1000% over every worst-case pessimistic schedule, any finite prediction was still infinitely closer than "never".

It's a quirk of rationalist culture (and a few others — I've seen this from physicists too) to take the words literally and propose that "infinitely long" is a plausible answer, and be baffled as to how anyone could think otherwise.

That's because "infinitely long" is a trivial answer for any task that isn't literally impossible.[1] It provides 0 information and takes 0 computational effort. It might as well be the answer from a non-entity, like asking a brick wall how long the thesis could take to complete.

Question: How long can it take to do X?

Brick wall: Forever. Just go do not-X instead.

It is much more difficult to give an answer for how long a task can take assuming it gets done while anticipating and predicting failure modes that would cause the schedule to explode, and that same answer is actually useful since you can now take preemptive actions to avoid those failure modes -- which is the whole point of estimating and scheduling as a logical exercise.

The actual conversation that happens during planning is

A: "What's the worst case for this task?"

B: "6 months."

A: "Why?"

B: "We don't have enough supplies to get past 3 trial runs, so if any one of them is a failure, the lead time on new materials with our current vendor is 5 months."

A: "Can we source a new vendor?"

B: "No, but... <some other idea>"

- ^

In cases when something is literally impossible, instead of saying "infinitely long", or "never", it's more useful to say "that task is not possible" and then explain why. Communication isn't about finding the "haha, gotcha" answer to a question when asked.

Is the concept of "murphyjitsu" supposed to be different than the common exercise known as a premortem in traditional project management? Or is this just the same idea, but rediscovered under a different name, exactly like how what this community calls a "double crux" is just the evaporating cloud, which was first described in the 90s?

If you've heard of a postmortem or possibly even a retrospective, then it's easy to guess what a premortem is. I cannot say the same for "murphyjitsu".

I see that premortem is even referenced in the "further resources" section, so I'm confused why you'd describe it under a different name that cannot be researched easily outside of this site, where there is tons of literature and examples of how to do premortems correctly.

The core problem remains computational complexity.

Statements like "does this image look reasonable" or saying "you pay attention to regularities in the data", or "find the resolution by searching all possible resolutions" are all hiding high computational costs behind short English descriptions.

Let's consider the case of a 1280x720 pixel image.

That's the same as 921600 pixels.

How many bytes is that?

It depends. How many bytes per pixel?[1] In my post, I explained there could be 1-byte-per-pixel grayscale, or perhaps 3-bytes-per-pixel RGB using [0, 255] values for each color channel, or maybe 6-bytes-per-pixel with [0, 65535] values for each color channel, or maybe something like 4-bytes-per-pixel because we have 1-byte RGB channels and a 1-byte alpha channel.

Let's assume that a reasonable cutoff for how many bytes per pixel an encoding could be using is say 8 bytes per pixel, or a hypothetical 64-bit color depth.

How many ways can we divide this between channels?

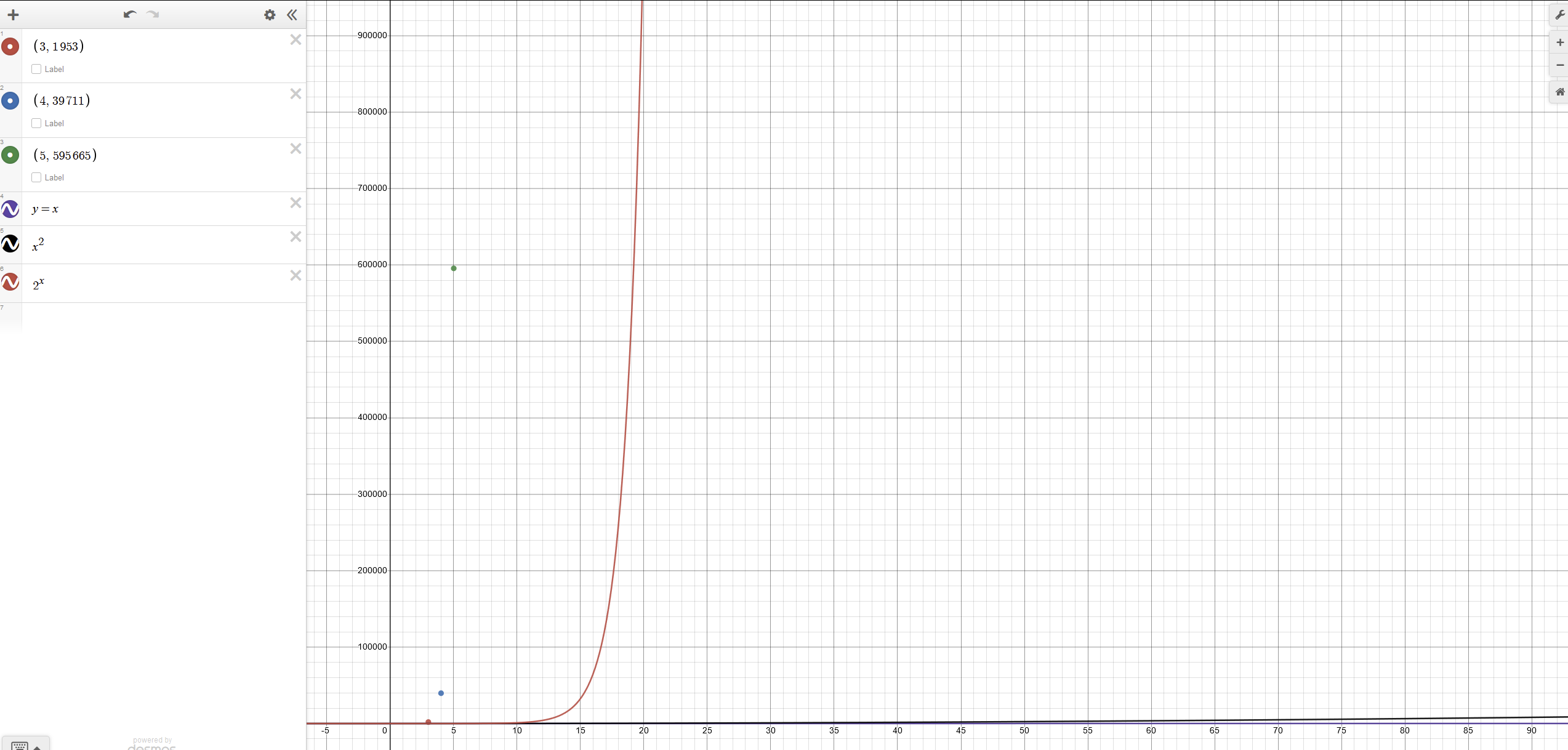

If we assume 3 channels, it's 1953.

If we assume 4 channels, it's 39711.

Also if it turns out to be 5 channels, it's 595665.
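These three counts match the "stars and bars" count of ways to split 64 bits among an ordered set of channels where every channel gets at least 1 bit, i.e. C(63, k-1) for k channels. That reading is inferred from the numbers themselves; the function name below is made up for the example.

```python
from math import comb

def channel_splits(total_bits, channels):
    """Ways to split total_bits among ordered channels, each getting >= 1 bit."""
    return comb(total_bits - 1, channels - 1)

for channels in (3, 4, 5):
    print(channels, channel_splits(64, channels))
# 3 1953
# 4 39711
# 5 595665
```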

This is a pretty fast growing function. The following is a plot.

Note that the red line is O(2^N) and the black line barely visible at the bottom is O(N^2). N^2 is a notorious runtime complexity because it's right on the threshold of what is generally unacceptable performance.[2]

Let's hope that this file isn't actually a frame buffer from a graphics card with 32 bits per channel, i.e. 128 bits (16 bytes) per pixel.

Unfortunately, we still need to repeat this calculation for all of the possibilities for how many bits per pixel this image could be. We need to add in the possibility that it is 63 bits per pixel, or 62 bits per pixel, or 61 bits per pixel.

In case anyone wants to claim this is unreasonable, it's not impossible to have image formats that have RGBA data, but only 1 bit associated with the alpha data for each pixel. [3]

And for each of these scenarios, we need to question how many channels of color data there are.

- 1? Grayscale.

- 2? Grayscale, with an alpha channel maybe?

- 3? RGB, probably, or something like HSV.

- 4? RGBA, or maybe it's the RGBG layout I described for a RAW encoding of a Bayer filter, or maybe it's CMYK for printing.

- 5? This is getting weird, but it's not impossible. We could be encoding additional metadata into each pixel, e.g. distance from the camera.

- 6? Actually, this question of how many channels there are is very important, given the fast growing function above.

- 7? This one question, if we don't know the right answer, is sufficient to make this algorithm pretty much impossible to run.

- 8? When we say we can try all of the options, that's not actually possible.

- 9? What I think people mean is that we can use heuristics to pick the likely options first and try them, and then fall back to more esoteric options if the initial results don't make sense.

- 10? That's the difference between average run-time and worst case run-time.

- 11? The point that I am trying to make is that the worst case run-time for decoding an arbitrary binary file is pretty much unbounded, because there's a ridiculous amount of choice possible.

- 12? Some examples of "image" formats that have large numbers of channels per "pixel" are things like RADAR / LIDAR sensors, e.g. it's possible to have 5 channels per pixel for defining 3D coordinates (relative to the sensor), range, and intensity.

You actually ran into this problem yourself.

Similarly (though you'd likely do this first), you can tell the difference between RGB and RGBA. If you have (255, 0, 0, 255, 0, 0, 255, 0, 0, 255, 0, 0), this is probably 4 red pixels in RGB, and not a fully opaque red pixel, followed by a fully transparent green pixel, followed by a fully transparent blue pixel in RGBA. It could be 2 pixels that are mostly red and slightly green in 16 bit RGB, though. Not sure how you could piece that out.

Summing up all of the possibilities above is left as an exercise for the reader, and we'll call that sum K.
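For a rough feel of how large K gets, here is one way to do that sum, under assumptions that are mine rather than the comment's: whole-bit channel widths, every channel at least 1 bit wide, channel order matters, and a cap of 64 bits per pixel. Real formats add more choices (padding, packed alpha bits, byte order), so this undercounts.

```python
from math import comb

def count_layouts(max_bits=64, max_channels=None):
    """Count (bits per pixel, channel count, per-channel widths) combinations."""
    total = 0
    for bits in range(1, max_bits + 1):
        limit = bits if max_channels is None else min(bits, max_channels)
        for channels in range(1, limit + 1):
            # Ordered splits of `bits` into `channels` parts of at least 1 bit.
            total += comb(bits - 1, channels - 1)
    return total

print(count_layouts())                # every channel count allowed
print(count_layouts(max_channels=5))  # at most 5 channels per pixel
```

With no cap on the number of channels, the sum collapses to 2^64 - 1, since each bit depth b contributes 2^(b-1) layouts.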

Without loss of generality, let's say our image was encoded as 3 bytes per pixel divided between 3 RGB color channels of 1 byte each.

Our 1280x720 image is actually 2764800 bytes as a binary file.

But since we're decoding it from the other side, and we don't know it's 1280x720, when we're staring at this pile of 2764800 bytes, we need to first assume how many bytes per pixel it is, so that we can divide the total bytes by the bytes per pixel to calculate the number of pixels.

Then, we need to test each possible resolution as you've suggested.

The number of possible resolutions is the same as the number of divisors of the number of pixels. The equation for providing an upper bound is exp(log(N)/log(log(N)))[4], but the average number of divisors is approximately log(N).

Oops, no it isn't!

Files have headers! How large is the header? For a bitmap, it's anywhere between 26 and 138 bytes. The JPEG header is at least 2 bytes. PNG uses 8 bytes. GIF uses at least 14 bytes.

Now we need to make the following choices:

- Guess at how many bytes per pixel the data is.

- Guess at the length of the header. (maybe it's 0, there is no header!)

- Calculate the factorization of the remaining bytes N for the different possible resolutions.

- Hope that there isn't a footer, checksum, or any type of other metadata hanging out in the sea of bytes. This is common too!

Once we've made our choices above, then we multiply that by log(N) for the number of resolutions to test, and then we'll apply the suggested metric. Remember that when considering the different pixel formats and ways the color channel data could be represented, the number was K, and that's what we're multiplying by log(N).
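The guesses listed above can be enumerated directly for the 2764800-byte example. This sketch assumes whole bytes per pixel up to 8, a header of 0 to 138 bytes (the upper end of the bitmap range mentioned earlier), and no footer; the function names are invented for the example, and the channel layout factor K is left out entirely.

```python
def divisor_pairs(n):
    """All (width, height) pairs with width * height == n."""
    pairs = []
    d = 1
    while d * d <= n:
        if n % d == 0:
            pairs.append((d, n // d))
            if d != n // d:
                pairs.append((n // d, d))
        d += 1
    return pairs

def enumerate_hypotheses(file_size, max_bytes_per_pixel=8, max_header=138):
    """Yield (bytes_per_pixel, header_len, width, height) consistent with file_size."""
    for header_len in range(0, max_header + 1):
        payload = file_size - header_len
        for bpp in range(1, max_bytes_per_pixel + 1):
            if payload > 0 and payload % bpp == 0:
                for width, height in divisor_pairs(payload // bpp):
                    yield (bpp, header_len, width, height)

FILE_SIZE = 1280 * 720 * 3  # 2764800 bytes: the headerless 3-byte RGB example
hypotheses = list(enumerate_hypotheses(FILE_SIZE))
print(len(hypotheses))                    # candidate layouts to test
print((3, 0, 1280, 720) in hypotheses)    # the true layout is one of them
```

Even with the pixel format fixed, 921600 pixels already has 117 possible width/height pairs; letting the header length and bytes per pixel vary multiplies the number of candidates well beyond that.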

In most non-random images, pixels near to each other are similar. In an MxN image, the pixel below is a[i+M], whereas in an NxM image, it's a[i+N]. If, across the whole image, the difference between a[i+M] is less than the difference between a[i+N], it's more likely an MxN image. I expect you could find the resolution by searching all possible resolutions from 1x<length> to <length>x1, and finding which minimizes average distance of "adjacent" pixels.

What you're describing here is actually similar to a common metric used in algorithms for automatically focusing cameras by calculating the contrast of an image, except for focusing you want to maximize contrast instead of minimize it.
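For reference, the quoted heuristic can be sketched as below. The test image is a tiny synthetic gradient invented for the example, with one channel per pixel and no header, so this is the friendliest possible case and says nothing about how the search behaves on real data.

```python
def adjacent_row_distance(data, width):
    """Mean absolute difference between each value and the one `width` later."""
    pairs = len(data) - width
    if pairs <= 0:
        return float("inf")  # a single row has no vertical neighbours
    return sum(abs(data[i + width] - data[i]) for i in range(pairs)) / pairs

def guess_width(data):
    """Try every width that divides the data length and keep the smoothest one."""
    widths = [w for w in range(1, len(data) + 1) if len(data) % w == 0]
    return min(widths, key=lambda w: adjacent_row_distance(data, w))

# Synthetic 12x8 single-channel gradient, flattened row by row.
WIDTH, HEIGHT = 12, 8
image = [10 * x + y for y in range(HEIGHT) for x in range(WIDTH)]

print(guess_width(image))  # 12
```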

The interesting problem with this metric is that it's basically a one-way function. For a given image, you can compute this metric. However, minimizing this metric is not the same as knowing that you've decoded the image correctly. It says you've found a decoding, which did minimize the metric. It does not mean that is the correct decoding.

A trivial proof:

- Consider an image and the reversal of that image along the horizontal axis.

- These have the same metric.

- So the same metric can yield two different images.
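The trivial proof is easy to check numerically. The 3x3 pixel values are arbitrary, and the metric here is the vertical-neighbour version of the heuristic; both a left-right mirror and a top-bottom flip leave it unchanged.

```python
def adjacent_row_distance(rows):
    """Mean absolute difference between vertically adjacent pixels."""
    diffs = [abs(below[x] - above[x])
             for above, below in zip(rows, rows[1:])
             for x in range(len(above))]
    return sum(diffs) / len(diffs)

# Arbitrary pixel values, chosen only for illustration.
image = [
    [0, 10, 200],
    [5, 40, 180],
    [90, 60, 20],
]
mirrored = [row[::-1] for row in image]  # left-right mirror
flipped = image[::-1]                    # top-bottom flip

print(adjacent_row_distance(image),
      adjacent_row_distance(mirrored),
      adjacent_row_distance(flipped))
```

All three values are identical even though the three images are different.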

A slightly less trivial proof:

- For a given "image" of N bytes of image data, there are 2^(N*8) possible bit patterns.

- Assuming the metric is calculated as an 8-byte IEEE 754 double, there are only 2^(8*8) possible bit patterns.

- When N > 8, there are more bit patterns than values allowed in a double, so multiple images need to map to the same metric.

The difference between our 2^(2764800*8) image space and the 2^64 metric is, uhhh, 10^(10^6.8). Imagine 10^(10^6.8) pigeons. What a mess.[5]
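The size of that number can be checked with logarithms, reading the "difference" as the ratio between the two spaces (the average number of images per metric value):

```python
import math

image_bits = 2764800 * 8   # bits in the raw 1280x720 RGB image
metric_bits = 64           # bits in an IEEE 754 double

# log10 of (2**image_bits / 2**metric_bits)
log10_ratio = (image_bits - metric_bits) * math.log10(2)

print(log10_ratio)              # roughly 6.66 million decimal digits
print(math.log10(log10_ratio))  # roughly 6.82, i.e. about 10^(10^6.8)
```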

The metric cannot work as described. There will be various arbitrary interpretations of the data possible to minimize this metric, and almost all of those will result in images that are definitely not the image that was actually encoded, but did minimize the metric. There is no reliable way to do this because it isn't possible. When you have a pile of data, and you want to reverse meaning from it, there is not one "correct" message that you can divine from it.[6] See also: numerology, for an example that doesn't involve binary file encodings.

Even pretending that this metric did work, what's the time complexity of it? We have to check each pixel, so it's O(N). There's a constant factor for each pixel computation. How large is that constant? Let's pretend it's small and ignore it.

So now we've got K*O(N*log(N)) which is the time complexity of lots of useful algorithms, but we've got that awkward constant K in the front. Remember that the constant K reflects the number of choices for different bits per pixel, bits per channel, and the number of channels of data per pixel. Unfortunately, that constant is the one that was growing at a rate best described as "absurd". That constant is the actual definition of what it means to have no priors. When I said "you can generate arbitrarily many hypotheses, but if you don't control what data you receive, and there's no interaction possible, then you can't rule out hypotheses", what I'm describing is this constant.

I think it would be very weird, if we were trying to train an AI, to send it compressed video, and much more likely that we do, in fact, send it raw RGB values frame by frame.

What I care about is the difference between:

1. Things that are computable.

2. Things that are computable efficiently.

These sets are not the same.

Capabilities of a superintelligent AGI lie only in the second set, not the first.

It is important to understand that a superintelligent AGI is not brute forcing this in the way that has been repeatedly described in this thread. Instead, the superintelligent AGI is going to use a bunch of heuristics or knowledge about the provenance of the binary file, combined with access to the internet so that it can just look up the various headers and features of common image formats. It'll go through and check all of those, and then if it isn't any of the usual suspects, it'll throw up metaphorical hands and concede defeat. Or, to quote the title of this thread, intelligence isn't magic.

- ^

This is often phrased as bits per pixel, because a variety of color depth formats use less than 8 bits per channel, or other non-byte divisions.

- ^

Refer to https://accidentallyquadratic.tumblr.com/ for examples.

- ^

A fun question to consider here becomes: where are the alpha bits stored? E.g. if we assume 3 bytes for RGB data, and then we have the 1 alpha bit, is each pixel taking up 9 bits, or are the pixels stored in runs of 8 pixels followed by a single "alpha" pixel with 8 bits describing the alpha channels of the previous 8 pixels?

- ^

- ^

- ^

The way this works for real reverse engineering is that we already have expectations of what the data should look like, and we are tweaking inputs and outputs until we get the data we expected. An example would be figuring out a camera's RAW format by taking pictures of carefully chosen targets like an all white wall, or a checkerboard wall, or an all red wall, and using the knowledge of those targets to find patterns in the data that we can decode.

Why do you say that Kolmogorov complexity isn't the right measure?

most uniformly sampled programs of equal KC that produce a string of equal length.

...

"typical" program with this KC.

I am worried that you might have this backwards?

Kolmogorov complexity describes the output, not the program. The output file has low Kolmogorov complexity because there exists a short computer program to describe it.

I have mixed thoughts on this.

I was delighted to see someone else put forth a challenge, and impressed with the number of people who took it up.

I'm disappointed though that the file used a trivial encoding. When I first saw the comments suggesting it was just all doubles, I was really hoping that it wouldn't turn out to be that.

I think maybe where the disconnect is occurring is that in the original That Alien Message post, the story starts with aliens deliberately sending a message to humanity to decode, as this thread did here. It is explicitly described as such:

From the first 96 bits, then, it becomes clear that this pattern is not an optimal, compressed encoding of anything. The obvious thought is that the sequence is meant to convey instructions for decoding a compressed message to follow...

But when I argued against the capability of decoding binary files in the I No Longer Believe Intelligence To Be Magical thread, that argument was on a tangent -- is it possible to decode an arbitrary binary file? I specifically ruled out trivial encodings in my reasoning. I listed the features that make a file difficult to decode. A huge issue is ambiguity, because in almost all binary files, the first problem is just identifying where fields start or end.

I gave examples like

- Camera RAW formats

- Compressed image formats like PNG or JPG

- Video codecs

- Any binary protocol between applications

- Network traffic

- Serialization to or from disk

- Data in RAM

On the other hand, an array of doubles falls much more into this bucket

data that is basically designed to be interpreted correctly, i.e. the data, even though it is in a binary format, is self-describing.

With all of the above said, the reason why I did not bother uploading an example file in the first thread is frankly because it would have taken me some number of hours to create and I didn't think there would be any interest in actually decoding it by enough people to justify the time spent. That assumption seems wrong now! It seems like people really enjoyed the challenge. I will update accordingly, and I'll likely post my example of a file later this week after I have an evening or day free to do so.

https://en.wikipedia.org/wiki/Kolmogorov_complexity

The fact that the program is so short indicates that the solution is simple. A complex solution would require a much longer program to specify it.

I gave this post a strong disagree.

Some thoughts for people looking at this:

- It's common for binary schemas to distinguish between headers and data. There could be a single header at the start of the file, or there could be multiple headers throughout the file with data following each header.

- There are often checksums on the header, and sometimes on the data too. It's common for the checksums to follow the respective thing being checksummed, i.e. the last bytes of the header are a checksum, or the last bytes after the data are a checksum. 16-bit and 32-bit CRCs are common.

- If the data represents a sequence of messages, e.g. from a sensor, there will often be a counter of some sort in the header on each message. E.g. a 1, 2, or 4-byte counter that provides ordering ("message 1", "message 2", "message N") that wraps back to 0.
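To make those three patterns concrete, here is a minimal sketch of a made-up message format that has all of them. The layout is purely an assumption for illustration and does not describe the challenge file or any real format: a 2-byte wrapping counter, a 2-byte payload length, a CRC-32 over those four header bytes, then the payload followed by its own CRC-32.

```python
import struct
import zlib

# Hypothetical layout (an assumption for illustration, not a real format):
#   header : uint16 counter, uint16 payload length, uint32 CRC-32 of the first 4 bytes
#   data   : payload bytes, then uint32 CRC-32 of the payload
HEADER = struct.Struct("<HHI")
TRAILER = struct.Struct("<I")

def build_message(counter: int, payload: bytes) -> bytes:
    """Serialize one message; the counter wraps back to 0 after 65535."""
    prefix = struct.pack("<HH", counter & 0xFFFF, len(payload))
    header = prefix + struct.pack("<I", zlib.crc32(prefix))
    return header + payload + TRAILER.pack(zlib.crc32(payload))

def parse_messages(blob: bytes):
    """Yield (counter, payload) pairs; raise ValueError on a checksum mismatch."""
    offset = 0
    while offset < len(blob):
        counter, length, header_crc = HEADER.unpack_from(blob, offset)
        if zlib.crc32(blob[offset:offset + 4]) != header_crc:
            raise ValueError(f"bad header checksum at offset {offset}")
        offset += HEADER.size
        payload = blob[offset:offset + length]
        offset += length
        (payload_crc,) = TRAILER.unpack_from(blob, offset)
        if zlib.crc32(payload) != payload_crc:
            raise ValueError(f"bad payload checksum before offset {offset}")
        offset += TRAILER.size
        yield counter, payload

# Three messages whose counter wraps around the 16-bit boundary.
blob = b"".join(build_message(c, f"reading {c}".encode()) for c in (65534, 65535, 65536))
decoded = list(parse_messages(blob))
print([counter for counter, _ in decoded])
```

When staring at an unknown file, the same ideas run in reverse: a field that increments by one at regular intervals is a candidate counter, and bytes that change unpredictably whenever anything before them changes are a candidate checksum.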

You should add computational complexity.

I’m not sure if your comment is disagreeing with any of this. It sounds like we’re on the same page about the fact that exact reasoning is prohibitively costly, and so you will be reasoning approximately, will often miss things, etc.

I agree. The term I've heard to describe this state is "violent agreement".

so in practice wrong conclusions are almost always due to a combination of both "not knowing enough" and “not thinking hard enough” / “not being smart enough.”

The only thing I was trying to point out (maybe more so for everyone else reading the commentary than for you specifically) is that it is perfectly rational for an actor to "not think hard enough" about some problem and thus arrive at a wrong conclusion (or correct conclusion but for a wrong reason), because that actor has higher priority items requiring their attention, and that puts hard time constraints on how many cycles they can dedicate to lower priority items, e.g. debating AC efficiency. Rational actors will try to minimize the likelihood that they've reached a wrong conclusion, but they'll also be forced to minimize or at least not exceed some limit on allowed computation cycles, and on most problems that means the computation cost + any type of hard time constraint is going to be the actual limiting factor.

Although I think even that is more or less what you meant by

in some sense you’ve probably spent too long thinking about the question relative to doing something else

In engineering R&D we often do a bunch of upfront thinking at the start of a project, and the goal is to identify where we have uncertainty or risk in our proposed design. Then, rather than spend 2 more months in meetings debating back-and-forth who has done the napkin math correctly, we'll take the things we're uncertain about and design prototypes to burn down risk directly.

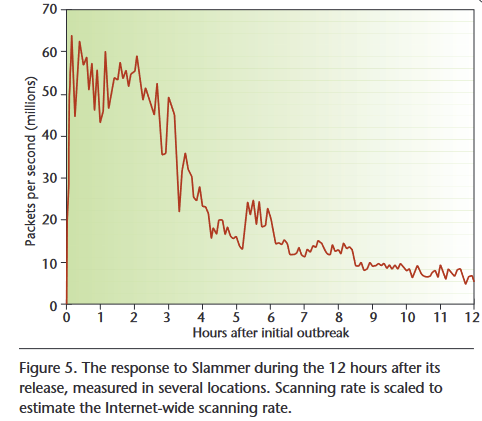

First, it only targeted Windows machines running a Microsoft SQL Server reachable via the public internet. I would not be surprised if ~70% or more theoretically reachable targets were not infected because they ran some other OS (e.g. Linux) or server software instead (e.g. MySQL). This page makes me think the market share was actually more like 15%, so 85% of servers were not impacted. By not impacted, I mean, "not actively contributing to the spread of the worm". They were however impacted by the denial-of-service caused by traffic from infected servers.

Second, the UDP port (1434) that the worm used could be trivially blocked. I have discussed network hardening in many of my posts. The easiest way to prevent yourself from getting hacked is to not let the hacker send traffic to you -- blocking IP ranges, ports, unneeded Ethernet or IP protocols, and other options available in both network hardware (routers) and software firewalls provides a low cost and highly effective way to do so. This contained the denial-of-service.

Third, the worm's attack only persisted in RAM, so the only thing a host had to do was restart the infected application. Combined with the second point, this would prevent the machine from being reinfected.

This graph[1] shows the result of widespread adoption of filter rules within hours of the attack being detected.

This was actually a kind of fun test case for a priori reasoning. I think that I should have been able to notice the consideration denkenbgerger raised, but I didn't think of it. In fact when I started reading his comment my immediate reaction was "this methodology is so simple, how could the equilibrium infiltration rate end up being relevant?" My guess would be that my a priori reasoning about AI is wrong in tons of similar ways even in "simple" cases. (Though obviously the whole complexity scale is shifted up a lot, since I've spent hundreds of hours thinking about key questions.)

This idea -- that you should have been able to notice the issue with infiltration rates -- is what I've been questioning when I ask "what is the computational complexity of general intelligence" or "what does rational decision making look like in a world with computational costs for reasoning".

There is a mindset that people are simply not rational enough, and if they were more rational, they wouldn't fall into those traps. Instead, they would more accurately model the situation, correctly anticipate what will and won't matter, and arrive at the right answer, just by exercising more careful, diligent thought.

My hypothesis is that whatever that optimal "general intelligence" algorithm[1] is -- the one where you reason a priori from first principles, and then you exhaustively check all of your assumptions for which one might be wrong, and then you recursively use that checking to re-reason from first principles -- it is computationally inefficient enough that for most interesting[2] problems, it is not realistic to assume that it can run to completion in any reasonable[3] time with realistic computation resources, e.g. a human brain, or a supercomputer.[4]

I suspect that the human brain is implementing some type of randomized vaguely-Monte-Carlo-like algorithm when reasoning, which would explain why (1) people can often solve problems in a reasonable amount of time[5], (2) people often miss factors during a priori reasoning but understand them easily after they've seen them confirmed experimentally, (3) different people miss different things, (4) someone who continues to think about a problem for an arbitrarily long period of time[6] will often continue to generate insights, and (5) those insights generated from thinking about a problem for an arbitrarily long period of time are often only loosely correlated[7].

In that world, while it is true that you should have been able to notice the problem, there is no guarantee on how much time it would have taken you to do so.
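The difference between a search that gets stuck and one that occasionally jumps somewhere random can be sketched with random-restart hill climbing. This is only a toy analogy and not a claim about how brains actually work; `landscape`, `hill_climb`, and `random_restart` are names invented for this example.

```python
import random

def landscape(x: int) -> int:
    """Toy score with two peaks: a small one at x=10 and a taller one at x=80."""
    return max(5 - abs(x - 10), 20 - abs(x - 80))

def hill_climb(score, start: int, lo: int, hi: int) -> int:
    """Greedy local search: move to the better neighbor until none improves."""
    x = start
    while True:
        neighbors = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        best = max(neighbors, key=score)
        if score(best) <= score(x):
            return x  # local optimum: no neighbor is strictly better
        x = best

def random_restart(score, lo: int, hi: int, restarts: int, seed: int = 0) -> int:
    """Run hill_climb from several random starting points and keep the best result."""
    rng = random.Random(seed)
    best = None
    for _ in range(restarts):
        candidate = hill_climb(score, rng.randint(lo, hi), lo, hi)
        if best is None or score(candidate) > score(best):
            best = candidate
    return best

stuck = hill_climb(landscape, 0, 0, 100)          # starts near the small peak
jumped = random_restart(landscape, 0, 100, 20)    # 20 random starting points
print(stuck, landscape(stuck), jumped, landscape(jumped))
```

A single deterministic climb from the left edge halts on the small peak, and no amount of extra care at that spot reveals the taller one. The restarts find it only because some starting point happens to land on the right slope, and nothing bounds how many restarts that takes on a harder landscape.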

- ^

The "God algorithm" for reasoning, to use a term that Jeff Atwood wrote about in this blog post. It describes the idea of an optimal algorithm that isn't possible to actually use, but the value of thinking about that algorithm is that it gives you a target to aim towards.

- ^

The use of the word "interesting" is intended to describe the nature of problems in the real world, which require institutional knowledge, or context-dependent reasoning.

- ^

The use of the word "reasonable" is intended to describe the fact that if a building is on fire and you are inside of it, you need to calculate the optimal route out of that burning building in a time period that is less than a few minutes in length in order to maximize your chance of survival. Likewise, if you are tasked to solve a problem at work, you have somewhere between weeks and months to show progress or be moved to a separate problem. For proving a theorem, it might be reasonable to spend 10+ years on it if there's nothing necessitating a more immediate solution.

- ^

This is mostly based on an observation that for any scenario with, say, some fixed number of "obvious" factors influencing it, there are effectively arbitrarily many "other" factors that may influence the scenario. The process of deterministically ordering an arbitrarily long list, and then proceeding down the list from "most likely to impact the scenario" to "least likely to impact the scenario" to manually check if each "other" factor actually does matter, has an arbitrarily high computational cost.

- ^

Feel free to put "solve" in quotes and read this as "halt in a reasonable time" instead. Getting the correct answer is optional.

- ^

Like mathematical proofs, or the thing where people take a walk and suddenly realize the answer to a question they've been considering.

- ^

It's like the algorithm jumped from one part of solution space where it was stuck to a random, new part of the solution space and that's where it made progress.

I deliberately tried to focus on "external" safety features because I assumed everyone else was going to follow the task-as-directed and give a list of "internal" safety features. I figured that I would just wait until I could signal-boost my preferred list of "internal" safety features, and I'm happy to do so now -- I think Lauro Langosco's list here is excellent and captures my own intuition for what I'd expect from a minimally useful AGI, and that list does so in probably a clearer / easier to read manner than what I would have written. It's very similar to some of the other highly upvoted lists, but I prefer it because it explicitly mentions various ways to avoid weird maximization pitfalls, like that the AGI should be allowed to fail at completing a task.