Murphyjitsu: an Inner Simulator algorithm

post by CFAR!Duncan (CFAR 2017) · 2022-06-30T21:50:22.510Z · LW · GW · 24 comments

Contents

Inner Simulator:

Explicit/Verbal models

Making good use of your inner simulator

Some specific inner sim functions

What happens next?

The Murphyjitsu Technique

Inner Simulator—Further Resources


Epistemic status: Firm

The concepts underlying the Inner Simulator model and the related practical technique of Murphyjitsu are well-known and well-researched, including Kahneman’s S1/S2, mental simulation, and mental contrasting. Similarly, the problems that this unit seeks to address (such as optimism bias and the planning fallacy) have been studied in detail. There is some academic support for specific substeps of Murphyjitsu (e.g. prospective hindsight), and strong anecdotal support (but no formal research) for the overall technique, which was developed through iterated experimentation and critical feedback. See the Further Resources section for more discussion.

Claim: there is a part of your brain (not a specific physical organ, but a metaphorical one) which is keeping track of everything.

It's not actually recording all of the information in a permanent fashion. Rather, what it's doing is building up a consistent, coherent model of how things work. It watches objects fall, and builds up anticipations: heavy objects drop straight down, lighter ones flutter, sometimes wind moves things in chaotic ways. It absorbs all of the social interactions you observe, and assembles a library of tropes and clichés and standard patterns.

Whenever you observe something that doesn't match with your previous experience, you experience (some amount of) surprise and confusion, which eventually resolves in some kind of update. You were surprised to see your partner fly off the handle about X, and now you have a sense that people can be sensitive about X. You were surprised by how heavy the tungsten cube was, and now you have a sense that objects can sometimes be really, really dense.

You can think of this aggregated sense of how things work as a simulated inner world—a tiny, broad-strokes sketch of the universe that you carry around inside your head. When you move to catch a falling pen, or notice that your friend is upset just by the way they entered the room, you're using this inner simulator. It's a different sort of processing from the explicit/verbal stuff we usually call "thinking," and it results in a very different kind of output.

Inner Simulator:

- Intuitive; part of the cluster we label "System 1"

- Outputs feelings, urges, reflexes, and vivid predictions

- Learns well from experience and examples; responds to being shown

- Good at social judgment, routine tasks, and any situation where you have lots of experience and training data

Explicit/Verbal models

- Analytical; part of the cluster we refer to as "System 2"

- Outputs arguments, calculations, and other legible content

- Learns well from facts and explanations; responds to being told

- Good at comparisons and reframings (e.g. noticing that $1/day ≈ $350/year)

We don't have to think about how to catch a falling pen, or send explicit instructions to our body about how to move to do it, because our inner simulator "knows" how falling objects move, and it "knows" how to make our hand go to a particular place. Similarly, it knows what facial expressions mean, what it’s like to drive from home to work, and what sorts of things tend to go wrong given a set of circumstances. It’s a powerful tool, and learning how to access it and when to trust it is one of the first steps to becoming a whole-brain thinker.

That’s not to say that your inner simulator is superior to your explicit model maker—each has both strengths and weaknesses, and can be either the right tool or the wrong one, depending on the situation. In any given moment, you’re probably receiving feedback from both of these “advisors,” as well as other sources of information like your friends or the internet.

In a sense, it’s your job to balance the competing recommendations from all of these different advisors to arrive at the best possible decision. Your inner sim, for example, provides feedback extremely quickly and is good at any type of task where you have lots of experience to draw on, but tends to fall prey to framing effects and will sometimes sneakily substitute an easy question for a harder one. Your explicit verbal models, on the other hand, are great for abstractions and comparisons, but are slow and vulnerable to wishful thinking and ideological distortions.

You can think of your inner sim as a black box that’s capable of performing a few specific functions, given certain input. It’s very, very good at doing those functions, and not so great with most other things (for instance, inner sim is terrible at understanding large numbers, and causes us to donate the same amount of money if someone asks us to help save 8,000 hypothetical birds from oil spills as if they ask us to save 800,000). But if you need a particular kind of reality check, it helps to know which parts of reality inner sim sees most clearly.

Making good use of your inner simulator

Like most algorithms, your inner simulator will output good and useful information if you give it good and useful input, and it will output useless garbage if that’s what you feed it. It’s an especially good check on wishful thinking and motivated cognition—just imagine its response to a list of New Year’s resolutions—but you need to make sure that you aren’t rigging the game by phrasing questions the wrong way, and you need to make sure that you're asking questions within a domain where your inner sim actually has representative data.

(Because your inner sim will often answer confidently either way, even if the question is outside of your relevant experience! For instance, many people erroneously slam on the brakes the first time they start to skid on ice, even though this is counterproductive—that's because the inner sim "knows" that slamming on the brakes will stop the car, and doesn't know that this does not apply in this novel situation.)

Two useful strategies for avoiding vague, open-ended “garbage” are sticking to concrete examples and looking for next actions.

Asking for Examples

It’s just so frustrating. It’s like, every little thing turns into a fight, you know? And then it’s my fault that we’re fighting, and I have to either pick between defending myself or smoothing things over, and since I’m the only one who ever wants to smooth things over, that means that I’m always the one apologizing. And last week—I told you about what happened while we were stuck in traffic, right? No? So, like, out of nowhere, while I’m trying to focus on not getting into a wreck, all of a sudden we’re back talking about grad school again...

In a situation like this, your inner sim has nothing to grab onto—everything is vague, everything is open to interpretation, and clichés and stereotypes are filling in for actual understanding. It could be that your friend is in the right, and needs your commiseration; it could also be that the situation calls for some harsh truths and tough love. How can you tell, one way or another? Try some of these:

- What were the last couple of things you fought about?

- What were you talking about right before grad school came up?

- When you say you’re the only one who wants to smooth things over, what do you mean? What are you seeing and hearing that give you that sense?

When it’s just “every little thing turns into a fight,” your inner sim literally doesn’t know what to think—there are too many possibilities.

(Another way to think of this is that both it-being-your-fault and it-being-their-fault are consistent with your inner sim's general sense of how-things-work; they're both plausible and your inner sim can't help you distinguish between them.)

But when the argument started with “Do we really have to go over to Frank’s again?” or with “Oh, hey, I see you got new shoes. Nice!” you have a much clearer sense of what the situation really looks like. The detail helps you narrow down which swath of past experiences you want to draw your intuitions from.

Asking for examples is a handy technique for any conversation. When you keep your inner simulator engaged, and keep feeding it data, you might notice that it’s easier to:

- Notice when your friend’s claim is false. In particular, if you keep your attention focused on concrete detail, you’ll be more likely to notice where the error is, instead of just having a vague sense of something “not adding up.”

- Notice if you’re misunderstanding your friend. When we listen to someone else, we often try to approximate and anticipate what they’re explaining. If you keep asking for examples, you’ll be more likely to notice if you’ve been accidentally adding or leaving out important features of the topic at hand.

- Notice if you’re the one who’s wrong. It’s easy to avoid noticing that you’ve made a mistake, because noticing is painful! The more concrete your disagreement, the easier it is to notice if there’s a flaw in your own argument, and to update accordingly.

Searching for Next Actions

I’m pretty excited about next year. I’m going to finish paying off my loan, and once the weather gets better, I think I’m going to start running again. Oh! And I’ve been talking to some friends about maybe taking a trip to Europe—that is, if I don’t end up going back to school.

A goal isn’t the same thing as a plan. I might have the goal of exercising more, but if I’m going to make that goal a reality—especially given that I’m not currently exercising as much as I “should”—then I’ll need to think about when and how I’ll get to the gym, what I’ll do when I get there, what the realistic obstacles are going to be, and how I’m going to hold the plan together moment by moment and month by month.

But even before I get to those things, I’ll need to take my next action, which might be printing out a gym coupon, or setting a reminder in my calendar, or looking at my schedule for a good time to buy workout clothes. A next action is a step that sets your plan in motion—it’s both the first thing you’d have to do to build momentum, and also the first roadblock, if left undone. Usually it’s not particularly exciting or dramatic—next actions are often as mundane as putting something on the calendar or looking something up online. If you’re not able to take your next action at the moment you think of it, it’s generally helpful to think of a trigger—some specific event or time that will remind you to follow through.

For instance, if I have a goal of applying to a particular school next fall, then my plan will likely involve things like updating my CV, looking at the application process online, checking my finances, creating a list of plausible contacts for letters-of-recommendation, making decisions about work and relationships, and a host of other things. If, after thinking it through, I decide that my next action is to spend half an hour on the school’s website, then I need a solid trigger to cause me to remember that at the end of a long day and a long commute, when I get home tired and hungry and Netflixy. That trigger might be a phone alarm, or an email reminder in my inbox, or a specific connection to something in my evening routine—when I hear the squeak of my bedroom chair, I’ll remember to go online—but whatever trigger I choose, I’ll be better off having one than not, and better off with a concrete, specific one than a vague, forgettable one.

(For more detail on the process of choosing triggers and actions, see Trigger-Action Planning.)
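As a rough illustration of the point about next actions and triggers, here is a minimal Python sketch. The `NextAction` class, its field names, and the `has_trigger` check are all hypothetical names invented for this example, not part of any CFAR material; the only idea taken from the text is that a next action is better off with a concrete, specific trigger attached.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NextAction:
    """One concrete step that sets a plan in motion (hypothetical structure)."""

    goal: str
    action: str
    # A specific event or time meant to remind you to follow through.
    trigger: Optional[str] = None

    def has_trigger(self) -> bool:
        # Treat a missing or blank trigger as "no trigger chosen yet".
        return bool(self.trigger and self.trigger.strip())


# A goal with a fuzzy step and no trigger attached.
vague = NextAction(
    goal="Apply to school next fall",
    action="Look into the application",
)

# The same goal with a specific step and a concrete trigger from the text.
ready = NextAction(
    goal="Apply to school next fall",
    action="Spend half an hour on the school's website",
    trigger="When I hear the squeak of my bedroom chair",
)

for item in (vague, ready):
    status = "has a trigger" if item.has_trigger() else "needs a trigger"
    print(f"{item.action}: {status}")
```

The check is deliberately crude: it only notices whether a trigger exists, not whether it is specific enough to be memorable, which is still a judgment call.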

Some specific inner sim functions

What happens next?

Start a “mental movie” by concretely visualizing a situation, and see what your brain expects to happen. If this is the beginning of the scene, how does the scene end?

- Input: A laptop is balanced on the edge of a table in a busy office.

- Input: You lift a piece of watermelon to your mouth and take a bite.

- Input: You sneak up on your closest colleague at work, take aim with a water gun, and fire.

How shocked am I?

Check your “surprise-o-meter”—visualize a scenario from start to finish, and see whether you “buy” that things would actually play out that way. Common outputs are “seems right/shrug,” “surprised,” and “shocked,” and you may benefit from building a habit of rating your surprise on a scale of one to ten (so that you start to learn to feel the difference between three-out-of-ten surprised and five-out-of-ten surprised, and can detect a shift from e.g. seven down to six).

- Input: You’ve purchased food to feed twenty-five people at your party, and only ten people show up.

- Input: Same party, but seventy people show up.

- Input: You finish your current project in less than half the time you allotted for it.

What went right/wrong?

Use your “pre-hindsight”—start by assuming that your current plan has utterly failed. What explanation leaps to mind about why this happened? What's the "obvious" answer, according to all of your past experience, and your aggregated sense of how things work?

- Input: Think of a specific email you intend to send next week. Turns out, the person you sent it to was extremely irritated by it. What was the part they didn't like?

- Input: Imagine you receive a message from yourself from the future, telling you that you should absolutely stay at your current job. What went wrong in the world where you left?

- Input: It’s now been three months since you read this primer, and you have yet to make deliberate use of your inner simulator. What happened?

The Murphyjitsu Technique

Murphy’s Law states “Anything that can go wrong, will go wrong.” Worse, even people who are familiar with Murphy's Law are notoriously bad at applying it when making plans and predictions. In a classic experiment, 37 psychology students were asked to estimate how long it would take them to finish their senior theses “if everything went as poorly as it possibly could,” and they still underestimated the time it would take, as a group (the average prediction was 48.6 days, and the average actual completion time was 55.5 days).

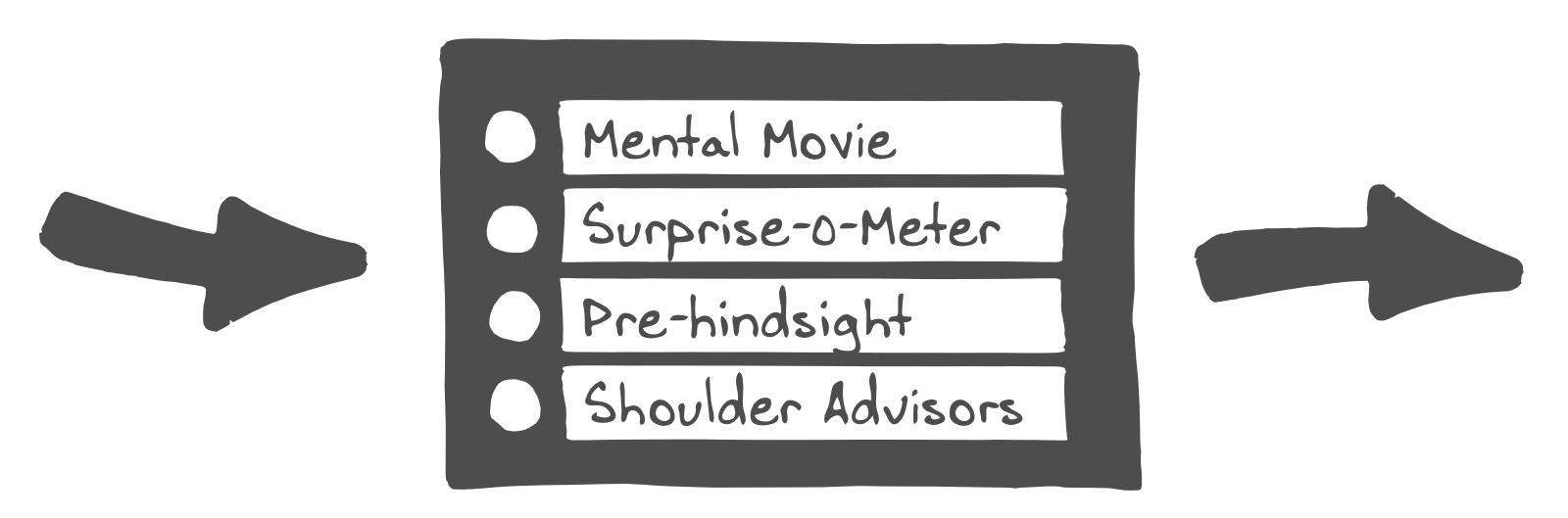
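To make the size of that gap concrete, here is a small arithmetic sketch in Python using only the two averages quoted above. The variable names are illustrative, and expressing the shortfall as a fraction of the actual time is one choice among several.

```python
# Averages quoted above for the senior-thesis experiment.
predicted_worst_case_days = 48.6
actual_days = 55.5

# How far the "worst case" guess fell short of what actually happened.
shortfall_days = actual_days - predicted_worst_case_days
shortfall_fraction = shortfall_days / actual_days

print(
    f"Shortfall: {shortfall_days:.1f} days "
    f"({shortfall_fraction:.1%} of the actual time)"
)
# prints "Shortfall: 6.9 days (12.4% of the actual time)"
```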

However, where straightforward introspection fails, a deliberate use of inner sim can provide a valuable “second opinion.” Below are the steps for Murphyjitsu, a process for bulletproofing your strategies and plans.

- Step 0: Select a goal. A habit you want to install, or a plan you’d like to execute, or a project you want to complete.

- Step 1: Outline your plan. Be sure to list next actions, concrete steps, and specific deadlines or benchmarks. It’s important that you can actually visualize yourself moving through your plan, rather than having something vague like “work out more.”

- Step 2: Surprise-o-meter. Imagine you get a message from the future: it’s been months, and it turns out the plan failed, or you’ve made little or no progress! Where are you on the scale from “yeah, that sounds right” to “I literally don’t understand what happened”? If you’re completely shocked, good job: your inner sim endorses your plan! If you’re not, though, go to Step 3.

- Step 3: Pre-hindsight. Try to construct a plausible narrative for what kept you from succeeding. Remember to look at both internal and external factors.

- Step 4: Bulletproofing. What single action can you take, to head off the failure mode your inner sim predicts?

- Step 5: Iterate steps 2-4. That’s right—it’s not over yet! Even with your new failsafes, your plan still failed. Are you shocked? If so, victory! If not—keep going.
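The loop in Steps 2 through 5 can be sketched in Python, purely as an illustration of the control flow. Everything here is an assumption made for the example: the function name `murphyjitsu`, the three callbacks standing in for your own introspection, the `SHOCK_THRESHOLD` cutoff on a one-to-ten surprise scale, and the `max_rounds` cap. The technique itself runs in your head, not in code.

```python
from typing import Callable, List, Tuple

# Assumed cutoff: at or above this (on a 1-10 scale) counts as "shocked".
SHOCK_THRESHOLD = 8


def murphyjitsu(
    plan: List[str],
    rate_surprise: Callable[[List[str]], int],
    imagine_failure: Callable[[List[str]], str],
    choose_safeguard: Callable[[str], str],
    max_rounds: int = 10,
) -> Tuple[List[str], List[str]]:
    """Repeat Steps 2-4 until an imagined failure would be shocking."""
    plan = list(plan)  # copy the Step 1 outline so the caller's list is untouched
    failure_modes: List[str] = []
    for _ in range(max_rounds):
        # Step 2: the plan failed. How surprised are you?
        if rate_surprise(plan) >= SHOCK_THRESHOLD:
            break
        # Step 3: pre-hindsight, the most plausible story of the failure.
        failure = imagine_failure(plan)
        failure_modes.append(failure)
        # Step 4: bulletproofing, one action that heads off that failure.
        plan.append(choose_safeguard(failure))
    return plan, failure_modes


# Toy stand-ins for introspection: surprise rises by 2 per safeguard added.
base_plan = ["Print gym coupon", "Go to the gym Mon/Wed/Fri at 7am"]
final_plan, failures = murphyjitsu(
    base_plan,
    rate_surprise=lambda p: 4 + 2 * (len(p) - len(base_plan)),
    imagine_failure=lambda p: f"failure mode {len(p) - len(base_plan) + 1}",
    choose_safeguard=lambda f: f"safeguard against {f}",
)
print(final_plan)
print(failures)
```

With these toy callbacks the loop runs two rounds of pre-hindsight and bulletproofing before the surprise rating reaches the threshold. In real use, the three callbacks are just you, consulting your inner sim.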

Irresponsibly cavalier psychosocial speculation: It seems plausible that the reason this works for many people (where simply asking “what could go wrong?” fails) is that, in our evolutionary history, there was a strong selection pressure in favor of individuals with a robust excuse-generating mechanism. When you’re standing in front of the chief, and he’s looming over you with a stone axe and demanding that you explain yourself, you’re much more likely to survive if your brain is good at constructing a believable narrative in which it’s not your fault.

Inner Simulator—Further Resources

Kahneman and Tversky (1982) proposed that people often use a simulation heuristic to make judgments. Mental simulation of a scenario is used to make predictions by imagining a situation and then running the simulation to see what happens next, and it is also used to give explanations for events by mentally changing prior events and seeing if the outcome changes.

Kahneman, D. & Tversky, A. (1982). The simulation heuristic. In D. Kahneman, P. Slovic, & A. Tversky (eds.) Judgment under uncertainty: Heuristics and biases (pp. 201-208).

Research on mental simulation has found that imagining future or hypothetical events draws on much of the same neural circuitry that is used in memory. The ease with which a simulated scenario is generated often seems to be used as a cue to the likelihood of that scenario. For a review, see:

Szpunar, K.K. (2010). Episodic future thought: An emerging concept. Perspectives on Psychological Science, 5, 142-162. http://goo.gl/g0NNI

“Mental contrasting,” sometimes referred to as gain-pain movies, is a specific algorithm for making optimism and drive more accurate and robust in the face of adversity. In her book Rethinking Positive Thinking, Dr. Gabriele Oettingen outlines the steps of mental contrasting, along with the underlying justification and examples of results.

Oettingen, Gabriele (2014). Rethinking Positive Thinking.

“Focusing” is a practice of introspection systematized by psychotherapist Eugene Gendlin which seeks to build a pathway of communication and feedback between a person’s “felt sense” of what is going on (an internal awareness which is often difficult to articulate) and their verbal explanations. It can be understood as a method of querying one’s inner simulator (and related parts of System 1). Gendlin’s (1982) book Focusing provides a guide to this technique, which can be used either individually or with others (in therapy or other debugging conversations).

Gendlin, Eugene (1982). Focusing. Second edition, Bantam Books. http://en.wikipedia.org/wiki/Focusing

The idea of identifying the concrete “next action” for any plan was popularized by David Allen in his book Getting Things Done.

Allen, David (2001). Getting Things Done: The Art of Stress-Free Productivity. http://en.wikipedia.org/wiki/Getting_Things_Done

Mitchell, Russo, and Pennington (1989) developed the technique which they called “prospective hindsight.” They found that people who imagined themselves in a future world where an outcome had already occurred were able to think of more plausible paths by which it could occur, compared with people who merely considered the outcome as something that might occur. Decision-making researcher Gary Klein has used this technique when consulting with organizations to run “premortems” on projects under consideration: assume that the project has already happened and failed; why did it fail? Klein’s (2007) two-page article provides a useful summary of this technique, and his (2004) book The Power of Intuition includes several case studies.

Mitchell, D., Russo, J., & Pennington, N. (1989). Back to the future: Temporal perspective in the explanation of events. Journal of Behavioral Decision Making, 2, 25-38. http://goo.gl/GYW6hg

Klein, G. (2007). Performing a project premortem. Harvard Business Review, 85, 18-19. http://hbr.org/2007/09/performing-a-project-premortem/ar/1

Klein, Gary (2004). The Power of Intuition: How to Use Your Gut Feelings to Make Better Decisions at Work.

24 comments


comment by Kenoubi · 2022-07-01T01:32:43.870Z · LW(p) · GW(p)

in a classic experiment, 37 psychology students were asked to estimate how long it would take them to finish their senior theses “if everything went as poorly as it possibly could,” and they still underestimated the time it would take, as a group (the average prediction was 48.6 days, and the average actual completion time was 55.5 days).

That's nuts. Does anyone really think that, "if everything went as poorly as it possibly could," the thesis would ever get done at all? It's so bizarre it makes me question whether the students actually understood what they were being asked.

Replies from: Valentine↑ comment by Valentine · 2022-07-03T17:54:17.793Z · LW(p) · GW(p)

IME the sense that this is nuts seems to be a quirk of STEM thinking. In practice, most non-rationalists seem to interpret "How long will this take if everything goes as poorly as possible?" as something like "Assume it gets done but the process is super shitty. How long will it take?"

It's a quirk of rationalist culture (and a few others — I've seen this from physicists too) to take the words literally and propose that "infinitely long" is a plausible answer, and be baffled as to how anyone could think otherwise.

Many smart political science and English majors don't seem to go down that line of reasoning, for instance.

Replies from: Kenoubi↑ comment by Kenoubi · 2022-07-04T18:19:57.345Z · LW(p) · GW(p)

"Assume it gets done but the process is super shitty. How long will it take?"

I would, in fact, consider that interpretation to be unambiguously "not understanding what they were being asked", given the question in the post. Not understanding what is being asked is something that happens a fair bit.

I'll give you that if they had asked "assuming it gets done but everything goes as poorly as possible, how long did it take?", it takes a bit of a strange mind to look for some weird scenario that strings things along for years, or centuries, or eons. But "never" is something that happens quite often. Even given that people do respond with (overly optimistic) numbers, I'm not convinced that "never" just didn't occur to them because they were insufficiently motivated to give a correct answer.

If what you say is true, though, that's a bit discouraging for the prospects of communicating with even smart political science and English majors. I don't suppose you know some secret way to get them to respond to what you actually say, not something else kind of similar?

Replies from: Valentine, anonymousaisafety↑ comment by Valentine · 2022-07-04T19:03:18.830Z · LW(p) · GW(p)

I would, in fact, consider that interpretation to be unambiguously "not understanding what they were being asked", given the question in the post.

Two points:

- "Not understanding what they were being asked" isn't an explanation. This is a common (really, close-to-universal) gap in people's attempts to understand one another. If these students didn't "understand" (noting the ambiguity about what exactly that means), what were they doing instead? "Being stupid"? "Not thinking"? "Falling prey to biases"? None of this tells you what they were doing.

- I think what I described is a perfectly fine way for someone to interpret the question.

For that second point, let's look at the wording again:

…psychology students were asked to estimate how long it would take them to finish their senior theses “if everything went as poorly as it possibly could,”…

"How long it would take". Which someone could reasonably interpret as implying that it would, in fact, get done. (Many (most?) people would consider the question "How long does this take if it never gets done?" to be gibberish.)

And in the context of a senior thesis, it's useless to think about it in terms of it getting done in (say) 20 years. It's gotta get done soon enough to graduate. I think in most cases within a single college term?

So given the context of the question, there are some reasonable constraints a mind might put on the question.

…which you're interpreting as them "unambiguously" not understanding what was actually said.

This attitude about there being an objectively correct interpretation of what was said, and that it matches your interpretation of what was said, and that when people hear something else they're making a mistake, is another way to describe the STEM thing I was talking about.

This works in STEM disciplines basically by definition. It's actually a great power tool. You troubleshoot differences in interpretation by coming to shared agreements about how language works and what concepts to use to interpret things. It makes sense to find out who was in fact wrong. And you do so in part by emphasizing and presenting the argument that justifies your interpretation.

But that communication strategy doesn't work in most places.

The "secret way" I use to communicate with non-rationalists is to assume absolutely everything they do and think makes sense on the inside, and I try to understand that way of making sense. It's not about making them interpret things differently; that's STEM thinking and only works with others who have agreed to STEM communication protocols. Instead, I try to get some clear sense of what it's like to be them, and I account for that in how I communicate.

And in particular, I have to make a point of setting aside my confidence that I'm using correct interpretation protocols (or even that there is such a thing) and that they're not responding to the words that were actually said. That's anti-helpful for communication.

Replies from: Kenoubi↑ comment by Kenoubi · 2022-07-05T11:11:37.787Z · LW(p) · GW(p)

Given the context, I imagine what they were doing is making up a number that was bigger than another number they'd just made up. Humans are cognitive misers. A student would correctly guess that it doesn't really matter if they get this question right and not try very hard. That's actually what I would do in a context where it was clear that a numeric answer was required, I was expected to spend little time answering, and I was motivated not to leave that particular question blank.

My answer of "never" also took little thought (for me). I thought a bit more about it, and if I did interpret it as assuming the thesis gets done (which, yes, one can interpret it that way), then the answer would be "however many days it is until the last date on which the thesis will be accepted". Which is also not the answer the students gave.

It's a bad question that I don't think it would ever even occur to anyone to ask if they weren't trying to have a number that they could aggregate into a meaningless (but impressive-sounding) statistic. It's in the vicinity of a much more useful question, which is "what could go poorly to make this thesis take a long time / longer than expected". And sure, you could answer the original question by decomposing it into "what could go wrong" and "if all of those actually did go wrong, how long would it take". And if you did that you'd have the answer to the first question, which is actually useful, regardless of how accurate the answer to the second is. But that's the actual point of this whole post, right? And in fact the reason for citing that statistic at all is that it appears to demonstrate that these students were not doing that?

↑ comment by anonymousaisafety · 2022-07-04T20:06:59.925Z · LW(p) · GW(p)

If we look at the student answers, they were off by ~7 days, or about a 12% error relative to the actual completion time (about 14% relative to their prediction).

The only way I can interpret your post is that you're suggesting all of these students should have answered "never".

I'm not convinced that "never" just didn't occur to them because they were insufficiently motivated to give a correct answer.

How far off is "never" from the true answer of 55.5 days?

It's about infinitely far off. It is an infinitely wrong answer. Even if a project ran 1000% over every worst-case pessimistic schedule, any finite prediction was still infinitely closer than "never".

It's a quirk of rationalist culture (and a few others — I've seen this from physicists too) to take the words literally and propose that "infinitely long" is a plausible answer, and be baffled as to how anyone could think otherwise.

That's because "infinitely long" is a trivial answer for any task that isn't literally impossible.[1] It provides 0 information and takes 0 computational effort. It might as well be the answer from a non-entity, like asking a brick wall how long the thesis could take to complete.

Question: How long can it take to do X?

Brick wall: Forever. Just go do not-X instead.

It is much more difficult to give an answer for how long a task can take assuming it gets done while anticipating and predicting failure modes that would cause the schedule to explode, and that same answer is actually useful since you can now take preemptive actions to avoid those failure modes -- which is the whole point of estimating and scheduling as a logical exercise.

The actual conversation that happens during planning is

A: "What's the worst case for this task?"

B: "6 months."

A: "Why?"

B: "We don't have enough supplies to get past 3 trial runs, so if any one of them is a failure, the lead time on new materials with our current vendor is 5 months."

A: "Can we source a new vendor?"

B: "No, but... <some other idea>"

[1] In cases when something is literally impossible, instead of saying "infinitely long", or "never", it's more useful to say "that task is not possible" and then explain why. Communication isn't about finding the "haha, gotcha" answer to a question when asked.

↑ comment by Kenoubi · 2022-07-05T10:45:56.824Z · LW(p) · GW(p)

Yes, given that question, IMO they should have answered "never". 55.5 days isn't the true answer, because in reality everything didn't go as poorly as possible. You're right, it's a bad question that a brick wall would do a better job of answering correctly than a human who's trying to be helpful.

The answer to your question is useful, but not because of the number. "What could go wrong to make this take longer than expected?" would elicit the same useful information without spuriously forcing a meaningless number to be produced.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-07-05T16:12:38.374Z · LW(p) · GW(p)

I have a sense that this is a disagreement about how to decide what words "really" mean, and I have a sense that I disagree with you about how to do that.

https://www.lesswrong.com/posts/57sq9qA3wurjres4K/ruling-out-everything-else [LW · GW]

"What could go wrong to make this take longer than expected?" would elicit the same useful information without spuriously forcing a meaningless number to be produced.

It is false that that question would elicit the same useful information. Quoting from something I previously wrote elsewhere:

Replies from: anonymousaisafety, Kenoubi, Kenoubi

Sometimes, we ask questions we think should work, but they don't.

e.g. "What could go wrong?" is not a question that works for most people, most of the time.

It turns out, though, that if you just tell people "guess what—I bring to you a message from the future. The plan failed." ... it turns out that, in many cases, you can follow up this blunt assertion with the question "What happened?" and this question does work.

(The difference between "What could go wrong?" and "It went wrong—what happened?" is so large that it became the centerpiece of one of CFAR's four most popular, enduring, and effective classes.)

Similarly: I've noticed that people sometimes ask me "What do you think?" or "Do you have any feedback?"

And it's not, in fact, the case that my brain possesses none of the information they seek. The information is in there, lurking (just as people really do, in some sense, know what could go wrong).

But the question doesn't work, for me. It doesn't successfully draw out that knowledge. Some other question must be asked, to cause the words to start spilling out of my mouth. "Will a sixth grader understand this?", maybe. Or "If I signed your name at the bottom of this essay and told everyone you wrote it, that'd be okay, right?"

↑ comment by anonymousaisafety · 2022-07-05T22:16:26.666Z · LW(p) · GW(p)

Right. I think I agree with everything you wrote here, but here it is again in my own words:

In communicating with people, the goal isn't to ask a hypothetically "best" question and wonder why people don't understand or don't respond in the "correct" way. The goal is to be understood and to share information and acquire consensus or agree on some negotiation or otherwise accomplish some task.

This means that in real communication with real people, you often need to ask different questions to different people to arrive at the same information, or phrase some statement differently for it to be understood. There shouldn't be any surprise or paradox here. When I am discussing an engineering problem with engineers, I phrase it in the terminology that engineers will understand. When I need to communicate that same problem to upper management, I do not use the same terminology that I use with my engineers.

Likewise, there's a difference when I'm communicating with some engineering intern or new grad right out of college, vs a senior engineer with a decade of experience. I tailor my speech for my audience.

In particular, if I asked this question to Kenoubi ("what's the worst case for how long this thesis could take you?"), and Kenoubi replied "It never finishes", then I would immediately follow up with the question, "Ok, considering cases when it does finish, what's the worst-case look like?" And if that got the reply "the day before it is required to be due", I would then start poking at "What would cause that to occur?".

The reason why I start with the first question is because it works for, I don't know, 95% of people I've ever interacted with in my life? In my mind, it's rational to start with a question that almost always elicits the information I care about, even if there's some small subset of the population that will force me to choose my words as if they're being interpreted by a Monkey's paw.

Replies from: Kenoubi↑ comment by Kenoubi · 2022-07-06T12:22:11.639Z · LW(p) · GW(p)

It didn't work for the students in the study in the OP. That's literally why the OP mentioned it!

Replies from: anonymousaisafety↑ comment by anonymousaisafety · 2022-07-06T19:42:24.283Z · LW(p) · GW(p)

It depends on what you mean by "didn't work". The study described is published in a paper only 16 pages long. We can just read it: http://web.mit.edu/curhan/www/docs/Articles/biases/67_J_Personality_and_Social_Psychology_366,_1994.pdf

First, consider the question of, "are these predictions totally useless?" This is an important question because I stand by my claim that the answer of "never" is actually totally useless due to how trivial it is.

Despite the optimistic bias, respondents' best estimates were by no means devoid of information: The predicted completion times were highly correlated with actual completion times (r = .77, p < .001). Compared with others in the sample, respondents who predicted that they would take more time to finish actually did take more time. Predictions can be informative even in the presence of a marked prediction bias.

...

Respondents' optimistic and pessimistic predictions were both strongly correlated with their actual completion times (rs = .73 and .72, respectively; ps < .01).

Yep. Matches my experience.

We know that only 11% of students met their optimistic targets, and only 30% of students met their "best guess" targets. What about the pessimistic target? It turns out, 50% of the students did finish by that target. That's not just a quirk, because it's actually related to the distribution itself.

However, the distribution of difference scores from the best-guess predictions were markedly skewed, with a long tail on the optimistic side of zero, a cluster of scores within 5 or 10 days of zero, and virtually no scores on the pessimistic side of zero. In contrast, the differences from the worst-case predictions were noticeably more symmetric around zero, with the number of markedly pessimistic predictions balancing the number of extremely optimistic predictions.

In other words, asking people for a best guess or an optimistic prediction results in a biased prediction that is almost always earlier than a real delivery date. On the other hand, while the pessimistic question is not more accurate (it has the same absolute error margins), it is unbiased. The reality is that the study says that people asked for a pessimistic question were equally likely to over-estimate their deadline as they were to under-estimate it. If you don't think a question that gives you a distribution centered on the right answer is useful, I'm not sure what to tell you.
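To make the distinction between "unbiased" and "more accurate" concrete, here is a minimal sketch. The numbers are made up for illustration (they are not data from the study), and the function names are hypothetical.

```python
from statistics import mean

def signed_errors(predicted, actual):
    """Predicted minus actual, in days; negative means the prediction was too optimistic."""
    return [p - a for p, a in zip(predicted, actual)]

def bias(predicted, actual):
    """Mean signed error: zero means over- and underestimates cancel out."""
    return mean(signed_errors(predicted, actual))

def mean_absolute_error(predicted, actual):
    """Average size of the miss, ignoring its direction."""
    return mean(abs(e) for e in signed_errors(predicted, actual))

# Hypothetical completion times (days) for four projects.
actual = [30, 40, 50, 60]
best_guess = [20, 30, 40, 50]    # every estimate is 10 days too early
pessimistic = [40, 30, 60, 50]   # also misses by 10 days, but in both directions

print(bias(best_guess, actual), mean_absolute_error(best_guess, actual))
print(bias(pessimistic, actual), mean_absolute_error(pessimistic, actual))
```

In this toy data both sets of estimates miss by the same amount on average, but only the first set misses in a consistent direction.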

The paper actually did a number of experiments. That was just the first.

In the third experiment, the study tried to understand what people are thinking about when estimating.

Proportionally more responses concerned future scenarios (M = .74) than relevant past experiences (M = .07), t(66) = 13.80, p < .001. Furthermore, a much higher proportion of subjects' thoughts involved planning for a project and imagining its likely progress (M = .71) rather than considering potential impediments (M = .03), t(66) = 18.03, p < .001.

This seems relevant considering that the idea of premortems or "worst case" questioning is to elicit impediments, and the project managers / engineering leads doing that questioning are intending to hear about impediments and will continue their questioning until they've been satisfied that the group is actually discussing that.

In the fourth experiment, the study tries to understand why it is that people don't think about their past experiences. They discovered that just prompting people to consider past experiences was insufficient, they actually needed additional prompting to make their past experience "relevant" to their current task.

Subsequent comparisons revealed that subjects in the recall-relevant condition predicted they would finish the assignment later than subjects in either the recall condition, t(79) = 1.99, p < .05, or the control condition, t(80) = 2.14, p < .04, which did not differ significantly from each other, t(81) < 1.

...

Further analyses were performed on the difference between subjects' predicted and actual completion times. Subjects underestimated their completion times significantly in the control (M = -1.3 days), t(40) = 3.03, p < .01, and recall conditions (M = -1.0 day), t(41) = 2.10, p < .05, but not in the recall-relevant condition (M = -0.1 days), t(39) < 1. Moreover, a higher percentage of subjects finished the assignments in the predicted time in the recall-relevant condition (60.0%) than in the recall and control conditions (38.1% and 29.3%, respectively), χ²(2, N = 123) = 7.63, p < .01. The latter two conditions did not differ significantly from each other.

...

The absence of an effect in the recall condition is rather remarkable. In this condition, subjects first described their past performance with projects similar to the computer assignment and acknowledged that they typically finish only 1 day before deadlines. Following a suggestion to "keep in mind previous experiences with assignments," they then predicted when they would finish the computer assignment. Despite this seemingly powerful manipulation, subjects continued to make overly optimistic forecasts. Apparently, subjects were able to acknowledge their past experiences but disassociate those episodes from their present predictions.

In contrast, the impact of the recall-relevant procedure was sufficiently robust to eliminate the optimistic bias in both deadline conditions

How does this compare to the first experiment?

Interestingly, although the completion estimates were less biased in the recall-relevant condition than in the other conditions, they were not more strongly correlated with actual completion times, nor was the absolute prediction error any smaller. The optimistic bias was eliminated in the recall-relevant condition because subjects' predictions were as likely to be too long as they were to be too short. The effects of this manipulation mirror those obtained with the instruction to provide pessimistic predictions in the first study: When students predicted the completion date for their honor's thesis on the assumption that "everything went as poorly as it possibly could" they produced unbiased but no more accurate predictions than when they made their "best guesses."

It's common in engineering to perform group estimates. Does the study look at that? Yep, the fifth and last experiment asks individuals to estimate the performance of others.

As hypothesized, observers seemed more attuned to the actors' base rates than did the actors themselves. Observers spontaneously used the past as a basis for predicting actors' task completion times and produced estimates that were later than both the actors' estimates and their completion times.

So observers are more pessimistic. Actually, observers are so pessimistic that you have to average it with the optimistic estimates to get an unbiased estimate.

One of the most consistent findings throughout our investigation was that manipulations that reduced the directional (optimistic) bias in completion estimates were ineffective in increasing absolute accuracy. This implies that our manipulations did not give subjects any greater insight into the particular predictions they were making, nor did they cause all subjects to become more pessimistic (see Footnote 2), but instead caused enough subjects to become overly pessimistic to counterbalance the subjects who remained overly optimistic. It remains for future research to identify those factors that lead people to make more accurate, as well as unbiased, predictions. In the real world, absolute accuracy is sometimes not as important as (a) the proportion of times that the task is completed by the "best-guess" date and (b) the proportion of dramatically optimistic, and therefore memorable, prediction failures. By both of these criteria, factors that decrease the optimistic bias "improve" the quality of intuitive prediction.

At the end of the day, there are certain things that are known about scheduling / prediction.

- In general, individuals are as wrong as they are right for any given estimate.

- In general, people are overly optimistic.

- But, estimates generally correlate well with actual duration -- if an individual thinks something is longer in estimate than another task, it most likely is! This is why in SW sometimes estimation is not in units of time at all, but in a concept called "points".

- The larger and more nebulously scoped the task, the worse any estimates will be in absolute error.

- The length of time a task can take follows a distribution with a very long right tail -- a task that takes way longer than expected can take an arbitrary amount of time, but the fastest time to complete a task is limited.

- The best way to actually schedule or predict a project is to break it down into as many small component tasks as possible, identify dependencies between those tasks, and produce most likely, optimistic, and pessimistic estimates for each task, and then run a simulation for chain of dependencies to see what the expected project completion looks like. Use a Gantt chart. This is a boring answer because it's the "learn project management" answer, and people will hate on it because gesture vaguely to all of the projects that overrun their schedule.

There are many interesting reasons for why that happens and why I don't think it's a massive failure of rationality, but I'm not sure this comment is a good place to go into detail on that. The quick answer is that comical overrun of a schedule has less to do with an inability to create correct schedules from an engineering / evidence-based perspective, and much more to do with a bureaucratic or organizational refusal to accept an evidence-based schedule when a totally false but politically palatable "optimistic" schedule is preferred.
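The "three estimates per task, then simulate the dependency chain" approach can be sketched roughly as below. This is only an illustration under stated assumptions: the task names and durations are hypothetical, each task's duration is drawn from a triangular distribution (one common choice, not the only one), and tasks are assumed to be listed after everything they depend on.

```python
import random

# Hypothetical tasks: (optimistic, most_likely, pessimistic) days, plus prerequisites.
# Assumption: every task is listed after the tasks it depends on.
TASKS = {
    "design": ((2, 3, 6), []),
    "backend": ((5, 8, 20), ["design"]),
    "frontend": ((4, 6, 15), ["design"]),
    "integration": ((2, 3, 10), ["backend", "frontend"]),
}

def simulate_once(tasks, rng):
    """Sample one duration per task and return the finish day of the last task."""
    finish = {}
    for name, ((low, mode, high), deps) in tasks.items():
        # A task starts once all of its prerequisites have finished.
        start = max((finish[d] for d in deps), default=0.0)
        finish[name] = start + rng.triangular(low, high, mode)
    return max(finish.values())

def simulate(tasks, runs=10_000, seed=0):
    """Return sorted project completion times across many simulated runs."""
    rng = random.Random(seed)
    return sorted(simulate_once(tasks, rng) for _ in range(runs))

results = simulate(TASKS)
median = results[len(results) // 2]
p90 = results[int(len(results) * 0.9)]
naive = 3 + 8 + 3  # most-likely durations along design -> backend -> integration
print(f"naive: {naive}, median: {median:.1f}, 90th percentile: {p90:.1f}")
```

Because each task's pessimistic tail is longer than its optimistic one, the simulated median lands later than the sum of the "most likely" durations, which is the long-right-tail point from the list above.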

↑ comment by Kenoubi · 2022-07-06T21:12:06.900Z · LW(p) · GW(p)

The best way to actually schedule or predict a project is to break it down into as many small component tasks as possible, identify dependencies between those tasks, and produce most likely, optimistic, and pessimistic estimates for each task, and then run a simulation for chain of dependencies to see what the expected project completion looks like. Use a Gantt chart. This is a boring answer because it's the "learn project management" answer, and people will hate on it because gesture vaguely to all of the projects that overrun their schedule. There are many interesting reasons for why that happens and why I don't think it's a massive failure of rationality, but I'm not sure this comment is a good place to go into detail on that. The quick answer is that comical overrun of a schedule has less to do with an inability to create correct schedules from an engineering / evidence-based perspective, and much more to do with a bureaucratic or organizational refusal to accept an evidence-based schedule when a totally false but politically palatable "optimistic" schedule is preferred.

I definitely agree that this is the way to get the most accurate prediction practically possible, and that organizational dysfunction often means this isn't used, even when the organization would be better able to achieve its goals with an accurate prediction. But I also think that depending on the type of project, producing an accurate Gantt chart may take a substantial fraction of the effort (or even a substantial fraction of the wall-clock time) of finishing the entire project, or may not even be possible without already having some of the outputs of the processes earlier in the chart. These aren't necessarily possible to eradicate, so the take-away, I think, is not to be overly optimistic about the possibility of getting accurate schedules, even when there are no ill intentions and all known techniques to make more accurate schedules are used.

↑ comment by Kenoubi · 2022-07-06T20:57:40.632Z · LW(p) · GW(p)

In other words, asking people for a best guess or an optimistic prediction results in a biased prediction that is almost always earlier than a real delivery date. On the other hand, while the pessimistic question is not more accurate (it has the same absolute error margins), it is unbiased. The reality is that the study says that people asked for a pessimistic question were equally likely to over-estimate their deadline as they were to under-estimate it. If you don't think a question that gives you a distribution centered on the right answer is useful, I'm not sure what to tell you.

It's interesting that the median of the pessimistic expectations is about equal to the median of the actual results. The mean clearly wasn't, as that discrepancy was literally the point of citing this statistic in the OP:

in a classic experiment, 37 psychology students were asked to estimate how long it would take them to finish their senior theses “if everything went as poorly as it possibly could,” and they still underestimated the time it would take, as a group (the average prediction was 48.6 days, and the average actual completion time was 55.5 days).

So the estimates were biased, but not median-biased (at least that's what Wikipedia appears to say the terminology is). Less biased than other estimates, though. Of course this assumes we're taking the answer to "how long would it take if everything went as poorly as it possibly could" and interpreting it as the answer to "how long will it actually take", and if students were actually asked after the fact if everything went as poorly as it possibly could, I predict they would mostly say no. And treating the text "if everything went as poorly as it possibly could" as if it wasn't even there is clearly wrong too, because they gave a different (more biased towards optimism) answer if it was omitted.
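A toy illustration of how estimates can be biased in the mean while being median-unbiased: the signed errors below are invented for the example (they are not the study's data), with one large overrun standing in for the long right tail.

```python
from statistics import mean, median

# Hypothetical signed errors in days (predicted minus actual) for seven students.
# Negative values are underestimates; one student overran by a large margin.
errors = [-40, -6, -3, 0, 3, 6, 12]

mean_error = mean(errors)      # pulled negative by the single large overrun
median_error = median(errors)  # as many overestimates as underestimates

print(mean_error, median_error)
```

Here three students underestimate and three overestimate, so the median error is zero, yet the group as a whole still underestimates on average.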

This specific question seems kind of hard to make use of from a first-person perspective. But I guess maybe as a third party one could ask for worst-possible estimates and then treat them as median-unbiased estimators of what will actually happen? Though I also don't know if the median-unbiasedness is a happy accident. (It's not just a happy accident, there's something there, but I don't know whether it would generalize to non-academic projects, projects executed by 3rd parties rather than oneself, money rather than time estimates, etc.)

I do still also think there's a question of how motivated the students were to give accurate answers, although I'm not claiming that if properly motivated they would re-invent Murphyjitsu / the pre-mortem / etc. from whole cloth; they'd probably still need to already know about some technique like that and believe it could help get more accurate answers. But even if a technique like that is an available action, it sounds like a lot of work, only worth doing if the output has a lot of value (e.g. if one suspects a substantial chance of not finishing the thesis before it's due, one might wish to figure out why so one could actively address some of the reasons).

↑ comment by Kenoubi · 2022-07-06T12:33:10.115Z · LW(p) · GW(p)

I have a sense that this is a disagreement about how to decide what words "really" mean, and I have a sense that I disagree with you about how to do that.

https://www.lesswrong.com/posts/57sq9qA3wurjres4K/ruling-out-everything-else [LW · GW]

I had already (weeks ago) approvingly cited and requested for my wife and my best friend to read that particular post, which I think puts it at 99.5th percentile or higher of LW posts in terms of my wanting its message to be understood and taken to heart, so I think I disagree with this comment about as strongly as is possible.

I simply missed the difference between "what could go wrong" and "you failed, what happened" while I was focusing on the difference between "what could go wrong" and "how long could it take if everything goes as poorly as possible".

↑ comment by Kenoubi · 2022-07-06T12:20:11.158Z · LW(p) · GW(p)

You're right - "you failed, what happened" does create a mental frame that "what could go wrong" does not. I don't think "how long could it take if everything goes as poorly as possible" creates any more useful of a frame than "you failed, what happened". But it does, formally, request a number. I don't think that number, itself, is good for anything. I'm not even convinced asking for that number is very effective for eliciting the "you failed, what happened" mindset. I definitely don't think it's more effective for that than just asking directly "you failed, what happened".

comment by anonymousaisafety · 2022-07-01T05:49:29.678Z · LW(p) · GW(p)

Is the concept of "murphyjitsu" supposed to be different than the common exercise known as a premortem in traditional project management? Or is this just the same idea, but rediscovered under a different name, exactly like how what this community calls a "double crux" is just the evaporating cloud, which was first described in the 90s?

If you've heard of a postmortem or possibly even a retrospective, then it's easy to guess what a premortem is. I cannot say the same for "murphyjitsu".

I see that premortem is even referenced in the "further resources" section, so I'm confused why you'd describe it under a different name that cannot be researched easily outside of this site, where there is tons of literature and examples of how to do premortems correctly.

Replies from: Valentine↑ comment by Valentine · 2022-07-01T06:15:46.722Z · LW(p) · GW(p)

I invented the term. I can speak to this.

For one thing, I think I hadn't heard of the premortem when I created the term "murphyjitsu" and the basic technique. I do think there's a slight difference, but it's minor enough that had I known about premortems then I might have just used that term.

Murphyjitsu showed up as a cute name for a process I had created to pragmatically counter planning fallacy thinking in my own mind. Part of the inspiration was from when Anna and Eliezer had created the "sunk cost kata", which was more like a bundle of mental tricks for sidestepping sunk cost thinking. As a martial artist, it bugged me that this was what they thought a "rationality kata" was. So in early 2012 I worked pretty hard to offer candidate examples of real rationality katas. Of the… gosh, five or so I created, only two of them proved to be cognitively powerful, and murphyjitsu was the only one that seemed to be teachable.

(The other one was a cognitive kata drawing inspiration from the Crisis of Faith "technique" [LW · GW]. I was quite bothered by the fact that the Crisis was something you only really got to practice a rare few times in your life, so how could you possibly be good at it when the time comes? So I found ways to train sub-skills and chain them together, much like a martial arts kata… and ripped several old family religious beliefs out of me along the way. I was never able to convey how to do this to anyone else though.)

If I remember right, I introduced murphyjitsu in an early CFAR class on the planning fallacy. It's worth noticing that premortem and murphyjitsu aren't calibrated debiasing techniques: you're basically adding pessimism to counter overoptimism, instead of aiming for truth. Outside view reasoning is closer to a calibrated debiasing move here. So if you're just trying to get the right number for how long something is going to take, you wouldn't want to rely on murphyjitsu or premortem. Just reference class forecast that sucker.

But I also wanted to encourage action effectiveness. One of my major concerns coming into the rationality community was the serious amount of disembodiment I was seeing. Getting time estimates right doesn't matter if it doesn't move your hands and feet. Knowing theories about diet & exercise doesn't matter if you don't enact them. Etc.

So the point of murphyjitsu was to blend helpful pessimism with a drive to do something about the likely future difficulties.

That's the main flavor difference I see between murphyjitsu and premortem. The latter can be a predictive tool to decide whether the action is worth taking. The former has more of a martial arts flavor, like seeing the future problem coming for you and then you counterstrike it. (Take that, Murphy!)

But yes, of course, one could argue that's part of the intent of the premortem anyway. Sure.

I do think it's telling that the name still sticks around ten years later. Flavor/vibe differences matter.

comment by Leon Lang (leon-lang) · 2023-01-16T19:25:33.430Z · LW(p) · GW(p)

Summary:

- We have an inner simulator: it predicts what’s happening and gets updated when there’s a surprise

- It’s part of System 1

- It also outputs urges and reflexes

- What it can do (try these out):

- Predicting what happens next (start with a visualized scene)

- Being surprised (visualize unlikely outcome, feel amount of surprise)

- What will have gone wrong? (Visualize your plan to have gone wrong. What does your simulator tell you caused that?)

- We also have Explicit/Verbal mode: arguments, calculations; it responds to “being told”...

- We need both, they each have strengths and weaknesses

- To profit from the inner simulator: ask it questions within a domain where it has lots of data

- Garbage in, garbage out

- Techniques for making use of the simulator:

- Asking for examples in a conversation: this will feed it with useful data

- Searching for Next Actions:

- Goals are not the same as plans.

- To make a goal into a plan, I always need to have a next action

- Can be combined with Trigger-Action Planning [? · GW]

- Murphyjitsu Technique:

- Murphy’s Law: Everything that can go wrong will go wrong.

- People suck at applying this: even when expecting the worst, people are over-optimistic

- The Technique:

- 0. Select a goal

- 1. Outline the plan (including next actions, concrete steps, deadlines, and benchmarks. Be able to visualize the plan)

- 2. Surprise-o-meter: a message from the future tells you the plan failed. If you’re shocked: fine. If not: Go on.

- 3. Pre-hindsight: Construct a plausible narrative for what kept you from succeeding (internal and external factors)

- 4. Bulletproofing: do a single action to remove the failure mode.

- Iterate 2–4 until done

comment by simon · 2022-07-01T05:14:35.467Z · LW(p) · GW(p)

Literally yesterday [LW · GW] I stumbled upon the obvious-in-retrospect idea that, if an agent selects/generates the plan it thinks is best, then it will tend to be optimistic about the plan it selects/generates, even if it would be unbiased about a random plan.

So, I wonder if this murphyjitsu idea could be in part related to that - the plan you generate is overoptimistic, but then you plan for how the uncertainty in the environment could lead your plan to fail, and now the same bias should overestimate the environment's likelihood of thwarting you.

(Perhaps this general agent planning bias idea is obvious to everyone except me, though, and it's just me who didn't get it until I tried to mentally model how an idea for an AI would work in practice).

(also, it feels like even if this is part of human planning bias, it's not the whole story, and a failure to be specific is a big part of what's going on as noted in the post)
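The selection effect described here can be checked with a small simulation. This is a sketch under simplifying assumptions that are mine rather than the comment's: all plans have the same true value, each estimate is the true value plus zero-mean Gaussian noise, and the agent simply picks the plan with the highest estimate. The function name is hypothetical.

```python
import random
from statistics import mean

def selection_gap(num_plans, noise=1.0, trials=5_000, seed=0):
    """Average (estimated - true) value of the plan picked as best.

    Every plan has the same true value (0), and each estimate is the true
    value plus zero-mean Gaussian noise, so any single estimate is unbiased.
    """
    true_value = 0.0
    rng = random.Random(seed)
    gaps = []
    for _ in range(trials):
        estimates = [rng.gauss(true_value, noise) for _ in range(num_plans)]
        # The agent picks the plan that looks best; record how far off that estimate is.
        gaps.append(max(estimates) - true_value)
    return mean(gaps)

print(selection_gap(num_plans=1))   # a single random plan
print(selection_gap(num_plans=10))  # the best-looking of ten candidate plans
```

With one plan the average gap stays near zero, while picking the best-looking of ten produces a clearly positive gap, even though no individual estimate is biased.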

Replies from: Valentine↑ comment by Valentine · 2022-07-01T05:49:03.216Z · LW(p) · GW(p)

Scraping the memory barrel here… as I recall, the original "planning fallacy" research (or something near it) found that people's time estimates were identical in the two scenarios:

- "How long do you expect this to take?"

- "How long do you expect this to take in the best-case scenario?"

The suggestion was much like what I think you're saying here: that when people are forming plans, they create an ideal image of the plan.

Because of this, when I'd teach this CFAR class I'd sometimes describe the planning fallacy this way, as the thing that makes "Things didn't go according to plan" never ever mean "Things went better than we expected!"

comment by trevor (TrevorWiesinger) · 2024-01-13T20:12:52.415Z · LW(p) · GW(p)

I tested it on a bunch of different things, important and unimportant. It did exactly what it said it did; substantially better predictions, immediate results, costs either little or no effort (aside from the initial cringe away from using this technique at all). It just works.

Looking at it like training/practicing a martial art is helpful as well.

comment by Davidmanheim · 2023-12-21T11:56:45.232Z · LW(p) · GW(p)

This is an important post which I think deserves inclusion in the best-of compilation because despite its usefulness and widespread agreement about that fact, it seems not to have been highlighted well to the community.

comment by PeterL (peter-loksa) · 2024-09-07T10:11:05.333Z · LW(p) · GW(p)

I would like to ask whether there is a good reason for step 1 - outline your plan. I think it would be much easier to use, if we started with just a simple plan or even with no plan, and kept improving/extending it by those iterations.

comment by trevor (TrevorWiesinger) · 2022-07-01T02:31:53.639Z · LW(p) · GW(p)

Murphyjitsu is awesome, way better than Krav Maga, Jiu Jitsu, or any other literal martial art, and I seriously recommend recommending it to others.

Don't forget that if Murphyjitsu works fantastically for 40% of attempts, and is underwhelming for 60% of attempts, then there would be a 0.6^2 = 36% chance that it will be underwhelming on both of your first two tries. Trying Murphyjitsu means trying it seriously at least 3 times.
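The arithmetic above extends to any number of tries. A minimal sketch, assuming attempts are independent and using the comment's hypothetical 60% figure (the function name is made up):

```python
def chance_all_underwhelming(p_underwhelming, tries):
    """Probability that every one of `tries` independent attempts is underwhelming."""
    return p_underwhelming ** tries

for tries in (1, 2, 3):
    print(tries, round(chance_all_underwhelming(0.6, tries), 3))
```

Under those assumptions, a third serious attempt cuts the chance of an all-underwhelming streak from 36% to about 22%.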