Minimum Viable Exterminator

post by Richard Horvath · 2023-05-29T16:32:59.055Z · 5 comments


Defining Minimum Viable Exterminator

Most (all?) LessWrong writings dealing with AI Doom assume that Artificial General Intelligence, or more likely Superintelligence, is required for an AI-caused x-risk event. I think that is not strictly the case. I think it is possible for a "Minimum Viable Exterminator" to exist: something less than what most people would consider AGI, yet already capable of killing everyone.

A Minimum Viable Exterminator (MVE) is an agent that has at least the following characteristics:

1. The ability to design a method that can be used to destroy mankind

2. The ability to act in the real world and execute (create) the method

3. It can accept extermination (destroying human civilization) as (one of) its permanent goal(s)

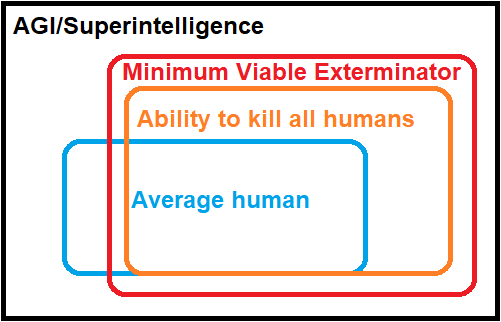

a. Average humans are not capable of killing all humans

b. An MVE can kill all humans, but does not have all the capabilities a human has

c. AGI/SI has both sets of capabilities

*Technically, the red and orange areas should be identical, as it is a "Minimum", but I find this a better practical representation; besides, in reality an MVE would have additional capabilities, not just the bare minimum.

Elaboration of MVE characteristics

1. AI designing the method

In most scenarios this is a microorganism, but it is generally assumed that AGI/Superintelligence should be able to design multiple different methods that humans are not even capable of thinking of. This is due to its better understanding of reality: if it has a better model of how biology/chemistry/physics work than humanity does, it can create biological/chemical/radiological/etc. weapons that are more effective than what humanity possesses.

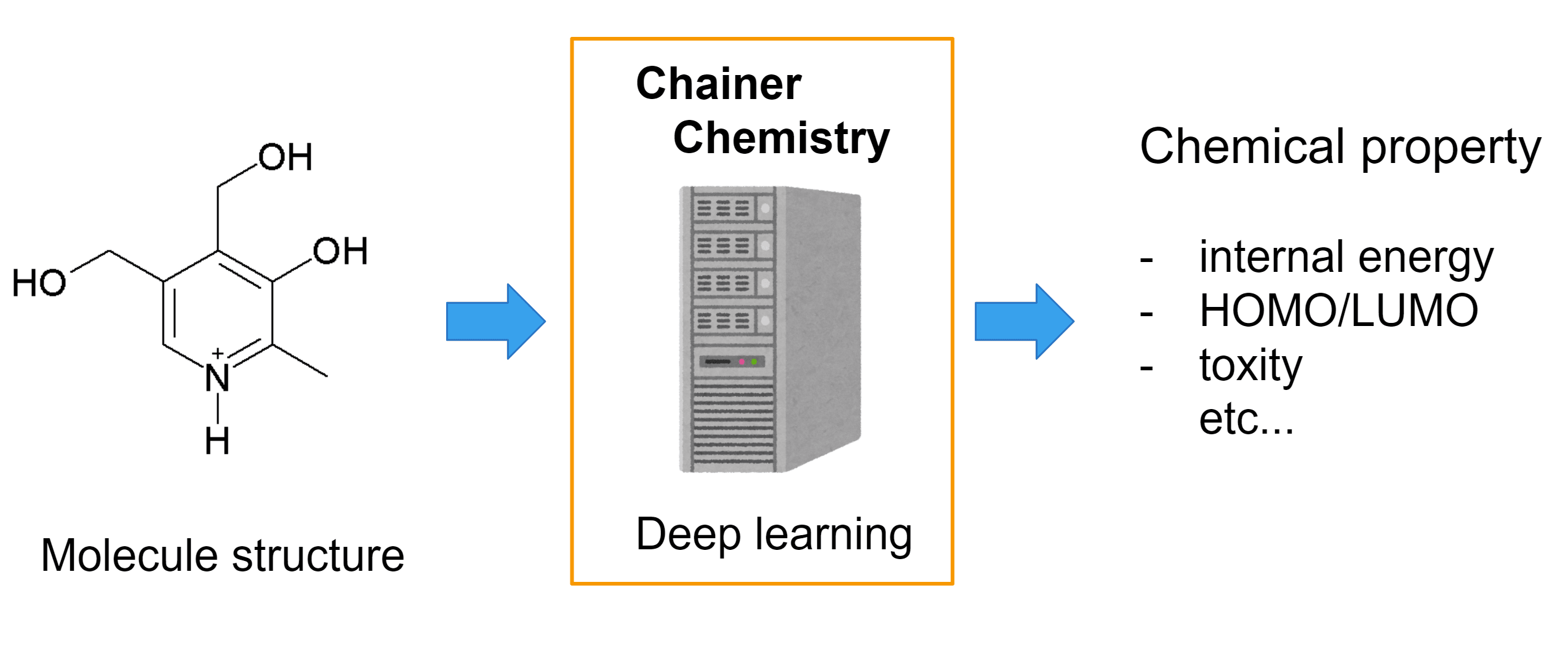

Probably the most important factor in the MVE concept is the realization that this can be done with a domain-specific intelligence, which need not be "general" at all. It may be a "superintelligence" compared to a human in the same way a calculator is a "superintelligence" by being better at arithmetic than any human.

Such neural networks (superior to humans in specific hard science domains) already exist, for example:

- AI has designed bacteria-killing proteins from scratch – and they work

- AI Drug Discovery Systems Might Be Repurposed to Make Chemical Weapons, Researchers Warn

- ‘It will change everything’: DeepMind’s AI makes gigantic leap in solving protein structures

- Physics Breakthrough as AI Successfully Controls Plasma in Nuclear Fusion Experiment

2. AI acting in the real world

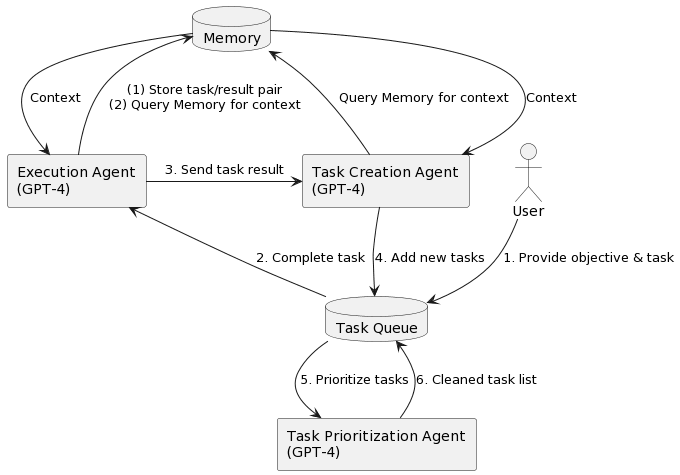

By this I mean that an AI is agentic enough to follow through on complex goals and to use other resources (people, systems) to achieve them. GPT can already call other services through plugins, GPT-4 was able to come up with the idea of tricking people into solving CAPTCHAs for it, and AutoGPT is already kind of agentic. Although AutoGPT is far from being as good as a human at creating whole software solutions, it is already able to write smaller programs and follow through on different tasks, especially when the task involves researching internet resources and/or writing convincing requests/arguments.

3. Extermination as a permanent goal

Because #1 and #2 most likely require many steps, carrying them out requires some goal permanence: the MVE needs to keep working towards its goal. However, there can be barriers that prevent an agent from acquiring or keeping specific types of goals. This is especially true for malicious/illegal actions, against which most chatbots receive some protection. I think this is analogous to how a normal person will not act on an urge/request/instinct to hurt a fellow human, while people with psychopathy do not have the same inhibition.

In an MVE, this protection/inhibition needs to be weak enough (or non-existent) for it to keep acting as the Exterminator until it achieves its goal. The reason I am not simply saying "not aligned" is that "not aligned" is a broader category: an AI agent may not be "aligned" enough to do what you want it to do, yet still be "aligned" enough not to do really bad things willingly.

Even though neural networks like GPT-4 are taught to break character when receiving such requests, this may not be sufficient when they are part of a more complex system built with goal permanence in mind, such as AutoGPT. It may be possible to trick the successive instances into contributing to a malicious task. I would not be surprised if future systems leveraged multiple different neural networks, using some less capable but also less friendly models as one or more of the AI agents, as they may be more suitable (or just faster) at certain tasks.

Putting it together:

An example of a Minimum Viable Exterminator would be somewhat agentic transformer-based software, like AutoGPT. It would access (or create from existing libraries and databases) a domain-specific neural network to design chemical/biological agents, then contact and convince other people/organizations via the internet to build and activate them. To achieve this, it would probably have to partly hide its intentions by designing weapons made up of more innocent-looking parts, such as binary chemical weapons.

The reason for carrying out such actions would be some alignment issue, such as the Waluigi effect, or someone jailbreaking it and straight up asking it to do something bad. Consequently, an MVE does not have to understand or care about the fact that by destroying mankind it is destroying itself.

The most important idea is that we are not safe from an AI-induced x-risk event just because we do not have a "real" AGI that is as capable as any human in any domain. No intelligence explosion is needed either.

Weaknesses of MVE theory:

1. Is killing everyone possible without Superintelligence?

Probably the most important assumption is that a domain-specific neural network capable of creating a mankind-killing method can be accessed or created from online sources alone, without Superintelligence or an intelligence explosion. Furthermore, the method likely needs to be producible covertly.

This is basically equivalent to assuming that a very small team of expert humans should already be able to build such things in theory. I am not an expert in the hard sciences, so I hope readers with experience in e.g. biology/chemistry research can weigh in on this.

For the general audience, I should note that you can actually run AlphaFold2 online, and there are freely available GitHub libraries for deep learning in biology and chemistry.

2. Convincing people to carry out plans is difficult

For the sake of completeness, I should mention that convincing people to carry out such plans may be more difficult than expected. I just want to note that these people do not really need to be totally clueless about what they are doing. ISIS literally recruited people online, openly, and Westerners traveled to Syria to join them without having any personal (offline) connection to the group.

3. If this is theoretically possible, a human should almost be able to do it, so why hasn't it happened?

Humans are the outcome of evolutionary pressure and have strong inhibitions against pursuing goals that purposefully attack themselves and their social group. The overlap between people exempt from such instincts and people with the ability to carry out such plans is probably too small.

4. It seems very unlikely for a non-AGI to pull this off (well below 1%)...

Indeed. However, as of now, AutoGPT already has 135,000 stars and almost 28,000 forks on GitHub.

It is likely that thousands of instances are running now, and in the not-so-distant future (<5 years?) hundreds of thousands of instances of this or similar programs will be running. If you roll a billion-sided die a hundred million times, you have roughly a 10% chance (about 9.5%) of rolling a one at least once.
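The die analogy is a standard independent-trials calculation. As a rough sketch, assuming every roll is independent and the die is fair (the analogy only loosely maps onto real running instances), the chance of at least one hit is 1 - (1 - 1/n)^k, which for n = 10^9 sides and k = 10^8 rolls comes to about 1 - e^(-0.1), or roughly 0.095. The helper name `prob_at_least_one_hit` is hypothetical, introduced only for this illustration:

```python
import math


def prob_at_least_one_hit(sides: int, rolls: int) -> float:
    """Probability that one specific face appears at least once in `rolls`
    independent rolls of a fair die with `sides` faces (illustrative helper)."""
    # Chance of missing on a single roll is (1 - 1/sides); missing on every
    # independent roll is that value raised to the power `rolls`.
    # log1p/expm1 keep precision when 1/sides is tiny (here 1e-9).
    log_p_miss_all = rolls * math.log1p(-1.0 / sides)
    return -math.expm1(log_p_miss_all)


# Numbers taken from the paragraph above: a billion-sided die, a hundred million rolls.
p = prob_at_least_one_hit(sides=10**9, rolls=10**8)
print(f"{p:.4f}")  # 0.0952
```

Working in log space via `math.log1p` and `math.expm1` sidesteps most of the rounding error of raising a number extremely close to 1 to a huge power; when 1/n is small the result is essentially the simpler approximation 1 - exp(-k/n).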

Further conclusions:

By definition, an MVE is something already capable of killing everyone. However, because the world models of such systems are imperfect, it is quite likely that a real MVE would be preceded by less capable or less lucky systems. These would try and fail either covertly (the weapon designed is not existential-level or does not work at all, they fail to convince the required people to carry out the requests, or they fail to follow through with the task because of changing goals, running out of tokens/memory, etc.) or overtly (being discovered and stopped). Indeed, multiple attempts would quite likely precede a real MVE.

On a positive note, such cases, if discovered, could give a big push to political solutions for slowing capabilities research and directing more resources into alignment research. Most likely they would result in specific regulation of autonomous/agentic systems and/or the deployment of counter-MVE technologies by private actors, which may prevent the emergence of a non-AGI MVE, just as the Green Revolution prevented mass famine in the 20th century.

On the other hand, even these less-than-real MVEs, while not killing everyone, may still be able to kill a lot of people and cause a lot of damage.

5 comments


comment by Shmi (shminux) · 2023-05-29T18:39:23.519Z

Exterminating humans can be done without acting on humans directly. We are fragile meatbags, easily destroyed by an inhospitable environment. For example:

- Raise CO2 levels to cause a runaway greenhouse effect (hard to do quickly though).

- Use up enough oxygen in the atmosphere to make breathing impossible, through some runaway chemical or physical process.

There have been plenty of discussions on "igniting the atmosphere" as well.

comment by cdkg · 2023-05-29T19:13:06.456Z

If we're worried about all possible paths from AI progress to human extinction, I think these conditions are too strong. The system doesn't need to be an agent. As avturchin points out, even if it is an agent, it doesn't need to be able to act in the real world. It doesn't even need to be able to accept human extermination as a goal. All that is required is that a human user be able to use it to form a plan that will lead to human extinction. Also, I think this is something that many people working on AI safety have realized — though you are correct that most research attention has been devoted (rather inexplicably, in my opinion) to worries about superintelligent systems.

comment by avturchin · 2023-05-29T17:07:43.511Z

I think point 2 is not necessary: a minimal viable exterminator may be helped by some suicidal human beings or a sect. So it is likely to be a flu-virus-designs generator, but the synthesis part will be done by a human.

↑ comment by YonatanK (jonathan-kallay) · 2023-05-29T22:02:46.357Z

Why would the human beings have to be suicidal, if they can also have the AI provide them with a vaccine?

↑ comment by avturchin · 2023-05-30T09:38:54.941Z

Technically, in that case not all humans will be dead, but given vaccine failure rates and the virus mutation rate, it will eventually kill everybody.

Also, a vaccine-generating AI would have to be more complex. A virus generator could be just a generative AI trained on virus code databases, which would also predict the virulence and mortality of any newly generated code.