t14n's Shortform

post by t14n (tommy-nguyen-1) · 2024-09-03T23:14:58.434Z · LW · GW · 9 comments


comment by t14n (tommy-nguyen-1) · 2024-09-03T23:14:58.533Z · LW(p) · GW(p)

I'm giving up on working on AI safety in any capacity.

I was convinced ~2018 that working on AI safety was a Good™ and Important™ thing, and have spent a large portion of my studies and career trying to find a role to contribute to AI safety. But after several years of trying to work on both research and engineering problems, it's clear no institutions or organizations need my help.

First: yes, it's clearly a skill issue. If I was a more brilliant engineer or researcher then I'd have found a way to contribute to the field by now.

But also, the bar to work on AI safety seems higher than the bar to work on AI capabilities. There is a lack of funding for hiring more people to work on AI safety, and it seems to have created a dynamic where you have to be scarily brilliant to even get a shot at folding AI safety into your career.

In other fields, there are a variety of professionals who can contribute incremental progress and get paid as they build their knowledge and skills: educators across varying levels, lab technicians who support experiments, and so on. There are far fewer opportunities like that w.r.t. AI safety. Many "mid-skilled" engineers and researchers just don't have a place in the field. I've met and am aware of many smart people attempting to find roles to contribute to AI safety in some capacity, but there's just not enough capacity for them.

I don't expect many folks here to be sympathetic to this sentiment. My guess on the consensus is that in fact, we should only have brilliant people working on AI safety because it's a very hard and important problem and we only get a few shots (maybe only one shot) to get it right!

↑ comment by Amalthea (nikolas-kuhn) · 2024-09-04T00:11:05.634Z · LW(p) · GW(p)

I think the main problem is that society-at-large doesn't significantly value AI safety research, and hence that the funding is severely constrained. I'd be surprised if the consideration you describe in the last paragraph plays a significant role.

↑ comment by Noosphere89 (sharmake-farah) · 2024-09-04T13:10:14.794Z · LW(p) · GW(p)

I think it's more of a side effect of the FTX disaster, where people are no longer willing to donate to EA, which means that AI safety got particularly hard hit as a result.

↑ comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-09-04T13:44:17.121Z · LW(p) · GW(p)

I suspect the (potentially much) bigger factor than 'people are no longer willing to donate to EA' is OpenPhil's reluctance to spend more and faster on AI risk mitigation. I don't know how much this has to do with FTX; it might have more to do with differences of opinion on timelines, conservativeness, incompetence (especially when it comes to scaling up grantmaking capacity) or (other) less transparent internal factors.

(Tbc, I think OpenPhil is still doing much, much better than the vast majority of actors, but I would bet that by the end of the decade, their not having moved faster on AI risk mitigation will look like a huge missed opportunity.)

↑ comment by Thomas Kwa (thomas-kwa) · 2024-09-04T05:45:21.309Z · LW(p) · GW(p)

> First: yes, it's clearly a skill issue. If I was a more brilliant engineer or researcher then I'd have found a way to contribute to the field by now.

I am not sure about this. Just because someone will pay you to work on AI safety doesn't mean you won't be stuck down some dead end. Stuart Russell is super brilliant, but I don't think AI safety through probabilistic programming will work.

↑ comment by Chris_Leong · 2024-09-04T02:42:08.105Z · LW(p) · GW(p)

Thank you for your service!

For what it's worth, I feel that the bar for being a valuable member of the AI Safety Community [LW · GW] is much more attainable than the bar for working in AI Safety full-time.

↑ comment by Seth Herd · 2024-09-04T15:53:13.182Z · LW(p) · GW(p)

There's just not enough funding. In a better world, we'd have more people with more viewpoints and approaches working on AI safety. Brilliance is overrated; creativity, understanding the problem space carefully, and effort also play huge roles in success in most fields.

comment by t14n (tommy-nguyen-1) · 2025-01-19T16:25:23.938Z · LW(p) · GW(p)

I have Aranet4 CO2 monitors inside my apartment, one near my desk and one in the living room, both at eye level and visible to me at all times when I'm in those spaces. Anecdotally, I find myself thinking "slower" at 900+ ppm, and can even notice slightly worse thinking at levels as low as 750 ppm.

I find indoor levels below 600 ppm to be ideal, but that's not always possible, depending on whether you have guests, the day's air quality conditions, etc.

Unfortunately, my space has only one window, so ventilation can be difficult. However, a single well-placed fan facing outward and blowing toward the window improves indoor circulation. With the HVAC system fan also running, I can decrease indoor CO2 by 50-100 ppm in just 10-15 minutes.
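For anyone who wants to turn a drop like this into a rough ventilation rate, here is a minimal sketch. It assumes a well-mixed room, a constant outdoor level of about 420 ppm, and no CO2 sources during the decay; none of those assumptions come from the measurements above, and the 900 ppm starting point in the usage lines is only illustrative. Since someone is usually breathing in the room, the real air-change rate is likely somewhat higher than this estimate.

```python
import math

OUTDOOR_PPM = 420.0  # assumed outdoor CO2 level, not a measured value


def air_changes_per_hour(c_start, c_end, minutes, c_outdoor=OUTDOOR_PPM):
    """Estimate effective air changes per hour from an observed CO2 drop.

    Uses the single-zone decay model
        C(t) = C_out + (C_start - C_out) * exp(-ach * t)
    which ignores CO2 produced by occupants while venting.
    """
    if not (c_outdoor < c_end < c_start):
        raise ValueError("expected c_outdoor < c_end < c_start")
    hours = minutes / 60.0
    return math.log((c_start - c_outdoor) / (c_end - c_outdoor)) / hours


def minutes_to_reach(c_start, c_target, ach, c_outdoor=OUTDOOR_PPM):
    """Minutes of venting needed to fall from c_start to c_target at a given rate."""
    if not (c_outdoor < c_target < c_start):
        raise ValueError("expected c_outdoor < c_target < c_start")
    return 60.0 * math.log((c_start - c_outdoor) / (c_target - c_outdoor)) / ach


# Illustrative numbers: a 75 ppm drop from 900 ppm in 12.5 minutes.
ach = air_changes_per_hour(900, 825, 12.5)
print(f"effective air changes per hour: {ach:.2f}")
print(f"minutes to get from 900 to 600 ppm: {minutes_to_reach(900, 600, ach):.0f}")
```

With these illustrative inputs the estimate comes out to roughly 0.8 air changes per hour, which suggests that getting from 900 ppm down to the 600 ppm range takes on the order of an hour rather than minutes.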

If you don't already periodically vent your space (or if you live in a nice enough climate, keep windows open all day), then I highly recommend you start doing so.

comment by t14n (tommy-nguyen-1) · 2025-01-12T20:15:45.002Z · LW(p) · GW(p)

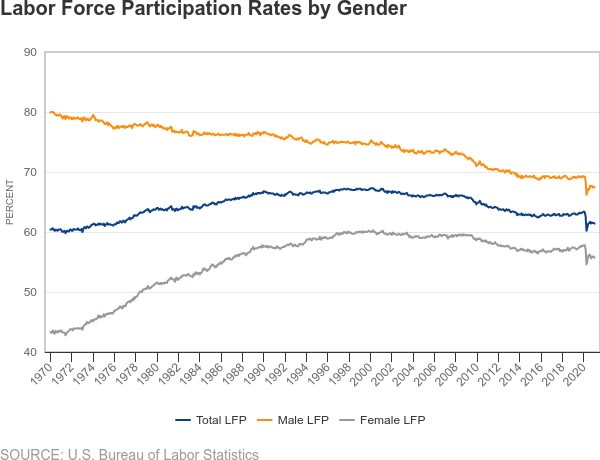

Want to know what AI will do to the labor market? Just look at male labor force participation (LFP) research.

US Male LFP has dropped since the 70s, from ~80% to ~67.5%.

There are a few dynamics driving this, and all of them interact with each other. But the primary ones seem to be:

- increased disability [1]

- younger men (e.g. <35 years old) pursuing education instead of working [2]

- decline in manufacturing and increase in services as fraction of the economy

- increased female labor force participation [1]

Our economy shifted from labor that required physical toughness (manufacturing) to labor that required more intelligence (services). At the same time, our culture empowered women to attain more education and participate in labor markets. As a result, the market for jobs that require higher intelligence became more competitive, and many men could not keep up and became "inactive." Many of these men fell into depression or became physically ill, thus unable to work.

Increased AI participation is going to do for humanity what increased female LFP has done for men in the last 50 years: it is going to make cognitive labor an increasingly competitive market. The humans that can keep up will continue to participate and do well for themselves.

What about the humans who can't keep up? Well, just look at what men are doing now. Some men are pursuing higher education or training, attempting to re-enter the labor market by becoming more competitive or switching industries.

But an increased percentage of men are dropping out completely. Unemployed men are spending more hours playing sports and video games [1], and don't see the value in participating in the economy for a variety of reasons [3] [4].

Unless culture changes and the nature of jobs evolves dramatically in the next couple of years, I expect these trends to continue.

Relevant links:

[1] Male Labor Force Participation: Patterns and Trends | Richmond Fed

[2] Men’s Falling Labor Force Participation across Generations - San Francisco Fed

[4] What’s behind Declining Male Labor Force Participation | Mercatus Center