Posts

Comments

re: 1b (development likely impossible to kept secret given scale required)

I'm remind of Dylan Patel's comments (semianalysis) on a recent episode of the Dwarkesh Podcast which goes something like:

if you're Xi Jinping and you're scaling pilled, you can just centralize all the compute and build all the substations for it. You can just hide it inside one of the factories you already have that's drawing power for steel production and re-purpose it as a data center.

Given the success we've seen in training SOTA models with constrained GPU resources (Deepseek), I don't think it's far fetched to think you can hide bleeding edge development. It turns out all you need is a few hundred of the smartest people in your country and a few thousand GPUs.

Hrm...sounds like the size of the Manhattan Project.

I have Aranet4 CO2 monitors inside my apartment, one near my desk and one in the living room both at eye level and visible to me at all times when I'm in those spaces. Anecdotally, I find myself thinking "slower" @ 900+ ppm, and can even notice slightly worse thinking at levels as low as 750ppm.

I find indoor levels @ <600ppm to be ideal, but not always possible depending on if you have guests, air quality conditions of the day, etc.

I unfortunately only live in a space with 1 window, so ventilation can be difficult. However a single well placed fan facing outwards blowing towards the window improves indoor circulation. With the HVAC system fan also running, I can decrease indoor ppm by 50-100 in just 10-15 minutes.

If you don't already periodically vent your space (or if you live in a nice enough climate, keep windows open all day), then I highly recommend you start doing so.

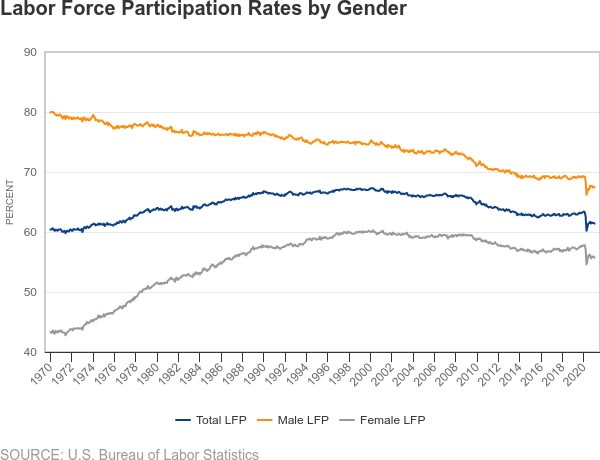

Want to know what AI will do to the labor market? Just look at male labor force participation (LFP) research.

US Male LFP has dropped since the 70s, from ~80% to ~67.5%.

There are a few dynamics driving this, and all of them interact with each other. But the primary ones seem to be:

- increased disability [1]

- younger men (e.g. <35 years old) pursuing education instead of working [2]

- decline in manufacturing and increase in services as fraction of the economy

- increased female labor force participation [1]

Our economy shifted from labor that required physical toughness (manufacturing) to labor that required more intelligence (services). At the same time, our culture empowered women to attain more education and participate in labor markets. As a result, the market for jobs that require higher intelligence became more competitive, and many men could not keep up and became "inactive." Many of these men fell into depression or became physically ill, thus unable to work.

Increased AI participation is going to do for humanity what increased women LFP has done for men in the last 50 years -- it is going to make cognitive labor an increasingly competitive market. The humans that can keep up will continue to participate and do well for themselves.

What about the humans who can't keep up? Well, just look at what men are doing now. Some men are pursuing higher education or training, attempting to re-enter the labor market by becoming more competitive or switching industries.

But an increased percentage of men are dropping out completely. Unemployed men are spending more hours playing sports and video games [1], and don't see the value in participating in the economy for a variety of reasons [3] [4].

Unless culture changes and the nature of jobs evolve dramatically in the next couple of years, I suspect these trends to continue.

Relevant links:

[1] Male Labor Force Participation: Patterns and Trends | Richmond Fed

[2] Men’s Falling Labor Force Participation across Generations - San Francisco Fed

[4] What’s behind Declining Male Labor Force Participation | Mercatus Center

re: public track records

I have a fairly non-assertive, non-confrontational personality, which causes me to defer to "safer" strategies (e.g. nod and smile, don't think too hard about what's being said, or at least don't vocalize counterpoints). Perhaps others here might relate. These personality traits are reflected in "lazy thinking" online -- e.g. not posting even when I feel like I'm right about X, not sharing an article or sending a message for fear of looking awkward/revealing a preference about myself that others might not agree with.

I notice that people who are very assertive and/or competitive, who see online discussions as "worth winning", will be much more publicly vocal about their arguments and thought process. Meek people (like me), may not see the worth in undertaking the risk of publicly revealing arguments or preferences. Embarrassment, shame, potentially being shunned for your revealed preferences, and so on -- there are many social risks to being public with your arguments and thought process. And if you don't value the "win" in the public sphere, why take on that risk?

Perhaps something that holds people back from publishing more is that many people tie their offline identity to their online identities. Or perhaps it's just a cultural inclination -- maybe most people are like me and don't value the status/social reward of being correct and sharing about it.

It's enough to be privately rigorous and correct.

Skill ceilings across humanity is quite high. I think of super genius chess players, Terry Tao, etc.

A particular individual's skill ceiling is relatively low (compared to these maximally gifted individuals). Sure, everyone can be better at listening, but there's a high non-zero chance you have some sort of condition or life experience that makes it more difficult to develop it (hearing disability, physical/mental illness, trauma, an environment of people who are actually not great at communicating themselves, etc).

I'm reminded of what Samo Burja calls "completeness hypothesis":

> It is the idea that having all of the important contributing pieces makes a given effect much, much larger than having most of the pieces. Having 100% of the pieces of a car produces a very different effect than having 90% of the pieces. The four important pieces for producing mastery in a domain are good feedback mechanisms, extreme motivation, the right equipment, and sufficient time. According to the Completeness Hypothesis, people that stably have all four of these pieces will have orders-of-magnitude greater skill than people that have only two or three of the components.

This is not a fatalistic recommendation to NOT invest in skill development. Quite the opposite.

I recommend Dan Luu's 95th %-tile is not that good.

Most people do not approach anywhere near their individual skill ceiling because they lack the four things that Burja lists. As Luu points out, most people don't care that much to develop their skills. People do not care to find good feedback loops, cultivate the motivation, or carve out sufficent time to develop skills. Certain skills may be limited by resources (equipment), but there are hacks that can lead to skill development at a sub-optimal rate (e.g. calisthenics for muscle mass development vs weighted training. Maybe you can't afford a gym membership but push-ups are free).

As @sunwillrise mentioned, there are diminishing returns for developing a skill. The gap from 0th % -> 80th % is actually quite narrow. 80th % -> 98% requires work but is doable for most people, and you probably start to experience diminishing returns around this range.

98%+ results are reserved for those who can have long-term stable environments to cultivate the skill, or the extremely talented.

I'm giving up on working on AI safety in any capacity.

I was convinced ~2018 that working on AI safety was an Good™ and Important™ thing, and have spent a large portion of my studies and career trying to find a role to contribute to AI safety. But after several years of trying to work on both research and engineering problems, it's clear no institutions or organizations need my help.

First: yes, it's clearly a skill issue. If I was a more brilliant engineer or researcher then I'd have found a way to contribute to the field by now.

But also, it seems like the bar to work on AI safety seems higher than AI capabilities. There is a lack of funding for hiring more people to work on AI Safety, and it seems to have created a dynamic where you have to be scarily brilliant to even get a shot at folding AI safety into your career.

In other fields, there are a variety of professionals who can contribute incremental progress and get paid as they progress their knowledge and skills. Like educators across varying levels, technicians in lab who support experiments, and so on. There are far fewer opportunities like that w.r.t AI Safety. Many "mid-skilled" engineers and researchers just don't have a place in the field. I've met and am aware of many smart people attempting to find roles to contribute to AI safety in some capacity, but there's just not enough capacity for them.

I don't expect many folks here to be sympathetic to this sentiment. My guess on the consensus is that in fact, we should only have brilliant people working on AI safety because it's a very hard and important problem and we only get a few shots (maybe only one shot) to get it right!

Morris Chang (founder of TSMC and titan in the fabrication process) had a lecture at MIT giving an overview of the history in chip design and manufacturing. [1] There's a diagram ~34:00 that outlines the chip design process, and where foundries like TSMC slot into the process.

I also recommend skimming Chip War by Chris Miller. Has a very US-centric perspective, but gives a good overview of the major companies that developed chips from the 1960s-1990s, and the key companies that are relevant/bottlenecks to the manufacturing process circa-2022.

1: TSMC founder Morris Chang on the evolution of the semiconductor industry

There's "Nothing, Forever" [1] [2], which had a few minutes of fame when it initially launched but declined in popularity after some controversy (a joke about transgenderism generated by GPT-3). It was stopped for a bit, then re-launched after some tweaking with the dialogue generation (perhaps an updated prompt? GPT 3.5? There's no devlog so I guess we'll never know). There are clips of "season 1" on YouTube prior to the updated dialogue generation.

There's also ai_sponge, which was taken down from Twitch and YouTube due to it's incredibly racy jokes (e.g. sometimes racist, sometimes homophobic, etc) and copyright concerns. It was a parody of Spongebob where 3D models of Spongebob characters (think the PS2 Spongebob games) would go around Bikini Bottom and interact with each other. Most of the content was mundane, like Spongebob asking Mr. Krabs for a raise, or Spongebob and Patrick asking about each others' days. But I suppose they were using an open, non-RLHF'ed model that would generate less friendly scripts.

1. Nothing, Forever - Wikipedia

2. WatchMeForever - Twitch