What counts as defection?

post by TurnTrout · 2020-07-12T22:03:39.261Z · 21 comments

Thanks to Michael Dennis for proposing the formal definition; to Andrew Critch for pointing me in this direction; to Abram Demski for proposing non-negative weighting; and to Alex Appel, Scott Emmons, Evan Hubinger, philh, Rohin Shah, and Carroll Wainwright for their feedback and ideas.

There's a good chance I'd like to publish this at some point as part of a larger work. However, I wanted to make the work available now, in case that doesn't happen soon.

They can't prove the conspiracy... But they could, if Steve runs his mouth.

The police chief stares at you.

You stare at the table. You'd agreed (sworn!) to stay quiet. You'd even studied game theory together. But, you hadn't understood what an extra year of jail meant.

The police chief stares at you.

Let Steve be the gullible idealist. You have a family waiting for you.

Sunlight stretches across the valley, dappling the grass and warming your bow. Your hand anxiously runs along the bowstring. A distant figure darts between trees, and your stomach rumbles. The day is near spent.

The stags run strong and free in this land. Carla should meet you there. Shouldn't she? Who wants to live like a beggar, subsisting on scraps of lean rabbit meat?

In your mind's eye, you reach the stags, alone. You find one, and your arrow pierces its barrow. The beast shoots away; the rest of the herd follows. You slump against the tree, exhausted, and never open your eyes again.

You can't risk it.

People talk about 'defection' in social dilemma games, from the prisoner's dilemma to stag hunt to chicken. In the tragedy of the commons, we talk about defection. The concept has become a regular part of LessWrong discourse.

Informal definition. A player defects when they increase their personal payoff at the expense of the group.

This informal definition is no secret, being echoed from the ancient Formal Models of Dilemmas in Social Decision-Making to the recent Classifying games like the Prisoner's Dilemma:

you can model the "defect" action as "take some value for yourself, but destroy value in the process".

Given that the prisoner's dilemma is the bread and butter of game theory and of many parts of economics, evolutionary biology, and psychology, you might think that someone had already formalized this. However, to my knowledge, no one has.

Formalism

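Consider a finite $n$-player normal-form game, with player $i$ having pure action set $A_i$ and payoff function $P_i : A_1 \times \cdots \times A_n \to \mathbb{R}$. Each player $i$ chooses a strategy $s_i \in \Delta(A_i)$ (a distribution over $A_i$). Together, the strategies form a strategy profile $s := (s_1, \ldots, s_n)$. $s_{-i} := (s_1, \ldots, s_{i-1}, s_{i+1}, \ldots, s_n)$ is the strategy profile, excluding player $i$'s strategy. For a strategy profile, $P_i$ denotes player $i$'s expected payoff. A payoff profile contains the payoffs for all players under a given strategy profile.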

A utility weighting $(\alpha_j)_{j=1,\ldots,n}$ is a set of $n$ non-negative weights (as in Harsanyi's utilitarian theorem). You can consider the weights as quantifying each player's contribution; they might represent a perceived social agreement or be the explicit result of a bargaining process.

When all $\alpha_j$ are equal, we'll call that an equal weighting. However, if there are "utility monsters", we can downweight them accordingly.

We're implicitly assuming that payoffs are comparable across players. We want to investigate: given a utility weighting, which actions are defections?

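Definition. Player $i$'s action $a \in A_i$ is a defection against strategy profile $s$ and weighting $(\alpha_j)_{j=1,\ldots,n}$ if

1. Personal gain: $P_i(a, s_{-i}) > P_i(s_i, s_{-i})$
2. Social loss: $\sum_j \alpha_j P_j(a, s_{-i}) < \sum_j \alpha_j P_j(s_i, s_{-i})$

If such an action exists for some player $i$, strategy profile $s$, and weighting, then we say that there is an opportunity for defection in the game.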
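The definition is easy to check mechanically. Below is a minimal Python sketch for the two-player case only, assuming each player's payoffs are given as a NumPy matrix (rows indexed by player 1's actions, columns by player 2's) and that the deviating action is pure. `expected_payoffs` and `is_defection` are hypothetical helper names, not anything from the post; players are indexed 0 and 1.

```python
import numpy as np


def expected_payoffs(payoffs, s1, s2):
    """Expected payoff of each player when player 1 plays s1 and player 2 plays s2.

    payoffs[k][x, y] is player k's payoff when player 1 plays x and player 2 plays y.
    """
    return np.array([s1 @ payoffs[k] @ s2 for k in range(2)], dtype=float)


def is_defection(payoffs, profile, player, action, weights):
    """Check both conditions of the definition for one pure deviation."""
    s1, s2 = (np.asarray(s, dtype=float) for s in profile)
    # Represent the pure deviation as a point-mass mixed strategy.
    pure = np.eye(payoffs[0].shape[player])[action]
    after_profile = (pure, s2) if player == 0 else (s1, pure)
    before = expected_payoffs(payoffs, s1, s2)
    after = expected_payoffs(payoffs, *after_profile)
    w = np.asarray(weights, dtype=float)
    personal_gain = after[player] > before[player]
    social_loss = w @ after < w @ before
    return bool(personal_gain and social_loss)


# Example: a Prisoner's Dilemma with T=5, R=3, P=1, S=0 (action 0 = C, action 1 = D).
A = np.array([[3, 0], [5, 1]])  # player 1's payoffs
B = A.T                          # player 2's payoffs (symmetric game)
C, D = [1.0, 0.0], [0.0, 1.0]
print(is_defection([A, B], (C, C), player=0, action=1, weights=[1, 1]))  # True
print(is_defection([A, B], (D, D), player=0, action=0, weights=[1, 1]))  # False
```

Passing `weights=[1, 0]` instead makes the same deviation from $(C, C)$ a non-defection, since only the deviator's own payoff then counts toward the social total.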

Remark. For an equal weighting, condition (2) is equivalent to demanding that the action not be a Kaldor-Hicks improvement.

Our definition seems to make reasonable intuitive sense. In the tragedy of the commons, each player rationally increases their utility, while imposing negative externalities on the other players and decreasing total utility. A spy might leak classified information, benefiting themselves and Russia but defecting against America.

Definition. Cooperation takes place when a strategy profile is maintained despite the opportunity for defection.

Theorem 1. In constant-sum games, there is no opportunity for defection against equal weightings.

Theorem 2. In common-payoff games (where all players share the same payoff function), there is no opportunity for defection.

Edit: In private communication, Joel Leibo points out that these two theorems formalize the intuition behind the proverb "all's fair in love and war": you can't defect in fully competitive or fully cooperative situations.
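Both theorems follow almost directly from the definition. A sketch of the argument in the notation above (for mixed profiles the payoffs are expectations, so a constant sum $c$ over pure profiles still holds):

```latex
% Theorem 1: constant-sum game with sum c, equal weights \alpha_j = \alpha
\sum_j \alpha\, P_j(a, s_{-i}) \;=\; \alpha c \;=\; \sum_j \alpha\, P_j(s_i, s_{-i})
\quad\Rightarrow\quad \text{the social-loss inequality can never be strict.}

% Theorem 2: common payoff P_j = P for all j, any weights \alpha_j \ge 0
P(a, s_{-i}) > P(s_i, s_{-i})
\;\Rightarrow\; \sum_j \alpha_j P(a, s_{-i}) \;\ge\; \sum_j \alpha_j P(s_i, s_{-i})
\quad\Rightarrow\quad \text{personal gain rules out social loss.}
```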

Proposition 3. There is no opportunity for defection against Nash equilibria.

An action $a \in A_i$ is a Pareto improvement over strategy profile $s$ if, for all players $j$, $P_j(a, s_{-i}) \geq P_j(s_i, s_{-i})$.

Proposition 4. Pareto improvements are never defections.
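Propositions 3 and 4 are similarly immediate: each rules out one of the two conditions. A sketch:

```latex
% Proposition 3: s is a Nash equilibrium, so s_i is a best response to s_{-i}
P_i(a, s_{-i}) \le P_i(s_i, s_{-i}) \quad \forall a \in A_i
\quad\Rightarrow\quad \text{personal gain fails.}

% Proposition 4: a is a Pareto improvement and every \alpha_j \ge 0
P_j(a, s_{-i}) \ge P_j(s_i, s_{-i}) \quad \forall j
\;\Rightarrow\; \sum_j \alpha_j P_j(a, s_{-i}) \ge \sum_j \alpha_j P_j(s_i, s_{-i})
\quad\Rightarrow\quad \text{social loss fails.}
```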

Game Theorems

We can prove that formal defection exists in the trifecta of famous games. Feel free to skip proofs if you aren't interested.

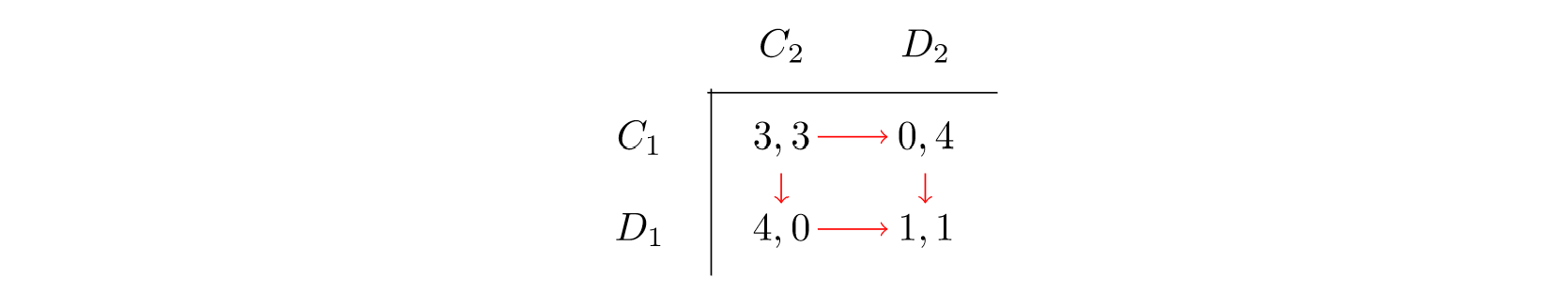

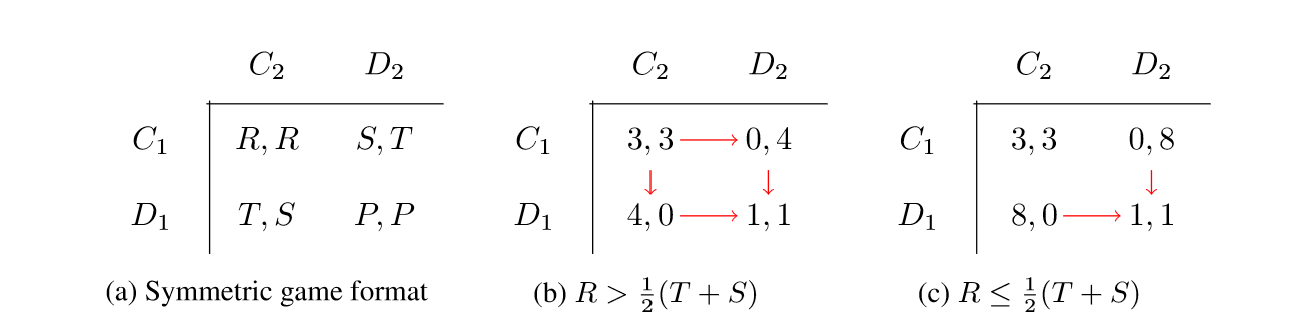
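The proofs below refer to the payoffs $R$, $S$, $T$, $P$ of a symmetric two-player game. Assuming the conventional labeling (the figures are the authoritative source), with $C$ the action that earns $R$ against itself and each cell listing (player 1's payoff, player 2's payoff):

```latex
\begin{array}{c|cc}
  & C      & D      \\ \hline
C & (R, R) & (S, T) \\
D & (T, S) & (P, P)
\end{array}
```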

Theorem 5. In symmetric games, if the Prisoner's Dilemma inequality $T > R > P > S$ is satisfied, defection can exist against equal weightings.

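Proof. Suppose the Prisoner's Dilemma inequality holds. Further suppose that $R > \frac{T+S}{2}$. Then $T + S < 2R$. Then since $T > R$ but $T + S < 2R$, both players defect from $(C, C)$ with $D$: the deviator's payoff rises from $R$ to $T$, while the total payoff falls from $2R$ to $T + S$.

Suppose instead that $R \leq \frac{T+S}{2}$. Then $T + S \geq 2R > 2P$, so $T + S > 2P$. But $S < P$, so player 1 defects from $(C, D)$ with action $D$, and player 2 defects from $(D, C)$ with action $D$: the deviator's payoff rises from $S$ to $P$, while the total payoff falls from $T + S$ to $2P$. QED.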
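A small Python check of both cases, assuming two illustrative Prisoner's Dilemma payoff choices, one with $2R > T + S$ and one with $2R \le T + S$. `payoffs` and `defects` are hypothetical helpers; index 0 is player 1 and index 1 is player 2.

```python
def payoffs(game, a1, a2):
    """(player 1, player 2) payoffs of a symmetric 2x2 game for pure actions 'C'/'D'."""
    R, S, T, P = game["R"], game["S"], game["T"], game["P"]
    table = {("C", "C"): (R, R), ("C", "D"): (S, T),
             ("D", "C"): (T, S), ("D", "D"): (P, P)}
    return table[(a1, a2)]


def defects(game, profile, player, action):
    """Equal-weighting defection test for a pure deviation by player 0 or 1."""
    new_profile = list(profile)
    new_profile[player] = action
    before = payoffs(game, *profile)
    after = payoffs(game, *new_profile)
    return after[player] > before[player] and sum(after) < sum(before)


# Case 2R > T + S: both players defect from (C, C) with D.
pd1 = {"T": 5, "R": 3, "P": 1, "S": 0}
print(defects(pd1, ("C", "C"), 0, "D"), defects(pd1, ("C", "C"), 1, "D"))  # True True

# Case 2R <= T + S: deviating from (C, C) is no longer a defection, but
# player 1 defects from (C, D) with D and player 2 defects from (D, C) with D.
pd2 = {"T": 6, "R": 2, "P": 1, "S": 0}
print(defects(pd2, ("C", "C"), 0, "D"))                                    # False
print(defects(pd2, ("C", "D"), 0, "D"), defects(pd2, ("D", "C"), 1, "D"))  # True True
```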

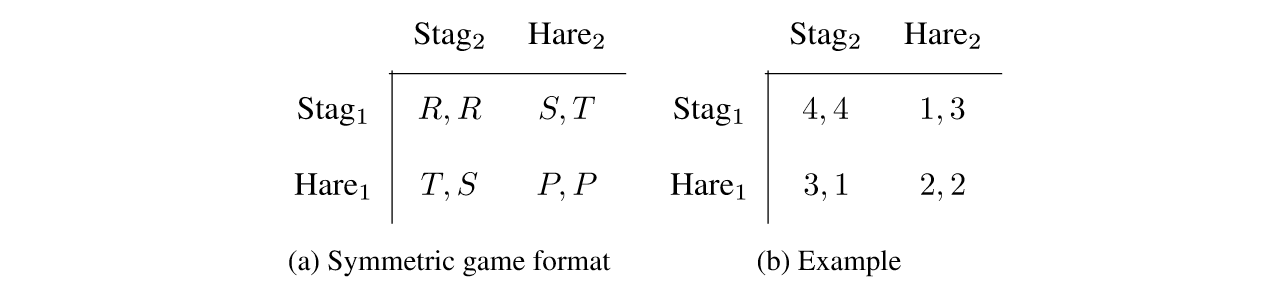

Theorem 6. In symmetric games, if the Stag Hunt inequality $R > T \geq P > S$ is satisfied, defection can exist against equal weightings.

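Proof. Suppose that the Stag Hunt inequality is satisfied. Let $p$ be the probability that player 1 plays $C$ (Stag). We now show that player 2 can always defect against strategy profile $(p, C)$ by switching to $D$ (Hare), for some value of $p$.

For defection's first condition, we determine when player 2 gains by switching from $C$ to $D$:

$$pT + (1-p)P > pR + (1-p)S \iff p\big[(R-T)+(P-S)\big] < P-S \iff p < \frac{P-S}{(R-T)+(P-S)}.$$

This denominator is positive ($R > T$ and $P > S$), as is the numerator. The fraction clearly falls in the open interval $(0,1)$.

For defection's second condition, we determine when the total payoff after the switch (left) is lower than before it (right):

$$p(S+T) + 2(1-p)P < 2pR + (1-p)(T+S) \iff 2p\big[(R-T)+(P-S)\big] > (P-S)-(T-P) \iff p > \frac{(P-S)-(T-P)}{2\big[(R-T)+(P-S)\big]}.$$

Combining the two conditions, we have

$$\frac{P-S}{(R-T)+(P-S)} > p > \frac{(P-S)-(T-P)}{2\big[(R-T)+(P-S)\big]}.$$

Since $(P-S)-(T-P) < 2(P-S)$, this holds for some nonempty subinterval of $(0,1)$. QED.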
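A numerical sanity check of the interval, assuming the illustrative payoffs $R=4$, $T=3$, $P=2$, $S=0$ (which satisfy the Stag Hunt inequality). The helper names are hypothetical; `player2_defects` tests the switch from $C$ to $D$ directly, independently of the closed-form bounds.

```python
def outcome(R, T, P, S, p, a2):
    """Expected (player 1, player 2) payoffs when player 1 plays C (Stag) with
    probability p and player 2 plays the pure action a2 ('C' or 'D')."""
    if a2 == "C":
        return (p * R + (1 - p) * T, p * R + (1 - p) * S)
    return (p * S + (1 - p) * P, p * T + (1 - p) * P)


def player2_defects(R, T, P, S, p):
    """Does player 2 defect (equal weights) by switching from C to D against (p, C)?"""
    before = outcome(R, T, P, S, p, "C")
    after = outcome(R, T, P, S, p, "D")
    return after[1] > before[1] and sum(after) < sum(before)


def stag_hunt_bounds(R, T, P, S):
    """(lower, upper) bounds on p from the proof of Theorem 6."""
    denom = (R - T) + (P - S)
    return ((P - S) - (T - P)) / (2 * denom), (P - S) / denom


R, T, P, S = 4, 3, 2, 0  # satisfies R > T >= P > S
lower, upper = stag_hunt_bounds(R, T, P, S)
print(lower, upper)  # roughly 0.1667 and 0.6667
print([player2_defects(R, T, P, S, p) for p in (0.1, 0.5, 0.9)])  # [False, True, False]
```

Only the middle value of $p$ lies strictly between the two bounds, and only there does the direct check report a defection.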

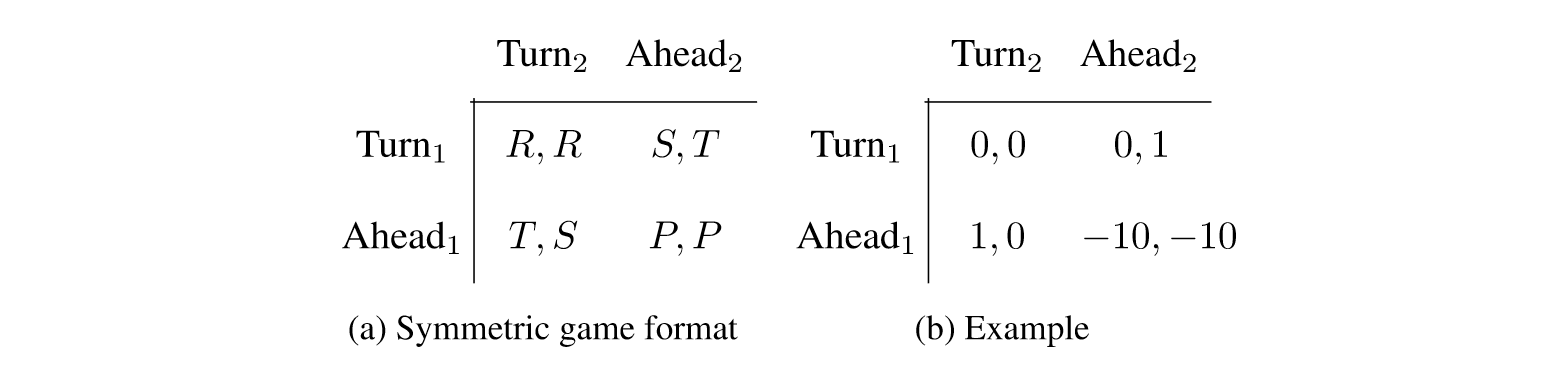

Theorem 7. In symmetric games, if the Chicken inequality $T > R > S > P$ is satisfied, defection can exist against equal weightings.

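Proof. Assume that the Chicken inequality is satisfied. This proof proceeds similarly to that of theorem 6. Let $p$ be the probability that player 1's strategy places on $C$; we consider player 2 switching from $C$ to $D$ against $(p, C)$.

For defection's first condition, we determine when player 2 gains by switching from $C$ to $D$:

$$pT + (1-p)P > pR + (1-p)S \iff p\big[(R-T)+(P-S)\big] < P-S \iff p > \frac{P-S}{(R-T)+(P-S)}.$$

The inequality flips in the last step because of the division by $(R-T)+(P-S)$, which is negative ($R < T$ and $P < S$). $\frac{P-S}{(R-T)+(P-S)} > 0$, so $p > 0$; this reflects the fact that $(D, C)$ is a Nash equilibrium, against which defection is impossible (proposition 3).

For defection's second condition, we determine when the total payoff after the switch (left) is lower than before it (right):

$$p(S+T) + 2(1-p)P < 2pR + (1-p)(T+S) \iff 2p\big[(R-T)+(P-S)\big] > (P-S)-(T-P) \iff p < \frac{(P-S)-(T-P)}{2\big[(R-T)+(P-S)\big]}.$$

The inequality again flips because $(R-T)+(P-S)$ is negative. When $T + S \geq 2R$, we have $\frac{(P-S)-(T-P)}{2[(R-T)+(P-S)]} \leq 1$, in which case defection does not exist against a pure strategy profile.

Combining the two conditions, we have

$$\frac{P-S}{(R-T)+(P-S)} < p < \frac{(P-S)-(T-P)}{2\big[(R-T)+(P-S)\big]}.$$

Because $T > S$, the left-hand bound is strictly below the right-hand bound; since the left-hand bound also lies in $(0,1)$, some $p \in (0,1)$ satisfies both conditions.

QED.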
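The same kind of check for Chicken, assuming the illustrative payoffs $T=3$, $R=2$, $S=1$, $P=0$. This example has $T + S = 2R$, so the pure profile $p = 1$ is excluded and defection appears only against mixed profiles. Helper names are hypothetical.

```python
def outcome(R, T, P, S, p, a2):
    """Expected (player 1, player 2) payoffs when player 1 plays C with
    probability p and player 2 plays the pure action a2 ('C' or 'D')."""
    if a2 == "C":
        return (p * R + (1 - p) * T, p * R + (1 - p) * S)
    return (p * S + (1 - p) * P, p * T + (1 - p) * P)


def player2_defects(R, T, P, S, p):
    """Does player 2 defect (equal weights) by switching from C to D against (p, C)?"""
    before = outcome(R, T, P, S, p, "C")
    after = outcome(R, T, P, S, p, "D")
    return after[1] > before[1] and sum(after) < sum(before)


def chicken_bounds(R, T, P, S):
    """(lower, upper) bounds on p from the proof of Theorem 7."""
    denom = (R - T) + (P - S)  # negative when T > R > S > P
    return (P - S) / denom, ((P - S) - (T - P)) / (2 * denom)


T, R, S, P = 3, 2, 1, 0  # satisfies T > R > S > P
lower, upper = chicken_bounds(R, T, P, S)
print(lower, upper)  # 0.5 1.0
print([player2_defects(R, T, P, S, p) for p in (0.25, 0.75, 1.0)])  # [False, True, False]
```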

Discussion

This bit of basic theory will hopefully allow for things like principled classification of policies: "has an agent learned a 'non-cooperative' policy in a multi-agent setting?". For example, the empirical game-theoretic analyses (EGTA) of Leibo et al.'s Multi-agent Reinforcement Learning in Sequential Social Dilemmas say that apple-harvesting agents are defecting when they zap each other with beams. Instead of using a qualitative metric, you could choose a desired non-zapping strategy profile, and then use EGTA to classify formal defections from that. This approach would still have a free parameter, but it seems better.

I had vague pre-theoretic intuitions about 'defection', and now I feel more capable of reasoning about what is and isn't a defection. In particular, I'd been confused by the difference between power-seeking and defection, and now I'm not.

21 comments


comment by Rohin Shah (rohinmshah) · 2020-07-14T04:48:20.874Z

Planned summary for the Alignment Newsletter:

We often talk about cooperating and defecting in general-sum games. This post proposes that we say that a player P has defected against a coalition C (that includes P) currently playing a strategy S when P deviates from the strategy S in a way that increases his or her own personal utility, but decreases the (weighted) average utility of the coalition. It shows that this definition has several nice intuitive properties: it implies that defection cannot exist in common-payoff games, uniformly weighted constant-sum games, or arbitrary games with a Nash equilibrium strategy. A Pareto improvement can also never be defection. It then goes on to show the opportunity for defection can exist in the Prisoner’s dilemma, Stag hunt, and Chicken (whether it exists depends on the specific payoff matrices).

comment by jimmy · 2020-07-13T18:33:20.963Z

As others have mentioned, there's an interpersonal utility comparison problem. In general, it is hard to determine how to weight utility between people. If I want to trade with you but you're not home, I can leave some amount of potatoes for you and take some amount of your milk. At what ratio of potatoes to milk am I "cooperating" with you, and at what level am I a thieving defector? If there's a market down the street that allows us to trade things for money then it's easy to do these comparisons and do Coasian payments as necessary to coordinate on maximizing the size of the pie. If we're on a deserted island together it's harder. Trying to drive a hard bargain and ask for more milk for my potatoes is a qualitatively different thing when there's no agreed upon metric you can use to say that I'm trying to "take more than I give".

Here is an interesting and hilarious experiment about how people play an iterated asymmetric prisoner's dilemma. The reason it wasn't more pure cooperation is that due to the asymmetry there was a disagreement between the players about what was "fair". AA thought JW should let him hit "D" some fraction of the time to equalize the payouts, and JW thought that "C/C" was the right answer to coordinate towards. If you read their comments, it's clear that AA thinks he's cooperating in the larger game, and that his "D"s aren't anti-social at all. He's just trying to get a "fair" price for his potatoes, and he's mistaken about what that is. JW, on the other hand, is explicitly trying to use his Ds to coax AA into cooperation. This conflict is better understood as a disagreement over where on the Pareto frontier ("at which price") to trade than it is about whether it's better to cooperate with each other or defect.

In real life problems, it's usually not so obvious what options are properly thought of as "C" or "D", and when trying to play "tit for tat with forgiveness" we have to be able to figure out what actually counts as a tit to tat. To do so, we need to look at the extent to which the person is trying to cooperate vs trying to get away with shirking their duty to cooperate. In this case, AA was trying to cooperate, and so if JW could have talked to him and explained why C/C was the right cooperative solution, he might have been able to save the lossy Ds. If AA had just said "I think I can get away with stealing more value by hitting D while he cooperates", no amount of explaining what the right concept of cooperation looks like will fix that, so defecting as punishment is needed.

In general, the way to determine whether someone is "trying to cooperate" vs "trying to defect" is to look at how they see the payoff matrix, and figure out whether they're putting in effort to stay on the Pareto frontier or to go below it. If their choice shows that they are being diligent to give you as much as possible without giving up more themselves, then they may be trying to drive a hard bargain, but at least you can tell that they're trying to bargain. If their chosen move is conspicuously below (their perception of) the Pareto frontier, then you can know that they're either not-even-trying, or they're trying to make it clear that they're willing to harm themselves in order to harm you too.

In games like real life versions of "stag hunt", you don't want to punish people for not going stag hunting when it's obvious that no one else is going either and they're the one expending effort to rally people to coordinate in the first place. But when someone would have been capable of nearly assuring cooperation if they did their part and took an acceptable risk when it looked like it was going to work, then it makes sense to describe them as "defecting" when they're the one that doesn't show up to hunt the stag because they're off chasing rabbits.

"Deliberately sub-Pareto move" I think is a pretty good description of the kind of "defection" that means you're being tatted, and "negligently sub-Pareto" is a good description of the kind of tit to tat.

↑ comment by TurnTrout · 2020-07-13T20:12:09.474Z

As others have mentioned, there's an interpersonal utility comparison problem. In general, it is hard to determine how to weight utility between people.

I actually don't think this is a problem for the use case I have in mind. I'm not trying to solve the comparison problem. This work formalizes: "given a utility weighting, what is defection?". I don't make any claim as to what is "fair" / where that weighting should come from. I suppose in the EGTA example, you'd want to make sure eg reward functions are identical.

"Deliberately sub-Pareto move" I think is a pretty good description of the kind of "defection" that means you're being tatted, and "negligently sub-Pareto" is a good description of the kind of tit to tat.

Defection doesn't always have to do with the Pareto frontier - look at PD, for example. (C,C), (C,D), (D,C) are usually all Pareto optimal.
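For concreteness, a small Python check of this claim over pure outcomes, assuming the illustrative payoffs $T=5$, $R=3$, $P=1$, $S=0$ (any values with $T > R > P > S$ give the same answer for pure outcomes). `pareto_optimal` is a hypothetical helper.

```python
def pareto_optimal(outcomes):
    """Labels of outcomes that no other outcome Pareto-dominates."""
    def dominates(u, v):
        # u is at least as good for everyone and strictly better for someone
        return all(a >= b for a, b in zip(u, v)) and any(a > b for a, b in zip(u, v))

    return {label for label, u in outcomes.items()
            if not any(dominates(v, u) for v in outcomes.values())}


T, R, P, S = 5, 3, 1, 0
pd = {("C", "C"): (R, R), ("C", "D"): (S, T),
      ("D", "C"): (T, S), ("D", "D"): (P, P)}
print(sorted(pareto_optimal(pd)))  # [('C', 'C'), ('C', 'D'), ('D', 'C')]
```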

↑ comment by jimmy · 2020-07-16T06:27:15.059Z

I actually don't think this is a problem for the use case I have in mind. I'm not trying to solve the comparison problem. This work formalizes: "given a utility weighting, what is defection?". I don't make any claim as to what is "fair" / where that weighting should come from. I suppose in the EGTA example, you'd want to make sure eg reward functions are identical.

This strikes me as a particularly large limitation. If you don't have any way of creating meaningful weightings of utility between agents then you can't get anything meaningful out. If you're allowed to play with that free parameter then you can simply say "I'm not a utility monster, this genuinely impacts me more than you [because I said so!]" and your actual outcomes aren't constrained at all.

Defection doesn't always have to do with the Pareto frontier - look at PD, for example. (C,C), (C,D), (D,C) are usually all Pareto optimal.

That's why I talk about "in the larger game" and use scare quotes on "defection". I think the word has too many different connotations and needs to be unpacked a bit.

The dictionary definition, for example, is:

A lack: a failure; especially, failure in the performance of duty or obligation.

n. The act of abandoning a person or a cause to which one is bound by allegiance or duty, or to which one has attached himself; a falling away; apostasy; backsliding.

n. Act of abandoning a person or cause to which one is bound by allegiance or duty, or to which one has attached himself; desertion; failure in duty; a falling away; apostasy; backsliding.

This all fits what I was talking about, and the fact that the options in prisoner's dilemma are traditionally labeled "Cooperate" and "Defect" doesn't mean they fit the definition. It smuggles in these connotations when they do not necessarily apply.

The idea of using tit for tat to encourage cooperation requires determining what one's "duty" is and what "failing" this duty is, and "doesn't maximize total utility" does not actually work as a definition for this purpose because you still have to figure out how to do that scaling.

Using the Pareto frontier allows you to distinguish between cooperative and non-cooperative behavior without having to make assumptions/claims about whose preferences are more "valid". This is really important for any real world application, because you don't actually get those scalings on a silver platter, and therefore need a way to distinguish between "cooperative" and "selfishly destructive" behavior as separate from "trying to claim a higher weight to one's own utility".

↑ comment by TurnTrout · 2020-07-16T11:58:53.343Z

This strikes me as a particularly large limitation. If you don't have any way of creating meaningful weightings of utility between agents then you can't get anything meaningful out. If you're allowed to play with that free parameter then you can simply say "I'm not a utility monster, this genuinely impacts me more than you [because I said so!]" and your actual outcomes aren't constrained at all.

This just isn't what I want to use the definition for. It's meant to be descriptive, not prescriptive.

Similarly, while other definitions of "defection" also exist, I'm interested in the sense I outlined in the post. In particular, I'm not interested in any proposed definition that labels defection in PD as not-defection.

comment by TurnTrout · 2021-12-17T20:10:49.786Z

This post's main contribution is the formalization of game-theoretic defection as gaining personal utility at the expense of coalitional utility.

Rereading, the post feels charmingly straightforward and self-contained. The formalization feels obvious in hindsight, but I remember being quite confused about the precise difference between power-seeking and defection—perhaps because popular examples of taking over the world are also defections against the human/AI coalition. I now feel cleanly deconfused about this distinction. And if I was confused about it, I'd bet a lot of other people were, too.

I think this post is valuable as a self-contained formal insight into the nature of defection. If I could vote on it, I'd give it a 4 (or perhaps a 3, if the voting system allowed it).

comment by sen · 2020-07-13T00:27:04.064Z

I think your focus on payoffs is diluting your point. In all of your scenarios, the thing enabling a defection is the inability to view another player's strategy before committing to a strategy. Perhaps you can simplify your definition to the following:

- "A defect is when someone (or some sub-coalition) benefits from violating their expected coalition strategy."

You can define a function that assigns a strategy to every possible coalition. Given an expected coalition strategy C, if the payoff for any sub-coalition strategy SC is greater than their payoff in C, then the sub-coalition SC is incentivized to defect. (Whether that means SC joins a different coalition or forms their own is irrelevant.)

This makes a few things clear that are hidden in your formalization. Specifically:

- The main difference between this framing and the framing for Nash Equilibrium is the notion of an expected coalition strategy. Where there is an expected coalition strategy, one should aim to follow a "defection-proof" strategy. Where there is no expected coalition strategy, one should aim to follow a Nash Equilibrium strategy.

- Your Proposition 3 is false. You would need a variant that takes coalitions into account.

I believe all of your other theorems and propositions follow from the definition as well.

This has other benefits as well.

- It factors the payoff table into two tables that are easier to understand: coalition selection and coalition strategy selection.

- It's better-aligned with intuition. Defection in the colloquial sense is when someone deserts "their" group (i.e., joins a new coalition in violation of the expectation). Coalition selection encodes that notion cleanly. The payoff tables for coalitions cleanly encode the more generalized notion of "rational action" in scenarios where such defection is possible.

↑ comment by TurnTrout · 2020-07-14T13:44:16.585Z

In all of your scenarios, the thing enabling a defection is the inability to view another player's strategy before committing to a strategy.

Depends what you mean by "committing". If I move after you in PD, and I can observe your action, and I see you cooperate, then the best response (putting aside Newcomblike issues) is to defect.

"A defect is when someone (or some sub-coalition) benefits from violating their expected coalition strategy."

This feels quite different from defection. Imagine we're negotiating resource allocation. The "expected coalition strategy" is, let's say, "no one gets any". By this definition, is it a defection to then propose an even allocation of resources (a Pareto improvement)?

Another question: how does this idea differ from the core in cooperative game theory?

↑ comment by sen · 2020-07-15T08:07:56.973Z

The "expected coalition strategy" is, let's say, "no one gets any". By this definition, is it a defection to then propose an even allocation of resources (a Pareto improvement)?

In my view, yes. If we agreed that no one should get any resources, then it's a violation for you to get resources or for you to deceive me into getting resources.

I think the difference is in how the two of us view a strategy. In my view, it's perfectly acceptable for the coalition strategy to include a clause like "it's okay to do X if it's a Pareto improvement for our coalition." If that's part of the coalition strategy we agree to, then Pareto improvements are never defections. If our coalition strategy does exclude unilateral actions that are Pareto improvements, then it is a defection to take such actions.

Another question: how does this idea differ from the core in cooperative game theory?

I'm not a mathematician or an economist, my knowledge on this hasn't been tested, and I just discovered the concept from your reply. Please read the following with a lot of skepticism because I don't know how correct it is.

Some type differences:

- A core is a set of allocations. I'm going to call it core allocations so it's less confusing.

- A defection is a change in strategy (per both of our definitions).

As far as the relationship between the two:

- A core allocation satisfies a particular robustness property: it's stable under coalition refinements. A "coalition refinement" here is an operation in which a coalition is replaced by a partition of that coalition. Being stable under coalition refinements, the coalition will not partition itself for rational reasons. So if you have coalitions {A, B} and {C}, then every core allocation is robust against {A, B} splitting up into {A}, {B}.

- Defections (per my definition) don't deal strictly with coalition refinements. If one member leaves a coalition to join another, that's still a defection. In this scenario, {A, B}, {C} is replaced with {A}, {B, C}. Core allocations don't deal with this scenario since {A}, {B, C} is not a refinement of {A, B}, {C}. As a result, core allocations are not necessarily robust to defections.

I could be wrong about core allocations being about only refinements. I think I'm safe in saying though that core allocations are robust against some (maybe all) defections.

↑ comment by Dagon · 2020-07-15T13:59:07.919Z

This is an important disagreement on terminology, and may be a good reason to avoid "cooperate" and "defect" as technical words. They have a much broader meaning than used here.

Whether "defect" is about reducing sum of payouts of the considered participants, or about violating agreements (even with better outcomes), or about some other behavior, use of the word without specification is going to be ambiguous.

The actual post is about payouts. Please keep in mind that these are _not_ resources, but utility. The pain of violating expectations and difficulty in future cooperation is already included (or should be) in those payout numbers.

↑ comment by sen · 2020-07-15T17:48:52.445Z

This can turn into a very long discussion. I'm okay with that, but let me know if you're not so I can probe only the points that are likely to resolve. I'll raise the contentious points regardless, but I don't want to draw focus on them if there's little motivation to discuss them in depth.

I agree that a split in terminology is warranted, and that "defect" and "cooperate" are poor choices. How about this:

- Coalition members may form consensus on the coalition strategy. Members of a coalition may follow the consensus coalition strategy or violate the consensus coalition strategy.

- Members of a coalition may benefit the coalition or hurt the coalition.

- Benefiting the coalition means raising its payoff regardless of consensus. Hurting the coalition means reducing its payoff regardless of consensus. A coalition may form consensus on the coalition strategy regardless of the optimality of that strategy.

Contentious points:

- I expect that treating utility so generally will lead to paradoxes, particularly when utility functions are defined in terms of other utility functions. I think this is an extremely important case, particularly when strategies take trust into account. As a result, I expect that such a general notion of utility will lead to paradoxes when using it to reason about trust.

- "Utility is not a resource." I think this is a useful distinction when trying to clarify goals, but not a useful distinction when trying to make decisions given a set of goals. In particular, once the payoff tables are defined for a game, the goals must already have been defined, and so utility can be treated as a resource in that game.

↑ comment by Dagon · 2020-07-15T18:02:11.382Z

I'm not sure a long discussion with me is helpful - I mostly wanted to point out that there's a danger of being misunderstood and talking past each other, and "use more words" is often a better approach than "argue about the words".

I am especially the wrong person to argue about fundamental utility-aggregation problems. I don't think ANYONE has a workable theory about how Utilitarianism really works without an appeal to moral realism that I don't think is justified.

↑ comment by sen · 2020-07-16T07:35:25.239Z

Understood. I do think it's significant though (and worth pointing out) that a much simpler definition yields all of the same interesting consequences. I didn't intend to just disagree for the sake of getting clearer terminology. I wanted to point out that there seems to be a simpler path to the same answers, and that simpler path provides a new concept that seems to be quite useful.

comment by Dagon · 2020-07-13T00:58:40.185Z

It's worth being careful to acknowledge that this set of assumptions is far more limited than the game-theoretical underpinnings. Because it requires interpersonal utility summation, you can't normalize in the same ways, and you need to do a LOT more work to show that any given situation fits this model. Most situations and policies don't even fit the more general individual-utility model, and I suspect even fewer will fit this extension.

That said, I like having it formalized, and I look forward to the extension to multi-coalition situations. A spy can benefit Russia and the world more than they hurt the average US resident.

comment by ESRogs · 2020-07-13T04:32:45.346Z

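Combining the two conditions, we have

$$\frac{P-S}{(R-T)+(P-S)} > p > \frac{(P-S)-(T-P)}{2\big[(R-T)+(P-S)\big]}$$

Since $(P-S)-(T-P) < 2(P-S)$, this holds for some nonempty subinterval of $(0,1)$.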

I want to check that I'm following this. Would it be fair to paraphrase the two parts of this inequality as:

1) If your credence that the other player is going to play Stag is high enough, you won't even be tempted to play Hare.

2) If your credence that the other player is going to play Hare is high enough, then it's not defection to play Hare yourself.

?

↑ comment by ESRogs · 2020-07-13T04:50:34.601Z

I guess the rightmost term could be zero or negative, right? (If the difference between T and P is greater than or equal to the difference between P and S.) In that case, the payoffs would be such that there's no credence you could have that the other player will play Hare that would justify playing Hare yourself (or justify it as non-defection, that is).

So my claim #1 is always true, but claim #2 depends on the payoff values.

In other words, Stag Hunt could be subdivided into two games: one where the payoffs never justify playing Hare (as non-defection), and one where they sometimes do, depending on your credence that the other player will play Stag.
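A quick numerical illustration of this split, using the right-hand (lower) bound from the proof of Theorem 6 and two illustrative payoff choices, both satisfying $R > T \ge P > S$. `stag_hunt_lower_bound` is a hypothetical helper.

```python
def stag_hunt_lower_bound(R, T, P, S):
    """Right-hand (lower) bound on p from the proof of Theorem 6."""
    return ((P - S) - (T - P)) / (2 * ((R - T) + (P - S)))


# T - P = 1 < P - S = 2: the bound is positive (1/6), so low enough p escapes defection.
print(stag_hunt_lower_bound(4, 3, 2, 0))
# T - P = 3 >= P - S = 1: the bound is -0.5, so no p in [0, 1) escapes it.
print(stag_hunt_lower_bound(5, 4, 1, 0))
```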