Classifying games like the Prisoner's Dilemma

post by philh · 2020-07-04T17:10:01.965Z · LW · GW · 28 commentsContents

Cake Eating: W>∙>∙>Z (two games) Let's Party: W>Z>∙>∙ (two games) Studying For a Test: W>X>Z>Y (two games) Stag hunt: W>Y>Z>X (two games) The Abundant Commons: X>W>∙>∙ (five games) Farmer's Dilemma: Y>W>X>Z (two games) Anti-coordination: ∙>∙>W>Z (two games) Prisoner's Dilemma/Too Many Cooks: Y>W>Z>X (three games) A graph A change of basis? Footnotes None 28 comments

Note: the math and the picture didn't transfer. I may try to fix it in future, but for now you might want to just read it at the original site. [Mod/Edit note: Should all be fixed now!]

Consider games with the following payoff matrix:

| Player 2 | |||

|---|---|---|---|

| Krump | Flitz | |

| Player 1 | Krump | ||

| Flitz |

One such game is the Prisoner's Dilemma (in which strategy "Krump" is usually called "Cooperate", and "Flitz" is usually called "Defect"). But the Prisoner's Dilemma has additional structure. Specifically, to qualify as a PD, we must have . gives the motivation to defect if the other player cooperates, and gives that motivation if the other player defects. With these two constraints, the Nash equilibrium is always going to be Flitz/Flitz for a payoff of . is what gives the dilemma its teeth; if instead , then that equilibrium is a perfectly fine outcome, possibly the optimal one.

I usually think of a Prisoner's Dilemma as also having . That specifies that mutual cooperation has the highest total return - it's "socially optimal" in a meaningful sense1 - while mutual defection has the lowest. It also means you can model the "defect" action as "take some value for yourself, but destroy value in the process". (Alternatively, "cooperate" as "give some of your value to your playmate2, adding to that value in the process".) We might consider instead:

- If , then defecting while your playmate cooperates creates value (relative to cooperating). From a social perspective, Krump/Flitz or Flitz/Krump is preferable to Krump/Krump; and in an iterated game of this sort, you'd prefer to alternate with than to get a constant . Wikipedia still classes this as a Prisoner's Dilemma, but I think that's dubious terminology, and I don't think it's standard. I might offhand suggest calling it the Too Many Cooks game. (This name assumes that you'd rather go hungry than cook, and that spoiled broth is better than no broth.)

- If , then defecting while your playmate defects creates value. I have no issue thinking of this as a Prisoner's Dilemma; my instinct is that most analyses of the central case will also apply to this.

By assigning different values to the various numbers, what other games can we get?

As far as I can tell, we can classify games according to the ordering of (which determine individual outcomes) and of (which determine the social outcomes). Sometimes we'll want to consider the case when two values are equal, but for simplicity I'm going to classify them assuming there are no equalities. Naively there would be possible games, but

- Reversing the order of everything doesn't change the analysis, it just swaps the labels Krump and Flitz. So we can assume without loss of generality that . That eliminates half the combinations.

- Obviously , so it's just a question of where falls in comparison to them. That eliminates another half.

- If then . That eliminates another four combinations.

- If then , eliminating another four.

- If then , eliminating four.

- If then , eliminating two.

- If then , eliminating two.

That brings us down to just 20 combinations, and we've already looked at three of them, so this seems tractable. In the following, I've grouped games together mostly according to how interesting I think it is to distinguish them, and I've given them names when I didn't know an existing name. Both the names and the grouping should be considered tentative.

Cake Eating: (two games)

In this game, you can either Eat Cake or Go Hungry. You like eating cake. You like when your playmate eats cake. There's enough cake for everyone, and no reason to go hungry. The only Nash equilibrium is the one where everyone eats cake, and this is the socially optimal result. Great game! We should play it more often.

(If , then if you had to choose between yourself and your playmate eating cake, you'd eat it yourself. If , then in that situation you'd give it to them. Equalities between and signify indifference to (yourself, your playmate) eating cake in various situations.)

Let's Party: (two games)

In this game, you can either go to a Party or stay Home. If you both go to a party, great! If you both stay home, that's cool too. If either of you goes to a party while the other stays home, you'd both be super bummed about that.

Home/Home is a Nash equilibrium, but it's not optimal either individually or socially.

In the case , this is a pure coordination game, which doesn't have the benefit of an obvious choice that you can make without communicating.

(Wikipedia calls this the assurance game on that page, but uses that name for the Stag Hunt on the page for that, so I'm not using that name.)

Studying For a Test: (two games)

You can either Study or Bunk Off. No matter what your playmate does, you're better off Studying, and if you Study together you can help each other. If you Bunk Off, then it's more fun if your playmate Bunks Off with you; but better still for you if you just start Studying.

The only Nash equilibrium is Study/Study, which is also socially optimal.

Stag hunt: (two games)

You can either hunt Stag or Hare (sometimes "Rabbit"). If you both hunt Stag, you successfully catch a stag between you, which is great. If you both hunt Hare, you each catch a hare, which is fine. You can catch a hare by yourself, but if you hunt Stag and your playmate hunts Hare, you get nothing.

This also works with . If then two people hunting Hare get in each other's way.

The Nash equilibria are at Stag/Stag and Hare/Hare, and Stag/Stag is socially optimal. Hare/Hare might be the worst possible social result, though I think this game is usually described with .

See: The Schelling Choice is "Rabbit", not "Stag" [LW · GW].

The Abundant Commons: (five games)

You can Take some resource from the commons, or you can Leave it alone. There's plenty of resource to be taken, and you'll always be better off taking it. But if you and your playmate both play Take, you get in each other's way and reduce efficiency (unless ).

If then you don't intefere with each other significantly; the socially optimal result is also the Nash equilibrium. But if then the total cost of interfering is more than the value of resource either of you can take, and some means of coordinating one person to Take and one to Leave would be socially valuable.

If then if (for whatever reason) you Leave the resource, you'd prefer your partner Takes it. If you'd prefer them to also Leave it.

An interesting case here is and . Take/Leave and Leave/Take are social optimal, but the Leave player would prefer literally any other outcome.

Take/Take is the only Nash equilibrium.

Farmer's Dilemma: (two games)

In this game, you can Work (pitch in to help build a mutual resource) or Shirk (not do that). If either of you Works, it provides more than its cost to both of you. Ideally, you want to Shirk while your playmate Works; but if your playmate Shirks, you'd rather Work than leave the work undone. The Nash equilibria are at Work/Shirk and Shirk/Work.

If then the socially optimal outcome is Work/Work, and a means to coordinate on that outcome would be socially useful. If , the socially optimal outcome is for one player to Work while the other Shirks, but with no obvious choice for which one of you it should be.

Also known as Chicken, Hawk/Dove and Snowdrift.

Anti-coordination: (two games)

In this game, the goal is to play a different move than your playmate. If then there's no reason to prefer one move over another, but if they're not equal there'll be some maneuvering around who gets which reward. If you're not happy with the outcome, then changing the move you play will harm your playmate more than it harms you. The Nash equilibria are when you play different moves, and these are socially optimal.

Prisoner's Dilemma/Too Many Cooks: (three games)

Covered in preamble.

(I'm a little surprised that this is the only case where I've wanted to rename the game depending on the social preference of the outcomes. That said, the only other games where isn't forced to be greater or less than are the Farmer's Dilemma and the Abundant Commons, and those are the ones I'd most expect to want to split in future.)

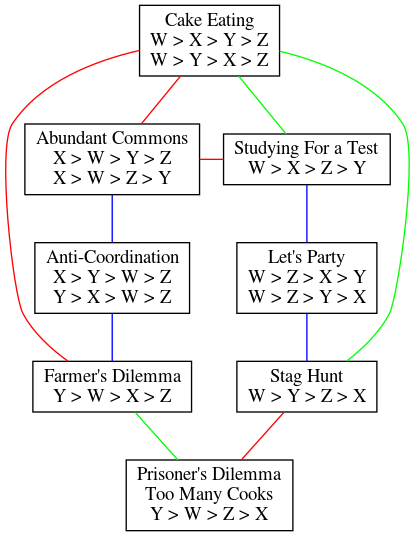

A graph

I made a graph of these games. I only classified them according to ordering of (i.e. I lumped Prisoner's Dilemma with Too Many Cooks), and I drew an edge whenever two games were the same apart from swapping two adjacent values. It looks like this:

The lines are colored according to which pair of values is swapped (red first two, blue middle two, green last two). I'm not sure we learn much from it, but I find the symmetry pleasing.

A change of basis?

I don't want to look too deep into this right now, but here's a transformation we could apply. Instead of thinking about these games in terms of the numbers , we think in terms of "the value of Player 2 playing Flitz over Krump":

- , the value to Player 1, if Player 1 plays Krump.

- , the value to Player 2, if Player 1 plays Krump.

- , the value to Player 1, if Player 1 plays Flitz.

- , the value to Player 2, if Player 1 plays Flitz.

These four numbers determine , up to adding a constant value to all of them, which doesn't change the games. For example, Prisoner's Dilemma and Too Many Cooks both have . A Prisoner's Dilemma also has while Too Many Cooks has .

So what happens if we start thinking about these games in terms of instead? Does this give us useful insights? I don't know.

Of course, for these numbers to point at one of the games studied in this post, we must have . I think if you relax that constraint, you start looking into games slightly more general than these. But I haven't thought about it too hard.

Footnotes

[1] My use of the phrase comes from Ellickson's Order Without Law. Part of why I'm writing this is to help clarify my thinking about that book. I don't mean to imply anything in particular by it, I just like the ring of it better than alternatives like "welfare maximizing". ↩

[2] Calling them your "opponent" assumes a level of antagonism that may not be present. ↩

28 comments

Comments sorted by top scores.

comment by Raemon · 2020-07-04T22:39:07.934Z · LW(p) · GW(p)

I'd heard another friend discuss this idea a few months back, and thought it was a useful thing for someone to write up.

Something I found a bit difficult reading this was the arbitrariness of W, X, Y and Z (I had trouble remembering which was which). I think I'd have found it a little easier to parse the examples if they used something like XX, XY, YX, and YY. (Honestly, CC, CD, DC, DD would have been easiest to map onto my existing models, although I get part of the point here was to break from the Cooperate/Defect concept)

Replies from: philhcomment by Raemon · 2020-07-08T00:34:45.073Z · LW(p) · GW(p)

Curated.

A year ago, a mathematician friend of mine commented that "as far as I can tell, nobody has published a paper that just outlines all the different types of 2x2 quadrant-game payoffs", and they spent a weekend charting out the different payoff matrixes, and just meditating on how they felt about each one, and how it fit into their various game theory intuitions. But, they didn't get around to publishing it AFAICT.

This seems like a really obvious thing to do, but prior to this post I don't think anyone had written it up publicly. (if someone does know of an existing article, feel free to link here). But regardless I think a good writeup of this is useful to have in the LessWrong body of knowledge.

Real life is obviously more complicated than 2x2 payoff games, but having a set of crisp formulations is helpful for orientation on more complex issues. And, the fact that many people default to using prisoner's dilemma all the time even when it's not really appropriate seems like an actual problem that needed fixing.

I have some sense that the pedagogy of this post could be improved. I'd previously commented that using different symbols would be helpful for me. I have a nagging sense that there is other more useful feedback I could give on how to articulate some of the games, but don't have clear examples.

Those concerns are relatively minor though. Overall, thanks for the great post. :)

Replies from: Zack_M_Davis↑ comment by Zack_M_Davis · 2020-07-09T04:01:25.695Z · LW(p) · GW(p)

if someone does know of an existing article

Herbert Gintis's Game Theory Evolving (2nd edition) offers the following exercise. (Bolding and hyperlinks mine.)

6.15 Characterizing 2 x 2 Normal Form Games I

Replies from: philhWe say a normal form game is generic if no two payoffs for the same player are equal. Suppose and are the payoff matrices for Alice and Bob, so the payoff to Alice's strategy against Bob's strategy is for Alice and for Bob. We say two generic 2 x 2 games with payoff matrices (A, B) and (C, D) are equivalent if, for all i, j, k, l = 1, 2:

and

In particular, if a constant is added to the payoffs to all the pure strategies of one player when played against a given pure strategy of the other player, the resulting game is equivalent to the original.

Show that equivalent 2 x 2 generic games have the same number of pure Nash equilibria and the same number of strictly mixed Nash equilibria. Show also that every generic 2 x 2 game is equivalent to either the prisoner's dilemma (§3.11), the battle of the sexes (§3.9), or the hawk-dove (§3.10). Note that this list does not include throwing fingers (§3.8), which is not generic.

↑ comment by philh · 2020-07-09T23:01:56.014Z · LW(p) · GW(p)

Thanks for finding this! I'm a bit confused, though; it suggests that the game with payoffs

3,3 2,1

1,2 0,0

(an instance of Cake Eating), is equivalent to one of those named games. But... which? It only has one pure Nash equilibrium, so it can't be either hawk-dove or BOS, which both have two. And it can't be equivalent to PD - an instance of that would be

3,3 1,4

4,1 0,0

and these aren't equivalent. We have (3 > 1) but (3 < 4). So what am I missing?

(I had intended to try look this up myself, but I'm unlikely to do that in a timely manner, so I'm just leaving a comment. No obligation on you, of course.)

Replies from: ben-lang↑ comment by Ben (ben-lang) · 2022-09-30T11:33:40.880Z · LW(p) · GW(p)

My best guess is that the book considers cake-eating to be trivial (just both eat cake), and is therefore not worried about even thinking about it, so it slipped the list.

comment by Nisan · 2020-07-13T04:20:44.205Z · LW(p) · GW(p)

Von Neumann and Morgenstern [LW · GW] also classify the two-player games, but they get only two games, up to equivalence. The reason is they assume the players get to negotiate beforehand. The only properties that matter for this are:

-

The maximin value , which represents each player's best alternative to negotiated agreement (BATNA).

-

The maximum total utility .

There are two cases:

-

The inessential case, . This includes the Abundant Commons with . No player has any incentive to negotiate, because the BATNA is Pareto-optimal.

-

The essential case, . This includes all other games in the OP.

It might seem strange that VNM consider, say, Cake Eating to be equivalent to Prisoner's Dilemma. But in the VNM framework, Player 1 can threaten not to eat cake in order to extract a side payment from Player 2, and this is equivalent to threatening to defect.

comment by Jameson Quinn (jameson-quinn) · 2020-07-06T21:27:56.249Z · LW(p) · GW(p)

Nicely done.

In my experience, "Farmer's Dilemma" (aka Chicken/Snowdrift) is both more common than PD, and harder for human players to coordinate away from pathological outcomes. I think it should be the prototypical "nasty" game instead of PD. We've all been assigned a group project at school; we have not all been interrogated by the police in a situation where the payoffs are truly PD.

comment by Gunnar_Zarncke · 2021-12-15T14:11:27.189Z · LW(p) · GW(p)

This is the only one I rated 9. It looks like a boring post full of formulas but it is actually quite short and - as Reamon wrote in the curation - completes the system of all possible cooperation games giving them succinct definitions and names.

comment by abramdemski · 2020-07-10T17:13:48.900Z · LW(p) · GW(p)

(Under "farmer's dilemma":)

If 2W>X+Y then the socially optimal outcome is Work/Work, and a means to coordinate on that outcome would be socially useful. If 2W<X+Y, the socially optimal outcome is for one player to Work while the other Shirks, but with no obvious choice for which one of you it should be.

(Under PD / too many cooks:)

(I'm a little surprised that this is the only case where I've wanted to rename the game depending on the social preference of the outcomes. That said, the only other games where X+Y isn't forced to be greater or less than 2X are the Farmer's Dilemma and the Abundant Commons, and those are the ones I'd most expect to want to split in future.)

To my personal taste, "chicken" is the game where 2W<X+Y; I think of chicken as fundamentally being a game where there isn't a fair and socially optimal outcome w/o correlated randomness (ie in correlated equilibria, we have the "stop light" solution where one player gets a signal to go straight, with fair odds of who gets to go). It becomes "hawk/dove" when 2WX+Y, representing the idea that fighting can't increase the amount of resources (at best the hawk strategy doesn't destroy anything unless there's another hawk; realistically it burns some resources either way, just a lot more when there are two hawks). However, I realize this isn't standard -- it seems like a lot of people consider both games to be "chicken".

(By the way, I was surprised you labelled the section "farmer's dilemma" -- just from my experience, "chicken" is far more common, and "hawk/dove" is also quite common, but not as common as "chicken"; whereas I'd never actually heard "farmer's dilemma" before.)

[I also want to briefly point out that it's questionable to assume that the utility functions of the two players are comparable, and hence, that social welfare is necessarily captured by the sum of the two payoffs. Utility functions are always "up to a scalar", so really "symmetric game" should mean something more complicated ... but all that being said, I think you handled this the right way in the end, because we don't want to deal with that extra complexity in a classification like this.]

Replies from: philh↑ comment by philh · 2020-07-12T21:08:13.496Z · LW(p) · GW(p)

To my personal taste, “chicken” is the game where 2W<X+Y; I think of chicken as fundamentally being a game where there isn’t a fair and socially optimal outcome w/o correlated randomness (ie in correlated equilibria, we have the “stop light” solution where one player gets a signal to go straight, with fair odds of who gets to go). It becomes “hawk/dove” when 2W≥X+Y, representing the idea that fighting can’t increase the amount of resources (at best the hawk strategy doesn’t destroy anything unless there’s another hawk; realistically it burns some resources either way, just a lot more when there are two hawks).

Hm. I agree that Hawk/Dove feels weird with . Chicken... I think I don't have strong feelings on the question. To the extent that I do, it's hard to disentangle from "actually, the outcome where you crash into each other really is super super bad", but that's not actually necessarily true. If I think in terms of "if you both swerve, then you're both cowards", then yeah, does feel natural. For the Farmer's Dilemma I feel like it can go both ways - there can be gains from working together, but also inefficiencies if e.g. you only have one bulldozer.

Farmer's Dilemma is definitely the less common name. (I didn't think I coined it myself but couldn't find any other references to it. From memory, I wrote most of the linked article without realizing it was the same as Chicken.) But I also just like it better as a name, and I have the vague sense that people equipped with that name will have an easier time recognizing instances of the problem. It's probably relevant that Order Without Law talks about problems that it calls Prisoner's Dilemmas, that I think are often Farmer's Dilemmas, in the context of... well, ranching, but close enough.

Replies from: abramdemski↑ comment by abramdemski · 2020-07-13T17:12:02.441Z · LW(p) · GW(p)

Yep, very interesting :) The different names/stories definitely make me think about the games in very different ways, such that it feels quite non-obvious that the Farmer's Dilemma is Chicken (or Hawk/Dove), intuitively.

(Actually, my mental tag for this is going to be the problem of cleaning common spaces/dishes in an apartment -- it's better if someone does it, but no one wants to do it individually.)

Also, I've probably heard people refer to the commons game as a PD, too. I think PD informally becomes a tag for a coming-apart of the individual and social good.

comment by TurnTrout · 2020-07-05T13:42:35.094Z · LW(p) · GW(p)

If , then defecting while your playmate cooperates creates value (relative to cooperating). From a social perspective, Krump/Flitz or Flitz/Krump is preferable to Krump/Krump; and in an iterated game of this sort, you'd prefer to alternate with , than to get a constant . Wikipedia still classes this as a Prisoner's Dilemma, but I think that's dubious terminology, and I don't think it's standard. I might offhand suggest calling it the Too Many Cooks game.

But the only Nash is still , and so there is an incentive to defect from Krump/Flitz. Furthermore, that defection reduces total social welfare, so I think it makes sense to call this variant "PD" still.

Replies from: philh↑ comment by philh · 2020-07-05T17:36:39.885Z · LW(p) · GW(p)

I absolutely think it makes sense, I just don't know if I endorse it. Maybe it's context dependent; someone who just wants to write about the Nash equilibria of games won't have any reason to distinguish these cases, and lumping them all under "Prisoner's Dilemma" seems absolutely fine. But Iterated Prisoner's Dilemma (in my sense) is probably a very different game from Iterated Too Many Cooks, but I'm not sure I've ever heard anyone talk about ITMC. And from a social perspective, "punish anyone who defects in a PD" makes sense but "punish anyone who doesn't cook in TMC" risks leaving value on the table.

Maybe it would make sense to call them a "(something) Prisoner's Dilemma" and a "(something else) Prisoner's Dilemma" but I'm not sure what the somethings would be.

Replies from: philhcomment by habryka (habryka4) · 2020-07-04T22:44:34.293Z · LW(p) · GW(p)

I just cleaned up all the formatting and converted the LaTeX. Sorry for the inconvenience!

Replies from: philhcomment by romeostevensit · 2020-07-04T19:27:03.361Z · LW(p) · GW(p)

Would love to see similar for public goods games as there are so many simulations based off of them.

Very tempting to meme the graph of relations into the kabbalah tree of life diagram.

Replies from: philhcomment by Oscar_Cunningham · 2020-07-04T19:17:59.974Z · LW(p) · GW(p)

I've been wanting to do something like this for a while, so it's good to see it properly worked out here.

If you wanted to expand this you could look at games which weren't symmetrical in the players. So you'd have eight variables, W, X, Y and Z, and w, x, y and z. But you'd only have to look at the possible orderings within each set of four, since it's not necessarily valid to compare utilities between people. You'd also be able to reduce the number of games by using the swap-the-players symmetry.

Replies from: philh, philh↑ comment by philh · 2020-07-09T23:14:43.729Z · LW(p) · GW(p)

Oh, I hadn't seen it before, but this file on wikipedia seems like it might be roughly that expanded version? Info Direct link to pdf I haven't looked closely at it though. The pdf doesn't render in firefox for me, but does render in evince, my external pdf viewer.

↑ comment by philh · 2020-07-04T21:29:51.868Z · LW(p) · GW(p)

I don't in general think there's anything wrong with comparing utilities between people in these things - that's what I'm doing when I talk about whether - but it would be simpler not to do so. Still, even then I think extending to all 8 would give far too many possibilities to be manually tractable - I make it .

But it wouldn't be too hard to write a program to classify them according to Nash equilibria, if someone wanted to do that. That might be a decent start.

comment by Sniffnoy · 2021-04-09T03:51:51.979Z · LW(p) · GW(p)

I'm reminded of this paper, which discusses a smaller set of two-player games. What you call "Cake Eating" they call the "Harmony Game". They also use the more suggestive variable names -- which I believe come from existing literature -- R (reward), S (sucker's payoff), T (temptation), P (punishment) instead of (W, X, Y, Z). Note that in addition to R > P (W > Z) they also added the restrictions T > P (Y > Z) and R > S (W > X) so that the two options could be meaningfully labeled "cooperate" and "defect" instead of "Krump" and "Flitz" (the cooperate option is always better for the other player, regardless of whether it's better or worse for you). (I'm ignoring cases of things being equal, just like you are.)

(Of course, the paper isn't actually about classifying games, it's an empirical study of how people actually play these games! But I remember it for being the first place I saw such a classification...)

With these additional restrictions, there are only four games: Harmony Game (Cake Eating), Chicken (Hawk-Dove/Snowdrift/Farmer's Dilemma), Stag Hunt, and Prisoner's Dilemma (Too Many Cooks).

I'd basically been using that as my way of thinking about two-player games, but this broader set might be useful. Thanks for taking the time to do this and assign names to these.

I do have to wonder about that result that Zack_M_Davis mentions... as you mentioned, where's the Harmony Game in it? Also, isn't Battle of the Sexes more like Chicken than like Stag Hunt? I would expect to see Chicken and Stag Hunt, not Battle of the Sexes and Chicken, which sounds like the same thing twice and seems to leave out Stag Hunt. But maybe Battle of the Sexes is actually equivalent, in the sense described, to Stag Hunt rather than Chicken? That would be surprising, but I didn't set down to check whether the definition is satsified or not...

comment by sab (sbarzowski) · 2020-07-04T18:25:40.634Z · LW(p) · GW(p)

Note: the math and the picture didn't transfer. I may try to fix it in future, but for now you might want to just read it at the original site.

Could you add a link?

Replies from: philhcomment by philh · 2021-10-03T09:29:26.910Z · LW(p) · GW(p)

I might get around to editing the post at some point, but unless/until I do, a note:

Anti-coordination: (two games)

In this game, the goal is to play a different move than your playmate. If then there’s no reason to prefer one move over another, but if they’re not equal there’ll be some maneuvering around who gets which reward. If you’re not happy with the outcome, then changing the move you play will harm your playmate more than it harms you. The Nash equilibria are when you play different moves, and these are socially optimal.

Correction: if you're not happy with the outcome, changing your move will harm your playmate more than it harms you if , and harm you more than them if .

Also, Battle of the Sexes is an instance of this game if you relabel the moves. If you label them "do the thing you/they prefer", you get . ( is "we both do my thing", is "we both do their thing", is "I do my thing and they do theirs" and is "I do their thing and they do mine". if going alone to either is equally bad.) If you label them "do the thing they/you prefer", you get . You won't get . (That would imply payoffs like: I most want to do "my thing" together. Failing that I want to do "their thing" together. But if we do go separately, I'd rather do "their thing" than "my thing". Which wouldn't be a BoS.)

comment by Jerdle (daniel-amdurer) · 2021-06-26T04:08:29.509Z · LW(p) · GW(p)

X>W>Z>Y is interesting. It's similar to a PD, and so it appears that many of the same systems that evolved to enforce cooperation in PDs misfire in those cases and end up with Z (based on some observation, not any good evidence). However, unlike in a PD, Z is a worse outcome than the purely selfish X.

If a PD has the option to transfer value to your playmate and create more value, a XWZY (aka Deadlock) has the option to transfer value to your playmate inefficiently, so, in the inefficient state Z, both people are sacrificing to benefit the other, and yet each would be better off if the norm of sacrifice were not present.

EDIT: All of this assumes 2W > X + Y.

comment by MikkW (mikkel-wilson) · 2020-07-07T02:40:43.529Z · LW(p) · GW(p)

I like the analysis, but W, X, Y, Z is confusing terminology- it'd be easier to read and process if I didn't have to constantly remind myself which situation each variable corresponds to