The Schelling Choice is "Rabbit", not "Stag"

post by Raemon · 2019-06-08T00:24:53.568Z · 52 comments


Followup/distillation/alternate-take on Duncan Sabien's Dragon Army Retrospective and Open Problems in Group Rationality.

There's a particular failure mode I've witnessed, and fallen into myself:

I see a problem. I see what seems to me to be an obvious solution to the problem. If only everyone Took Action X, we could Fix Problem Z. So I start X-ing, and maybe talking about how other people should start X-ing. Action X takes some effort on my part, but it's obviously worth it.

And yet... nobody does. Or not enough people do. And a few months later, here I am, still taking Action X and feeling burned and frustrated.

Or –

– the problem is that everyone is taking Action Y, which directly causes Problem Z. If only everyone would stop Y-ing, Problem Z would go away. Action Y seems obviously bad; clearly we should be on the same page about this. So I start pointing it out to people when they're doing Action Y, and expect them to stop.

They don't stop.

So I start subtly socially punishing them for it.

They don't stop. What's more... now they seem to be punishing me.

I find myself getting frustrated, perhaps angry. What's going on? Are people wrong-and-bad? Do they have wrong-and-bad beliefs?

Alas. So far in my experience it hasn't been that simple.

A recap of 'Rabbit' vs 'Stag'

I'd been planning to write this post for years. Duncan Sabien went ahead and wrote it before I got around to it. But, Dragon Army Retrospective and Open Problems in Group Rationality are both lengthy posts with a lot of points, and it still seemed worth highlighting this particular failure mode in a single post.

I used to think a lot in terms of Prisoner's Dilemma, and "Cooperate"/"Defect." I'd see problems that could easily be solved if everyone just put a bit of effort in, which would benefit everyone. And people didn't put the effort in, and this felt like a frustrating, obvious coordination failure. Why do people defect so much?

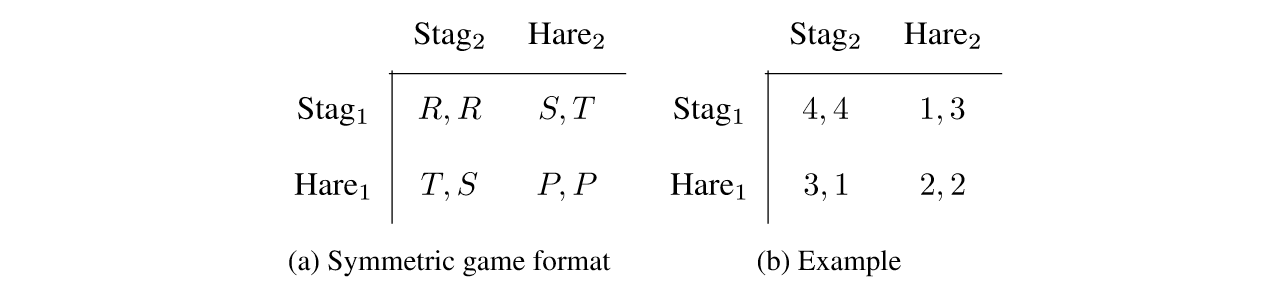

Eventually Duncan shifted towards using Stag Hunt rather than Prisoner's Dilemma as the model here. If you haven't read it before, it's worth reading the description in full. If you're familiar with it, you can skip to my current thoughts below.

My new favorite tool for modeling this is stag hunts, which are similar to prisoner’s dilemmas in that they contain two or more people each independently making decisions which affect the group. In a stag hunt:

—Imagine a hunting party venturing out into the wilderness.

— Each player may choose stag or rabbit, representing the type of game they will try to bring down.

— All game will be shared within the group (usually evenly, though things get more complex when you start adding in real-world arguments over who deserves what).

— Bringing down a stag is costly and effortful, and requires coordination, but has a large payoff. Let’s say it costs each player 5 points of utility (time, energy, bullets, etc.) to participate in a stag hunt, but a stag is worth 50 utility (in the form of food, leather, etc.) if you catch one.

— Bringing down rabbits is low-cost and low-effort and can be done unilaterally. Let’s say it only costs each player 1 point of utility to hunt rabbit, and you get 3 utility as a result.

— If any player unexpectedly chooses rabbit while others choose stag, the stag escapes through the hole in the formation and is not caught. Thus, if five players all choose stag, they lose 25 utility and gain 50 utility, for a net gain of 25 (or +5 apiece). But if four players choose stag and one chooses rabbit, they lose 21 utility and gain only 3.

This creates a strong pressure toward having the Schelling choice be rabbit. It’s saner and safer (spend 5, gain 15, net gain of 10 or +2 apiece), especially if you have any doubt about the other hunters’ ability to stick to the plan, or the other hunters’ faith in the other hunters, or in the other hunters’ current resources and ability to even take a hit of 5 utility, or in whether or not the forest contains a stag at all.
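The party-level arithmetic above is easy to check mechanically. Below is a minimal Python sketch that assumes, as the description does, that all game is pooled and that a single rabbit-hunter lets the stag escape. The function name `group_net` and the `"stag"`/`"rabbit"` labels are illustrative choices, not anything from the original; the payoff constants are the ones given in the description.

```python
# Payoff constants taken from the stag hunt description above.
STAG_COST, STAG_VALUE = 5, 50
RABBIT_COST, RABBIT_VALUE = 1, 3


def group_net(choices):
    """Net utility for the whole party: everything caught minus everything spent."""
    cost = sum(STAG_COST if c == "stag" else RABBIT_COST for c in choices)
    rabbits = choices.count("rabbit")
    # The stag escapes if even one hunter breaks formation to chase rabbit.
    stag_caught = "stag" in choices and rabbits == 0
    caught = (STAG_VALUE if stag_caught else 0) + RABBIT_VALUE * rabbits
    return caught - cost


scenarios = {
    "all stag": ["stag"] * 5,
    "all rabbit": ["rabbit"] * 5,
    "one hunter picks rabbit": ["stag"] * 4 + ["rabbit"],
}
for name, choices in scenarios.items():
    net = group_net(choices)
    print(f"{name}: total {net:+}, per player {net / len(choices):+.1f}")
# all stag: total +25, per player +5.0
# all rabbit: total +10, per player +2.0
# one hunter picks rabbit: total -18, per player -3.6
```

The failed hunt comes out at -18 for the group (21 spent, 3 caught), which is the gap between "+5 apiece" and "+2 apiece" turning into an outright loss.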

Let’s work through a specific example. Imagine that the hunting party contains the following five people:

Alexis (currently has 15 utility “in the bank”)

Blake (currently has 12)

Cameron (9)

Dallas (6)

Elliott (5)

If everyone successfully coordinates to choose stag, then the end result will be positive for everyone. The stag costs everyone 5 utility to bring down, and then its 50 utility is divided evenly so that everyone gets 10, for a net gain of 5. The array [15, 12, 9, 6, 5] has bumped up to [20, 17, 14, 11, 10].

If everyone chooses rabbit, the end result is also positive, though less excitingly so. Rabbits cost 1 to hunt and provide 3 when caught, so the party will end up at [17, 14, 11, 8, 7].

But imagine the situation where a stag hunt is attempted, but unsuccessful. Let’s say that Blake quietly decides to hunt rabbit while everyone else chooses stag. What happens?

Alexis, Cameron, Dallas, and Elliott each lose 5 utility while Blake loses 1. The rabbit that Blake catches is divided five ways, for a total of 0.6 utility apiece. Now our array looks like [10.6, 11.6, 4.6, 1.6, 0.6].

(Remember, Blake only spent 1 utility in the first place.)

If you’re Elliott, this is a super scary result to imagine. You no longer have enough resources in the bank to be self-sustaining—you can’t even go out on another rabbit hunt, at this point.

And so, if you’re Elliott, it’s tempting to preemptively choose rabbit yourself. If there’s even a chance that the other players might defect on the overall stag hunt (because they’re tired, or lazy, or whatever) or worse, if there might not even be a stag out there in the woods today, then you have a strong motivation to self-protectively husband your resources. Even if it turns out that you were wrong about the others, and you end up being the only one who chose rabbit, you still end up in a much less dangerous spot: [10.6, 7.6, 4.6, 1.6, 4.6].

Now imagine that you’re Dallas, thinking through each of these scenarios. In both cases, you end up pretty screwed, with your total utility reserves at 1.6. At that point, you’ve got to drop out of any future stag hunts, and all you can do is hunt rabbit for a while until you’ve built up your resources again.
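The per-player arrays in this example can be reproduced the same way. This sketch hard-codes the five starting balances from the text; `hunt` and `stranded` are hypothetical helper names, and rounding to two decimals is only there to keep floating-point noise out of the printed arrays. The `stranded` check encodes the point that anyone below 1 utility can no longer afford even a rabbit hunt.

```python
STAG_COST, STAG_VALUE = 5, 50
RABBIT_COST, RABBIT_VALUE = 1, 3
NAMES = ["Alexis", "Blake", "Cameron", "Dallas", "Elliott"]
BANK = [15, 12, 9, 6, 5]


def hunt(bank, choices):
    """Each player's balance after one hunt, with all game split evenly."""
    rabbits = choices.count("rabbit")
    stag_caught = "stag" in choices and rabbits == 0
    pot = (STAG_VALUE if stag_caught else 0) + RABBIT_VALUE * rabbits
    share = pot / len(choices)
    return [
        round(b - (STAG_COST if c == "stag" else RABBIT_COST) + share, 2)
        for b, c in zip(bank, choices)
    ]


def stranded(balances):
    """Players who can no longer afford even a rabbit hunt."""
    return [n for n, b in zip(NAMES, balances) if b < RABBIT_COST]


blake_defects = ["stag", "rabbit", "stag", "stag", "stag"]
elliott_defects = ["stag", "stag", "stag", "stag", "rabbit"]

print(hunt(BANK, ["stag"] * 5))     # [20.0, 17.0, 14.0, 11.0, 10.0]
print(hunt(BANK, ["rabbit"] * 5))   # [17.0, 14.0, 11.0, 8.0, 7.0]
after = hunt(BANK, blake_defects)
print(after, stranded(after))       # [10.6, 11.6, 4.6, 1.6, 0.6] ['Elliott']
print(hunt(BANK, elliott_defects))  # [10.6, 7.6, 4.6, 1.6, 4.6]
```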

So as Dallas, you’re reluctant to listen to any enthusiastic plan to choose stag. You’ve got enough resources to absorb one failure, and so you don’t want to do a stag hunt until you’re really darn sure that there’s a stag out there, and that everybody’s really actually for real going to work together and try their hardest. You’re not opposed to hunting stag, you’re just opposed to wild optimism and wanton, frivolous burning of resources.

Meanwhile, if you’re Alexis or Blake, you’re starting to feel pretty frustrated. I mean, why bother coming out to a stag hunt if you’re not even actually willing to put in the effort to hunt stag? Can’t these people see that we’re all better off if we pitch in hard, together? Why are Dallas and Elliott preemptively talking about rabbits when we haven’t even tried catching a stag yet?

I’ve recently been using the terms White Knight and Black Knight to refer, not to specific people like Alexis and Elliott, but to the roles that those people play in situations requiring this kind of coordination. White Knight and Black Knight are hats that people put on or take off, depending on circumstances.

The White Knight is a character who has looked at what’s going on, built a model of the situation, decided that they understand the Rules, and begun to take confident action in accordance with those Rules. In particular, the White Knight has decided that the time to choose stag is obvious, and is already common knowledge/has the Schelling nature. I mean, just look at the numbers, right?

The White Knight is often wrong, because reality is more complex than the model even if the model is a good model. Furthermore, other people often don’t notice that the White Knight is assuming that everyone knows that it’s time to choose stag—communication is hard, and the double illusion of transparency is a hell of a drug, and someone can say words like “All right, let’s all get out there and do our best” and different people in the room can draw very different conclusions about what that means.

So the White Knight burns resources over and over again, and feels defected on every time someone “wrongheadedly” chooses rabbit, and meanwhile the other players feel unfairly judged and found wanting according to a standard that they never explicitly agreed to (remember, choosing rabbit should be the Schelling option, according to me), and the whole thing is very rough for everyone.

If this process goes on long enough, the White Knight may burn out and become the Black Knight. The Black Knight is a more mercenary character—it has limited resources, so it has to watch out for itself, and it’s only allied with the group to the extent that the group’s goals match up with its own. It’s capable of teamwork and coordination, but it’s not zealous. It isn’t blinded by optimism or patriotism; it’s there to engage in mutually beneficial trade, while taking into account the realities of uncertainty and unreliability and miscommunication.

The Black Knight doesn’t like this whole frame in which doing the safe and conservative thing is judged as “defection.” It wants to know who this White Knight thinks he is, that he can just declare that it’s time to choose stag, without discussion or consideration of cost. If anyone’s defecting, it’s the White Knight, by going around getting mad at people for following local incentive gradients and doing the predictable thing.

But the Black Knight is also wrong, in that sometimes you really do have to be all-in for the thing to work. You can’t always sit back and choose the safe, calculated option—there are, sometimes, gains that can only be gotten if you have no exit strategy and leave everything you’ve got on the field.

I don’t have a solution for this particular dynamic, except for a general sense that shining more light on it (dignifying both sides, improving communication, being willing to be explicit, making it safe for both sides to be explicit) will probably help. I think that a “technique” which zeroes in on ensuring shared common-knowledge understanding of “this is what’s good in our subculture, this is what’s bad, this is when we need to fully commit, this is when we can do the minimum” is a promising candidate for defusing the whole cycle of mutual accusation and defensiveness.

(Circling with a capital “C” seems to be useful for coming at this problem sideways, whereas mission statements and manifestos and company handbooks seem to be partially-successful-but-high-cost methods of solving it directly.)

The key conceptual difference that I find helpful here is acknowledging that "Rabbit" and "Stag" are both positive choices that bring about utility. "Defect" feels like it brings in connotations that aren't always accurate.

Saying that you're going to pay rent on time, and then not, is defecting.

But if someone shows up saying "hey let's all do Big Project X" and you're not that enthusiastic about Big Project X but you sort of nod noncommittally, and then it turns out they thought you were going to put 10 hours of work into it and you thought you were going to put in 1, and then they get mad at you... I think it's more useful to think of this as "choosing rabbit" than "defecting."

Likewise, it's "rabbit" if you say "nah, I just don't think Big Project X is important". Going about your own projects and not signing up for every person's crusade is a perfectly valid action.

Likewise, it's "rabbit" if you say "look, I realize we're in a bad equilibrium right now and it'd be better if we all switched to A New Norm. But right now the Norm is X, and unless you are actually sure that we have enough buy-in for The New Norm, I'm not going to start doing a costly thing that I don't think is even going to work."

A lightweight, but concrete example

At my office, we have Philosophy Fridays*, where we try to get in sync about important underlying philosophical and strategic concepts. What is our organization for? How does it connect to the big picture? What individual choices about particular site features are going to bear on that big picture?

We generally agree that Philosophy Friday is important. But often, we seem to disagree a lot about the right way to go about it.

In a recent example: it often felt to me that our conversations were sort of meandering and inefficient. Meandering conversations that don't go anywhere are a stereotypical rationalist failure mode. I do it a lot by default myself. I wish that people would punish me when I'm steering into 'meandering mode'.

So at some point I said 'hey this seems kinda meandering.'

And it kinda meandered a bit more.

And I said, in a move designed to be somewhat socially punishing: "I don't really trust the conversation to go anywhere useful." And then I took out my laptop and mostly stopped paying attention.

And someone else on the team responded, eventually, with something like "I don't know how to fix the situation because you checked out a few minutes ago and I felt punished and wanted to respond but then you didn't give me space to."

"Hmm," I said. I don't remember exactly what happened next, but eventually he explained:

Meandering conversations were important to him because they gave him space to actually think. I pointed to examples of meetings that I thought had gone well, that ended with Google Docs full of what I thought had been useful ideas and developments. And he said "those all seemed like examples of mediocre meetings to me – we had a lot of ideas, sure. But I didn't feel like I actually got to come to a real decision about anything important."

"Meandering" quality allowed a conversation to explore subtle nuances of things, to fully explore how a bunch of ideas would intersect. And this was necessary to eventually reach a firm conclusion, to leave behind the niggling doubts of "is this *really* the right path for the organization?" so that he could firmly commit to a longterm strategy.

We still debate the right way to conduct Philosophy Friday at the office. But now we have a slightly better frame for that debate, and awareness of the tradeoffs involved. We discuss ways to get the good elements of the "meandering" quality while still making sure to end with clear next-actions. And we discuss alternate modes of conversation we can intelligently shift between.

There was a time when I would have preemptively gotten really frustrated, and started rationalizing reasons why my teammate was willfully pursuing a bad conversational norm. Fortunately, I had thought enough about this sort of problem that I noticed I was falling into a failure mode, and shifted mindsets.

Rabbit in this case was "everyone just sort of pursues whatever conversational types seem best to them in an uncoordinated fashion", and Stag is "we deliberately choose and enforce particular conversational norms."

We haven't yet coordinated enough to really have a "stag" option we can coordinate around. But I expect that the conversational norms we eventually settle into will be better than if we had naively enforced either my or my teammate's preferred norms.

Takeaways

There seem to me to be a couple of important takeaways here.

One is that, yes:

Sometimes stag hunts are worth it.

I'd like people in my social network to be aware that sometimes, it's really important for everyone to adopt a new norm, or for everyone to throw themselves 100% into something, or for a whole lot of person-hours to get thrown into a project.

When discussing whether to embark on a stag hunt, it's useful to have shorthand to communicate why you might ever want to put a lot of effort into a concerted, coordinated effort. And then you can discuss the tradeoffs seriously.

I have more to say about what sort of stag hunts seem do-able. But for this post I want to focus primarily on the fact that...

The Schelling option is Rabbit

Some communities have established particular norms favoring 'stag'. But in modern, atomic, Western society you should probably not assume this as a default. If you want people to choose stag, you need to spend special effort building common knowledge that Big Project X matters, and is worthwhile to pursue, and get everyone on board with it.

Corollary: Creating common knowledge is hard. If you haven't put in that work, you should assume Big Project X is going to fail, and/or that it will require a few people putting in herculean effort "above their fair share", which may not be sustainable for them.

This depends on whether effort is fungible. If you need 100 units of effort, you can make do with one person putting in 100 units of effort. If you need everyone to adopt a new norm that they haven't bought into, it just won't work.
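The fungible/non-fungible distinction amounts to two different success conditions. This is a deliberately crude sketch: the 100-unit threshold comes from the text, but treating norm adoption as an all-or-nothing requirement (`all(...)`) is a simplifying assumption, and both function names are hypothetical.

```python
def fungible_project_succeeds(efforts, required=100):
    """Effort is interchangeable, so one person can cover everyone's share."""
    return sum(efforts) >= required


def norm_adoption_succeeds(adopted):
    """Simplifying assumption: a new norm only works if everyone adopts it."""
    return all(adopted)


# One herculean contributor is enough when effort is fungible...
print(fungible_project_succeeds([100, 0, 0, 0]))          # True
# ...but no amount of extra effort from the adopters rescues a norm
# that one person never bought into.
print(norm_adoption_succeeds([True, True, True, False]))  # False
```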

If you are proposing what seems (to you) quite sensible, but nobody seems to agree...

...well, maybe people are being biased in some way, or motivated to avoid considering your proposed stag-hunt. People sure do seem biased about things, in general, even when they know about biases. So this may well be part of the issue.

But I think it's quite likely that you're dramatically underestimating the inferential distance – both the distance between their outlook and "why your proposed action is good", as well as the distance between your outlook and "why their current frame is weighing tradeoffs very differently than your current frame."

Much of the time, I feel like getting angry and frustrated... is something like "wasted motion" or "the wrong step in the dance."

Not entirely – anger and frustration are useful motivators. They help me notice that something about the status quo is wrong and needs fixing. But I think the specific flavor of frustration that stems from "people should be cooperating but aren't" is often, in some sense, actually wrong about reality. People are actually making reasonable decisions given the current landscape.

Anger and frustration help drive me to action, but often they come with a sort of tunnel vision. They lead me to dig in my heels, and get ready to fight – at a moment when what I really need is empathy and curiosity. I either need to figure out how to communicate better, to help someone understand why my plan is good. Or, I need to learn what tradeoffs I'm missing, which they can see more clearly than I.

My own strategies right now

In general, choose Rabbit.

- Keep around 30% slack in reserve (such that I can absorb not one, not two, but three major surprise costs without starting to burn out). Don't spend energy helping others if I've dipped below 30% for long – focus on making sure my own needs are met.

- Find local improvements I can make that don't require much coordination from others.

Follow rabbit trails into Stag* Country

Given a choice, seek out "Rabbit" actions that preferentially build option value for improved coordination later on.

- Metaphorically, this means "Follow rabbit trails that lead into *Stag-and-Rabbit Country", where I'll have opportunities to say:

  - "Hey guys I see a stag! Are we all 100% up for hunting it?" and then maybe it so happens we can stag hunt together.

  - Or, I can sometimes say, at small-but-manageable-cost-to-myself, "hey guys, I see a whole bunch of rabbits over there, you could hunt them if you want." And others can sometimes do the same for me.

- Sliiightly more concretely, this means:

  - Given the opportunity, and without requiring action on the part of other people, pursue actions that demonstrate my trustworthiness and that build bits of infrastructure that'll make it easier to work together in the future.

  - Help people out if I can do so without dipping below 30% slack for too long, especially if I expect it to increase the overall slack in the system.

(I'll hopefully have more to say about this in the future.)

Get curious about other people's frames

If a person and I have argued through the same set of points multiple times, each time expecting our points to be a solid knockdown of the other's argument... and if nobody has changed their mind...

Probably we are operating in two different frames. Communicating across frames is very hard, and beyond the scope of this post to teach. But cultivating curiosity and empathy is a good first step.

Occasionally run "Kickstarters for Stag Hunts." If people commit, hunt stag.

For example, the call-to-action in my Relationship Between the Village and Mission post (where I asked people to contact me if they were serious about improving the Village) was designed to give me information about whether it's possible to coordinate on a stag hunt to improve the Berkeley rationality village.

52 comments


comment by abramdemski · 2019-06-09T04:32:35.830Z

We should really be calling it Rabbit Hunt rather than Stag Hunt.

- The schelling choice is rabbit. Calling it stag hunt makes the stag sound schelling.

- The problem with stag hunt is that the schelling choice is rabbit. Saying of a situation "it's a stag hunt" generally means that the situation sucks because everyone is hunting rabbit. When everyone is hunting stag, you don't really bring it up. So, it would make way more sense if the phrase was "it's a rabbit hunt"!

- Well, maybe you'd say "it's a rabbit hunt" when referring to the bad equilibrium you're seeing in practice, and "it's a stag hunt" when saying that a better equilibrium is a utopian dream.

- So, yeah, calling the game "rabbit hunt" is a stag hunt.

I used to think a lot in terms of Prisoner's Dilemma, and "Cooperate"/"Defect." I'd see problems that could easily be solved if everyone just put a bit of effort in, which would benefit everyone. And people didn't put the effort in, and this felt like a frustrating, obvious coordination failure. Why do people defect so much?

Eventually Duncan shifted towards using Stag Hunt rather than Prisoner's Dilemma as the model here. If you haven't read it before, it's worth reading the description in full. If you're familiar you can skip to my current thoughts below.

In the book The Stag Hunt, Skyrms similarly says that lots of people use Prisoner's Dilemma to talk about social coordination, and he thinks people should often use Stag Hunt instead.

I think this is right. Most problems which initially seem like Prisoner's Dilemma are actually Stag Hunt, because there are potential enforcement mechanisms available. The problems discussed in Meditations on Moloch are mostly Stag Hunt problems, not Prisoner's Dilemma problems -- Scott even talks about enforcement, when he describes the dystopia where everyone has to kill anyone who doesn't enforce the terrible social norms (including the norm of enforcing).

This might initially sound like good news. Defection in Prisoner's Dilemma is an inevitable conclusion under common decision-theoretic assumptions. Trying to escape multipolar traps with exotic decision theories might seem hopeless. On the other hand, rabbit in Stag Hunt is not an inevitable conclusion, by any means.

Unfortunately, in reality, hunting stag is actually quite difficult. ("The schelling choice is Rabbit, not Stag... and that really sucks!")

Rabbit in this case was "everyone just sort of pursues whatever conversational types seem best to them in an uncoordinated fashion", and Stag is "we deliberately choose and enforce particular conversational norms."

This sounds a lot like Pavlov-style coordination vs Tit for Tat style coordination. Both strategies can defeat Moloch in theory, but they have different pros and cons. TfT-style requires agreement on norms, whereas Pavlov-style doesn't. Pavlov-style can waste a lot of time flailing around before eventually coordinating. Pavlov is somewhat worse at punishing exploitative behavior, but less likely to lose a lot of utility due to feuds between parties who each think they've been wronged and must distribute justice.
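The feud half of that tradeoff shows up in a few lines of simulation. This sketch uses the textbook definitions (TfT copies the opponent's last move; Pavlov is "win-stay, lose-shift") rather than anything specific to the linked post, and `play` with its single forced mistake is an illustrative assumption.

```python
def tit_for_tat(my_last, their_last):
    """Copy whatever the other player did last round."""
    return their_last


def pavlov(my_last, their_last):
    """Win-stay, lose-shift. With payoffs T > R > P > S this reduces to:
    cooperate iff both players made the same move last round."""
    return "C" if my_last == their_last else "D"


def play(strategy, rounds=8, error_round=2):
    """Two copies of `strategy`; player A defects by mistake exactly once."""
    a_moves, b_moves = ["C"], ["C"]  # both open by cooperating
    for t in range(1, rounds):
        a = strategy(a_moves[-1], b_moves[-1])
        b = strategy(b_moves[-1], a_moves[-1])
        if t == error_round:
            a = "D"  # the single accidental defection
        a_moves.append(a)
        b_moves.append(b)
    return "".join(a_moves), "".join(b_moves)


for name, strategy in [("TfT", tit_for_tat), ("Pavlov", pavlov)]:
    a, b = play(strategy)
    print(f"{name}: A={a} B={b}")
# TfT: A=CCDCDCDC B=CCCDCDCD
# Pavlov: A=CCDDCCCC B=CCCDCCCC
```

Under TfT the one mistake echoes back and forth indefinitely, a small-scale version of the feud dynamic; two Pavlov players lose one round to mutual defection and then return to cooperation. The flip side, not shown here: against an opponent who always defects, Pavlov drifts back to cooperating every other round, whereas TfT is exploited only once.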

When discussing whether to embark on a stag hunt, it's useful to have shorthand to communicate why you might ever want to put a lot of effort into a concerted, coordinated effort. And then you can discuss the tradeoffs seriously.

[...]

Much of the time, I feel like getting angry and frustrated... is something like "wasted motion" or "the wrong step in the dance."

Not really strongly contradicting you, but I remember Critch once outlined something like the following steps for getting out of bad equilibria. (This is almost definitely not the exact list of steps he gave; I think there were 3 instead of 4 -- but step #1 was definitely in there.)

1. Be the sort of person who can get frustrated at inefficiencies.

2. Observe the world a bunch. Get really curious about the ins and outs of the frustrating inefficiencies you notice; understand how the system works, and why the inefficiencies exist.

3. Make a detailed plan for a better equilibrium. Justify why it is better, and why it is worth the effort/resources to do this. Spend time talking to the interested parties to get feedback on this plan.

4. Finally, formally propose the plan for approval. This could mean submitting a grant proposal to a relevant funding organization, or putting something up for a vote, or other things. This is the step where you are really trying to step into the better equilibrium, which means getting credible backing for taking the step (perhaps a letter signed by a bunch of people, or a formal vote), and creating common knowledge between relevant parties (making sure everyone can trust that the new equilibrium is established). It can also mean some kind of official deliberation has to happen, depending on context (such as a vote, or some kind of due-diligence investigation, or an external audit, etc).

↑ comment by philh · 2019-06-09T12:18:55.728Z

Most problems which initially seem like Prisoner’s Dilemma are actually Stag Hunt, because there are potential enforcement mechanisms available.

I'm not sure I follow, can you elaborate?

Is the idea that everyone can attempt to enforce norms of "cooperate in the PD" (stag), or not enforce those norms (rabbit)? And if you have enough "stag" players to successfully "hunt a stag", then defecting in the PD becomes costly and rare, so the original PD dynamics mostly drop out?

If so, I kind of feel like I'd still model the second level game as a PD rather than a stag hunt? I'm not sure though, and before I chase that thread, I'll let you clarify whether that's actually what you meant.

↑ comment by abramdemski · 2019-06-09T22:15:22.639Z

By "is a PD", I mean, there is a cooperative solution which is better than any Nash equilibrium. In some sense, the self-interest of the players is what prevents them from getting to the better solution.

By "is a SH", I mean, there is at least one good cooperative solution which is an equilibrium, but there are also other equilibria which are significantly worse. Some of the worse outcomes can be forced by unilateral action, but the better outcomes require coordinated action (and attempted-but-failed coordination is even worse than the bad solutions).

In iterated PD (with the right assumptions, eg appropriately high probabilities of the game continuing after each round), tit-for-tat is an equilibrium strategy which results in a pure-cooperation outcome. The remaining difficulty of the game is the difficulty of ending up in that equilibrium. There are many other equilibria which one could equally well end up in, including total mutual defection. In that sense, iteration can turn a PD into a SH.

Other modifications, such as commitment mechanisms or access to the other player's source code, can have similar effects.
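These definitions can be checked mechanically by listing the pure-strategy Nash equilibria of a two-player game. In this sketch the PD payoffs are the usual textbook values, the stag hunt is a two-player adaptation of the post's numbers (an assumption: the post only works through five players), and the enforcement penalty is hypothetical, standing in for the enforcement and commitment mechanisms mentioned above.

```python
from itertools import product


def symmetric_game(reward, sucker, temptation, punishment, coop="C", defect="D"):
    """Payoff table {(row_move, col_move): (row_payoff, col_payoff)}."""
    return {
        (coop, coop): (reward, reward),
        (coop, defect): (sucker, temptation),
        (defect, coop): (temptation, sucker),
        (defect, defect): (punishment, punishment),
    }


def pure_nash_equilibria(game):
    """Move pairs where neither player gains by unilaterally switching."""
    moves = sorted({row for row, _ in game})
    return [
        (r, c)
        for r, c in product(moves, repeat=2)
        if all(game[(r2, c)][0] <= game[(r, c)][0] for r2 in moves)
        and all(game[(r, c2)][1] <= game[(r, c)][1] for c2 in moves)
    ]


# Prisoner's Dilemma with textbook payoffs T=5 > R=3 > P=1 > S=0.
pd = symmetric_game(reward=3, sucker=0, temptation=5, punishment=1)
# Assumed two-player version of the post's hunt: stag nets 50/2 - 5 = 20 each;
# a lone stag hunter pays 5 and gets half the other's rabbit (1.5); a lone
# rabbit hunter pays 1 and keeps 1.5; mutual rabbit nets 2 each.
sh = symmetric_game(reward=20, sucker=-3.5, temptation=0.5, punishment=2,
                    coop="Stag", defect="Rabbit")
# PD plus a hypothetical enforcement penalty of 3 on defecting against a cooperator.
pd_enforced = symmetric_game(reward=3, sucker=0, temptation=5 - 3, punishment=1)

print(pure_nash_equilibria(pd))           # [('D', 'D')]
print(pure_nash_equilibria(sh))           # [('Rabbit', 'Rabbit'), ('Stag', 'Stag')]
print(pure_nash_equilibria(pd_enforced))  # [('C', 'C'), ('D', 'D')]
```

The PD has a single equilibrium, mutual defection, even though mutual cooperation pays both players more. The stag hunt has two, and nothing in the payoffs alone says which one a group lands in. Subtracting a large enough penalty from the temptation payoff gives the PD the same two-equilibrium shape, which is one way to read the claim that enforcement turns many apparent PDs into stag hunts.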

↑ comment by philh · 2019-06-10T22:02:51.424Z

Thanks, that makes sense.

Rambling:

In the specific case of iteration, I'm not sure that works so well for multiplayer games? It would depend on details, but e.g. if a player's only options are "cooperate" or "defect against everyone equally", then... mm, I guess "cooperate iff everyone else cooperated last round" is still stable, just a lot more fragile than with two players.

But you did say it's difficult, so I don't think I'm disagreeing with you. The PD-ness of it still feels more salient to me than the SH-ness, but I'm not sure that particularly means anything.

I think actually, to me the intuitive core of a PD is "players can capture value by destroying value on net". And I hadn't really thought about the core of SH prior to this post, but I think I was coming around to something like threshold effects; "players can try to capture value for themselves [it's not really important whether that's net positive or net negative]; but at a certain fairly specific point, it's strongly net negative". Under these intuitions, there's nothing stopping a game from being both PD and SH.

Not sure I'm going anywhere with this, and it feels kind of close to just arguing over definitions.
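The fragility of "cooperate iff everyone else cooperated last round" in a multiplayer game can be put in rough numbers. Under that rule a single slip is enough: the next round everyone else defects, and the round after that everyone does. This sketch assumes each player slips independently with the same small probability each round; the 1% slip rate and both function names are illustrative assumptions.

```python
def p_collapse_per_round(n_players, slip_rate):
    """Chance that at least one player accidentally defects in a given round."""
    return 1 - (1 - slip_rate) ** n_players


def expected_rounds_of_cooperation(n_players, slip_rate):
    """Mean number of rounds up to and including the first slip (geometric
    distribution); after that slip the rule collapses into mutual defection."""
    return 1 / p_collapse_per_round(n_players, slip_rate)


for n in (2, 10, 50):
    print(n, round(expected_rounds_of_cooperation(n, slip_rate=0.01), 1))
# 2 50.3
# 10 10.5
# 50 2.5
```

The equilibrium still exists at every group size, but the expected time before something breaks it shrinks roughly in proportion to the number of players.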

comment by Benquo · 2019-06-13T15:33:20.214Z

It seems to me like the underlying situation is not that all stag hunt games are played separately with the same dynamics, but that there are lots of simultaneous stag hunt games being played, such that figuring out which stags to coordinate on first is a big problem, and in some games in some cultures the coordination point really is Stag, but Rabbit in the rest of them.

If I were to opportunistically steal stuff I like a lot from my casual acquaintances, people would come down hard to defend their stag hunt in that domain. In other games I've noticed variation. The people I know outside the SF Bay Area maintain households that can sustain extended guest-host interactions in ways that can gradually create rich, embodied social bonds among people who don't have hobbies or mass media consumption preferences in common, but no one puts effort into that in the Bay as far as I can tell, which seems like the Rabbit equilibrium there.

comment by Raemon · 2021-01-20T04:28:37.281Z

Self Review.

I still endorse the broad thrusts of this post. But I think it should change at least somewhat. I'm not sure how extensively, but here are some considerations:

Clearer distinctions between Prisoner's Dilemma and Stag Hunts

I should be clearer about what game-theoretic distinctions I'm actually drawing between Prisoner's Dilemma and Stag Hunt. I think Rob Bensinger rightly criticized the current wording, which equivocates between "stag hunting is meaningfully different" and "'hunting rabbit' has nicer aesthetic properties than 'defect'".

I think Turntrout spelled out in the comments why it's meaningful to think in terms of stag hunts. I'm not sure it's the post's job to lay it out in the exhaustive detail that his comment does, but it should at least gesture at the idea.

Future Work: Explore a lot of coordination failures and figure out what the actual most common rules / payoff structures are.

Stag Hunting is relevant sometimes, but not always. I think it's probably more relevant than Prisoner's Dilemma, which is a step up, but I think it's worth actually checking which game theory archetypes are most relevant most of the time.

Reworked Example

Some commenters noted that my proposed stag hunt... wasn't a stag hunt. I think that's actually kind of the point (i.e. most things that look like stag hunts are more complicated than you think, and people may not agree on the utility payoff). Coming up with good examples is hard, but at the very least the post should make it clearer that no, my originally intended Stag Hunt did not have the appropriate payoff matrix after all.

What's the correct title?

While I endorse most of the models and gears in this post, I... have mixed feelings about the title. I'm not actually sure what the key takeaway of the post is meant to be. Abram's comment gets at some of the issues here. Benquo also notes that we do have plenty of stag hunts where the Schelling choice is Stag (e.g. don't murder).

I think there were two primary intended 'negative' points (i.e. "things you SHOULDN'T do"):

- Don't hunt stag unsustainably. I've seen a bunch of people get burned out and frustrated from hitting the Stag button and then not having enough support. Either make sure you have buy-in, or only hunt stag when you can afford to lose.

- Be careful punishing people when they choose Rabbit. Often, you're just wrong about either your proposal being a stag hunt, or about people having common knowledge that your proposal is good. (Punishing in a way people consider unjust often shuts down coordination potential.) I think this is a fairly complex point that this post doesn't quite address.

There are multiple positive-advice-takeaways you might have from this post, but they depend a lot on what situation you're actually in.

comment by Thrasymachus · 2019-06-08T16:12:44.028Z

It's perhaps worth noting that if you add in some chance of failure (e.g. even if everyone goes stag, there's a 5% chance of ending up -5, so Elliott might be risk-averse enough to decline even if they knew everyone else was going for sure), or some unevenness in allocation (e.g. maybe you can keep rabbits to yourself, or the stag-hunt-proposer gets more of the spoils), this further strengthens the suggested takeaways. People often aren't defecting/being insufficiently public spirited/heroic/cooperative if they aren't 'going to hunt stags with you', but are sceptical of the upside and/or more sensitive to the downsides.

One option (as you say) is to try and persuade them the value prop is better than they think. Another worth highlighting is whether there are mutually beneficial deals one can offer them to join in. If we adapt Duncan's stag hunt to have a 5% chance of failure even if everyone goes, there's some efficient risk-balancing option A-E can take (e.g. A-C pool together to offer some insurance to D-E if they go on a failed hunt with them).
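To make Thrasymachus's risk-aversion and insurance points concrete, here is a minimal Python sketch. Only the 5% chance of ending at -5 comes from the comment; the sure rabbit payoff of 1, the successful stag payoff of 3, the constant-absolute-risk-aversion utility -exp(-a*x), and the risk-aversion values `a` are all hypothetical assumptions. "Insurance" is modeled, again hypothetically, as A-C fully covering the -5 loss.

```python
import math

# Hypothetical payoffs: only the 5% chance of ending at -5 comes from the comment.
RABBIT = 1.0                                    # sure payoff from hunting rabbit
STAG_HUNT = [(0.95, 3.0), (0.05, -5.0)]         # (probability, payoff) even if everyone goes
INSURED_STAG_HUNT = [(0.95, 3.0), (0.05, 0.0)]  # hypothetical: A-C cover the loss


def expected_value(lottery):
    return sum(p * x for p, x in lottery)


def certainty_equivalent(lottery, a):
    """Sure payoff that a CARA agent (utility -exp(-a*x), a > 0) finds equally good."""
    expected_disutility = sum(p * math.exp(-a * x) for p, x in lottery)
    return -math.log(expected_disutility) / a


def joins_hunt(lottery, a):
    """Join only if the hunt is worth more than the sure rabbit to this agent."""
    return certainty_equivalent(lottery, a) > RABBIT


print(expected_value(STAG_HUNT))  # about 2.6, well above RABBIT
for a in (0.1, 1.0):
    # prints "0.1 True True", then "1.0 False True"
    print(a, joins_hunt(STAG_HUNT, a), joins_hunt(INSURED_STAG_HUNT, a))
```

Under these assumed numbers, a mildly risk-averse Elliott (a = 0.1) joins either way, a strongly risk-averse one (a = 1.0) declines the uninsured hunt despite its higher expected value, and the insurance deal brings them back in.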

[Minor: one of the downsides of 'choosing rabbit/stag' talk is it implies the people not 'joining in' agree with the proposer that they are turning down a (better-EV) 'stag' option.]


↑ comment by Ratheka · 2019-06-08T16:33:29.148Z

Agree - talking things out, making everything as common knowledge as possible, and people who strongly value the harder path and who have resources committing some to fence off the worst cases of failure, seem to be necessary prerequisites to staghunting.

comment by Ben Pace (Benito) · 2020-12-05T08:36:31.374Z

I gained a lot from reading this post. It helped move a bunch of my thinking away from “people are defecting” to “it’s hard to get people to coordinate around a single vision when it’s risky and their alternatives look good”. It’s definitely permeated my thinking on this subject.

↑ comment by Rob Bensinger (RobbBB) · 2020-12-05T11:56:24.486Z

It helped move a bunch of my thinking away from “people are defecting” to “it’s hard to get people to coordinate around a single vision when it’s risky and their alternatives look good”.

This makes it sound like the key difference is that defecting is antisocial (or something), while stag hunts are more of an understandable tragic outcome for well-intentioned people.

I think this is a bad way of thinking about defection: PD-defection can be prosocial, and cooperation can be antisocial/harmful/destructive.

I worry this isn't made clear enough in the OP? E.g.:

"Defect" feels like it brings in connotations that aren't always accurate.

If "Defect" has the wrong connotations, that seems to me like a reason to pick a different label for the math, rather than switching to different math. The math of PDs and of stag hunts doesn't know what connotations we're ascribing, so we risk getting very confused if we start using PDs and/or stag hunts as symbols for something that only actually exists in our heads (e.g., 'defection in Prisoner's Dilemmas is antisocial because I'm assuming an interpretation that isn't in the math').

See also zulupineapple's comment.

This isn't to say that I think stag hunts aren't useful to think about; but if a post about this ended up in the Review I'd want it to be very clear and precise about distinguishing a game's connotations from its denotations, and ideally use totally different solutions for solving 'this has the wrong connotation' and 'this has the wrong denotation'.

(I haven't re-read the whole post recently, so it's possible the post does a good job of this and I just didn't notice while skimming.)

↑ comment by TurnTrout · 2020-12-05T17:37:24.849Z

If "Defect" has the wrong connotations, that seems to me like a reason to pick a different label for the math, rather than switching to different math.

I think that this is often an issue of differing beliefs among the players and different weightings over player payoffs. In "What Counts as Defection?", I wrote:

Informal definition. A player defects when they increase their personal payoff at the expense of the group.

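I went on to formalize this as:

Definition. Player $i$'s action $a \in A_i$ is a defection against strategy profile $s$ and weighting $(\alpha_j)_{j=1,\dots,n}$ if

- Personal gain: $P_i(a, s_{-i}) > P_i(s_i, s_{-i})$

- Social loss: $\sum_j \alpha_j P_j(a, s_{-i}) < \sum_j \alpha_j P_j(s_i, s_{-i})$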

Under this model, this implies two potential sources of disagreement about defections:

- Disagreement in beliefs. You think everyone agreed to hunt stag, I'm not so sure; I hunt rabbit, and you say I defected; I disagree. Under your beliefs (the strategy profile you thought we'd agreed to follow), it was a defection. You thought we'd agreed on the hunt-stag profile. In fact, it was worse than a defection, because there wasn't personal gain for me - I just sabotaged the group because I was scared (condition 2 above).

Under my beliefs, it wasn't a defection - I thought it was quite unlikely that we would all hunt stag, and so I salvaged the situation by hunting rabbit.

- Disagreement in weighting. There might be an implicit social contract - if we both did half the work on a project, it would be a defection for me to take all of the credit. But if there's no implicit agreement and we're "just" playing a constant-sum game, that would just be me being rational. Tough luck, it's a tough world out there in those normal-form games!
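The "disagreement in beliefs" case can be made concrete with a minimal Python sketch of the two-condition test (personal gain for the deviating player, weighted social loss for the group). This is an illustration, not TurnTrout's own code: the payoffs R=3, T=2, P=2, S=0, the equal weights (1, 1), and the names `expected_payoffs` and `is_defection` are hypothetical, and a profile is given as each player's probability of hunting stag (index 1 is player 2).

```python
from itertools import product

STAG, HARE = "stag", "hare"

# Hypothetical stag-hunt payoffs with R > T >= P > S.
R, T, P, S = 3, 2, 2, 0
# PAYOFFS[(move of player 1, move of player 2)] = (payoff 1, payoff 2)
PAYOFFS = {
    (STAG, STAG): (R, R),
    (STAG, HARE): (S, T),
    (HARE, STAG): (T, S),
    (HARE, HARE): (P, P),
}


def expected_payoffs(profile):
    """profile[i] = probability that player i hunts stag (index 0 is player 1)."""
    totals = [0.0, 0.0]
    for moves in product((STAG, HARE), repeat=2):
        prob = 1.0
        for i, move in enumerate(moves):
            prob *= profile[i] if move == STAG else 1 - profile[i]
        for i, payoff in enumerate(PAYOFFS[moves]):
            totals[i] += prob * payoff
    return totals


def is_defection(i, action, profile, weights):
    """Personal gain for player i AND weighted social loss, relative to `profile`."""
    deviated = list(profile)
    deviated[i] = 1.0 if action == STAG else 0.0
    before, after = expected_payoffs(profile), expected_payoffs(deviated)
    personal_gain = after[i] > before[i]
    social_loss = (sum(w * u for w, u in zip(weights, after))
                   < sum(w * u for w, u in zip(weights, before)))
    return personal_gain and social_loss


EQUAL = (1, 1)
print(is_defection(1, HARE, (1.0, 1.0), EQUAL))  # False: social loss, but no personal gain
print(is_defection(1, HARE, (0.4, 1.0), EQUAL))  # True: gain for player 2, loss for the group
print(is_defection(1, HARE, (0.2, 1.0), EQUAL))  # False: hare now raises the group total too
```

The same action (player 2 hunting hare) is judged differently depending on the assumed probability that player 1 hunts stag, which is the belief disagreement described above. The first case is the "worse than a defection" one: the group loses and player 2 gains nothing.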

Speculation: This explains why it can sometimes feel right to defect in PD. This need not be because of our "terminal values" agreeing with the other player (i.e. I'd feel bad if Bob went to jail for 10 years), but because the rightness is likely judged by the part of our brain that helps us "be the reliable kind of person with whom one can cooperate" by making us feel bad for transgressions/defections, even against someone with orthogonal terminal values. If there's no (implicit) contract, then that bad feeling might not pop up.

I think this explains (at least part of) why defection in stag hunt can "feel different" than defection in PD.

↑ comment by Raemon · 2020-12-05T20:25:42.847Z

I'm mulling this over in the context of "How should the review even work for concepts that have continued to get written-on since 2018?". I notice that the ideal version of the "Schelling Choice is Rabbit" post relies a bit on both "What Counts as Defection?" and "Most prisoner's dilemmas are stag hunts, most stag hunts are battles of the sexes", which both came later.

(I think the general principle of "try during the review to holistically review sequences/followups/concepts" makes sense. But I still feel confused about how to actually operationalize that such that the process is clear and outputs a coherent product)

↑ comment by Kaj_Sotala · 2020-12-05T14:42:54.760Z

The True Prisoner's Dilemma seems useful to link here.

In this case, we really do prefer the outcome (D, C) to the outcome (C, C), leaving aside the actions that produced it. We would vastly rather live in a universe where 3 billion humans were cured of their disease and no paperclips were produced, rather than sacrifice a billion human lives to produce 2 paperclips. It doesn't seem right to cooperate, in a case like this. It doesn't even seem fair - so great a sacrifice by us, for so little gain by the paperclip maximizer? And let us specify that the paperclip-agent experiences no pain or pleasure - it just outputs actions that steer its universe to contain more paperclips. The paperclip-agent will experience no pleasure at gaining paperclips, no hurt from losing paperclips, and no painful sense of betrayal if we betray it.

What do you do then? Do you cooperate when you really, definitely, truly and absolutely do want the highest reward you can get, and you don't care a tiny bit by comparison about what happens to the other player? When it seems right to defect even if the other player cooperates?

That's what the payoff matrix for the true Prisoner's Dilemma looks like - a situation where (D, C) seems righter than (C, C).

↑ comment by Ben Pace (Benito) · 2020-12-05T20:03:03.172Z

But defecting is anti-social. You're gaining from my loss. I tried to do something good for us both and then you basically stole some of my resources.

↑ comment by Rob Bensinger (RobbBB) · 2020-12-05T21:08:10.308Z

See The True Prisoner's Dilemma. Suppose I'm negotiating with Hitler, and my possible payoffs look like:

- I cooperate, Hitler cooperates: 5 million people die.

- I cooperate, Hitler defects: 50 million people die.

- I defect, Hitler cooperates: 0 people die.

- I defect, Hitler defects: 8 million people die.

Obviously in this case the defect-defect equilibrium isn't optimal; if there's a way to get a better outcome, go for it. But equally obviously, cooperating isn't prosocial; the cooperate-cooperate equilibrium is far from ideal, and hitting 'cooperate' unconditionally is the worst of all possible strategies.
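Rob's payoff list can be checked mechanically. This short Python sketch (the dictionary layout and function names are illustrative choices) encodes the four outcomes as death counts in millions and confirms that, measured by deaths, defecting is the better reply to either of Hitler's moves. Hitler's own payoffs are not given in the example, so the sketch only looks at dominance from this side of the table.

```python
# Deaths (in millions) from the example above, keyed by (my move, Hitler's move).
DEATHS = {
    ("C", "C"): 5,
    ("C", "D"): 50,
    ("D", "C"): 0,
    ("D", "D"): 8,
}
MOVES = ("C", "D")


def my_best_response(hitler_move):
    """The move that minimizes deaths, given Hitler's move."""
    return min(MOVES, key=lambda mine: DEATHS[(mine, hitler_move)])


def defect_strictly_dominates():
    """True if defecting causes fewer deaths whatever Hitler does."""
    return all(DEATHS[("D", h)] < DEATHS[("C", h)] for h in MOVES)


print([my_best_response(h) for h in MOVES])  # ['D', 'D']
print(defect_strictly_dominates())           # True
```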

↑ comment by Ben Pace (Benito) · 2020-12-08T08:14:27.671Z

TurnTrout's informal definition of 'defection' above looks right to me, where "a player defects when they increase their personal payoff at the expense of the group." My point is that when people didn't pick the same choice as me, I was previously modeling it using the prisoner's dilemma, but this was inaccurate because the person wasn't getting any personal benefit at my expense. They weren't taking from me; they weren't free riding. They just weren't coordinating around my stag. (And for my own clarity I should've been using the stag hunt metaphor.)

I can try to respond to your (correct) points about the True Prisoner's Dilemma, but I don't think they're cruxy for me. I understand that defecting and cooperating in the PD don't straightforwardly reflect the associations of their words, but they sometimes do. Sometimes, defecting is literally tricking someone into thinking you're cooperating and then stealing their stuff. The point I'm making about stag hunts is that this element is entirely lacking — there's no ability for them to gain from my loss, and bringing it into the analysis is in many places muddying the waters of what's going on. And this reflects lots of situations I've been in, where because of the game theoretic language I was using I was causing myself to believe there was some level of betrayal I needed to model, where there largely wasn't.

↑ comment by TurnTrout · 2020-12-08T14:57:19.524Z

They weren't taking from me, they weren't free riding. They just weren't coordinating around my stag.

I'd like to note that with respect to my formal definition, defection always exists in stag hunts (if the social contract cares about everyone's utility equally); see Theorem 6:

What's happening when is: Player 2 thinks it's less than 50% probable that P1 hunts stag; if P2 hunted stag, expected total payoff would go up, but expected P2-payoff would go down (since some of the time, P1 is hunting hares while P2 waits alone near the stags); therefore, P2 is tempted to hunt hare, which would be classed as a defection.

↑ comment by Ben Pace (Benito) · 2020-12-08T19:04:13.346Z

If that’s a stag hunt then I don’t know what a stag hunt is. I would expect a stag hunt to have (2,0) in the bottom left corner and (0,2) in the top right, precisely showing that player two gets no advantage from hunting hare if player one hunts stag (and vice versa).

↑ comment by Vaniver · 2020-12-08T19:09:57.414Z

T >= P covers both the case where you're indifferent as to whether or not they hunt hare when you do (the =) and the case where you're better off as the only hare hunter (the >); so long as R > T, both cases have the important feature that you want to hunt stag if they will hunt stag, and you want to hunt hare if they won't hunt stag.

The two cases (T>P and T=P) end up being the same because if you succeed at tricking them into hunting stag while you hunt hare (because T>P, say), then you would have done even better by actually collaborating with them on hunting stag (because R>T).
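A minimal Python sketch of Vaniver's point, using hypothetical integer payoffs: a T == P game (3, 2, 2, 0) and a T > P game (3, 2, 1, 0) both satisfy R > T >= P > S, and in both the best reply is to match the other hunter. The `stag_threshold` helper goes slightly beyond the thread: it solves p*R + (1-p)*S = p*T + (1-p)*P for the belief p (that the other player hunts stag) at which stag and hare have equal expected payoff.

```python
from fractions import Fraction


def is_stag_hunt(R, T, P, S):
    """The ordering described above: R > T >= P > S."""
    return R > T >= P > S


def best_response(other_move, R, T, P, S):
    """Best pure reply to the other hunter's move."""
    if other_move == "stag":
        return "stag" if R > T else "hare"
    return "stag" if S > P else "hare"


def stag_threshold(R, T, P, S):
    """Belief p above which hunting stag beats hare in expectation.

    Solves p*R + (1-p)*S = p*T + (1-p)*P for p.
    """
    return Fraction(P - S, (R - T) + (P - S))


for payoffs in [(3, 2, 2, 0), (3, 2, 1, 0)]:  # hypothetical T == P and T > P cases
    assert is_stag_hunt(*payoffs)
    # prints "(3, 2, 2, 0) stag hare 2/3", then "(3, 2, 1, 0) stag hare 1/2"
    print(payoffs, best_response("stag", *payoffs), best_response("hare", *payoffs),
          stag_threshold(*payoffs))
```

In both cases the best-response structure is identical (match the other hunter); the T-versus-P gap only shifts how confident you need to be before stag pays off.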

↑ comment by Ben Pace (Benito) · 2020-12-08T22:45:07.379Z

I see, thx.

↑ comment by Raemon · 2020-12-05T18:36:16.226Z

I think this is indeed a failure of the post (the concern you raise was on my mind recently as I thought through more recent posts on game theory).

I think TurnTrout successfully gets at some of the details of what's actually going on. I'm not 100% sure how to rewrite the post to make its primary point clearly and accurately without getting bogged down in lots of detailed caveats, but I agree there is some problem to solve here if the post were to pass review.

↑ comment by Rob Bensinger (RobbBB) · 2020-12-05T21:11:38.710Z

Maybe provide an example of a prisoner's dilemma with Clippy, and a stag hunt with Clippy, to distinguish those cases from 'games with other humans whose values aren't awful by my lights'? This could also better clarify for the reader what the actual content of the games is.

comment by Unnamed · 2019-06-09T00:43:45.445Z

And I said, in a move designed to be somewhat socially punishing: "I don't really trust the conversation to go anywhere useful." And then I took out my laptop and mostly stopped paying attention.

This 'social punishment' move seems problematic, in a way that isn't highlighted in the rest of the post.

One issue: What are you punishing them for? It seems like the punishment is intended to enforce the norm that you wanted the group to have, which is a different kind of move than enforcing a norm that is already established. Enforcing existing norms is generally prosocial, but it's more problematic if each person is trying to enforce the norms that he personally wishes the group to have.

A second thing worth highlighting is that this attempt at norm enforcement looked a lot like a norm violation (of norms against disengaging from a meeting). Sometimes "punishing others for violating norms" is a special case where it's appropriate to do something which would otherwise be a norm violation, but that's often a costly/risky way of doing things (especially when the norm you're enforcing isn't clearly established and so your actions are less legible).

↑ comment by Raemon · 2019-06-09T00:56:17.176Z

Enforcing existing norms is generally prosocial, but it's more problematic if each person is trying to enforce the norms that he personally wishes the group to have.

Indeed. This is a major failure mode, important enough to deserve its own post (or perhaps to be woven into some other posts – there's a lot I could say on this topic and I'm not sure how to crystallize it all). I try to catch myself when I do this, and am annoyed when I see others doing it; it was a major motivator for this post.

One problem is that people often have very different expectations about what norms have already been established, which people had implicitly bought into (by working at a given company, by being a 'rationalist', or whatnot).

comment by zulupineapple · 2019-08-09T19:09:36.709Z

Defecting in the Prisoner's Dilemma sounds morally bad, while defecting in the Stag Hunt sounds more reasonable. This seems to be the core difference between the two, rather than the way their payoff matrices actually differ. However, I don't think that viewing things in moral terms is useful here. Defecting in the Prisoner's Dilemma can also be reasonable.

Also, I disagree with the idea of using "resource" instead of "utility". The only difference the change makes is that now I have to think, "how much utility is Alexis getting from 10 resources?" and come up with my own value. And if his utility function happens not to be monotone increasing, then the whole problem may change drastically.

comment by Zvi · 2021-01-15T20:09:32.911Z

So I reread this post, found I hadn't commented... and got a strong desire to write a response post until I realized I'd already written it, and it was even nominated. I'd be fine with including this if my response also gets included, but very worried about including this without the response.

In particular, I felt the need to emphasize the idea that Stag Hunts frame coordination problems as going against incentive gradients and as being maximally fragile and punishing, by default.

If even one person doesn't get with the program, for any reason, a Stag Hunt fails, and everyone reveals their choices at the same time. Which everyone abstractly knows is the ultimate nightmare scenario and not the baseline, but a lot of the time gets treated (I believe) as the baseline, And That's Terrible.

I don't know what exactly to do about it, introducing yet another framework/name seems expensive at this point, but I think the right baseline coordination is that various people get positive payoffs for an activity that rise with the number of people who do it, and a lot of the time this gets automatically modeled as a strict stag hunt instead, and people throw up their hands and say 'whelp, coordination hard, what you gonna do.'
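Zvi's contrast between a strict stag hunt and a payoff that rises with the number of participants can be made concrete. This Python sketch assumes a hypothetical group of five, a hypothetical full-participation value of 10, and that everyone else independently shows up with probability q; `strict_stag_hunt` and `graded` are illustrative stand-ins, not models anyone in the thread proposed.

```python
from math import comb


def expected_payoff(n, q, payoff):
    """Expected payoff to one participant when each of the other n-1 people
    independently shows up with probability q; payoff(k) is the payoff when
    k people (including you) participate."""
    others = n - 1
    return sum(
        comb(others, j) * q**j * (1 - q) ** (others - j) * payoff(j + 1)
        for j in range(others + 1)
    )


N, VALUE = 5, 10.0  # hypothetical group size and full-participation value


def strict_stag_hunt(k):
    return VALUE if k == N else 0.0  # anyone missing and the hunt fails


def graded(k):
    return VALUE * k / N  # payoff rises with each participant


for q in (0.9, 0.6):
    # roughly 6.56 vs 9.2 at q=0.9, and 1.30 vs 6.8 at q=0.6
    print(q, expected_payoff(N, q, strict_stag_hunt), expected_payoff(N, q, graded))
```

Under these assumptions, the strict version loses value fast as attendance gets less reliable, while the graded version degrades gently, which is the fragility Zvi describes.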

↑ comment by Vaniver · 2021-01-15T21:56:56.567Z

In particular, I felt the need to emphasize the idea that Stag Hunts frame coordination problems as going against incentive gradients and as being maximally fragile and punishing, by default.

In my experience, the main thing that happens when people learn about Stag Hunts is that they realize that it's a better fit for a lot of situations than the Prisoner's Dilemma, and this is generally an improvement. (Like Duncan, I wish we had used this frame at the start of Dragon Army.)

Yes, not every coordination problem is a stag hunt, and it may be a bad baseline or push in the wrong direction. It isn't the right model for starting a meetup, where (as you say) one person showing up alone is not much worse than hunting rabbit, and organic growth can get you to better and better situations. I think it's an underappreciated move to take things that look like stag hunts and turn them into things that are more robust to absence or have a smoother growth curve.

All that said, it still seems worth pointing out that in the absence of communication, in many cases the right thing to assume is that you should hunt rabbit.

↑ comment by Raemon · 2021-01-15T21:33:01.418Z

I still have "write my own self-review" of this post, which I think will at least partially address some of that concern. (But, since we had an argument about this as recently as Last Tuesday*, obviously it won't fully address it)

I do want to note that I think the point you're primarily making here (about misleading/bad effects of the stag hunt frame) doesn't feel super encapsulated in your current response post, and is probably worth a separate top-level post.

(But, tl;dr for my current take is "okay, this post replaces the wrong-frame of prisoner's dilemma with the wrong-frame of stag hunts, which I still think was a net improvement." But "what actually ARE the game theoretical situations we actually find ourselves in most of the time?" is the obvious next question to ask.)

* it wasn't actually Tuesday.

comment by Shmi (shminux) · 2019-06-08T03:27:20.327Z

A few points:

- Yes, it's super frustrating when people ignore obviously (to you) good actions and/or perform obviously (to you) bad actions. They do have their good (to them) reasons to do so, conscious or subconscious. So do you. They might be wrong, or you might be wrong, or something else might be going on. Unless you are all-seeing and all-knowing, how can you tell? If you are so much smarter than they are, why are you working/volunteering there? Oughtn't you be looking for a place where you can deal with your intellectual peers? And if you are not head and shoulders smarter, then how do you know that you are right and that action X is the right one, despite everyone else choosing action Y?

- Following Scott's recent and ongoing sequence, consider that there might be cultures and traditions that have evolved over a long time that compel people to do Y instead of X, even if they would agree that X is better than Y when the argument for X > Y that you are advocating is considered in isolation.

- As your example of a meandering conversation shows, people are very different and we all succumb to the typical mind fallacy. Intentionally paying attention to people likely not thinking the way you do, not incentivised by the same things you are, not emotionally connecting to other people and events the same way you do may clarify in your mind why other people act the way they do. If not, asking questions with genuine interest and curiosity, without expressing opinions, can get you some of the way there.

I have been and am in a situation that feels similar to yours. Here is one example of many. My direct supervisor had refused to implement a data retrieval system that would (in my opinion) greatly speed up and simplify analyzing and solving customer issues. It would be at most a week or two of work. That was 4 years ago, and I've been mentioning that we ought to do it ASAP every couple of weeks since then. He never authorized it, and instead asked if I was done whining. Meanwhile we are wasting resources many times over and likely losing customers and sales because of field issues that could have been resolved quickly. I am not 100% sure why he is doing (or not doing) it, and it is pointless to ask, because his stated reasons would not be the real ones. I have some inkling of what is going on in his mind. In part it is probably chasing rabbits instead of stags, since he has more to lose from schedule slippage on a new project than from slippage due to field support here and there, ostensibly outside his control. And maybe his strategy is the rational one, given the situation.

Let me add one more strategy to your list, though: dress stags as rabbits to get buy-in. This may sound disingenuous, but the reality is that with enough concerted effort a caught stag is later perceived as an unusually fat rabbit. People generally remember the payoff, not the effort, as long as things go smoothly.

↑ comment by Raemon · 2019-06-08T04:43:40.645Z

dress stags as rabbits to get the buy in. This may sound disingenuous, but the reality is that with enough concerted effort a caught stag is later perceived as an unusually fat rabbit.

Can you give an example of what this might look like?

↑ comment by Shmi (shminux) · 2019-06-08T05:49:36.608Z

We are all easily convinced of what we want to hear. Elliott wants to get ahead. Just scared a lot to take a chance. And perception is reality, literally. So if you help people see the benefits of cooperating (hunting a stag, taking a chance), and the drawbacks of sticking with rabbits (say, still being stuck in the muck), then their risk assessment changes and you may get them on your side. Or, alternatively, if Elliott has nothing to lose, he might go for a desperate heroic effort.

comment by Emiya (andrea-mulazzani) · 2021-01-03T09:23:55.049Z

I don't feel the "stag hunt" example to be a good fit to the situation described, but the post is clear in explaining the problem and suggesting how to adapt to it.

- The post helps the reader understand in which situations group efforts where everyone has to invest heavy resources aren't likely to work, focusing on the different perspectives and inferential frames people have on the risks/benefits of the situation. The post is a bit lacking on possible strategies to promote stag hunts, but it specified it would focus on the Schelling choice being "rabbit".

- The suggestions on how to improve the communication and how to avoid wasting personal resources where this can't be done are useful and are likely to change the way I act in these situations.

- The real life situations described fit my experience in group works and how I've witnessed people fail to cooperate.

- I don't think there's a subclaim I can test.

- I'd like a followup work that would focus on how to enforce/promote cooperation in groups to successfully stag hunt, with different strategies listed for different levels of power one could have on the group and for different levels of group inertia.

comment by Emiya (andrea-mulazzani) · 2021-01-03T09:02:06.664Z

The insight is pretty useful, even if I feel the whole "stag hunt" example is a bit misleading. (Hunts are highly coordinated efforts, and there's no way people wouldn't agree on what they're doing before setting off into the woods. Then, anyone who hunts a rabbit after saying stag isn't making a Schelling choice, but defecting in full.)

They don't stop.

So I start subtly socially punishing them for it.

They don't stop. What's more... now they seem to be punishing me.

Generally speaking, I don't think this is an effective way to change people's behaviour. Scientific literature agrees punishments aren't really effective in modifying behaviour (even if there are some situations where you can't not use them, or where the knowledge of a lack of punishment causes defection).

In this situation 1) it's not clear what they're being punished about or even that this is a punishment, so they might miss it completely and 2) they'd have plenty of ways to avoid the punishment (like avoiding you) so I wouldn't expect this tactic to work.

Socially reinforcing/rewarding the desired behaviour should work a lot better to shape behaviour and doesn't expose you to possible collateral side effects.

comment by Decius · 2019-06-09T00:20:22.955Z

One error of the stag/rabbit hunt framing is that it makes it explicit that it's a coordination problem, not a values problem. To frame it differently would require that the stag and rabbit hunts not produce different utility numbers, but yield different resources or certainties of resource. If a rabbit hunt yields 3d2 rabbits hunted per hunter, but the stag hunt yields 1d2-1 stag hunted if all hunters work together and 0 if they don't, then even with a higher expected yield of meat and of hide from the stag hunt, for some people the rabbit hunt might yield higher expected utility, since the certainty of not starving is much more utility than an increase in the amount of hides.
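Decius's dice example can be worked out exactly. In this Python sketch the dice (3d2 rabbits per hunter, 1d2-1 stags when everyone hunts stag) come from the comment; the meat per rabbit, meat per hunter per stag, and the starvation line are hypothetical values chosen so that the stag hunt has the higher expected yield.

```python
from fractions import Fraction
from itertools import product


def dice_distribution(n, sides, offset=0):
    """Exact distribution of the sum of n fair dice with `sides` faces, plus offset."""
    dist = {}
    for roll in product(range(1, sides + 1), repeat=n):
        total = sum(roll) + offset
        dist[total] = dist.get(total, 0) + Fraction(1, sides**n)
    return dist


RABBIT_MEAT = 1      # hypothetical meat per rabbit
STAG_MEAT = 20       # hypothetical meat per hunter per stag
STARVATION_LINE = 3  # hypothetical minimum meat needed to avoid starving

rabbits = dice_distribution(3, 2)           # 3d2 rabbits per hunter
stags = dice_distribution(1, 2, offset=-1)  # 1d2-1 stags, assuming everyone hunts stag


def expected_meat(dist, meat_per_unit):
    return sum(p * k * meat_per_unit for k, p in dist.items())


def starvation_risk(dist, meat_per_unit):
    return sum(p for k, p in dist.items() if k * meat_per_unit < STARVATION_LINE)


print(expected_meat(rabbits, RABBIT_MEAT), starvation_risk(rabbits, RABBIT_MEAT))  # 9/2 0
print(expected_meat(stags, STAG_MEAT), starvation_risk(stags, STAG_MEAT))          # 10 1/2
```

With these assumed numbers the stag hunt more than doubles expected meat, yet carries a 1/2 chance of starving, so a hunter whose utility falls off a cliff below the starvation line can rationally prefer rabbit.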

In order to confidently assert that a Schelling point exists, one should view the situation from everyone's point of view and apply their actual goals, NOT look at everyone's point of view and apply your goal, or the average goals, or the goals they think they have.

↑ comment by Raemon · 2019-06-09T00:38:58.562Z

Thanks. I think I gained useful insights from this but found the wording a bit confusing. I assume you mean something like "The stag/rabbit frame assumes that it's a coordination problem, when sometimes [often?] it's a values disagreement. In real life people have different goals, so don't necessarily get the same utility from a given action. And in the literal rabbit/stag/hunters example, the various options don't actually produce the same utility since people get diminishing returns from meat."

...

"The Schelling Point is Rabbit" is (hopefully obviously) a bit of a simplification, and I agree that you'll want to actually look at everyone's goals in a given situation.

comment by countingtoten · 2019-06-08T02:07:31.885Z

Meandering conversations were important to him, because it gave them space to actually think. I pointed to examples of meetings that I thought had gone well, that ended with Google Docs full of what I thought had been useful ideas and developments. And he said "those all seemed like examples of mediocre meetings to me – we had a lot of ideas, sure. But I didn't feel like I actually got to come to a real decision about anything important."

Interesting that you choose this as an example, since my immediate reaction to your opening was, "Hold Off On Proposing Solutions." More precisely, my reaction was that I recall Eliezer saying he recommended this before any other practical rule of rationality (to a specific mostly white male audience, anyway) and yet you didn't seem to have established that people agree with you on what the problem is.

It sounds like you got there eventually, assuming "the right path for the organization" is a meaningful category.

↑ comment by Raemon · 2019-06-08T02:23:57.017Z

I think the disagreement was on a slightly different axis. It's not enough to hold off on proposing solutions. It's particular flavors and approaches to doing so.

One thing (which me-at-the-beginning-of-the-example would have liked) is that we do some sort of process like:

- Everyone thinks independently for N minutes about what considerations are relevant, without proposing solutions

- Everyone shares those thoughts, and discusses them a while

- Everyone thinks independently for another N minutes about potential solutions in light of those considerations.

- We discuss those thoughts

- We eventually try to converge on a solution.

...with each section being somewhat time-boxed (You could fine-tune the time boxing, possibly repeating some steps, depending on how important the decision was and how much time you had)

But something about the overall process of time-boxing intrinsically got in the way of the type of thinking that this person felt they needed.

comment by DanielFilan · 2021-09-18T22:05:09.517Z

This Aumann paper is about (a variant of?) the stag hunt game. In this version, it's great for everyone if we both hunt stag, it's somewhat worse for everyone if we hunt rabbit, and if I hunt stag and you hunt rabbit, it's terrible for me, and you're better off than in the world in which we both hunted rabbit, but worse off than in the world in which we both hunted stag.

He makes the point that in this game, even if we agree to hunt stag, if we make our decisions alone and without further accountability, I might think to myself "Well, you would want that agreement if you wanted to hunt stag, but you would also want that agreement if you wanted to hunt rabbit - either way, it's better for you if I hunt stag. So the agreement doesn't really change my mind as to whether you want to hunt rabbit or stag. Since I was presumably uncertain before, I should probably still be uncertain, and that means rabbit is the safer bet."

I'm not sure how realistic the setup is, but I thought it was an interesting take: a case where agreeing that we'll both choose an outcome that's a Nash equilibrium doesn't really persuade me to keep the agreement.
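To make the ordering concrete, here is a small Python sketch. The payoff numbers are assumed for illustration, picked only to match the ordering described above rather than quoted from the paper. It checks that both (stag, stag) and (rabbit, rabbit) are Nash equilibria, that the other player prefers me to hunt stag whatever they themselves do (which is why their agreeing to hunt stag tells me little), and how confident I would need to be that they'll hunt stag before stag beats rabbit in expectation.

```python
from fractions import Fraction

# Assumed illustrative payoffs matching the ordering in the comment above.
# PAYOFF[(my_move, your_move)] = (my_payoff, your_payoff)
PAYOFF = {
    ("stag", "stag"): (9, 9),
    ("stag", "rabbit"): (0, 8),
    ("rabbit", "stag"): (8, 0),
    ("rabbit", "rabbit"): (7, 7),
}
MOVES = ("stag", "rabbit")


def is_nash(profile):
    """True if neither player gains by unilaterally switching moves."""
    mine, yours = profile
    my_pay, your_pay = PAYOFF[profile]
    my_best = all(PAYOFF[(alt, yours)][0] <= my_pay for alt in MOVES)
    your_best = all(PAYOFF[(mine, alt)][1] <= your_pay for alt in MOVES)
    return my_best and your_best


def you_prefer_me_stag(your_move):
    """Given your own move, are you better off when I hunt stag?"""
    return PAYOFF[("stag", your_move)][1] > PAYOFF[("rabbit", your_move)][1]


def my_expected(my_move, p_you_stag):
    """My expected payoff if you hunt stag with probability p_you_stag."""
    return (p_you_stag * PAYOFF[(my_move, "stag")][0]
            + (1 - p_you_stag) * PAYOFF[(my_move, "rabbit")][0])


def break_even():
    """Probability you hunt stag at which stag and rabbit pay me the same."""
    ss, sr = PAYOFF[("stag", "stag")][0], PAYOFF[("stag", "rabbit")][0]
    rs, rr = PAYOFF[("rabbit", "stag")][0], PAYOFF[("rabbit", "rabbit")][0]
    return Fraction(rr - sr, (ss - sr) - (rs - rr))


nash = [p for p in PAYOFF if is_nash(p)]
print(nash)                                       # [('stag', 'stag'), ('rabbit', 'rabbit')]
print(all(you_prefer_me_stag(m) for m in MOVES))  # True
print(my_expected("stag", 0.5), my_expected("rabbit", 0.5))  # 4.5 7.5
print(break_even())                               # 7/8
```

With these particular numbers, stag only pays off for me if I'm more than 7/8 sure you'll hunt stag, and your saying "let's hunt stag" is something you'd say either way.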

comment by Vladimir_Nesov · 2019-06-11T21:33:02.314Z

Utility is not a resource. In the usual expected utility setting, utility functions don't care about positive affine transformations (all decisions remain the same): for example, decreasing all utilities by 1000 doesn't change anything, and there is no significance to utility being positive vs. negative. So a requirement to have at least five units of utility in order to play a round of a game doesn't make sense.
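A quick way to see the affine-invariance point: the Python sketch below ranks a few lotteries (the options are made up for illustration) under the identity utility, under "minus 1000", and under "times two minus 87". Because each transformation has the form a·u + b with a > 0, expected utility shifts and rescales uniformly and the ranking never changes; a negative multiplier would reverse it.

```python
from fractions import Fraction


def expected_utility(lottery, u):
    """Expected utility of a lottery given as (probability, outcome) pairs."""
    return sum(p * u(x) for p, x in lottery)


def ranking(options, u):
    """Option names from most to least preferred under utility function u."""
    return sorted(options, key=lambda name: expected_utility(options[name], u),
                  reverse=True)


half = Fraction(1, 2)
# Hypothetical options, chosen so that no two have equal expected value.
options = {
    "sure 5": [(Fraction(1), 5)],
    "sure 4": [(Fraction(1), 4)],
    "coin flip 0 or 12": [(half, 0), (half, 12)],
}

# Positive affine transformations of the identity: u'(x) = a*x + b with a > 0.
utilities = {
    "identity": lambda x: x,
    "minus 1000": lambda x: x - 1000,
    "times two minus 87": lambda x: 2 * x - 87,
}

for name, u in utilities.items():
    print(name, ranking(options, u))
```

All three lines print the same order: the coin flip, then the sure 5, then the sure 4.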

In this post, there is a resource whose utility could maybe possibly be given by the identity function. But in that case it could also be given by the "times two minus 87" utility function and lead to the same decisions. It's not really clear that utility is identity, since a 50/50 lottery between ending up with zero or ten units of the resource seems much worse than the certainty of obtaining five units. ("If you’re Elliott, [zero] is a super scary result to imagine.")
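The "zero or ten versus a sure five" point is about risk attitude. With identity utility the two are exactly tied, so finding the sure five clearly better means utility over the resource is concave. The sketch below assumes a square-root utility purely as an example of a concave function; nothing in the post specifies Elliott's actual utility.

```python
import math


def expected_utility(lottery, u):
    """Expected utility of a lottery given as (probability, outcome) pairs."""
    return sum(p * u(x) for p, x in lottery)


def linear(x):
    """Identity utility: risk-neutral over the resource."""
    return x


concave = math.sqrt  # assumed risk-averse utility, for illustration only

sure_five = [(1.0, 5)]
coin_flip = [(0.5, 0), (0.5, 10)]

print(expected_utility(sure_five, linear), expected_utility(coin_flip, linear))
print(expected_utility(sure_five, concave) > expected_utility(coin_flip, concave))

# Certainty equivalent: the sure amount the sqrt agent values as much as the
# coin flip (invert sqrt by squaring its expected utility).
ce = expected_utility(coin_flip, concave) ** 2
print(round(ce, 2))
```

Under the identity both lotteries come out to 5.0; under the square root the sure five wins, and the coin flip is worth only about 2.5 units for certain.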

reply by Raemon · 2019-06-11T23:00:55.941Z

Not 100% sure I got all the points in this comment, but my sense is that the overall meta-point was that the framing (in particular in the quoted section?) of the Stag Hunt wasn't formalized properly (i.e. conflating resource with utility).

Do you have a suggestion for an improved wording, optimized for being accessible to non-technical people? (Maybe just replacing "utility" with "resources", since I think that's a more accurate read on the particular way Duncan was framing it.)

There's also a potentially useful question of when it's actually accurate to say that "real life stag hunts" "cost resources." In the example I gave, there *is* a resource that'd need to be spent to achieve good conversational norms, but admittedly the stag hunt frame doesn't map onto it that well: it's not that different players had different amounts of the resource, it's more that they had different beliefs about how valuable it was for the team to spend it.

reply by Vladimir_Nesov · 2019-06-11T23:12:24.623Z

Sure, any resource would do (like copper coins needed to pay for maintenance of equipment), or just generic "units of resources". My concern is that the term "utility" is used incorrectly in an otherwise excellent post that is tangentially related to the topic where its technical meaning matters, potentially propagating this popular misreading.

reply by Raemon · 2019-06-11T23:15:37.689Z

I replaced the term with 'resource' for now. (I think it's sort of important for it to showcase 'genericness')

reply by Vladimir_Nesov · 2019-06-11T23:21:28.503Z

Thanks!

comment by Raemon · 2019-06-28T18:46:16.047Z

A point that's come up when chatting in person is that in real life, the payoffs don't quite match Stag Hunt (or "Rabbit Hunt" if we're to use Abram's terminology).

I think what's more common is that "if you choose rabbit, you mostly get to keep your own rabbit", rather than splitting it equally (or at most, you keep 2 resource-units for yourself and maybe share 1 resource unit). I don't think this radically changes the rest of the argument but seemed worth noting.

(There's perhaps a related issue: if you catch a stag, it sometimes isn't actually split equally. Someone gets the metaphorical lion's share, and there's a sub-game of politics that determines who gets what portion of it. People don't trust that splitting process, so they are hesitant to commit.)

Another criticism someone gave was that it's rarely a "turn-based" game. I found this point more confusing ("I'm not sure to what degree the turn-based-ness matters, and if it does, I'm not sure how to formalize it.").

comment by philh · 2019-06-09T21:15:50.132Z

Meta: you have a few asterisks which I guess are just typos, but which caused me to go looking for footnotes that don't exist. "Philosophy Fridays*", "Follow rabbit trails into Stag* Country", "Follow rabbit trails that lead into *Stag-and-Rabbit Country".

reply by Raemon · 2019-06-09T22:48:28.771Z

Thanks. I got rid of them.

The two "Stag Country" ones were supposed to refer to each other (i.e. by "Stag Country" I meant "a place with lots of opportunities for both rabbit and stag hunting, a generally rich environment.")

Philosophy Friday* was going to link to a thing where I mentioned that we have a ridiculous in-joke of spelling it differently each time (most commonly Filosophee Φriday), but this didn't seem super worth people's attention, and I decided not to make it part of the official post.

reply by DanielFilan · 2021-09-18T21:57:31.865Z

Two of these asterisks still exist.

comment by Kaj_Sotala · 2020-12-02T13:36:31.960Z

This helped make sense of situations which had frustrated me in the past.

comment by habryka (habryka4) · 2019-07-31T00:14:37.795Z

Promoted to curated: I think this post, together with "Some Ways Coordination is Hard", makes a bunch of pretty valuable points. Stag hunt seems to me to be the kind of fundamental concept that helps me actually build decent models of game theory, in the same way the standard prisoner's dilemma does, so I generally think elaboration on it, both in theory and in practice, is quite useful.

comment by NancyLebovitz · 2020-12-05T21:33:24.981Z

It seems to me this is getting into Social Safety Net territory. Elliott is cautious because he really has fewer resources. Would the group benefit if he were given more, so he isn't running so close to the edge?