Slack

post by Zvi · 2017-09-30T11:10:00.135Z · 74 comments


Epistemic Status: Reference post. Strong beliefs strongly held after much thought, but hard to explain well. Intentionally abstract.

Disambiguation: This does not refer to any physical good, app or piece of software.

Further Research (book, recommended but not at all required, take seriously but not literally): The Book of the Subgenius

Related (from sam[ ]zdat, recommended but not required, take seriously and also literally, entire very long series also recommended): The Uruk Machine

Further Reading (book): Scarcity: Why Having Too Little Means So Much

Previously here (not required): Play in Hard Mode, Play in Easy Mode, Out to Get You

Leads to (I’ve been scooped! Somewhat…): Sabbath Hard and Go Home

An illustrative little game: Carpe Diem: The Problem of Scarcity and Abundance

Slack is hard to precisely define, but I think this comes close:

Definition: Slack. The absence of binding constraints on behavior.

Poor is the person without Slack. Lack of Slack compounds and traps.

Slack means margin for error. You can relax.

Slack allows pursuing opportunities. You can explore. You can trade.

Slack prevents desperation. You can avoid bad trades and wait for better spots. You can be efficient.

Slack permits planning for the long term. You can invest.

Slack enables doing things for your own amusement. You can play games. You can have fun.

Slack enables doing the right thing. Stand by your friends. Reward the worthy. Punish the wicked. You can have a code.

Slack presents things as they are without concern for how things look or what others think. You can be honest.

You can do some of these things, and choose not to do others. Because you don’t have to.

Only with Slack can one be a righteous dude.

Slack is life.

Related Slackness

Slack in project management is the time a task can be delayed without causing a delay to either subsequent tasks or project completion time. The amount of time before a constraint binds.
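In scheduling practice this number is usually computed with the critical path method: a forward pass finds each task's earliest start, a backward pass finds the latest start that still finishes the project on time, and the difference is the task's slack. Below is a minimal sketch, assuming every task's duration and predecessors are known in advance. The function name and data layout are hypothetical. It computes *total* slack, meaning how long a task can slip without delaying project completion. *Free* slack, meaning no delay to any successor, is a slightly different calculation.

```python
from graphlib import TopologicalSorter

def task_slack(durations, predecessors):
    """Total slack per task via a forward and backward pass (critical path method).

    durations:    {task: duration}; every task must appear here
    predecessors: {task: set of tasks that must finish before it can start}
    """
    graph = {t: set(predecessors.get(t, ())) for t in durations}
    order = list(TopologicalSorter(graph).static_order())  # predecessors come first

    # Forward pass: earliest start is when the last predecessor can finish.
    earliest_start, earliest_finish = {}, {}
    for t in order:
        earliest_start[t] = max((earliest_finish[p] for p in graph[t]), default=0)
        earliest_finish[t] = earliest_start[t] + durations[t]
    project_end = max(earliest_finish.values())

    # Backward pass: latest start that still lets every successor start on time.
    successors = {t: [] for t in durations}
    for t, preds in graph.items():
        for p in preds:
            successors[p].append(t)
    latest_start = {}
    for t in reversed(order):
        latest_finish = min((latest_start[s] for s in successors[t]), default=project_end)
        latest_start[t] = latest_finish - durations[t]

    # Slack is how long the constraint takes to bind: latest minus earliest start.
    return {t: latest_start[t] - earliest_start[t] for t in durations}

durations = {"A": 3, "B": 2, "C": 4, "D": 1}
predecessors = {"B": {"A"}, "C": {"A"}, "D": {"B", "C"}}
print(task_slack(durations, predecessors))  # {'A': 0, 'B': 2, 'C': 0, 'D': 0}
```

Tasks with zero slack form the critical path (A, C, D here). Any delay to them binds immediately, while B can slip two units before anything else moves.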

Slack the app was likely named in reference to a promise of Slack in the project sense.

Slacks as trousers are pants that are actual pants, but do not bind or constrain.

Slackness refers to vulgarity in West Indian culture, behavior and music. It also refers to a subgenre of dancehall music with straightforward sexual lyrics. Again, slackness refers to the absence of a binding constraint. In this case, common decency or politeness.

A slacker is one who has a lazy work ethic or otherwise does not exert maximum effort. They slack off. They refuse to be bound by what others view as hard constraints.

Out to Get You and the Attack on Slack

Many things in this world are Out to Get You. Often they are Out to Get You for a lot, usually but not always your time, attention and money.

If you Get Got for compact amounts too often, it will add up and the constraints will bind.

If you Get Got even once for a non-compact amount, the cost expands until you have no Slack left. The constraints bind you.

You might spend every spare minute and/or dollar on politics, advocacy or charity. You might think of every dollar as a fraction of a third-world life saved. Racing to find a cure for your daughter’s cancer, you already work around the clock. You could have an all-consuming job or be a soldier marching off to war. It could be a quest for revenge, for glory, for love. Or you might spend every spare minute mindlessly checking Facebook or obsessed with your fantasy football league.

You cannot relax. Your life is not your own.

It might even be the right choice! Especially for brief periods. When about to be run over by a truck or evicted from your house, Slack is a luxury you cannot afford. Extraordinary times call for extraordinary effort.

Most times are ordinary. Make an ordinary effort.

You Can Afford It

No, you can’t. This is the most famous attack on Slack. Few words make me angrier.

The person who says “You Can Afford It” is saying to ignore constraints that do not bind you. If you do, all constraints soon bind you.

Those who do not value Slack soon lose it. Slack matters. Fight to keep yours!

Ask not whether you can afford it. Ask if it is Worth It.

Unless you can’t afford it. Affordability is invaluable negative selection. Never positive selection.

The You Can Afford It tax on Slack quickly approaches 100% if unchecked.

If those with extra resources are asked to share the whole surplus, all are poor or hide their wealth. Wealth is a burden and makes you a target. Those visibly flush rush to spend their bounty.

Where those with free time are given extra work, all are busy or look busy. Those with copious free time seek out relatively painless time sinks they can point to.

When looking happy means you deal with everything unpleasant, no one looks happy for long.

The Slackless Like of Maya Millennial

Things are bad enough when those with Slack are expected to sacrifice for others. Things are much worse when the presence of Slack is viewed as a defection.

An example of this effect is Maya Millennial (of The Premium Mediocre Life of Maya Millennial). She has no Slack.

Constraints bind her every action. Her job in life is putting up a front of the person she wants to show people that she wants to be. If her constraints noticeably failed to bind, the illusion would fail.

Every action is being watched. If no one is around to watch her, the job falls to her. She must post all to Facebook, to Snapchat, to Instagram. Each action and choice signals who she is and her loyalty to the system. Not doing that this time could mean missing her one chance to make it big.

Maya never has free time. There is signaling to do! At a minimum, she must spend such time on alert and on her phone lest she miss something.

Maya never has spare cash. All must be spent to advance and fit her profile.

Maya lacks free speech, free association, free taste and free thought. All must serve.

Maya is in a world where she must signal she has no Slack. Slack means insufficient dedication and loyalty. Slack cannot be trusted. Slack now means slack later, which means failure. Future failure means no opportunity.

This is more common than one might think.

“Give Me Slack or Kill Me” – J.R. “Bob” Dobbs

The aim of this post was to introduce Slack and give an intuitive picture of its importance.

The short-term practical takeaways are:

Make sure that under normal conditions you have Slack. Value it. Guard it. Spend it only when Worth It. If you lose it, fight to get it back. This provides motivation for fighting things Out To Get You, lest you let them eat your Slack.

Run a diagnostic test every so often to make sure you're not running dangerously low, and engineer your situation to force yourself to have Slack. I recommend Sabbath Hard and Go Home, with my take to follow soon.

Also respect the Slack of others. Help them value and guard it. Do not spend it lightly.

A Final Note

I kept this short rather than add detailed justifications. Hopefully the logic is intuitive and builds on what came before. I hope to expand on the details and models later. For a very good book-length explanation of why lacking Slack is awful, see Scarcity: Why Having Too Little Means So Much.

74 comments


comment by Gordon Seidoh Worley (gworley) · 2017-10-02T21:08:35.693Z

This reminds me that not everyone knows what I know.

If you work with distributed systems, by which I mean any system that must pass information between multiple, tightly integrated subsystems, there is a well-understood concept of maximum sustainable load and we know that number to be roughly 60% of maximum possible load for all systems.

I don't have a link handy to show you the math, but the basic idea is that the probability that one subsystem will have to wait on another increases exponentially with the total load on the system and the load level that maximizes throughput (total amount of work done by the system over some period of time) comes in just above 60%. If you do less work you are wasting capacity (in terms of throughput); if you do more work you will gum up the works and waste time waiting even if all the subsystems are always busy.

We normally deal with this in engineering contexts, but as is so often the case this property will hold for basically anything that looks sufficiently like a distributed system. Thus the "operate at 60% capacity" rule of thumb will maximize throughput in lots of scenarios: assembly lines, service-oriented architecture software, coordinated work within any organization, an individual's work (since it is normally made up of many tasks that information must be passed between with the topology being spread out over time rather than space), and perhaps most surprisingly an individual's mind-body.

"Slack" is a decent way of putting this, but we can be pretty precise and say you need ~40% slack to optimize throughput: more and you tip into being "lazy", less and you become "overworked".

↑ comment by Raemon · 2018-07-08T01:26:58.620Z

This comment is still real good. Some thoughts I've been reflecting on re: 60% rule:

I've roughly been living my life at 60% for the past year (after a few years of doing a lot of over-extension and burnout). It has... been generally good.

I do notice that, say, at the last NYC Winter Solstice, I was operating at like 105% capacity. And the result was a more polished, better thing than would have existed if I'd been operating at 60%. Locally, you do get more results if you burn your reserves, esp if you are working on something with a small number of moving parts. This is why it's so easy to fall into the trap.

But, like, you can't just burn your reserves all the time. It just doesn't work.

And if you're building a thing that is bigger than you and requires a bunch of people coordinating at once, I think it's especially important to keep yourself _and_ your allies working at 60%. This is what maximizes the awesomeness-under-the-curve.

The main worry I have about my current paradigm, where I think I'm lacking: Most of my 60%-worth of effort I spend these days doesn't really go into _improvement_ of my overall capacity, or especially into building new skills. A year-ish ago, when I dialed back my output to something sustainable, I think I cut into my self-improvement focus. (Before moving to Berkeley, I HAD focused efforts on raising the capacity of people around me, which had a similar effect, but I don't think I've done that _much_ since moving here.)

↑ comment by adamShimi · 2022-04-23T22:03:29.183Z

My good action of the day is to have fallen into the rabbit hole of discovering the justification behind your comment.

First, it's more queueing theory than distributed systems theory (slightly pedantic, but I'm more used to the latter, which explained my lack of knowledge of this result).

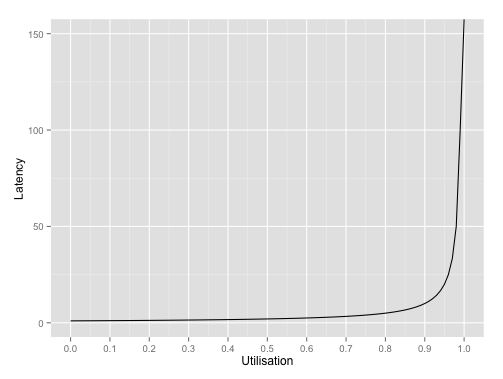

Second, even if you look through Queueing theory resources, it's not that obvious where to look. I've finally found a helpful blog post which basically explains how under basic models of queues the average latency behaves like , which leads to the following graph (utilization is used instead of load, but AFAIK these are the same things):

This post and a bunch of other places mention 80% rather than 60%, but that's not that important for the point IMO.

One thing I wonder is how this result changes with more complex queuing models, but I don't have the time to look into it. Maybe this pdf (which also includes the maths for the mentioned derivation) has the answer.
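The simplest textbook queue is M/M/1: Poisson arrivals at rate λ, exponentially distributed service at rate μ, and a single server. For that model, the mean time a job spends in the system is 1/(μ − λ), which equals (1/μ)·1/(1 − ρ) with utilization ρ = λ/μ. The sketch below only tabulates that closed form. It assumes the M/M/1 model, which real systems approximate at best, and the function name is hypothetical.

```python
def mm1_mean_latency(arrival_rate: float, service_rate: float) -> float:
    """Mean time in system (waiting + service) for an M/M/1 queue: 1 / (mu - lambda)."""
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: utilization must be below 1")
    return 1.0 / (service_rate - arrival_rate)

# With service_rate = 1, arrival_rate equals the utilization rho, and latency
# is measured in multiples of one job's mean service time.
for rho in (0.5, 0.6, 0.8, 0.9, 0.95, 0.99):
    print(f"utilization {rho:.0%}: mean latency {mm1_mean_latency(rho, 1.0):5.1f}x service time")
```

Under this model, moving from 50% to 60% utilization raises mean latency only from 2 to 2.5 service times. At 90% it reaches 10, and at 99% it reaches 100. There is no single sharp threshold, so where to put the "knee" (60% or 80%) is partly a judgment call.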

↑ comment by Ben Pace (Benito) · 2023-07-22T02:33:57.632Z

"helpful blog post" is down, here it is on wayback

↑ comment by Conor Moreton · 2017-10-04T07:49:18.819Z

This was a brand-new concept to me, and I suspect it's going to immediately be useful to me. Thank you very much for taking the time to explain clearly.

↑ comment by whpearson · 2017-10-03T22:02:07.628Z

I've come across this called Queueing Theory but I suspect it has been discovered many times.

↑ comment by Benquo · 2017-10-04T16:38:43.726Z

Huh. That's for a well-defined concrete goal outside the system that doesn't require substantial revision by the system you're evaluating. This suggests that if you see yourself operating at 60% of capacity, you're at neutral (neither accumulating debt nor making progress) with respect to metacognitive slack. Fortunately, if your metacognition budget is very small, even a very small investment - say, the occasional sabbath - can boost the resources devoted to it by one or more orders of magnitude, which should show substantial results even with diminishing returns.

↑ comment by twentythree · 2017-10-04T17:05:42.110Z

I saw this explained well in a book called The Phoenix Project. The book talks about what software development can learn from decades of manufacturing process improvements.

This blog post shows the graph presented by the book, which makes a similar but more general point to yours and further formalizes the Slack concept.

Seems it's hard to pin down the source of this concept, but it apparently follows from Little's Law.
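Little's Law states that, for a stable system observed over a long enough window, the average number of items in the system L equals the arrival rate λ times the average time each item spends inside, W (L = λW). Here is a small sanity-check simulation of a single first-in-first-out server. All function names are hypothetical, and it assumes exponential arrival and service times. Little's Law relates averages only. The steep latency-versus-utilization curve additionally depends on the arrival and service distributions.

```python
import random

def simulate_fifo_queue(n_jobs, arrival_rate, service_rate, seed=0):
    """Return (arrival_times, departure_times) for a single first-come-first-served server."""
    rng = random.Random(seed)
    t = 0.0
    server_free_at = 0.0
    arrivals, departures = [], []
    for _ in range(n_jobs):
        t += rng.expovariate(arrival_rate)       # next arrival time
        start = max(t, server_free_at)           # wait if the server is still busy
        server_free_at = start + rng.expovariate(service_rate)
        arrivals.append(t)
        departures.append(server_free_at)
    return arrivals, departures

def littles_law_check(arrivals, departures):
    """Compare the time-averaged number in system (L) with lambda * W over [0, last departure]."""
    horizon = max(departures)
    # Integrate N(t), the number of jobs in the system, by sweeping arrival/departure events.
    events = sorted([(a, +1) for a in arrivals] + [(d, -1) for d in departures])
    area, n_in_system, last_t = 0.0, 0, 0.0
    for time, delta in events:
        area += n_in_system * (time - last_t)
        n_in_system += delta
        last_t = time
    L = area / horizon
    lam = len(arrivals) / horizon
    W = sum(d - a for a, d in zip(arrivals, departures)) / len(arrivals)
    return L, lam * W

arrivals, departures = simulate_fifo_queue(10_000, arrival_rate=0.8, service_rate=1.0)
L, lam_w = littles_law_check(arrivals, departures)
print(f"time-average jobs in system L = {L:.3f}, lambda * W = {lam_w:.3f}")
```

Because every simulated job both arrives and leaves inside the observation window, the two numbers agree up to floating-point rounding.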

↑ comment by Neuroff · 2017-10-05T01:44:12.977Z

> and perhaps most surprisingly an individual's mind-body

Could you elaborate on this bit? Or maybe give an example of what you mean?

↑ comment by Gordon Seidoh Worley (gworley) · 2017-10-05T18:35:36.683Z

I don't know of a great way to phrase this so it doesn't get mixed up with notions of personal productivity, but the basic idea here is that you are yourself a complex system made of many distributed parts that pass information around and so you should expect if you try to operate above 60% of your maximum capacity you'll experience problems.

Take physical exercise, for example. If you are doing about 60% of what you are capable of doing you'll probably be able to do it for a long time because you are staying safely below the point of exhausting energy reserves and damaging cells/fibers faster than they can be repaired. Of course this is a bit of a simplification, because different subsystems have different limits and you'll run into problems if you work any subsystem over 60% capacity, so your limiting factor is probably not respiration but something related to repair and replacement, thus you may have to operate overall at less than 60% capacity to keep the constraining subsystem from being overworked. Thus you can, say, walk 20 miles at a slow pace no problem and no need to rest but will tire out and need to rest after running 5 miles at top speed.

Same sort of thing happens with mental activities, like if you concentrate too hard for too long you lose the ability to concentrate for a while but if you concentrate lightly you can do it for a long time (think trying to read in a noisy room vs. trying to read in a quiet room). It doesn't really matter how this happens (ego depletion? homeostatic regulation encouraging you to meet other needs?), the point is there is something getting overworked that needs time to clear and recover.

To sneak in an extra observation here, it's notable where this doesn't happen or only rarely happens. Systems that need high uptime tend to be highly integrated, such that information doesn't have to be shared because the parts already contain a lot of mutual information. For example, the respiration system doesn't share information between its parts so much as it is a tightly integrated whole that immediately responds to changes to any of its parts; it can afford zero downtime, so it has to keep working even when parts are degraded and being repaired. But high integration introduces quadratic complexity scaling with system size, so such tight integration is limited by how much complexity the system can manage without being subject to unexpected complex failures. Beyond some scaling limit of what evolution/engineering can manage, systems can only get more complex by being less integrated than their subsystems, and so you run into this capacity issue everywhere we are not so unlucky as to need 100% uptime.

comment by Elizabeth (pktechgirl) · 2017-10-01T17:16:16.064Z

I find it interesting that some of the things that give slack also take it away. The obvious example is cell phones. Especially at first they gave slack by letting you leave the house while you were waiting for an important phone call, but eventually ate it by creating an expectation that you'd always be available.

↑ comment by mako yass (MakoYass) · 2017-10-07T00:23:04.451Z

Soylent gives me slack by saving time during lunch, until shorter lunch breaks become the norm. No, it's not really the new technology that's eating your slack, your manager is doing it. Those people who decided they ought to be able to call you on a whim. All we have to do is tell them no and they'll find a way to live with it. Sometimes it can be hard to tell them no, and that's why we have unions.

↑ comment by ialdabaoth · 2017-10-08T21:12:51.258Z

This is because social structures strive to keep slack homeostatic at your level. As soon as you have more slack than you need to service your superiors in the social hierarchy, they will take that slack for themselves.

comment by Chris_Leong · 2017-10-01T13:33:54.328Z

I clicked +1, but in way of feedback I would suggest trying to be more precise with your definitions. "The absence of binding constraints on behavior" sounds just like a synonym for freedom. If that was the concept that this article identified, it would be kind of pointless, but you've actually identified a new and useful concept.

This has a few advantages:

Firstly, it makes the article easier to understand. Some people learn better by example, others better by explicit definition.

Secondly, it helps you make sure that what you have identified is indeed a single concept and not a few closely related ideas rolled into one.

Third, it allows you to clarify the concept in your own head and pick more central examples to illustrate it.

Fourth, it helps set social norms by encouraging other people to carefully define their terms.

I would make an alternative definition as follows:

Firstly, we start off by assuming some kind of resource (i.e. time, energy, money, social capital).

Now we can define Slack as keeping some of a resource spare so that you can spend it when opportunities come up (i.e. to do things ethically/properly/just for fun/for personal growth, etc.).

This is a more specific concept than freedom, it is related to the freedom provided by having spare resources.

There is also a typical paradigm of how people end up in situations without enough slack. Basically, they underestimate the future opportunity cost of either spending or committing to spend a particular resource now. For example, they spend a lot of money at a casino, without realising that there is a chance that they may lose their job, in which case their excess money would suddenly become much more useful. Or they commit to too many projects because they want to be agreeable in the present, without realising how a lack of time for recuperation will wear them out when the work ends up more tiring than they expect. More broadly, it is deciding what you can spend or commit now, whilst ignoring the unknowns that might pop up in the future.

↑ comment by Zvi · 2017-10-01T17:22:23.919Z

Thank you. I agree that the definition isn't perfect and can likely be improved. "Freedom provided by having spare resources" isn't a bad second attempt but I sense we can do better; I will continue to think about the best way to pin this down concisely. Suggestions welcome!

↑ comment by ialdabaoth · 2017-10-07T23:59:38.899Z

"Freedom by having spare tolerances in your action -> utility map".

Resources are just one set of inputs to the function that maps actions to utility. Slack is what happens when your utility map has a plateau rather than a sharp peak.

I.e., arrange all your available actions on an N-dimensional field, separated by distinguishable salience. Then create an N+1 dimensional graph, where each such action is mapped to the total utility that results from that action. You have lots of slack if your region of maximum utility looks like a plateau - that is, noticeable adjustments to your input don't perturb you out of your 'win' - and you have no slack if your region of maximum utility looks like a sharp peak - that is, noticeable adjustments to your input almost instantly perturb you out of your 'win' and into a significantly lower-utility part of your action space.
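One hypothetical way to make the plateau-versus-peak picture concrete is to sample actions on a grid and measure what fraction of them stay within some tolerance of the best achievable utility. The sketch below uses one dimension for readability. The two utility functions, the grid, and the tolerance are illustrative assumptions, not part of the comment.

```python
def slack_fraction(utility, actions, tolerance):
    """Fraction of available actions whose utility is within `tolerance` of the best one."""
    values = [utility(a) for a in actions]
    best = max(values)
    return sum(v >= best - tolerance for v in values) / len(values)

def plateau(x):
    # Flat top: every action with |x| <= 0.5 is equally good.
    return -max(0.0, abs(x) - 0.5)

def peak(x):
    # Sharp peak: utility falls off steeply as soon as you leave x = 0.
    return -4.0 * abs(x)

actions = [i / 100 for i in range(-100, 101)]  # 201 evenly spaced actions on [-1, 1]
print(f"plateau: {slack_fraction(plateau, actions, 0.105):.2f}")  # 0.60 (121 of 201 actions)
print(f"peak:    {slack_fraction(peak, actions, 0.105):.2f}")     # 0.02 (5 of 201 actions)
```

Both functions reach the same maximum, but the plateau tolerates large adjustments to the input without leaving the 'win', while the peak does not.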

↑ comment by DragonGod · 2017-10-03T19:23:43.981Z

Suppose we are in state X, and a change Y1 is proposed which will lead to a better state X1. Then suppose the individual with power to implement Y1 replied:

> Y1 is not bad, but I had a sense that more is possible. I am waiting for Yj. Suggestions appreciated.

Would you not suspect that there was some deeper irrationality at play--that the individual was at least a motivated sceptic?

By induction the argument in quotes can be applied against any Yi by appealing to an even better Yj. You have just given yourself a fully general counterargument (FGA) against any proposed change.

What is your true rejection of Chris_Leong's proposed change?

If a better definition is your true rejection, then beware the FGA. Either implement the proposed change, or explicitly state the requirements for a definition you would accept. FGAs are very tempting, and we change our minds less often than we think. Masking your true rejection behind an FGA only exacerbates the problem. Stating explicitly what your criteria are would make your true rejection plainly visible, and destroy the FGA.

Personally? I think you should do both.

↑ comment by Zvi · 2017-10-03T21:37:29.183Z

I am happy to say more about my thinking and did so in the other comment, but I will say that this comment seemed hostile and uncharitable. (But to be clear, I am 100% not calling in the Sunshine Regiment or even down-voting the comment, I just hope to make this less common in the future by highlighting it).

Responding to promises to put thought into constructive criticisms, and an indication of willingness to change one's mind, with accusations of fully general counterarguments, suspicions of irrationality and resistance to change, and demands for true rejections, seems to me neither charitable, reasonable nor productive. I'm confused why you pulled out such big guns and essentially accused me of responding in bad faith. If you wanted more detailed thoughts on the definition, you could simply have asked for them (and I gave them anyway).

Chris did not even quite suggest a new definition; I highlighted his phrase as pointing towards a potential better version and was myself pointing out that it wasn't half bad. I didn't even reject it at the time, although I do so now on reflection. I definitely wasn't saying that it was better than the original but I was rejecting it because I was holding out for better still. I also promised to think more about it. I hadn't even rejected Yi yet.

In addition, changing definitions is expensive once you've opened a concept to public discussion. It would in fact be perfectly reasonable to say that yes, Yi is better than X, but it is not better enough yet to justify switching, or that we should consider whether there exists Yj before paying switching costs.

↑ comment by DragonGod · 2017-10-04T02:49:50.906Z

It was not my intention to be hostile or overly uncharitable. Furthermore, it seems we have different expectations. Pointing out that you made a fully general counterargument (when that is in fact what you did) is not in my opinion bad behaviour. Pointing out that you come across to me as a motivated sceptic and are hiding your true rejection is not in my opinion bad behaviour. Pointing out that you acted irrationally (insomuch as fully general counterarguments and motivated scepticism are irrational) is not bad behaviour.

I gave an example to highlight the (to me) blatantly obvious irrationality in your reply.

You seem to expect that I interpret your arguments in "good faith". I do; I assume there was no malice or ill intent behind the rationalist faux pas. I assume it wasn't intentional and sought to draw it to your attention. I assume your intentions were benevolent; that in my honest opinion is what charity is.

My enemies (not to say you're one, but I'd rather not alter the saying) are not inherently evil, but neither are they inherently rational. You seem to expect that I interpret your arguments in the most rational way possible? What even? We are aspiring rationalists--getting it right is hard, sometimes we make mistakes, sometimes we commit errors of rationality. We have a bias blind spot that prevents us from seeing our own biases. I've seen Scott fall for correspondence bias (well I wasn't there when it happened, but I read the comments) and don't even let me get started on Eliezer Yudkowsky. We are not perfect rationalists; we are not above error and bias; we are not beyond logical fallacies. We try to overcome our biases; we have not eliminated them.

I don't know if you think you've already overcome bias, but if so, then we live in entirely different worlds. To suggest that because I point out that you acted irrationally I am being "overly hostile" and "you want to discourage it"? I'm sorry, but this sounds like a blatant attempt to shut down criticism (you seem to be fine with criticism of other areas). (Now, I almost fell for correspondence bias and suggested you might be someone who prides themselves in their rationality, and me pointing out your irrationality was causing you to lose status, but "correspondence bias", so I wouldn't). Also, "big guns" rubs me the wrong way. Arguments are not soldiers, I do not aim to cause you to lose status (I think you're falling for correspondence bias here). I did not simply want a clarification on the definition, I did not accuse you of responding in bad faith, I thought you were being irrational, so I called you out on it.

If you think accusations of irrationality are accusations of bad faith, then once again, we live in different worlds. I don't assume that you are uber rational, that you are so skilled in the way that common errors of rationality are beyond you. I don't assume that for everyone. Being rational is hard, and I know that I certainly struggle.

Now I'll be explicit; this is part of my way of good cognitive citizenship, this is my way of proper epistemic hygiene, this is my way of raising the sanity waterline. I love lesswrong, I boast about lesswrong. Anyone who knows me well enough knows about lesswrong. Lesswrong is a safe space for me. I kind of think of it like a garden--my garden. I am happy when I see debates being resolved and people changing their minds. I want the standards of discourse on LW to be as high as possible. It is because I care that I point out irrationality when I see it. If lesswrong was on the average more rational, then it grants me positive affect. I feel warm and fuzzy. I am not your enemy. (At least not in the sense it is commonly used. The way I internally refer to enemies and opponents is just assuming everyone is a player in a game of unknown alignment (zero sum, cooperative, etc)).

I'll be charitable, I'll assume this was not a deliberate effort to shut down criticism. I'll assume that you do not think yourself to be beyond bias. I'll assume that there was a scenario based cause behind your attempt to suppress my criticism.

I'll assume my opponents are angels if that's the norm promoted here--but assume my opponents are rational? Why I've never heard a more silly idea.

Now as for the implied threat to downvote my post. Well, in the spirit of charity, I'll assume this was not a deliberate threat, was not an indication of willingness to do such and was a slip. I'm sorry, but if calling the "sunshine regiment" is an option that is even in your search space and/or sufficiently salient that you felt the need to stress that you wouldn't do it in response to constructive criticism (and my criticism was constructive; I specified viable courses of action for you to take)? Then I don't even.

But worse still, if the sunshine regiment was actually an option, if there would actually have been moderator action on my post because I pointed out that you were being irrational? Well then that would break my heart. I really love lesswrong and go above and beyond to recommend it. But if that was truly a viable option for you, then it would be heart breaking. It'll seem lesswrong wasn't what I thought it would be, it wasn't what I hoped it would be.

P.S: I think I'll write a post titled "Beware the fully general counterargument" soon. Permission to use this exchange as part of the post?

There is a reason why I don't think it's perfectly valid, and that involves "shifting the goal posts", "fully general counterarguments", "motivated scepticism", "hiding your true rejection", and possibly other biases/fallacies. I think I'll possibly throw in "proving too much"; I'll try and do it justice in my post on it.

P. P. S: I did not consider switching costs. I think that even if you do, because of the failures of rationality I highlighted above, that you should explicitly specify your requirements for Yj.

↑ comment by gjm · 2017-10-04T12:28:42.181Z

I think you are being unreasonable. I mean, really unreasonable.

First, let's look at the original exchange. Chris says "I think your definition isn't quite right and X would be an improvement". Zvi says "You might be right, but I think one can do still better; I'll think about it and am open to suggestions". And you say: That's a fully general counterargument; Zvi should immediately have rewritten his article to use Chris's proposed definition, and not doing so shows that he is engaging in motivated skepticism and not offering his true rejection.

Sorry, but that's just silly. Rewriting the article in line with a slightly modified definition would be a pile of work; if Zvi thinks the slightly modified definition is neither a huge improvement nor the best one can do, it is absolutely reasonable for him to look and see if something better can be found. (In your more recent comment you say "I did not consider switching costs". OK then: so you didn't consider the obviously most important factor here but were still happy to leap from "Zvi didn't do so-and-so" to "Zvi is being a motivated skeptic, hiding his true rejection, etc., etc.". What the hell?)

Even if in fact nothing better can be found, it is absolutely not reasonable to expect that every time A finds a suboptimality in something B has written B should do anything more than acknowledge it. Does Chris's refinement of Zvi's definition invalidate everything Zvi wrote? It doesn't seem like it. So if Zvi's article was useful before, it's still useful now. Leaving it as it is, with one prominent comment describing that refinement and an agreement from Zvi right there under it, will do just fine.

And what's this "fully general counterargument" business? First of all, Zvi wasn't making a counterargument (nor purporting to make one). He agreed with what Chris said, after all. It would be a counterargument if Chris had added something like "... so you should rewrite your article to use my revised definition", but he didn't so it isn't. Second, being "fully general" doesn't make things wrong, it makes them incomplete. There's nothing wrong with saying "You say X. I disagree." even though "I disagree" is a thing anyone can say about anything. It can provide useful context for an actual argument that follows; it can simply be a statement of the speaker's position, when providing that information is worth the small effort it takes but engaging in a detailed explanation of why isn't worth the much larger effort that takes; move it one step closer to what Zvi wrote ("You say X. I disagree, but I don't have my reasons perfectly straight in my mind yet") and it's a placeholder for a later more detailed explanation. Nothing wrong with any of that.

Your most recent comment says a thing or two about correspondence bias, so I'd like to draw your attention to what I find a striking feature of both of your replies to Zvi in this thread: you shift very rapidly from observing a particular (alleged) defect in what he wrote to speculating about how he's thinking badly. So you go straight from "this is a fully general counterargument" to guessing at particular kinds of bias that might be in Zvi's head. He's a motivated skeptic! He isn't giving his true rejection! And you adopt what seems to me (and I think, from what he says in response, also to Zvi) a needlessly accusatory tone.

You could e.g. just have written this: "What do you find still unsatisfactory about Chris's proposed refinement of your definition, and what are you looking for in suggestions for further improvements? What would it take to justify revising the article to use a better definition?". That would have done the same job of soliciting more explicit criteria from Zvi. If you were extra-anxious to point out the logical defect you think you see in what he wrote: "That seems like something one could say to any proposed improvement. What do you find still unsatisfactory [etc.]". What advantage do you see in the much more confrontational approach you took?

(Your recent comment suggests that the answer is something like "By saying such things I hope to make people examine their biases and reduce them". Take a look at what's actually happening here. Does it seem like that's working well?)

So much for the original exchange. Now let's look at your more recent comment. It doubles down on the accusatory, confrontational approach. It takes Zvi's statement that (despite the disapproval he expresses) he isn't downvoting your comment, and casts it as a "threat to downvote", which makes no sense to me at all: downvoting a single comment is not an action major enough to be worth threatening, and Zvi's whole point is that he isn't doing it. I think you may have misconstrued his reason for saying what he said, which (I am guessing, on the basis that it's what I have meant when I've said similar things in the past) is not at all "I didn't downvote you, but I could and you'd better watch out in case I do, bwahahahaa" but "I know I'm saying something negative about what you wrote, and you may suspect I've downvoted you and feel that as a hostile action; but in fact I haven't downvoted you and I'd like you to know that in the hope that it will help our interactions be friendlier than they otherwise might be".

Similarly for the bit about the Sunshine Regiment; the point here is that there is a general policy on LW2 of being nice to one another when there isn't a cogent reason not to, and Zvi's statement that your comment was needlessly hostile could be taken as a suggestion that you be officially censured for it, and so he wanted to make it clear that he doesn't want that. (This one is less obviously not-a-concealed-threat, but I am still 95% confident that it was not intended as anything of the sort.)

And then you start talking about how it would break your heart if you got censured somehow for being needlessly confrontational, and how Less Wrong is your garden. This attempt to tug at your readers' heartstrings looks extremely odd alongside the no-fuzziness-allowed demands you've been making of Zvi. (Not because there's anything wrong with feeling strongly about things, of course. But because "if you do X it will make me sad" is generally an even worse counterargument to X than Zvi's allegedly fully general "I think one can do better than X".)

Take a look at what you've written to Zvi in this thread. Look in particular at all the things that (1) refer explicitly to "opponents" and "enemies" and (2) make uncomplimentary statements or conjectures about Zvi's intellect or character. And then, please, ask yourself the following question: This "way [you] internally refer to enemies and opponents" -- is it actually leading to good outcomes for you? Because I don't think it is. In so far as this thread is any guide, I think it's leading you to adopt an approach to discussion that annoys other people and makes them less, not more, receptive to anything useful you may have to say.

↑ comment by Zvi · 2017-10-04T12:41:12.618Z · LW(p) · GW(p)

You have my full permission to use this exchange if you feel it would be illustrative, and I thank you for asking permission rather than forgiveness. The topic is definitely worthy of more attention.

I also want to make clear that it was not my intent to make any implied threats. Quite the opposite; I was concerned that my reply would be viewed as an attack or call for reaction, and wanted to make it clear I did not want that. Saying "I'm not even going to downvote" was me saying I didn't think it even rose to that level of disagreement, nothing more.

I also think that we have an even bigger issue with karma than I thought, if a downvote as a threat is even a coherent concept. I mean, wow. I knew numbers on things were powerful but it seems I didn't know it enough (I suspect it's one of those no-matter-how-many-times-you-update-it's-not-nearly-enough things, since it's a class of incentives-matter).

Anyway, no offense taken. I am not your enemy in either direction, and I hope we can avoid talking about others as if they are enemies or coming across as if we're thinking that way. I do think you give that impression quite heavily here, even if unintended, and that this is bad.

↑ comment by DragonGod · 2017-10-05T17:15:27.253Z · LW(p) · GW(p)

I also want to make clear that it was not my intent to make any implied threats. Quite the opposite; I was concerned that my reply would be viewed as an attack or call for reaction, and wanted to make it clear I did not want that. Saying "I'm not even going to downvote" was me saying I didn't think it even rose to that level of disagreement, nothing more.

Understood.

I also think that we have an even bigger issue with karma than I thought, if a downvote as a threat is even a coherent concept.

Considering I have -12 karma on both posts (possibly from the same people), I think so. The idea of tying certain features to certain karma levels is pretty scary. If criticism is met with downvotes, then even if you don't care about karma in itself, insomuch as your karma determines what you can and cannot do, you may want to watch what you say.

________________________________________________________________

In favour of the spirit of civilisation, I would like to say a few things:

I don't think of you as an enemy. "Opponent" is an internal label that (in certain circumstances) I apply to everyone other than myself. This does not preclude cooperation.

I may have come across as hostile and accusatory; I apologise. That was not my intention. (I do not apologise for mentioning FGAs and motivated cognition (so perhaps you can file this as an apology that is not really an apology), but I apologise for offense received (unless you find the very suggestion of irrationality offensive, in which case I retract my apology (so once again an apology that is not really an apology, or as I prefer to say a "conditional apology"))). My intention was to be a helpful critic. I was not suggesting that you must change your definition immediately. My suggestions were:

If a better definition is your true rejection, then beware the FGA. Either implement the proposed change, or explicitly state the requirements for a definition you would accept. FGAs are very tempting, and we change our minds less often than we think. Masking your true rejection behind an FGA only exacerbates the problem. Stating explicitly what your criteria are would make your true rejection plainly visible, and destroy the FGA.

More explicitly: If you were indeed holding out for a better definition:

Temporarily implement the proposed change (insomuch as it is better than what you originally proposed).

Explicitly specify what your requirements are for a new definition. This way, you would precommit to accepting a definition that meets your explicit requirements. You wouldn't be able to use an FGA to reject any proposed change, and would be able to overcome motivated cognition.

Irrationality is the default. Overcoming motivated cognition and being rational is an active effort; I stand by this statement. We are aspiring rationalists, and we are not beyond errors. We are overcoming bias, and have not overcome bias. When I point out what I perceive as errors in rationality, it's not meant to be offensive. If my wording makes it offensive, I can optimise that. If you find the concept of being irrational offensive, then I wouldn't even bother optimising wording.

My initial message can be summed up as follows:

The reply you gave can be applied to any proposed change, and thus is a fully general counterargument. Be careful how you use it. FGAs support motivated cognition. If actual quality of the definition is not your true rejection, then you would be a motivated sceptic. With an FGA, you can resist any definition proposed no matter how good it is. It is better to remove temptation than to try and overcome it. Thus, it is better if you remove the temptation that the FGA offers. Two ways to do this are:

Commit to accepting any definition better than the current one.

Explicitly state your criteria for accepting a definition. These criteria must be externally verifiable. This is precommitting to accepting a definition that meets the proposed standards. You'll be able to remove the temptation of the FGA and motivated cognition.

Based on this:

In addition, changing definitions is expensive once you've opened a concept to public discussion. It would in fact be perfectly reasonable to say that yes, Yi is better than X, but it is not better enough yet to justify switching, or that we should consider whether there exists Yj before paying switching costs.

It may be that you find "1" to be an unacceptable choice. I was not aware of that argument when I proposed "1". Personally, I would still go ahead with "1", but I'm not you, and we have different value systems. I have not seen a good argument against "2", and I urge you to enact it.

↑ comment by Zvi · 2017-10-03T21:18:38.665Z · LW(p) · GW(p)

I am happy to say more after having had time to reflect.

I do like the idea of spare resources quite a bit, but it doesn't properly encompass the class of things you can be bounded on. I also think that defining via the negative is actually important here. A person who must wear a suit and tie, or the color blue, lacks Slack in this way, and one who is not so forced has it, but it is odd to think of this as spare resources. In many cases the way you retain Slack is to avoid incentive structures that impose constraints rather than having resources. Perhaps this is simply a case of not knowing what you've got till it's gone, but I think that getting there would prove confusing.

Another intuition that points against spare resources is that you can substitute the ability to acquire resources for resources. Slack can often come simply from the ability to trade, including trade among things not literally traded (e.g. trading time for money for emotional stability etc etc) or the ability to produce. Again, you can call all such things "resources" or even "spare resources" but that would imply a pretty non-useful and non-intuitive definition of spare and resources, that wouldn't help explain the concept very well.

That all does suggest freedom might be a good word but I think it's not right. Certainly Slack implies freedom and freedom requires Slack, but freedom is a very overloaded (and loaded) word that has a lot of meanings that would be misleading. My model of how people think about concepts says that if we use the word freedom in this way, people will pattern match heavily on their current affectations and models of freedom, and won't grok the concept we're pointing towards with Slack as easily. I also think there'd be an instinct to think things of the class "oh, that's just..." and that's a curiosity stopper.

↑ comment by Rob Bensinger (RobbBB) · 2017-10-03T23:52:58.429Z · LW(p) · GW(p)

"I also think there'd be an instinct to think things of the class "oh, that's just..." and that's a curiosity stopper."

This is an important idea (and an important argument in favor of jargon proliferation) that I don't recall having seen presented before explicitly.

↑ comment by Chris_Leong · 2017-10-05T05:51:25.067Z · LW(p) · GW(p)

I don't understand how your definition is different from freedom.

I'm using resource in a very broad sense in that there is something that can be modelled as roughly on a linear scale and that there is some level beneath where bad things happen (often this is 0, but not always). So emotional stability can be thrown into a linear scale in very high level models.

comment by ialdabaoth · 2017-10-04T01:14:06.420Z · LW(p) · GW(p)

I assert that this is also about Slack, but possibly a different kind of Slack:

https://frustrateddemiurge.tumblr.com/post/144927712238/affordance-widths

↑ comment by Raemon · 2017-10-04T03:51:02.721Z · LW(p) · GW(p)

I'd forgotten that this specifically included the "strategize about how to increase your affordance widths" part, which brings it more in line with this post.

↑ comment by ialdabaoth · 2017-10-05T20:44:36.618Z · LW(p) · GW(p)

The thing is, Zvi's post here is about *not losing your affordance width*; it says nothing about how to increase it. Such a post would be highly appreciated.

comment by Czynski (JacobKopczynski) · 2020-05-02T19:03:30.942Z · LW(p) · GW(p)

I think this is a valuable concept to have, but despite thinking about it on and off ever since this was first posted (and reading the Book of the Subgenius) I still don't really understand it well enough to act on it.

comment by moridinamael · 2017-10-01T15:39:43.447Z · LW(p) · GW(p)

I found this post immediately valuable. I appreciated your conciseness. These ideas are simple but not obvious; spending too many words explaining a simple thing makes it seem complicated.

I don't feel that Maya Millennial describes me or anybody I know. People may be in thrall to various different traps of the superego, but by no means is the only common manifestation "social media astroturfing, FOMO, 'premium mediocre something something', thinly veiled middle class trap anxiety". A rich guy becomes slave to his airplane refurbishing hobby just as easily.

comment by Thomas Ambrose · 2017-10-10T05:25:59.079Z · LW(p) · GW(p)

Reminds me of Meditations on Moloch. "Slack" is anything that you have/want/enjoy that you would not need/want/care about if you were optimized for competition. Which is to say, Slack is any resource you could sacrifice to Moloch for an advantage.

Thanks for sharing. I've been thinking about this sort of thing a lot the past few years, and it's nice to have a concept handle that broadly encompasses buffer money/free time/focused attention/etc, instead of referring to each individually.

comment by Brandon_Reinhart · 2017-10-04T03:52:00.744Z · LW(p) · GW(p)

Maya has adopted the goal of Appearing-to-Achieve and competition in that race burns slack as a kind of currency. She's going all-in in an attempt to purchase a shot at Actually-Achieving. Many of us might read this and consider ourselves exempt from that outcome. We have either achieved a hard goal or are playing on hard mode to get there. Be wary.

The risk for the hard mode achiever is that they unknowingly transform Lesser Goals into Greater. The slackful hobby becomes a consuming passion or a competitive attractor and then sets into a binding constraint. When every corner of your house is full of magic cards and you no longer enjoy playing but must play nonetheless, when winemaking demands you wake up early to stir the lees and spend all night cleaning, when you cannot possibly miss a night of guitar practice, you have made of your slack a sacrifice to the Gods of Achievement. They are ever hungry, and ever judging.

This isn't to say you cannot both enjoy and succeed at many things, but be wary. We have limited resources - we cannot Do All The Things Equally Well. Returns diminish. Margins shrink. Many things that are enjoyable in small batches are poisonous to the good health of Slack when taken in quantity. To the hard mode achiever the most enjoyable efforts are often those that beckon - "more, more, ever more, you can be the best, you can overcome, you know how to put in the work, you know how to really get there, just one more night of focus, just a little bit more effort" - and the gods watch and laugh and thirst and drink of your time and energy and enjoyment and slack. Until the top decks are no longer strong, the wine tastes of soured fruit, the notes no longer sound sweet and all is obligation and treadmill and not good enough and your free time feels like work because you have made it into work.

comment by Gunnar_Zarncke · 2017-10-04T11:20:21.745Z · LW(p) · GW(p)

On management you write

Slack in project management is the time a task can be delayed without causing a delay to either subsequent tasks or project completion time. The amount of time before a constraint binds.

I think this is a nice short reference, but a lot lurks behind, because slack in project or process management has a long history and a lot of theory behind it. I think slack in this context can be equated with buffer capacity, at least mostly. Buffers can be good or bad. Toyota saw buffers as bad and invented Just in Time to deal with the consequences. If we follow their insights it is possible to go without much slack and still reap most benefits. But does this translate to private life? Maybe a better or at least more intermediate trade-off is Drum Buffer Rope. It may depend on personal style and situation. I know a few people who plan their life heavily and reap efficiency gains. Are there other insights from production that we could compare?
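For readers unfamiliar with the project-management sense of slack quoted above, here is a minimal sketch of how it is usually computed with the critical path method (where it is called total float). The task names, durations and dependencies are a hypothetical example, not taken from the post; the function name `task_slack` is likewise illustrative. A forward pass finds the earliest each task can start, a backward pass finds the latest it can start without pushing back any successor or the project end, and the difference is the slack.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def task_slack(durations, deps):
    """Slack per task via the critical path method (hypothetical helper).

    durations: {task: duration}; deps: {task: set of prerequisite tasks}.
    Assumes every task named in deps also appears in durations.
    """
    # Prerequisites per task; tasks absent from deps have none.
    preds = {t: set(deps.get(t, ())) for t in durations}
    order = list(TopologicalSorter(preds).static_order())

    # Forward pass: earliest start and finish for each task.
    earliest_start, earliest_finish = {}, {}
    for t in order:
        earliest_start[t] = max((earliest_finish[p] for p in preds[t]), default=0)
        earliest_finish[t] = earliest_start[t] + durations[t]
    project_end = max(earliest_finish.values())

    # Successor lists, needed for the backward pass.
    succs = {t: [] for t in durations}
    for t, ps in preds.items():
        for p in ps:
            succs[p].append(t)

    # Backward pass: latest start that delays neither a successor nor the project.
    latest_start = {}
    for t in reversed(order):
        latest_finish = min((latest_start[s] for s in succs[t]), default=project_end)
        latest_start[t] = latest_finish - durations[t]

    # Slack: how long a task can slip before a constraint binds.
    return {t: latest_start[t] - earliest_start[t] for t in durations}


print(task_slack({"A": 3, "B": 2, "C": 4, "D": 1},
                 {"B": {"A"}, "C": {"A"}, "D": {"B", "C"}}))
# {'A': 0, 'B': 2, 'C': 0, 'D': 0}
```

In this toy project, B can slip by 2 units before it delays D; A, C and D have zero slack, so they form the critical path and any delay to them delays the whole project.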

↑ comment by Benquo · 2017-10-04T16:39:59.512Z · LW(p) · GW(p)

Note that the production analogy assumes a level of value-alignment (or easiness of value alignment) that's not necessarily present in just living your life.

If I recall correctly, Toyota is the company that installed the pull-cord anyone on the assembly line could pull to halt the process, which seems like quite a lot of slack in some sense, without being a buffer in the sense I take you to mean.

There's a sense in which buffer that's not being used productively is more like clutter than slack. These seem worth distinguishing between, since they're very different kinds of having more than enough.

↑ comment by Gunnar_Zarncke · 2017-10-04T21:16:40.717Z · LW(p) · GW(p)

[a] buffer that's not being used productively is more like clutter than slack.

I like this specific observation and totally agree. Buffers can be slack, but there are definitely other ways. The key seems to be granting options - like with the pull-cord.

comment by Matt Goldenberg (mr-hire) · 2017-10-03T20:54:21.573Z · LW(p) · GW(p)

The opposite of slack would be... deliberate constraints? Which I find very valuable. In addition to the value of deliberate constraints- Parkinson's law is a real thing, as are search costs, analysis paralysis, eustress (distress's motivating cousin). I find when I'm structured and extremely busy, I'm productive and happy, but when I have slack, I'm not.

Could this be a case of Reversing the advice you get?

↑ comment by JacekLach · 2017-10-04T10:39:19.363Z · LW(p) · GW(p)

Yes, this post was very useful as advice to reverse to me. I think it possible now that one of the biggest problems with how I'm living my life today is optimising too hard for slack.

Low-confidence comment disclaimer; while I've had the concept pretty much nailed down before, I never before thought about it as something you might have too much of. After reading this post I realised that some people do not have enough slack in their life, implying you can choose to have less or more slack, implying it's possible to have too much slack.

I don't have abstract 'this is what too much slack looks like' clearly defined right now, but one thing resonates from my own experience. I often find myself with free time, and 'waste it away'. I don't really do anything on most weekends. Having more constraints as guidance for behaviour in free time could likely remediate that; but I seem to be very good at talking myself out of any recurrent commitments, saying that they would reduce my freedom/flexibility/slack.

At the same time, it seems to me that I'm happiest, most 'alive', most in the 'flow', in situations with exactly the kind of binding constraints this post talks of avoiding. The constraints focus you on the present, on the very moment, on being. For me this is clearest in sailing regattas - a clear purpose that acts as a binding constraint (to go as fast as possible while staying safe - a safety margin does not for slack make, since you are not willing to ignore crossing it), consuming all your attention (at least during the time you're responsible for the ship, and often more).

I suppose one can stretch the metaphor and say that having no slack on too many dimensions is likely to squash you; but having slack everywhere leaves you floating around aimlessly. Keeping most constraints slack and choosing only a couple aligned ones to bind against is possibly a way to find purpose.

↑ comment by Gunnar_Zarncke · 2017-10-04T21:23:14.374Z · LW(p) · GW(p)

I think we have to distinguish slack from freedom or indeed total absence of constraints. When I was younger I was fond of saying that freedom is overrated, because all this striving for freedom comes at significant costs of its own. Deliberately limiting oneself can indeed create some slack. For example, I don't have a driver's license (initially for environmental reasons), which might look like a lack of freedom to go where I want. But I noticed that this doesn't take notable options away (I live in a big city with good public transportation). I and my environment adapt and if I really need a car I can take a taxi from all the saved car costs. Maybe not the best example to illustrate this, but the best I currently have on offer :-)

comment by Dr_Manhattan · 2017-09-30T22:56:00.539Z · LW(p) · GW(p)

Related (I think): if you're early in your career, use some of the government-mandated slack (aka weekends) to differentiate yourself and build up human capital. If you're "a programmer" and are perceived as a generic resource, the system will often drive you to compete on few visible dimensions - like hours. If you have unique and scarce skills you have bargaining power to have more Slack and your employer might even pay for you to gain more human capital. (I still continue to reinvest part of my slack time into my career, though I a) like what I do and learning more of it b) don't really have to)

↑ comment by tristanm · 2017-10-01T00:07:49.398Z · LW(p) · GW(p)

Maya Millennial almost certainly already believes this. Instagram would be replaced by Github, of course, and maybe a technical blog. The main thing being that she is basically *required* to spend nearly all of her extra time on developing these external markers of expertise. But also that she is driven by the belief that these things make her more likely to acquire Slack in the future.

↑ comment by Dr_Manhattan · 2017-10-01T00:39:42.712Z · LW(p) · GW(p)

Agree, this could be a trap, e.g. people feel Github is a required resume item now. I think the strong indicator variable here is whether the thing you're doing is the generally socially acceptable thing (possibly a trap) vs something differentiated.

I personally got into the ML wave before it was cool and everyone in the world wanted to be a Data Scientist. Back then it was called "Data Mining" and I decided to do it because I saw being a SWE was intellectually a dead end and wanted to do more mathy things in my job. The generalizable part of this is following your curiosity towards things that make sense but are not yet another step on the treadmill.

↑ comment by tristanm · 2017-10-01T01:00:44.081Z · LW(p) · GW(p)

It may be tough to know what the next stepping stone is, in the sense of investing in skills that will be important in the future but aren't super hyped today. And also skills that will be relevant for a long time. I have a Generation X friend who essentially has this problem - despite having over a decade of programming experience, he essentially has to start over from scratch if he wants to be competitive in the job market again. While financially stable I'm sure he's still looking for something that is intellectually stimulating and fulfilling. But he also believes (rightly in my opinion) that he's earned a lot of Slack over the many years of his career. Therefore he's basically forced into Maya's situation, but at a serious disadvantage due to where he's invested his Slack already (like having a family).

comment by the gears to ascension (lahwran) · 2017-09-30T12:03:20.760Z · LW(p) · GW(p)

Whoa this is like The Problem In My Life Right Now (tm)

comment by NancyLebovitz · 2022-01-07T16:16:08.989Z · LW(p) · GW(p)

Slack: Getting Past Burnout, Busywork, and the Myth of Total Efficiency by Tom DeMarco

Another book: Slack (unallocated time) is essential for change, learning, and even doing things well.

I'm pretty sure this is the book with the description of what happens when two companies that don't do the work to write good contracts attempt to deal with each other.

comment by habryka (habryka4) · 2017-10-31T05:27:52.636Z · LW(p) · GW(p)

Our latest font changes made this post a bit harder to read, so I cleaned it up and converted it to our editor.

comment by whpearson · 2017-10-05T20:16:45.316Z · LW(p) · GW(p)

For some reason I remembered "In praise of Idleness" by Bertrand Russell. Seems relevant, although not entirely the same. Some choice quotes.

Another disadvantage is that in universities studies are organized, and the man who thinks of some original line of research is likely to be discouraged. Academic institutions, therefore, useful as they are, are not adequate guardians of the interests of civilization in a world where everyone outside their walls is too busy for unutilitarian pursuits.

In a world where no one is compelled to work more than four hours a day, every person possessed of scientific curiosity will be able to indulge it, and every painter will be able to paint without starving, however excellent his pictures may be. Young writers will not be obliged to draw attention to themselves by sensational pot-boilers, with a view to acquiring the economic independence needed for monumental works, for which, when the time at last comes, they will have lost the taste and capacity. Men who, in their professional work, have become interested in some phase of economics or government, will be able to develop their ideas without the academic detachment that makes the work of university economists often seem lacking in reality. Medical men will have the time to learn about the progress of medicine, teachers will not be exasperatedly struggling to teach by routine methods things which they learnt in their youth, which may, in the interval, have been proved to be untrue.

comment by tristanm · 2017-10-01T04:06:57.075Z · LW(p) · GW(p)

I think a nice, short definition of Slack would be, "A small gradient of the cost function in the vicinity of the optimum." In other words, the penalty for making sub-optimal decisions is not very high, because the region of acceptable behaviors around the best one is fairly large.

And yet here we are, where the penalties for sub-optimal decisions are indeed quite high. The obvious question is, "How did it get to be this way?" Well, typically non-smooth cost functions indicate more complexity, so in some sense the decision landscape has gotten more complicated than it used to be. Intuitively, this would seem to be because there is some sort of adversarial process going on where everyone's strategies have adapted to become more effective.

The narrative that I usually tell myself to explain this goes something like, at some point along the way, firms realized (especially firms that accomplish cognitively demanding tasks) that the majority of their value was being created by a small number of extremely talented people. But these people were not just talented, but also mono-maniacal in their dedication to their jobs. They essentially filled most of their waking hours doing one thing, because they genuinely love it (and they love being hyper-focused). My assumption is that the ratio of the value these people created for their firms over the cost of hiring them was much greater than one. So firms realized that they could cut down on costs heavily by reducing overall hiring and focusing on locating just these people. Therefore they start to look for the signs of these people: Heavy activity outside of work dedicated to their field, a genuine love of it (maybe shown by writing articles, blogs or textbooks, giving talks, etc.), and willingness to work long hours. People adapt by trying to signal these things too even if it means cutting into the time they spend doing things they actually enjoy, but the job market is much tighter now because firms have reduced hiring. In short, firms are winning, people are losing.

But it still leaves the question of why the hyper-talented people are being paid less than they're worth? That seems to be the crux of it all, some kind of market inefficiency that allows firms to win big by getting super-people at a bargain. My naive guess is that it has something to do with the employees themselves, by the nature of their personalities they are actually willing to work for less than they are worth, but I am pretty uncertain about that. I would appreciate any answers from someone with more economic expertise if they have some ideas.

↑ comment by Zvi · 2017-10-01T17:30:11.155Z · LW(p) · GW(p)

My model of this problem is that firms cannot pay the hyper-talented what they are worth because the other employees would not stand for it. We have constructed norms whereby hyper-productive CEOs and other executives can get pay that is many times that of their employees, and still this causes massive resentment and even calls for government intervention. If programmers that were 100x as valuable as the average got even 10x the pay, the other employees would strike or riot. Equity does seem to be one way around this trap in some circumstances.

I have personal experience with this. I got [company I used to work for] to hire a very good programmer for a contract, he got the job done ahead of schedule and made us tons of money, and we couldn't renew for another project, because other programmers found out what he was paid and threatened to quit. So he took a higher-paying job elsewhere.

↑ comment by tristanm · 2017-10-02T18:04:25.644Z · LW(p) · GW(p)

Implicitly I've been assuming that we're talking about tech workers here, but that may not be totally fair. I should probably extend this model to include most millennials that are highly career-focused, probably based in major coastal cities. In which case, you could probably extend this to sales/marketing and finance people as well.

I think if we do this, then it may be true that the "hyper-talented" people are still the issue here, but it could also be due to more general labor surplus issues as well. Then you get a lot of highly educated, highly motivated young people crowding into major hubs into a few high paying fields, under the belief that these are the primary, if not the only pathways to success.

Then the question becomes, is this actually true? Are they correctly identifying where their best chance at happiness lies, and it just happens that there truly are fewer opportunities for financial stability for most people these days?

If yes, then I think we could probably reduce the "slack" problem to general economic problems, but I'm not sure if that's the direction you were intending to go with these posts. One thing I don't know is whether or not Maya is essentially acting rationally under her incentive structures, and the incentive structures are the main culprit, or whether she is valuing certain things too highly, such as prestige and social approval.

↑ comment by Zvi · 2017-10-03T20:48:36.756Z · LW(p) · GW(p)

My model says that Maya is acting the way humans act in similar situations, so whether or not her actions are "rational," the system is still the problem. Maya's actions seem like they could be rational for certain utility functions, and you can't play such games by halves (hence the lack of Slack), but my guess is that most Mayas are making a mistake playing the game at all. She should probably quit.

It does seem like financial stability is in practice much harder to achieve than it used to be, for most people. Especially when student loans and health care get involved. There's less margin for error even with a "good" job and good jobs are harder to find in a pinch for most people. It is unclear to me how much of that is increased needs (letting consumerism, signaling and positional goods eat all your Slack, general high standards, and not putting up with boring or physically demanding work like people used to) versus how much is a real crisis (student loans, health insurance, child care and other forced expenses, lack of jobs with good pay and job security, being treated like dirt due to that, and taking of Slack in order to control people).

For programmers, especially top programmers, this isn't an excuse. If I'm a superstar programmer who is worth 100x a normal programmer, I can't get 100x or even 10x, but I can get a new job any time I want and likely earn 1.5x with good perks. That really should be good enough to have Slack.

↑ comment by whpearson · 2017-10-03T22:31:22.037Z · LW(p) · GW(p)

I like to look at energy usage per capita over time as an indicator of whether we can do more stuff than we could before, e.g., whether people can own cars. Technology changes this a bit: it makes cars more efficient and cheaper to make, so more people can have cars. But looking at money, etc., makes things too easy to fudge.

So, taking world energy consumption and population figures, energy consumption per capita was 64 million BTU per person in 1980 and 73 million BTU per person in ~2014. So things are getting better energy-wise for the globe. I won't try to break it down by country to see whether things are getting better for the US; things get complicated via global trade (exporting energy consumption, etc.). You could expect, though, that most of the increase in energy consumption during that period happened outside the US (Asia had 300% energy consumption growth), so there might be a net negative in the US.
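To put the two global figures in perspective, here is a small sketch of the arithmetic. It assumes "~2014" means exactly 2014 and uses only the two per-capita numbers quoted in the comment; the function names are illustrative.

```python
def percent_change(old: float, new: float) -> float:
    """Total percentage change from old to new."""
    return (new - old) / old * 100


def annualized_growth(old: float, new: float, years: int) -> float:
    """Constant yearly growth rate (in percent) that takes old to new over `years`."""
    return ((new / old) ** (1 / years) - 1) * 100


# Per-capita energy use in million BTU per person, as quoted above.
total = percent_change(64, 73)                    # 14.0625% overall
yearly = annualized_growth(64, 73, 2014 - 1980)   # roughly 0.39% per year
print(f"{total:.1f}% total, {yearly:.2f}% per year")
```

A 14% rise over roughly 34 years works out to well under half a percent per year, which fits the comment's caution that gains elsewhere could mask a decline in any one country.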

It is late, so I'm not going to continue. I think we have rising inequality from various causes, so even if overall energy usage increased, people may feel worse off.

↑ comment by bfinn · 2022-04-26T16:36:38.755Z · LW(p) · GW(p)

Or if you’re paid by results rather than by the hour, as a contractor, you can earn the same in less time. Or even as an employee, you can just be paid to waste most of your time (though this is fairly unsatisfactory). E.g., a friend of mine worked with an excellent programmer who would do nothing for months (literally spending most of his time in the pub or messing around with things that interested him) and occasionally spend a weekend programming furiously to produce what was presented to the (crappy) management as what the entire team had been working on for months.

↑ comment by whpearson · 2017-10-01T05:22:53.724Z · LW(p) · GW(p)

There was some furore a while back about wage fixing between the big tech companies. Whether it has been fixed or not is another matter.

I think that sort of fixing is possible because a programmer's value is based on the scale they work at. If you fix a bug that affects 1 million people per year and brings in 10 cents more per transaction, you have earned the company $100k per year. If your company only has 10k customers, then the same fix in the same time is worth only $1k per year. So you are worth different amounts to different businesses. As there are only so many very-high-scale businesses, they can collude.
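The scale argument is plain multiplication. A minimal sketch, assuming (as the comment implicitly does) one transaction per affected customer per year and a flat 10-cent gain per transaction; the function name is hypothetical. It works in integer cents to avoid floating-point rounding.

```python
def annual_value_dollars(transactions_per_year: int, gain_cents: int) -> int:
    """Yearly value (whole dollars) of a fix adding `gain_cents` to every transaction."""
    total_cents = transactions_per_year * gain_cents
    return total_cents // 100


big_firm = annual_value_dollars(1_000_000, 10)   # the 1-million-customer case
small_firm = annual_value_dollars(10_000, 10)    # the 10k-customer case
print(f"${big_firm:,} vs ${small_firm:,} per year")
```

Because the value is linear in transaction volume, the same hour of work is worth 100 times as much at the firm with 100 times the customers, which is the comment's point about programmers being worth different amounts to different businesses.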

Similar things might apply to lawyers, getting an X% better deal? I wonder at the social factors involved though.

↑ comment by the gears to ascension (lahwran) · 2017-10-01T17:24:42.224Z · LW(p) · GW(p)

I think a nice, short definition of Slack would be, "A small gradient of the cost function in the vicinity of the optimum." In other words, the penalty for making sub-optimal decisions is not very high, because the region of acceptable behaviors around the best one is fairly large.

This doesn't seem right to me. I think you could describe slack formally in terms of machine learning, but I don't think this is a correct description of it. I was going to propose a better one, but I don't know what I think it is yet.
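One toy way to read the quoted definition (a "small gradient of the cost function in the vicinity of the optimum"), sketched under loudly stated assumptions: decisions are points on a line, the two cost functions are made-up quadratics with the same optimum, and "acceptable" means within a fixed tolerance of the best cost. It only illustrates the proposed definition; whether this captures Slack is exactly what the reply disputes.

```python
def acceptable_fraction(cost, candidates, tolerance):
    """Fraction of candidate decisions whose cost is within `tolerance` of the best one."""
    costs = [cost(x) for x in candidates]
    best = min(costs)
    return sum(1 for c in costs if c - best <= tolerance) / len(costs)


def shallow_cost(x: float) -> float:
    # Hypothetical cost with a small gradient near its optimum at x = 0.
    return 0.1 * x * x


def steep_cost(x: float) -> float:
    # Hypothetical cost with a large gradient near the same optimum.
    return 10 * x * x


# Candidate decisions: x from -1.0 to 1.0 in steps of 0.01 (201 points).
candidates = [i / 100 for i in range(-100, 101)]

shallow = acceptable_fraction(shallow_cost, candidates, tolerance=0.01)  # 63/201, about 0.31
steep = acceptable_fraction(steep_cost, candidates, tolerance=0.01)      # 7/201, about 0.035
print(shallow, steep)
```

Under this reading, the shallow cost leaves about nine times as many acceptable choices as the steep one, which is the sense in which the quoted definition says sub-optimal decisions are cheap.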

comment by michael_vassar2 · 2017-10-06T22:08:32.265Z · LW(p) · GW(p)

My guess is that 'Slack' reads differently in social and material reality. Maya is, in a sense, signaling infinite slack, but in a manner which actually consumes any possible reserve of slack. I think a careful reading of Rao shows that sacrificing the thing for the representation of the thing is what he refers to as 'creating social capital'.

comment by habryka (habryka4) · 2017-10-01T20:10:46.129Z · LW(p) · GW(p)

Featured (nominated by Elizabeth): well-written, interesting, useful concept

↑ comment by Conor Moreton · 2017-10-01T20:25:18.890Z · LW(p) · GW(p)

Just a data point: I've yet to find a Zvi post enjoyable to actually read. When I make myself slog through them, there's always valuable and interesting concepts, and I'm usually better off for having put forth the effort, and so I upvote and am grateful to Zvi for sharing. But at the same time, the experience is one of trying to make sense of postmodernist slam poetry, or having to consciously sort out which words are jargon and which words are filler and which words just mean what they say, or trying to parse the statements of someone on a half-dose of mushrooms. It always feels like things could've been 50% more straightforward while still being aesthetically unique and interesting. It sort of feels like the writing doesn't try to optimize for limited working memory, maybe?

(All of this really truly just meant for data/feedback; I have no desire to communicate any sort of a demand-for-change and don't claim that it's "wrong" in any way except for me personally. It's just that, in Zvi's shoes, I would want to know if I were imposing a cost on some readers. Doesn't mean I wouldn't continue to impose it, if that were the right move.)

↑ comment by Zvi · 2017-10-02T17:14:24.523Z · LW(p) · GW(p)

Thank you for saying it. Feedback of this type is hard to get and quite valuable, especially given you grok the posts after doing the work. The concept that I might not be accounting for limited working memory is especially new and interesting. I've been making an effort to make the jargon/non-jargon distinction clear, but it's a known issue and I don't love my solutions so far. There's tension with brevity; I'm trying hard to keep things short.

I don't think you're the only person who has this issue, so I need to fix it. Tsuyoku Naritai. If you're willing I'd love to hear more (here or elsewhere), the more detailed and concrete the better.

↑ comment by Neuroff · 2017-10-05T01:35:57.816Z · LW(p) · GW(p)

I have nearly the opposite experience, FWIW.

The posts are intuitive; I flow through the text without any jarring.

I think the trick might be (I'm making this up; I don't know what anyone is actually doing) not to try to analyze each line or make sure you've understood each sentence, but to let yourself read all the words and then, at the end, try to notice whether you feel any different about certain concepts, situations, or beliefs.

My guess is that people have different default methods of absorbing or processing text. If Zvi exists on one end of a spectrum, it would be nice to have whatever is at the opposite end. But I don't want to lose the benefits of having what is, to me, a very enjoyable and easy reading experience. (But I also want to accommodate other processing types.)

For an example of a similar writing style (which I posit is worse than Zvi's but has similar properties), see the book Finite and Infinite Games by James Carse.

↑ comment by Raemon · 2017-10-02T00:19:31.069Z · LW(p) · GW(p)

Heh. I'm able to understand Zvi's posts just fine and find them entertaining, but I think this is largely because I've spent a lot of time talking to him in person. I hear them in his voice with a particular cadence which comes with a sense of familiarity and in-jokiness.

It is interesting how different rationalist bloggers compare in terms of writing vs speaking. Scott and Eliezer both feel very different in essay form than in person. Zvi and Anna Salamon pretty much write exactly the same way they talk.

↑ comment by Chris_Leong · 2017-10-02T00:03:48.191Z · LW(p) · GW(p)

Hmm, I thought that Out to Get You was relatively clear, but I suppose that was because it was describing a concept that was easy to grok, not because it was written any differently. For that article there wasn't any need for a precisely specified definition, but for more difficult concepts, definition by examples has its limits. Even more than that, if someone can write down an explicit definition, it is more likely that they will also be able to pick good examples to illustrate their point.

comment by benjaminikuta · 2022-12-25T04:20:00.161Z · LW(p) · GW(p)

Does anyone have a link to the LessWrong slack?

comment by glennonymous · 2019-06-23T22:36:50.055Z · LW(p) · GW(p)

This is pretty amazing — Zvi (winner of my one box award) and I independently invented the exact same concept and used the same word to describe it. I assume Zvi invented it first and deserves the credit, yay Zvi!!! I believe Danny Reeves may be able to verify this FWIW. (Danny, it’s a concept in Optimojo.)