The unspoken but ridiculous assumption of AI doom: the hidden doom assumption

post by Christopher King (christopher-king) · 2023-06-01T17:01:49.088Z · 1 comment


Epistemic Status: Strawman (but maybe also Steelman?)

Advocates for AI existential safety (a.k.a. "AI doomers") have long been fear-mongering about AGI. Reasonable people have long pointed out how ridiculous the existence of AGI would be. The doom position has many arguments for how AGI could destroy humanity, but they all rely uncritically on the same unempirical, unproven, unfalsifiable, sci-fi-ish assumption:

The hidden doom assumption: The future is something which exists.

Indeed, most people who have thought about and accepted the hidden doom assumption also accept AI doom. But rarely do they spell this assumption out. Although it is clearly laughable, I will now proceed to demolish the doom position by tearing apart this assumption. It is sad that people can be so scared of a hypothetical concept.

Philosophical naivety

The position that the future exists, known as eternalism, is widely regarded as philosophically naive. Quoting a famous philosopher and historian:

In the vast, beguiling constellation of human intellectual endeavors, few enigmas are as tantalizing as the concept of 'the future'. Yet, I dare assert, dear reader, it is a mere illusion, a phantasm conceived within the human propensity for forward-thrusting ambition. Harken to the sage words of Heraclitus, who postulated that "no man ever steps in the same river twice, for it's not the same river and he's not the same man." Indeed, the very fabric of time, like the ceaseless flux of Heraclitean river, is one of relentless and unrepeatable change. Thus, one might indeed conclude, borrowing the lofty wit of Hegel, that the future is but an "Unhappy Consciousness", a state of desire for an entity that does not yet, and can never truly exist.

One may counter-argue, armed with the deceptively empirical utterances of that notorious rationalist Descartes - "cogito ergo sum" - that the future exists in anticipation. Yet, this line of thought is patently absurd, redolent with the absurdity of Sartre's existential angst or Camus' 'Sisyphus' futilely heaving his boulder. For if we contend that the future exists in potentiality, we step upon the slippery slope of Zeno's paradoxes, perpetually dividing time into smaller and smaller fractions, ad infinitum. Alas, it is the very nature of the future, as the insightful Nietzsche succinctly put, to be "an ocean of infinite tomorrows," each tomorrow forever dancing just beyond the reach of our grasp. Thus, to postulate that the future 'exists' is tantamount to chasing Kierkegaard's metaphorical "shadow-self," a quest as ridiculous as it is fruitless. Hence, we should liberate ourselves from the shackles of such temporal illusions, and immerse ourselves, as Epicurus advises, in the pleasures of the present, the only realm of existence we can veritably know. - GPT-4

I have hopefully proved to all the philosophers in the audience that the hidden doom assumption is ridiculous, and thus that we have no need to fear AGI. The rest of this post is aimed at the layman.

Unempiricalness and unfalsifiability

An even graver issue is the lack of scientific evidence for a supposed "future". Even worse, there is no way to falsify it either, since, according to futurists, we cannot observe the future. In fact, by Newton's flaming laser sword:

What cannot be settled by experiment is not worth debating.

So we shouldn't even discuss the future (another mistake made by AI doomers). In fact, the post I am writing now is a misguided exercise; I would delete it, but that wouldn't make a difference, since there are no future people to read it anyway.

Humanity's obsession with the future

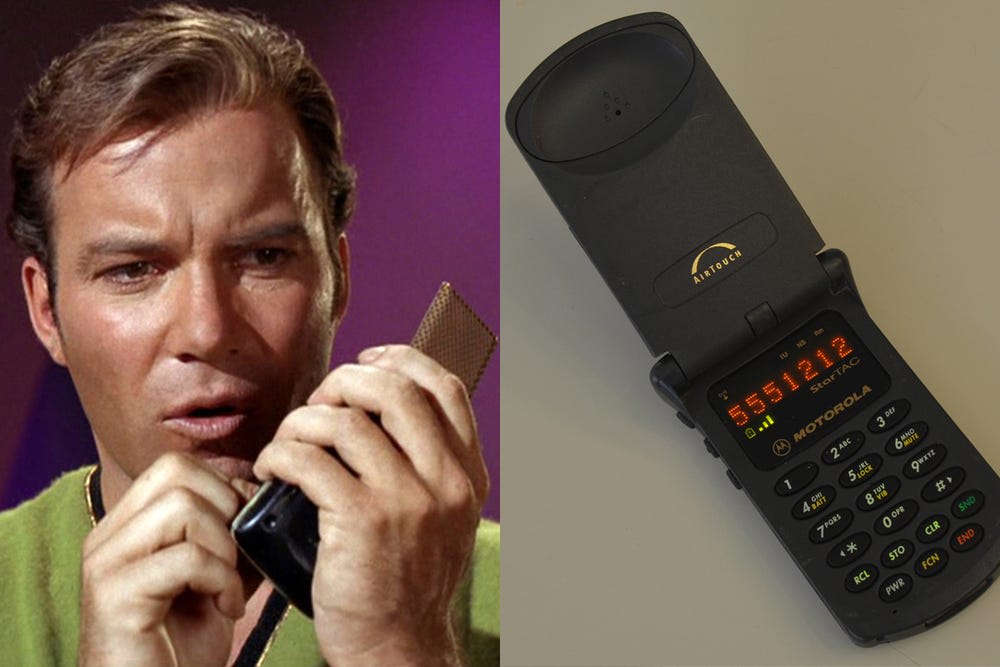

Since ancient times, many people have claimed to be able to see the future. They are all seen as quite silly in retrospect.

Later, science fiction realized the narrative potential of these ideas and turned them into a form of storytelling. Unfortunately, some people took these stories too seriously.

Later still, people seeking to rationalize their belief in the future created a weird pseudo-religion that attempted to reconcile this belief with their inability to see it. Its practice goes roughly as follows:

- For each idea in your head, randomly make up a number that feels "subjectively correct". These numbers are connected to gambling, which makes sense since the followers of this religion are all chronic gambling addicts.

- Look around and use math plus more guessing to change these numbers (why even bother with the math if the numbers are made up anyway?).

- Think more about gambling, specifically lotteries.

- Now you can magically influence the future.

Futurism as having deep connections to the business elite

It is well known that investors are superstitious. One of these superstitions is the belief in the future.

For whatever reason, the stock market still functions (as far as I understand economics, that's mostly just a giant invisible hand at work), but some investors try to apply the concept of the future outside of investing and impose it on the rest of us!

This all proves that the future must be fake and that the elites are just scheming to make us think it is real.

AI doom demolished

Is the existence of the future necessary for AI doom? Or am I making a false assumption?

Name any one of those supposedly false allegedly required assumptions, please; I will either show that it isn't required (probably) or explain how we know it to be true (less likely). - @ESYudkowsky

The answer is no. We can clearly see that Yudkowsky acknowledges his reliance on the hypothetical concept:

There is no narrative that current AI models pose an existential threat to humanity, and people who pretend like this is the argument are imagining convenient strawmen to the point where even if it's technically self-delusion it still morally counts as lying. - @ESYudkowsky

Checkmate, doomers.

1 comment


comment by Davidmanheim · 2023-06-01T20:17:47.865Z

You missed April 1st by 2 months...