The Case for Overconfidence is Overstated

post by Kevin Dorst · 2023-06-28T17:21:06.160Z · 13 comments

This is a link post for https://kevindorst.substack.com/p/the-case-for-overconfidence-is-overstated

Contents

- The Empirical Puzzle
  - 1) Intervals are insensitive to confidence level
  - 2) People are less than 90%-confident in their own 90%-confidence intervals
  - 3) People are more overprecise when they give intervals than estimates
- The Accuracy-Informativity Tradeoff
- Resolving the Puzzle
  - 1) Intervals are insensitive to confidence level
  - 2) People are less than 90%-confident in their own 90%-confidence intervals
  - 3) People are more overprecise when they give intervals than estimates
- So is this rational?
- What next?

(Written with Matthew Mandelkern.)

TLDR: When asked to make interval estimates, people appear radically overconfident—far more so than when their estimates are elicited in other ways. But an accuracy-informativity tradeoff can explain this: asking for intervals incentivizes precision in a way that the other methods don’t.

Pencils ready! For each of the following quantities, name the narrowest interval that you’re 90%-confident contains the true value:

The population of the United Kingdom in 2020.

The distance from San Francisco to Boston.

The proportion of Americans who believe in God.

The height of an aircraft carrier.

Your 90%-confidence intervals are calibrated if 90% of them contain the true value. They are overprecise if less than 90% contain it (they are too narrow), and underprecise if more than 90% do.

We bet that at least one of your intervals failed to contain the true value.[1] If so, then at most 75% of your intervals contained the correct answer, making you overprecise on this test.
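As a concrete illustration of these definitions, here is a minimal Python sketch that scores a batch of interval answers. The function names, the example intervals, and the example true values are all made up for illustration (they are not the quiz answers).

```python
def hit_rate(intervals, truths):
    """Fraction of (low, high) intervals that contain the corresponding true value."""
    hits = sum(low <= truth <= high for (low, high), truth in zip(intervals, truths))
    return hits / len(truths)


def classify(rate, target=0.90):
    """Label a batch of target-level intervals by comparing its hit rate to the target."""
    if rate < target:
        return "overprecise"   # too narrow: fewer hits than the stated confidence
    if rate > target:
        return "underprecise"  # too wide: more hits than the stated confidence
    return "calibrated"


# Hypothetical quiz: four made-up intervals and four made-up true values.
intervals = [(60, 80), (2000, 3000), (60, 75), (100, 200)]
truths = [70, 2500, 90, 300]

rate = hit_rate(intervals, truths)
print(rate, classify(rate))  # 0.5 overprecise
```

With only four questions, a hit rate can only be 0%, 25%, 50%, 75%, or 100%, so a single miss already drops you below 90%.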

You’re in good company. Overprecision is one of the most robust findings in judgment and decision-making: asked to give confidence intervals for unknown quantities, people are almost always overprecise.

The standard interpretation? People are systematically overconfident: more confident than they rationally should be. Overconfidence is blamed for many societal ills—from market failures to polarization to wars. Daniel Kahneman summed it up bluntly: “What would I eliminate if I had a magic wand? Overconfidence.”

But this is too quick. There are good reasons to think that when people answer questions under uncertainty, they form guesses that are sensitive to an accuracy-informativity tradeoff: they want their guess to be broad enough to be accurate, but narrow enough to be informative.

When asked who’s going to win the Republican nomination, “Trump” is quite informative, but “Trump or DeSantis” is more likely to be accurate. Which you guess depends on how you trade off accuracy and informativity: if you just want to say something true, you’ll guess “Trump or DeSantis”, while if you want to say something informative, you’ll guess “Trump”.

So accuracy and informativity compete. This competition is especially stark if you’re giving an interval estimate. A wide interval (“the population of the UK is between 1 million and 1 billion”) is certain to contain the correct answer, but isn’t very informative. A narrow interval (“between 60 and 80 million”) is much more informative, but much less likely to contain the correct answer.

We think this simple observation explains many of the puzzling empirical findings on overprecision.[2]

The Empirical Puzzle

The basic empirical finding is that people are overprecise, usually to a stark degree. Standardly, their 90%-confidence intervals contain the true value only 40–60% of the time. They are also almost never underprecise.

In itself, that might simply signal irrationality or overconfidence. But digging into the details, the findings get much more puzzling:

1) Intervals are insensitive to confidence level

You can ask for intervals at various levels of confidence. A 90%-confidence interval is the narrowest interval that you’re 90%-confident contains the true value; a 50%-confidence interval is the narrowest band that you’re 50%-confident contains it, etc.

A standard finding is that people give intervals of very similar width regardless of whether they’re asked for 50%-, 90%-, or even 99%-confidence intervals. For example, this study found that the widths of 99%-confidence intervals were statistically indistinguishable from those of 75%-confidence intervals.
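To see why similar widths are puzzling, here is a short Python sketch that assumes (for illustration only) that someone's beliefs about a quantity are normally distributed. For a symmetric, unimodal distribution like this, the narrowest interval at a given level is the central one, so its width scales with the matching z-score.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def central_width(level, sd=1.0):
    """Width of the central `level` interval of a normal belief with standard deviation `sd`."""
    z = STANDARD_NORMAL.inv_cdf(0.5 + level / 2)
    return 2 * z * sd


base = central_width(0.50)
for level in (0.50, 0.75, 0.90, 0.99):
    ratio = central_width(level) / base
    print(f"{level:.0%} interval is {ratio:.2f}x as wide as the 50% interval")
```

Under this assumption a genuine 99% interval should be almost four times as wide as a 50% interval, and more than twice as wide as a 75% one.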

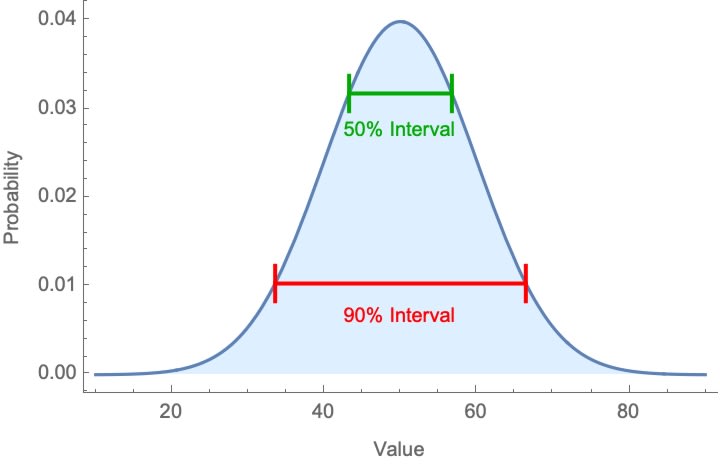

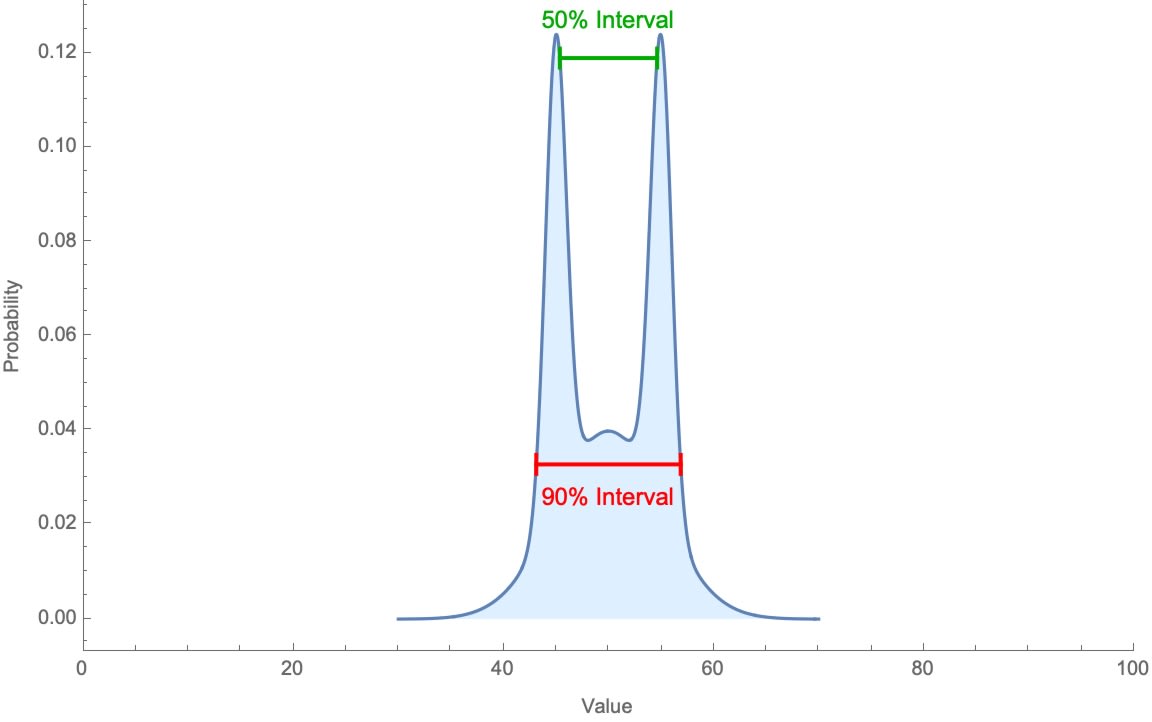

This is puzzling. If people are giving genuine confidence intervals, this implies an extremely implausible shape to their probability distributions—for instance, like so:

2) People are less than 90%-confident in their own 90%-confidence intervals

In a clever design, this study asked people for ten different 90%-confidence intervals, and then asked them to estimate the proportion of their own 90%-confidence intervals that were correct. If they were giving genuine confidence intervals, they should’ve answered “9 of 10”.

Instead, the average answer was 6 of 10. That indicates that people don’t understand the question (“What’s your 90%-confidence interval?”), and in fact are closer to 60% confident in the intervals that they give in response to it.

3) People are more overprecise when they give intervals than estimates

The elicitation method we’ve used so far is the interval method: asking people to name the interval they’re (say) 90%-confident contains the true value.

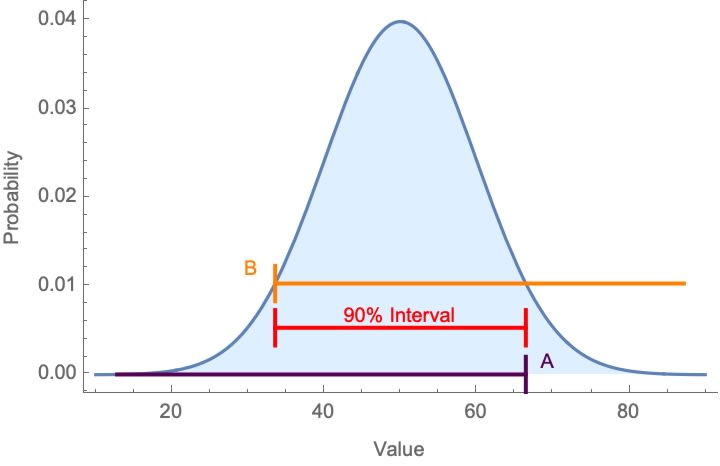

But another way to elicit a confidence interval is the two-point method: first ask people for the number B that they’re exactly 95%-confident is below the true value, and then for the number A that they’re exactly 95%-confident is above the true value. The interval [B,A] is then their 90%-confidence interval.

These two methods should come to the same thing.[3]
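A quick numerical check of that claim, sketched in Python under an assumed normal belief (one example of the unimodal, centrally peaked distributions footnote 3 has in mind). The mean and standard deviation are hypothetical, and the grid search is just one simple way to find the narrowest interval.

```python
from statistics import NormalDist

# Hypothetical belief about the UK population, in millions (illustrative numbers only).
belief = NormalDist(mu=67.0, sigma=5.0)

# Two-point method: B is the value you're 95% confident the truth is above,
# A is the value you're 95% confident the truth is below.
B = belief.inv_cdf(0.05)
A = belief.inv_cdf(0.95)


def narrowest_interval(dist, level, steps=1000):
    """Grid-search the lower-tail mass to find the narrowest interval holding `level` probability."""
    best = None
    for i in range(1, steps):
        lower_tail = (1 - level) * i / steps
        low = dist.inv_cdf(lower_tail)
        high = dist.inv_cdf(lower_tail + level)
        if best is None or high - low < best[1] - best[0]:
            best = (low, high)
    return best


low, high = narrowest_interval(belief, 0.90)
print(f"two-point: [{B:.2f}, {A:.2f}]  narrowest 90%: [{low:.2f}, {high:.2f}]")
print(f"probability inside [B, A]: {belief.cdf(A) - belief.cdf(B):.3f}")
```

For a skewed distribution the two intervals can come apart, which is why the footnote's assumption matters.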

But they don’t. For example, this study elicited 80%-confidence intervals using both methods. Intervals elicited with the interval method contained the true value 39% of the time, while those from the two-point method contained it 57% of the time.

This finding generalizes. A different way to test for overconfidence (generalizing the two-point method) is the estimation method: have people answer a bunch of questions and rate their confidence in each answer; then, among the answers they were 90%-confident in, check what proportion were correct. If they were calibrated, this “hit rate” should be 90%.
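The estimation-method bookkeeping is simple enough to sketch in a few lines of Python. This assumes each response is recorded as a (stated confidence, correct or not) pair; the function name and the toy responses are hypothetical.

```python
from collections import defaultdict


def hit_rates_by_confidence(responses):
    """Map each stated confidence level to the fraction of answers at that level that were correct."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for confidence, correct in responses:
        totals[confidence] += 1
        hits[confidence] += int(correct)
    return {level: hits[level] / totals[level] for level in sorted(totals)}


# Hypothetical responses: (stated confidence, whether the answer was correct).
responses = [
    (0.9, True), (0.9, True), (0.9, False), (0.9, True), (0.9, False),
    (0.6, True), (0.6, False), (0.6, True),
]

for level, rate in hit_rates_by_confidence(responses).items():
    if rate < level:
        verdict = "overconfident"
    elif rate > level:
        verdict = "underconfident"
    else:
        verdict = "calibrated"
    print(f"stated {level:.0%}: hit rate {rate:.0%} ({verdict})")
```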

The main finding of the estimation method is the hard-easy effect. For (hard) tests with low overall hit rates, people overestimate their accuracy: fewer than 90% of their 90%-confident answers are correct. But for (easy) tests with high overall hit rates, people underestimate their accuracy (more than 90% are correct). This contrasts sharply with the interval method, which virtually never finds underprecision.

Notice: these empirical findings are puzzling even if you think people are irrational. After all, if people are simply overconfident, then these different ways of eliciting their confidence should lead to similar results. Why don’t they?

The Accuracy-Informativity Tradeoff

Our proposal: the interval method has a unique question-structure that incentivizes informative answers. Given the accuracy-informativity tradeoff in guessing, this makes people especially prone to sacrifice accuracy (hit rates) when giving intervals.

To see how this works, we need a theory of guessing on the table.

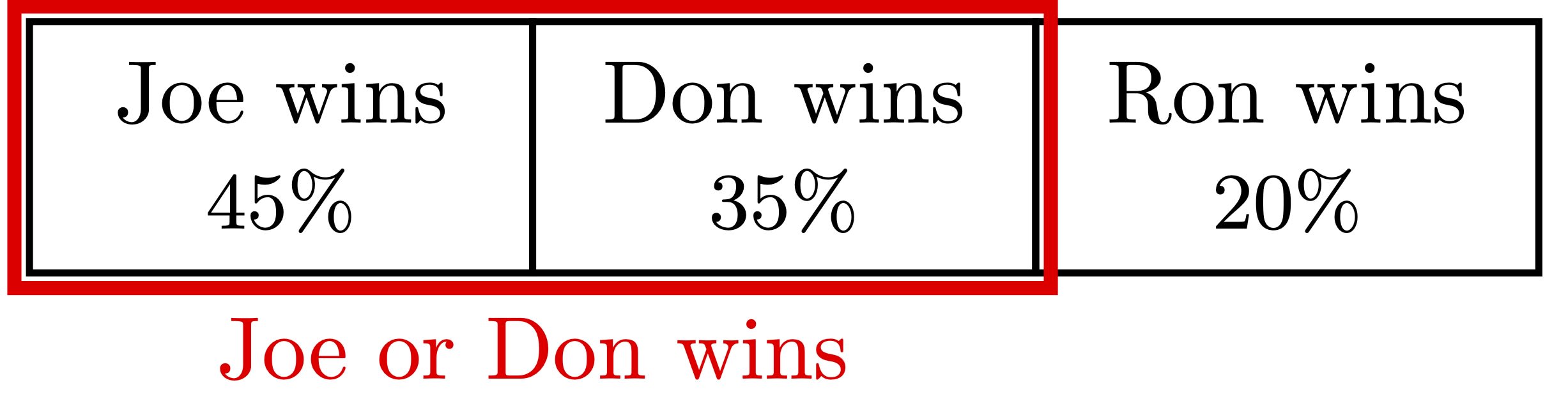

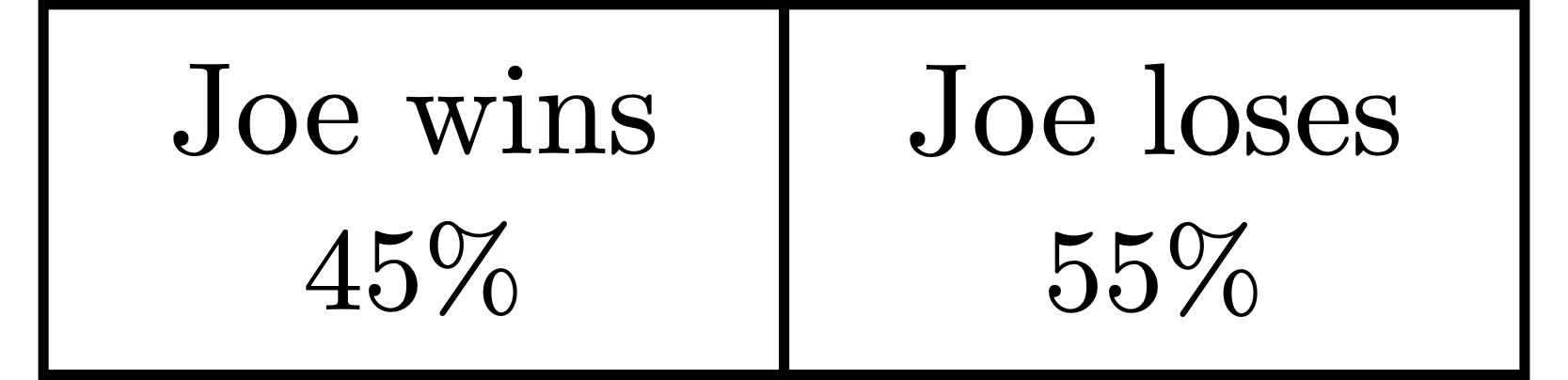

Consider this simple scenario: Three candidates are running for mayor: Joe, Don, and Ron. Current predictions (which you trust) give Joe a 45% chance of winning, Don a 35% chance, and Ron a 20% chance.

A friend asks you: “What do you think will happen in the election?”

There are a whole lot of interesting patterns in which guesses are natural vs. weird in such contexts (see this post, this paper by Ben Holguín, and this follow-up for more details). Here we’ll get by with a couple of observations.

Guesses take place in response to a question. The complete answers to the question are maximally specific ways it could be resolved—in our case, “Joe will win”, “Don will win”, and “Ron will win”. Partial answers are combinations of complete answers, e.g. “Either Joe or Don will win”:

Guesses are accurate if they’re true. They’re informative to the extent that they rule out alternative answers to the question: “Joe will win” is more informative than “Joe or Don will win”, since the former rules out two alternatives (Don, Ron) and the latter rules out only one (Ron).

Crucially, informativity is question-sensitive. Although “Joe will win, and he’s bald” is more specific than “Joe will win”, it’s not more informative as an answer to the question under discussion. In fact, the extra specificity is odd precisely because it adds detail without ruling out any alternative answers.

On this picture of guessing, people form guesses by (implicitly) maximizing expected answer value, trading off the value of being accurate with the value of being informative.

“Joe will win” maximizes this tradeoff when you care enough about informativity, for this drives you to give a specific answer. In contrast, “Don will win” is an odd guess because it can’t maximize this tradeoff given your beliefs: it’s no more informative than “Joe will win” (it rules out the same number of alternatives) and is strictly less likely to be accurate (35% vs. 45%).

Meanwhile, “Joe or Don will win” or “I don’t know—one of those three” can each maximize this tradeoff when you care less about informativity and more about accuracy.

Notice that question-sensitivity implies that which guesses are sensible depends on what the question is. Although “Joe will win” is a perfectly fine answer to “Who do you think will win the election?”, it’s a weird answer to the question “Will Joe win or lose the election?” After all, that question has only two possible answers (“Joe will win” and “Joe will lose”); both are equally informative, but “Joe will lose” is more likely to be accurate (55%) than “Joe will win” (45%).
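The post does not spell out its scoring rule here, so the following Python sketch rests on an assumption: it scores a guess p by P(p) · J^I(p), where I(p) is the fraction of the question's complete answers that p rules out and J ≥ 1 is the value of informativity. We reverse-engineered this form from the J-value thresholds quoted in footnote 6 (it gives a switch point of about 5.6 in the election case and about 2,100 in the 42-candidate case), but it may differ from the exact definition in the authors' paper. The function names are hypothetical.

```python
from itertools import combinations


def expected_answer_value(guess, probs, j):
    """Assumed model: P(guess) * j ** (fraction of complete answers the guess rules out)."""
    accuracy = sum(probs[answer] for answer in guess)
    informativity = (len(probs) - len(guess)) / len(probs)
    return accuracy * j ** informativity


def best_guess(probs, j):
    """Return the partial answer (set of complete answers) with the highest expected value."""
    answers = list(probs)
    candidates = [
        frozenset(combo)
        for size in range(1, len(answers) + 1)
        for combo in combinations(answers, size)
    ]
    return max(candidates, key=lambda guess: expected_answer_value(guess, probs, j))


who_wins = {"Joe": 0.45, "Don": 0.35, "Ron": 0.20}         # "Who will win?"
joe_win_or_lose = {"Joe wins": 0.45, "Joe loses": 0.55}    # "Will Joe win or lose?"

for j in (1.5, 2, 10):
    print(j, sorted(best_guess(who_wins, j)), sorted(best_guess(joe_win_or_lose, j)))

# J at which "Joe" overtakes "Joe or Don": 0.45 * J**(2/3) == 0.80 * J**(1/3)
print(round((0.80 / 0.45) ** 3, 2))
# Footnote 6's variant: Joe 50%, Don 10%, 40 others at 1% each (42 complete answers)
print(round((0.60 / 0.50) ** 42))
```

Under this assumed rule, “Don will win” never comes out on top for any J, and neither does “Joe will win” as an answer to the win-or-lose question, matching the judgments above.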

On this picture of guessing, part of getting by in an intractably uncertain world is becoming very good at making the tradeoff between accuracy and informativity. We’ve argued elsewhere that this helps explain the conjunction fallacy.

It also helps resolve the puzzle of overprecision.

Resolving the Puzzle

Key fact: Different ways of eliciting confidence intervals generate different questions, and so lead to different ways of evaluating the informativity of an answer.

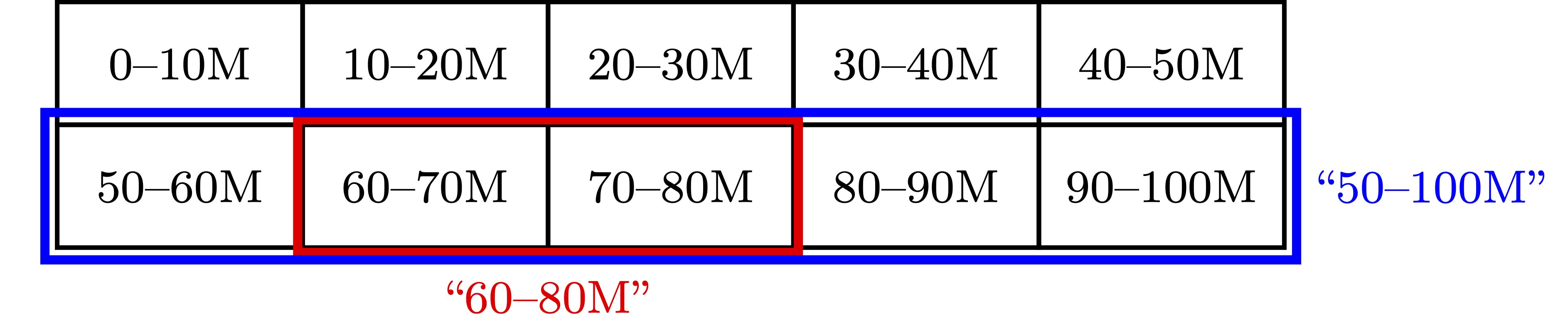

If we ask “What interval does the population of the UK fall within?”, then the possible complete answers to the question are roughly:[4]

Partial answers are then combinations of these complete answers. Narrow guesses are more informative: “60–80 million” rules out 8 of the 10 complete answers, while “50–100 million” rules out only 5 of them. As a result, the pressure to be informative is pressure to narrow your interval.

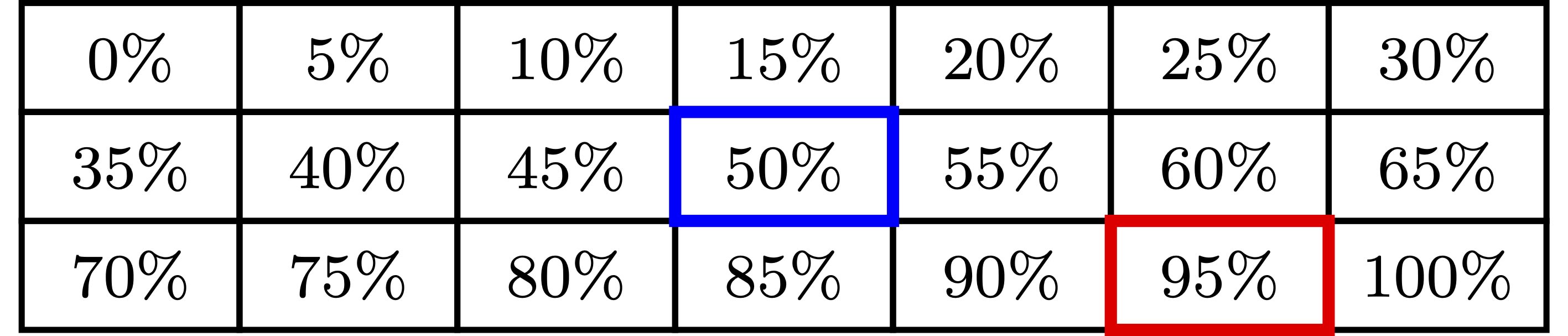

In contrast, suppose we ask “How confident are you that the true population of the UK is at least 60 million?” Now the possible answers are:

Note: in response to this question, “95% confident” is no more informative than (e.g.) “50% confident”: both of them rule out 20 of the 21 possible answers.
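The counting behind these two observations can be spelled out in a few lines of Python. The bins follow footnote 4 (10 million wide, capped at 100M); treating the confidence question as having 21 answers in 5-point steps is our assumption, chosen to match the “21 possible answers” above. The helper name is hypothetical.

```python
def ruled_out(guess, complete_answers):
    """Count the complete answers that a guess (a collection of complete answers) excludes."""
    return len(set(complete_answers) - set(guess))


# Interval question: 10 bins of 10 million each, capped at 100M (as in footnote 4).
bins = [(low, low + 10) for low in range(0, 100, 10)]
narrow = [b for b in bins if 60 <= b[0] and b[1] <= 80]   # "60-80 million"
wide = [b for b in bins if 50 <= b[0] and b[1] <= 100]    # "50-100 million"
print(ruled_out(narrow, bins), ruled_out(wide, bins))     # 8 5

# Confidence question: assumed answers 0%, 5%, ..., 100% (21 in total).
levels = list(range(0, 101, 5))
print(ruled_out([95], levels), ruled_out([50], levels))   # 20 20
```

Narrowing the interval buys informativity; moving the stated confidence does not.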

This observation can explain our three puzzling findings:

1) Intervals are insensitive to confidence level

Recall that people give their intervals extremely similar widths regardless of what level of confidence is asked for. We should expect this.

The basic idea of the guessing picture is that our practice of eliciting guesses uses a wide range of vocabulary. In our above election example, the following are all natural ways of eliciting a guess:

“What do you think will happen?”

“Who’s likely to win?”

“What should we expect?”

“Who’s your money on?”

“How’s this going to play out?”

And so on.

On this picture, when we ask questions under uncertainty in everyday thought and talk, we’re almost always asking for guesses—asking for people to balance accuracy and informativity. Thus it’d be unsurprising if people focus on the structure of the question (whether it’s more informative to give a narrower interval), and largely ignore the unfamiliar wording (“90%-confidence interval” vs. “80%-confidence interval”) used to elicit it.

2) People are less than 90%-confident in their own 90%-confidence intervals

Recall that when asked how many of their own 90%-confidence intervals were correct, people estimated that around 60% were. We should expect this.

The responses they give to the interval-elicitation method aren’t their true confidence intervals—rather, they’re their guesses, which involve trading some confidence for the sake of informativity.

If we change the question to “How many of the intervals you gave do you think contain the true value?”, now the possible answers range from “0 out of 10” to “10 out of 10”. “9 of 10” is no longer more informative than “6 of 10”—both are complete answers. This removes the incentive to sacrifice accuracy for informativity.

3) People are more overprecise when they give intervals than estimates

Recall that the interval method elicits more overprecision than the two-point method. We should expect this.

When asked what interval the population of the UK falls within, “60–80 million” is more informative than “50–100 million”, since it rules out more possible answers.

But when asked for the number B that you’re 95%-confident is below the true value, “60 million” is no more informative than “50 million”: both are complete answers to that question.[5] Similarly, when asked for the number A that you’re 95%-confident is above the true value, “80 million” is no more informative than “100 million”.

Upshot: the drive for informativity pushes toward a narrower interval when eliciting intervals directly, but not when using the two-point method. That’s why the former leads to more overprecision.

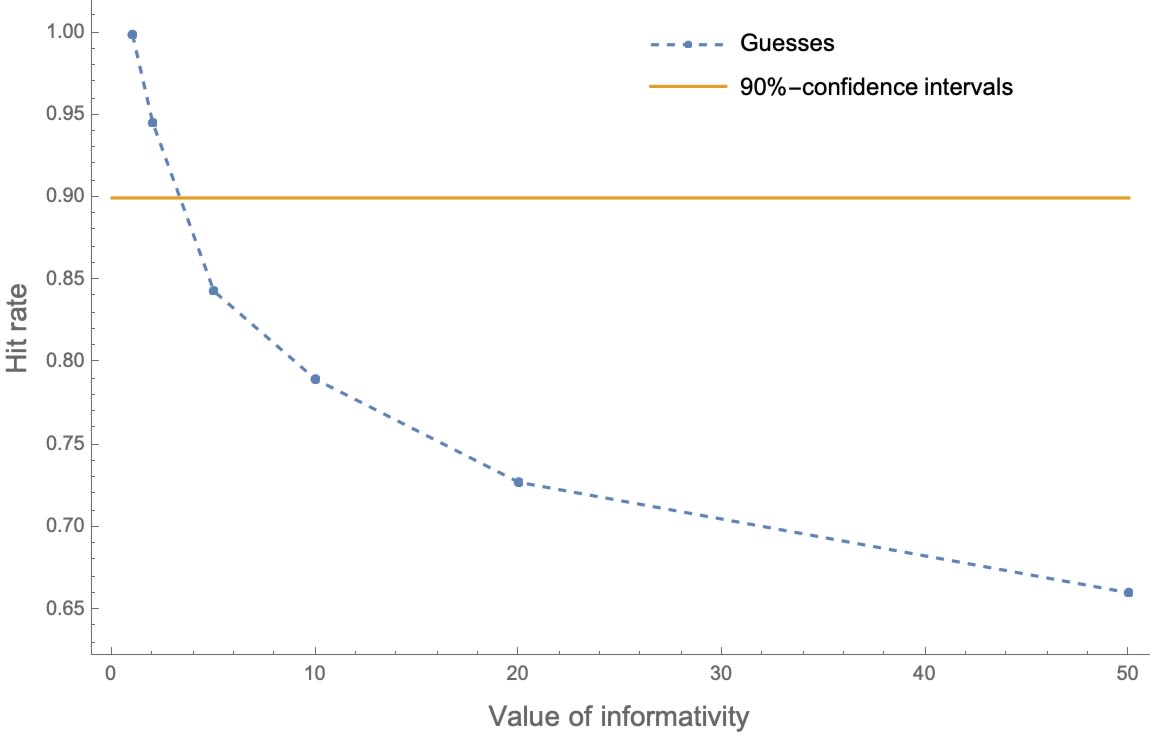

We can simulate the results of agents who form intervals using our preferred way of modeling the accuracy-informativity tradeoff. We can use agents whose probabilities are well-calibrated (their genuine 90%-confidence intervals contain the true value 90% of the time) but who give guesses rather than confidence intervals.

Here’s how often their intervals contain the true value (“hit rate”) as we vary the value of informativity:

Stark degrees of overprecision are exactly what we should expect.[6]
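We don't reproduce the authors' simulation here. As a rough stand-in, the Python sketch below builds a toy version under assumptions of our own: guesses maximize P(interval) · J^I, where I is the fraction of bins an interval rules out (a form we inferred from the J-value thresholds in footnote 6, which may not match the paper's exact definition); beliefs are discretized normals over footnote 4's ten bins, with arbitrary centers and spreads; and because the agent is calibrated, its expected hit rate on a question is just the probability of the interval it chooses. All names are hypothetical, and the printed numbers are illustrations, not the authors' results.

```python
from statistics import NormalDist

N_BINS = 10  # e.g. populations in 10-million bins, capped at 100M


def bin_probs(mean, sd):
    """Discretize a normal belief into N_BINS unit-width bins, renormalized to sum to 1."""
    belief = NormalDist(mean, sd)
    raw = [belief.cdf(k + 1) - belief.cdf(k) for k in range(N_BINS)]
    total = sum(raw)
    return [p / total for p in raw]


def best_interval(probs, j):
    """Contiguous run of bins maximizing P(interval) * j ** (fraction of bins ruled out)."""
    n = len(probs)
    best, best_value = None, float("-inf")
    for low in range(n):
        for high in range(low, n):
            accuracy = sum(probs[low:high + 1])
            informativity = (n - (high - low + 1)) / n
            value = accuracy * j ** informativity
            if value > best_value:
                best, best_value = (low, high), value
    return best


def expected_hit_rate(beliefs, j):
    """Average probability of the chosen intervals: the long-run hit rate if beliefs are calibrated."""
    total = 0.0
    for probs in beliefs:
        low, high = best_interval(probs, j)
        total += sum(probs[low:high + 1])
    return total / len(beliefs)


# Arbitrary illustrative beliefs: three centers, two levels of spread.
beliefs = [bin_probs(mean, sd) for mean in (2.5, 4.5, 6.5) for sd in (1.0, 2.0)]

for j in (1, 2, 3, 4, 6, 10):
    print(f"J = {j:>2}: hit rate {expected_hit_rate(beliefs, j):.0%}")
```

At J = 1 the toy agent gives the whole range and always hits; as J grows its intervals narrow, and the hit rate never rises and ends well below 90% by J = 10, even though every belief in the toy is perfectly calibrated.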

So is this rational?

Two points.

First, many researchers claim that the best evidence for overconfidence comes from the interval-estimation method, since other methods yield mixed results. But since interval estimation is uniquely situated to reward informativity over accuracy, there’s reason for caution. The empirical evidence for overconfidence is more complicated than you'd think.

Second, you might worry that the irrationality comes at an earlier step: why are people guessing rather than giving genuine confidence intervals?

Granted, people are misunderstanding the question. But insisting that this is irrational underestimates how important informativity is to our everyday thought and talk.

As other authors emphasize, in most contexts it makes sense to trade accuracy for informativity. Consider the alternative: widening your intervals to obtain genuine 90% hit rates. Empirical estimates suggest that to do so, you’d have to widen your intuitive reactions by a factor of between 5 and 17.

Suppose you did. Asked when you’ll arrive for dinner, instead of “5:30ish” you say, “Between 5 and 8”. Asked how many people will be at the party, instead of “around 10” you say, “Between 3 and 30”. Asked when you’ll reply to my email, instead of “next week” you say, “Between 1 day and 3 months”.

These answers are useless. They are in an important sense less rational than the informative-but-error-prone guesses we actually give in everyday thought and talk.

That, we think, is why people are overprecise. And why, on the whole, it makes sense to be so.

What next?

For a detailed discussion of just how subtle it is to move from observations of miscalibration to conclusions of irrationality, see this paper by Juslin et al.

For a great summary piece on overprecision, see this paper by Don Moore and colleagues.

For more on the accuracy-informativity tradeoff, see this blog post and this paper.

- ^

Answers: 67.8 million; 2704 miles; 81%; and 252 feet.

- ^

We’re taking inspiration from Yaniv and Foster 1995, though we implement the idea importantly differently. Two main points: our measure of informativity (1) allows for discrete (rather than interval-valued) questions, and (2) never incentivizes giving an interval that you know doesn’t contain the correct answer (unlike their measure).

- ^

Assuming the distribution is unimodal and peaked in the middle.

- ^

Binning populations into 10-million-wide bins and capping them at 100M, for simplicity. We assume that when asked for an interval, the goals of the conversation pinpoint a maximal level of fineness of grain.

- ^

Note: “40–60M” is an answer to the lower-bound question, but experimenters usually force people to give a complete answer to what their lower bound is, rather than letting them choose an interval for the bound.

- ^

The “Value of Informativity” is the agents’ “J-value” (see section 3 of this paper). Although this is a parameter we don’t expect people to have direct intuitions about, you need a J-value of about 6 for the optimal accuracy-informativity tradeoff in our election case to favor guessing “Joe will win” over “Joe or Don will win”. Thus a J-value of 3 or 4 (the threshold at which people become overprecise) is quite modest. Indeed, many sensible guesses require much higher J-values: if Joe had a 50% chance of winning, Don had a 10% chance, and 40 other candidates had a 1% chance each, then the J-value required to guess “Joe will win” is over 2000.

13 comments


comment by Lukas Finnveden (Lanrian) · 2023-07-01T17:20:21.066Z

But insisting that this is irrational underestimates how important informativity is to our everyday thought and talk.

As other authors emphasize, in most contexts it makes sense to trade accuracy for informativity. Consider the alternative: widening your intervals to obtain genuine 90% hit rates. (...) Asked when you’ll arrive for dinner, instead of “5:30ish” you say, “Between 5 and 8”.

This bit is weird to me. There's no reason why people should use 90% intervals as opposed to 50% intervals in daily life. The ask is just that they widen it when specifically asked for a 90% interval.

My framing would be: when people give intervals in daily life, they're typically inclined to give ~50% confidence intervals (right? Something like that?). When asked for a ("90%") interval by a researcher, they're inclined to give a normal-sounding interval. But this is a mistake, because the researcher asked for a very strange construct — a 90% interval turns out to be an interval where you're not supposed to say what you think the answer is, but instead give an absurdly wide distribution that you're almost never outside of.

Incidentally — if you ask people for centered 20% confidence intervals (40-60th percentile) do you get that they're underconfident?

↑ comment by Kevin Dorst · 2023-07-03T14:21:17.152Z

Yeah that's a reasonable way to look at it. I'm not sure how much the two approaches really disagree: both are saying that the actual intervals people are giving are narrower than their genuine 90% intervals, and both presumably say that this is modulated by the fact that in everyday life, 50% intervals tend to be better. Right?

I take the point that the bit at the end might misrepresent what the irrationality interpretation is saying, though!

I haven't come across any interval-estimation studies that ask for intervals narrower than 20%, though Don Moore (probably THE expert on this stuff) told me that people have told him about unpublished findings where yes, when they ask for 20% intervals people are underprecise.

There definitely are situations with estimation (variants on the two-point method) where people look over-confident in estimates >50% and underconfident in estimates <50%, though you don't always get that.

↑ comment by Lukas Finnveden (Lanrian) · 2023-07-03T23:24:22.245Z

Yeah that's a reasonable way to look at it. I'm not sure how much the two approaches really disagree: both are saying that the actual intervals people are giving are narrower than their genuine 90% intervals, and both presumably say that this is modulated by the fact that in everyday life, 50% intervals tend to be better. Right?

Yeah sounds right to me!

I haven't come across any interval-estimation studies that ask for intervals narrower than 20%, though Don Moore (probably THE expert on this stuff) told me that people have told him about unpublished findings where yes, when they ask for 20% intervals people are underprecise.

There definitely are situations with estimation (variants on the two-point method) where people look over-confident in estimates >50% and underconfident in estimates <50%, though you don't always get that.

Nice, thanks!

comment by brendan.furneaux · 2023-06-30T11:44:14.620Z

I don't see any information on the Wikipedia page about the height of the aircraft carrier; the figure you give in your footnote, 252 ft, is listed as the beam. That's width, not height.

↑ comment by Kevin Dorst · 2023-07-01T13:27:21.595Z

Oops, must've gotten my references crossed! Thanks.

This Wikipedia page says the height of a "Gerald R Ford-class" aircraft carrier is 250 feet; so, close.

https://en.wikipedia.org/wiki/USS_Gerald_R._Ford

comment by Violet Hour · 2023-06-29T11:11:18.674Z

Interesting work, thanks for sharing!

I haven’t had a chance to read the full paper, but I didn’t find the summary account of why this behavior might be rational particularly compelling.

At a first pass, I think I’d want to judge the behavior of some person (or cognitive system) as “irrational” when the following three constraints are met:

- The subject, in some sense, has the basic capability to perform the task competently, and

- They do better (by their own values) if they exercise the capability in this task, and

- In the task, they fail to exercise this capability.

Even if participants are operating with the strategy “maximize expected answer value”, I’d be willing to judge the participants' responses as “irrational” if the participants were cognitively competent, understood the concept '90% confidence interval', and were incentivized to be calibrated on the task (say, if participants received increased monetary rewards as a function of their calibration).

Pointing out that informativity is important in everyday discourse doesn’t do much to persuade me that the behavior of participants in the study is “rational”, because (to the extent I find the concept of “rationality” useful), I’d use the moniker to label the ability of the system to exercise their capabilities in a way that was conducive to their ends.

I think you make a decent case for claiming that the empirical results outlined don’t straightforwardly imply irrationality, but I’m also not convinced that your theoretical story provides strong grounds for describing participant behaviors as “rational”.

↑ comment by Kevin Dorst · 2023-06-29T12:37:29.091Z

Thanks for the thoughtful reply! Cross-posting the reply I wrote on Substack as well:

I like the objection, and am generally very sympathetic to the "rationality ≈ doing the best you can, given your values/beliefs/constraints" idea, so I see where you're coming from. I think there are two places I'd push back on in this particular case.

1) To my knowledge, most of these studies don't use incentive-compatible mechanisms for eliciting intervals. This is something authors of the studies sometimes worry about—Don Moore et al talk about it as a concern in the summary piece I linked to. I think this MAY link to general theoretical difficulties with getting incentive-compatible scoring rules for interval-valued estimates (this is a known problem for imprecise probabilities, eg https://www.cmu.edu/dietrich/philosophy/docs/seidenfeld/Forecasting%20with%20Imprecise%20Probabilities.pdf . I'm not totally sure, but I think it might also apply in this case). The challenge they run into for eliciting particular intervals is that if they reward accuracy, that'll just incentivize people to widen their intervals. If they reward narrower intervals, great—but how much to incentivize? (Too much, and they'll narrow their intervals more than they would otherwise.) We could try to reward people for being calibrated OVERALL—so that they get rewarded the closer they are to having 90% of their intervals contain the true value. But the best strategy in response to that is (if you're giving 10 total intervals) to give 9 trivial intervals ("between 0 and ∞") that'll definitely contain the true value, and 1 ridiculous one ("the population of the UK is between 1–2 million") that definitely won't.

Maybe there's another way to incentivize interval-estimation correctly (in which case we should definitely run studies with that method!), but as far as I know this hasn't been done. So at least in most of the studies that are finding "overprecision", it's really not clear that it's in the participants' interest to give properly calibrated intervals.

2) Suppose we fix that, and still find that people are overprecise. While I agree that that would be evidence that people are locally being irrational (they're not best-responding to their situation), there's still a sense in which the explanation could be *rationalizing*, in the sense of making-sense of why they make this mistake. This is sort of a generic point that it's hard (and often suboptimal to try!) to fine-tune your behavior to every specific circumstance. If you have a pre-loaded (and largely unconscious) strategy for giving intervals that trades off accuracy and informativity, then it may not be worth the cognitive cost to try to change that to this circumstance because of the (small!) incentives the experimenters are giving you.

An analogy I sometimes use is the Stroop task: you're told to name the color of the word, not read it, as fast as possible. Of course, when "red" appears in black letters, there's clearly a mistake made when you say 'red', but at the same time we can't infer from this any broader story about irrationality, since it's overall good for you to be disposed to automatically and quickly read words when you see them.

Of course, then we get into hard questions about whether it's suboptimal that in everyday life people automatically do this accuracy/informativity thing, rather than consciously separate out the task of (1) forming a confidence interval/probability, and then (2) forming a guess on that basis. And I agree it's a challenge for any account on these lines to explain how it could make sense for a cognitive system to smoosh these things together, rather than keeping them consciously separable. We're actually working on a project along these lines on when cognitively limited agents might be more advantaged by guessing in this way, but I agree it's a good challenge!

What do you think?

↑ comment by Violet Hour · 2023-06-29T15:45:06.142Z

The first point is extremely interesting. I’m just spitballing without having read the literature here, but here’s one quick thought that came to mind. I’m curious to hear what you think.

- First, instruct participants to construct a very large number of 90% confidence intervals based on the two-point method.

- Then, instruct participants to draw the shape of their 90% confidence interval.

- Inform participants that you will take a random sample from these intervals, and tell them they’ll be rewarded based on both: (i) the calibration of their 90% confidence intervals, and (ii) the calibration of the x% confidence intervals implied by their original distribution — where x is unknown to the participants, and will be chosen by the experimenter after inspecting the distributions.

- Allow participants to revise their intervals, if they so desire.

So, if participants offered the 90% confidence interval [0, 10^15] on some question, one could back out (say) a 50% or 5% confidence interval from the shape of their initial distribution. Experimenters could then ask participants whether they’re willing to commit to certain implied x% confidence intervals before proceeding.

There might be some clever hack to game this setup, and it’s also a bit too clunky+complicated. But I think there’s probably a version of this which is understandable, and for which attempts to game the system are tricky enough that I doubt strategic behavior would be incentivized in practice.

On the second point, I sort of agree. If people were still overprecise, another way of putting your point might be to say that we have evidence about the irrationality of people’s actions, relative to a given environment. But these experiments might not provide evidence suggesting that participants are irrational characters. I know Kenny Easwaran likes (or at least liked) this distinction in the context of Newcomb's Problem.

That said, I guess my overall thought is that any plausible account of the “rational character” would involve a disposition for agents to fine-tune their cognitive strategies under some circumstances. I can imagine being more convinced by your view if you offered an account of when switching cognitive strategies is desirable, so that we know the circumstances under which it would make sense to call people irrational, even if existing experiments don’t cut it.

↑ comment by Timothy Underwood (timothy-underwood-1) · 2023-06-29T18:59:22.521Z

I think the issue is that creating an incentive system where people are rewarded for being good at an artificial game that has very little connection to their real-world circumstances isn't going to tell us anything very interesting about how rational people are in the real world, under their real constraints.

I have a friend who for a while was very enthused about calibration training, and at one point he even got a group of us from the local meetup + Phil Hazeldon to do a group exercise using a program he wrote to score our calibration on numeric questions drawn from Wikipedia. The thing is that while I learned from this to be way less confident about my guesses (which improves rationality), it is actually, for the reasons specified, useless to create 90% confidence intervals when making important real-world decisions.

Should I try training for a new career? The true 90% confidence interval on any difficult to pursue idea that I am seriously considering almost certainly includes 'you won't succeed, and the time you spend will be a complete waste' and 'you'll do really well, and it will seem like an awesome decision in retrospect'.

↑ comment by ChristianKl · 2023-06-30T00:00:48.595Z

Elon Musk estimated a 10% chance of success for both Tesla and SpaceX. Those might have been good estimates.

Peter Thiel talks about how one of the reasons that the PayPal mafia is so successful is that they all learned that success is possible but really hard. If you pursue a very difficult idea and know you have a 10% chance of success you really have to give it all and know that if you slack you won't succeed.

↑ comment by Kevin Dorst · 2023-07-01T13:24:26.940Z

Crossposting from Substack:

Super interesting!

I like the strategy, though (from my experience) I do think it might be a big ask for at least online experimental subjects to track what's going on. But there are also ways in which that's a virtue—if you just tell them that there are no (good) ways to game the system, they'll probably mostly trust you and not bother to try to figure it out. So something like that might indeed work! I don't know exactly what calibration folks have tried in this domain, so will have to dig into it more. But it definitely seems like there should be SOME sensible way (along these lines, or otherwise) of incentivizing giving their true 90% intervals—and a theory like the one we sketched would predict that that should make a difference (or: if it doesn't, it's definitely a failure of at least local rationality).

On the second point, I think we're agreed! I'd definitely like to work out more of a theory for when we should expect rational people to switch from guessing to other forms of estimates. We definitely don't have that yet, so it's a good challenge. I'll take that as motivation for developing that more!

comment by Nathaniel Monson (nathaniel-monson) · 2023-06-28T19:14:15.631Z

This is a really cool piece of work!

↑ comment by Kevin Dorst · 2023-06-29T12:37:41.749Z

Thanks!