When Gears Go Wrong

post by Matt Goldenberg (mr-hire) · 2020-08-02T06:21:25.389Z · LW · GW · 6 comments


(This talk was given at the LessWrong curated talks event on July 19. mr-hire is responsible for the talk; David Lambert edited the transcript.

If you're a curated author and interested in giving a 5-min talk, which will then be transcribed and edited, sign up here.)

mr-hire: A question that I don’t think gets asked enough in the rationalist community is whether gears are a good investment. Typically, when it is asked, the answer is yes. Here's an example from John Wentworth talking about gears as a capital investment.

I'm not picking on John, and I mostly agree with him, but I think this is a question that should receive a little bit more scrutiny and nuance.

So, gears are good—that’s the typical response from most of the rationalist and Effective Altruism community.

Gears provide deeper understanding of a topic. They allow you to carve reality at the joints when you're using your models. They allow for true problem-solving, not just cargo culting around your ideas.

And I think that's fair. All those things are true.

But I personally mostly try to develop deep gears in two places.

One is my primary field. I think you should definitely develop gears around whatever your career is. Whatever you're really focused on right now.

For me, this is business and entrepreneurship. I try to develop deep gears around how businesses work: strategy, distribution, how they all fit together, etc.

Secondly, it is important to develop deep gears around the most important problems you're focused on right now, so that you can understand them from every angle and frame things differently. Again, for me right now, my business is focused on solving procrastination. So I really want nuanced and varied models of procrastination.

But for most other scenarios, I think that having a gears-level model is a waste.

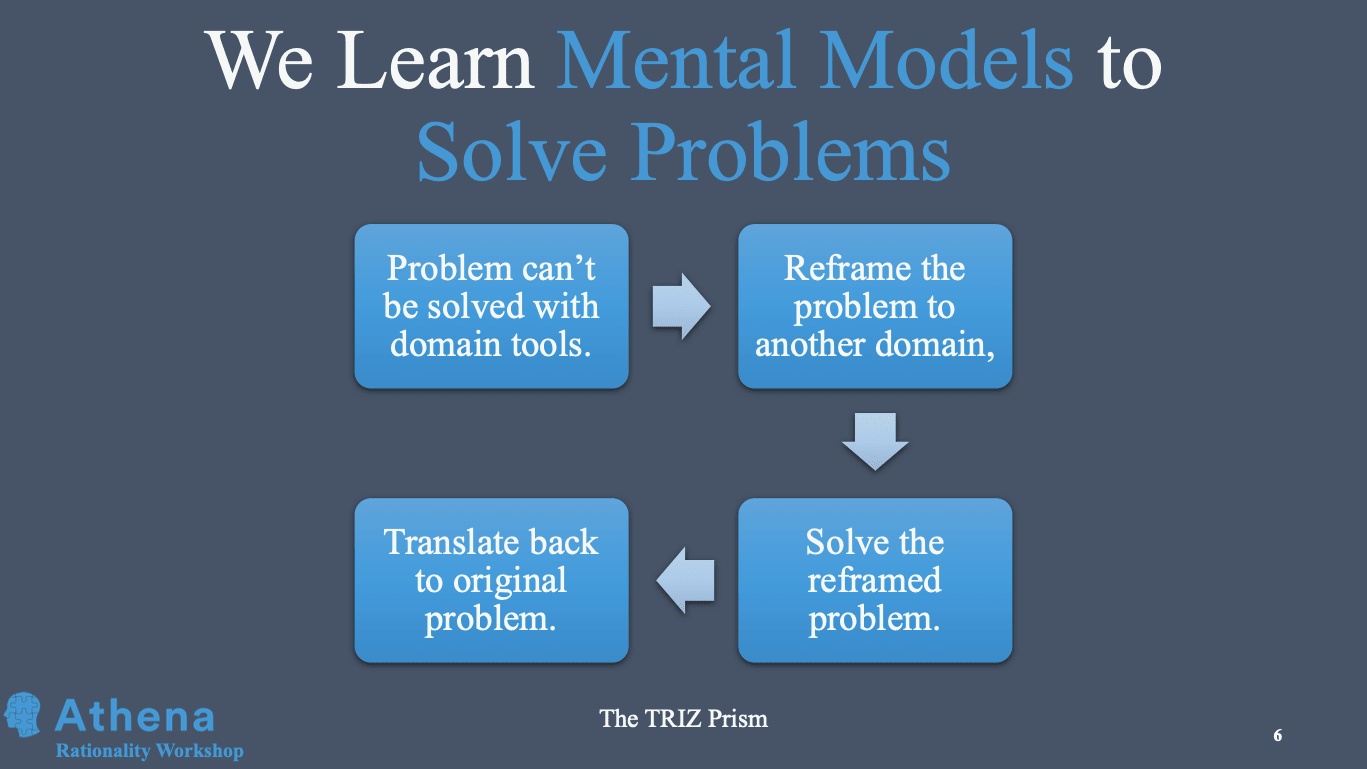

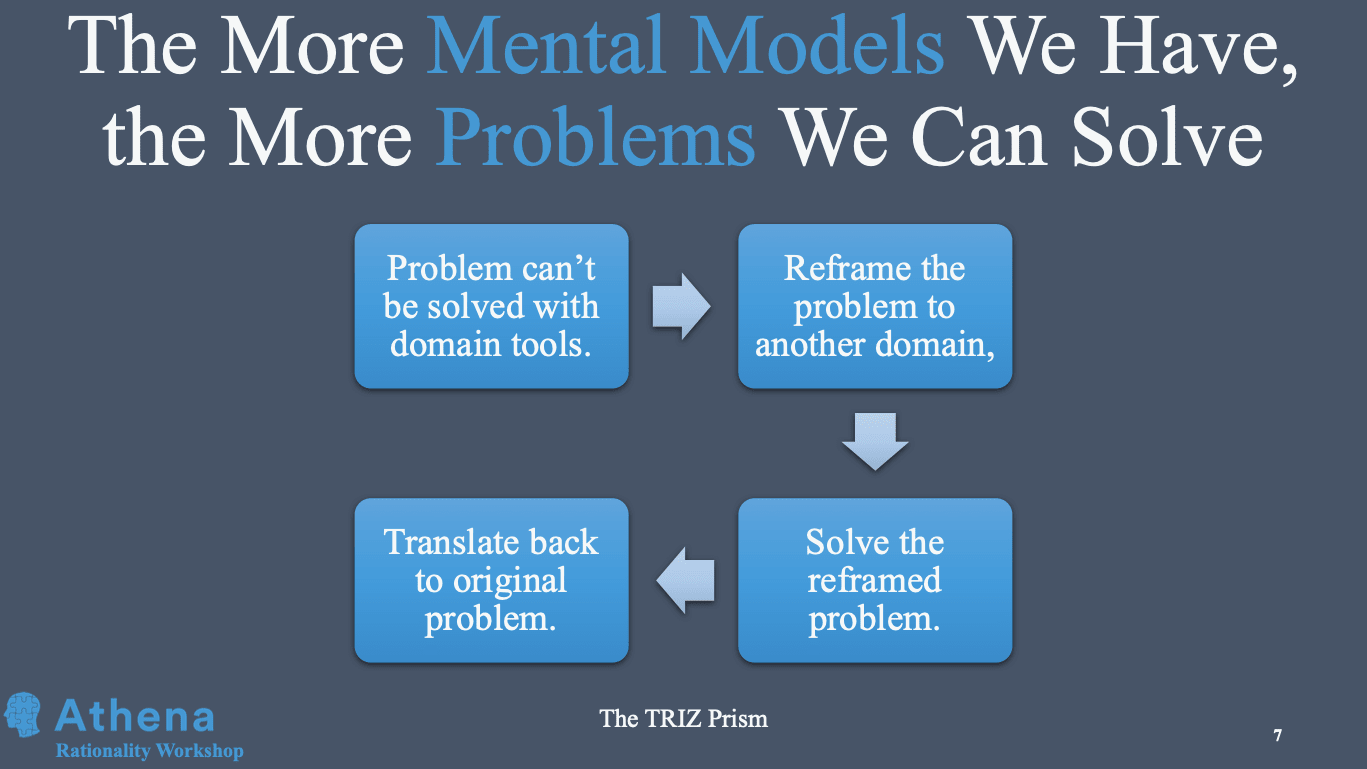

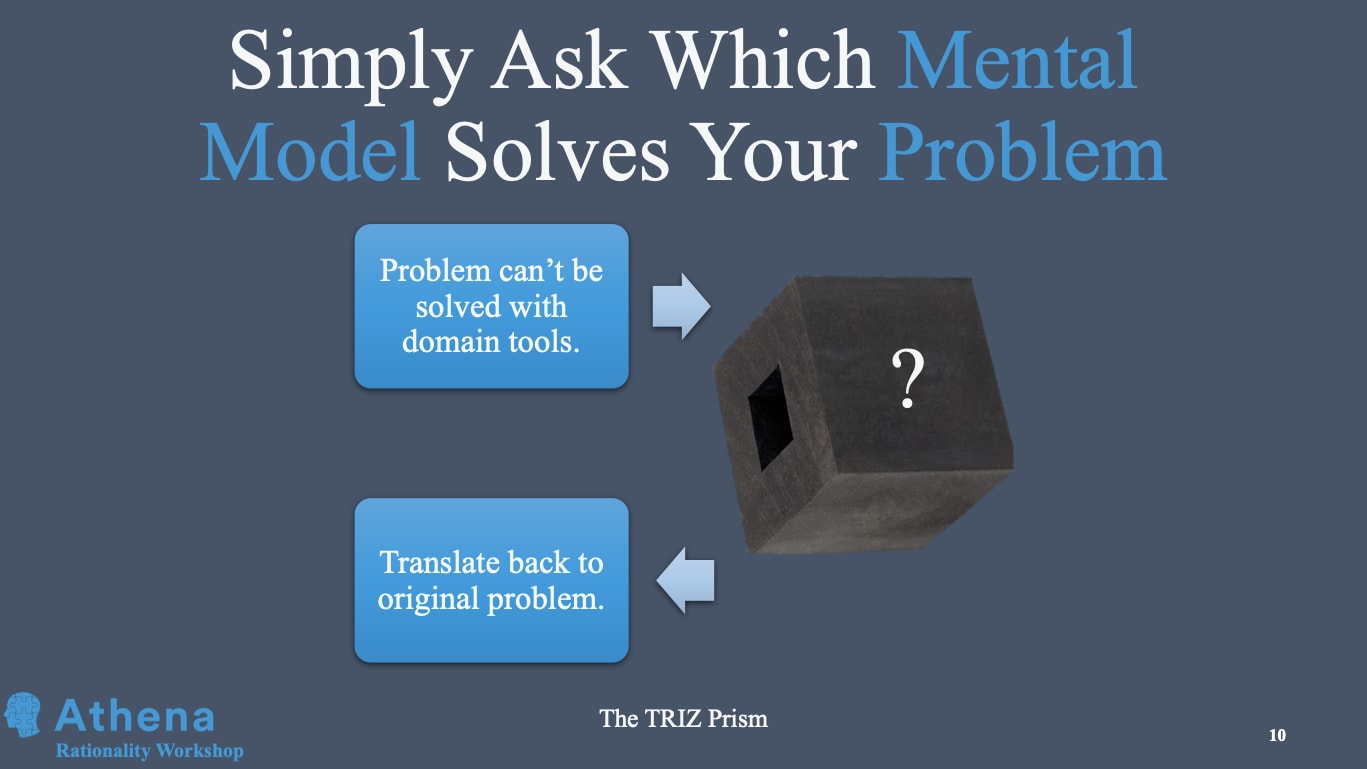

In general, I think that we learn mental models to solve problems. (There are other reasons as well, like prediction and generation, but I will not focus on them here). And we do that using this thing called the TRIZ Prism:

This is how we solve problems that people in our field haven't been able to solve: by using models from outside our field. So the more mental models we have, the more reframes we can do, and the more problems we can solve.

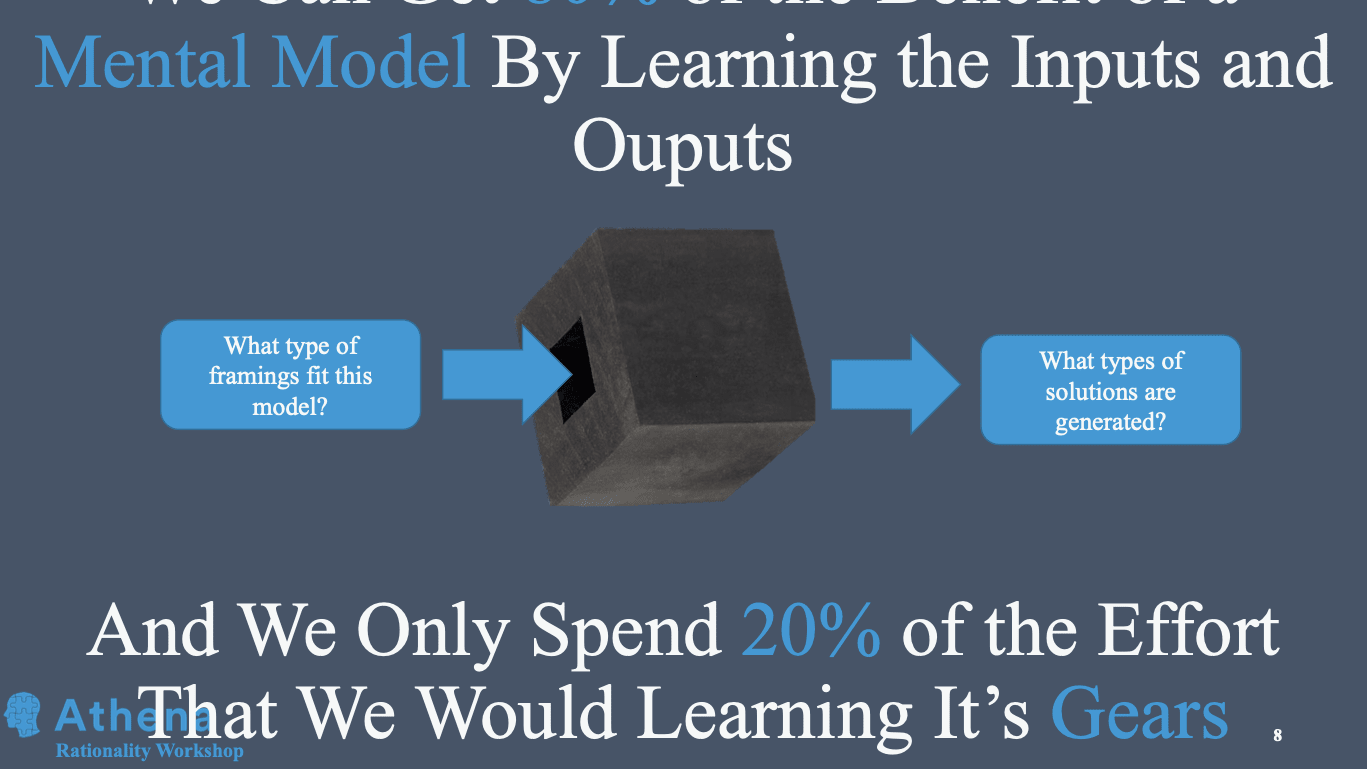

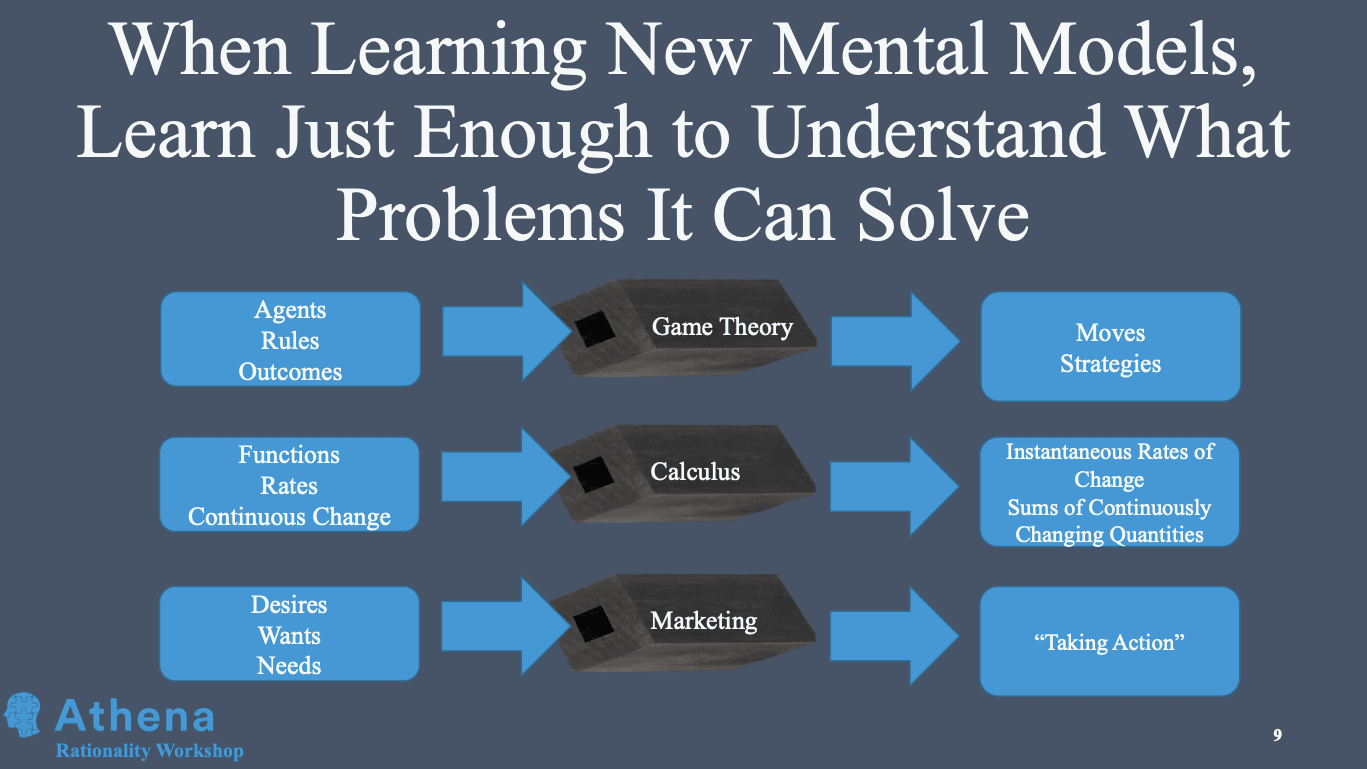

Now, my point is that we can get 80% of the benefit of learning a mental model by simply learning its inputs (the framings that allow a problem to be viewed through that model) and its outputs (the types of solutions that the model generates).

By doing this, we spend only 20% of the effort we would have spent learning the mental model at a gears level. For example, if we are learning a mathematical concept and need to be able to generate all of the important claims of that branch of math from scratch, it's going to take much more effort than if we just understand the high-level theory and what that branch of math is about.

Here are some examples.

In game theory, as long as you understand what the inputs and outputs are -- how things need to be framed so game theory can help solve them -- you can get a good portion of the value out of these models.

You just need to think “Okay, I understand this problem. Can it be reframed in a way that this mental model accepts, and can the model's output then be translated into the kind of solution that I'm looking for?”
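As a rough illustration of the reframe, solve, translate-back loop described above, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the pricing scenario, the payoff numbers, and the helper `pure_nash_equilibria` are hypothetical and do not come from the talk. The point is only that the caller needs to know what the model takes in (a payoff table) and what it gives back (stable action pairs), not how the solver works inside.

```python
from itertools import product


def pure_nash_equilibria(payoffs):
    """Treat this as the borrowed 'model'.

    Input:  payoffs[(a, b)] = (payoff_to_a, payoff_to_b)
    Output: every pair of actions where neither player gains by deviating alone.
    """
    actions_a = sorted({a for a, _ in payoffs})
    actions_b = sorted({b for _, b in payoffs})
    equilibria = []
    for a, b in product(actions_a, actions_b):
        payoff_a, payoff_b = payoffs[(a, b)]
        a_cannot_improve = all(payoffs[(alt, b)][0] <= payoff_a for alt in actions_a)
        b_cannot_improve = all(payoffs[(a, alt)][1] <= payoff_b for alt in actions_b)
        if a_cannot_improve and b_cannot_improve:
            equilibria.append((a, b))
    return equilibria


# Step 1 (reframe): a hypothetical pricing problem expressed as a two-player game.
pricing_game = {
    ("high", "high"): (5, 5),
    ("high", "low"): (1, 6),
    ("low", "high"): (6, 1),
    ("low", "low"): (2, 2),
}

# Step 2 (solve in the borrowed domain): call the model as a black box.
equilibria = pure_nash_equilibria(pricing_game)

# Step 3 (translate back): read the output in terms of the original problem.
for ours, theirs in equilibria:
    print(f"Expect us to price {ours} and the competitor to price {theirs}.")
```

Steps 1 and 3 are where the input-output knowledge is used; step 2 could just as well be handed to a specialist or an existing tool.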

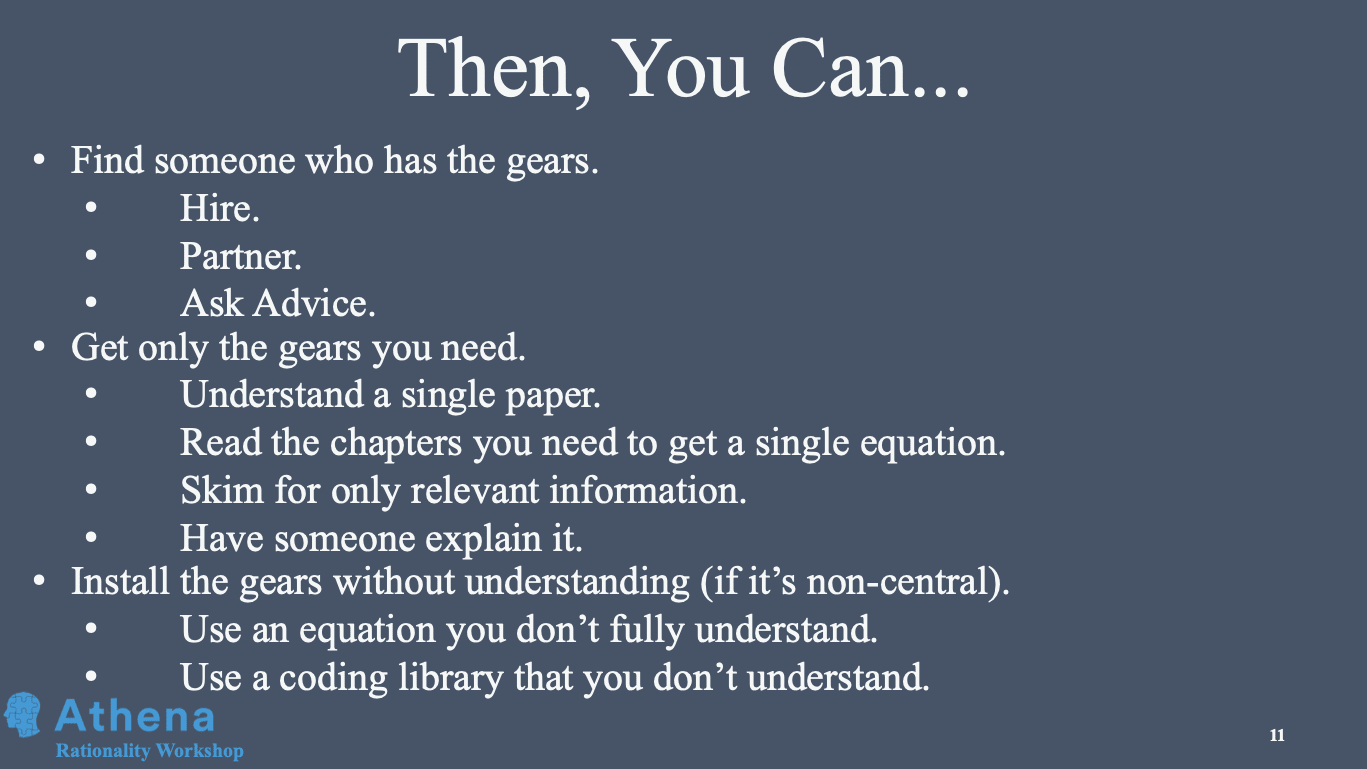

Then, once you know the mental model that works, you can try and figure out which specific gears you need.

For example, you can find someone who has the gears that you want. You could hire or partner with somebody. You could ask for advice without ever needing to learn that field -- you just know that it exists. You know how it works, so you go out and find somebody who can help you. You can also just learn the specific subset of gears you need to solve this particular problem. And finally, you can install the gears without understanding them fully.

Questions

Ben Pace: You're arguing that oftentimes it's not important to build gears-level models of a domain if it's not your primary one. I feel like one counter-argument is that if you can get a pretty good understanding of things, it's really easy to build gears-level models in lots of different domains. Once you get a couple of core abstractions down, you can actually apply them to other areas very quickly.

It would take a long time for a fireman to become a physicist. But if he did, and then were to try biology, he might quite quickly do some pretty good research there. I don't think it would take as long as it takes people to initially become experts in biology.

I would maybe speculate that, as I've grown as a rationalist, I have become better able to look at a problem and quickly figure out the key constraints. I can build a Fermi estimate of what the main variables are and quickly understand them -- as opposed to having to do two weeks of research to understand how to e.g. change the door to my bedroom. I'm curious what your thoughts are about that.

mr-hire: I think one of the benefits of this approach is that if you get gears as they're needed, there’s a selection effect whereby you're going to naturally learn models that are highly applicable to many domains. If rationality is applicable to numerous places, then you're going to start getting the gears piecemeal. And if you keep bumping up against the same fields, eventually at some point, you will have enough evidence to realise that "Yes, this is important. I'm going to actually learn the gears in this domain, because it's so applicable."

Still, I think there is some nuance here. Some models are so widely applicable that you do want to learn the gears upfront. But for this talk, I was trying to play devil's advocate for the other perspective.

---

Phil Hazelden: When you're doing the transformation to another domain, solving it in that domain and then transforming back, I feel like just knowing the inputs and outputs isn't necessarily helpful for knowing whether that domain will help you solve a problem. Whereas, if you actually have some gears in that domain, you might be able to get a more intuitive feel for whether it would help.

mr-hire: I do have thoughts on that. I think when you're learning things on a deep level, you start asking yourself “Okay, where could I apply this? What other models is this similar to? What other models is this different from?” And grappling with those questions gives you a quick intuition for how to apply those models in other domains.

The way that you're learning these inputs and outputs in this particular domain, and being able to quickly connect them to other things in your head, helps you with understanding the applicability of models in general.

Now, the other part of this question was whether knowing the gears in-depth allows you to apply them to more things. I think that's true. But I still think that in general, the 80-20 rule applies.

You get 80% of the applications by learning 20% of the input-output mapping and how this domain relates to things you know. (Still, there's obviously a bunch of nuance.)

---

Ben Pace: George Lanetz said in the comments “it's like knowing the signature of a library function”. I think this is an example I said to you, Matt, the other day. You find that you need a function to solve some problem, and then you find out a library's got it and you think to yourself, "I don't need to know exactly how, in so many terms, to write that function. I don't need to know all the details. I can just call it and bring it in."
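The library-function analogy can be made concrete with a small Python sketch. It uses the standard library's `heapq.nlargest(n, iterable)`; the wrapper name `top_scores` and the sample numbers are hypothetical. The caller relies only on the documented signature and return value, not on how the heap is maintained internally.

```python
import heapq


def top_scores(scores, k):
    """Return the k largest scores, highest first."""
    # We only need the "inputs and outputs" of nlargest:
    # it takes a count and an iterable, and returns a list sorted from largest down.
    return heapq.nlargest(k, scores)


result = top_scores([3, 9, 1, 7, 5], 2)
print(result)
```

If the black box ever turned out to be too slow or subtly wrong for the problem at hand, that would be the moment to open it up and learn the specific gears needed.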

---

Mick W: Quantum mechanics tells us a lot about chemistry. We can apply information about quantum mechanics to our knowledge of chemistry, and we can apply our understanding of the laws of chemistry in expanding our models of biology. But when we look at the relationship between biology and quantum mechanics, you don’t really need to know a lot about one to be good at the other. This is despite quantum mechanics informing chemistry and chemistry informing biology.

In some sense, it feels like you are saying that the quantum mechanics gears-level is necessary to be a biologist. I was wondering if what you are actually trying to say is that, on a long bridge between two connected but distant subjects, you can slack off a bit and only import the knowledge when you need it.

mr-hire: I think there's a lot of nuance there. That's a really good question. How far does the domain need to be from your area of expertise to consider it part of your area of expertise? I think it expands as you become more of an expert in your field, and you work on more abstract problems.

What you consider your area of expertise needs to expand to encompass more related fields if you are to really have a gears-level model of the problems you're working on. So I think that's how I would approach that sort of question.

---

Ben Pace: I definitely feel like really good CEOs of companies have a field that would be hard to define in advance. They have to know a lot about many different things and have those things interconnect, like management, their particular market, certain parts of their product, sales, and so on. It is difficult to know exactly which things are within your domain of expertise and which things aren't.

---

Daniel Filan: This talk was basically a hypothesis about how to do cognition well, and there are AI scientists, like me, whose job it is to make things that do cognition well and check which methods are better. I was wondering if you had any thoughts about this. If I wanted to check how important gears-level models are, or if you need to know them, what experiments should I run?

mr-hire: There's obviously a bunch of ways to obtain knowledge. There's theory. There's experiment. I would actually do observation, and I would look at people who are good at solving problems and model how they solve them. That doesn't prove that that's the best way to do it, but it does prove that there is a common effective way to do it.

I would venture that many, many people are taking this approach, though not everyone.

6 comments


comment by Gunnar_Zarncke · 2020-08-03T06:24:39.671Z · LW(p) · GW(p)

Synthesis: There are different degrees of gears. Learn only those that you can learn quickly (and wait until you have accumulated enough knowledge of related gears).

This post is a meta-gears article. Gears of gears. Rationality overall is meta-gears.

comment by Matt Goldenberg (mr-hire) · 2020-08-03T15:20:07.643Z · LW(p) · GW(p)

> Learn only those that you can learn quickly (and wait until you have accumulated enough knowledge of related gears).

It's not about what you can learn quickly, it's about only learning the ones that you'll use constantly (related to your field of interest and the important problems you're working on).

comment by Gunnar_Zarncke · 2020-08-03T21:19:07.500Z · LW(p) · GW(p)

I tried to view it not as black and white but as a trade-off based on effort/cost. That's why I brought in the cost of learning the gears. Maybe it's non-linear? Anyway, if you think my synthesis has failed, what would you say is the trade-off?

comment by Matt Goldenberg (mr-hire) · 2020-08-04T20:33:06.015Z · LW(p) · GW(p)

The trade-off is between likelihood of use, utility of that use, and cost of learning the model. I'm making a fairly bold claim here, which is that most of the time, unless it's your primary field or one of the important problems you're working on, it's not worth the effort.

I'm purposefully being a bit "unnuanced" in this speech to play devil's advocate, and you adding nuance takes away from some of the bold claims I'm trying to make. I just realized I don't know: is "synthesis" supposed to be "synthesis of this article" or "synthesis of the two viewpoints"? If the former, I resent you adding nuance to my sledgehammer :D

I also think that "degrees of gears" is doing the same thing of adding nuance. I'm claiming that instead of learning ANY of the gears, you should instead learn the inputs and outputs.
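One hedged way to make the trade-off named in this comment concrete is a back-of-the-envelope expected-value check in Python. The comment only lists the three factors (likelihood of use, utility of that use, cost of learning); combining them as likelihood times utility compared against cost is an assumption made here for illustration, and the function name and all numbers are hypothetical.

```python
def gears_worth_learning(likelihood_of_use, utility_of_use, cost_of_learning):
    """Return True when the expected payoff of learning the gears exceeds the cost.

    Assumption (not from the talk): the factors combine as a simple expected
    value, likelihood * utility, measured in the same units as the cost.
    """
    expected_payoff = likelihood_of_use * utility_of_use
    return expected_payoff > cost_of_learning


# Hypothetical numbers: hours of work saved versus hours spent learning.
primary_field = gears_worth_learning(0.9, 500, 200)   # expected payoff 450 vs cost 200
distant_field = gears_worth_learning(0.05, 500, 200)  # expected payoff 25 vs cost 200
print(primary_field, distant_field)
```

Under these made-up numbers the check favors learning gears in the primary field and skipping them in the distant one, which matches the shape of the claim without proving it.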

comment by Gunnar_Zarncke · 2020-08-05T07:26:17.096Z · LW(p) · GW(p)

Thanks for the clarification.

If you were intentionally bold, which I like as a didactic technique, I'm sorry to have messed with it ;-) And no worries about the hammer: I have enough armor.

I meant it as synthesis of two viewpoints.

I agree that learning what the possibly hidden gears process is important, especially the distribution of inputs in practice. But I don't think gears and input/output can be clearly separated. To understand the input structure you have to understand some of the gears. Life is a messy graph.

comment by prudence · 2021-06-21T23:31:20.728Z · LW(p) · GW(p)

The images (which make up most of the post) are missing now (20210622).

https://web.archive.org/web/20200809205218/https://www.lesswrong.com/posts/A2TmYuhKJ5MbdDiwa/when-gears-go-wrong has the post with images.

I found the post well worth reading. In fact, it is a critical addition to learning about gears-level thinking, in that it helps with deciding when and how to optimize its use.