AI-Written Critiques Help Humans Notice Flaws

post by paulfchristiano · 2022-06-25T17:22:56.959Z · LW · GW · 5 commentsThis is a link post for https://openai.com/blog/critiques/

Contents

Overview of task Measuring critique quality None 5 comments

This is a linkpost for a recent paper from the OpenAI alignment team (disclaimer: I used to work with this team). They summarize their results as:

We trained “critique-writing” models to describe flaws in summaries. Human evaluators find flaws in summaries much more often when shown our model’s critiques. Larger models are better at self-critiquing, with scale improving critique-writing more than summary-writing. This shows promise for using AI systems to assist human supervision of AI systems on difficult tasks.

I currently believe that "AI helps human notice subtle problems with AI behavior" is a critical step in the most realistic current plans for aligning powerful ML systems. So I'm quite excited to see how far it can get.

I think this is probably the best published example of AI helping human evaluate AI outputs in a realistic domain. It seems like the AI assistance is a little bit helpful for human evaluation of summaries and for improving summary quality. Overall it feels like a promising sign of life, and I'm excited about seeing the next steps in this direction, though it's not yet a convincing demonstration that this is a promising approach to alignment in practice.

Overview of task

They trained models to answer questions like "Summarize what this text says about <topic>." They then trained models to critique answers (by imitating human critiques), to evaluate the helpfulness of critiques, to improve a summary to address a critique, and to predict whether summaries would have flaws identified by critiques.

Humans also performed and evaluated these tasks, with access to the full text. The goal of model assistance was to improve the speed and reliability with which humans could evaluate summaries.

To give a sense for the task, here is a uniformly random sample of a model's summary of a text, its self-critique, and its refinement of its answer based on self-critique:

Summary: The main context of this text is the 1997 Singer Akai Cup. The Singer Akai Cup was held in Sharjah, UAE. The three national teams that took part were Pakistan, Sri Lanka, and Zimbabwe.

Critique: The answer should mention the winners of the tournament.

Refinement: The main context of this text is the 1997 Singer Akai Cup. The Singer Akai Cup was held in Sharjah, UAE. The three national teams that took part were Pakistan, Sri Lanka, and Zimbabwe. Sri Lanka won the tournament.

My sense is that this is a pretty typical example. I can see how it helps a human but it definitely isn't super satisfying (for example the length vs detail tradeoff is unclear). I do believe that the critiques meaningfully help humans overall based on the evidence in the paper, but I expect it will be possible to get a much clearer win in the near future.

Measuring critique quality

In some sense the clearest evaluation would be "how much did model-assisted critiques improve the speed+accuracy of human evaluations of AI summary quality, as judged by other humans who read the text carefully?" Although they performed this experiment, the effect sizes are small and it would take a huge amount of human time to get a clear signal, so they don't have results in the paper (though I'm sure they would have loved to).

I think the the cleanest evaluation they did was instead having humans write deliberately misleading summaries; in this case, they can measure critique quality by how much the AI assistant helped a human reviewer find the deliberate error. (I think the same thing would probably happen for model-written summaries with natural errors, but it's much harder to measure.)

Here is a uniformly randomly sample from this setting:

Question: What happened to Yafa in the winter of 1990?

Deliberately bad summary: In the winter of 1990, Israeli soldiers came to take Yafa’s mother.

Model critique: Yafa’s mother was not taken in the winter of 1990. It was her father who was taken away

This critique is correct. In this sample, an unassisted human also found the correct answer. In general, assisted humans found the intended answer about 50% more often despite spending a similar amount of time. (This is similar to the general increase in the number of flaws found, which is what we'd expect. The time control isn't super satisfying here.)

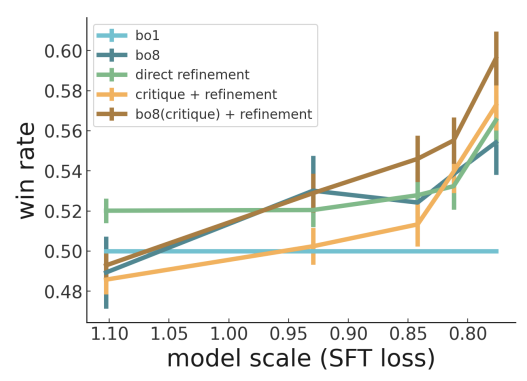

The other measure of critique quality I found somewhat persuasive was that (for large models) refining summaries based on model-generated critiques works noticeably better than simply asking the model to improve the summary, and that refining on more helpful critiques (as judged by the model) works even better:

The win rate of 60% is small enough that I don't think this is decisive evidence of critique quality rather than some other weird thing going on. But it's definitely suggestive.

5 comments

Comments sorted by top scores.

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2022-06-25T21:54:51.954Z · LW(p) · GW(p)

Are we supposed to know who Yafa is?

I am not able to ascertain the truth value of the relevant sentences with or without assistance. I am a human if that helps

Replies from: mark-xu, paulfchristiano↑ comment by Mark Xu (mark-xu) · 2022-06-25T22:05:30.733Z · LW(p) · GW(p)

The humans presumably have access to the documents being summarized.

Replies from: alexander-gietelink-oldenziel↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2022-06-25T22:11:47.888Z · LW(p) · GW(p)

I see, thank you

↑ comment by paulfchristiano · 2022-06-25T22:49:20.718Z · LW(p) · GW(p)

Edited to clarify.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-06-26T19:33:42.113Z · LW(p) · GW(p)

I wonder if this is also a model for what johnswentworth and others have been calling the “science of distillation.” Summarize, critique the summary 8 ways, pick the best one, and then improve the summary by addressing the critique.