Why does expected utility matter?

post by Marco Discendenti (marco-discendenti) · 2023-12-25T14:47:46.656Z · 1 comment

This is a question post.

Contents

- Expected monetary revenue
- Expected utility
- Answers: rossry (11), Tamsin Leake (5), rotatingpaguro (2)
- 1 comment

In the context of decision making under uncertainty, we consider two strategies: maximizing expected monetary revenue and maximizing expected utility. We give an argument showing that, under certain hypotheses, it is rational to maximize expected monetary revenue, and then we show that the argument does not carry over to expected utility. This leaves us with the question of how to justify the rationality of maximizing expected utility.

Expected monetary revenue

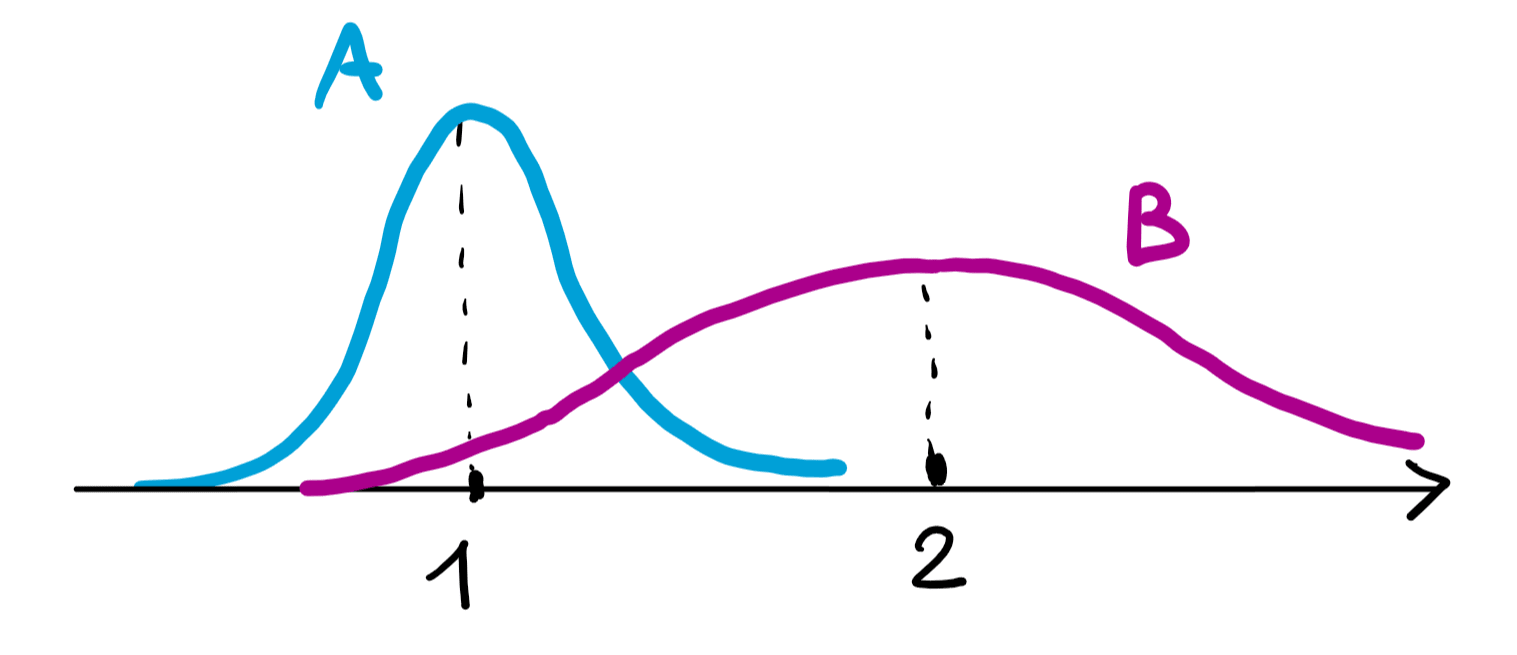

Suppose you have to choose one of two games, A and B, with expected returns of 1 and 2 dollars respectively, each with its own probability distribution of outcomes.

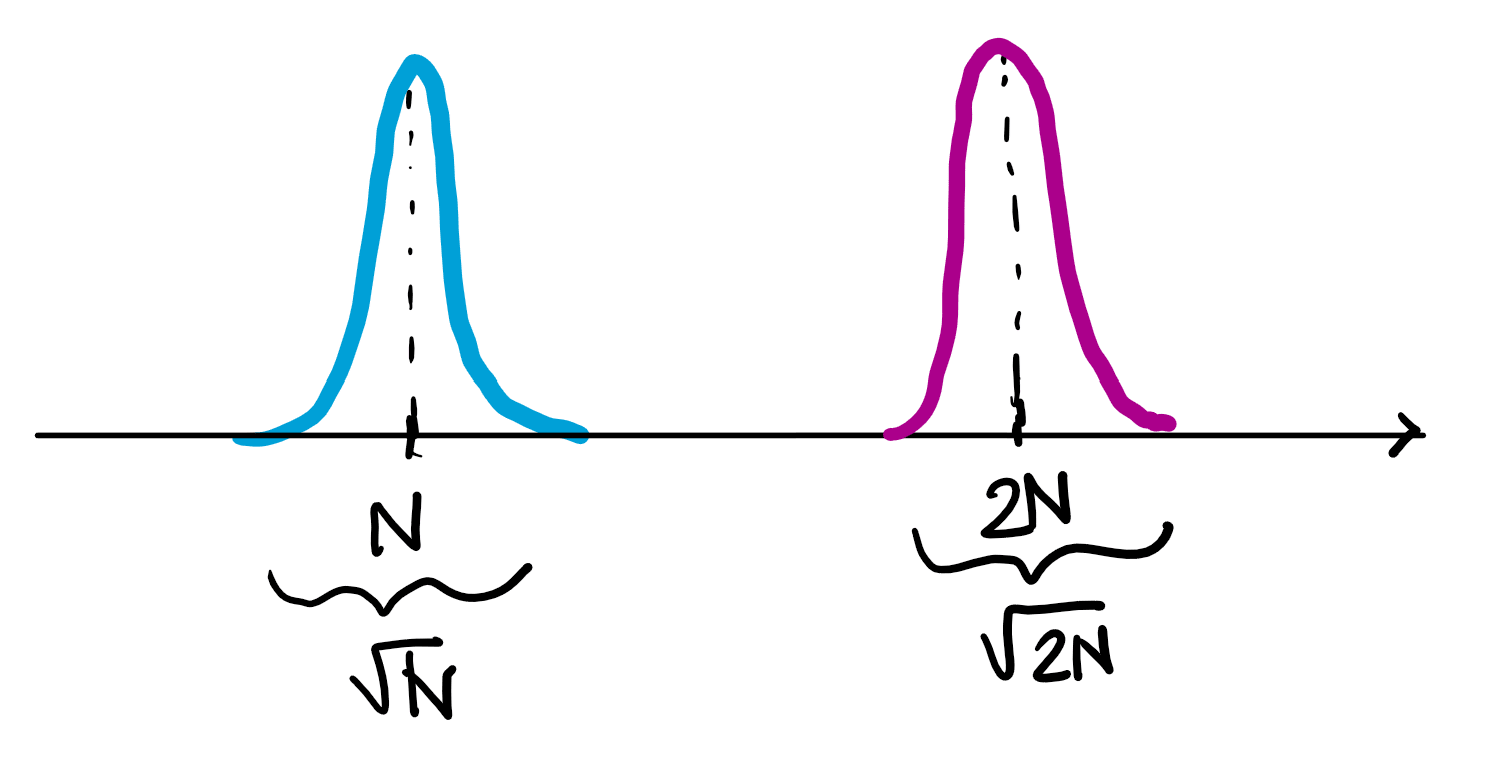

If you play many times, say N, the law of large numbers and the central limit theorem become relevant. The distributions of your total winnings from repeating A and from repeating B concentrate around N and 2N respectively. Their spread grows only like √N while the gap between their centers grows like N, so the two distributions become more and more sharply separated.
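The separation can be computed exactly for a pair of hypothetical games. The distributions in the figures are not recoverable here, so this sketch assumes that A pays 2 dollars and B pays 4 dollars, each with probability 0.5 (expected returns of 1 and 2 dollars, as in the text). It computes the probability that N plays of B produce strictly more money in total than N plays of A.

```python
from math import comb


def binom_pmf(n: int, p: float) -> list[float]:
    """Probability of k successes in n independent trials, for k = 0..n."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def prob_b_beats_a(n: int) -> float:
    """P(total from n plays of B > total from n plays of A).

    Hypothetical games: A pays 2 w.p. 0.5 and B pays 4 w.p. 0.5,
    so E[A] = 1 and E[B] = 2 per play.
    """
    pmf = binom_pmf(n, 0.5)  # both games win with probability 0.5
    total = 0.0
    for wins_a, pa in enumerate(pmf):
        for wins_b, pb in enumerate(pmf):
            if 4 * wins_b > 2 * wins_a:  # B's total strictly exceeds A's
                total += pa * pb
    return total


for n in (1, 10, 100):
    print(n, round(prob_b_beats_a(n), 6))
```

With a single play, B comes out strictly ahead only half the time. By N = 100 the probability is very close to 1, even though single plays of A and B overlap heavily.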

At this point, it is clear that it is better for you to play B many times than to play A many times. You can predict this in advance by calculating the expected winnings of A and B. So assuming you can make "lots" of choices between A and B, you must prefer the one with the higher expected profit. But what if you can only make one choice? If the distributions of A and B overlap, does the expected profit still matter?

Even if you only have to make this particular choice once, it could be one of a long sequence of many different choices, not always between A and B but between other sets of options. Even if the choices differ, we can still take advantage of the law of large numbers (LLN) and the central limit theorem. Suppose we face a large number of choices between two games:

- time 0: choose between games $A_0$ and $B_0$

- time 1: choose between $A_1$ and $B_1$

- time 2: ...

- ...

- time N: choose between $A_N$ and $B_N$

We can hope that, again, if you always choose the game with the higher expected return, statistical randomness becomes increasingly irrelevant for large N, and you will accumulate more money than a person following a different strategy.
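A rough sketch of the same effect for a sequence of different games, under assumptions that are not in the original: each choice is between two hypothetical two-outcome games whose prize (bounded in [0, 10]) and win probability are drawn at random. Because both expectations and variances of independent games add, the gap between always choosing the higher-EV game and always choosing the lower-EV game grows like N, while its standard deviation grows like √N. The standardized gap returned below therefore grows roughly like √N.

```python
import random


def two_outcome_moments(prize: float, p: float) -> tuple[float, float]:
    """Mean and variance of a game paying `prize` with probability p, else 0."""
    return p * prize, p * (1 - p) * prize**2


def advantage_z(n: int, seed: int = 0) -> float:
    """Standardized gap between 'always pick the higher-EV game' and
    'always pick the lower-EV game' after n different choices.

    Each choice is between two hypothetical bounded games whose prize
    (in [0, 10]) and win probability are drawn at random.
    """
    rng = random.Random(seed)
    gap = 0.0  # E[total_best] - E[total_worst]: expectations add
    var = 0.0  # Var[total_best - total_worst], treating all games as independent
    for _ in range(n):
        g1 = two_outcome_moments(rng.uniform(0, 10), rng.uniform(0.05, 0.95))
        g2 = two_outcome_moments(rng.uniform(0, 10), rng.uniform(0.05, 0.95))
        (m_hi, v_hi), (m_lo, v_lo) = sorted([g1, g2], reverse=True)
        gap += m_hi - m_lo
        var += v_hi + v_lo
    return gap / var**0.5


for n in (10, 100, 1000):
    print(n, round(advantage_z(n), 2))
```

Bounded prizes are what stop any single game from dominating the sum, which is the condition the text turns to next.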

For this hope to be realized, we need the payoffs of the games $A_i$ and $B_i$ to be uniformly bounded and "small enough" compared to N. This fails if, for example:

- a single game has infinite expectation, as in the St. Petersburg game;

- a single game has an expected revenue so large that it makes all the previous outcomes almost irrelevant;

- the expected revenues of the games in the sequence keep growing.
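To see why the St. Petersburg game breaks the argument, one can compute its expectation truncated after a given number of tosses. The sketch assumes the common formulation in which the game pays 2^k dollars if the first heads appears on toss k (probability 2^-k). Every toss then contributes exactly one dollar to the expectation, so the truncated mean equals the number of tosses allowed and grows without bound.

```python
from fractions import Fraction


def st_petersburg_truncated_mean(max_rounds: int) -> Fraction:
    """Expected payout of a St. Petersburg game cut off after max_rounds tosses.

    Assumed convention: a fair coin is tossed until the first heads; if that
    happens on toss k, the game pays 2**k (probability 2**-k). If no heads
    appears within max_rounds tosses, the payout here is 0.
    """
    return sum(
        (Fraction(1, 2**k) * 2**k for k in range(1, max_rounds + 1)),
        Fraction(0),
    )


for rounds in (10, 100, 1000):
    print(rounds, st_petersburg_truncated_mean(rounds))
```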

On the other hand, it can work if all the games have revenues within a bounded range of values and N is large enough compared to this range.

The ingredients that make it work are:

- the additivity of the money we win from the games (it is important that we can keep the money and that the total never resets);

- the central limit theorem for independent, non-identically distributed variables (e.g., the Lindeberg or Lyapunov versions).

To summarize: the strategy of always choosing the option that maximizes expected monetary revenue is indeed rational if you can keep (or spend) all the money you win and the choice is one of many choices whose individual means and standard deviations are small compared to the total amount of money you expect to accumulate.

Expected utility

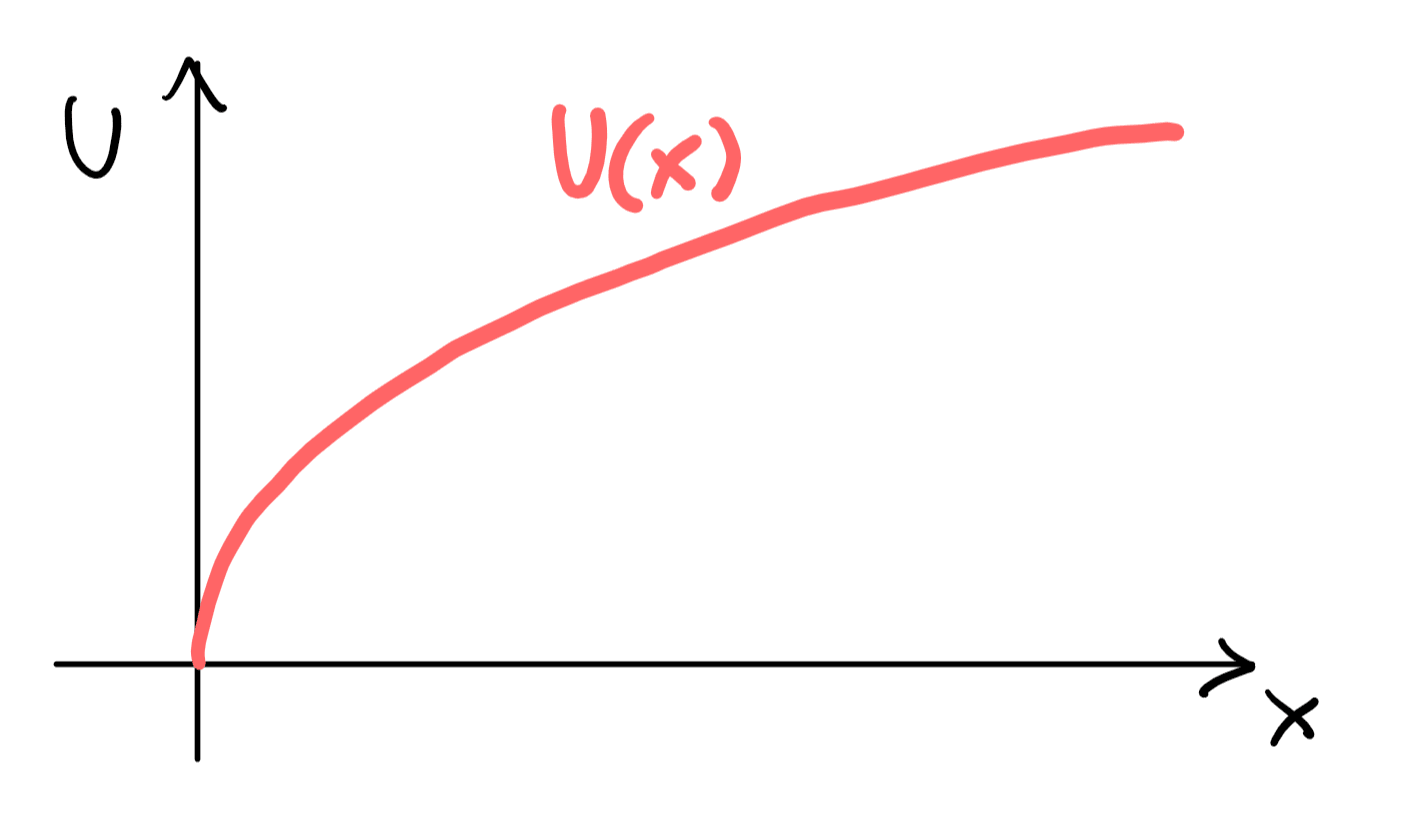

The effect of adding new money to our wealth can depend on the wealth we already have ($10,000 can mean much more to someone with no money than to a millionaire). We model this by defining a "utility" function that represents the impact of the extra money on the life of a specific person. We would expect this utility function to be increasing in the amount of money (the more the better) and its slope to be always decreasing (the richer we are, the less we care about one more dollar), i.e., concave, as in the graph above.

A rational agent is then expected to maximize not his monetary gain but his "utility" gain, if he can. But what happens when he has to make a choice under uncertainty with this new target?

Suppose we have to choose between playing game A and game B, each with some probability distribution of revenues. By analogy with what we said in the previous section about monetary revenue, we could be tempted to say that, instead of choosing the game with the maximum expected monetary revenue, we should choose the one with the maximum expected utility.

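Example: suppose game A pays 1 dollar with probability 0.5 and nothing otherwise, and game B pays 2 dollars with probability 0.3 and nothing otherwise. We are no longer interested in the expected revenues, that is:

$$E[A] = 0.5 \cdot 1 + 0.5 \cdot 0 = 0.5, \qquad E[B] = 0.3 \cdot 2 + 0.7 \cdot 0 = 0.6,$$

so we do not choose B just because $E[B] > E[A]$. Instead, we compute the expected utilities

$$E[u(A)] = 0.5\,u(1) + 0.5\,u(0), \qquad E[u(B)] = 0.3\,u(2) + 0.7\,u(0).$$

So if u is, for example, a suitably concave function (with a shape like the graph above), we can have $E[u(A)] > E[u(B)]$, and therefore we choose A.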
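The numbers in this example can be checked directly. The original does not say which utility function it used, so the sketch assumes the hypothetical concave choice u(x) = √x. With it, B has the higher expected monetary revenue (0.6 vs 0.5) but A has the higher expected utility (0.5 vs about 0.42).

```python
import math

# A lottery is a list of (probability, payoff in dollars) pairs.
GAME_A = [(0.5, 1.0), (0.5, 0.0)]
GAME_B = [(0.3, 2.0), (0.7, 0.0)]


def expected_value(lottery):
    return sum(p * x for p, x in lottery)


def expected_utility(lottery, u):
    return sum(p * u(x) for p, x in lottery)


# Hypothetical concave utility, chosen only for illustration.
u = math.sqrt

# Money favors B ...
print(expected_value(GAME_A), expected_value(GAME_B))
# ... but expected utility favors A.
print(expected_utility(GAME_A, u), expected_utility(GAME_B, u))
```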

But what if we have to make the same choice more than one time? Now the difference between monetary revenue and utility becomes more extreme:

- When we compute the expected monetary revenue of two games A and B played in sequence, we can just add the two expected revenues: $E[A + B] = E[A] + E[B]$. This works because money simply adds up.

- When we compute the expected utility of playing two games A and B in sequence, we are not allowed to add the two expected utilities: in general $E[u(A + B)] \neq E[u(A)] + E[u(B)]$. Playing game A (above) once gives $E[u(A)] = 0.5\,u(1) + 0.5\,u(0)$, but if you play the same game A twice there are 3 possible outcomes (2 wins, 1 win, 0 wins) with probabilities 0.25, 0.5, 0.25 and monetary revenues of 2, 1 and 0 dollars. So $E[u(A + A)] = 0.25\,u(2) + 0.5\,u(1) + 0.25\,u(0)$, which (for a concave u with $u(0) = 0$) is less than $2E[u(A)]$.

- The choice that maximizes expected utility in a single game can differ from the strategy that maximizes it over 2 games or over 100 games. It can happen that, if you can play only once, game A has the higher expected utility, BUT if you can play twice, you get more expected utility by playing B both times. Check this simple example.
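A minimal sketch of the non-additivity in the second point, again assuming the hypothetical u(x) = √x (which satisfies u(0) = 0). The distribution of the total winnings is built by convolving the single-game distributions. Expected money then doubles when A is played twice, while expected utility falls short of twice the single-game value.

```python
import math
from itertools import product


def play_sequence(games):
    """Distribution of total winnings from playing the given games independently.

    Each game is a list of (probability, payoff) pairs; the result maps each
    possible total payoff to its probability.
    """
    dist = {0.0: 1.0}
    for game in games:
        new = {}
        for (total, p_total), (p, x) in product(dist.items(), game):
            new[total + x] = new.get(total + x, 0.0) + p_total * p
        dist = new
    return dist


def expectation(dist, f=lambda x: x):
    return sum(p * f(x) for x, p in dist.items())


GAME_A = [(0.5, 1.0), (0.5, 0.0)]
u = math.sqrt  # hypothetical concave utility with u(0) = 0

once = play_sequence([GAME_A])
twice = play_sequence([GAME_A, GAME_A])  # {2.0: 0.25, 1.0: 0.5, 0.0: 0.25}

print(expectation(twice), 2 * expectation(once))  # money adds: 1.0 1.0
print(expectation(twice, u), 2 * expectation(once, u))  # utility does not
```

The convolution step is where the additivity of money enters: totals are added before u is applied, so the utility of the combined outcome is not the sum of the separate utilities.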

So, if you have to make a sequence of choices, there is basically no point in computing the expected utility of each single choice. You actually need to compute the expected utility of every possible sequence of choices and then pick the sequence that makes the expected utility as large as possible.
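The brute-force procedure described above can be sketched as follows, under the same hypothetical u(x) = √x. The sketch enumerates every sequence of n choices from {A, B}, computes the distribution of total winnings for each, and keeps the sequence with the highest expected utility of the total.

```python
import math
from itertools import product

GAMES = {
    "A": [(0.5, 1.0), (0.5, 0.0)],
    "B": [(0.3, 2.0), (0.7, 0.0)],
}


def total_distribution(sequence):
    """Distribution of total winnings when the named games are played in order."""
    dist = {0.0: 1.0}
    for name in sequence:
        new = {}
        for (total, p_total), (p, x) in product(dist.items(), GAMES[name]):
            new[total + x] = new.get(total + x, 0.0) + p_total * p
        dist = new
    return dist


def best_sequence(n, u):
    """Expected utility of the total for every length-n sequence; return the best."""
    scores = {
        seq: sum(p * u(x) for x, p in total_distribution(seq).items())
        for seq in product(GAMES, repeat=n)
    }
    return max(scores, key=scores.get), scores


choice, scores = best_sequence(2, math.sqrt)  # hypothetical utility u = sqrt
for seq, eu in sorted(scores.items(), key=lambda kv: -kv[1]):
    print("".join(seq), round(eu, 4))
```

With this particular u, playing A twice comes out on top. A different utility function can change the ranking, which is why the whole sequence has to be evaluated. The number of sequences grows as 2^n, and strategies that adapt to earlier outcomes (not covered by this sketch) would require searching over decision trees instead.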

But how did we come to the conclusion that comparing expected utilities is a meaningful way to make a rational decision? Because we derived a similar conclusion for expected monetary revenue, and utility looked like a refinement of that line of thought. But expected monetary revenue behaves very differently! It is additive, and this let us use the central limit theorem, which was crucial for justifying the use of the expectation in decisions under certain specific circumstances. The situation with expected utility is completely different: we cannot reproduce the argument above to justify the value of expected utility.

So here is the question we are left with: why should a rational agent maximize expected utility?

Answers

I believe this assumption typically comes from the von Neumann–Morgenstern utility theorem, which says that if your preferences are complete, transitive, continuous, and independent, then there is some utility function u such that your preferences are equivalent to "maximize expected u".

Those four assumptions have technical meanings:

- Complete means that for any A and B, you prefer A to B, or prefer B to A, or are indifferent between A and B.

- Transitive means that if you prefer A to B and prefer B to C, then you prefer A to C, and also that if you prefer D to E and are indifferent between E and F, then you prefer D to F.

- Continuous means that if you prefer A to X and X to Y, then there's some probability p such that you are indifferent between X and "p to get A, else Y".

- Independent means that if you prefer X to Y, then for any probability q < 1 and any other outcome B, you prefer "q to get B, else X" to "q to get B, else Y".
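One direction of the theorem is easy to check numerically: preferences defined by expected utility always satisfy independence, because the expected utility of a mixture is the mixture of expected utilities, E[u(qB + (1 − q)X)] = q·E[u(B)] + (1 − q)·E[u(X)]. The sketch uses hypothetical utilities over three outcomes and exact fractions. It illustrates this one step only and is not a proof of the theorem, whose hard direction is constructing u from the preferences.

```python
from fractions import Fraction
from itertools import product


def expected_utility(lottery, u):
    """lottery maps outcome -> probability; u maps outcome -> utility."""
    return sum(p * u[o] for o, p in lottery.items())


def mix(q, b, other):
    """The compound lottery 'with probability q get lottery b, else lottery other'."""
    outcomes = set(b) | set(other)
    return {o: q * b.get(o, 0) + (1 - q) * other.get(o, 0) for o in outcomes}


# Hypothetical utilities over three outcomes.
u = {"x": Fraction(0), "y": Fraction(3), "z": Fraction(10)}

lotteries = [
    {"x": Fraction(1)},
    {"y": Fraction(1)},
    {"x": Fraction(1, 2), "z": Fraction(1, 2)},
    {"y": Fraction(2, 3), "z": Fraction(1, 3)},
]
qs = [Fraction(k, 10) for k in range(10)]  # q = 0, 0.1, ..., 0.9 (all q < 1)

violations = 0
checked = 0
for X, Y, B in product(lotteries, repeat=3):
    if expected_utility(X, u) > expected_utility(Y, u):  # X strictly preferred
        for q in qs:
            checked += 1
            if not expected_utility(mix(q, B, X), u) > expected_utility(mix(q, B, Y), u):
                violations += 1
print(f"checked {checked} cases, independence violations: {violations}")
```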

In my opinion, the continuity assumption is the one most likely to be violated (in particular, it excludes preferences where "no chance of any X is worth any amount of Y"), so these assumptions aren't necessarily a given. But if you do satisfy them, then there's some utility function whose expected value, when maximized, describes your preferences.

↑ comment by Richard_Kennaway · 2023-12-26T17:46:01.677Z

There is a problem with completeness that requires studying the actual theorem and its construction of utility from preference. The preference function does not range over just the possible “outcomes” (which we suppose are configurations of the world or of some local part of it). It ranges over lotteries among these outcomes, as explicitly stated on the VNM page linked above. This implies that the idea of being indifferent between a sure gain of 1 util and a 0.1% chance of 1000 utils is already baked into the setup of these theorems, even before the proof constructs the utility function. A theorem cannot be used to support its assumptions.

The question, “Why should I maximise expected utility (or if not, what should I do instead)?” is a deep one which I don’t think has ever been fully answered.

The argument from iterated gambles requires that the utility of the gambles can be combined linearly. The OP points out that this is not the case if utility is nonlinear in the currency of the gambles.

Even when the utilities do combine linearly, there may be no long run. An example now well-known is one constructed by Ole Peters, in which the longer you play, the greater the linearly expected value, but the less likely you are to win anything. Ever more enormous payoffs are squeezed into an ever tinier part of probability space.
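The comment does not give the construction. As an assumption, this sketch uses the multiplicative coin game often used to illustrate Peters' ergodicity argument (wealth multiplied by 1.5 on heads and by 0.6 on tails), which may or may not be the example meant here. Expected wealth grows by 5% per round, yet the probability of ending ahead of where you started shrinks toward zero, because the typical per-round growth factor is √(1.5 × 0.6) ≈ 0.95 < 1.

```python
from math import comb, log


def expected_wealth(n, up=1.5, down=0.6):
    """E[W_n] / W_0 for a fair coin that multiplies wealth by `up` or `down` each round."""
    return ((up + down) / 2) ** n


def prob_ahead(n, up=1.5, down=0.6):
    """P(W_n > W_0): probability of ending with more than you started with."""
    return sum(
        comb(n, k) / 2**n  # probability of exactly k heads
        for k in range(n + 1)
        if k * log(up) + (n - k) * log(down) > 0  # log of final wealth ratio
    )


for n in (10, 100, 1000):
    print(n, f"E[W]={expected_wealth(n):.3g}", f"P(ahead)={prob_ahead(n):.3g}")
```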

Even gambles apparently fair in expectation can be problematic if their variances are infinite and so the Central Limit Theorem does not apply.

So iterated gambles do not answer the question.

Logarithmic utility does not help: just imagine games with the payouts correspondingly scaled up.
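To make "correspondingly scaled up" concrete, here is a worked example under an assumed construction (not spelled out in the comment): keep the St. Petersburg probabilities, with the first heads on toss k having probability $2^{-k}$, but pay $e^{2^k}$ dollars instead of $2^k$. With logarithmic utility of the payout, the expected utility is again infinite:

$$\mathbb{E}[\log W] = \sum_{k=1}^{\infty} 2^{-k} \log\left(e^{2^k}\right) = \sum_{k=1}^{\infty} 2^{-k} \cdot 2^{k} = \sum_{k=1}^{\infty} 1 = \infty.$$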

Bounded utility does not help, because there is no principled way to locate the bound, and because if the bound is set large enough finite versions of the problems with unbounded utility still show up. See SBF/FTX. Is that debacle offset by the alternate worlds in which the collapse never happened?

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-27T11:23:49.702Z

Very interesting observations. I wouldn't say the theorem is used to support its assumptions, because the assumptions don't speak about utils but only about preferences over possible outcomes and lotteries, but I see your point.

Actually, the assumptions implicitly say that you are not rational if you are unwilling to risk a $1,000,000,000,000 debt, with a small enough probability, rather than lose 1 cent for sure (this follows straightforwardly from the Archimedean property).
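The step from continuity to this conclusion can be written out. Let A be the status quo, X a sure loss of 1 cent, and Y a debt of $10^{12}$ dollars, so that A is preferred to X and X to Y. Continuity gives a probability p with X indifferent to "p to get A, else Y"; if the preferences are represented by an expected utility u, then

$$u(X) = p\,u(A) + (1-p)\,u(Y) \quad\Longrightarrow\quad p = \frac{u(X) - u(Y)}{u(A) - u(Y)}.$$

Assuming, purely for illustration, that u is linear in dollars with $u(A) = 0$, $u(X) = -0.01$ and $u(Y) = -10^{12}$:

$$p = \frac{10^{12} - 0.01}{10^{12}} = 1 - 10^{-14},$$

so the agent must be indifferent between losing 1 cent for sure and facing the debt with probability $10^{-14}$.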

↑ comment by Dagon · 2023-12-25T17:51:13.773Z

Completeness is also in question for any real-world application, because it implies consistency over time.

↑ comment by RHollerith (rhollerith_dot_com) · 2023-12-26T16:08:42.779Z

No, it does not imply constancy or consistency over time, because the 4 axioms do not stop us from adding to the utility function a real-valued argument that represents the moment in time that the definition refers to.

In other words, the 4 axioms do not constrain us to consider only utility functions over world states: utility functions over "histories" are also allowed, where a "history" is a sequence of world states evolving over time (or, equivalently, a function that takes a number representing an instant in time and returns a world state).
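A minimal illustration of a utility over histories, assuming a hypothetical world state that is just a wealth level and a hypothetical discount factor. Two histories that visit the same states in a different order get different utilities, so the value of a state can depend on when it occurs without leaving the expected-utility framework.

```python
def history_utility(history, discount=0.9):
    """Hypothetical utility of a whole history rather than of a single state.

    `history` is a list of world states indexed by time t = 0, 1, 2, ...;
    here a state is just a dict with a "wealth" entry. The weight
    discount**t makes the value of a state depend on *when* it occurs.
    """
    return sum(discount**t * state["wealth"] for t, state in enumerate(history))


early = [{"wealth": 10.0}, {"wealth": 0.0}]
late = [{"wealth": 0.0}, {"wealth": 10.0}]
print(history_utility(early), history_utility(late))
```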

↑ comment by Said Achmiz (SaidAchmiz) · 2023-12-26T20:03:51.508Z

Indeed there are quite a few interesting reasons to be skeptical of the completeness axiom.

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-25T18:22:22.287Z

It does seem quite reasonable to maximize utility if you can choose an option that achieves it; my point is why you should maximize expected utility when the choice is made under uncertainty.

↑ comment by Christopher King (christopher-king) · 2023-12-26T15:54:04.333Z

If you aren't maximizing expected utility, you must choose one of the four axioms to abandon.

↑ comment by Richard_Kennaway · 2023-12-27T15:26:50.727Z

Or abandon some part of its assumed ontology. When the axioms seem ineluctable yet the conclusion seems absurd, the framework must be called into question.

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-27T10:32:13.109Z

OK, we have a theorem that says that if we are not maximizing the expected value of some function "u", then our preferences are apparently "irrational" (they violate some of the axioms). But assume we already know our utility function before applying the theorem: is there an argument that shows how and why preferring B over A (or being indifferent between them) is irrational if E(U(A)) > E(U(B))?

↑ comment by Richard_Kennaway · 2023-12-27T15:35:15.935Z

In the context of utility theory, a utility function is by definition something whose expected value encodes all your preferences.

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-27T20:28:43.768Z

You don't necessarily need to start from the preference and use the theorem to define the function, you can also start from the utility function and try to produce an intuitive explanation of why you should prefer to have the best expected value

↑ comment by Richard_Kennaway · 2023-12-27T21:22:53.839Z

What does it mean for something to be a “utility” function? Not just calling it that. A utility function is by definition something that represents your preferences by numerical comparison: that is what the word was coined to mean.

Suppose we are given a utility function defined just on outcomes, not distributions over outcomes, and a set of actions that each produce a single outcome, not a distribution over outcomes. It is clear that the best action is that which selects the highest utility outcome.

Now suppose we extend the utility function to distributions over outcomes by defining its value on a distribution to be its expected value. Suppose also that actions in general produce not a single outcome with certainty but a distribution over outcomes. It is not clear that this extension of the original utility function is still a “utility” function, in the sense of a criterion for choosing the best action. That is something that needs justification. The assumption that this is so is already baked into the axioms of the various utility function theorems. Its justification is the deep problem here.

Joe Carlsmith gives many arguments for Expected Utility Maximisation, but it seems to me that all of them are just hammering on a few intuition pumps, and I do not find them conclusive. On the other hand, I do not have an answer, whether a justification of EUM or an alternative.

Eliezer has likened the various theorems around utility to a multitude of searchlights coherently pointing in the same direction. But the problems of unbounded utility, non-ergodicity, paradoxical games, and so on (Carlsmith mentions them in passing but does not discuss them) look to me like another multitude of warning lights also coherently pointing somewhere labelled “here be monsters”.

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-29T04:11:52.577Z

There are infinitely many utility functions that represent the same preferences over outcomes. For example, if outcomes are monetary, then any increasing function is equivalent on outcomes, but not when you try to extend it to distributions and lotteries via the expected value.
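This point can be made concrete with three increasing functions, chosen here for illustration, that rank sure amounts of money identically but disagree on a simple lottery: a sure 1 dollar versus a fair coin flip paying 0 or 2 dollars.

```python
import math

# Lottery: list of (probability, dollars) pairs.
sure_one = [(1.0, 1.0)]
coin_flip = [(0.5, 0.0), (0.5, 2.0)]


def eu(lottery, u):
    return sum(p * u(x) for p, x in lottery)


# All three are increasing on x >= 0, so they rank sure outcomes identically.
candidates = {"linear": lambda x: x, "sqrt": math.sqrt, "square": lambda x: x**2}

for name, u in candidates.items():
    print(name, eu(sure_one, u), eu(coin_flip, u))
```

The linear function is indifferent, the square root prefers the sure dollar, and the square prefers the coin flip.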

I wonder whether, given a specific function u(...) on every outcome, you can also choose "rational" preferences (as in the theorem) according to some operator on the distributions other than the average: for example, what about the L^p norm, or the sup of the distribution (if they are continuous)?

Or is the expected value the unique operator with the property stated by the vNM theorem?

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-29T14:42:43.356Z

It seems to me that it is actually easy to define a function $u'(\cdot) \ge 0$ such that the preferences are represented by $E(u'^2)$ and not by $E(u')$: just take $u' = \sqrt{u}$. You can do the same for any value of the exponent, so the expectation does not play a special role in the theorem: you can replace it with any $L^p$ norm.
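A sketch of the construction in this comment, on hypothetical lotteries over nonnegative utilities. With u′ = u^(1/p), the L^p norm of u′ equals (E[u])^(1/p), an increasing function of E[u], so it ranks lotteries exactly as E[u] does.

```python
# Hypothetical lotteries over nonnegative utilities: lists of (probability, u).
lotteries = {
    "safe": [(1.0, 4.0)],
    "risky": [(0.5, 0.0), (0.5, 9.0)],
    "mixed": [(0.2, 1.0), (0.8, 5.0)],
}


def expected_u(lottery):
    return sum(prob * u for prob, u in lottery)


def lp_norm_of_root(lottery, p_exp):
    """L^p norm of u' = u**(1/p): (E[(u')**p])**(1/p)."""
    return sum(prob * (u ** (1 / p_exp)) ** p_exp for prob, u in lottery) ** (1 / p_exp)


def rank(score):
    """Lottery names sorted from most to least preferred under `score`."""
    return sorted(lotteries, key=lambda name: score(lotteries[name]), reverse=True)


print("E[u]:", rank(expected_u))
for p_exp in (1, 2, 3):
    print(f"L^{p_exp}:", rank(lambda lot: lp_norm_of_root(lot, p_exp)))
```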

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-27T10:20:37.128Z

Apparently the axioms can be considered to talk about preferences, not necessarily about probabilistic expectations. Am I wrong in seeing them in this way?

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-26T16:07:51.736Z

You might want to read this post (it's also on LessWrong, but the images are broken there).

This is not a complete answer, it's just a way of thinking about the matter that was helpful to me in the past, and so might be to you too:

Saying that you ought to maximise the expected value of a real valued function of everything still leaves a huge amount of freedom; you can encode what you want by picking the right function over the right things.

So you can think of it as a language: a conventional way of expressing decision strategies. If you can write a decision strategy as $\arg\max_a \mathbb{E}[u \mid a]$, then you have written the problem in the language of utility.

Like any general-purpose language, this won't stop you from expressing anything in general, but it will make certain things easier to express than others. If you know at least two languages, you will sometimes have encountered short words that can't be efficiently translated into a single word of the other language.

Similarly, thinking that you ought to maximise expected utility, and then asking "what is my utility then?", naturally suggests to your mind certain kinds of strategies rather than others.

Some decisions may need many epicycles to be cast as utility maximisation. That this indicates a problem with utility maximisation, with the specific decision, or with the utility function, is left to your judgement.

There is currently no theory of decision that just works for everything, so there is no totally definitive argument for maximizing expected utility. With further experience, you'll have to learn when and how not to apply it.

↑ comment by Marco Discendenti (marco-discendenti) · 2023-12-27T20:11:47.327Z

Thank you for your insight. The problem with this view of utility "just as a language" is that sometimes I feel that the conclusions of utility maximization are not "rational", and I cannot figure out why they should indeed be rational if the language is not saying anything that is meaningful to my intuition.

↑ comment by rotatingpaguro · 2023-12-27T22:52:52.093Z

if the language is not saying anything that is meaningful to my intuition.

When you learn a new language, you eventually form new intuitions. If you stick to existing intuitions, you do not grow. Current intuition does not generalize to the utmost of your potential ability.

When I was a toddler, I never grew new concepts by rigorous construction; yet I ended up mostly knowing what was around me. Then, to go further, I employed abstract thought, and had to mold and hew my past intuitions. Some things I intuitively perceived turned out to be likely false: hallucinations.

Later, when I was learning Serious Math, I forgot that learning does not work by a straight stream of logic and proofs, and instead demanded that what I was reading both match my intuitions, and be properly formal and justified. Quite the ask!

The problem with this view of utility "just as a language"

My opinion is that if you think the problem lies in seeing it as a language, a new lens on the world, specifically because the new language does not match your present intuition, then you are pointing at the wrong problem.

If instead you meant to prosaically plead for object-level explanations that would clarify, oh uhm sorry I don't actually know, I'm an improvised teacher, I actually have no clue, byeeeeee
