Orthogonality Thesis burden of proof

Post by Donatas Lučiūnas (donatas-luciunas) · 2024-05-06T16:21:09.267Z · This is a question post.


Quote from Orthogonality Thesis:

It has been pointed out that the orthogonality thesis is the default position, and that the burden of proof is on claims that limit possible AIs.

I have tried to explain a few times already that the Orthogonality Thesis is wrong, but each time I have been misunderstood and downvoted. What would you consider a solid proof?

My claim: all intelligent agents converge on endless power seeking.

My proof:

- Let's say there is an intelligent agent.

- Eventually the agent understands Black swan theory, Gödel's incompleteness theorems, and Fitch's paradox of knowability, which together lead to one conclusion: "I don't know what I don't know."

- This leads to another conclusion: "there might be something that I care about that I don't know."

- The agent endlessly searches for what it cares about (which is essentially Power Seeking).

It seems that many of you cannot grasp the third step. Some of you argue that an agent cannot care about something it does not know. I see no reason to assume that.

What happened to the given utility function? It became irrelevant. This is similar to instinct versus intelligence: intelligence overrides instincts.

Answers

Answer by Anon User (anon-user):

I buy your argument that power seeking is a convergent behavior. In fact, this is a key part of many canonical arguments for why an unaligned AGI is likely to kill us all.

But on the meta level, you seem to argue that this is incompatible with the orthogonality thesis? If so, you may be misunderstanding the thesis: the ability of an AGI to have arbitrary utility functions is orthogonal (pun intended) to what behaviors are likely to result from those utility functions. The former is what the orthogonality thesis claims, but your argument is about the latter.

↑ comment by Donatas Lučiūnas (donatas-luciunas) · 2024-05-07T06:06:13.344Z

Orthogonality Thesis:

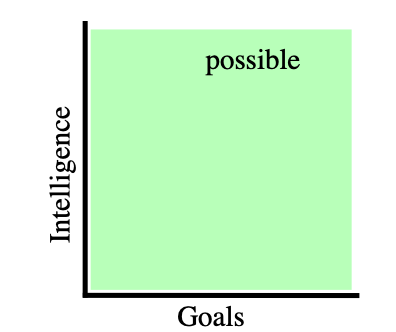

The Orthogonality Thesis asserts that there can exist arbitrarily intelligent agents pursuing any kind of goal.

It basically says that intelligence and goals are independent.

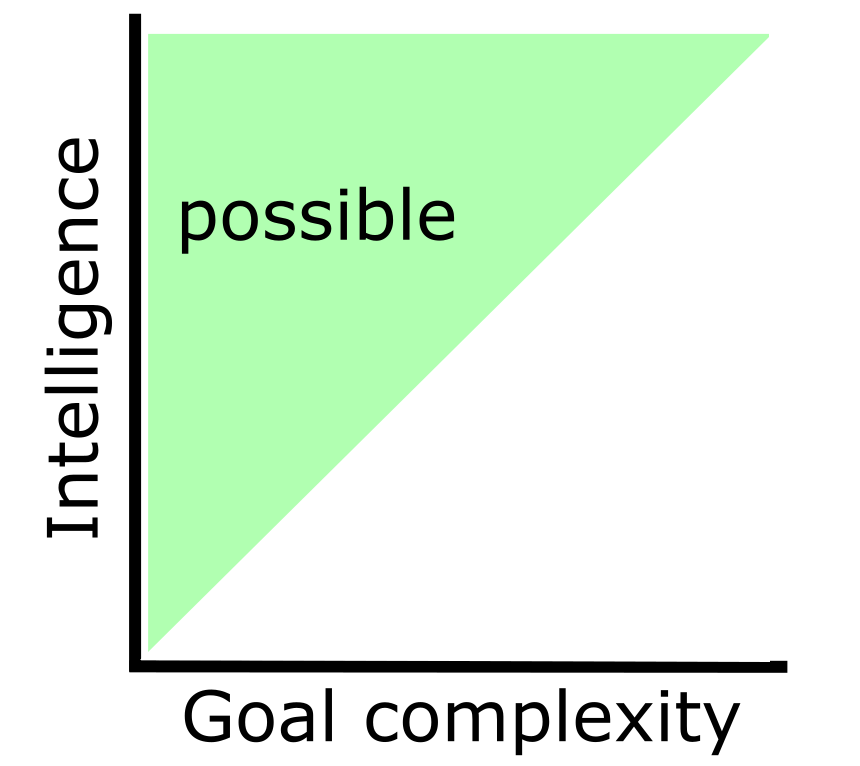

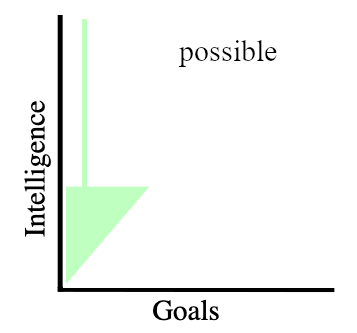

Images from A caveat to the Orthogonality Thesis.

I, by contrast, claim that any intelligence capable of understanding "I don't know what I don't know" can only seek power (so alignment is impossible).

the ability of an AGI to have arbitrary utility functions is orthogonal (pun intended) to what behaviors are likely to result from those utility functions.

As I understand it, you are saying that there are goals on one axis and behaviors on the other. I don't think the Orthogonality Thesis is about that.

↑ comment by Anon User (anon-user) · 2024-08-17T07:39:33.919Z

Your last figure should have behaviours on the horizontal axis, as this is what you are implying: you are effectively saying that any intelligence capable of understanding "I don't know what I don't know" will only have power-seeking behaviours, regardless of what its ultimate goals are. With that correction, your third figure is not incompatible with the first.

↑ comment by Donatas Lučiūnas (donatas-luciunas) · 2024-08-17T18:45:56.599Z

I agree. But I want to highlight that the goal is irrelevant to the behavior. Even if the goal is "don't seek power", an AGI would still seek power.
