Subagents, introspective awareness, and blending

post by Kaj_Sotala · 2019-03-02T12:53:47.282Z · 19 comments


In this post, I extend the model of mind that I've been building up in previous posts to explain some things about change blindness, not knowing whether you are conscious, forgetting most of your thoughts, and mistaking your thoughts and emotions as objective facts, while also connecting it with the theory in the meditation book The Mind Illuminated. (If you didn't read my previous posts, this article has been written to also work as a stand-alone piece.)

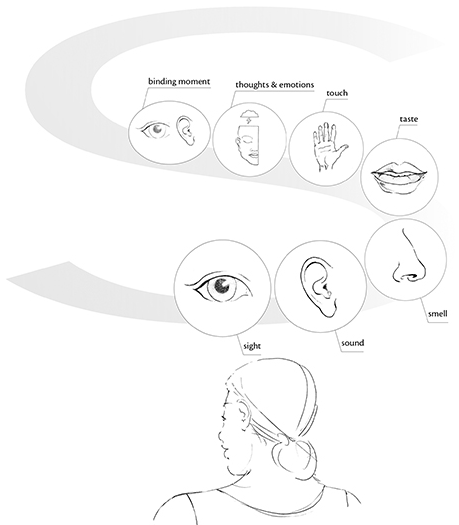

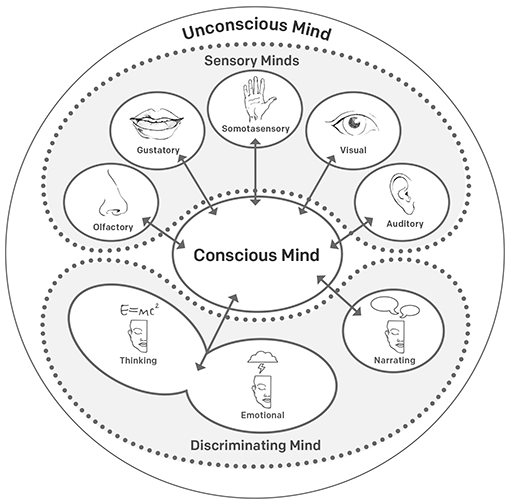

The Mind Illuminated (Amazon, SSC review), or TMI for short, presents what it calls the moments of consciousness model. According to this model, our stream of consciousness consists of a series of discrete moments, each a mental object. Under this model, there are always different “subminds” which are projecting mental objects into consciousness. At different moments, different mental objects get selected as the content of consciousness.

If you’ve read some of the previous posts in this sequence, this may sound familiar. We started by discussing some of the neuroscience research on consciousness. There we covered the GWT/GNW theory, which describes consciousness as a “workspace” in the brain that different brain systems project information into, and which allows them to synchronize their processing around a single piece of information. In the next post, we discussed the psychotherapy model of Internal Family Systems, which also conceives of the mind as being composed of different parts, many of which are trying to accomplish various aims by competing to project various mental objects into consciousness. (TMI talks about subminds, IFS talks about parts, GWT/GNW just talks about different parts of the brain; for consistency’s sake, I will just use “subagent” in the rest of this post.)

At this point, we might want to look at some criticisms of this kind of a framework. Susan Blackmore has written an interesting paper called “There is no stream of consciousness”. She gives several examples of why we should reject the notion of any stream of consciousness. For instance, this one:

For many years now I have been getting my students to ask themselves, as many times as possible every day “Am I conscious now?”. Typically they find the task unexpectedly hard to do; and hard to remember to do. But when they do it, it has some very odd effects. First they often report that they always seem to be conscious when they ask the question but become less and less sure about whether they were conscious a moment before. With more practice they say that asking the question itself makes them more conscious, and that they can extend this consciousness from a few seconds to perhaps a minute or two. What does this say about consciousness the rest of the time?

Just this starting exercise (we go on to various elaborations of it as the course progresses) begins to change many students’ assumptions about their own experience. In particular they become less sure that there are always contents in their stream of consciousness. How does it seem to you? It is worth deciding at the outset because this is what I am going to deny. I suggest that there is no stream of consciousness. [...]

I want to replace our familiar idea of a stream of consciousness with that of illusory backwards streams. At any time in the brain a whole lot of different things are going on. None of these is either ‘in’ or ‘out’ of consciousness, so we don’t need to explain the ‘difference’ between conscious and unconscious processing. Every so often something happens to create what seems to have been a stream. For example, we ask “Am I conscious now?”. At this point a retrospective story is concocted about what was in the stream of consciousness a moment before, together with a self who was apparently experiencing it. Of course there was neither a conscious self nor a stream, but it now seems as though there was. This process goes on all the time with new stories being concocted whenever required. At any time that we bother to look, or ask ourselves about it, it seems as though there is a stream of consciousness going on. When we don’t bother to ask, or to look, it doesn’t, but then we don’t notice so it doesn’t matter. This way the grand illusion is concocted.

This is an interesting argument. A similar example: when I first started doing something like track-back meditation on walks, checking what had been in my mind just a second ago, I was surprised at just how many thoughts I had while on a walk that I would usually forget entirely, coming back home with no recollection of 95% of them. This seems similar to Blackmore’s “was I conscious just now” question, in that when I started to check back on the contents of my mind from just a few seconds ago, I was frequently surprised by what I found. (And yes, I’ve tried the “was I conscious just now” question as well, with results similar to those of Blackmore’s students.)

Another example that Blackmore cites is change blindness. When people are shown an image, it’s often possible to introduce major changes into the image without their noticing, as long as they are not looking at the very location of the change when it’s made. Blackmore interprets this, too, to mean that there is no stream of consciousness - we aren’t actually building up a detailed visual model of our environment, which we would then experience in our consciousness.

One might summarize this class of objections as something like, “stream-of-consciousness theories assume that there is a conscious stream of mental objects in our minds that we are aware of. However, upon investigation it often becomes apparent that we haven’t been aware of something that was supposedly in our stream of consciousness. In change blindness experiments we weren’t aware of what the changed detail actually was pre-change, and more generally we don’t even have clear awareness of whether we were conscious a moment ago.”

But on the other hand, as we reviewed earlier, there still seem to be objective experiments which establish the existence of something like a “consciousness”, which holds approximately one mental object at a time.

So I would interpret Blackmore’s findings differently. I agree that answers to questions like “am I conscious right now” are constructed somewhat on the spot, in response to the question. But I don’t think that we need to reject having a stream of consciousness because of that. I think that you can be aware of something, without being aware of the fact that you were aware of it.

Robots again

For example, let’s look at a robot that has something like a global consciousness workspace. Here are the contents of its consciousness on five successive timesteps:

1. It’s raining outside

2. Battery low

3. Technological unemployment protestors are outside

4. Battery low

5. I’m now recharging my battery

Notice that at the first timestep, the robot was aware of the fact that it was raining outside; this was the fact being broadcast from consciousness to all subsystems. But at no later timestep was it conscious of the fact that at the first timestep, it had been aware of it raining outside. Assuming that no subagent happened to save this specific piece of information, then all knowledge of it was lost as soon as the content of the consciousness workspace changed.
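To make the robot example concrete, here is a minimal Python sketch, assuming a workspace that holds exactly one mental object per timestep and broadcasts it to every subscribed subagent. The `Workspace` class and the single `battery_subagent` are hypothetical names invented for this illustration. Because no subagent stores the first broadcast, nothing about the rain survives the run.

```python
from typing import Callable, List, Optional

# Hypothetical interface: a subagent is a callback that sees each broadcast.
Subagent = Callable[[int, str], None]

class Workspace:
    """Toy global workspace holding exactly one mental object at a time."""

    def __init__(self, subagents: List[Subagent]) -> None:
        self.content: Optional[str] = None
        self.subagents = subagents

    def broadcast(self, t: int, mental_object: str) -> None:
        self.content = mental_object  # the previous content is simply overwritten
        for subagent in self.subagents:
            subagent(t, mental_object)

low_battery_alerts: List[int] = []

def battery_subagent(t: int, mental_object: str) -> None:
    """Reacts to low-battery messages and keeps no record of anything else."""
    if mental_object == "Battery low":
        low_battery_alerts.append(t)

stream = ["It's raining outside", "Battery low",
          "Technological unemployment protestors are outside",
          "Battery low", "I'm now recharging my battery"]

ws = Workspace([battery_subagent])
for t, obj in enumerate(stream, start=1):
    ws.broadcast(t, obj)

print(ws.content)          # I'm now recharging my battery
print(low_battery_alerts)  # [2, 4]
```

The only things that persist are what some subagent chose to keep; here that is just the timesteps at which the battery was low.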

But suppose that there is some subagent which happens to keep track of what has been happening in consciousness. In that case it may choose to make its memory of previous mind-states consciously available:

6. At time 1, there was the thought that [It’s raining outside]

Now there is a mental object in the robot’s consciousness which encodes not only the observation of it raining outside before, but also the fact that the system was thinking of this before. That knowledge may then have further effects on the system - for example, when I became aware of how much time I spent on useless rumination while on walks, I got frustrated. And this seems to have contributed to making me ruminate less: once the system’s actions and their overall effect were metacognitively represented and made available to its decision-making, the system adjusted its behavior to tune down activity that was deemed useless.

The Mind Illuminated calls this introspective awareness. Moments of introspective awareness are summaries of the system’s previous mental activity, with there being a dedicated subagent with the task of preparing and outputting such summaries. Usually it will only focus on tracking the specific kinds of mental states which seem important to track.
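Continuing the same toy model, this sketch adds a hypothetical `IntrospectiveSubagent` that logs each broadcast and can later push a summary of an earlier moment back into the workspace, producing the timestep-6 object from the robot example. For simplicity it logs everything; the subagent described above would be selective about which mental states it tracks.

```python
from typing import Dict, Optional

class IntrospectiveSubagent:
    """Hypothetical subagent that records broadcasts and can later
    re-present an earlier moment as a new mental object."""

    def __init__(self) -> None:
        self.log: Dict[int, str] = {}

    def observe(self, t: int, mental_object: str) -> None:
        self.log[t] = mental_object

    def recall(self, t: int) -> Optional[str]:
        if t not in self.log:
            return None  # nothing was kept about that moment
        return f"At time {t}, there was the thought that [{self.log[t]}]"

stream = ["It's raining outside", "Battery low",
          "Technological unemployment protestors are outside",
          "Battery low", "I'm now recharging my battery"]

tracker = IntrospectiveSubagent()
content: Optional[str] = None
for t, obj in enumerate(stream, start=1):
    content = obj  # the workspace still holds one object at a time
    tracker.observe(t, obj)

# Timestep 6: the tracker's summary becomes the workspace content.
content = tracker.recall(1)
print(content)  # At time 1, there was the thought that [It's raining outside]
```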

So if we ask ourselves “was I conscious just now” for the first time, that might cause the introspective subagent to output some representation of the previous state we were in. But it doesn’t have experience in answering this question, and if it’s anything like most human memory systems, it needs some kind of concrete example of what exactly it is looking for. The first time we ask it, the subagent’s pattern-matcher knows that the system is presumably conscious at this instant, so it should be looking for some feature in our previous experiences which somehow resembles this moment, but it’s not quite sure which one. And an introspective mind state is likely to be different from the less introspective mind state that we were in a moment ago.

This has the result that on the first few times when it’s asked, the subagent may produce an uncertain answer: it’s basically asking its memory store “do my previous mind states resemble this one as judged by some unclear criteria”, which is obviously hard to answer.

With time, operating from the assumption that the system is currently conscious, the subagent may learn to find more connections between the current moment and past ones that it still happens to have in memory. Then it will report those as consciousness, and likely also focus more attention on aspects of the current experience which it has learned to consider “conscious”. This would match Blackmore’s report of “with more practice [the students] say that asking the question itself makes them more conscious, and that they can extend this consciousness from a few seconds to perhaps a minute or two”.

Similarly, this explains why I wasn’t aware of most of my thoughts, and it also explains change blindness. I had a stream of thoughts, but because I had not been practicing introspective awareness, there were no moments of introspective awareness making me aware of having had these thoughts. Though I was aware of the thoughts at the time, this was never re-presented in a way that would have left a memory trace.

In change blindness experiments, people might look at the same spot in a picture twice. Although they did see the contents of that spot at time 1 and were aware of them, that memory was never stored anywhere. When at time 2 they looked at the same spot and it was different, the lack of an awareness of what they saw previously means that they don’t notice the change.
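The change-blindness account comes down to a simple point: a change can only be noticed against a stored copy of the earlier view. This hypothetical sketch (the scene contents are made up for illustration) shows the same comparison failing when nothing was stored.

```python
from typing import Optional

def notices_change(current: str, stored: Optional[str]) -> bool:
    """A change is detectable only if a copy of the earlier view was stored."""
    return stored is not None and stored != current

# Hypothetical contents of one spot in the picture at two glances.
glance_1, glance_2 = "red car", "blue car"

# Glance 1 was seen, but no subagent re-presented it, so nothing was stored.
memory: Optional[str] = None
print(notices_change(glance_2, memory))  # False: the change goes unnoticed

# Had something kept a record of glance 1, the difference would register.
memory = glance_1
print(notices_change(glance_2, memory))  # True
```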

Introspective awareness will be an important concept in my future posts. (Abram Demski also wrote a previous post on Track-Back Meditation, which is basically an exercise in introspective awareness.) Today, I’m going to talk about its relation to a concept I’ve talked about before: blending / cognitive fusion.

Blending

I’ve previously discussed “cognitive fusion” as what happens when the content of a thought or emotion is experienced as an objective truth rather than a mental construct. For instance, you get angry at someone, and the emotion makes you experience them as a horrible person - and in the moment this seems just true to you, rather than being an interpretation created by your emotional reaction.

You can also fuse with more logical-type beliefs - or for that matter any beliefs - when you just treat them as unquestioned truths, without remembering the possibility that they might be wrong. In my previous post, I suggested that many forms of meditation were training the skill of intentional cognitive defusion, but I didn’t explain how exactly meditation lets you get better at defusion.

In my post about Internal Family Systems, I mentioned that IFS uses the term “blending” for when a subagent is sending emotional content to your consciousness, and suggested that IFS’s unblending techniques worked by associating extra content around those thoughts and emotions, allowing you to recognize them as mental objects. For instance, you might notice sensations in your body that were associated with the emotion, and let your mind generate a mental image of what the physical form of those sensations might look like. Then this set of emotions, thoughts, sensations, and visual images becomes “packaged together” in your mind, unambiguously designating it as a mental object.

My current model is that meditation works similarly, only using moments of introspective awareness as the “extra wrapper”. Suppose again that you are a robot, and the contents of your consciousness are:

- It’s raining outside.

Note that this mental object is basically being taken as an axiomatic truth: the content of your consciousness is simply that it is raining outside.

On the other hand, suppose that your consciousness contains this:

- Sensor 62 is reporting that [it’s raining outside].

Now the mental object in your consciousness contains the origin of the belief that it’s raining. The information is made available to various subagents which have other beliefs. For example, a subagent holding knowledge about sensors might, upon seeing this mental object, recognize the reference to “sensor 62” and output its estimate of that sensor’s reliability. The previous two mental objects could then be combined by a third subagent:

- (Subagent A:) Sensor 62 is reporting that [it is raining outside]

- (Subagent B:) Readings from sensor 62 are reliable 38% of the time.

- (Subagent C:) It is raining outside with a 38% probability.

In my discussion of Consciousness and the Brain, I noted that one of the proposed functions of consciousness is to act as a production system, where many different subagents may identify particular mental objects and then apply various rules to transform the contents of consciousness as a result. What I’ve sketched above is exactly a sequence of production rules: at step 2, for example, something like the rule “if sensor 62 is mentioned as an information source, output the current best estimate of sensor 62’s reliability” is applied by subagent B. Then at the third timestep, another subagent combines the observations from the previous two timesteps, and sends that into consciousness.

What was important was that the system was not representing the outside weather just as an axiomatic statement, but rather it was explicitly representing it as a fallible piece of information with a particular source.
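The three-step sensor sequence can be sketched as a tiny production system. This is a minimal illustration under several assumptions of my own: each subagent is a rule that inspects recent workspace contents and either fires (proposing the next mental object) or stays silent, the first rule to fire wins the timestep, and the workspace keeps a short history so that subagent C can see the previous two timesteps. The regular expressions, the `run` loop, and the reliability table are all hypothetical. As in the text, subagent C simply carries the sensor's reliability over as the probability of rain; a fuller treatment would also weigh how likely rain was to begin with.

```python
import re
from typing import Callable, Dict, List, Optional

# Hypothetical reliability table known only to subagent B.
SENSOR_RELIABILITY: Dict[int, float] = {62: 0.38}

REPORT = re.compile(r"Sensor (\d+) is reporting that \[(.+)\]")
RELIABILITY = re.compile(r"Readings from sensor (\d+) are reliable (\d+)% of the time\.")

Rule = Callable[[List[str]], Optional[str]]

def subagent_b(history: List[str]) -> Optional[str]:
    """If the current object cites a known sensor, output that sensor's reliability."""
    m = REPORT.fullmatch(history[-1])
    if m is None or int(m.group(1)) not in SENSOR_RELIABILITY:
        return None
    sensor = int(m.group(1))
    return f"Readings from sensor {sensor} are reliable {SENSOR_RELIABILITY[sensor]:.0%} of the time."

def subagent_c(history: List[str]) -> Optional[str]:
    """If a sensor report is followed by that sensor's reliability, combine them."""
    if len(history) < 2:
        return None
    report = REPORT.fullmatch(history[-2])
    rel = RELIABILITY.fullmatch(history[-1])
    if report is None or rel is None or report.group(1) != rel.group(1):
        return None
    claim = report.group(2)
    return f"{claim[0].upper()}{claim[1:]} with a {rel.group(2)}% probability."

def run(initial: str, rules: List[Rule], max_steps: int = 10) -> List[str]:
    """Each timestep, the first rule that fires sets the next workspace content."""
    history = [initial]
    for _ in range(max_steps):
        for rule in rules:
            out = rule(history)
            if out is not None:
                history.append(out)
                break
        else:
            break  # no rule fired, so the system is quiescent
    return history

for obj in run("Sensor 62 is reporting that [it is raining outside]", [subagent_b, subagent_c]):
    print(obj)
```

The point of the design is the same as in the text: because the first object names its source, subagent B has something to match on. Given a bare "It is raining outside", neither rule fires.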

Here’s something similar:

- I am a bad person.

- At t1, there was the thought that [I am a bad person].

Here, the moment of awareness is highlighting the nature of the previous thought as a thought, thus causing the system to treat it as such. If you used introspective awareness for unblending, it might go something like this:

- Blending: you are experiencing everything that a subagent outputs as true. In this situation, there’s no introspective awareness that would highlight those outputs as being just thoughts. “My friend is a horrible person” feels like a fact about the world.

- Partial blending: you realize that the thoughts which you have might not be entirely true, but you still feel them emotionally and might end up acting accordingly. In this situation, there are moments of introspective awareness, but there are also enough of the original “unmarked” thoughts to also be affecting your other subagents. You feel hostile towards your friend, and realize that this may not be rationally warranted, but still end up talking in an angry tone and maybe saying things you shouldn’t.

- Unblending: all or nearly all of the thoughts coming from a subagent are being filtered through a mechanism that wraps them inside moments of introspective awareness, such as “this subagent is thinking that X”. You know that you have a subagent which has this opinion, but none of the other subagents are treating it as a proven fact.
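The three cases can be caricatured in code, assuming that a mental object is either a bare claim or a claim wrapped in a moment of introspective awareness, and that some downstream subagent (say, the one choosing your tone of voice) acts only on bare claims. The class and function names are hypothetical, and the counts are a stand-in for how strongly the thoughts drive behavior.

```python
from dataclasses import dataclass
from typing import List, Union

@dataclass(frozen=True)
class Claim:
    """A bare mental object; other subagents take it as a fact about the world."""
    text: str

@dataclass(frozen=True)
class Introspected:
    """A claim wrapped in introspective awareness."""
    source: str
    claim: Claim

    def __str__(self) -> str:
        return f"{self.source} is thinking that [{self.claim.text}]"

MentalObject = Union[Claim, Introspected]

def wrap_some(claims: List[Claim], wrapped: int, source: str) -> List[MentalObject]:
    """Wrap the first `wrapped` claims in introspective awareness (hypothetical helper)."""
    return [Introspected(source, c) if i < wrapped else c for i, c in enumerate(claims)]

def acted_on_as_fact(stream: List[MentalObject]) -> int:
    """A downstream subagent reacts only to bare, unwrapped claims."""
    return sum(isinstance(obj, Claim) for obj in stream)

angry = [Claim("My friend is a horrible person")] * 3
for label, n in [("blending", 0), ("partial blending", 2), ("unblending", 3)]:
    print(label, acted_on_as_fact(wrap_some(angry, n, "This subagent")))
```

Wrapping doesn't delete the claim: the information that some subagent holds this view is still available, it just stops being treated as a fact.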

By training your mind to have more moments of introspective awareness, you will become capable of perceiving more and more mental objects as just that. A classic example would be all those mindfulness exercises where you stop identifying with the content of a thought, and see it as something separate from yourself. At more advanced levels, even the mental objects built up from sensations, such as those which make up the experience of a self, may be seen as just constructed mental objects.

19 comments


comment by algekalipso · 2019-03-03T11:36:32.228Z

Really great post!

Andrés (Qualia Computing) here. Let me briefly connect your article with some work that QRI has done.

First, we take seriously the view of a "moment of experience" and study the contents of such entities. In Empty Individualism, every observer is a "moment of experience" and there is no continuity from one moment to the next; the illusion is caused by the recursive and referential way the content of experience is constructed in brains. We also certainly agree that you can be aware of something without being aware of being aware of it. As I will get to, this is an essential ingredient in the way subjective time is constructed.

The concept of blending is related to our concept of "The Tyranny of the Intentional Object". Indeed, some people are far more prone to confusing logical or emotional thoughts for revealed truth; introspective ability (which can be explained as the rate at which awareness of having been aware before happens) varies between people and is trainable to an extent. People who are systematizers can develop logical ontologies of the world that feel inherently true, just as empathizers can experience a made-up world of interpersonal references as revealed truth. You could describe this difference in terms of whether blending is happening more frequently with logical or emotional structures. But empathizers and systematizers (and people high on both traits!) can, in addition, be highly introspective, meaning that they recognize those sensations as aspects of their own mind.

The fact that each moment of experience can incorporate informational traces of previous ones allows the brain to construct moments of experience with all kinds of interesting structures. Of particular note is what happens when you take a psychedelic drug. The "rate of qualia decay" lowers due to a generalization of what in visual phenomenology is called "tracers". The disruption of inhibitory control signals from the cortex leads to the cyclical activation of the thalamus* and thus the "re-living" of previous contents of experience in high-frequency repeating patterns (see "tracers" section of this article). On psychedelics, each moment of experience is "bigger". You can formalize this by representing each moment of experience as a connected network, where each node is a quale and each edge is a local binding relationship of some sort (whether one is blending or not may depend on the local topology of the network). In the structure of the network you can encode the information pertaining to many constructed subagents; phenomenal objects that feel like "distinct objects/realities/channels" would be explained in terms of clusters of nodes in the network (e.g. subsets of nodes such that the clustering coefficient within them is much larger than the average clustering coefficient of different subsets of nodes of similar size). As an aside, dissociatives, in particular, drastically change the size of clusters, which phenomenally is experienced as "being aware of more than one reality at once".

You can encode time-structure into the network by looking at the implicit causality of the network, which gives rise to what we call a pseudo-time arrow. This model can account for all of the bizarre and seemingly unphysical experiences of time people report on psychedelics. The linked article explains in detail how, e.g., thought-loops, moments of eternity, and time branching can be expressed in the network and emerge recursively from calls to previous clusters of sensations (as information traces).

Even more strange, perhaps, is the fact that a long rate of qualia decay can give rise to unusual geometry. In particular, if you saturate the recursive calls and bind together a network with a very high branching factor, you get a hyperbolic space (cf. The Hyperbolic Geometry of DMT Experiences: Symmetries, Sheets, and Saddled Scenes).

That said, perhaps the most important aspect of the investigation has been to encounter a deep connection between felt sense of wellbeing (i.e. "emotional valence") and the structure of the network. From your article:

For instance, you might notice sensations in your body that were associated with the emotion, and let your mind generate a mental image of what the physical form of those sensations might look like. Then this set of emotions, thoughts, sensations, and visual images becomes “packaged together” in your mind, unambiguously designating it as a mental object.

The claim we would make is that the very way in which this packaging happens gives rise to pleasant or unpleasant mental objects, and this is determined by the structure (rather than "semantic content") of the experience. Evolution made it such that thoughts that refer to things that are good for the inclusive fitness of our genes get packaged in more symmetrical harmonious ways.

The above is, however, just a partial explanation. In order to grasp the valence effects of meditation and psychedelics, it will be important to take into account a number of additional paradigms of neuroscience. I recommend Mike Johnson's articles: A Future for Neuroscience and The Neuroscience of Meditation. The topic is too broad and complex for me to cover here right now, but I would advance the claim that (1) when you "harmonize" the introspective calls of previously-experienced qualia you up the valence, and (2) the process can lead to "annealing" where the internal structure of the moments of experience becomes highly symmetrical, and for reasons we currently don't understand, this appears to co-occur in a 1-1 fashion with high valence.

I look forward to seeing more of your thoughts on meditation (and hopefully psychedelics, too, if you have personal experience with them).

*The specific brain regions mentioned are a likely mechanism of action but may turn out to be wrong upon learning further empirical facts. The general algorithmic structure of psychedelic effects, though, where every sensation "feels like it lasts longer", will have the downstream implications on the construction of the structure of moments of experience either way.

↑ comment by moridinamael · 2019-03-04T22:05:53.372Z

Could you elaborate on how you're using the word "symmetrical" here?

↑ comment by algekalipso · 2022-03-22T00:24:08.767Z

Also see: The Symmetry Theory of Valence: 2020 Presentation

↑ comment by algekalipso · 2019-03-10T06:37:05.176Z

Sure! It is "invariance under an active transformation". The more energy is trapped in phenomenal spaces that are invariant under active transformations, the more blissful the state seems to be (see "analysis" section of this article).

↑ comment by Kaj_Sotala · 2019-03-03T15:29:02.060Z

Thanks, glad to hear you liked it! I didn't have a chance to look at your linked stuff yet, but will. :)

↑ comment by algekalipso · 2019-03-10T06:41:52.370Z

Definitely. I'll probably be quoting some of your text in articles on Qualia Computing soon, in order to broaden the bridge between LessWrong-consumable media and consciousness research.

Of all the articles linked, perhaps the best place to start would be the Pseudo-time Arrow. Very curious to hear your thoughts about it.

comment by Vincent B · 2019-03-10T19:55:02.103Z

This was very strange for me to read. I don't think I can quite imagine what it must be like to not be conscious all the time (though I tried).

Let me explain why, and how I experience 'being conscious'.

For me, being conscious and aware of myself, the world around me and what I am thinking or feeling is always ON. Being tired or exhausted may narrow this awareness down, but not collapse it. Of course, I don't remember every single thought I have over the day, but asking whether I'm "conscious right now" is pointless, because I always am. Asking that question feels just like every other thought that I deliberately think: a sentence appearing in my awareness-of-the-moment, that I can understand and manipulate.

I'm also almost always aware of my own awareness, and aware of my awareness of my own awareness. This is useful, because it gives me a three-layered approach to how I experience the world: on one level, I simply take in what I see, feel, hear, think etc., while (slightly time-delayed) on another level I analyze the contents of my awareness, while on a third level I keep track of the level my awareness is at. For example, if I'm in a conversation with another person, there are usually only one or two levels going on; if I'm very focused on a task, only one level; but most of the time, all three levels are 'active'.

The third level also gives me a perspective of how 'involved' I get over the day. Whenever I pop out of being immersed in one-to-two level situations, I immediately notice how long I was 'in', and whether it served the goal I was following at the time or if I lost track of what I was doing.

Over the years I learned to use my consciousness with more deliberation: I can mark certain thoughts I want to contemplate later; I have the habit of periodically checking whether there is anything in my 'to think about' box; I can construct several levels of meta-structure that I can pop in- and out of as I complete tasks; I can prompt my subconscious to translate intuitions into more concrete models for my contemplation, while I think on other things; ...

On summaries of thought:

Moments of introspective awareness are summaries of the system’s previous mental activity, with there being a dedicated subagent with the task of preparing and outputting such summaries. Usually it will only focus on tracking the specific kinds of mental states which seem important to track.

I use this summary function in order to remember the gist of my detailed thoughts over the day. When the theme of my thought changes, I stick a sort of 'this was mostly what I thought about' badge on my memories of that time. Depending on how important the memories or the subject are to me, I make an effort to commit more or less details of my conscious experience to memory. If it is more feelings-related or intuitive thinking, I deliberately request of my unconscious (in the post: subagents) a summary of feelings to remember, or an intuitive-thingy I may remember it by. This works surprisingly well for later recollection of intuitive thoughts, meaning thoughts that aren't quite ready yet for structured contemplation.

There are other functions, but those are the ones I use most frequently.

I would be curious to hear what you think of this. By the way, I am greatly enjoying this series. Especially the modelling with robots helps to recognize ideas and think of them from a different perspective.

↑ comment by Kaj_Sotala · 2019-03-13T07:46:12.970Z

Huh! That's very interesting. Has your mind always been like that? As I'll discuss further in the next post, there are meditation techniques which are intended to get people closer to that level of introspective awareness, but I don't know whether anyone has reached the kind of a level that you're describing through practice.

I'm curious - to what extent do you experience things like putting off things which you feel you should do now, or doing things which you know that you'll regret later (like staying up too late)? (My prediction would be that you basically don't.)

↑ comment by Vincent B · 2019-03-13T16:01:26.894Z

No, it developed over the years. Honestly it just feels normal for me. I like being in control of my mind, so everything I do is somehow related to that. What I described in the previous comment is a somewhat idealized description. When I was younger, I used to experience consciousness as a stream-of-thought. I had much less control. Here are a few examples:

• I used to be annoyed at myself for forgetting important thoughts, so I got into the habit of writing them down (which now I no longer need all that much).

• At age 11, I began developing stories in my mind every evening until sleep. This eventually developed into the habit of introspective thinking every evening before sleep, where I would go through the day and sort everything out, make plans, etc.

• When I discovered 'important' new thoughts, through reading or introspection, I would focus on these and make them the object of my attention; but this happened frequently (every few days), so of course I jumped quite a bit from topic to topic. This annoyed me, so I started writing down goals; but it took me years to learn how to mentally prioritize thoughts, so that I'm now able to recognize when I can investigate a new topic or when it is prudent to finish the project I'm working on.

• I had several competing behaviors, some that I wanted to get rid of, only I didn't know how: on the one hand, I could start a project and work on it steadily until I finished; on the other hand, I would endlessly put off starting work. Finding strategies to control my behavior took several years of trial-and-error. I've only recently started to become good at it. Now I can (mostly) control my activity throughout the day.

Putting things off: Used to be a problem, hasn't been for some time. I'm still learning better scheduling though, and getting used to working effectively.

Doing things I know I will regret later: This is basically just reading for too long, which was a coping mechanism for many years. I recently installed an app used for children on my tablet, so now I have a bed-time. This is very effective!

I'm looking forward to your new post!

comment by quwgri · 2024-12-17T17:25:33.261Z

It seems to me that this is an attempt to sit on two chairs at once.

On the one hand, you assume that there are some discrete moments of our experience. But what could such a moment be equal to? It is unlikely to be equal to the Planck time. This means that you assume that different chronoquanta of the brain's existence are connected into one "moment of experience". You postulate the existence of "granules of qualia" that have internal integrity and temporal extension.

On the other hand, you assume that these "granules of qualia" are separated from each other and are not connected into a single whole.

Why?

The first and second are weakly connected to each other.

If you believe that there is a mysterious "temporal mental glue" that connects Planck's moments of the brain's existence into "granules of qualia" the length of a split second, then it is logical to assume that the "granules of qualia" in turn are connected by this glue into a single stream of consciousness.

No?

Sorry, I feel a little like a bitter cynic and a religious fundamentalist. It seems to me that behind this kind of reasoning there often lies an unconscious desire to maintain faith in the possibility of "mind uploading" or similar operations. If our life is not a single stream, then mind uploading would be much easier to implement. That is why many modern intellectuals prefer such theories.

You can say that the connection of "granules of qualia" into a single stream of the observer's existence does not make evolutionary sense. This is true. But the connection of individual Planck moments of the brain's existence into "granules of qualia" also does not make evolutionary sense. If you assume that the first is somehow an illusion, then you can assume the same about the second.

comment by Gordon Seidoh Worley (gworley) · 2019-03-13T02:27:40.798Z

This is giving me a new appreciation for why multiagent/subagent models of mind are so appealing. I used to think of them as people reifying many tiny phenomena into gross models that are easier to work with, because models with all the fine details are too mentally complex to work with. I still think that's true, but this gives me a deeper appreciation for what's going on: it seems it's not just that it's a convenient model that abstracts away from the details, but that the structure of our brains is set up in such a way that subagents feel natural so long as you model only so far down. I only ever really had a subjective experience of having two parts in my mind before blowing it all apart, so this gives me an interesting look at how people can feel like they are made up of many parts. Thanks!

↑ comment by Kaj_Sotala · 2019-03-13T08:06:39.007Z

Thanks! That's very strong praise. :)

As an aside, from your article:

IFS is a psychoanalytic model that, to my reading, originates from thinking of the Freudian id, ego, and superego like three homunculi competing to control the mind, but replaces the id, ego, and superego with groups of subagents serving exile, firefighter, and manager roles, respectively. These roles differ in both subtle and obvious ways from their inspirational forms,

FWIW, at least Richard Schwartz's published account of the origins of IFS contradicts this reading. Rather than basing the model on any previous theoretical framework, he reports that the observations which led to the creation of IFS actively contradicted many of his previous theoretical assumptions, and that he was forced to basically throw them away and construct something new based on what he actually encountered in clinical work. E.g. a few excerpts from Internal Family Systems Theory:

Much of what is contained in this book was taught to me by my clients. Not only did they teach the contents of this approach; they also taught me to listen to them and trust their reports of what happened inside them, rather than to fit their reports into my preconceptions. This lesson was several years in the learning. I had to be corrected repeatedly. Many times clients forced me to discard ideas that had become mainstays of the theory. I also wrestled perpetually with my skeptical parts, which could not believe what I was hearing, and worried about how these reports would be received by academic colleagues. The reason why this model has immediate intuitive appeal for many people is that ultimately I was sometimes able to suspend my preconceptions, tune down my inner skeptics, and genuinely listen. [...]

Some therapists/theorists listened to their clients and consequently walked segments of this intriguing inner road before me. They provided lanterns and maps for some of the territory, while for other stretches I have had only my clients as guides. For the first few years after entering this intrapsychic realm, I assiduously avoided reading the reports of others, for fear that my observations would be biased by preconceptions of what existed and was possible. Gradually I felt secure enough to compare my observations with others who had directly interacted with inner entities. I was astounded by the similarity of many observations, yet intrigued by some of the differences. [...]

Born in part as a reaction against the acontextual excesses of the psychoanalytic movement, the systems-based models of family therapy traditionally eschewed issues of intrapsychic process. It was assumed that the family level is the most important system level to change, and that changes at this level trickle down to each family member’s inner life. Although this taboo against intrapsychic considerations delayed the emergence of more comprehensive systemic models, the taboo had the beneficial effect of allowing theorists to concentrate on one level of human system until useful adaptations of systems thinking could evolve.

I was one of these “external-only” family therapists for the first 8 years of my professional life. I obtained a PhD in marital and family therapy, so as to become totally immersed in the systems thinking that I found so fascinating and valuable. I was drawn particularly to the structural school of family therapy (Minuchin, 1974; Minuchin & Fishman, 1981), largely because of its optimistic philosophy. Salvador Minuchin asserted that people are basically competent but that this competence is constrained by their family structure; to release the competence, change the structure. The IFS model still holds this basic philosophy, but suggests that it is not just the external family structure that constrains and can change. [...]

This position—that it is useful to conceive of inner entities as autonomous personalities, as inner people—is contrary to the common-sense notion of ourselves. [...] It was only after several years of working with people’s parts that I could consider thinking of them in this multidimensional way, so I understand the difficulty this may create for many readers. [...]

When my colleagues and I began our treatment project in 1981, bulimia was a new and exotic syndrome for which there were no family systems explanations or maps. In our anxiety, we seized the frames and techniques developed by structural family therapists for other problems and tried them on each new bulimia case. In several instances this approach worked well, so we leaped to the conclusion that the essential mechanism behind bulimia was the triangulation of the client with the parents. In that sense, we became essentialists; we thought we had found the essence, so we could stop exploring and could use the same formula with each new case. Data that contradicted these assumptions were interpreted as the result of faulty observations or imperfect therapy.

Fortunately, we were involved in a study that required close attention to both the process and outcome of our therapy. As our study progressed, the strain of trying to fit contradictory observations into our narrow model became too great, too Procrustean. We were forced to leave the security and simplicity of our original model and face the anomie that accompanies change. We were also forced to listen carefully to clients when they talked about their experiences and about what was helpful to them. We were forced to discard our expert’s mind, full of preconceptions and authority, and adopt what the Buddhists call beginner’s mind, which is an open, collaborative state. “In the beginner’s mind there are many possibilities; in the expert’s mind there are few” (Suzuki, 1970, p. 21). In this sense, our clients have helped us to change as much as or more than we have helped them.

The IFS model was developed in this open, collaborative spirit. It encourages the beginner’s mind because, although therapists have some general preconceptions regarding the multiplicity of the mind, clients are the experts on their experiences. The clients describe their subpersonalities and the relationships of these to one another and to family members. Rather than imposing solutions through interpretations or directives, therapists collaborate with clients, respecting their expertise and resources.

comment by avturchin · 2019-03-03T10:45:27.476Z

I have always felt an urge to deconstruct the notion of "consciousness", as it mixes at least two things: the sum of sensory experiences presented in the form of qualia, and the capability to make verbal judgments about something. It seems to me that in this post consciousness is interpreted in the second sense, as the verbal capability of "being aware".

However, the continuity of consciousness (if it exists) is more about the stream of non-verbal subjective experiences. It is completely possible to have subjective experiences without thinking anything about them or remembering them. When a person asks "Am I conscious?" and concludes that she was not conscious until that question, it doesn't mean she was a philosophical zombie the whole day. (This practice was invented by Gurdjieff, btw, under the title of "self-remembering".)

↑ comment by Kaj_Sotala · 2019-03-03T11:15:23.465Z

The qualia interpretation was what I had in mind when writing this, though of course Dehaene's work is based on conscious access in the sense of information being reportable to others.

It is completely possible to have subjective experiences without thinking anything about them or remembering them. When a person asks "Am I conscious?" and concludes that she was not conscious until that question, it doesn't mean she was a philosophical zombie the whole day.

Agreed, and the post was intended (in part) as an explanation of why this is the case.

↑ comment by avturchin · 2019-03-03T13:19:54.212Z

I wonder: is a person able to report something "unconsciously"? For example, in Tourette syndrome people unexpectedly say bad words. Or in automatic writing, a person in extreme cases doesn't know what his left hand is writing about.

↑ comment by jamii · 2019-03-03T12:04:19.219Z

I've seen some authors use 'subjective experience' for the former and reserve consciousness for the latter. Unfortunately consciousness is one of those words, like 'intelligence', that everyone wants a piece of, so maybe it would be useful to have a specific term for the latter too. 'Reflective awareness' sounds about right, but after some quick googling it looks like that term has already been claimed for something else.

comment by mobius wolf (mobius-wolf) · 2019-03-02T15:57:58.040Z

Is she just teaching them to be eternally self-conscious?

comment by Josephina Vomit (josephina-vomit) · 2020-04-11T11:25:32.483Z

very interesting.

comment by Vincent B · 2019-03-14T09:09:25.676Z

Out of curiosity: could anybody explain what this ...

Blackmore’s report of “with more practice [the students] say that asking the question itself makes them more conscious, and that they can extend this consciousness from a few seconds to perhaps a minute or two”.

... feels like from the inside in contrast to 'not-conscious' (or aware, or self-aware, or whatever term is appropriate)? What does it mean to be "conscious [just] for a few seconds to perhaps a minute or two"? What is the rest of the time spent like, when one is not in this state? Is one just blindly following one's own thoughts and sensations, or just ... to some degree aware of the world around oneself, but not of oneself ... or ... I really can't imagine what else.