The shallow reality of 'deep learning theory'

post by Jesse Hoogland (jhoogland) · 2023-02-22T04:16:11.216Z · LW · GW · 11 comments

This is a link post for https://www.jessehoogland.com/article/0-the-shallow-reality-of-deep-learning-theory

Contents

- Why theory?

- Outline

Produced under the mentorship of Evan Hubinger as part of the SERI ML Alignment Theory Scholars Program - Winter 2022 Cohort

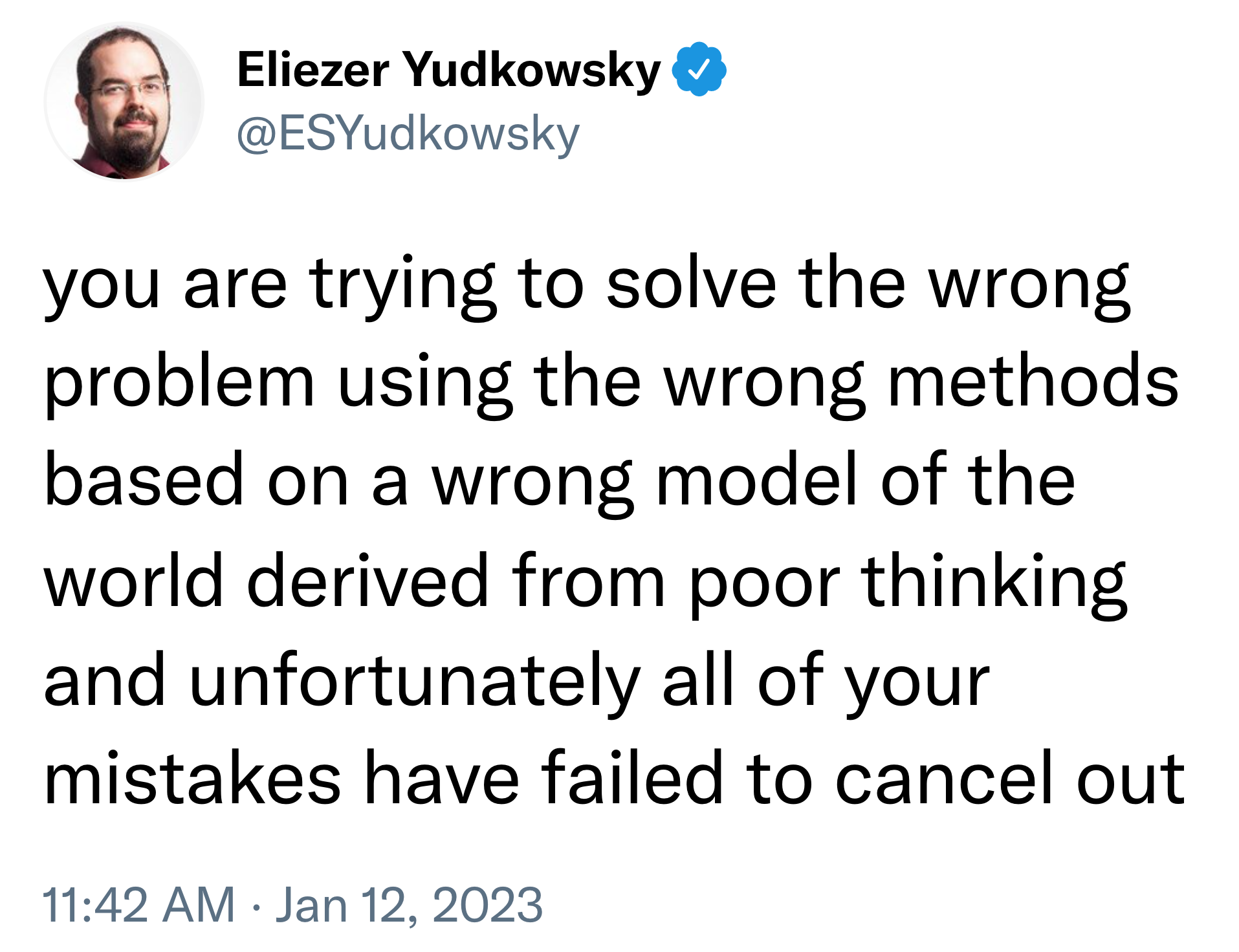

Most results under the umbrella of "deep learning theory" are not actually deep, about learning, or even theories.

This is because classical learning theory makes the wrong assumptions, takes the wrong limits, uses the wrong metrics, and aims for the wrong objectives. Learning theorists are stuck in a rut of one-upmanship, vying for vacuous bounds that don't say anything about any systems of actual interest.

(Okay, not really.)

In particular, I'll argue throughout this sequence that:

- Empirical risk minimization is the wrong framework, and risk is a weak foundation.

- In approximation theory, the universal approximation results are too general (they do not constrain efficiency) while the "depth separation" results meant to demonstrate the role of depth are too specific (they involve constructing contrived, unphysical target functions).

- Generalization theory has only two tricks, and they're both limited:

  - Uniform convergence is the wrong approach, and model class complexities (VC dimension, Rademacher complexity, and covering numbers) are the wrong metric. Understanding deep learning requires looking at the microscopic structure within model classes.

  - Robustness to noise is an imperfect proxy for generalization, and techniques that rely on it (margin theory, sharpness/flatness, compression, PAC-Bayes, etc.) are oversold.

- Optimization theory is a bit better, but training-time guarantees involve questionable assumptions, and the obsession with second-order optimization is delusional. Also, the NTK is bad. Get over it.

- At a higher level, the obsession with deriving bounds for approximation/generalization/learning behavior is misguided. These bounds serve mainly as political benchmarks rather than a source of theoretical insight. More attention should go towards explaining empirically observed phenomena like double descent (which, to be fair, is starting to happen).
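To make "vacuous" concrete for the uniform-convergence item above, here is a minimal sketch of one textbook VC-style bound on the gap between true and empirical 0-1 risk. The function name `vc_generalization_gap` and the example numbers are illustrative assumptions, as is treating the VC dimension as roughly the parameter count; none of this is taken from a specific paper's experiments.

```python
import math


def vc_generalization_gap(n: int, d: int, delta: float = 0.05) -> float:
    """Upper bound on (true risk - empirical risk) for 0-1 loss.

    Uses the growth-function bound
        sqrt(2 * ln(growth(n)) / n) + sqrt(ln(1 / delta) / (2 * n)),
    which holds with probability at least 1 - delta.
    n is the sample size, d the VC dimension (hypothetical inputs).
    """
    if n >= d:
        # Sauer's lemma: growth(n) <= (e * n / d) ** d when n >= d.
        log_growth = d * math.log(math.e * n / d)
    else:
        # With fewer samples than the VC dimension, the only generic
        # bound is the trivial one: growth(n) <= 2 ** n.
        log_growth = n * math.log(2)
    return math.sqrt(2 * log_growth / n) + math.sqrt(math.log(1 / delta) / (2 * n))


# A small model class: the bound is informative.
small = vc_generalization_gap(n=10**6, d=100)

# Assumption for illustration: VC dimension on the order of the parameter
# count of a large network, far above the number of samples.
large = vc_generalization_gap(n=10**6, d=10**8)

print(f"small class gap bound: {small:.3f}")
print(f"large class gap bound: {large:.3f}")
```

Because 0-1 risk never exceeds 1, any bound above 1 on the gap carries no information. That is the sense in which a bound of this kind is vacuous for heavily overparameterized models.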

That said, there are new approaches that I'm more optimistic about. In particular, I think that singular learning theory [LW · GW] (SLT) is the most likely path to lead to a "theory of deep learning" because it (1) has stronger theoretical foundations, (2) engages with the structure of individual models, and (3) gives us a principled way to bridge between this microscopic structure and the macroscopic properties of the model class[1]. I expect the field of mechanistic interpretability and the eventual formalism of phase transitions and "sharp left turns" to be grounded in the language of SLT.

Why theory?

A mathematical theory of learning and intelligence could be a valuable tool in the alignment arsenal, one that helps us:

- Develop and scale interpretability tools [LW · GW].

- Inspire better experiments (i.e., focus our bits of attention more effectively [LW · GW]).

- Establish a common language between experimentalists and theorists.

That's not to say that the right theory of learning is risk-free:

- A good theory could inspire new capabilities. We didn't need a theory of mechanics to build the first vehicles, but we couldn't have gotten to the moon without it.

- The wrong theory could mislead us. Just as theory tells us where to look, it also tells us where not to look. The wrong theory could cause us to neglect important parts of the problem.

- It could be one prolonged nerd-snipe that draws attention and resources away from other critical areas in the field. Brilliant string theorists aren't exactly helping advance living standards and technology by computing the partition functions of black holes in 5D de Sitter spaces.[2]

All that said, I think the benefits currently outweigh the risks, especially if we put the right infosec policy in place if and when learning theory starts showing signs of any practical utility. It's fortunate, then, that we haven't seen those signs yet.

Outline

My aims are:

- To discourage other alignment researchers from wasting their time.

- To argue for what makes singular learning theory different and why I think it is the likeliest contender for an eventual grand unified theory of learning.

- To invoke Cunningham's law — i.e., to get other people to tell me where I'm wrong and what I've been missing in learning theory.

There's also the question of integrity: if I am to criticize an entire field of people smarter than I am, I had better present a strong argument and ample evidence.

Throughout the rest of this sequence, I'll be drawing on notes I compiled from lecture notes by Telgarsky, Moitra, Grosse, Mossel, Ge, and Arora, books by Roberts et al. and Hastie et al., a festschrift of Chervonenkis, and a litany of articles.[3]

The sequence follows the three-fold division of approximation, generalization, and optimization preferred by the learning theorists. There's an additional preface on why empirical risk minimization is flawed (up next) and an epilogue on why singular learning theory seems different.

- The shallow reality of 'deep learning theory' [LW · GW] (You are here)

Coming soon:

- Empirical risk minimization is fundamentally confused

- On approximation— the cosmic waste of universal approximation

- On generalization— against PAC-learning

- On optimization— the NTK is bad, actually

- What makes singular learning theory different?

[1] This sequence was inspired by my worry that I had focused too singularly on singular learning theory. I went on a journey through the broader sea of "learning theory" hopeful that I would find other signs of useful theory. My search came up mostly empty, which is why I decided to write >10,000 words on the subject.

[2] Though, granted, string theory keeps on popping up in other branches like condensed matter theory, where it can go on to motivate practical results in material science (and singular learning theory, for that matter).

[3] I haven't gone through all of these sources in equal detail, but the content I cover is representative of what you'll learn in a typical course on deep learning theory.

11 comments


comment by Ege Erdil (ege-erdil) · 2023-02-22T12:40:44.502Z · LW(p) · GW(p)

I'm looking forward to the concrete out-of-sample predictions that will be made by any deep learning theory. So far, they've been mostly ex-post rationalizations of properties that have already been observed in practice, which are quite easy to produce at an informal level.

comment by interstice · 2023-02-22T19:38:52.681Z · LW(p) · GW(p)

While I agree that existing learning theory leaves much to be desired, I don't think your previous posts have presented strong enough evidence for the superiority of singular learning theory that we should "scrap the whole field and start over". The gold standard for any theory of deep learning is "actually being able to predict non-trivial properties of real networks" and AFAIK SLT does not yet meet this standard[1]. Which is not to say that it shouldn't be pursued -- let a thousand flowers bloom. But I think your post has a vibe of "the SLT is the only worthwhile avenue to explore" which I don't think is well-supported by the evidence. For example, here are some papers that are part of research programs which seem to me at least as promising as SLT as potential avenues to explore.

[1] Less so than the NTK, which you deride, for instance!

↑ comment by Jesse Hoogland (jhoogland) · 2023-02-22T21:48:03.632Z · LW(p) · GW(p)

I agree with you that I haven't presented enough evidence! Which is why this is the first part in a six-part sequence.

comment by Daniel Paleka · 2023-02-22T13:08:36.537Z · LW(p) · GW(p)

I don't think LW is a good venue for judging the merits of this work. The crowd here will not be able to critically evaluate the technical statements.

When you write the sequence, write a paper, put it on arXiv and Twitter, and send it to a (preferably OpenReview, say TMLR) venue, so it's likely to catch the attention of the relevant research subcommunities. My understanding is that the ML theory field is an honest field interested in bringing their work closer to the reality of current ML models. There are many strong mathematicians in the field who will be interested in dissecting your statements.

Replies from: jhoogland, Lukas_Gloor

↑ comment by Jesse Hoogland (jhoogland) · 2023-02-22T16:51:49.994Z · LW(p) · GW(p)

Yes, I'm planning to adapt a more technical and diplomatic version of this sequence after the first pass.

To give the ML theorists credit, there is genuinely interesting new non-"classical" work going on (but then "classical" is doing a lot of the heavy lifting). Still, some of these old-style tendrils of classical learning theory are lingering around, and it's time to rip off the bandaid.

↑ comment by Lukas_Gloor · 2023-02-22T14:02:36.085Z · LW(p) · GW(p)

What if the arguments are more philosophy than math? In that case, I'd say there's still some incentive to talk to the experts who are most familiar with the math, but a bit less so?

Replies from: jhoogland

↑ comment by Jesse Hoogland (jhoogland) · 2023-02-22T16:53:10.279Z · LW(p) · GW(p)

There's more than enough math! (And only a bit of philosophy.)

comment by Lucius Bushnaq (Lblack) · 2023-02-22T07:15:23.847Z · LW(p) · GW(p)

While funny, I think that tweet is perhaps a bit too plausible, and may be mistaken as having been aimed at statistical learning theorists for real, if a reader isn't familiar with its original context. Maybe flag that somehow?

Replies from: DragonGod, jhoogland

↑ comment by Jesse Hoogland (jhoogland) · 2023-02-22T16:44:16.529Z · LW(p) · GW(p)

Thanks for pointing this out!