Crossing the experiments: a baby

post by Stuart_Armstrong · 2013-08-05T00:31:56.319Z · 89 comments


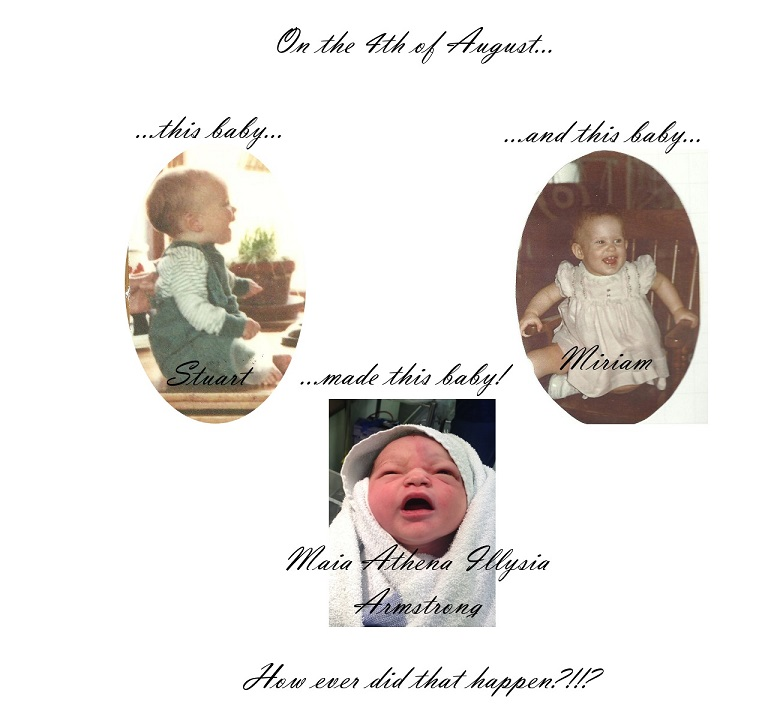

I've always been more of a theoretician, but it's important to try one's hand at practical problems from time to time. In that vein, I've decided to try three simultaneous experiments on major Less Wrong themes. I will aim to acquire something to protect, I will practice training a seed intelligence, and I will become more familiar with many consequences of evolutionary psychology.

In the spirit of efficiency I'll combine all these experiments into one:

She's never seen Star Wars or Doctor Who.

She's never seen David Attenborough or read J. L. Borges.

She's never had a philosophical debate.

She's never been skiing.

Never had sex, never been hugged or even been licked by a dog!

She has so much to look forwards to...

(Though she'll be very boring for several months yet!)

89 comments


comment by Manfred · 2013-08-05T00:56:15.073Z

Congratulations, but I still don't want to hear about your baby.

EDIT: It occurs to me that the correct way to perpetrate baby-photos is to create an "Early Childhood advice repository," or a "Social Open Thread" :P Or, and I like this one best, a satirical "welcome to LW" comment.

↑ comment by Stuart_Armstrong · 2013-08-07T09:34:26.558Z

a satirical "welcome to LW" comment.

Damn, why didn't I think of that?

Anyway, rest assured this won't have a repeat performance!

↑ comment by David_Gerard · 2013-08-07T11:04:34.694Z

Anyway, rest assured this won't have a repeat performance!

You say that now ...

comment by RolfAndreassen · 2013-08-05T01:31:18.345Z

I admire the attempt, but yeah, she's off-topic. Also, some of us have already acquired weapons of mass cuteness and are ready to deploy them in retaliation for first use. I suggest you back down. :)

comment by Emile · 2013-08-05T10:00:50.093Z

Congratulations!

There is a (not very active) Lesswrong-Parents mailing-list.

↑ comment by Stuart_Armstrong · 2013-08-07T09:30:11.617Z

Thanks!

↑ comment by Kawoomba · 2013-08-06T16:00:54.235Z

I just checked it out, but would urge anyone to stay away from it. It managed to rustle my jimmies quite thoroughly in just a few minutes; I've read saner practices when skimming non-rationalist women's magazines.

↑ comment by Emile · 2013-08-06T16:13:34.459Z

Any specific things rustled your jimmies?

Wouldn't it be better to urge people to join it, and encourage saner practices?

(When it comes to child-rearing, I don't think "non-rationalist women's magazines" are a particularly bad source; I could probably learn plenty of useful things reading them)

↑ comment by Kawoomba · 2013-08-06T16:33:54.439Z

Any specific things rustled your jimmies?

I'll answer that per PM later on if you don't mind, the information would be too clearly personally identifiable.

Wouldn't it be better to urge people to join it, and encourage saner practices?

It's a nearly dormant list, very little activity. There is a plethora of parenting related materials online, not every list should be saved, especially if it's not already in a good place to start with. As for encouraging saner practices, unfortunately it's an issue worse than politics, and I often lack the zen-state to be fighting windmills and coating "you are doing it wrong" in layers of niceties. Do you try and save every bad forum you come across, or just do your minimum duty and put up a "stay away" beacon at best?

↑ comment by Emile · 2013-08-07T20:27:00.292Z

Do you try and save every bad forum you come across, or just do your minimum duty and put up a "stay away" beacon at best?

Nope! But since I'm part of that list, I encourage people to improve it :)

I think there should be a place for LessWrongers interested in parenting to exchange ideas, and it's better if there's only one such place. That mailing list seems like the obvious focal point, so I'd like to see anybody interested join it and improve its quality!

↑ comment by Lumifer · 2013-08-06T17:53:25.795Z

I've taken a quick look at it but didn't see anything horrible -- if anything it's a fairly "standard" discussion coming out of weird/nonconventional people...

Blindly following the advice from the internet is, of course, not a good idea regardless of what you're reading.

comment by James_Miller · 2013-08-05T05:25:17.350Z

I teach at a women's college and whenever I mention my young son most of my students smile. The semester he was born I put a picture of him on the last page of my final exam and many students positively mentioned the picture in the following semesters. A way to increase the number of women who read LW would be to have more posts like this.

The post is currently at -5. I bet if he had basically the same content but it was about some robot he just created the post would have a positive rank.

↑ comment by Qiaochu_Yuan · 2013-08-05T05:41:37.981Z

A way to increase the number of women who read LW would be to have more posts like this.

It's not as if we terminally value having more women around. Presumably this is an instrumental value in the service of some other value, and if so we should keep in mind whether attempts to satisfy this value interfere with that or other values, like maintaining a high level of discourse.

↑ comment by Crux · 2013-08-05T06:20:17.227Z

I agree. This is an important point. Even though I see no reason why it wouldn't be a good thing to have more women posting on LW, I don't think introducing more off-topic material is a good way to achieve that. If having more women around would be a good thing, as I assume it would be, this would suggest that there are most likely plenty of on-topic ways to do so.

↑ comment by maia · 2013-08-05T11:49:13.757Z

I find this vaguely unsettling, because it seems to imply (though I doubt you intended this) that women have to be attracted via discussion of babies and other stereotypically "feminine" things. I'd rather see a change where more women realize that they can be interested in things other than babies, than a change where LW condescends to women by posting about babies.

↑ comment by mwengler · 2013-08-06T14:41:21.966Z

As nerd-boys have been noticing for at least decades, women don't have to be attracted at all! But jumping up into rationality land, there are plenty of reasons to want them to be attracted, not the least of them being that useful information on how to improve reasoning is valuable roughly in proportion to the number of people who receive it, and there are nearly as many women as men out there in the potential audience.

↑ comment by maia · 2013-08-07T03:00:25.905Z

women don't have to be attracted at all!

I certainly wasn't saying that LW shouldn't make an effort to attract women. I'm female myself, and I'd be happy to have more people who look like me around, and I agree with you about the reasons why as well.

The key part of my sentence there is "via discussion of babies," because as a female, I wouldn't like that particular method of attracting women at all. I think there might be other ways that would be more effective and that also wouldn't dilute the substance of the common interests that bring us here.

↑ comment by Desrtopa · 2013-08-05T18:41:23.049Z

The post is currently at -5. I bet if he had basically the same content but it was about some robot he just created the post would have a positive rank.

Creating a robot and creating a baby may be very broadly similar in output, but could hardly be less similar in input. Creating a robot requires extensive domain knowledge, whereas you can make a baby without even knowing how babies are made, although ideally you really shouldn't.

↑ comment by mwengler · 2013-08-06T14:37:04.422Z

The success of our species is gigantically driven by specialization. I do not need to know how a toilet and a sewage system work in order to use them. I do not have to know the details of producing food, storing it, and transporting it without it going bad in order to eat it, or even cook it. I do not need to know the circuit details of a cell-phone chip in order to create applications for smartphones.

It would be an amazingly high opportunity cost, with little if any benefit, if all people who had children were to know in great detail how babies are made. What makes you state it as a moral truth that they should?

↑ comment by wedrifid · 2013-08-05T09:20:24.235Z

The post is currently at -5. I bet if he had basically the same content but it was about some robot he just created the post would have a positive rank.

Creating a robot is easy. Everybody is born with the ability to create robots once they grow up so long as they can keep themselves alive and find a partner. In fact, not making robots is a harder task for many people and something that people fail at frequently, particularly if they lack education. If we started having people make posts every time someone made a robot we'd be overwhelmed with robot creation posts. Wait, no. Robots aren't the same as babies after all.

(I am approximately neutral regarding this kind of post. I reject this particular argument but not the preceding observation that such sharing can be beneficial to a community.)

↑ comment by David_Gerard · 2013-08-05T11:32:47.127Z

A way to increase the number of women who read LW would be to have more posts like this.

And we've already given new posts a pink border. Nearly there!

↑ comment by Crux · 2013-08-05T06:36:42.684Z

I bet if he had basically the same content but it was about some robot he just created the post would have a positive rank.

I don't understand the analogy. The phrases "create a baby" and "create a robot" use the same syntax, but they're certainly not analogous in this context. Having a kid is an achievement for sure, but it's not what this forum is about. Creating a robot, on the other hand, is related to some of the most salient topics on this forum (AI, etc.).

Edit: I missed the "same content" part of your point. Now that I see it, I disagree with the assumption that it would be upvoted. I guarantee a post wouldn't be upvoted if it were about a new robot someone made but had no content about how it was programmed or built, nothing but pictures that don't really reveal anything and some sentences that don't really explain anything.

↑ comment by mwengler · 2013-08-06T14:50:26.437Z

Having a kid is an achievement for sure, but it's not what this forum is about.

The connection between artificial intelligence and natural intelligence seems to escape many who post here. As an engineer, you would be a fool to ignore the natural experiments all around you in natural intelligence as you contemplate your artificial efforts.

Further, the site is set on adjusting human brains to be more rational. Of course this has to do with how we raise children. There is nothing trivial or boring or irrelevant to studies of friendly artificial intelligence in observations of how a baby turns into a person under the influence of a tremendous amount of complex interaction with other persons.

↑ comment by Crux · 2013-08-07T08:55:36.538Z

Although it does seem to be the case that it would be helpful for the purpose of creating an artificial intelligence to consider how natural intelligence works, I don't think this is something we're in a shortage of here on LW. You say the connection between artificial and natural intelligence seems to escape many who post here, yet I see people talking about how human reasoning works much more often than I see people talk about how AI works, and when people discuss one, it's not uncommon for them to draw analogies from the other.

This site is of course about how to adjust human brains to be more rational, and of course this has to do with how we raise children. And yeah, of course it's relevant to consider how a baby turns into a person, and how the complex interpersonal interaction around them affects how this occurs. But here's the problem: The OP says nothing about this. I don't see even one word or phrase concerning how to raise children, or the mechanisms involved in raising children, or anything like that.

comment by Kawoomba · 2013-08-05T19:11:45.715Z

How ever did that happen?!!?

Really? I mean, um, really? Well, ok, hold on: There are the bees, and the flowers, and ... well ...

EDIT: I'VE SAID TOO MUCH ALREADY. I WILL UPSET THE LW BALANCE.

↑ comment by Stuart_Armstrong · 2013-08-07T09:25:32.588Z

Tried it once with birds and bees - it was painful...

comment by CoffeeStain · 2013-08-05T07:45:14.114Z

Congrats! What is her opinion on the Self Indication Assumption?

↑ comment by Stuart_Armstrong · 2013-08-07T09:06:19.500Z

She hasn't indicated it yet herself...

comment by Gunnar_Zarncke · 2013-08-07T06:51:56.332Z

Though she'll be very boring for several months yet.

Not in the least. In the first month, if you look closely, you can literally see one brain module come online after another. And I don't mean only those described in Hetty van de Rijt's "The Wonder Weeks", which I was looking out for (and which did not exactly fit my children). In the first month you will continuously notice new abilities like focusing, turning, gripping, smooth motions, detecting sound direction, gazing, interest in sound, lighting, form, ...

↑ comment by Stuart_Armstrong · 2013-08-07T09:24:40.059Z

I'll look out for those!

↑ comment by David_Gerard · 2013-08-07T11:01:33.970Z

It'll give you insight into just how spectacularly many different fragments go into the thing we attempt to generalise as the term "intelligence", as much as we foolishly pretend that this is usable as a handle on a single concept.

comment by BlueSun · 2013-08-05T17:29:46.081Z

Is there a thread somewhere about effective ways to plant the 'rationalist seed' in your children? I'd like to see something other than anecdotes ideally. But just ideas about books to read, shows to watch, or places to visit for different ages of children would be useful to me. For example,

My 2 and 4 year old both love Introductory Calculus For Infants

And a couple of years ago I got the Star Wars ABC, which led to a HUGE love of Star Wars. I'm hoping that turns into a love of Science Fiction...

↑ comment by Kaj_Sotala · 2013-08-05T17:44:53.214Z

On the math side, DragonBox is great for teaching the symbol manipulation aspect of algebra for kids, though it doesn't do much to teach where the rules actually come from. But at least personally I find that it's generally the symbol manipulation that's the harder part, anyway.

↑ comment by David_Gerard · 2013-08-05T18:26:13.664Z

Far as I can tell, you just have to keep hammering at it. Children pretty much exhibit all the major cognitive biases, untrammelled. I do occasionally ask "How do you know that?" and pursue it a bit, but it doesn't take long to exhaust an extroverted 6yo's philosophical introspection.

↑ comment by Stuart_Armstrong · 2013-08-07T09:26:14.168Z

Thanks for the tips.

comment by David_Gerard · 2013-08-05T06:53:03.889Z

(Though she'll be very boring for several months yet!)

That is not going to be the case. There will be times you so wish it was.

I can tell you, though, you're quite correct that there's little so fascinating as watching and helping a small intelligence grow, and each ability come online.

(Champagne!)

↑ comment by Luke_A_Somers · 2013-08-05T14:36:19.043Z

Debugging the input and output terminals can take years. One common problem is for the signals to be redirected to /dev/null when not actively polling for input. Even after that is resolved, there may be a problem in which signals are assigned inappropriate weights.

↑ comment by mwengler · 2013-08-06T14:30:05.112Z

I was out in front of my house with my 3 year old. She looked up and said "train."

She was referring to the train horn she was hearing from about 4 miles away in Encinitas. She said this before my own brain had tossed my hearing of it into /dev/null, so I got to hear what that train horn sounds like from 4 miles away in a coastal town in the early evening.

That baby has been creating beauty in my life for more than 16 years and she is only 15.5 years old. Most recently I have been teaching her to drive, pushing all sorts of behaviors down into her cerebellum so that her conscious mind can worry about pedestrians and cars stopping suddenly in front of her. Happily for me, it is hard to imagine more fun or exciting tasks than teaching her to drive.

↑ comment by Stuart_Armstrong · 2013-08-07T09:31:13.263Z

Thanks!

comment by Kaj_Sotala · 2013-08-05T07:40:50.508Z

Congratulations!

↑ comment by Wei Dai (Wei_Dai) · 2013-08-05T20:11:25.490Z

I thought negative utilitarianism generally doesn't endorse the creation of new life (assuming we can't guarantee some standard of well-being for it). Are you foreseeing that Stuart's baby will eventually make a positive impact by reducing suffering of others?

↑ comment by Dahlen · 2013-08-06T03:12:24.743Z

Are you foreseeing that Stuart's baby will eventually make a positive impact by reducing suffering of others?

"The one with the power to vanquish the Dark Lord approaches ... born as the seventh month dies ..."

↑ comment by Stuart_Armstrong · 2013-08-07T09:05:43.291Z

And she has a little scar on her forehead, from the forceps!

↑ comment by Kaj_Sotala · 2013-08-05T20:48:00.754Z

All else being equal, creating new lives would be a bad thing, but I don't believe that trying to discourage people from having children on those grounds would be particularly useful (its net effect would probably just be bad PR for negative utilitarianism), nor that it would be good to have a general policy of smart and ethical people having less children than people not belonging to that class. Also, I count Stuart as my friend, and I didn't want him to experience suffering due to his happy event being tainted by a uniformly negative response to this thread.

↑ comment by Lukas_Gloor · 2013-08-06T17:22:28.420Z

nor that it would be good to have a general policy of smart and ethical people having less children than people not belonging to that class.

It depends on the counterfactual. If it consists of donating all the resources a kid would cost to the best cause, then that likely trumps everything. Especially also if you take the haste consideration into account; it takes forever to raise a child and good altruists are likely cheaper to create by other means. And the part about altruists losing ground in Darwinian terms can be counteracted by sperm donations (or egg donations if there is demand for that).

Having said that, it is important to emphasize that personal factors and preferences need to be taken into account in the expected value calculation, since it would be bad/irrational if someone ended up unhappy and burned out after trying too hard to be a perfect utility-maximizer.

↑ comment by anon (ExaminedThought) · 2013-08-06T03:18:28.341Z

removed

↑ comment by RomeoStevens · 2013-08-06T13:02:50.873Z

Prioritarianism seems more coherent.

↑ comment by Lukas_Gloor · 2013-08-06T17:09:51.896Z

Coherent in what sense? Prioritarianism is likely more intuitive, but a problem is that there are infinitely many ways to draw a concave welfare function and no good reasons to choose one over the other. I don't think negative utilitarianism is necessarily incoherent by itself, it depends on the way it is formalized.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-06T17:17:16.208Z

I agree that prioritarianism has the problems you mention. I note that negative-leaning utilitarianism (though not strict negative utilitarianism) has analogous problems: just as there are infinitely many ways to draw a concave welfare function, so there are infinitely many exchange rates between positive and negative experience.

↑ comment by Lukas_Gloor · 2013-08-06T17:40:44.162Z

Right, and I suspect the same holds for classical utilitarianism too, because there seems to be no obvious way to normalize happiness-units with suffering-units. But I know you think differently.

↑ comment by A1987dM (army1987) · 2013-08-06T22:50:27.249Z

because there seems to be no obvious way to normalize happiness-units with suffering-units

They're the same size when you're indifferent between the status quo and equal chances of getting one more happiness unit or one more suffering unit. Duh.

Am I missing something?

↑ comment by Lukas_Gloor · 2013-08-06T23:30:48.217Z

Classical utilitarians usually argue for their view from an impartial, altruistic perspective. If they had atypical intuitions about particular cases, they would discard them if it can be shown that the intuitions don't correspond to what is effectively best for the interests of all sentient beings. So in order to qualify as genuinely other-regarding/altruistic, the procedure one uses for coming up with a suffering/happiness exchange rate would have to produce the same output for all persons that apply it correctly, otherwise it would not be a procedure for an objective exchange rate.

The procedure you propose leads to different people giving answers that differ by orders of magnitude. If I would accept ten hours of torture for a week of vacation on the beach, and someone else would only accept ten seconds of torture for the same thing, then either of us will have a hard time justifying forcing such trades onto other sentient beings for the greater good. It goes both ways, of course: if classical utilitarianism is correct, too low an exchange rate would be just as bad as one that is too high (by the same margin).

Since human intuitions differ so much on the subject, one would have to either (a) establish that most people are biased and that there is in fact an exchange rate that everyone would agree on, if they were rational and knew enough, or (b) find some other way to find an objective exchange rate plus a good enough justification for why it should be relevant. I'm very skeptical concerning the feasibility of this.

↑ comment by Stuart_Armstrong · 2013-08-07T09:03:41.460Z

Preference utilitarianism is not the same thing as hedonistic utilitarianism (they reach different conclusions), so you can't use one to define the other.

↑ comment by A1987dM (army1987) · 2013-08-07T13:14:14.870Z

Googles for classical utilitarianism

Oops.

Note to self: Never comment anything unless I'm sure about the meaning of each word in it.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-06T18:52:47.063Z

If you don't mind, I'd be interested in knowing why you think this is so. If you conceive of happiness and suffering as states that instantiate some phenomenal property (pleasantness and unpleasantness, respectively), then an obvious normalization of the units is in terms of felt intensity: a given instance of suffering corresponds to some instance of happiness just when one realizes the property of unpleasantness to the same degree as the other realizes the property of pleasantness. And if you, instead, conceive of happiness and suffering as states that are the objects of some intentional property (say, desiring and desiring-not), then the normalization could be done in terms of the intensity of the desires: a given instance of suffering corresponds to some instance of happiness just when the state that one desires not to be in is desired with the same intensity as the state one desires to be in.

↑ comment by Lukas_Gloor · 2013-08-06T19:54:49.405Z

How do you measure the intensity of desires, if not by introspectively comparing them (see below)? If you do measure something objectively, on what grounds do you justify its ethical relevance? I mean, we could measure all kinds of things, such as the amount of neurotransmitters released or the activity of the brain regions involved, but just because there are parameters that turn out to be comparable for both pleasure and pain doesn't mean that they automatically constitute whatever we care about.

If you instead take an approach analogous to revealed preferences (what introspective comparison of hedonic valence seems to come down to), you have to look at decision-situations where people make conscious welfare-tradeoffs. And merely being able to visualize pleasure doesn't necessarily provoke the same reaction in all beings -- it depends on contingencies of brain-wiring. We can imagine beings that are only very slightly moved by the prospect of intense, long pleasures and that wouldn't undergo small amounts of suffering to get there.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-09T17:19:39.574Z

How do you measure the intensity of desires, if not by introspectively comparing them (see below)?

You need intensity of desire to compare pains and pleasures, but also to compare pains of different intensities. So if introspection raises a problem for one type of comparison, it should raise a problem for the other type, too. Yet you think we can make comparisons within pains. So whatever reasons you have for thinking that introspection is reliable in making such comparisons, these should also be reasons for thinking that introspection is reliable for making comparisons between pains and pleasures.

↑ comment by Lukas_Gloor · 2013-08-10T01:20:53.098Z

Let's assume we make use of memory to rank pains in terms of how much we don't want to undergo them. Likewise, we may rank pleasures in terms of how much we want to have them now (or according to other measurable features). The result is two scales with comparability within the same scale. Now how do you normalize the two scales, is there not an extra source for arbitrariness? People may rank the pains the same way among themselves and the same for all the pleasures too, but when it comes to trading some pain for some pleasure, some people might be very eager to do it, whereas others might not be. Convergence of comparability of pains doesn't necessarily imply convergence of comparability of exchange rates. You'd be comparing two separate dimensions.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-10T06:39:57.222Z

Now how do you normalize the two scales,

As I said above, this could be done either in terms of felt intensity or intensity of desire.

This exchange seems to have proceeded as follows:

Lukas: You can't normalize the pleasure and pain scales.

Pablo: Yes, you can, by considering either the intensity of the experience or the intensity of the desire.

Lukas: Ah, but you need to rely on introspection to do that.

Pablo: Yes, but you also need to rely on introspection to make comparisons within pains.

Lukas: But you can't normalize the pleasure and pain scales.

As my reconstruction of the exchange indicates, I don't think you are raising a valid objection here, since I believe I have already addressed that problem. Once you leave out worries about introspection, what are your reasons for thinking that classical utilitarians cannot make non-arbitrary comparisons between pleasures and pains, while thinking that negative utilitarians can make non-arbitrary comparisons within pains?

↑ comment by Lukas_Gloor · 2013-08-10T13:32:05.881Z

If you write down all we can know about pleasures (in the moment), and all we can know about pains, you may find parameters to compare (like "intensity", or amount of neurotransmitters, or something else), but there would be no reason why people need to choose an exchange rate corresponding to some measured properties. I believe your point is that we "have reason" to pick intensity here, but I don't see why it is rationally required of beings to care about it, and I believe empirically, many people do not care about it, and certainly you could construct artificial minds that don't care about it.

Pleasure is not what makes decisions for us. It is the desire/craving for pleasure, and there is no reason why a craving for a specific amount of pleasure needs to always come with the same force in different minds, even if the circumstances are otherwise equal. There is also no reason why this has to be true of suffering, of course, and the corresponding desire to not have to suffer. People who value many other things strongly and who have a strong desire to stay alive, for instance, would not kill themselves even if their life mostly consists of suffering. And yet they would still be making perfectly consistent trades within different intensities and durations of suffering.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-10T13:59:09.345Z

My general point is that whatever property you rely upon to make comparisons within pains you can also rely upon to make comparisons between pains and pleasures.

It seems to me that you are using intensity of desire to make comparisons within pains. If so, you can also use intensity of desire to make comparisons between pleasures and pains. That "there would be no reason why people need to choose an exchange rate corresponding to some measured properties" seems inadequate as a reply, since you could analogously argue that there is no reason why people should rely on those measured properties to make comparisons within pains.

However, if intensity of desire is not the property you are using to make comparisons within pains, just ignore the previous paragraph. My general point still stands: the property you are using, whichever it is, is also a property that you could use to make comparisons between pains and pleasures.

↑ comment by Stuart_Armstrong · 2013-08-07T09:02:20.532Z

Preference utilitarianism is not the same thing as hedonistic utilitarianism (they reach different conclusions), so you can't use one to justify the other.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-05T20:44:27.817Z

Negative utilitarians are notoriously bad at making sense of how humans (and other animals) behave in real life (e.g., most people are willing to endure the pain of walking over hot sand for the pleasure of swimming in the sea). And I suspect this extends to the behavior of negative utilitarians themselves.

↑ comment by Lukas_Gloor · 2013-08-06T09:18:28.150Z

And classical utilitarians are notoriously prone to making quick judgments when tradeoffs are concerned:)

Perhaps people walk over hot sand because they really want to go swimming in the sea and would suffer otherwise. If there was absolutely nothing that subjectively bothers you about the current state you're in, you would not act at all, so it's highly non-obvious that people are generally trading pleasure and suffering in their daily lives. Whenever we consciously make a trade involving pleasure, the counterfactual alternative always seems to include some suffering as well in the form of unfulfilled longings/cravings.

Having said that, you can always ask why suicide rates are so low if negative utilitarian axiology is right, and that would be a good point. But here negative utilitarians would reply that a massive life bias is to be expected for evolutionary reasons.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-06T13:02:55.790Z

If there was absolutely nothing that subjectively bothers you about the current state you're in, you would not act at all

Why not? The obvious reply is that, even if there is nothing that bothers you about your current state, you might still be motivated to act in order to move to an even better state. In any case, your attempt to make sense of the example from a negative utilitarian framework simply doesn't do justice to what people take themselves to be doing in these situations. Just ask people around you who are not antecedently committed to a particular moral theory, and you'll see.

↑ comment by Lukas_Gloor · 2013-08-06T16:39:53.113Z

Introspection is not particularly trustworthy. If you consciously (as opposed to acting on auto-pilot) decide to move to "an even better state", you have in fact evaluated your current conscious state and concluded that it is not the one you want to be in, i.e. that something (at least the fact that you'd rather want to be in some other state) bothers you about it. And that -- wanting to get out of your current conscious state (or not), or changing some aspect about it -- is what constitutes suffering, or whatever (some) negative utilitarians consider to be morally relevant.

If you accept this (Buddhist) axiology, it becomes a conceptual truth that conscious welfare tradeoffs always include counterfactual suffering. And there are good reasons to accept this view. For instance, it implies that there is nothing intrinsically bad about pain asymbolia, which makes sense because the alternative implies having to go to great lengths to help people who assert that they are not bothered by the "pain" at all. Additionally, this view on suffering isn't vulnerable to an inverted-qualia thought experiment in which pleasure and pain are cross-wired while all the behavioral dispositions are left intact. Those who think there is an "intrinsic valence" to hedonic qualia, regardless of behavioral dispositions and other subjective attitudes, are faced with the inconvenient conclusion that you couldn't reliably tell whether you're undergoing agony or ecstasy.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-06T17:04:53.187Z

Introspection is not particularly trustworthy.

My point was that your favorite theory cannot make sense of what people take themselves to be doing in situations such as those discussed above. You may argue that we shouldn't trust these people because introspection is not trustworthy, but then you'd be effectively biting the bullet.

If you consciously (as opposed to acting on auto-pilot) decide to move to "an even better state", you have in fact evaluated your current conscious state and concluded that it is not the one you want to be in, i.e. that something (at least the fact that you'd rather want to be in some other state) bothers you about it.

You may, of course, use the verb 'to be bothered' to mean 'judging a state to be inferior to some alternative.' However, I thought you were using the verb to mean, instead, the experiencing of some negative hedonic state. I agree that there is something that "bothers you", in the former sense, about the above situation, but I disagree that this must be so if the term is used in the latter sense, which is the sense relevant for discussions of negative utilitarianism.

↑ comment by Lukas_Gloor · 2013-08-06T17:38:49.960Z

However, I thought you were using the verb to mean, instead, the experiencing of some negative hedonic state.

I think that wanting to change your current state is identical with what we generally mean by being in a negative hedonic state. For reasons outlined above, I suspect that qualia aren't independent of all the other stuff that is going on (attitudes, dispositions, memories, etc.).

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-06T18:25:19.187Z

Studies by Kent Berridge have established that 'wanting' can be dissociated from 'liking'. This research finding (among others; Guy Kahane discusses some of these) undermines the claim that affective qualia are inextricably linked to intentional attitudes, as you seem to suggest.

↑ comment by Lukas_Gloor · 2013-08-06T19:53:36.561Z

I'm aware of these findings; I think there are different forms of "wanting", and we might have semantic misunderstandings here. There is pleasure that would cause immediate cravings if it were to stop, and there is pleasure that would not. So pleasure would usually cause you to want it again, but not always. I would not say that only the former is "real" pleasure. Instead, I'm arguing that a frustrated craving due to the absence of some desired pleasure constitutes suffering. I'm only committed to the claim that "disliking" implies (or means) "wanting to get out" of the current state. And I think this makes perfect sense given the inverted-qualia and anti-epiphenomenalism arguments, and given my intuitive response to the case of pain asymbolia.

↑ comment by Kaj_Sotala · 2013-08-07T09:59:10.314Z

My point was that your favorite theory cannot make sense of what people take themselves to be doing in situations such as those discussed above

Negative utilitarianism is a normative theory, not a descriptive one.

↑ comment by Pablo (Pablo_Stafforini) · 2013-08-07T10:58:07.482Z

This is true. But some descriptive facts may provide evidence against a normative theory. The implicit argument was:

1. People often believe that they are justified in undergoing some pain in order to experience greater pleasure.

2. Negative utilitarianism implies that these people are fundamentally mistaken.

3. If (2), then this provides some reason to reject negative utilitarianism.

Of course, the argument is by no means decisive. In fact, I think there are much stronger objections to NU.

↑ comment by Kaj_Sotala · 2013-08-07T14:55:01.739Z

Negative utilitarianism implies that these people are fundamentally mistaken.

I'm not sure what this even means. Negative utilitarianism implies one set of preferences, which not everyone shares. People who have different preferences aren't mistaken in any sense; they just want different things.

↑ comment by Stuart_Armstrong · 2013-08-07T09:30:55.828Z

Thanks!

comment by Larks · 2013-08-08T02:01:30.466Z

Congratulations! May she prosper for a trillion suns.

↑ comment by Stuart_Armstrong · 2013-08-08T06:24:52.825Z

Thanks!

comment by [deleted] · 2013-08-05T04:49:31.464Z

Wow, tough crowd. If you don't want to hear about it then go somewhere else.

Congrats Stuart. Having a kid is an epic, life-changing event. She will quickly consume your life, and it will be worth every minute. Savor this time when they're young!

↑ comment by Crux · 2013-08-05T06:23:15.611Z

If you don't want to hear about it then go somewhere else.

Isn't every negative reception in some way pre-emptive? Clearly a single post that's off topic is easy to just ignore, but if no negative incentive is given, the number of off-topic posts will increase, and if this continues, eventually "go[ing] somewhere else" would be like having to watch your feet at all times to make sure you don't step on something sharp. It would be too distracting, and would detract heavily from the value of the forum.

↑ comment by [deleted] · 2013-08-05T18:15:00.449Z

The slippery slope argument rarely generalizes. Stuart is one of the most prolific contributors to this community, with one of the highest karma scores. Do you think he doesn't know what's appropriate? If Eliezer posted his baby pictures, would the complaints be as loud?

This is a community of rationalists. I hope they'd be discerning enough to know when a reprimand is needed, and when it is not.

↑ comment by RolfAndreassen · 2013-08-05T20:54:43.929Z

If Eliezer posted his baby pictures, would the complaints be as loud?

As a matter of mere fact it probably wouldn't be, but it should be.

↑ comment by Rob Bensinger (RobbBB) · 2013-08-06T00:24:30.845Z

Eh. It's one of the very most important events in the life of one of our best contributors. There might be a better venue for this, but I don't think it's a big deal, because I don't foresee us tumbling down a slippery slope made of baby. It's useful to us to know about central life-changes in the top LW writers, and 'I had a baby' is one of the most informationally compact, least slippery-slope-prone bits of information to find out.

Plus it encourages us to build stronger social ties to other LessWrongers, and to consider what this is all for. The only extremely obvious reason not to make a post like this is that it might lead to unpleasant arguments about the relevance of the post.

If there's a clear problem with Stuart's post, it's that it has a deliberately misleading title and introduction, so it's harder for people who aren't interested in personal stuff to know to skip it.

↑ comment by Stuart_Armstrong · 2013-08-07T09:36:22.850Z

Thanks!

↑ comment by RolfAndreassen · 2013-08-05T20:53:52.112Z

As a feedback mechanism your proposed procedure suffers from being slow, unspecific, and drastic. It's impossible to improve the quality of posts by quietly going elsewhere, because the author of an off-topic post cannot see your reaction; all that will happen is that, two years later, the forum begins to seem kind of dead because all the veterans are gone; but who knows why? "The Internet, she moves in mysterious ways," you say, shrugging your shoulders. It is not as though you can pinpoint any particular post that caused any particular non-participant to non-participate. So you may as well update us all on what your kid is doing now. It's a pity we don't have some means of rapidly indicating that a post is off-topic, badly-thought-out, or otherwise of inferior quality; say, some kind of thumbs-up/thumbs-down button, and maybe even a method for giving more specific feedback so the author can figure out exactly what was unpopular about the post. Hey, maybe you could implement something like that and get rich when everyone wants your software to run high-quality forums!

The natural state of every online forum is a Facebook/Tumblr/Twitter feed. It takes constant work to keep away from that.