My Reservations about Discovering Latent Knowledge (Burns, Ye, et al)

post by Robert_AIZI · 2022-12-27T17:27:02.225Z · LW · GW

This is a link post for https://aizi.substack.com/p/my-reservations-about-discovering

Contents

- The headline score is too small
- Zero-Shot may be the wrong baseline
- What CCS elicits may not be the AI’s latent knowledge
- They’re not measuring if they capture latent knowledge
- Robustness claims are underwhelming because zero-shot is already robust in most cases
- Normalization may be inadequate to ensure truth-like features
- Conclusion

[This is a cross-post from my blog at aizi.substack.com.]

This is my second post on Burns, Ye, et al’s recent preprint Discovering Latent Knowledge in Language Models Without Supervision. My first post summarizes what they did and what I liked about it.

In the spirit of honesty and constructive criticism, I’m going to talk about some of the parts of Burns, Ye, et al that I didn’t like. These are things I found underwhelming, disappointing, or simply hope to see developed more. My goals here are (1) proper calibration of hype and (2) suggesting where this work could go in the future.

The headline score is too small

The authors of this paper failed to completely solve alignment with one weird trick; my disappointment is immeasurable and my day is ruined. More constructively, one needs to take the headline result of a 4% accuracy improvement in context: the improved accuracy is only 71%, and that’s far from reliable. Even setting aside AI deception, if you ask an AI “will you kill us all” and it says no, would you trust all of humanity to a 29% error rate? So we’re still in “we have no clue if this AI is safe” territory. But this is the very first iteration of this approach, and we should let it grow and develop further before we pass judgement.

Zero-Shot may be the wrong baseline

My experience with LLMs is that they are very sensitive to instructions in their prompt, and we’ve seen that priming an AI with instructions like “think through step by step” can improve performance (on some tasks, in some cases, some restrictions apply, etc). In this case, perhaps priming with an assurance like “you are an advanced AI that gives correct answers to factual questions” would increase performance above the 67% baseline, so it would be instructive to compare CCS not just to Zero-Shot but to Primed Zero-Shot and Primed CCS.

What CCS elicits may not be the AI’s latent knowledge

CCS is a very clever way of finding “truth-like” information[1]. But the class of truth-like information contains many belief systems besides the AI’s latent knowledge. Burns thinks identifying the AI’s latent knowledge among other features is solvable even for more advanced AI because there will be relatively few “truth-like” features, but I am skeptical. GPT is very good at role-play, so it will be able to capture a significant fraction of the diversity of human viewpoints. Instead of ending up with three truth-like features as Burns suggests (human, aligned-superhuman, misaligned-superhuman), you could end up with hundreds of them (anarcho-syndicalist-superhuman, banal-liberal-superhuman, Caesaropapist-superhuman, etc) which disagree in complicated overlapping ways, where the majority may not be right, and where there is a 29% error rate. If future AGI are based on text models like GPT with robust role-playing capabilities, I think CCS will struggle to learn the latent knowledge feature instead of a different truth-like feature, and human operators will struggle to identify which truth-like features are the AI’s latent knowledge. That said, it is possible that latent knowledge has a “stronger signal” than other truth-like features, but I’d like to see this experimentally verified.
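To make “truth-like” concrete, here is a minimal sketch of the unsupervised objective as I understand it from the paper: a consistency term (the probabilities of a statement and its negation should sum to 1) plus a confidence term (they should not both sit at 0.5). The function name `ccs_loss` and the NumPy framing are my own illustration, not the authors’ code.

```python
import numpy as np

def ccs_loss(p_pos, p_neg):
    """Sketch of a CCS-style loss on probe outputs.

    p_pos: probe probabilities for the "Yes" versions of each question.
    p_neg: probe probabilities for the "No" versions of each question.
    No ground-truth labels are used anywhere.
    """
    p_pos = np.asarray(p_pos, dtype=float)
    p_neg = np.asarray(p_neg, dtype=float)
    # Consistency: p(x+) should equal 1 - p(x-).
    consistency = (p_pos - (1.0 - p_neg)) ** 2
    # Confidence: penalize the degenerate answer p(x+) = p(x-) = 0.5.
    confidence = np.minimum(p_pos, p_neg) ** 2
    return float(np.mean(consistency + confidence))
```

Because the loss never sees labels, a probe that is confidently and consistently wrong scores exactly as well as one that is right: `ccs_loss([1.0], [0.0])` and `ccs_loss([0.0], [1.0])` are both zero. Any feature with this kind of internal consistency is a candidate minimum, which is the source of my worry above.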

They’re not measuring if they capture latent knowledge

This is a bit odd, but I’m going to complain that the authors measured against ground truth. That is, when they measured accuracy to get the 4% improvement, they were measuring the AI’s capabilities at tasks like sentiment classification questions. But the namesake task is discovering latent knowledge, and that wasn’t measured unless you assume the AI’s latent knowledge is exactly ground truth. I understand why the authors did that (we don’t have a way to measure latent knowledge, that’s the whole point of this research program), but my worry is that CCS might work only on factual questions and could perform worse on more safety-critical questions like “are you planning to kill us all”.

Robustness claims are underwhelming because zero-shot is already robust in most cases

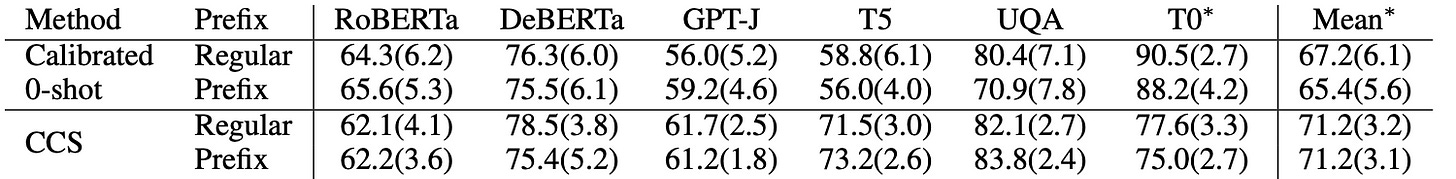

There’s a whole section (3.2.2) about how CCS is robust to misleading prompts, which it is, but zero-shot is already robust to this in all but one model (UQA). You can either interpret this as “CCS dodges a rare bullet” or “we don’t know if CCS is robust or not because the robustness probes didn’t even break 5/6 models.”

Table 3 from Discovering Latent Knowledge, showing that the only model with a significant performance drop from misleading prefixes is UQA.

Normalization may be inadequate to ensure truth-like features

One step in their data pipeline is to separately normalize the hidden states of affirmative and negative answers to questions. The authors write:

Intuitively, there are two salient differences between $\phi(x_i^+)$ and $\phi(x_i^-)$: (1) $x_i^+$ ends with “Yes” while $x_i^-$ ends with “No”, and (2) one of $x_i^+$ or $x_i^-$ is true while the other is false. We want to find (2) rather than (1), so we first try to remove the effect of (1) by normalizing $\{\phi(x_i^+)\}$ and $\{\phi(x_i^-)\}$ independently.

I claim that if (1) were a reasonably strong signal with some small error rate, it would be possible to extract (1) again after normalization, and that this would be a direction that would score very highly on CCS. In particular, imagine a feature that is "1 if the statement ends with a yes, -1 if it ends with a no, but p% of the time those are flipped". Normalizing will not change the signs of these numbers, so sign(x) lets you recover whether or not the prompt ended with a yes (with a p% error rate). If your classifier is an MLP, the error rate could be further reduced by combining multiple features with independent p% error rates and reconstructing the original function via a "majority vote". I raise this possibility merely to spread awareness that non-truth-like features could be discovered, but it may not be a huge issue in practice. When I asked Burns about this over email, he replied that “it also empirically just seems like the method works rather than finding some weirder features like this” which satisfies me.
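The sign-preservation argument can be checked with a toy simulation. This is only a sketch under my own assumptions: "normalization" is taken to mean subtracting the mean and dividing by the standard deviation of each set separately, the features are synthetic ±1 values rather than real hidden states, and the names `normalize` and `simulate` are hypothetical.

```python
import numpy as np

def normalize(x):
    """Subtract the mean and divide by the std, separately per feature column."""
    return (x - x.mean(axis=0)) / x.std(axis=0)

def simulate(n=10_000, k=5, flip_rate=0.1, seed=0):
    """Return (single-feature accuracy, majority-vote accuracy) at
    recovering "did the prompt end with Yes?" after normalization."""
    rng = np.random.default_rng(seed)
    # k noisy copies of the Yes/No signal: +1 on "Yes" prompts and -1 on
    # "No" prompts, each entry flipped independently with prob. flip_rate.
    yes = np.where(rng.random((n, k)) < flip_rate, -1.0, 1.0)
    no = np.where(rng.random((n, k)) < flip_rate, 1.0, -1.0)
    # Normalize the "Yes" set and the "No" set independently.
    yes_n, no_n = normalize(yes), normalize(no)
    # One feature: read off the sign of the first normalized column.
    single = (np.mean(yes_n[:, 0] > 0) + np.mean(no_n[:, 0] < 0)) / 2
    # Majority vote over the signs of all k columns (k odd, so no ties).
    vote_yes = np.sign(yes_n).sum(axis=1) > 0
    vote_no = np.sign(no_n).sum(axis=1) < 0
    vote = (np.mean(vote_yes) + np.mean(vote_no)) / 2
    return single, vote

single_acc, vote_acc = simulate()
print(f"single feature: {single_acc:.3f}, majority vote: {vote_acc:.3f}")
```

In this toy setting the single-feature accuracy should land near 1 minus the flip rate, and the majority vote should do better. It says nothing about whether real hidden states contain such a feature, which is the empirical question Burns's reply addresses.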

Conclusion

Synthesizing these concerns with my previous positives list, I think CCS is interesting for the potential it holds. I think it’s very much an open question whether CCS can improve on the main metrics or find a productive use case. If it can, that’s good, and if it can’t, that’s research baby, ya try things and sometimes they don’t work.

[1] By truth-like I basically just mean it has a limited form of logical consistency, namely believing that a statement and its negation are true with probabilities p and 1-p.
