Chad Jones paper modeling AI and x-risk vs. growth

post by jasoncrawford · 2023-04-26T20:07:06.311Z · LW · GW · 7 comments

This is a link post for https://web.stanford.edu/~chadj/existentialrisk.pdf

Looks like this Chad Jones paper was just posted today. Abstract:

Advances in artificial intelligence (A.I.) are a double-edged sword. On the one hand, they may increase economic growth as A.I. augments our ability to innovate or even itself learns to discover new ideas. On the other hand, many experts note that these advances entail existential risk: creating a superintelligent entity misaligned with human values could lead to catastrophic outcomes, including human extinction. This paper considers the optimal use of A.I. technology in the presence of these opportunities and risks. Under what conditions should we continue the rapid progress of A.I. and under what conditions should we stop?

And here's how the intro summarizes the findings:

1. The curvature of utility is very important. With log utility, the models are remarkably unconcerned with existential risk, suggesting that large consumption gains that A.I. might deliver can be worth gambles that involve a 1-in-3 chance of extinction.

2. For CRRA utility with a risk aversion coefficient (γ) of 2 or more, the picture changes sharply. These utility functions are bounded, and the marginal utility of consumption falls rapidly. Models with this feature are quite conservative in trading off consumption gains versus existential risk.

3. These findings even extend to singularity scenarios. If utility is bounded — as it is in the standard utility functions we use frequently in a variety of applications in economics — then even infinite consumption generates relatively small gains. The models with bounded utility remain conservative even when a singularity delivers infinite consumption.

4. A key exception to this conservative view of existential risk emerges if the rapid innovation associated with A.I. leads to new technologies that extend life expectancy and reduce mortality. These gains are “in the same units” as existential risk and do not run into the sharply declining marginal utility of consumption. Even with the bounded utility that comes with high values of risk aversion, substantial declines in mortality rates from A.I. can make large existential risks bearable.

I'm still reading the paper; might comment on it more later.

UPDATE: I read the paper. In brief, Jones is modeling Scott Aaronson's “Faust parameter”:

… if you define someone’s “Faust parameter” as the maximum probability they’d accept of an existential catastrophe in order that we should all learn the answers to all of humanity’s greatest questions, insofar as the questions are answerable—then I confess that my Faust parameter might be as high as 0.02.

Jones calculates the ideal Faust parameter under various assumptions about utility functions and the benefits of AI, and comes up with some answers much higher than 0.02:

The answers turn out to be very sensitive to the utility function: if you have a relative risk aversion parameter > 1, you are much more conservative about AI risk. But it's also very sensitive to any mortality/longevity improvements AI can deliver. If AI can double our lifespans, then even with a relatively risk-averse utility function, we might accept a double-digit chance of extinction in exchange.
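A rough way to see this sensitivity is a one-period toy calculation. This is a sketch under stated assumptions, not the paper's actual model: death is worth 0, today's consumption is normalized to 1, flow utility is CRRA plus a constant, and lifetime utility is just flow utility times expected lifespan with no discounting. The names `toy_risk_cutoff`, `value_of_life`, and `lifespan_ratio` are hypothetical, and the default `value_of_life=6.0` is an arbitrary illustrative number.

```python
import math


def calibrated_u_bar(gamma, value_of_life):
    """Pick the additive constant so that u(1) == value_of_life.

    value_of_life is a hypothetical calibration knob: flow utility at
    today's consumption (normalized to 1), with death normalized to 0.
    """
    if gamma == 1:
        return value_of_life  # log(1) == 0
    return value_of_life - 1.0 / (1.0 - gamma)


def crra_utility(c, gamma, u_bar):
    """CRRA flow utility plus a constant; the log case is gamma == 1."""
    if gamma == 1:
        return u_bar + math.log(c)
    return u_bar + c ** (1.0 - gamma) / (1.0 - gamma)


def toy_risk_cutoff(c_ai, gamma, value_of_life=6.0, lifespan_ratio=1.0):
    """Largest extinction probability d satisfying
    (1 - d) * lifespan_ratio * u(c_ai) >= u(1).

    Assumes c_ai >= 1 (AI does not make people poorer) and
    lifespan_ratio >= 1 (AI does not shorten lives).
    """
    u_bar = calibrated_u_bar(gamma, value_of_life)
    u_now = crra_utility(1.0, gamma, u_bar)
    u_ai = crra_utility(c_ai, gamma, u_bar)
    return max(0.0, 1.0 - u_now / (lifespan_ratio * u_ai))


if __name__ == "__main__":
    # Columns: gamma, cutoff with 10x consumption, same plus doubled lifespan.
    for gamma in (1, 2, 3):
        print(
            gamma,
            round(toy_risk_cutoff(10.0, gamma), 3),
            round(toy_risk_cutoff(10.0, gamma, lifespan_ratio=2.0), 3),
        )
```

In this toy, the cutoff falls as γ rises, and for γ = 2 with the default calibration it can never exceed 1/7 however large `c_ai` gets, because utility is bounded above. A `lifespan_ratio` of 2 multiplies utility directly, so it pushes the cutoff above one half regardless of γ. The specific numbers depend entirely on the assumed calibration and should not be read as the paper's results.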

7 comments

Comments sorted by top scores.

comment by dr_s · 2023-04-27T13:25:22.400Z · LW(p) · GW(p)

With log utility, the models are remarkably unconcerned with existential risk, suggesting that large consumption gains that A.I. might deliver can be worth gambles that involve a 1-in-3 chance of extinction

I mean, math is nice and all, but this remarkably feels Not How Humans Actually Function. Not to mention that the assumption of AI benefitting generic humans uniformly seems laughably naïve: unless you really had some kind of all-loving egalitarian FAI, odds are AI benefits would go to some humans more than others.

This paper seems to me like it belongs to the class of arguments that don't actually use math to find out any new conclusions, but rather just to formalize a point that is so vague and qualitative, it could be made just as well in words. There's no real added value from putting numbers into it since so many important coefficients are unknown or straight up represent opinion (for example, whether existential risk shouldn't carry an additional negative utility penalty beyond just everyone's utility going to zero). I don't feel like this will persuade anyone or shift anything in the discussion.

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2023-04-28T08:19:25.729Z · LW(p) · GW(p)

In fact, the verbal tl;dr seems to be that if you don't care much about enormously wonderful or terrible futures (because of bounded or sufficiently concave utility functions) then you won't pay much to achieve or avoid them.

comment by gjm · 2023-04-27T15:39:04.212Z · LW(p) · GW(p)

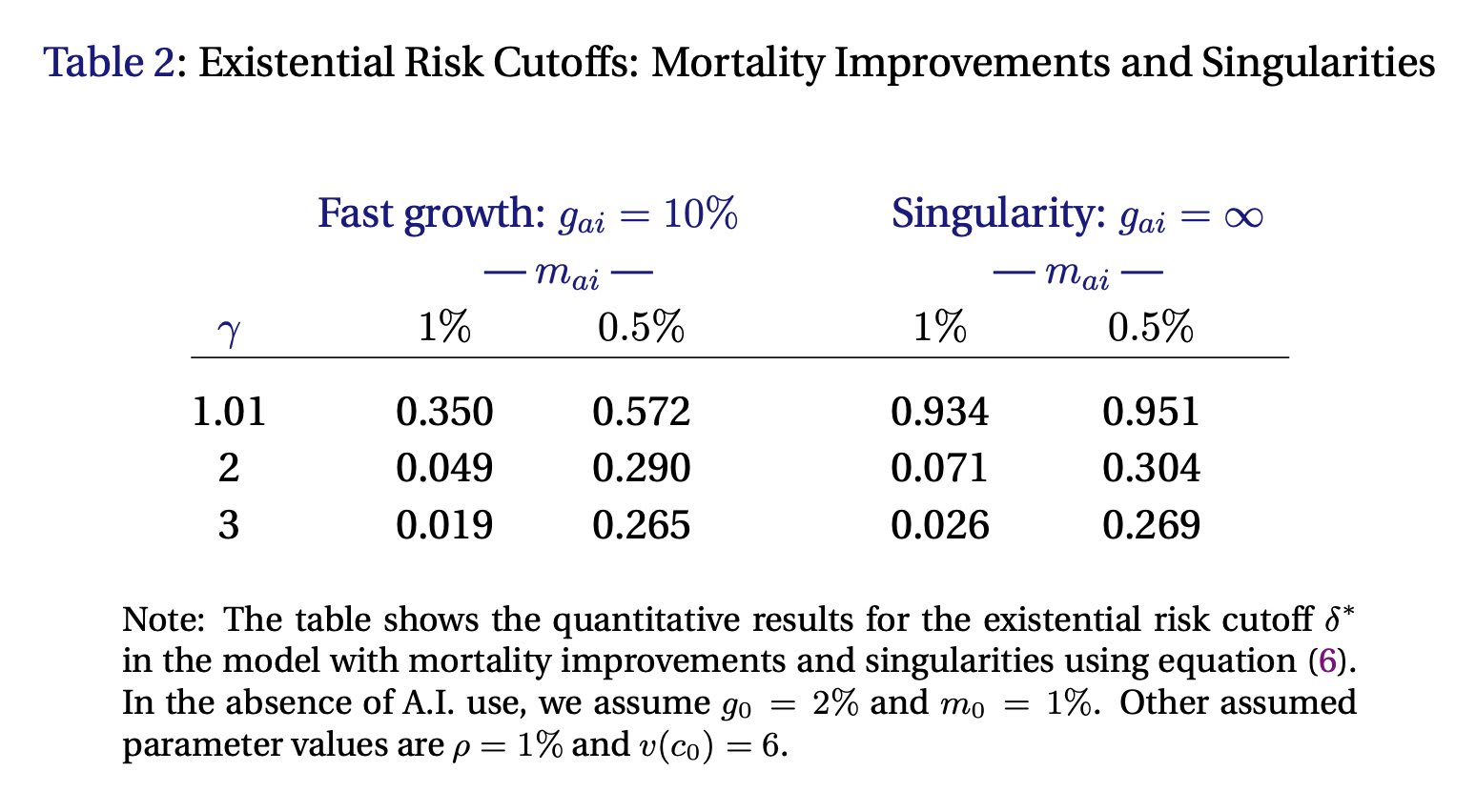

For those who, like me, didn't find it immediately obvious what the various parameters in "Table 2" mean:

- g is the economic growth rate per unit time produced by AI, versus without it. (You can take "per unit time" to mean "per year"; I don't think anything in the paper depends on what the unit of time actually is, but the without-AI figures are appropriate for a unit of one year.)

- m is the personal mortality risk per year with AI, versus without it. (Simple model where everyone independently either dies or doesn't during each year, with fixed probabilities.)

- γ is a risk-aversion parameter: d(utility)/d(wealth) is inversely proportional to the γ-th power of wealth. So γ = 1 is the usual "log utility"; larger values mean you value extra-large amounts of wealth less, enough so that any γ > 1 means that the amount of utility you can get from being wealthier is bounded. (Aside: It's not clear to me that "risk aversion" is a great term for this, since there are reasons to think that actual human risk aversion is not fully explained by decreasing marginal utility.)

- The values displayed in the table are "existential risk cutoffs" meaning that you favour developing AI that will get you those benefits in economic growth and mortality rate up to the point at which the probability that it kills everyone equals the existential risk cutoff. (It's assumed that any other negative consequences of AI are already factored in to those m and g figures.)
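To spell out the boundedness claim, here is a short worked derivation using γ for the risk-aversion coefficient as in the paper's intro. The symbols c (consumption or wealth) and ū (a constant of integration) are notation assumed here for illustration, and this is the standard textbook CRRA form, which may differ in detail from the paper's exact specification.

```latex
\[
u'(c) = c^{-\gamma}
\quad\Longrightarrow\quad
u(c) =
\begin{cases}
\bar{u} + \log c, & \gamma = 1,\\[4pt]
\bar{u} + \dfrac{c^{1-\gamma}}{1-\gamma}, & \gamma \neq 1.
\end{cases}
\]
\[
\text{For } \gamma > 1:\quad
\frac{c^{1-\gamma}}{1-\gamma} < 0
\quad\text{and}\quad
\frac{c^{1-\gamma}}{1-\gamma} \to 0 \text{ as } c \to \infty,
\quad\text{so}\quad
u(c) < \bar{u} \text{ for all } c > 0 .
\]
```

With γ = 1 the log term grows without limit, so enough extra wealth can compensate for any given risk. With γ > 1 utility approaches ū from below, so even unlimited wealth adds only a finite amount.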

The smallest "existential risk cutoff" in the table is about 2%, and to get it you need to assume that the AI doesn't help with human mortality at all, that it leads to economic growth of 10% per year (compared with a baseline of 2%), and that your marginal utility of wealth drops off really quickly. With larger benefits from AI or more value assigned to enormous future wealth, you get markedly larger figures (i.e., with the assumptions made in the paper you should be more willing to tolerate a greater risk that we all die).

I suspect that actually other assumptions made in the paper diverge enough from actual real-world expectations that we should take these figures with a grain of salt.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-05-03T17:17:29.314Z · LW(p) · GW(p)

I don't think this paper is valuable, because the premises seem absurd. You can't anchor on currently existing humans and current correlations between wealth in our current economy in order to predict the value of sapient beings after a singularity. Do digital humans count? Can you assume that wealth will have decreasing returns to self-modifying rapidly self-duplicating digital humans in the same way it does to meat humans? What about the massive expanse of the future light cone, and whether that gets utilized or neglected due to extinction?

This paper doesn't touch on the true arguments against extinction, and thus massively overvalues options that risk extinction.

comment by gjm · 2023-04-27T13:13:37.969Z · LW(p) · GW(p)

Unless I hallucinated it, an earlier version of this post included a table showing how the "existential risk cutoff" (~= Scott Aaronson's "Faust parameter") varies with (1) risk aversion, (2) anticipated economic growth due to superhuman AI, and (3) anticipated reduction in mortality due to superhuman AI. I'm curious why it was removed.

Replies from: jasoncrawford

↑ comment by jasoncrawford · 2023-04-27T14:00:42.122Z · LW(p) · GW(p)

Weird, I don't know how it got reverted. I just restored my additional comments from version history.

Replies from: gjm