EIS XIV: Is mechanistic interpretability about to be practically useful?

post by scasper · 2024-10-11T22:13:51.033Z

Part 14 of 12 in the Engineer’s Interpretability Sequence.

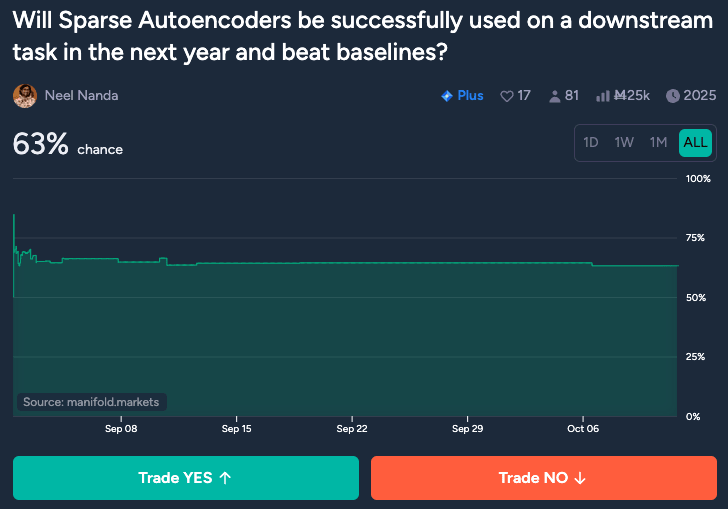

Is this market really only at 63%? I think you should take the over.

Five tiers of rigor for safety-oriented interpretability work

Lately, I have been thinking of interpretability research as falling into five different tiers of rigor.

1. Pontification

This is when researchers claim to have interpreted a model either by definition or by analyzing results and asserting hypotheses about them. Generating hypotheses is a key part of the scientific method, but by itself it is not good science. Previously in this sequence, I have argued that this standard is fairly pervasive.

2. Basic Science

This is when researchers develop an interpretation, use it to make some (usually simple) prediction, and then show that the prediction holds. This is at least doing science, but it doesn't necessarily demonstrate any usefulness or value.

3. Streetlight/Toy Demos

This is when researchers accomplish a useful type of task with an interpretability technique but do so in a way that is toy, cherry-picked, or under a streetlight.

4. Useful Engineering

This is when researchers show that an interpretability tool can be used uniquely or competitively to accomplish a useful task. For this level of rigor, it needs to be convincingly demonstrated that doing the task with interpretability is better than other ML techniques. I think that there is currently at least one example of work low in this tier.

5. Net Safety Benefit

This would be when researchers convincingly demonstrate that an interpretability tool isn't just practically and competitively useful, but is useful in a way that differentially reduces risks rather than being captured for capabilities. By my understanding, this tier has not yet been reached. And unless it is, the field of (mechanistic) AI interpretability will have been, at best, a big waste from a safety standpoint.

What’s been happening lately?

Recently, some solid work has been done in tier 3.

A few months ago, I heard about some new work on SAE interpretability – Marks et al. (2024). The person I heard about it from was pretty excited, and when I pulled up the paper, I thought to myself “here we go, let’s see” and mentally prepared to look for holes in it. But when I read the paper, I thought it was pretty great and the kind of thing that could pull interpretability research in a really positive direction. To be clear, the paper solved a toy task – identifying and mitigating a known gender bias in a small transformer. But it did so in a way that did not require disambiguating labels and that mirrors realistic debugging situations in which red-teamers may not know exactly what they are looking for in advance.

Recently, we have also seen Anthropic’s Golden Gate Claude – an impressive feat of streetlight model editing (though I wish Anthropic had tried to demo unlearning instead). Meanwhile, Arditi et al. (2024) demonstrated a fairly clean method for controlling model refusal using linear perturbations. Yu et al. (2024) used this for adversarial training but didn't outcompete LAT (Sheshadri et al., 2024). Finally, Smith and Brinkman (2024) used sparse autoencoders to find some simple adversarial vulnerabilities in reward models.

All of the above demos could be argued to be high in tier 3. Meanwhile, in an exchange a few months ago, Christopher Potts presented an argument to me for why representation finetuning (Wu et al., 2024) methods inspired by findings from interchange intervention techniques might be an example of tier 4 work. I think this is a really useful point, but I don’t subjectively feel that this convincingly breaks through. Its connection to interpretability research is mostly via conceptual inspiration rather than specific mechanistic insights.

I think that tier 4 has (barely) been broken into.

About a year ago, Schut et al. (2023) did what I think was (and maybe still is) the most impressive interpretability research to date. They studied AlphaZero's chess play and showed how novel performance-relevant concepts could be discerned from mechanistic analysis. They worked with skilled chess players and found that they could help these players learn new concepts that were genuinely useful for chess. This appears to be a reasonably unique way of doing something useful (improving experts' chess play) that may have been hard to achieve in some other way.

Current efforts may soon break further into tier 4.

Finally, others have been cooking. I frequently hear about people working toward engineering applications of interpretability tools. Neel Nanda recently posted a Metaculus market on whether sparse autoencoders (or other dictionary learning techniques) will be successfully used on a downstream task in the next year and beat baselines. Props to Neel for good field building with a good market. I think the ground rules laid out for the market hit the nail on the head for what would be an impressive and game-changing advancement in mechanistic interpretability. Reading between the lines, it’s also pretty easy to infer that Neel and collaborators at Google DeepMind and MATS are working on this right now. They also talk openly about how they are working on engineering applications of interp, but I haven't heard specifics from anyone at GDM.

Somehow, the market currently stands at 63%. I think this is surprisingly low. I would definitely take the over.

What might happen next with SAEs?

Past predictions

In May, I made some predictions about what Anthropic’s next research paper on sparse autoencoders would do. See the full predictions in this post. In short, I assigned each of the following things a probability of happening. I have marked with a ✅ the things that the paper did, and with an ❌ the things that it didn’t do.

- ✅ 99% – “Eye-test” experiments

- ✅ 95% – Streetlight edits

- ✅ 80% – Some cherry-picked proof of concept for a useful *type* of task

- ❌ 20% – Doing PEFT by training sparse weights and biases for SAE embeddings in a way that beats baselines like LoRA

- ❌ 20% – Passive scoping

- ❌ 25% – Finding and manually fixing a harmful behavior that WAS represented in the SAE training data

- ❌ 5% – Finding and manually fixing a novel bug in the model that WASN'T represented in the SAE training data

- ❌ 15% – Using an SAE as a zero-shot anomaly detector

- ❌ 10% – Latent adversarial training under perturbations to an SAE's embeddings

- ❌ 5% – Experiments to do arbitrary manual model edits

On one hand, these predictions were all individually good – all were on the right side of 50%. But overall, the paper underperformed expectations. If you scored the paper relative to my predictions by giving it (1-p) points when it did something that I predicted it would do with probability p and -p points when it did not, the paper would score -0.74.
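The scoring rule described above can be checked with a few lines of arithmetic. The following is a minimal sketch, not code from the original post: the names `past_predictions` and `score` are illustrative, and the probabilities and outcomes are copied from the list above.

```python
# (probability, did_the_paper_do_it) pairs, copied from the list above.
past_predictions = [
    (0.99, True),   # "Eye-test" experiments
    (0.95, True),   # Streetlight edits
    (0.80, True),   # Cherry-picked proof of concept
    (0.20, False),  # PEFT via sparse weights and biases
    (0.20, False),  # Passive scoping
    (0.25, False),  # Fixing a harmful behavior in the SAE training data
    (0.05, False),  # Fixing a novel bug not in the SAE training data
    (0.15, False),  # Zero-shot anomaly detection
    (0.10, False),  # Latent adversarial training
    (0.05, False),  # Arbitrary manual model edits
]


def score(predictions):
    """Add (1 - p) for each event that happened and -p for each that did not."""
    return sum((1 - p) if happened else -p for p, happened in predictions)


# Every prediction was on the right side of 50%.
all_right_side = all((p > 0.5) == happened for p, happened in past_predictions)

print(all_right_side)                      # True
print(round(score(past_predictions), 2))   # -0.74
```

Each term is the outcome (1 or 0) minus the predicted probability, so a forecaster whose probabilities matched the true frequencies would expect a total of zero. A negative total means fewer of the listed things happened than the probabilities implied.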

You can read my full reflection in the previous post of the sequence: EIS XIII. Overall, I think that the paper under-delivered and was somewhat overhyped. It had me wondering about safety-washing, especially in light of how some less knowledgeable and shamelessly dishonest actors have greatly overstated the progress being made in ways that could be politically hazardous if policymakers are misled.

New predictions

Here is a new set of predictions. Overall, I’m going to double down on some similar ideas, but I have some updates.

One difference is that I will make predictions simultaneously about Google DeepMind, OpenAI, and Anthropic – I’m not familiar enough with what’s happening inside each to distinguish among them in my predictions. So when I say they will do X with probability p, I am saying this about all three at once.

Meanwhile, predictions are made ignoring the possibility of them being self-fulfilling. I’ll make them about SAEs but I’ll count it if they do these things with another dictionary learning method such as clustering.

- ❓60% – Finding and manually fixing a harmful behavior that WAS represented in the SAE training data in a way that is competitive with appropriate fine-tuning and machine unlearning baselines.

- ❓20% – Finding novel input space attacks that exploit the model in a way that is competitive with appropriate adversarial attack baselines.

- ❓20% – Using SAEs to detect – either by sparsity thresholds or a reconstruction loss threshold – anomalies in a way that is competitive with appropriate statistical anomaly detection baselines.

- ❓15% – Finding and manually fixing a harmful behavior that WAS CONVINCINGLY NOT represented in the SAE training data in a way that is competitive with appropriate fine-tuning and machine unlearning baselines.

- ❓15% – Fine-tuning the model via sparse perturbations to the sparse autoencoder’s embeddings in a way that is competitive with appropriate PEFT baselines.

- ❓15% – Performing arbitrary (i.e., not streetlight) model edits in a way that is competitive with appropriate fine-tuning and model editing baselines.

- ❓10% – Performing latent adversarial attacks and/or latent adversarial training on the SAE neurons in a way that is competitive with latent-space approaches.

- ❓10% – Demonstrating that SAEs can be used to make the model robust to exhibiting harmful behaviors not represented in the SAE’s training data in a way that is competitive with appropriate compression baselines.

Note that these are what I think will happen – not what I think is possible. I think that all of these could very well be possible, except I'm not so sure about the sixth item (arbitrary model edits).

Also keep in mind that all of these predictions require that SAEs be demonstrated to be competitive in fair fights with other relevant baseline techniques. Without this requirement, my probabilities above would be much higher.

Once the next Anthropic, GDM, or OpenAI paper on SAEs comes out, I will evaluate my predictions in the same way as before. I will score the paper relative to my predictions by giving it (1-p) points when it does something that I predicted it would do with probability p and -p points when it does not. Note that this is arguably a flawed measure because these 8 events are not independent, but I will proceed nonetheless.

What if we succeed?

For the past few years, I have spent a lot of thought and time on mechanistic interpretability. This includes several papers, numerous collaborations, and this sequence. But now that mechanistic interpretability may be on the verge of being useful, I feel that I have only recently come to appreciate something that I didn’t before.

I named this sequence the “Engineer’s Interpretability Sequence,” dedicating it to the critique that mechanistic interpretability tools are struggling to be useful. But sometimes I wonder if I should have spent less time worrying about mechanistic interpretability’s failures and more time worrying about what happens if it succeeds. At the end of the day, it doesn’t matter whether these tools are useful to engineers: unless they offer a net safety benefit, all work on them will have been one big waste (from an AI safety perspective).

Mechanistic interpretability, if and when it is useful, will probably offer a defender’s advantage. I think it will generally be much easier to remove capabilities with mechanistic techniques than to add them. And I think that mechanistic techniques are a useful part of the AI evaluation toolbox.

However, it is not hard to imagine how it could be used to advance capabilities. And I have very limited confidence in scaling labs using mechanistic interpretability only for good. Unfortunately, I think that it will be hard to effectively monitor the future uses and impacts of interpretability techniques due to safety washing and a lack of transparency into scaling labs.

🚶♂️➡️🔥🧺

4 comments

comment by Rohin Shah (rohinmshah) · 2024-10-12T14:39:33.086Z

> Once the next Anthropic, GDM, or OpenAI paper on SAEs comes out, I will evaluate my predictions in the same way as before.

Uhh... if we (GDM mech interp team) saw good results on any one of the eight things on your list, we'd probably write a paper just about that thing, rather than waiting to get even more results. And of course we might write an SAE paper that isn't about downstream uses (e.g. I'm also keen on general scientific validation of SAEs), or a paper reporting negative results, or a paper demonstrating downstream use that isn't one of your eight items, or a paper looking at downstream uses but not comparing against baselines. So just on this very basic outside view, I feel like the sum of your probabilities should be well under 100%, at least conditional on the next paper coming out of GDM. (I don't feel like it would be that different if the next paper comes from OpenAI / Anthropic.)

The problem here is "next SAE paper to come out" is a really fragile resolution criterion that depends hugely on unimportant details like "what the team decided was a publishable unit of work". I'd recommend you instead make time-based predictions (i.e. how likely are each of those to happen by some specific date).

Replies from: scasper, Max Lee

↑ comment by scasper · 2024-10-12T17:26:55.164Z

Thanks for the comment. My probabilities sum to 165%, which means I expect the next paper to do, on average, 1.65 things from the list. That doesn't seem too crazy to me, and I think it DOES match my expectations.
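The 165% figure and its reading as an expected count can be reproduced directly. This is a minimal sketch with illustrative names (`new_predictions`, `expected_count`), using the eight probabilities from the new predictions in the post. It relies on linearity of expectation, which holds even though the eight events are not independent.

```python
# Probabilities for the eight new predictions, in the order listed in the post.
new_predictions = [0.60, 0.20, 0.20, 0.15, 0.15, 0.15, 0.10, 0.10]

# Expected number of listed things the next paper does: the sum of the
# individual probabilities (linearity of expectation, no independence needed).
expected_count = sum(new_predictions)

# Range of the post's score: every event happens vs. none of them happen.
best_score = sum(1 - p for p in new_predictions)
worst_score = -sum(new_predictions)

print(round(expected_count, 2))  # 1.65
print(round(best_score, 2))      # 6.35
print(round(worst_score, 2))     # -1.65
```

Under these numbers, a paper that does none of the eight things would score -1.65, and one that does all eight would score 6.35.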

But I also think you make a good point. If the next paper comes out, does only one thing, and does it really well, I commit to not complaining too much.

↑ comment by Knight Lee (Max Lee) · 2024-10-12T22:54:00.221Z

It seems like the post is implicitly referring to the next big paper on SAEs from one of these labs, similar in newsworthiness to the last Anthropic paper. A big paper won't be a negative result or a much smaller downstream application, and a big paper would compare its method against baselines if possible, which keeps 165% within the ballpark.

I still agree with your comment, especially the recommendation for a time-based prediction (I explained in my other comment here).

Thank you for your alignment work :)

comment by Knight Lee (Max Lee) · 2024-10-12T22:47:29.401Z

I like your post, and I like how you gave an overview of the big picture of mechanistic interpretability's present and future. That is important.

I agree that it is looking more promising over time with the Golden Gate Claude etc. I also agree that there is some potential for negatives. I can imagine an advanced AI editing itself using these tools, causing its goals to change, causing it to edit itself even more, in a feedback loop that leads to misalignment (this feels unlikely, and a superintelligence would be able to edit itself anyways).

I agree the benefits outweigh the negatives: yes mechanistic interpretability tools could make AI more capable, but AI will eventually become capable anyways. What matters is whether the first superintelligence is aligned, and in my opinion it's much harder to align a superintelligence if you don't know what's going on inside.

One small detail is defining your predictions better, as Dr. Shah said. It doesn't hurt to convert your prediction to a time-based prediction. Just add a small edit to this post. You can still post an update after the next big paper even if your prediction is time-based.

A prediction based on the next big paper not only depends on unimportant details like the number of papers they spread their contents over, but also fails to depend on important details like when the next big paper comes. Suppose I predicted that the next big advancement beyond OpenAI's o1 will be able to get 90% on the GPQA Diamond, but didn't say when it'll happen. I'm not predicting very much in that case, and I can't judge how accurate my prediction was afterwards.

Your last prediction was about the Anthropic report/paper that was already about to be released, so by default you predicted the next paper again. This is very understandable.

Thank you for your alignment work :)