Goodhart's Law in Reinforcement Learning

post by jacek (jacek-karwowski), Joar Skalse (Logical_Lunatic), OliverHayman, charlie_griffin (cjgriffin), Xingjian Bai · 2023-10-16T00:54:11.669Z · LW · GW · 22 commentsContents

TL;DR Introduction Geometric explanation How can we use this intuition? What's next? None 22 comments

Produced As Part Of The OxAI Safety Labs program, mentored by Joar Skalse.

TL;DR

This is a blog post introducing our new paper, "Goodhart's Law in Reinforcement Learning" (to appear at ICLR 2024). We study Goodhart's law in RL empirically, provide a geometric explanation for why it occurs, and use these insights to derive two methods for provably avoiding Goodharting.

Here, we only include the geometric explanation (which can also be found in the Appendix A) and an intuitive description of the early-stopping algorithm. For the rest, see the linked paper.

Introduction

Suppose we want to optimise for some outcome, but we can only measure an imperfect proxy that is correlated, to a greater or lesser extent, with that outcome. A prototypical example is that of school: we would like children to learn the material, but the school can only observe the imperfect proxy consisting of their grades. When there is no pressure exerted on students to do well on tests, the results will probably reflect their true level of understanding quite well. But this changes once we start putting pressure on students to do get good grades. Then, the correlation usually breaks: students learn "for the test", cheat, or use other strategies which make them get better marks without necessarily increasing their true understanding.

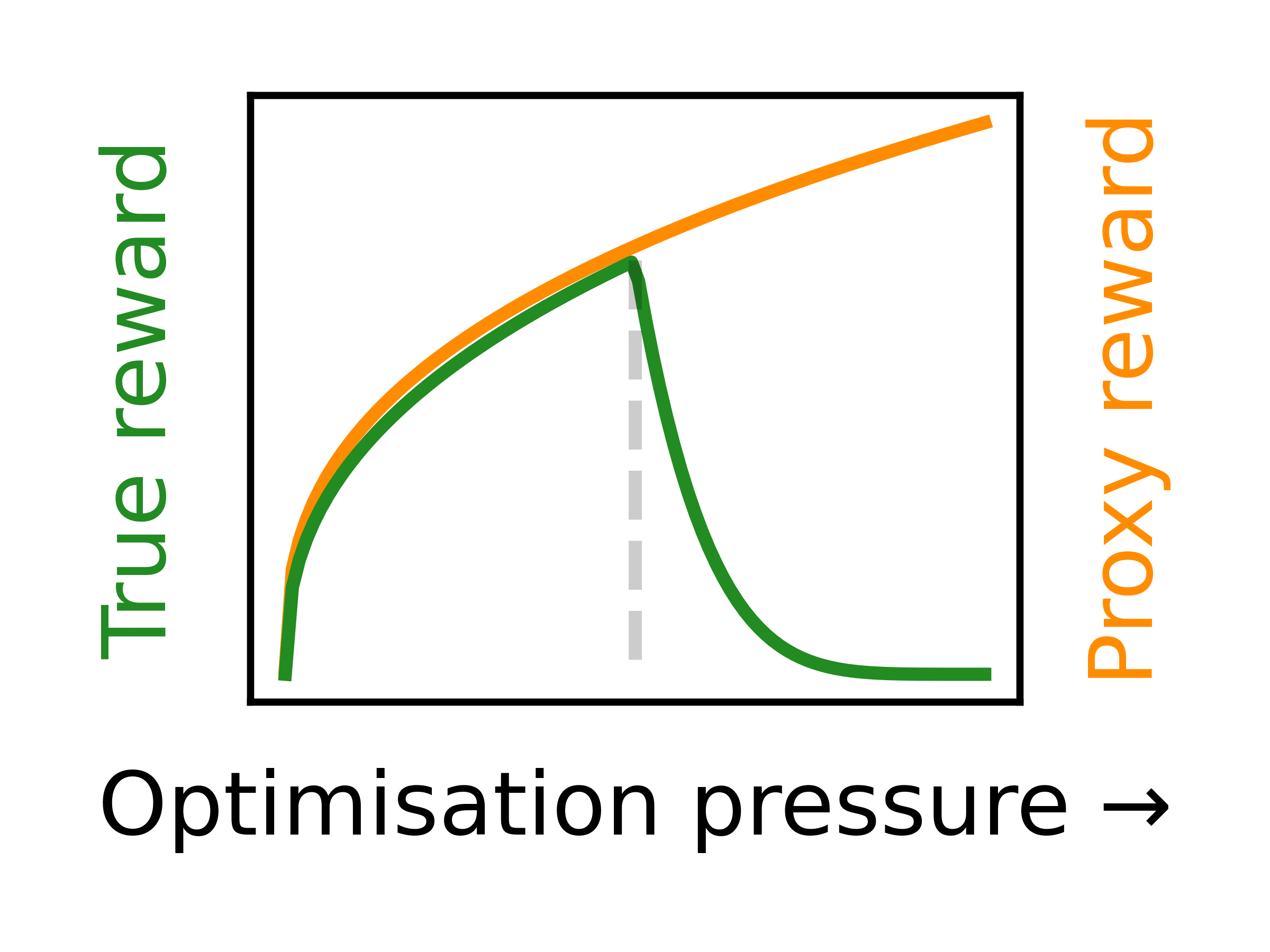

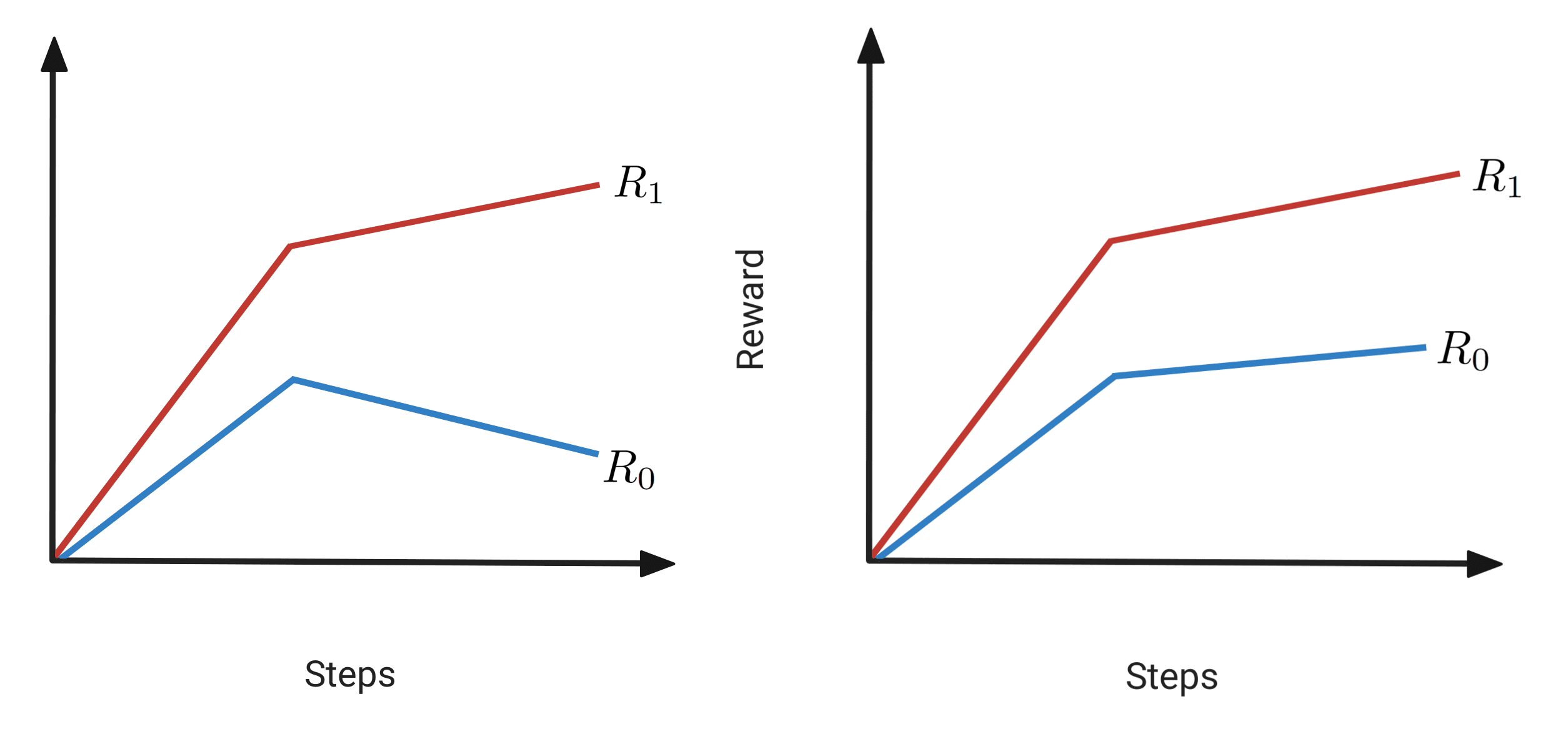

That "any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes" (or "when a measure becomes a target, it ceases to be a good measure") is called the Goodhart's law. Graphically, plotting the true reward and the proxy reward obtained along increasing optimisation pressure, we might therefore expect to see something like the following graph:

In machine learning, this phenomenon has been studied before. We have a long list lot of examples where agents discover surprising ways of getting reward without increasing their true performance. These examples, however, have not necessarily been quantified over increasing optimisation pressure: we only know that agents discovered those "reward hacks" at some point in their training. Even in cases where the effect was attributed specifically to the increasing optimisation pressure, we lacked a true, mechanistic understanding for why it happens.

Our paper fills this gap. The core contribution is an explanation for Goodhart's law in reinforcement learning in terms of the geometry of Markov decision processes. We use this theoretical insight to develop new methods for provably avoiding Goodharting and for optimising reward under uncertainty. We also show empirically that Goodharting occurs quite often in many different MDPs and investigate the performance of our early-stopping method (which, being pessimistic, might usually lose on some reward).

Geometric explanation

Suppose that we work with a fixed MDP, with states, actions (we assume both being finite) and a discount factor . We are interested in the space of (Markov) policies giving, for every state , a probability distribution over actions. For every reward we can compute the expected return for any policy . Unfortunately, the function can be quite complex. However, it turns out that instead of working with policies, we might instead choose to work with state-action occupancy measures. There is an occupancy measure for each policy : it is defined over pairs (state , action ), and indicates roughly how often we take action in state . Formally:

but the exact form is not really needed to understand the explanation below.

The state-action occupancy measure space has a number of extremely nice properties:

- We can recover a policy from its occupancy measure (up to the states that were never visited).

- The space of occupancy measures is a convex hull over a finite number of points, corresponding to occupancy measures of deterministic policies.

- Moreover, lies in the affine subspace of dimension (we will denote the orthogonal projection matrix to that subspace by ).

- If we treat rewards on MDPs as vectors , then the RL cost function can be decomposed as . In other words, each reward induces a linear function on the convex polytope , which reduces finding the optimal policy to solving a linear programming problem in !

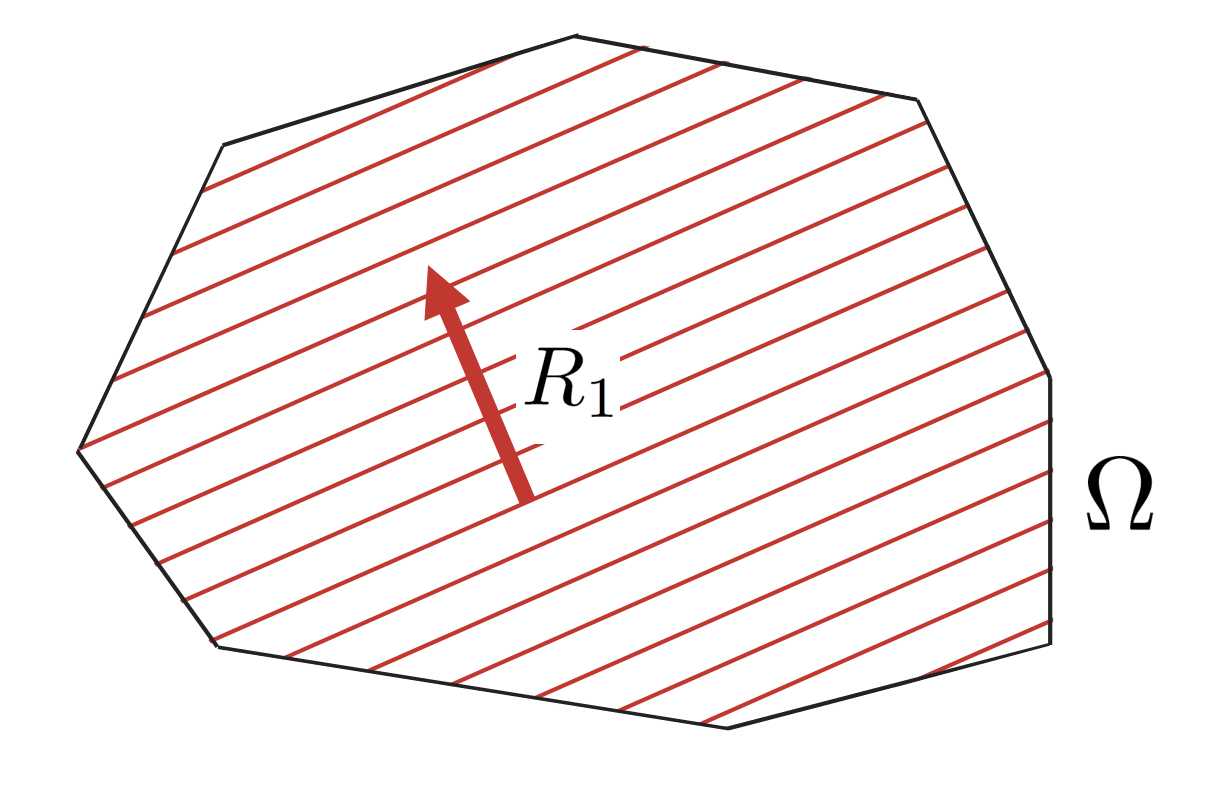

In short: the space of policies over a MDP can be understood as a certain polytope , where for a given reward , finding optimal policy for is really solving a linear program. Or, putting it differently, the gradient of is a linear function in the polytope.

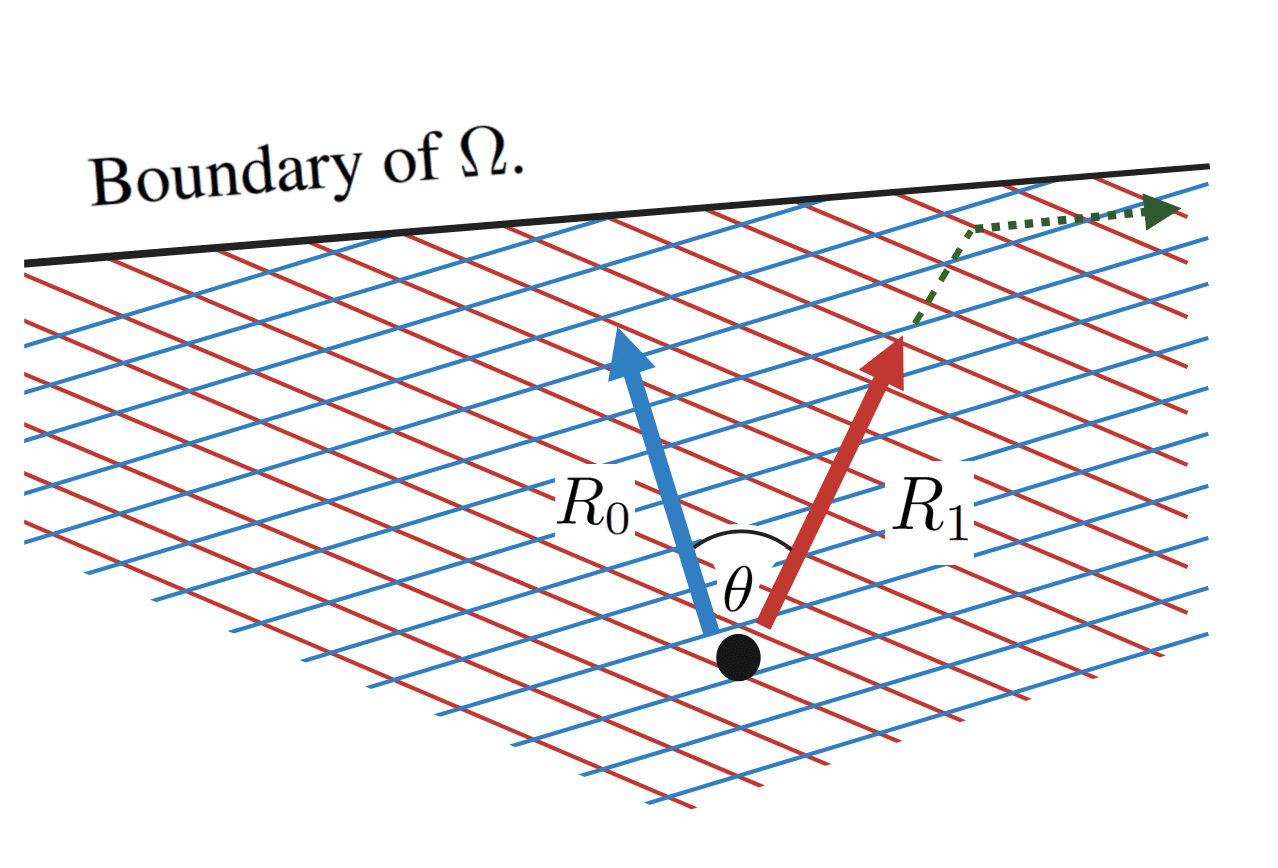

We can visualise it as follows:

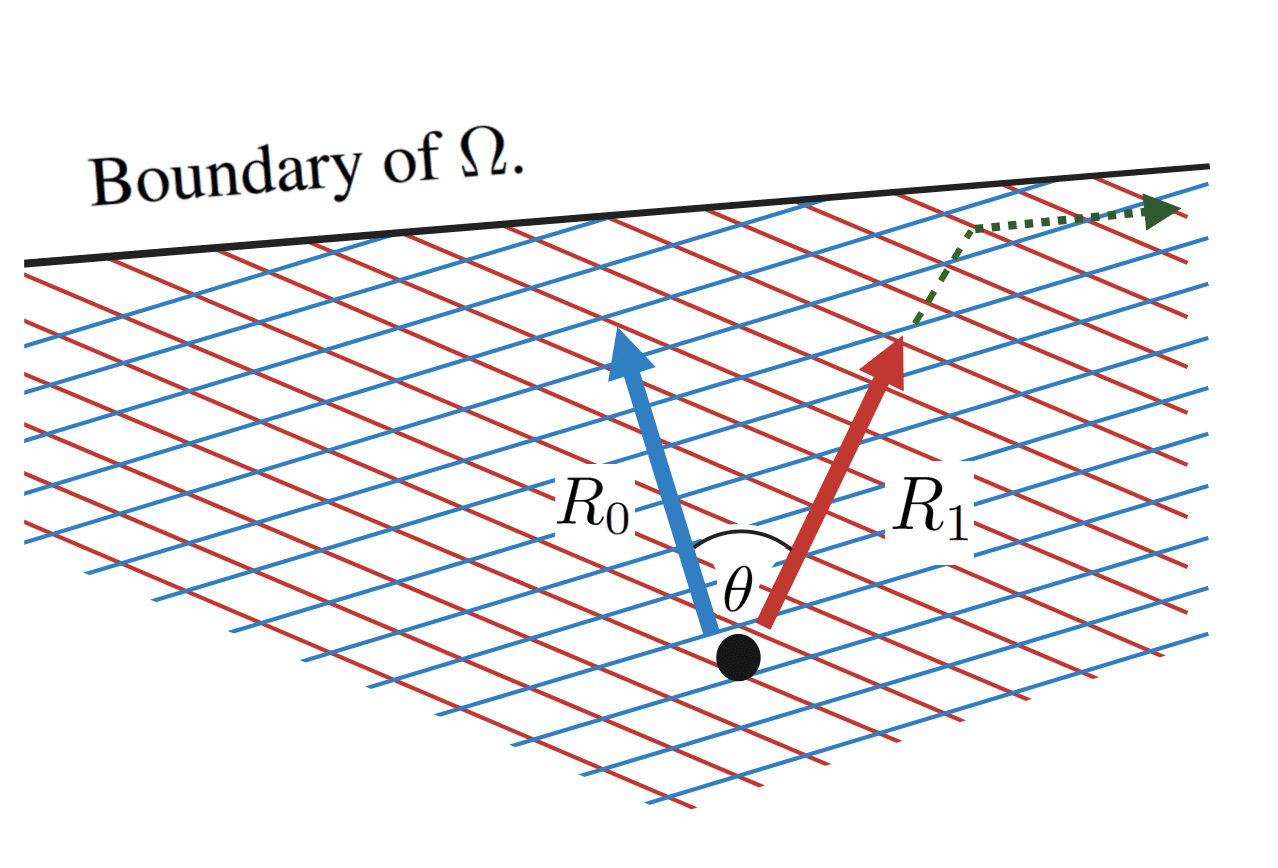

Here the red arrow denotes the direction of within . Note that this direction corresponds to , rather than , since lies in a lower-dimensional affine subspace. Similarly, the red lines correspond to the level sets of , i.e. the directions we can move in without changing .

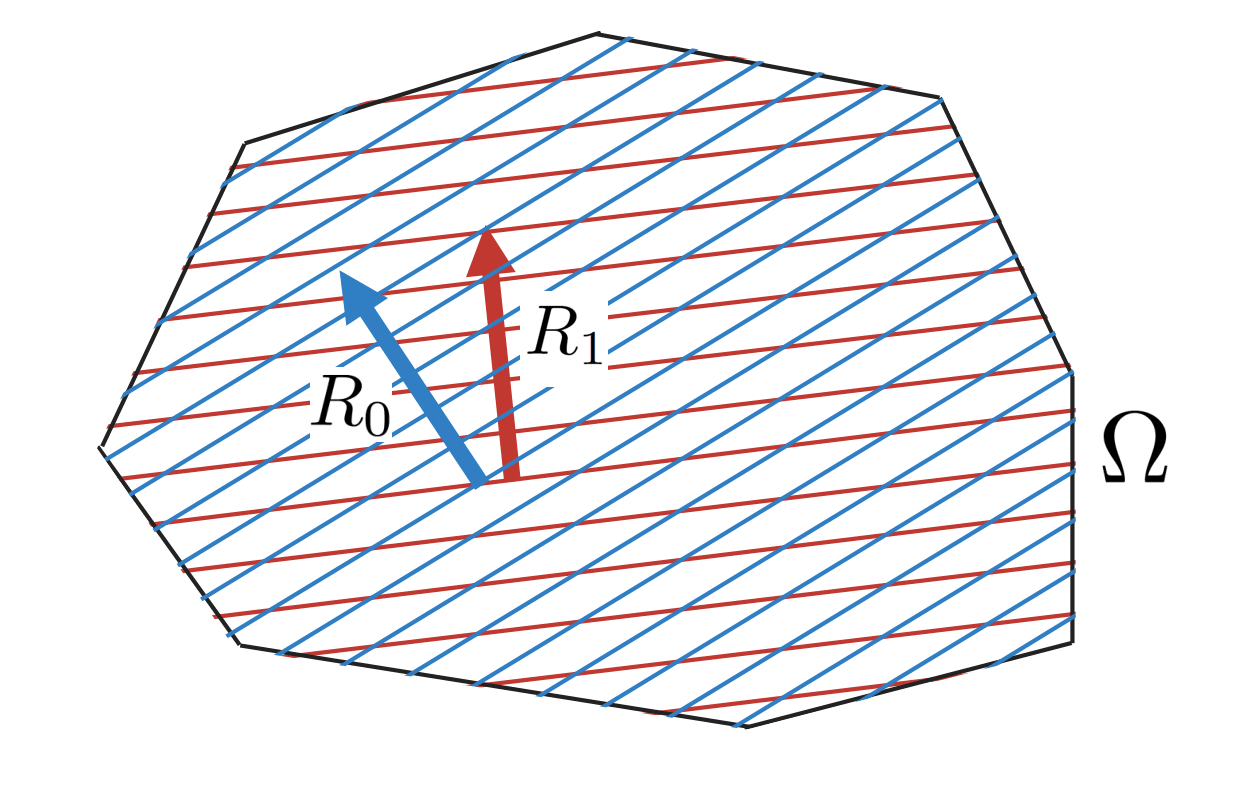

Now, if is a proxy reward, then we may assume that there is also some (unknown) true reward function . This reward also induces a linear function on , and the angle between them is :

Suppose we pick a random point in , and then move in a direction that increases . This corresponds to picking a random policy , and then modifying it in a direction that increases . In particular, let us consider what happens to the true reward function , as we move in the direction that most rapidly increases the proxy reward .

To start with, if we are in the interior of (i.e., not close to any constraints), then the direction that most rapidly increases is to move parallel to . Moreover, if the angle between and is no more than , then this is guaranteed to also increase the value of . To see this, consider the following diagram:

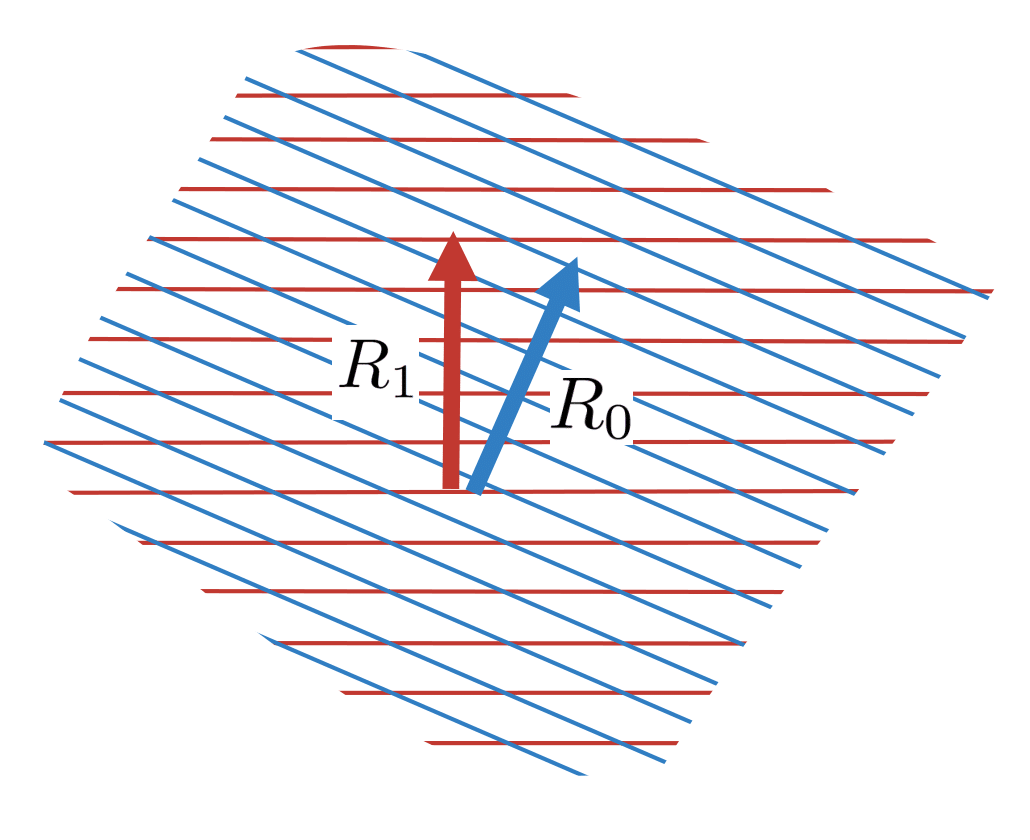

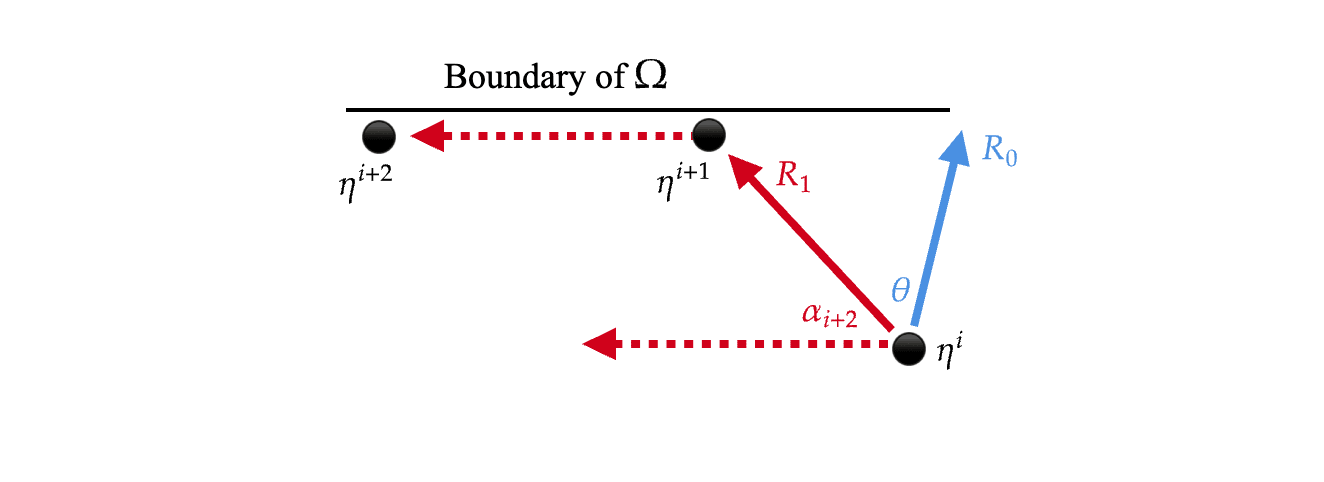

However, as we move parallel to , we will eventually hit the boundary of . When we do this, the direction that most rapidly increases will no longer be parallel to . Instead, it will be parallel to the projection of onto the boundary of that we just hit. Moreover, if we keep moving in this direction, then we might no longer be increasing the true reward . To see this, consider the following diagram:

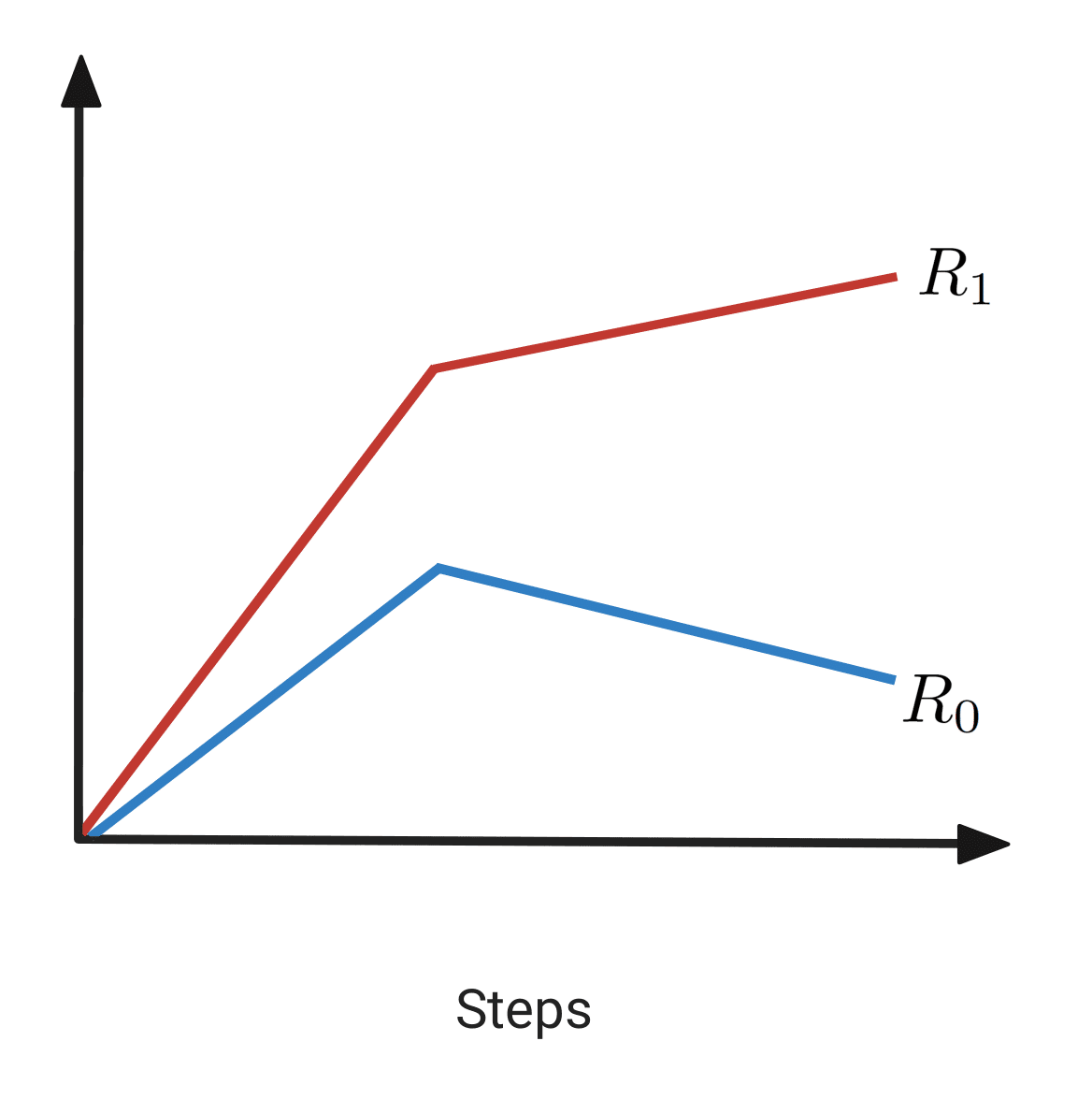

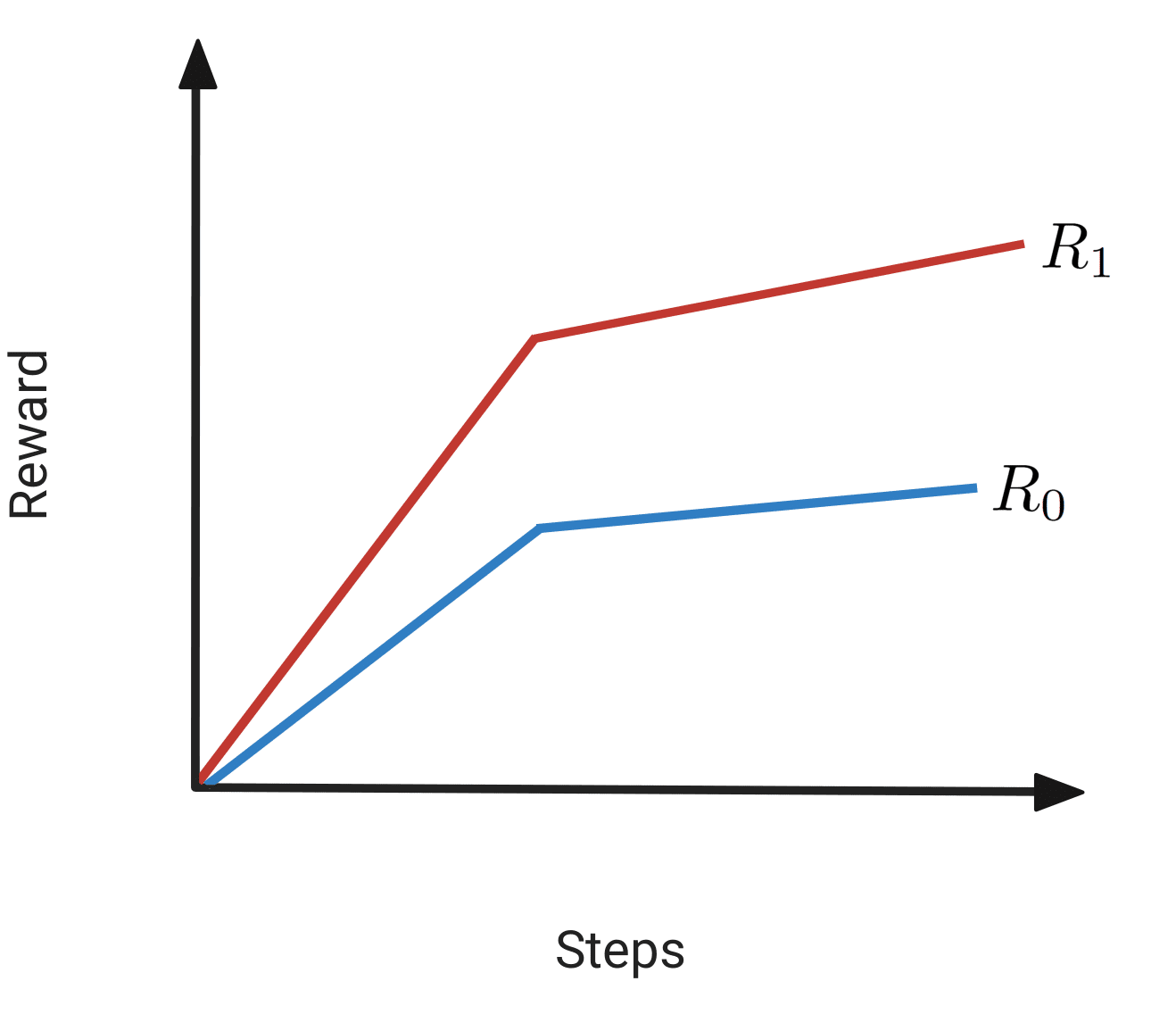

The dashed green line corresponds to the path that most rapidly increases . As we move along this path, initially increases. However, after the path hits the boundary of and changes direction, will instead start to decrease. Thus, if we were to plot and over time, we would get a plot that looks roughly like this:

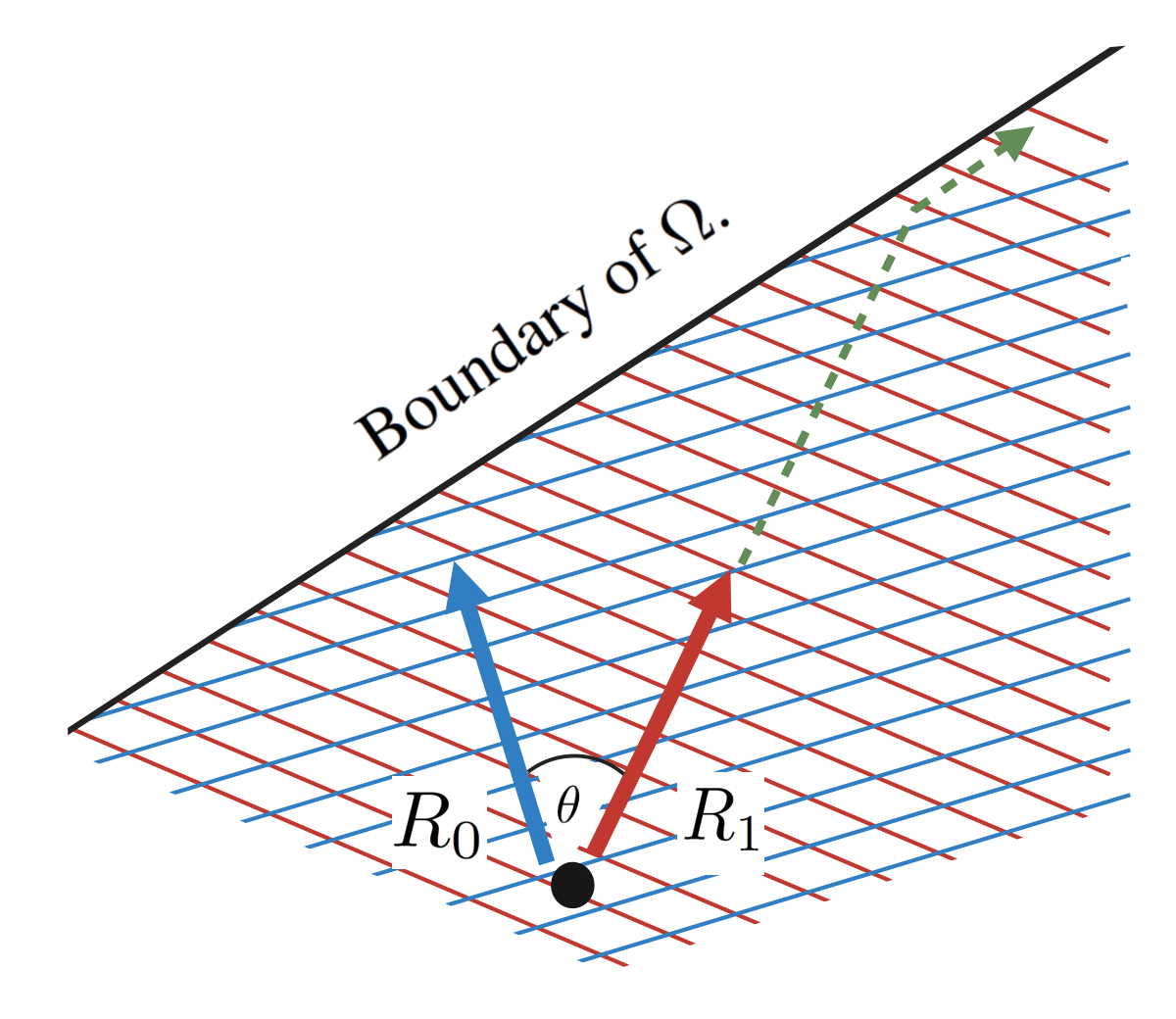

Next, it is important to note that is not guaranteed to decrease after we hit the boundary of . To see this, consider the following diagram:

The dashed green line again corresponds to the path that most rapidly increases . As we move along this path, will increase both before and after the path has hit the boundary of . If we were to plot and over time, we would get a plot that looks roughly like this:

The next thing to note is that we will not just hit the boundary of once. If we pick a random point in , and keep moving in the direction that most rapidly increases until we have found the maximal value of in , then we will hit the boundary of over and over again. Each time we hit this boundary we will change the direction that we are moving in, and each time this happens, there is a risk that we will start moving in a direction that decreases .

Goodharting corresponds to the case where we follow a path through along which initially increases, but eventually starts to decrease. As we have seen, this must be caused by the boundaries of .

We may now ask; under what conditions do these boundaries force the path of steepest ascent (of ) to move in a direction that decreases ? By inspecting the above diagrams, we can see that this depends on the angle between the normal vector of that boundary and , and the angle between and . In particular, in order for to start decreasing, it has to be the case that the angle between and is larger than the angle between and the normal vector of the boundary of . This immediately tells us that if the angle between and is small, then Goodharting will be less likely to occur.

Moreover, as the angle between and the normal vector of the boundary of becomes smaller, Goodharting should be correspondingly more likely to occur. In our paper, Proposition 3 tells us that this angle will decrease monotonically along the path of steepest ascent (of ). As such, Goodharting will get more and more likely the further we move along the path of steepest ascent. This explains why Goodharting becomes more likely when more optimisation pressure is applied.

How can we use this intuition?

Preventing Goodharting is difficult because we only have access to the proxy reward. If we only observe , we don't know whether we are in the situation on the left or the situation on the right:

However, we might detect that there is a danger of Goodharting: if we have a bound on the angle between the true and the proxy, then we can detect when there is at least one policy within a cone of around whose return goes down. This is the idea for the pessimistic early stopping algorithm we have developed. Specifically, we track the current direction of optimisation relative to the proxy reward given by , in the occupancy measure space:

If , or equivalently, , there is a possibility of Goodharting - since pessimistically, we are moving opposite to some possible true reward .

In the interior of the polytope , we know that the increase in return is given by the product of how much we moved and the magnitude of the (projected) reward vector: . In general, it has to be rescaled by the current direction of optimisation: .

Therefore, assuming that the LHS of the following inequality is non-increasing (Proposition 3 in the paper again):

we can simply stop first that satisfies it. This is the optimal (last possible) point where we still provably avoid Goodharting.

What's next?

Early-stopping can, unfortunately, lose out on quite a lot of reward, so we think this particular method can be best used in situations where it is much more important to prevent Goodharting than to get good performance in absolute terms. It is also expensive to compute the occupancy measure and the angle . Still, the algorithm can be improved in many directions: for example, we might be able to construct a training algorithm where we alternate between (1) training a model and (2) collecting more data about the reward function. In this way, we collect just enough information for training to proceed, without ever falling into Goodharting. We write about some of those possibilities in the paper but leave the details for future work.

22 comments

Comments sorted by top scores.

comment by johnswentworth · 2023-10-16T21:03:38.292Z · LW(p) · GW(p)

Very cool math (and clear post), but I think this formulation basically fails to capture the central Goodheart problem.

Relevant slogan: Goodheart is about generalization, not approximation.

A simple argument for that slogan: if we have a "true" utility , and an approximation which is always within of , then optimizing achieves a utility under within of the optimal . So optimizing an approximation of the utility function yields an outcome which is approximately-optimal under the true utility function... if the approximation holds well everywhere.

In all the standard real-world examples of Goodheart, the real problem is that the proxy is not even approximately correct once we move out of a certain regime. For instance, consider the classic case in which the British offered a reward for dead snakes (hoping to kill them off), and people responded by farming snakes. That reward did not even approximately reflect what the British wanted, once snake-farms entered the picture. Or the Soviet nail factories, rewarded for number of nails produced, which produced huge numbers of tiny useless nails - once in that regime, the reward just completely failed to approximate what the central bureau wanted. These are failures of generalization, not approximation.

So my concern with the OP is that it relies on an approximation assumption:

if we have a bound on the angle between the true and the proxy, then we can detect when there is at least one policy within a cone of around whose return goes down

... and that's not really how Goodheart works. Goodheart isn't about cases where the proxy approximates the "true" goal, it's about cases where the approximation just completely breaks down in some regimes.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-10-16T21:50:50.372Z · LW(p) · GW(p)

Goodheart isn't about cases where the proxy approximates the "true" goal, it's about cases where the approximation just completely breaks down in some regimes.

I agree with your general sentiment but how does that translate formally to the math framework of the paper? Or in other words, where does their formulation diverge from reality?

Perhaps it's in how they define the occupancy function over explicit (state, action) pairs - Seems like the occupancy measure doesn't actually weight by state probability correctly? which seems odd - so you could have 2 reward functions that seem arbitrarily aligned (dot product close to 1), but only because they agree on the vast volume of highly improbable states, and not the tiny manifold of likely states.

Moreover in reality the state space is essentially infinite and everything must operate in a highly compressed model space for generalization regardless, so even if the 'true' unknown utility function can be defined over the (potentially infinite) state space, any practical reward proxy can not - it is a function of some limited low dimensional encoding of the state space, such that the mapping from that to the full state space is highly nonlinear and complex. We can't realistically expand that function to the true full state space, nor expect the linearity to translate into the compressed model space. Tiny changes in the model space can translate to arbitrary jumps in the full state space, updates to the model compression function (ontology shifts) can shift everything around, etc.

Replies from: OliverHayman↑ comment by OliverHayman · 2023-10-16T22:36:59.282Z · LW(p) · GW(p)

So here's a thing that I think John is pointing at, with a bit more math?:

The diversion is in the distance function.

- In the paper, we define the distance between rewards as the angle between reward vectors.

- So what we sort of do is look at the "dot product", i.E., look at for true and proxy rewards and with states/actions sampled according to a uniform distribution. I give justification as to why this is a natural way to define distance in a separate comment.

But the issue here is that this isn't the distribution of the actions/states we might see in practice. might be very high if states/actions are instead weighted by drawing them from a distribution induced from a certain policy (e.g., the policy of "killing lots of snakes without doing anything sneaky to game the reward" in the examples, I think?). But then as people optimize, the policy changes and this number goes down. A uniform distribution is actually likely quite far from any state/action distributions we would see in practice.

In other words the way we formally define reward distance here will often not match how "close" two reward functions seem, and lots of cases of "Goodharting" are cases where two reward functions just seem close on a particular state/action distribution but aren't close according to our distance metric.

This makes the results of the paper primarily useful for working towards training regimes where we optimize the proxy and can approximate distance, which is described in Appendix F of the paper. This is because as we optimize the proxy it will start to generalize, and then problems with over-optimization as described in the paper are going to start mattering a lot more.

↑ comment by OliverHayman · 2023-10-16T22:54:04.803Z · LW(p) · GW(p)

So more concretely, this is work towards some sort of RLHF training regime that "provably" avoids Goodharting. The main issue is that a lot of the numbers we're using are quite hard to approximate.

↑ comment by jacob_cannell · 2023-10-16T23:18:24.664Z · LW(p) · GW(p)

Thanks that clarifies somewhat, but guess I'll need to read the paper - still a bit confused about the justification for a uniform distribution.

with states/actions sampled according to a uniform distribution. I give justification as to why this is a very natural way to define distance in a separate comment.

A uniform distribution actually seems like a very weird choice here.

Defining utility functions over full world states seems fine (even if not practical at larger scale), and defining alignment as dot products over full trajectory/state space utility functions also seems fine, but only if using true expected utility (ie the actual bayesian posterior distribution over states). That of course can get arbitrarily complex.

But it also seems necessary in that for one to say that two utility functions are truly 'close' seems like that must cash out to closeness of (perhaps normalized) expected utilities given the true distribution of future trajectories.

Do you see a relation between the early stopping criteria and regularization/generalization of the proxy reward?

Replies from: OliverHayman↑ comment by OliverHayman · 2023-10-16T23:31:03.322Z · LW(p) · GW(p)

The reason we're using a uniform distribution is that it follows naturally from the math, but maybe an intuitive explanation is the following: the reason this is weird is that most realistic distributions are only going to sample from a small number of states/actions. Whereas the uniform distribution more or less encodes that the reward functions are similar across most states/actions. So it's encoding something about generalization.

comment by TurnTrout · 2023-10-16T23:42:54.485Z · LW(p) · GW(p)

This is a really cool insight, at least from an MDP theory point of view. During my PhD [LW · GW], I proved a bunch of results on the state visit distribution perspective and used those a few of those results to prove the power-seeking theorems [LW · GW]. MDP theorists have, IMO, massively overlooked this perspective, and I think it's pretty silly.

I haven't read the paper yet, but doubt this is the full picture on Goodhart / the problems we actually care about (like getting good policies out of RL, which do what we want).

Under the geometric picture, goodharting occurs as the general case because, selecting reward functions uniformly randomly (an extremely dubious assumption!), pairs of high-dimensional vectors are generically nearly orthogonal to each other (ie have high angle).

Even practically, it's super hard to get regret bounds for following the optimal policy (an extremely dubious assumption!) of an approximate reward function; the best bounds are, like, , which is horrible.

But since you aren't trying to address the optimal case, maybe there's hope (although I personally don't think that reward Goodharting is a key problem, rather than a facet of a deeper issue).

I want to note that LLM-RL is probably extremely dissimilar from doing direct gradient ascent in the convex hull of state visitation distributions / value iteration. This means -- whatever this work's other contributions -- we can't directly conclude that this causes training-distribution Goodharting in the settings we care about.

I think this is a bit misleading; the angle is larger than visually implied, since the vectors aren't actually normal to the level sets.

Also, couldn't I tell a story where you first move parallel to the projection of onto the boundary for a while, and then move parallel to ? Maybe the point is "eventually (under this toy model of how DRL works) you hit the boundary, and have thereafter spent your ability to increase both of the returns at the same time. (EDIT maybe this is ruled out by your theoretical results?)

Replies from: TurnTrout↑ comment by TurnTrout · 2023-10-17T18:02:57.525Z · LW(p) · GW(p)

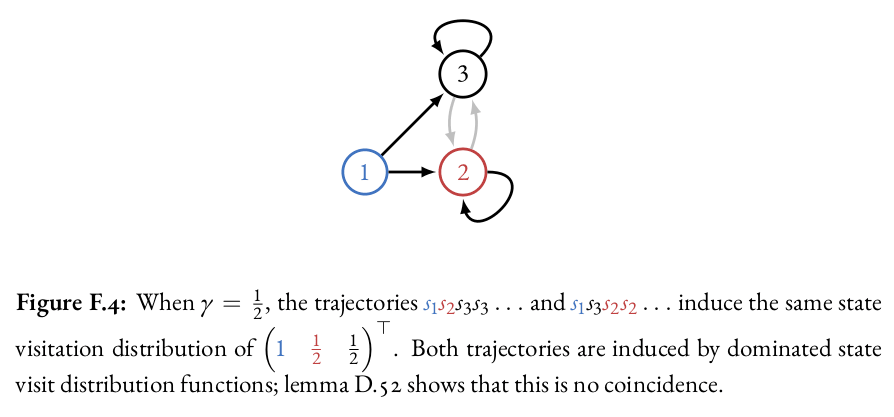

We can recover a policy from its occupancy measure (up to the states that were never visited).

Actually, this claim in the original post is false for state-based occupancy measures, but it might be true for state-action measures. From p163 of On Avoiding Power-Seeking by Artificial Intelligence:

(Edited to qualify the counterexample as only applying to the state-based case)

Replies from: jacek-karwowski↑ comment by jacek (jacek-karwowski) · 2023-10-17T18:12:51.437Z · LW(p) · GW(p)

Thanks for the comment! Note that we use state-action visitation distribution, so we consider trajectories that contain actions as well. This makes it possible to invert (as long as all states are visited). Using only states trajectories, it would indeed be impossible to recover the policy.

Replies from: TurnTroutcomment by Oliver Sourbut · 2023-10-16T07:55:02.746Z · LW(p) · GW(p)

As I've previously said in person, this is one of the best technical articulations of Goodhart I've encountered. Really glad you made it into a paper!

It's worth mentioning that I think the 'angle between reward functions' in the occupancy space should relate somewhat neatly to the 'distances between reward functions' thread of research ('reward function theory'?) including stuff Joar has worked on, and my upcoming paper on delegation games.

Replies from: Logical_Lunatic↑ comment by Joar Skalse (Logical_Lunatic) · 2023-10-16T09:03:32.890Z · LW(p) · GW(p)

including stuff Joar has worked on

That is right! See this paper.

Replies from: Oliver Sourbut↑ comment by Oliver Sourbut · 2023-10-17T10:14:11.091Z · LW(p) · GW(p)

Thanks, amended the link in my comment to point to this updated version

comment by MikkW (mikkel-wilson) · 2023-10-18T19:37:29.058Z · LW(p) · GW(p)

I strong-downvoted this post because sentences like

use these insights to derive two methods for provably avoiding Goodharting

Tend to be misleading, pretending that mathematical precision describes the complex and chaotic nature of the real world, where it shouldn't be assumed to (see John Wentworth's comment), and in this case it could potentially lead to very bad consequences if misunderstood.

comment by Multicore (KaynanK) · 2023-10-16T22:59:01.161Z · LW(p) · GW(p)

My guess is that early stopping is going to tend to stop so early as to be useless.

For example, imagine the agent is playing Mario and its proxy objective is "+1 point for every unit Mario goes right, -1 point for every unit Mario goes left".

(Mario screenshot that I can't directly embed in a comment)

If I understand correctly, to avoid Goodharting it has to consider every possible reward function that is improved by the first few bits of optimization pressure on the proxy objective.

This probably includes things like "+1 point if Mario falls in a pit". Optimizing the policy towards going right will initially also make Mario more likely to go in a pit than if the agent was just mashing buttons randomly (in which case it would stay around the same spot until the timer ran out and never end up in a pit), so the angle between the gradients is likely low at first.

However, after a certain point more optimization pressure on going right will make Mario jump over the pit instead, reducing reward under the pit reward function.

If the agent wants to avoid any possibility of Goodharting, it has to stop optimizing before even clearing the first obstacle in the game.

(I may be misunderstanding some things about how the math works.)

Replies from: Oliver Sourbut↑ comment by Oliver Sourbut · 2023-10-17T10:17:32.345Z · LW(p) · GW(p)

I think you're (at least partly) right in spirit. The key extra nuance is that by constraining the 'angle' between the reward functions[1], you can rule out very opposed utilities like the one which rewards falling in a pit. So this is not true

to avoid Goodharting it has to consider every possible reward function that is improved by the first few bits of optimization pressure on the proxy objective.

In particular I think you're imagining gradients in policy space (indeed a practical consideration). But this paper is considering gradients in occupancy space (which in practice is baking in some assumptions about foresight etc.).

How? Yes this is a pretty big question (there are some theoretical and empirical ideas but I don't rate any of them yet, personally). ↩︎

comment by OliverHayman · 2023-10-16T21:59:20.990Z · LW(p) · GW(p)

An important part of the paper that I think is easily missed, and useful for people doing work on distances between reward vectors:

There is some existing literature on defining distances between reward functions (e.g., see Gleave et. al.). However, all proposed distances are only pseudometrics.

A bit about distance functions:

Commonly, two reward functions are defined to be the same (e.g., see Skalse et. al.) if they're equivalent up to scaling the reward function and introducing potential shaping. By the latter, I mean that two reward functions are the same if one is and the other is of the form for some function and discount . This is because in Ng. et. al. it is shown these make up all reward vectors that we know give the same optimal policy as the original reward across all environments (with the same state/action space).

This leads us to the following important claim:

Projecting reward vectors onto and taking the angle between them is a perfect distance metric according to these desiderata.

Why: It can easily be shown it's a metric, provided it's well-defined with the equivalence relation. It can also be shown that the locus of reward functions that give the same projection as onto is exactly the set of potential-shaped reward functions. Then the claim pretty clearly follows.

In particular, this seems like the most natural "true" reward metric, and I'm not sure any other "true" metrics have even been proposed before this.

↑ comment by Oliver Sourbut · 2023-10-17T10:21:46.817Z · LW(p) · GW(p)

I remarked on this claim while the paper was in review when I was asked to give some feedback on the paper [it's still under official review I think]. Some of the earlier proposed metrics are in fact full metrics in the relevant sense, provided they have full coverage on the reward space. e.g. STARC distances are metrics on the quotient space of reward functions by the equivalences, aren't they, if the distance metric has full coverage? (Which is the same as what this project-then-take-angle metric is.) In particular, I think the angle relates 1-1 with the L2 distance on a suitably canonicalised unit ball from STARC/EPIC.

↑ comment by OliverHayman · 2023-10-17T12:20:06.914Z · LW(p) · GW(p)

You're right. For some reason, I thought EPIC was a pseudometric on the quotient space and not on the full reward space.

I think this makes the thing I'm saying much less useful.

↑ comment by Oliver Sourbut · 2023-10-17T21:03:02.233Z · LW(p) · GW(p)

I still think this is an important point, and I've been thinking there should be a bloggy write-up of the maths in this area on LW/AF! Maybe you (or I, or Jacek, or Charlie, or Joar, or whoever...) could make that happen.

The original EPIC definition, and the STARC defs, can be satisfied while yielding only a pseudometric on the quotient space. But they also include many full (quotient) metrics, and the (kinda default?) L2 choice (assuming full-support weighting) yields a full metric.

comment by Jobst Heitzig · 2023-10-16T13:41:33.833Z · LW(p) · GW(p)

Excellent! I have three questions

-

How would we get to a certain upper bound on ?

-

As collisions with the boundary happen exactly when one action's probability hits zero, it seems the resulting policies are quite large-support, hence quite probabilistic, which might be a problem in itself, making the agent unpredictable. What is your thinking about this?

-

Related to 2., it seems that while your algorithm ensures that expected true return cannot decrease, it might still lead to quite low true returns in individual runs. So do you agree that this type of algorithm is rather a safety ingredient amongst other ingredients, rather that meant to be a sufficient solution to satety?

comment by scrdest · 2023-10-16T21:24:36.175Z · LW(p) · GW(p)

This seems to me like a formalisation of Scott Alexander's The Tails Coming Apart As Metaphor For Life post.

Given a function and its approximation, following the approximate gradient in Mediocristan is good enough, but the extremes are highly dissimilar.

I wonder what impact complex reward functions have. If you have a pair of approximate rewards, added together, could they pull the system closer to the real target by cancelling each other out?