ACI#6: A Non-Dualistic ACI Model

post by Akira Pyinya · 2023-11-09T23:01:30.976Z

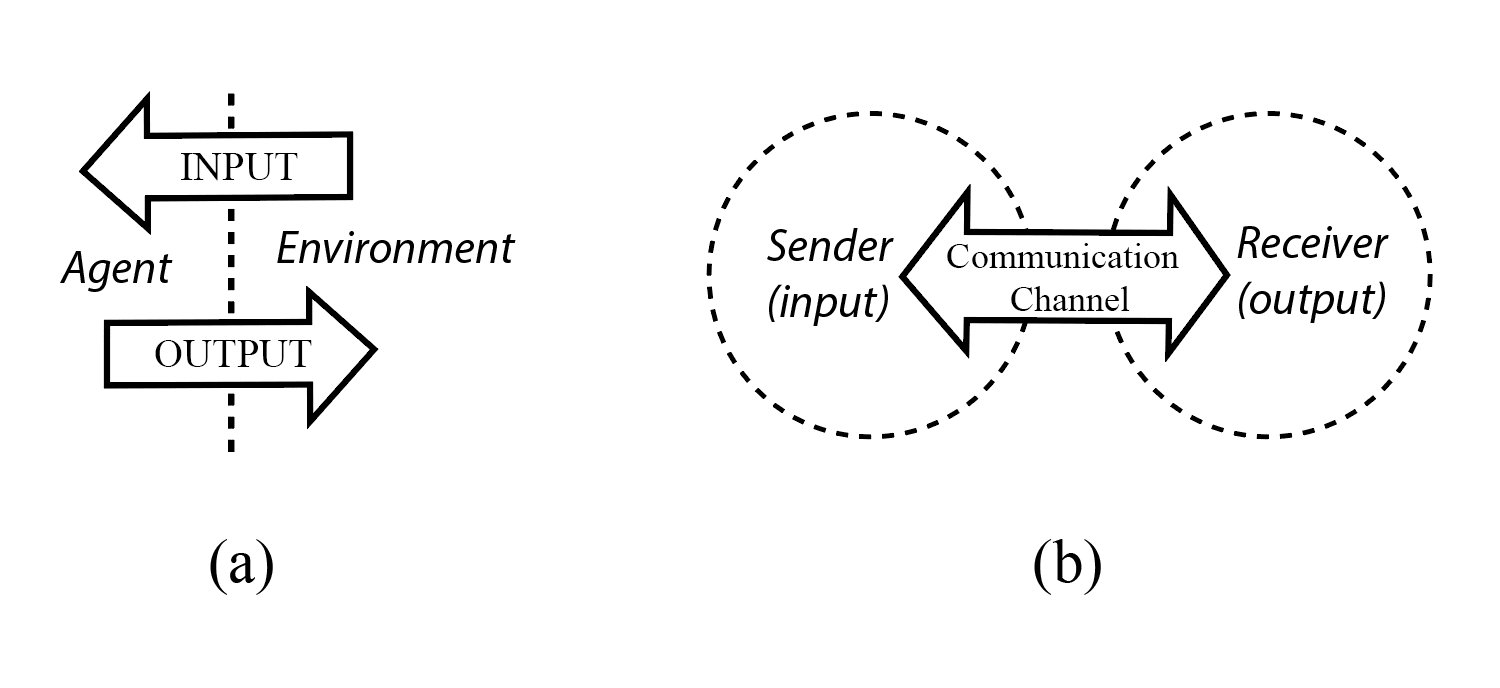

Most traditional AI models are dualistic. As Demski & Garrabrant have pointed out, these models assume that an agent is an object that persists over time and has well-defined input/output channels, as if it were playing a video game.

In the real world, however, agents are embedded in the environment, and there's no well-defined boundary between the agent and the environment. That's why a non-dualistic model is needed to depict how the boundary and input/output channels emerge from more fundamental notions.

For example, in Scott Garrabrant's Cartesian Frames, input and output can be derived from "an agent's ability to freely choose" among "possible ways an agent can be".

However, choice is still one of the key concepts of Cartesian Frames, and from a non-dualistic perspective, "it's not clear what it even means for an embedded agent to choose an option", since an embedded agent is "the universe poking itself". Formalizing the idea of choice in a non-dualistic model is as difficult as formalizing the idea of free will.

To avoid relying on the notion of choice, we have proposed the General Algorithmic Common Intelligence (gACI) model, which describes embedded agents solely from a third-person perspective and measures the actions of agents using mutual information in an event-centric framework.

The gACI model does not attempt to answer the question "What should an agent do?". Instead, it focuses on describing the emergence of the agent-environment boundary, and answering the question "Why does an individual feel like it's choosing?"

In the language of decision theory, gACI belongs to descriptive decision theory rather than normative decision theory.

Communication Channel and Mutual Information

In dualistic intelligence models, an agent receives input information from the environment and manipulates the environment through output actions. But real-world agents are embedded within the environment, so it's not easy to confine information exchange to a clear input/output channel.

In the gACI model, on the other hand, the input/output channel is a communication channel, in which the information transfer between a sender and a receiver is measured by mutual information.

Figure 1: From the dualistic input/output model to the mutual information model.

We can define the mutual information between the states of any two objects without specifying how the information is transmitted, who is the sender and who is the receiver, what the transmission medium is, or whether the objects are directly or indirectly connected.

These two objects can be any parts of the world, such as agents, the environment, or any parts of agents or the environment, whose boundaries can be drawn anywhere if necessary. They can even overlap.

Having mutual information does not always mean knowing or understanding, but it provides an upper bound for knowing or understanding.
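A minimal sketch of the point above, in Python: mutual information is computed from the joint distribution of two objects' states alone, with no notion of sender, receiver, or medium, and it is symmetric in its two arguments. The function name `mutual_information` and the example tables are illustrative assumptions, not part of the gACI model itself.

```python
from math import log2

def mutual_information(joint):
    """Mutual information I(X;Y) in bits from a joint probability table.

    joint[i][j] is P(X = i, Y = j); the entries must sum to 1.
    """
    px = [sum(row) for row in joint]          # marginal of X
    py = [sum(col) for col in zip(*joint)]    # marginal of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # zero-probability cells contribute nothing
                mi += pxy * log2(pxy / (px[i] * py[j]))
    return mi

# Two objects whose states always agree share one full bit.
copied = [[0.5, 0.0],
          [0.0, 0.5]]
# Two objects whose states are statistically independent share nothing.
independent = [[0.25, 0.25],
               [0.25, 0.25]]
# A noisy correlation lies in between.
noisy = [[0.4, 0.1],
         [0.1, 0.4]]

print(mutual_information(copied))           # 1.0
print(mutual_information(independent))      # 0.0
print(round(mutual_information(noisy), 3))  # 0.278
```

Transposing a table (swapping which object is called "sender") leaves the value unchanged, which is why the measure needs no direction of transmission.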

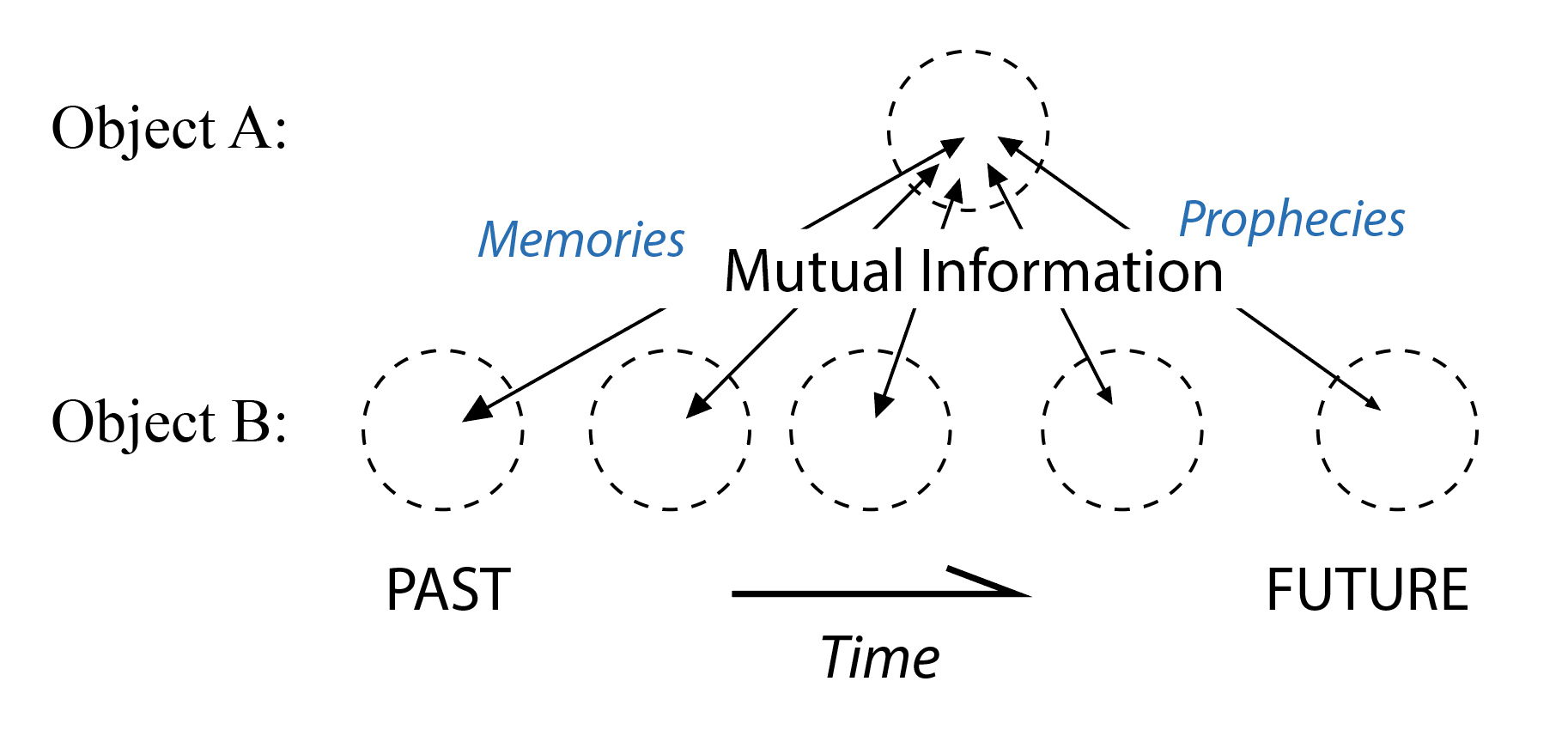

With the mutual information between two objects, we can define memories and prophecies.

Memory and Prophecy

Memory is information about the past, or a communication channel that transmits information about the past into the future (Gershman 2021). If A is the receiver and B is the sender, we can define A's memory of B as the mutual information between the present state of A, $A_t$, and a past state of B, $B_{t'}$:

$$\mathrm{Memory}(A, B) = I(A_t;\, B_{t'}), \quad t' < t$$

A can have memories of more than one B, or of different moments of B. It can also have memories of itself; in other words, A can be equal to B.

Prophecy is the mutual information between the present state of A, $A_t$, and a future state of B, $B_{t'}$:

$$\mathrm{Prophecy}(A, B) = I(A_t;\, B_{t'}), \quad t' > t$$

Obviously, the prophecy will not be confirmed until the future state of B is known.
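The two definitions can be illustrated with a toy model. The sketch below assumes (hypothetically) that B is a two-state Markov chain that starts uniformly and keeps its state with probability `STAY` at each step, and that A's present state is an exact copy of B's state one step earlier. A then has one full bit of memory of B, and a smaller, shrinking amount of prophecy about B's later states. The helper names are made up for this example.

```python
from math import log2

def binary_entropy(q):
    """Entropy in bits of a binary variable that takes one value with probability q."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * log2(q) - (1 - q) * log2(1 - q)

def agreement_after(steps, stay):
    """P(B_{s+steps} == B_s) for a two-state Markov chain that keeps its state
    with probability `stay` at each step."""
    return (1 + (2 * stay - 1) ** steps) / 2

def shared_bits(agreement):
    """I(X;Y) in bits for two uniformly distributed bits that agree with
    probability `agreement` (a binary symmetric channel)."""
    return 1 - binary_entropy(agreement)

STAY = 0.9  # hypothetical per-step persistence of object B

# Hypothetical setup: at the present time t, A holds an exact copy of B_{t-1}.
memory = shared_bits(agreement_after(0, STAY))  # I(A_t; B_{t-1})
# A_t and B_{t+k} are k + 1 steps of B's dynamics apart.
prophecy = {k: shared_bits(agreement_after(k + 1, STAY)) for k in (1, 2, 3)}  # I(A_t; B_{t+k})

print(f"memory of B_(t-1): {memory:.3f} bits")
for k, bits in prophecy.items():
    print(f"prophecy about B_(t+{k}): {bits:.3f} bits")
```

Because A's only link to B's future runs through B's past, the data processing inequality guarantees that in this setup the prophecy can never exceed the memory.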

A prophecy can be either a prediction about the future, or an action that controls/affects the future. In the language of the Active Inference model, it's either "change my model" or "change the world".

It's not necessary to prefer one interpretation over another, because different interpretations can be derived in different situations, which will be explained in the later chapters.

Figure 2: Object A can have both memories and prophecies about object B.

Collect Memories for Prophecies

We won't be surprised to find that most, if not all, objects that have prophecies about object A also have memories about it, although the reverse is not always true. We can speculate that information about the future comes from information about the past.

For example, if you know the position and velocity of the moon in the past, you can have a lot of information about its position and velocity in the future.

Not all information is created equal. Using the moon as an example, a record of its position and phase contains a lot of information about its future, while the pattern of foam in your coffee cup contains little information about the moon's future.

(Although computing power plays an important role in turning the information in memories into predictions or control, we only consider the upper bound of prophecy, as if we had infinite computing power.)

Objects with different memories would have different prophecies about the same object. For example, an astronomer and an astrologer would have different information about the future of the planet Mars because of their different knowledge of the universe.
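The moon and coffee-foam examples can be sketched the same way. Under the hypothetical assumptions below, B is a slowly changing two-state object, and three observers hold different records of B's present state: an exact record, a noisy one, and a record of something unrelated to B (standing in for the coffee foam). Following the parenthetical above, the sketch computes the information-theoretic upper bound directly rather than modeling any computation. All names and numbers are illustrative.

```python
from math import log2

def binary_entropy(q):
    """Entropy in bits of a binary variable that takes one value with probability q."""
    if q in (0.0, 1.0):
        return 0.0
    return -q * log2(q) - (1 - q) * log2(1 - q)

def prophecy_bits(record_accuracy, stay, steps):
    """I(record; B_{t+steps}) in bits.

    Assumes: B is a two-state Markov chain (uniform start) that keeps its
    state with probability `stay` per step; the observer's record equals B_t
    with probability `record_accuracy` and depends on B's future only via B_t.
    """
    drift = (1 + (2 * stay - 1) ** steps) / 2  # P(B_{t+steps} == B_t)
    # Compose the two binary symmetric links: record -> B_t -> B_{t+steps}.
    agreement = record_accuracy * drift + (1 - record_accuracy) * (1 - drift)
    return 1 - binary_entropy(agreement)

STAY, STEPS = 0.99, 10  # hypothetical: a slowly changing, "moon-like" object
records = {
    "exact record of B": 1.0,
    "noisy record of B": 0.8,
    "record of an unrelated object": 0.5,  # independent of B, like the coffee foam
}
results = {name: prophecy_bits(acc, STAY, STEPS) for name, acc in records.items()}
for name, bits in results.items():
    print(f"{name}: {bits:.3f} bits about B_(t+{STEPS})")
```

The same future object yields different prophecies purely because the observers hold different memories, which is the astronomer/astrologer contrast in miniature.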

An intelligent individual needs prophecy to survive and thrive: to maintain its homeostasis and achieve its goals, it needs sufficient information about the future, especially about its own future. To obtain prophecies about itself, it should collect memories about itself and the world that are useful for predicting or controlling its own future.

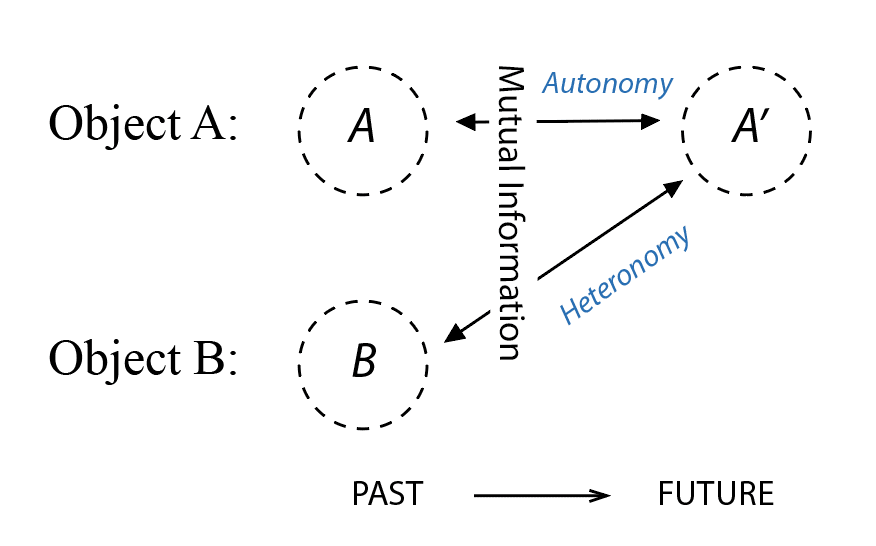

Autonomy and Heteronomy

We can measure the degree of autonomy of an object by how much prophecy it has about its own future, which indicates its self-governance and independence.

Similarly, we can measure the degree of heteronomy of object A from object B by how much prophecy B has about A, which indicates A's degree of dependence on B.
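A toy model can make the two measures concrete. The sketch below is a hypothetical setup, not part of the original model: A is a single bit that keeps its own state with probability `self_weight` and otherwise copies the state of an outside bit B. Autonomy is taken as $I(A_t; A_{t+1})$ and heteronomy from B as $I(B_t; A_{t+1})$, following the definitions above with a one-step horizon.

```python
from math import log2

def mutual_information(joint):
    """I(X;Y) in bits from a joint table, joint[x][y] = P(X = x, Y = y)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return sum(
        pxy * log2(pxy / (px[x] * py[y]))
        for x, row in enumerate(joint)
        for y, pxy in enumerate(row)
        if pxy > 0
    )

def autonomy_and_heteronomy(self_weight):
    """Hypothetical toy model with two bits, A and B.

    A_t and B_t are independent fair bits. At the next step, A keeps its own
    state with probability `self_weight` and otherwise copies B_t.
    Returns (autonomy, heteronomy) = (I(A_t; A_{t+1}), I(B_t; A_{t+1})) in bits.
    """
    self_joint = [[0.0, 0.0], [0.0, 0.0]]   # P(A_t = a, A_{t+1} = a_next)
    other_joint = [[0.0, 0.0], [0.0, 0.0]]  # P(B_t = b, A_{t+1} = a_next)
    for a in (0, 1):
        for b in (0, 1):
            p_now = 0.25  # P(A_t = a, B_t = b)
            for a_next, p_step in ((a, self_weight), (b, 1 - self_weight)):
                self_joint[a][a_next] += p_now * p_step
                other_joint[b][a_next] += p_now * p_step
    return mutual_information(self_joint), mutual_information(other_joint)

for w in (0.9, 0.5, 0.1):
    autonomy, heteronomy = autonomy_and_heteronomy(w)
    print(f"self_weight={w}: autonomy {autonomy:.3f} bits, heteronomy from B {heteronomy:.3f} bits")
```

A `self_weight` near 0 resembles the neuron example in this section, whose activity depends mostly on other cells. Setting `self_weight=1.0` also gives a full bit of autonomy to an object that never changes at all, so this one-step measure alone does not separate an agent from a stable object; the comment thread at the end of the post raises the same question.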

An object that has considerable autonomy can be considered an individual or an agent. The permanent loss of autonomy is the death of an individual. Death is often the result of the permanent loss of essential memories that can induce prophecies about itself.

Focusing on different types of information requires different standards for autonomy and death. For example, a human neuron has some autonomy over its metabolism, but the timing and strength of its action potentials depend mostly on other neurons. We can think of it as an individual, but when studying intelligence it is better to think of it as part of an individual, because the death of a single neuron has little effect on a person's autonomy, whereas the death of the person ends it.

Figure 3: Autonomy and Heteronomy

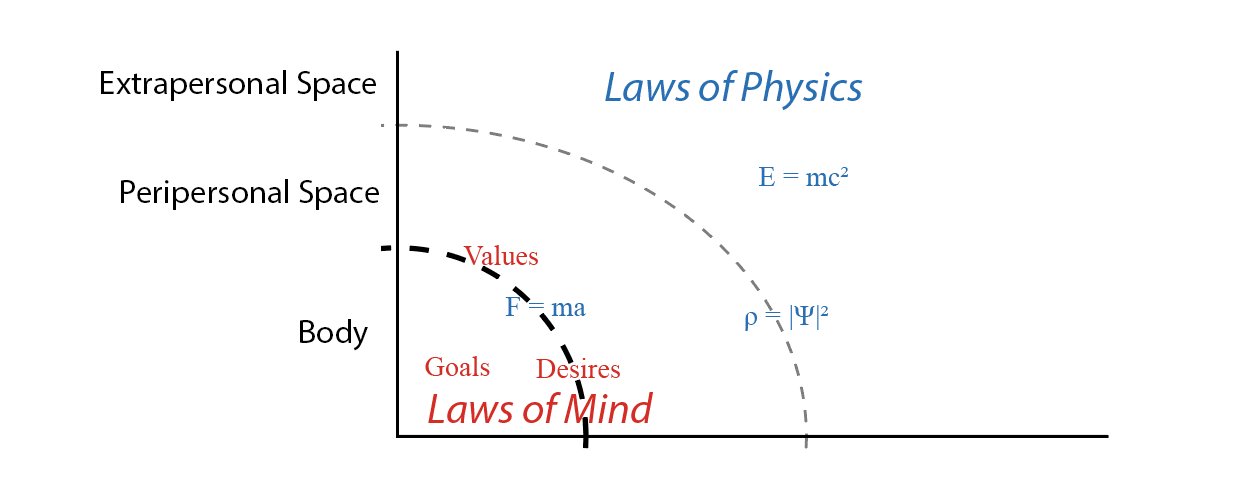

The Laws of the Mind

As an individual accumulates more and more memories and prophecies, it can discover general rules about the world, which are relationships between the past and the future.

During this rule-learning process, the boundary between the self and the outside world emerges. The individual will inevitably find that some parts of the world follow different rules than other parts, and that these special parts are spatially concentrated around it. We can call this special part the body.

For example, an individual may notice that its body temperature has never been very high, say above 1000 K, and predict that, as long as its future is under its control, its body will never experience a temperature above 1000 K. Since other objects can reach 1000 K, it will conclude that some special rules must prevent its body from getting too hot. We call these rules goals, motivations, emotions, and so on.

The intuitive conclusion is that your body follows some rules that are different from the rules that other objects follow. This is what people call dualism: the body follows the laws of the mind, which use concepts like goals, emotions, and logic, while the outside world doesn't.

However, the exact boundary between the body and the environment is not very clear. The space surrounding the body may partly follow the laws of the mind and can be called peripersonal space, a term borrowed from psychology.

(Closer examination will reveal that the body and peripersonal space also follow the same scientific laws as the outside world; the laws of the mind are additional laws that only bodies follow.)

Figure 4: Everything in the universe follows the laws of physics, but additionally, one's body and peripersonal space follow the laws of the mind.

Dualism and Survival Bias

Why do our bodies seem to follow the laws of the mind, if they are made of the same atoms as the outside world?

Consider a classic example of survival bias. During World War II, the statistician Abraham Wald examined the bullet holes in returning aircraft and recommended adding armor to the areas that showed the least damage, because most aircraft hit in those areas could not return safely to base.

This survival bias could be overcome by observing the aircraft on the battlefield instead of at the base. There we would find that the bullet holes are evenly distributed over the aircraft, since the survival of the observer is independent of the location of the bullet holes. In general, a survival bias is introduced when the observer's survival is not independent of the observed event.
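Wald's argument can be reproduced with a few lines of exact arithmetic. The return probabilities below are invented for illustration (they are not Wald's data), and each aircraft is assumed to take exactly one hit in a uniformly random section. Observing on the battlefield gives an even distribution of holes; observing at the base over-represents the sections where a hit is survivable.

```python
from fractions import Fraction

# Hypothetical numbers, for illustration only: the chance that an aircraft
# hit in each section still makes it back to base.
RETURN_PROB = {
    "fuselage": Fraction(9, 10),
    "wings": Fraction(9, 10),
    "tail": Fraction(8, 10),
    "engine": Fraction(3, 10),
}

def hole_distribution(observe_at_base):
    """Share of observed bullet holes per section, assuming each aircraft takes
    one hit in a uniformly random section."""
    p_hit = Fraction(1, len(RETURN_PROB))
    weights = {}
    for section, p_return in RETURN_PROB.items():
        # At the base we only see aircraft that survived their hit.
        weights[section] = p_hit * (p_return if observe_at_base else 1)
    total = sum(weights.values())
    return {section: w / total for section, w in weights.items()}

battlefield = hole_distribution(observe_at_base=False)
base = hole_distribution(observe_at_base=True)
for section in RETURN_PROB:
    print(f"{section:>8}: battlefield {float(battlefield[section]):.2f}, "
          f"base {float(base[section]):.2f}")
```

The engine share drops from 1/4 on the battlefield to 3/29 at the base, so the least-damaged area in the base sample is exactly the one that most needs armor.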

We can speculate that if an event is in principle dependent on the observer's own survival, there will be a survival bias that can't be overcome. For example, one's own body temperature is not independent of one's own survival, but the body temperature of others can be.

Unlike the pattern of bullet holes in returning aircraft, this inherent survival bias, including numerous experiences of how to survive, can accumulate in the observer's memory, like the added armor in the critical areas of an aircraft. We call the memories of accumulated survival bias the inward memory, and the memories of the external world, whose survival bias can be overcome, the outward memory.

The laws of the mind, such as goal-directed mechanisms, can be derived from the inward memory. The observer may find that (almost) everything in the outside world has a cause, but its own goal-driven survival mechanism, such as an aversion to hot temperature, or enhanced armor, has no cause other than the rule "the survival of itself depends on the survival of itself", or the existence of itself. Then the observer comes to a conclusion: I have a goal, I have made a choice.
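The inward/outward distinction can be sketched as a filtering effect. In the hypothetical simulation below, many would-be observers each record their own temperature and the temperatures of other objects; any observer whose own temperature ever exceeds 1000 K dies and leaves no memories. Surviving observers' inward memory never contains a reading above the threshold, while their outward memory does, because their survival does not depend on other objects' temperatures. The temperature ranges, counts, and the function name `collect_memories` are assumptions made for this example.

```python
import random

LETHAL_K = 1000.0  # the 1000 K threshold from the text; other numbers are hypothetical

def collect_memories(candidates=1000, steps=20, seed=0):
    """Simulate would-be observers and return the pooled memories of survivors.

    Each candidate records `steps` readings of its own body temperature and
    `steps` readings of other objects' temperatures (in kelvin). A candidate
    whose own temperature ever exceeds LETHAL_K dies and leaves no memories.
    """
    rng = random.Random(seed)
    inward, outward = [], []
    for _ in range(candidates):
        own = [rng.uniform(200.0, 1050.0) for _ in range(steps)]
        others = [rng.uniform(200.0, 2000.0) for _ in range(steps)]
        if max(own) > LETHAL_K:
            continue  # survival depends on own temperature: this history is never remembered
        inward.extend(own)      # memories shaped by survival bias
        outward.extend(others)  # survival does not depend on these readings
    return inward, outward

inward, outward = collect_memories()
print(f"hottest remembered own temperature:   {max(inward):.0f} K")
print(f"hottest remembered other temperature: {max(outward):.0f} K")
```

No amount of additional inward data moves its maximum above the threshold: the bias is built into which memories exist at all, which is the sense in which it cannot be overcome.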

2 comments

comment by Alvin Ånestrand (alvin-anestrand) · 2024-02-20T09:38:19.884Z

Interesting!

I thought of a couple of things and was wondering whether you have considered them.

It seems to me that when examining mutual information between two objects, there might be a lot of mutual information that an agent cannot use. For example, there is a lot of mutual information between my present self and me in 10 minutes, but most of it is information about myself that I am not aware of and cannot use for decision making.

Also, if you examine an object that is fairly constant, would you not get high mutual information for the object at different times, even though it is not very agentic? Can you differentiate autonomy and a stable object?

reply by Akira Pyinya · 2024-03-22T19:21:53.661Z

Thank you for your reply!

"The self in 10 minutes" is a good example of the difference between ACI and the traditional rational intelligence model. In the rational model, input information is sent to an atom-like agent, where decisions are made based on the input.

But ACI holds that this is not how real-world agents work. An agent is a complex system made up of many different parts and levels: the heart receives mechanical, chemical, and electrical information from its past self and keeps beating, but at different rates for outside reasons; a cell keeps running its metabolic and functional processes, which are determined by its past state and affected by its neighbors and by chemicals in the blood; finally, the brain outputs neural signals based on its past state and new sensory information. In other words, the brain has mutual information with its past self, the body, and the outer world, but that's only a small part of the mutual information between my present self and me in 10 minutes.

So the brain uses only a tiny part of the information an agent uses. Furthermore, when it comes to awareness, I am aware of only a tiny part of the information processing in my brain.

An agent is not like an atom but like an onion with many layers. Decisions are made in parallel in these layers, and we are aware of only a small part of them. It's not even possible to draw a solid boundary between awareness and non-awareness.

As for the second question: a stable object may have high mutual information with itself at different times, but it may also have high mutual information with other agents. For example, a rock may be stable in size and shape, but its position and movement may depend heavily on natural forces and human behavior. However, the definition of agency is more complex than this; I will try to discuss it in future posts.