Review: Structure and Interpretation of Computer Programs

post by L Rudolf L (LRudL) · 2022-04-11T20:27:13.167Z · LW · GW · 9 commentsThis is a link post for https://www.strataoftheworld.com/2019/09/review-structure-and-interpretation-of.html

Contents

Beware the wizards Contrarian SICP Why Lisp? Executable math Finally: data! The what evaluator? What do we say to compilers? Not today First Principles of Computer Programming Links & resources None 9 comments

This is a linkpost (written several years ago) that I'm posting in response to a request to share more of the archive of my personal blog on LessWrong. It is most useful if you have none or limited experience with functional programming and might want to get into it, or if you have a good practical grasp of programming but want to read something about the unifying concepts.

Many regard Structure and Interpretation of Computer Programs (SICP) as the bible of programming. For good reason, as it turns out.

Beware the wizards

|

| The Wizard Book. (Credit: MIT Press) |

SICP is sometimes called the “Wizard Book”, because there’s a wizard on the cover (if your job is making an interesting cover for a programming book, what would you do?). However, this does not mean that the book has anything to do with –

“[L]earning to program is considerably less dangerous than learning sorcery, because the spirits we deal with are conveniently contained in a secure way.”

Um. Okay, I rest my case. Proceed with caution.

Contrarian SICP

For most subjects there is a standard way to present it that most books, lectures, etc. will follow.

For programming, the standard way seems to be to take some “mainstream” language, show how to print “Hello, World!” onto the screen, then start introducing things like assigning values to variables, conditionals, and so on. Pretty soon you can be doing some pretty impressive things.

SICP does not follow this route.

Why Lisp?

The first thing that might strike you about SICP is that the programming language of choice is Scheme, a dialect of Lisp (short for “LISt Processor”), which is commonly known as that obscure language invented in 1958 that wears down the parentheses keys on your keyboard.

However, the authors are not just being contrarian here; there are many good arguments for using Lisp in a book like this.

First, Lisp is the closest a programming language can get to having no syntax. You don’t have to learn where curly brackets are used, or which operators/functions follow which type of syntax, or a multitude of special characters that perform arcane pointer logic (I’m looking at you, C++).

If you have an expression in parentheses, the first thing inside the parentheses is the name of the function that is being called. Everything after it is an argument to be passed to that function. Something not in parentheses represents either just itself (e.g. a string, number, or boolean), or is the name of a variable that in turn represents something.

For example: (+ 1 (* 2 3) var) evaluates to the sum of the numbers 1, the product of 2 and 3, and whichever number the variable var has been set to.

Now you know approximately 90% of Lisp syntax (there’s also a few other things, like a special syntax that stands in for an unnamed function, and some shortcuts for things you’d otherwise have to type out repeatedly).

If you follow along with SICP, Lisp is self-explanatory.

The second point in favour of Lisp follows immediately from the first: the near-absence of syntax means you don’t have to think about it. Once you get used to it, writing in Lisp feels almost like transcribing pure thought into code.

When a language implements various special syntaxes, it generally privileges certain design patterns and ways of thinking; if for-loops are unavoidable, the programmer will think in for-loops. A near-absence of syntax means neutrality. Some might call it blandness; fair enough, but Lisp’s blandness is very powerful when used right. It makes it a very useful language for a book like SICP, which tries to teach you (for example) many different ways of abstracting data, rather than the one that is made most convenient by a language’s syntax.

The third point in favour of Lisp is that what little syntax it has was chosen carefully, namely in such a way that Lisp code is also Lisp data. The example function call (+ 1 (* 2 3) var) given above is just a list of the elements +, 1, the list of the elements *, 2, and 3, and var. This means that it’s very easy to write Lisp code that operates on Lisp code, something that comes in handy when SICP walks through the operation of a Lisp interpreter (in more practical situations, it also enables Lisp’s powerful macro system). To put it another way, introspection is easier in Lisp than other languages.

Finally, as the (perhaps biased) authors write: “Above and beyond these considerations, programming in Lisp is great fun.”

Executable math

Once you’ve gotten over all the parentheses, the second thing you’ll notice about SICP is the order in which topics are presented.

The first chapter is entirely devoted to creating abstractions by defining functions. Only function (and variable) definition and function calling are used – no mention is made of data structures or changing the values of variables.

If you think it’s impossible to do anything interesting by just calling functions, you are wrong, and SICP will prove it.

The chapter runs through the very basics of function application, variable definitions, and the substitution model of how to apply functions (this last point will latter be amended). It discusses iterative and recursive processes, and how iterative processes can be described by recursive functions.

A lot of the things you can do by just calling function are quite math-y. SICP does not shy away from this: Newton’s method for square roots, numerical integration, and finding fixed points of (mathematical) functions are prominent examples. No prior knowledge about the math is assumed, but this may still put off many readers because it’s abstract and not directly relevant to most real-world problems. “Executable math” is a pretty good summary of what most of this chapter is about.

However, the chapter really is striking. Using just one type of abstraction (defining functions) and not too many pages, SICP scales from the very basics to solving fairly involved problems with techniques, like extensive use of higher-order functions, that would be left for much later in a more conventional work.

Finally: data!

Only in the second chapter does SICP turn to data structures. Once again the format is the same: introduce exactly one type of abstraction, and systematically introduce examples of how it’s useful and what can be done with it.

The basic Lisp data structure is creating cells that link together two values. The primitive function for this is cons. If we want to chain together many values, for instance to create a list of the elements 1, 2, and 3, we can do this with (cons 1 (cons 2 (cons 3 null))) (of course, there’s also a function – list – that creates lists like this automatically ).

Additionally, Lisp provides primitive functions for accessing the first and second element in a cons cell. For historical reasons, these functions are called car (returns the first element) and cdr (returns the second element). This means that the cdr of a list defined in the same way as above would be all but the first element of the list.

But what is data? Or do we even care? After all, all that interests us about cons, car, and cdr is that if we define, say, x as (cons 1 2), then (car x) should be 1 and (cdr x) should be 2.

One clever way of implementing this – and one that will likely seem both weird and ingenious the first time you see it – is the following:

(define (cons x1 x2) ; define cons as a function on two inputs

(define (dispatch n) ; define a function inside cons

(if (= n 1)

x1 ; return x1 if n = 1

x2)) ; else, return x2

dispatch) ; the cons function returns the dispatch function

(define (car x)

(x 1))

(define (cdr x)

(x 2))

What’s happening is this: cons returns the function dispatch. Let’s say x is a cons cell that we have made with cons, consisting of the elements x1 and x2.

Now we’ve defined the car of x to be whatever you get when you call the function x with 1 as the argument. x is what the cons function returned, in other words the dispatch function, and when we call that with 1 as the argument, it will return x1. Likewise, when we call x with the argument 2, the dispatch function that x represents will return x2. We have satisfied all the properties that we wanted cons, car, and cdr to have.

Is this how any reasonable Lisp implementation actually works? No.

(If you’re confused about the previous example: note that we’ve snuck in an assumption about how variable scoping works in functions. When the dispatch function is created inside the cons function, the variables x1 and x2 are obviously bound to whatever values we inputted into cons. What’s not obvious is that dispatch can access these values when it’s called later – after all, x1 and x2 were local variables for a function call that will have ended by then (and the cons function might have been called many times, meaning many x1s and x2s). However, in Lisp the environment at the time a function is called is bound to that function. When that function is called again, any local variables like x1 and x2 present in a parent function in which that function was defined will remain accessible. This type of thing is called a closure.)

Mutability (changing variable values after they’ve been defined) is only introduced in the third chapter; up until then, the book focuses purely on functional programming.

The third chapter is the culmination of the first half of the book: now that functions, data abstraction, and mutability have all been discussed, the authors go through a bunch of examples of what you can do by combining these.

The what evaluator?

SICP walks the reader through the process of writing a Lisp evaluator in Lisp, something that is called a “metacircular evaluator”.

Writing a Lisp evaluator in Lisp might seem pointless, but remember that a programming language, especially one like Lisp, is just as much a language for setting down our thoughts about procedures as it is something to be executed by computers. A Lisp-to-Lisp interpreter has the advantage that it is one of the simplest interpreters that it is possible to write. Interpreters for Lisp benefit greatly from the simplicity of Lisp’s syntax, while interpreters written in Lisp benefit from the expressiveness and flexibility of the language. Thus, with our Lisp-to-Lisp interpreter, the essence of an evaluator is laid about as barely before our eyes as it can be.

|

If you're willing to forget about code readability and leave out some syntactic sugar like cond expressions, you can literally behold the metacircular evaluator at a glance. (Note the #lang sicp line – DrRacket has a package that implements the exact version of Scheme used in SICP.) |

The authors write:

“It is no exaggeration to regard this as the most fundamental idea in programming:

‘The evaluator, which determines the meaning of expressions in a programming language, is just another program.’

To appreciate this point is to change our images of ourselves as programmers. We come to see ourselves as designers of languages, rather than only users of languages designed by others.”

After presenting the metacircular evaluator (and an optimisation), the authors go on to discuss three “variations on a Scheme” (haha …):

- Making the evaluator lazier. More precisely, delaying the evaluation of an expression until it is needed (“lazy evaluation”). This allows, for example, the convenient representation of infinite lists (“streams”), and more flexibility in creating new conditionals.

- Non-deterministic computing, in which the language has built-in capabilities to handle statements like “pick one of these three items”, or “search through these options until some permutation matches this condition”. With such a language, some logic puzzles can be solved by simply stating the requirements and pressing enter.

- A logic programming language, which can process queries about data.

(In other languages, some of these are built-in; Haskell includes the first one, and Prolog has the latter two. But if you want to be able to implement whichever set of features you want in your language, Lisp is the best option.)

Programming often involves wanting to do something, and then taking that task and “building down” to the demands of whatever programming language is used (consider the phrase "human compiler", used to describe the role of a human writing repetitive code in a language that is not flexible enough to elegantly express every abstraction). A powerful alternative method is to also build up the language to customise it for the needs of the task at hand. The boundary between language and program blurs.

It’s almost as if …

“The evaluator, which determines the meaning of expressions in a programming language, is just another program.”

What do we say to compilers? Not today

There’s a fifth chapter to SICP, in which a register machine simulator is constructed, and then used to implement – surprise surprise – a Lisp compiler.

In a way, this completes the loop: the first three chapters show what kinds of things various programming abstractions allow, the fourth shows how these abstractions can be used to implement themselves, and the fifth looks “under the hood” of Lisp itself to consider how it can be implemented with elements simpler than itself. Of course, the question of how the simpler register machine itself can be implemented is left unanswered, but this is already starting to brings us into the realm of hardware, for which another book might be better suited.

For the first four chapters I did perhaps half of the exercises; for the last, I just read the main text. The chapter feels more theoretical than the previous ones. Even though the Lisp-to-Lisp evaluator of the fourth chapter is purely academic, I found it more interesting than the construction of a compiler from simulated versions of very restrictive components.

First Principles of Computer Programming

SICP is a rather unconventional programming book. I think this is largely because the authors seem to have started from first principles and asked “what should a good book on deep principles in high-level programming languages look like?”, rather than making all the safest choices.

Therefore, Lisp.

Therefore, presenting one element at a time (functions, data abstraction, mutability) with care and depth, rather than the (admittedly faster and more practical) approach of introducing all the simplest things first.

Therefore, spending a lot of time hammering in the point that what evaluates/compiles your program is just another program.

SICP is not about showing you the fastest route to making an app. Unless you’re of a theoretical bent, it might not even be a particularly good introduction to programming in general (on the other hand, on several occasions I was slowed down by prior misconceptions; those with a fresher perspective may avoid some difficulties).

However, it excels as a deep dive into the principles of programming. Especially if you have experience with programming but haven't yet read a systematic treatment of the topic, SICP will be invaluable in straightening out and unifying many concepts.

Links & resources

- SICP is available for free online in a variety of formats:

- MIT’s official web version and other SICP stuff

- PDF and EPUB conversions are available here

- The SICP lectures are on YouTube.

I’m not aware of an official SICP solution set, but you will find many on the internet. This one seems to be the most complete, often featuring many solutions to a given exercise.

How to Design Programs: an alternative book

A similar, first-principles-driven, Lisp-based book on programming called How to Design Programs (HTDP) also exists (I have not read it). This book was consciously designed to emulate SICP in the good while fixing the bad, particularly in the context of being used as an accessible introduction to programming (the authors of HTDP have written an article called The Structure and Interpretation of the Computer Science Curriculum in which they summarise their case).

Incredibly, HTDP is also available available for free online. Either MIT Press has been overrun by communists, or the people who write good programming books are far more charitable than the average textbook writer.

9 comments

Comments sorted by top scores.

comment by Shmi (shminux) · 2022-04-12T04:33:48.263Z · LW(p) · GW(p)

I wonder if there is a syntax that feels less idiosyncratic to someone who is used to procedural programming?

For example the following feels much more natural than the LISP equivalent. (Or maybe that's how we ended up with Python.)

return x1 if n = 1 else return x2↑ comment by L Rudolf L (LRudL) · 2022-04-12T18:20:45.087Z · LW(p) · GW(p)

In my experience the sense of Lisp syntax being idiosyncratic disappears quickly, and gets replaced by a sense of everything else being idiosyncratic.

The straightforward prefix notation / Lisp equivalent of return x1 if n = 1 else return x2 is (if (= n 1) x1 x2). To me this seems shorter and clearer. However I admit the clarity advantage is not huge, and is clearly subjective.

(An alternative is postfix notation: ((= n 1) x1 x2 if) looks unnatural, though (2 (3 4 *) +) and (+ 2 (* 3 4)) aren't too far apart in my opinion, and I like the cause->effect relationship implied in representing "put 1, 2, and 3 into f" as (1 2 3 f) or (1 2 3 -> f) or whatever.)

Note also that since Lisp does not distinguish between statements and values:

- you don't need

return, and - you don't need a separate ternary operator when you want to branch in a value (the

x if c else ysyntax in Python for example) and for normalif.

I think Python list comprehensions (or the similarly-styled things in e.g. Haskell) are a good example of the "other way" of thinking about syntax. Guido van Rossum once said something like: it's clearer to have [x for x in l if f(x)] than filter(f, l). My immediate reaction to this is: look at how much longer one of them is. When filter is one function call rather than a syntax-heavy list comprehension, I feel it makes it clearer that filter is a single concept that can be abstracted out.

Now of course the Python is nicer because it's more English-like (and also because you don't have to remember whether the f is a condition for the list element to be included or excluded, something that took me embarrassingly long to remember correctly ...). I'd also guess that I might be able to hammer out Python list comprehensions a bit faster and with less mental overhead in simple cases, since the order in which things are typed out is more like the order in which you think of it.

However, I do feel the Englishness starts to hurt at some point. Consider this:

[x for y in l for x in y]What does it do? The first few times I saw this (and even now sometimes), I would read it, backtrack, then start figuring out where the parentheses should go and end up confused about the meaning of the syntax: "x for y in l, for x in y, what? Wait no, x, for y in l, for x in y, so actually meaning a list of every x for every x in every y in l".

What I find clearer is something like:

(mapcat (lambda (x) x) l)or

(reduce append l)Yes, this means you need to remember a bunch of building blocks (filter, map, reduce, and maybe more exotic ones like mapcat). Also, you need to remember which position which argument goes in (function first, then collection), and there are no syntactic signposts to remind you, unlike with the list comprehension syntax. However, once you do:

- they compose and mix very nicely (for example,

(mapcat f l)"factors into"(reduce append (map f l))), and - there are no "seams" between the built-in list syntax and any compositions on top of them (unlike Python, where if you define your own functions to manipulate lists, they look different from the built-in list comprehension syntax).

I think the last point there is a big consideration (and largely an aesthetic one!). There's something inelegant about a programming language having:

- many ways to write a mapping from values to values, some in infix notation (

1+1) and some in prefix notation (my_function(val)), and others even weirder things (x if c else y); - expressions that may either reduce to a value (most things) or then not reduce to a value (if it's an

iforreturnor so on); - a syntax style you extend in one way (e.g. prefix notation with

def my_function(val): [...]) and others that you either don't extend, or extend in weird ways (def __eq__(self, a, b): [...]).

Instead you can make a programming language that has exactly one style of syntax (prefix), exactly one type of compound expression (parenthesised terms where the first thing is the function/macro name), and a consistent way to extend all the types of syntax (define functions or define macros). This is especially true since the "natural" abstract representation of a program is a tree (in the same way that the "natural" abstract representation of a sentence is its syntax tree), and prefix notation makes this very clear: you have a node type, and the children of the node.

I think the crux is something like: do you prefer a syntax that is like a collection of different tools for different tasks, or a syntax that highlights how everything can be reduced to a tight set of concepts?

Replies from: shminux↑ comment by Shmi (shminux) · 2022-04-12T19:24:20.074Z · LW(p) · GW(p)

Hmm, neither lisp nor python feel natural to me, but I understand that it is just a matter of getting used to. On the other hand, for all JS faults, its style of lambda and filter/map/reduce felt natural to me right away.

↑ comment by dkirmani · 2022-04-12T07:28:28.764Z · LW(p) · GW(p)

Maybe prefix notation feels weird because English (and Chinese, and Spanish, and Russian...) follow the subject-verb-object word order. "list.append(3)", for example, is in SVO order, while "(append list 3)" is in VSO order.

Most languages primarily use either SOV or SVO word-orderings, with VSO trailing at a distant third. Funnily enough, both Classical Arabic and Biblical Hebrew are VSO languages. Looks like God does speak Lisp after all.

Replies from: LRudL, Dirichlet-to-Neumann, Zmavli Caimle↑ comment by L Rudolf L (LRudL) · 2022-04-12T18:35:57.303Z · LW(p) · GW(p)

This is an interesting point, I haven't thought about the relation to SVO/etc. before! I wonder whether SVO/SOV dominance is a historical quirk, or if the human brain actually is optimized for those.

The verb-first emphasis of prefix notation like in classic Lisp is clearly backwards sometimes. Parsing this has high mental overhead relative to what it's expressing:

(reduce +

(filter even?

(take 100 fibonacci-numbers)))I freely admit this is more readable:

fibonacci-numbers.take(100).filter(is_even).reduce(plus)Clojure, a modern Lisp dialect, solves this with threading macros. The idea is that you can write

(->> fibonacci-numbers

(take 100)

(filter even?)

(reduce +))and in the expressions after ->> the previous expression gets substituted as the last argument to the next.

Thanks to the Lisp macro system, you can write a threading macro even in a Lisp that doesn't have it (and I know that for example in Racket you can import a threading macro package even though it's not part of the core language).

As for God speaking in Lisp, we know that He at least writes it: https://youtu.be/5-OjTPj7K54

↑ comment by Dirichlet-to-Neumann · 2022-04-12T10:40:50.157Z · LW(p) · GW(p)

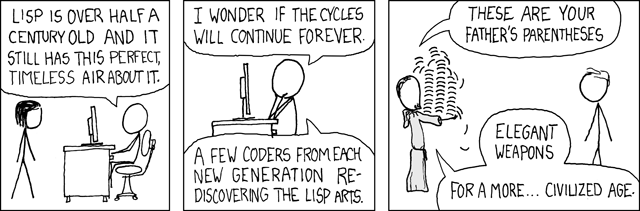

Mandatory xkcd's links: https://xkcd.com/224

↑ comment by Zmavli Caimle · 2022-04-12T18:55:15.349Z · LW(p) · GW(p)

I'm the opposite. My first two languages are VSO, so VSO ordering (function first, then arguments) comes naturally to me. Some languages are SOV -- Japanese is the most prominent example. Don't think I know of any proglangs with that form of syntax, though.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2022-04-12T19:10:12.621Z · LW(p) · GW(p)

In programming, SOV is known as Reverse Polish Notation. First must the arguments come before the operation you write. Forth, Postscript also, such languages are.

↑ comment by TLW · 2022-04-12T10:53:50.571Z · LW(p) · GW(p)

I wonder if there is a syntax that feels less idiosyncratic to someone who is used to procedural programming?

There are; there's a tradeoff. One of the advantages of Lisp is that it's very easy to parse and manipulate programmatically, because the syntax is so simple and regular.

I don't know of any syntaxes that achieve both the easy and regular syntax for machine parsing and 'natural' syntax for humans.