A Review of In-Context Learning Hypotheses for Automated AI Alignment Research

post by alamerton · 2024-04-18T18:29:33.892Z · LW · GW · 4 comments

This project has been completed as part of the Mentorship in Alignment Research Students (MARS London) programme under the supervision of Bogdan-Ionut Cirstea, on investigating the promise of automated AI alignment research. I would like to thank Bogdan-Ionut Cirstea, Erin Robertson, Clem Von Stengel, Alexander Gietelink Oldenziel, Severin Field, Aaron Scher, and everyone who commented on my draft, for the feedback and encouragement which helped me create this post.

TL;DR

The mechanism behind in-context learning is an open question in machine learning. There are different hypotheses on what in-context learning is doing, each with different implications for alignment. This document reviews the hypotheses which attempt to explain in-context learning, finding some overlap and good explanatory power from each, and describes the implications these hypotheses have for automated AI alignment research.

Introduction

As large language models (LLMs) have grown in size and capability, they have started demonstrating novel behaviours when prompted with natural language. While predicting the most probable next token in a sequence is somewhat well understood, LLMs display another interesting behaviour, in-context learning (ICL), which is harder to understand from the standpoint of traditional supervised learning.

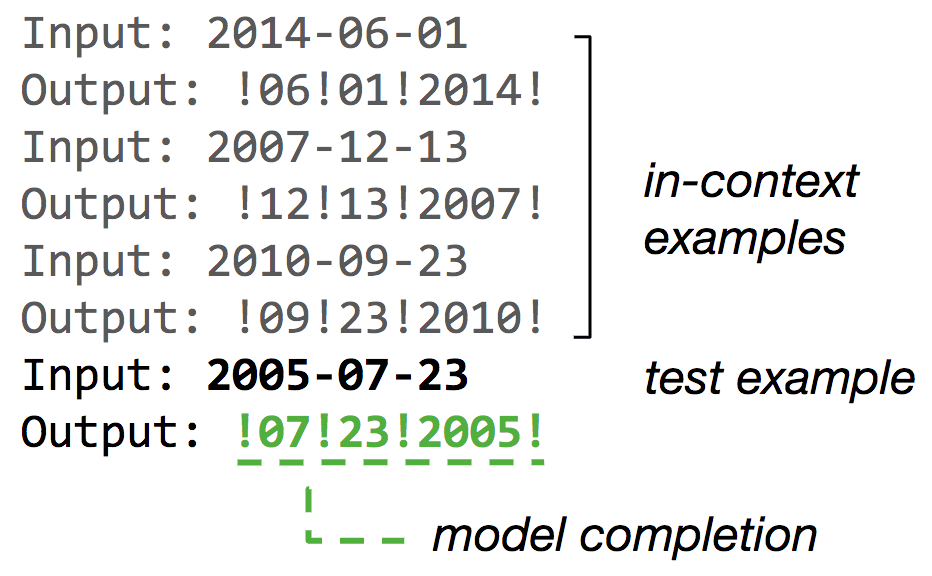

In-context learning is an emergent behaviour in pre-trained LLMs where the model seems to perform task inference (learn to do a task) and to perform the inferred task, despite only being given input-output pairs in the prompt and having been pre-trained only to predict the next token. The model does this without changing its parameters/weights, contrary to traditional machine learning.

In traditional supervised learning, a model’s weights are changed using an optimisation algorithm such as gradient descent. ICL is significant mainly because learning appears to take place while the model’s weights do not change. It therefore does not require specific training or fine-tuning for new tasks: the model seems able to learn a new task from prompts alone. ICL is also significant because it needs few examples to do tasks well, unlike traditional training and fine-tuning approaches to learning.

ICL is defined by Xie et al. (2022) as “a mysterious emergent behaviour in [LLMs] where the [LLM] performs a task just by conditioning on input-output examples, without optimising any parameters”. It is defined by Wies et al. (2023) as “a surprising and important phenomenon that emerged when [LLMs] were scaled to billions of learned parameters. Without modifying [an LLM’s] weights it can be tuned to perform various downstream natural language tasks simply by including concatenated training examples of these tasks in its input”. Dai et al. (2023) define ICL by comparing it with fine-tuning, highlighting the fact that ICL does not require the model to update its parameters, but rather “just needs several demonstration examples prepended before the query input”. Lastly, Hoogland et al. (2024) concisely define ICL as “the ability to improve performance on new tasks without changing weights”.

One reason definitions of ICL vary is that definitions of learning differ when speculating about hypothetical learning processes. Writers may be describing different things that are happening ‘in context’, which leaves room for miscommunication about what ICL is. This matters because how the behaviour is defined shapes the claim about what ICL is doing. The ‘learning’ in in-context learning is usually defined as something like ‘the ability to improve performance on a task’. The ‘in-context’ part is less ambiguous and more widely agreed on: the behaviour happens as a result of the prompts given to the LLM. This is less subject to speculation because the concept of a prompt is well established and understood, unlike a hypothetical learning process happening inside the LLM.

The Purpose of This Post

The main purpose of this post is to distil and communicate the hypotheses on in-context learning to individuals interested in AI alignment research, and those generally interested in the hypotheses about ICL, in a way that is representative of the different perspectives on ICL. It is intended for individuals interested in OpenAI’s Superalignment research agenda, and adjacent alignment agendas which involve automating AI alignment research. It is also more broadly intended for those interested in ICL’s relevance to AI alignment, AI safety, and the future of machine learning.

ICL has been studied extensively, but the mechanism behind it is not yet agreed on by those studying it (Dong et al., 2023). Understanding how ICL works could help us understand how hard aligning LLMs is. If this is the case, understanding ICL could also provide insight into how challenging it would be to align future, more advanced models. This could be especially helpful if such future models retain a similar architecture to today’s LLMs, exhibit ICL, and do so through the same mechanism as the one observed in present-day LLMs.

Understanding how ICL works could also help us understand how hard it would be to align automated AI researchers, especially if they look similar to current models (for example, if they are based on or mostly involve the same or similar architectures and/or training setups as current LLMs).

Understanding how ICL works could also be important for alignment in general. Firstly, if ICL is approximating a learning algorithm, which algorithm it is matters. Some algorithms would be more alarming than others from an AI alignment perspective. If ICL is analogous to mesa-optimisation, it could be more cause for alarm, because it would imply that the LLM contains another optimisation process which may have a different objective function than the LLM.

Understanding ICL could also contribute to interpretability-based AI alignment agendas. Aside from allowing us to potentially surgically modify how a model does ICL, knowledge about the decision-making processes of ICL could contribute to interpretability by giving us clues to the other decision-making processes within LLMs, and insight into how models respond to inputs. Lastly, it is possible that ICL could be an alignment strategy of its own.

The Hypotheses About How ICL Works

The hypotheses for ICL can be categorised by how their explanation is framed. The two most prevalent explanations of ICL are from the perspective of Bayesian inference and gradient descent (Wang and Wang, 2023). The difference between the hypotheses hinges mainly on this difference in framing. It is important to voice both sides of this difference to conduct a productive survey on ICL.

Bayesian Inference

Bayesian inference (BI) is a statistical inference method that uses Bayes’ theorem to update the probability for a hypothesis as more evidence is gathered. In this context, a parallel is drawn between the prior probabilities used to form confidence in a prediction in Bayesian inference, and the identification of the given task for ICL in the model’s training data.

A popular paper by Xie et al. (2022) suggests that ICL can be viewed as the LLM implicitly performing BI. The authors find the ability to do ICL in GPT-2 and LSTMs, despite not training them explicitly to learn from prompts. They claim that the models infer concepts which are latent in the training data, and provide a theoretical framework for ICL based on BI. They find that ICL emerges in pre-trained models which were trained on data containing long-term coherence. In their methodology, they demonstrate that ICL emerges when the model’s pre-training distribution contains a mixture of hidden Markov models (HMMs). Jeon et al. (2024) suggest the results of this paper rely on contrived assumptions. Raventós et al. (2023) build upon the paper, claiming to find a number of tasks in which ICL cannot be explained by BI.
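To make the Bayesian framing concrete, here is a minimal toy sketch of inferring a latent task from in-context examples. It is not the setup of Xie et al. (2022), who work with mixtures of HMMs: the three candidate "concepts", the Gaussian likelihood, and the function names `posterior_over_concepts` and `predict` are all assumptions made for illustration.

```python
import math

# Hypothetical latent "concepts" (tasks), each mapping an input x to an output y.
# These stand in for the latent concepts a model might have acquired in pre-training.
CONCEPTS = {
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
    "negate": lambda x: -x,
}


def posterior_over_concepts(examples, prior=None, noise=0.5):
    """Return p(concept | examples) using Bayes' theorem.

    Assumes y = f_concept(x) + Gaussian noise with standard deviation `noise`.
    """
    names = list(CONCEPTS)
    if prior is None:
        prior = {name: 1.0 / len(names) for name in names}  # uniform prior
    log_post = {}
    for name in names:
        log_lik = sum(
            -((y - CONCEPTS[name](x)) ** 2) / (2 * noise ** 2)
            for x, y in examples
        )
        log_post[name] = math.log(prior[name]) + log_lik
    # Normalise in log space for numerical stability.
    m = max(log_post.values())
    z = sum(math.exp(v - m) for v in log_post.values())
    return {name: math.exp(v - m) / z for name, v in log_post.items()}


def predict(examples, query, prior=None, noise=0.5):
    """Posterior-weighted prediction: sum over concepts of p(c | examples) * f_c(query)."""
    post = posterior_over_concepts(examples, prior=prior, noise=noise)
    return sum(post[name] * CONCEPTS[name](query) for name in post)
```

With the single example `(2, 4)`, "double" and "square" explain the data equally well, so the posterior stays split between them. Adding `(3, 9)` concentrates almost all of the mass on "square". No parameters change at any point; only the posterior over already-known concepts moves, which is the sense in which this view treats ICL as locating a concept rather than learning a new one.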

Zhang et al. (2023) also claim to view ICL from a Bayesian perspective. The authors investigate how the transformer architecture enables ICL, claiming to “provide a unified understanding of the transformer”. They share the latent variable model assumption provided by Xie et al. (2022), and claim to prove that LLMs implicitly implement Bayesian model averaging (BMA) via their attention mechanisms during prompting. That is to say they suggest the LLM calculates the distribution of the latent variable, and predicts the likelihood of its occurrence in the output. They claim that an extension of linear attention, which can be compared to the softmax attention component, encodes BMA.

Jiang (2023) also attributes ICL, among other things, to BI. They claim to be motivated by Xie et al. (2022) and present a latent space theory for emergent abilities in LLMs, including ICL. Expanding on the findings of Xie et al., they explore the property of sparsity in languages, explaining the abilities of LLMs as implicit BI on the distribution of latent intention concepts in language data.

Wang et al. (2024) also look at ICL ‘through a Bayesian lens’, claiming that current explanations for ICL are disconnected from real-world LLMs. They criticise Xie et al. (2022) for using HMMs to describe a behaviour concerning natural language, and for their empirical findings being restricted due to using synthetic data and ‘toy models’. However, it can be argued that GPT-2 is not a toy model in the context of machine learning. They pose LLMs as “implicit topic models that infer a latent conceptual variable from prompts”, and propose an algorithm which extracts latent conceptual tokens from a small LLM. They focus more on finding good demonstrations of ICL than on diagnosing its underlying mechanism.

Han et al. (2023) pose that LLMs are simulating kernel regression with internal representations, and that BI on in-context prompts can be understood as kernel regression. Like Wang et al. (2024), they criticise Xie et al. (2022) for not extrapolating their theoretical claim to practical LLM behaviour, claiming they fail to explain how BI is computationally implemented by an LLM, considering that such BI requires computational graphs of unbounded depth. They try to extend the ideas put forward by Xie et al. (2022) by explaining how ICL is implemented in the transformer’s attention mechanism. They conduct a theoretical analysis suggesting that inference on ICL prompts converges to a kernel regression form. To verify this analysis empirically, they examine how well the attention maps of GPT-J 6B align with kernel regression. They claim to find that the attention maps and functions in the hidden layers match the behaviours of a kernel regression mechanism, concluding that ICL can be explained as kernel regression.
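The link between attention and kernel regression can be illustrated with a small sketch. This is a generic Nadaraya-Watson estimator with an exponential dot-product kernel, not the analysis or code of Han et al. (2023); the function name `kernel_regression`, the `temperature` parameter, and the toy data are assumptions.

```python
import math


def kernel_regression(demos, query, temperature=1.0):
    """Nadaraya-Watson estimate of y for `query`.

    demos: list of (x, y) pairs, where x is a list of floats and y is a float.
    Uses the kernel K(q, x) = exp(q . x / temperature), so the normalised
    weights are exactly a softmax over dot products, as in softmax attention
    with keys x_i, values y_i, and the query vector q.
    """
    scores = [
        sum(q * xj for q, xj in zip(query, x)) / temperature for x, _ in demos
    ]
    m = max(scores)  # subtract the max before exponentiating, for stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return sum(w * y for w, (_, y) in zip(weights, demos)) / total
```

For example, with demonstrations `([1, 0], 1.0)` and `([0, 1], -1.0)`, a query close to the first input such as `[5, 0]` yields a prediction near `1.0`, while the query `[1, 1]` is equally similar to both demonstrations and yields `0.0`. The prediction is a similarity-weighted average of the demonstration outputs, which is the behaviour the kernel regression hypothesis attributes to attention over in-context examples.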

Wies et al. (2023) provide a new Bayesian task identification framework for ICL. They interpret pre-training as unsupervised multi-task learning of natural language tasks because during ICL LLMs exhibit natural language tasks they were not trained for. They claim that their work is similar to the work of Xie et al. (2022), but differs in that it is more connected to practical LLM behaviour. They claim that their sample complexity is lower than that of Xie et al. (2022), that the class of HMM mixtures is broader, and that their framework extends to capture imperfect learning. Zhang et al. (2023) claim this makes unrealistic assumptions about pre-trained models.

Zhu et al. (2024) also cast ICL as implicit BI, finding similarities between the judgements made by LLMs and those made by humans. They attribute these findings to BI; specifically, the Bayesian Sampler model from Bayesian cognitive science. They evaluate the coherence of probability judgments made by GPT-4, GPT-3.5, LLaMa-2-70b and LLaMa-2-7b, finding they display the same reasoning bias characteristics as humans. They formalise the autoregressive approach LLMs use to form probability judgments, and rewrite the objective of the autoregressive approach as implicit BI using de Finetti’s theorem under an exchangeability assumption. They do, however, acknowledge that this assumption may not apply directly to the token level of specificity in LLMs, but suggest that it still has semantic explanatory power, which seems acceptable at the level of abstraction being employed for the BI hypothesis.

Gradient Descent

Some work suggests that ICL could be learning by approximating, or simulating, gradient descent (GD). GD is a well-known iterative optimisation algorithm used in machine learning to minimise the loss of a model, such as a neural network, on training data.

Dai et al. (2023) frame LLMs as meta-optimisers, claiming that ICL is doing implicit fine-tuning. They compare an observed meta-optimisation of ICL with the explicit optimisation of fine-tuning, finding they behave similarly from multiple perspectives. They claim to provide proof that transformer attention modules and GD have the same functional form, using it as evidence for the claim that ICL is implicit fine-tuning.

Akyürek et al. (2023) also make the claim that ICL is implementing implicit versions of existing learning algorithms. They compare ICL with algorithms such as GD, ridge regression, and exact least-squares regression, and investigate the hypothesis that transformers encode smaller models in their activations. They claim to prove that transformers implement linear models based on GD and closed-form ridge regression. They also show that ICL matches the outputs made by GD, and that ICL shares algorithmic features with these outputs; specifically, that they encode weight vectors and moment matrices. They also claim to show that LLMs transition between different predictors depending on their model size and depth, implying an LLM’s size impacts which algorithm it seems to implement. It is worth noting that their paper only explores linear regression tasks, but invites an extension of their work to non-linear tasks and larger-scale samples of ICL.
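As a reminder of how the reference algorithms named above relate to each other, the sketch below fits a one-dimensional ridge regression both in closed form and by plain GD. It is only an illustration of the two predictors, not the experimental setup of Akyürek et al. (2023); the function names, learning rate, and step count are assumptions.

```python
def ridge_closed_form(xs, ys, lam):
    """Minimiser of sum_i (w * x_i - y_i)^2 + lam * w^2 for a scalar weight w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def ridge_gradient_descent(xs, ys, lam, lr=0.01, steps=500):
    """Minimise the same objective iteratively, starting from w = 0."""
    w = 0.0
    for _ in range(steps):
        # Gradient of sum_i (w * x_i - y_i)^2 + lam * w^2 with respect to w.
        grad = 2 * sum((w * x - y) * x for x, y in zip(xs, ys)) + 2 * lam * w
        w -= lr * grad
    return w
```

On data such as `xs = [1, 2, 3]`, `ys = [2, 4.1, 5.9]` with `lam = 1.0`, both functions return the same weight up to numerical precision, provided the learning rate is small enough for GD to converge. Stopping GD after only a few steps gives a different predictor, which is one reason it is meaningful to ask which of these algorithms a transformer's in-context predictions most resemble.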

Von Oswald et al. (2023) claim that ICL is closely related to gradient-based few-shot learning. They suggest that either models learned by GD and transformers are similar, or that the weights found by the transformer's optimisation match their GD model. They use this to claim to mechanistically understand ICL in optimised transformers, identify how transformers surpass the performance of GD, and identify parallels between ICL and a kind of attention head named the induction head (Olsson et al., 2022).
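The basic correspondence behind this line of work can be checked numerically in a simplified setting. The sketch below assumes linear regression with squared loss, weights initialised at zero, and unnormalised linear attention (no softmax); under those assumptions, the prediction after one GD step equals a linear attention readout over the in-context examples. The function names and toy data are hypothetical, and this is not the authors' code.

```python
def gd_one_step_prediction(demos, query, lr=0.1):
    """Predict w . query after one GD step on the loss (1 / 2n) * sum_i (w . x_i - y_i)^2.

    demos: list of (x, y) pairs with x a list of floats. Weights start at zero.
    """
    dim, n = len(query), len(demos)
    w = [0.0] * dim
    grad = [0.0] * dim
    for x, y in demos:
        err = sum(wj * xj for wj, xj in zip(w, x)) - y
        for j in range(dim):
            grad[j] += err * x[j] / n
    w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return sum(wj * qj for wj, qj in zip(w, query))


def linear_attention_prediction(demos, query, lr=0.1):
    """Linear attention readout with keys x_i, values y_i, and the query vector.

    Computes (lr / n) * sum_i y_i * (x_i . query), with no softmax.
    """
    n = len(demos)
    return (lr / n) * sum(
        y * sum(xj * qj for xj, qj in zip(x, query)) for x, y in demos
    )
```

The two functions agree because, at zero initialisation, the gradient is `-(1 / n) * sum_i y_i * x_i`, so the updated weights are a sum of the demonstration inputs weighted by their labels. Taking the dot product with the query then gives exactly the attention-style sum. Extending this to multiple steps, non-zero initialisation, or softmax attention needs further construction and is where the papers in this section differ.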

Deutch et al. (2023) build on the existing work comparing ICL with GD, suggesting that while the theoretical basis for the comparison is sound, there is empirically a significant difference between the two processes (layer causality), which causes the comparison to fail. They claim that existing results focus on simplified settings and that the evidence for similarity between ICL and GD is not strong. They find that layer causality is a significant problem in the comparison between ICL and GD-based fine-tuning. They propose that a layer-causal variant of fine-tuning is a closer match to ICL than standard GD-based fine-tuning.

A paper by Wu and Varshney (2023) supports the GD view, suggesting that LLMs are performing stochastic GD (SGD) within a meta-learning framework to achieve ICL. The authors claim to use a meta-learning framework to suggest that an inner optimisation process is happening within LLMs that mirrors SGD. They experiment on GPT-2, Llama-7B and Llama-13B, finding a characteristic of learned token representations in causal transformers.

Panigrahi et al. (2024) also allude to implicit fine-tuning, but instead frame ICL as a transformer simulating and fine-tuning another pre-trained transformer-like model during inference. They claim that the simulator models relied on by other work are not tractable because of the memory overhead required to train an internal linear model or 2-layer multi-layer perceptron. Instead, they propose Transformer in Transformer (TinT), a simulator model whereby a transformer simulates and fine-tunes an ‘auxiliary transformer’ during inference. They suggest that the inference of transformers in a forward pass relies on the training dynamics of smaller internal models in this way.

GD is a different kind of explanation of ICL than BI. Dong et al. (2023) claim that attributing ICL to GD seems reasonable, and further work into the relationship could help with developing the capabilities of ICL. Based on the viewpoints highlighted here, it seems reasonable that further work into the relationship between ICL and GD will contribute to our understanding of ICL for alignment and automating alignment research.

Mesa-Optimisation

Mesa-optimisation (MO) is a theoretical phenomenon whereby an optimisation process creates a solution that is, itself, an optimiser. Von Oswald et al. (2023) suggest that this is how LLMs are able to do ICL. They suggest that transformer-based models construct an internal learning objective and use optimisation to find the best solution. They reverse-engineer transformers and find gradient-based MO algorithms. This hypothesis provides a theoretical model of understanding ICL. It is not as pragmatically related to LLM behaviour, and it is harder to provide empirical evidence of ICL being MO. Many of the papers which attribute ICL to GD could also fit into this framework. MO can be viewed as an extension of the GD hypothesis in this case.

Other

Hahn and Goyal (2023) view ICL from an information-theoretic perspective, claiming that ICL relies on a recombination of compositional operations found in natural language data. They claim to provide a theoretical analysis of how next-token prediction leads to ICL using an idealised model. They claim that their approach differs from Xie et al. (2022), by explaining ICL as identifying a task from an open hypothesis space of tasks compositionally recombining operations found in the training data, while Xie et al. (2022) analysed the task of recovering one of a fixed space of HMMs in the training data. This shows that ICL can be approached from the perspective of linguistic information theory and offers an alternative high level of abstraction for explaining ICL.

A recent paper by Hoogland et al. (2024) provides an analysis of ICL from a behaviour development perspective, comparing neural networks to biological cells. They draw a parallel with the developmental stages of cells, identifying 5 developmental stages in transformers, and study how ICL develops over the course of training.

How These Hypotheses Compare

The main thing that appears to separate each of these hypotheses is that they explain ICL in different ways. One views ICL as implicit BI, while the other studies the mechanism behind ICL and compares it with algorithms we know.

A problem with this is that each definition of this sort creates a separation which might not be useful. Viewed from different perspectives, as alluded to by some authors, it seems that ICL can be explained in an equally plausible way by BI or GD. Other studies also suggest this. Korbak and Perez (2022) suggest that gradient descent can be interpreted as Bayesian inference, and Hubinger et al. (2023) suggest supervised fine-tuning can be seen as a form of Bayesian conditioning. Grant et al. (2018) also cast model-agnostic meta-learning as BI. Therefore, depending on one’s approach, perspective, and experimental analysis, it seems plausible to explain ICL as either BI or GD.

One paper, Deutch et al. (2023), argues against the comparison of ICL and gradient-based fine-tuning, claiming that the theoretical basis for the similarity is sound, but fails empirically due to a difference in information flow called layer causality. They claim that at each point in the process, ICL can only rely on information from lower layers, but that fine-tuning relies on deeper layers. They present an alternative to the equivalence of ICL with gradient-based fine-tuning: a novel fine-tuning method which respects layer causality.

Most papers suggest that ICL is an implicit version of some well-defined algorithm or process, but they differ on which one. This could suggest that ICL is doing something unlike any known algorithm, that it is equivalent to those algorithms but in ways we don’t grasp yet, or that the same algorithm is implemented every time, but depending on the perspective, level of abstraction, or framework it is studied under, it appears equivalent to different known algorithms.

The explanations illustrated above are not incompatible; they can be used together. GD can be interpreted as BI, as suggested by Korbak and Perez (2022) and Mandt et al. (2017). The more important question is why some researchers explain ICL using BI and some explain it using GD.

It is not yet agreed whether LLMs learn during ICL (as suggested by the hypotheses that ICL is GD or MO), or if they are just locating latent concepts learned during pre-training (as suggested by the BI hypotheses). If LLMs are just locating latent concepts, it can be argued that all the learning was done in training and thus followed the standard supervised learning format, suggesting ICL could be a less significant emergent behaviour in LLMs. This is an important distinction because locating concepts from training has less friction with our understanding of learning from a supervised learning perspective.

What This Means for AI Alignment Research

The fact that there are disagreements about the underlying mechanism behind ICL is important because of the implications of each hypothesis for alignment.

The implication of ICL being implicit BI is that the LLM is locating concepts it already learned in its training data, and these contribute to the LLM's ability to do ICL, suggesting ICL could not be a new form of learning that has not been seen before. The scope of what LLMs are capable of doing in-context is reduced, compared with the GD hypothesis, and the potential for risks from inner-misaligned LLMs as a result of ICL would be reduced. LLMs would still pose potential misalignment risks, but the extent to which ICL is responsible for LLM misalignment would be reduced. ICL being implicit BI would mean aligning LLMs is easier than if ICL were simulating GD, because there would not be a process approximating GD which would need to be accounted for when aligning LLMs.

The implication of ICL being the LLM simulating GD is that the LLM has learned how to approximate a learning process in its forward pass, and is more likely to become misaligned than if ICL is implicit BI. It could cause the LLM to behave in unexpected ways, optimising for goals which are different to what the LLM may have learned to optimise for in training. The MO hypothesis suggests that the learned optimisation process may have a different goal than the LLM within which it is instantiated. This would be a case of inner misalignment, and it could cause the outputs of the LLM to be misaligned as a result of prompting. This hypothesis also implies that the LLM could be more difficult to align than if a simpler process, such as GD or BI, were responsible for ICL. If there is a process approximating GD in the forward pass of an LLM, then that process will need to be included in LLM alignment strategies, for any LLM which displays ICL. This may be harder than if ICL is implicit BI, because the difficulty of aligning an inner optimisation process is not yet known. The implication of ICL simulating GD is roughly equivalent to the implication of MO, and the degree to which the implication is concerning increases the more the inner optimisation process resembles MO.

Understanding ICL could therefore give us insight into how hard it will be to develop and deploy automated AI alignment researchers. Such automated researchers may well be similar in architecture to LLMs, and they also need to be aligned to be trustworthy. Each hypothesis has the same implications for these researchers as it does for current and future LLMs, if the automated researchers have similar architectures to LLMs, display ICL, and the same internal mechanism is responsible for the ICL they display as the mechanism responsible for the ICL being witnessed in current LLMs. If understanding ICL is difficult, we might have to look for other ways to align automated researchers and other ways for the automated researchers to align models.

ICL could also be an alignment method of its own. Lin et al. (2023) propose a method of aligning LLMs using ICL named URIAL. They use ICL to guide LLM behaviour with prompts, including background information, instructions, and safety disclaimers. There is also a system prompt which guides the LLM on its role in the exercise, focusing on helpfulness, honesty, politeness and safety. They claim to achieve effective LLM alignment via ICL on three prompts. They benchmark URIAL-aligned Llama-2 variants and find that their method surpasses RLHF and SFT in some cases. This suggests that ICL can be an effective alignment strategy. This is relevant for automated AI alignment research because it can be built upon and used as a method to align automated alignment researchers and by automated researchers to align other models.

Conclusion

This post reviews the major hypotheses on ICL in LLMs, contrasts their claims, and analyses the frameworks within which they are presented. It is clear from the literature that the mechanism behind ICL is not yet fully understood and agreed upon. The two prevalent hypotheses explaining ICL do so in different terms, and depend on the framework one wishes to use to view ICL. The perspective casting ICL as BI does so from a more general level of analysis, and uses a probabilistic analytical framework, while those that allude to GD are more granular, attempting to identify the kind of learning algorithm being simulated during ICL in a model’s forward pass. Further theoretical and empirical work on LLMs is needed to improve our understanding of ICL. Such work would help illuminate the difficulty of aligning current LLMs and designing automated AI alignment research models.

Reflection, Blind Spots, and Further Work

This is only as good as my understanding, the time I spent on it, and the literature I covered. I invite readers to please correct me on any blind spots or mistakes I have made, and show me where I need to focus to improve. This will help me grow as a researcher. I plan to conduct a survey on the in-context learning literature from the perspective of AI alignment in general in the next few months. I believe such a survey would be helpful for the alignment community.

References

- Akyürek et al. (2023). What learning algorithm is in-context learning? Investigations with linear models

- Dai et al. (2023). Why Can GPT Learn In-Context? Language Models Implicitly Perform Gradient Descent as Meta-Optimizers

- Deutch et al. (2023). In-context Learning and Gradient Descent Revisited

- Dong et al. (2023). A Survey on In-context Learning

- Grant et al. (2018). Recasting Gradient-Based Meta-Learning as Hierarchical Bayes

- Han et al. (2023). Explaining Emergent In-Context Learning as Kernel Regression

- Hahn and Goyal (2023). A Theory of Emergent In-Context Learning as Implicit Structure Induction

- Hoogland et al. (2024). The Developmental Landscape of In-Context Learning

- Hubinger et al. (2023). Conditioning Predictive Models: Risks and Strategies

- Mandt et al. (2017). Stochastic Gradient Descent as Approximate Bayesian Inference

- Olsson et al. (2022). In-context Learning and Induction Heads

- Raventós et al. (2023). Pretraining task diversity and the emergence of non-Bayesian in-context learning for regression

- Rong (2021). Extrapolating to Unnatural Language Processing with GPT-3's In-context Learning: The Good, the Bad, and the Mysterious

- Jiang (2023). A Latent Space Theory for Emergent Abilities in Large Language Models

- Jeon et al. (2024). An Information-Theoretic Analysis of In-Context Learning

- Korbak and Perez (2022). RL with KL penalties is better viewed as Bayesian inference

- Lin et al. (2023). URIAL: Tuning-Free Instruction Learning and Alignment for Untuned LLMs

- Panigrahi et al. (2024). Trainable Transformer in Transformer

- Von Oswald et al. (2023). Transformers learn in-context by gradient descent

- Wang et al. (2024). Large Language Models Are Latent Variable Models: Explaining and Finding Good Demonstrations for In-Context Learning

- Wang and Wang (2023). Reasoning Ability Emerges in Large Language Models as Aggregation of Reasoning Paths: A Case Study With Knowledge Graphs

- Wies et al. (2023). The Learnability of In-Context Learning

- Wu and Varshney (2023). A Meta-Learning Perspective on Transformers for Causal Language Modeling

- Xie et al. (2022). An Explanation of In-context Learning as Implicit Bayesian Inference

- Zhang et al. (2023). What and How does In-Context Learning Learn? Bayesian Model Averaging, Parameterization, and Generalization

- Zhu et al. (2024). Incoherent Probability Judgments in Large Language Models

4 comments

Comments sorted by top scores.

comment by Aaron_Scher · 2024-04-19T16:51:58.374Z · LW(p) · GW(p)

> The implication of ICL being implicit BI is that the model is locating concepts it already learned in its training data, so ICL is not a new form of learning that has not been seen before.

I'm not sure I follow this. Are you saying that, if ICL is BI, then a model could not learn a fundamentally new concept in context? Can some of the hypotheses not be unknown — e.g., the model's no-context priors are that it's doing wikipedia prediction (50%), chat bot roleplay (40%), or some unknown role (10%). And ICL seems like it could increase the weight on the unknown role. Meanwhile, actually figuring out how to do a good job in the previously-unknown role would require piecing together other knowledge the model has — and sufficiently strong building blocks would allow a lot of learning of new concepts.

reply by alamerton · 2024-04-19T19:08:04.715Z · LW(p) · GW(p)

I think I mean to say this would imply ICL could not be a new form of learning. And yes, it seems more likely that there could be at least some new knowledge getting generated, one way or another. BI implying all tasks have been previously seen feels extreme, and less likely. I've adjusted my wording a bit now.

comment by Ben Pace (Benito) · 2024-04-18T18:41:33.507Z · LW(p) · GW(p)

Not sure where the right place to raise this complaint, but having just seen it for the first time, really, "MARS"? I checked, this is not affiliated with MATS who have had like 6 programs and ~300 people go through it. To me this seems too close in branding space to me, and I'd recommend picking a more distinct name.

reply by Linda Linsefors · 2024-04-19T11:08:34.427Z · LW(p) · GW(p)

I disagree. In verbal space MARS and MATS are very distinct, and they look different enough to me.

However, if you want to complain, you should talk to the organisers, not one of the participants.

Here is their website: MARS — Cambridge AI Safety Hub

(I'm not involved in MARS in any way.)