Why Experienced Professionals Fail to Land High-Impact Roles (FBB #5)

post by gergogaspar (gergo-gaspar) · 2025-04-10T12:46:35.862Z · 3 comments

Crossposted on the EA Forum and Substack.

Introduction

There are many reasons why people fail to land a high-impact role. They might lack the skills, not have a polished CV, fail to articulate their thoughts well in applications[1] or interviews, or not manage their time effectively during work tests. This post is not about these issues. It’s about what I see as the least obvious reason why someone might be rejected relatively early in the hiring process, despite having the right skill set and ticking most of the other boxes mentioned above. That reason is what I call context, or rather, a lack thereof.

On professionals looking for jobs

It’s widely agreed that we need more experienced professionals in the community, but we are not doing a good job of accommodating them once they make the difficult and admirable decision to try transitioning to AI Safety.

Here is a basic picture of what I understand many experienced professionals go through, or at least the dozens I have talked to at EAGx conferences:

- They do an AI Safety intro course

- They decide to pivot their career

- They start applying for highly selective jobs, including ones at Open Philanthropy

- They get rejected relatively early in the hiring process, including for roles more junior than their work experience would suggest

- They don’t get any feedback

- They are confused as to why and start questioning whether they can contribute to AI Safety

If you find yourself consistently making it to later rounds of the hiring process, I think you will land a job sooner or later. The competition is tight, so please be patient! To a lesser extent, this also applies to roles outside AI Safety, especially those aimed at reducing global catastrophic risks.

But for those struggling to reach the later rounds of the hiring process, I want to offer one possible explanation. Assuming you already have the right skillset for a given role, it might be that you fail to communicate your cultural fit and contextual understanding of AI Safety to hiring managers, or that you don’t yet have them.

This isn’t just on you, but on all of us as a community. In this article, I focus on how job seekers can better navigate the job market, and less on how the community can avoid losing altruistic talent along the way. That is worth its own forum post!

To be clear, this is not the only reason you might be rejected, but it might be the least obvious one to people failing to land roles. Now let's look at what you can do.

What I mean by context

A highly skilled professional who was new to the AI Safety scene at the time told me they had applied for the Chief of Staff role at Open Philanthropy. They were rejected in the very first round and didn’t understand why. Shortly after, they attended an EAG conference and told me: “Oh, I get it now.”

Here is a list of resources that I would have sent their way before their job search, had I written it up at the time. Context is a fuzzy concept, but I will try my best to give you a sense of what I mean by it. Let’s break it down into different parts.

Understanding the landscape

- How familiar you are with what the various orgs in the space are doing

- How well you understand their theories of change[2]

- How much you have networked with individuals at these orgs and in the broader community by going to EAG(x) conferences

- How familiar you are with the different risks and the threat models behind them

- How familiar you are with the different needs of the field and the theories of change behind them

- How many AI Safety (AIS) newsletters and podcasts you follow

Previous involvement in the movement

- Having volunteered for AIS initiatives

- Whether you have received support from programs such as those of Successif or High Impact Professionals, or career advice from people knowledgeable about the space

- How much you have read, written and interacted on the EA/LW forums and relevant Slack workspaces

- Having registered yourself in the relevant people directories

Understanding concepts

Whether you have come across the following concepts:

- the long reflection

- x-risk (existential risk)

- s-risk

- moral patienthood

- grand challenges

- longtermism and, yes, Effective Altruism

- scout mindset

- updating

- reasoning transparency

- leverage

- inside vs. outside view

- expected value (EV)

- ITN (importance, tractability, neglectedness) framework

- cause prioritisation (within AIS)

- existential security

- crux

- bottleneck

- recursive self-improvement

- AI takeoff

- RLHF

- p(doom)

- There are a lot more here [EA · GW], here [EA · GW] and here [? · GW] (sections 3 and 4)

Many of these concepts rest on different assumptions, and understanding them does not mean that you have to be on board with them. And don’t worry, the jargon is frustrating for other newcomers too. I have linked explanations for all of the terms above, so I hope we can still be friends.

Familiarity with thought leaders and their work

Having read books such as

- The Precipice

- Superintelligence

- The Alignment Problem

- What We Owe the Future (often referred to as WWOTF)

- The Scout Mindset

- Superforecasting

- Avoiding the Worst

- The Rationalist’s Guide to the Galaxy[3]

- Highlights from The Sequences (warning: the full series is 2,000+ pages long and is most popular among veteran readers of LessWrong)

Familiarity with the following people and how they influenced the movement (I’m probably forgetting some):

- Eliezer Yudkowsky

- Nick Bostrom

- Stuart Russell

- Max Tegmark

- Toby Ord

- William MacAskill

- Geoffrey Hinton

- Yoshua Bengio

- Elon Musk

- Robin Hanson

Having heard of, and knowing the motivations of, those who are regarded as “AI Safety’s top opponents”:

- Yann LeCun

- Marc Andreessen

- Andrew Ng

- and of course, Sam Altman

Understanding culture

- The history of the AI Safety movement and, yes, the Effective Altruism movement

- Knowledge of the FTX collapse and its impact on the movement

- Understanding why the AIS movement is trying to distance itself from EA (as well as why EAs who want to contribute to AIS are trying to distance themselves from EA)

- Considerations around how transparent organisations are with different parties about which risks they talk about

A caveat to the list above is that many of the items will be more important for AIS roles within the EA/rationalist space. As the AIS community grows, I expect this to change, and some of the items on the list will become less important.

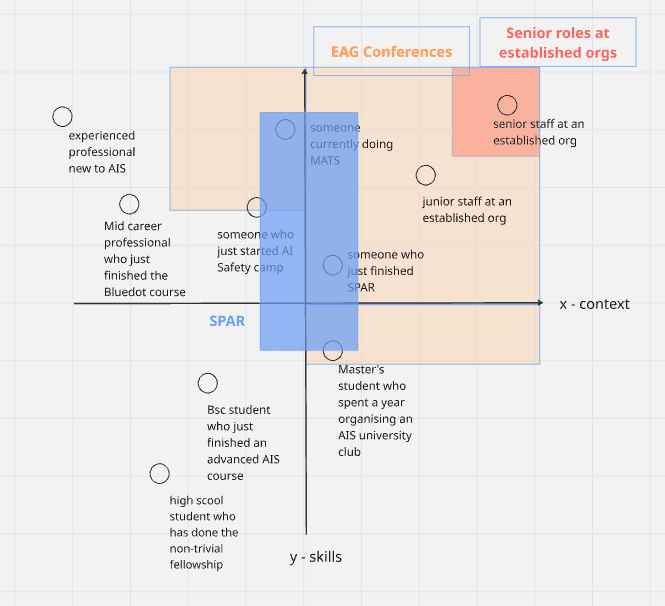

Visualising your journey in AI Safety

Think of the y-axis as the skills needed for a given role. The x-axis refers to the context people have. Different roles require varying levels of skills and context. My claim is that the closer someone is to the top right, the better positioned they are to land a job and make a big impact.[4]

Now let’s put you on the map, or rather, the different career profiles that want to contribute to AI Safety.

Now let’s also map some of the different opportunities that someone might apply to. The squares refer to the target audiences for the opportunities.

If you are an experienced professional who is new to AI Safety, this is why you don’t get far in hiring rounds. You may have the skills, but not enough context - yet.

Understand hiring practices

The current state of the AI Safety job market is nowhere near ideal. My hope is that by shedding some light on how it works, you will get a better sense of how to navigate it.

If your strategy is to only apply to open hiring rounds, such as through job ads listed on the 80,000 Hours job board, you may be cutting your chances of landing a role by up to half. It’s hard to know the exact figure, but I wouldn’t be surprised if as many as 30-50% of paid roles in the movement aren’t recruited through traditional open hiring rounds, but instead through:

- closed hiring rounds, where hiring managers draw on a small pool of candidates referred through their network

- volunteer work turning into paid work

- someone getting a small contract job through networking at a conference, after which the org sees that they are competent and decides to hire them

- people fundraising for their own projects (often starting them on a volunteer basis to demonstrate viability to funders)

Why is that?

- Open hiring rounds are time-intensive to run

  - Hiring rounds in the community tend to be really rigorous, with several rounds of interviews, (paid, timed) work tests, and day- or even week-long work trials[5]

  - This is because organisations really care about getting things right, and are willing to invest a lot in the people who end up joining them

  - They really don’t want to fire you once you are in the org, as funders also tend to care about organisational culture and management practices, so someone being fired or having a negative experience at the org can be a red flag to them

- Closed hiring rounds are less time-intensive to run

  - Given the costs outlined above, and the fact that the community is really small, hiring managers, especially for roles that require a very specific skillset, (think they) can find most of the eligible candidates through their network

  - The community is really high-trust and relies too much on personal connections[6]

  - I expect this to change gradually as the community grows, meaning that a larger share of roles will be filled through open hiring rounds

What you can do

The good news is that it’s possible to level up your context pretty fast. Based on what professionals have told me, the community is also really open and helpful, so you can get a lot of support if you know where to go and what to ask.

Networking

If the picture I painted above is accurate, you need to get out there and network, so that you can be in the right place at the right time.

- Currently, the best way to do this is at EAG(x) conferences

- Even if you are not interested in the broader EA movement, around half of the people and content at these events are focused on AI Safety, so you will have plenty to do. If you already have enough context to get into EAG conferences, consider flying in a week early to work out of a local office or coworking space. In London, this means the LISA or LEAH offices, but as far as I know, many of the other hubs have a coworking space too.

Improve your epistemics

You can start with the list of concepts and books I mentioned above. In the future, I plan to write up a proper guide, similar to an existing EA Forum post about skill levels in research engineering.

Signaling value-alignment

- The community is often criticised for being inward-looking, and in some ways this is fair.

  - One form of inward-looking-ness I’m not critical of is organisations caring about value alignment[7]

  - Here I’m not talking about anything crazy, such as having to agree with everyone on every niche topic in AI Safety (people within organisations don’t)

  - This is more about the big-picture questions that hiring managers ask themselves when evaluating a candidate, such as:

    - “Does this person sufficiently understand the risks and implications of transformative AI?”

    - “Is this person concerned about catastrophic risks? How would they prioritise working on those compared to current problems?”

    - “Have they thought deeply about timelines?”

    - “Will they fit the organisational culture?”

    - “How much will I have to argue with this person on strategy because they just ‘don’t get’ some things?”

Of course, I’m not saying that you should fake being more or less worried about AI than you actually are. While it’s tempting to conform to the views of others, especially if you are hoping to land a role working with them, it’s not worth it: you wouldn’t excel at an organisation where you feel like you can’t be 100% honest.

Team up with high-context young people

Apart from taking part in programs such as those of Successif and HIP (which have limited slots), I would like to see experienced professionals new to AI Safety team up with young professionals who are more embedded in the community but lack the experience to fundraise for ambitious projects by themselves. The closest thing we have to this at the moment is Agile for Good, a program that connects younger EA/AIS people with experienced consultants.

Be Patient and Persistent

Landing a job in AI Safety often takes way longer than in the “real world”. Manage your expectations and join smaller (volunteer) projects in the meantime to build context.

Regularly get feedback on your plans from high-context people. A good place for this is EAG(x) conferences, but you can also post in the AI Alignment Slack workspace, where people will be happy to give you feedback.

Which roles does the “context-thesis” apply to?

As I mentioned earlier, many of the items will be more important for AIS roles within the EA/rationalist space. Even within that space, context will matter more for some roles than for others.

Roles for which I think context is less important:

- AIS technical research on more empirical agendas, such as mechanistic interpretability

- Niche subfields, such as compute governance or information security

- Marketing and communications roles

- Operations

I expect it to be more important for roles in:

- Fieldbuilding

- Big-picture policy and strategy research

- Theoretical AI Safety technical research

- Fundraising (if it’s aimed at funders within the ecosystem)

- Middle and upper management, including organisational leadership

On seniority

Especially for senior roles that require a lot of context and high value alignment, I would expect hiring managers to opt for someone less experienced but with high context and value alignment, rather than risk having to argue with an experienced professional (who will often be older than them) about which AI risks are most important to mitigate.

Hiring managers will expect that, on average, it is harder to change the mind of someone older (which is probably true, even if it’s not true in your case!).

I also expect context to be less important for junior roles, as orgs have more leverage to guide a younger person in “the right direction”. At the same time, I don’t expect this to come up often, as there are a lot of high-context young people in the movement.

Conclusion

You have seen above how and why the job market is so opaque. This is neither good nor intentional; it’s just how things are for now.

I don’t want to come across as saying that we need an army of like-minded soldiers, as that’s not the case. All I intend to show is that there is value in being able to “speak the local language”. Think of context as a stepping stone that puts you in a position to then share your own knowledge with the community. We need fresh ideas and diversity of thought. Thank you for deciding to pivot your career to AI Safety, as we really need you.

Thank you to Miloš Borenović for providing valuable feedback on this article. Thanks also to Oscar for doing the same, as well as for helping with editing and publishing.

[1] As an example, BlueDot often rejects otherwise promising applicants simply because they have a bad application. Many of these people then get into the program on their third attempt. I’m not sure if that is because they have gained more context, or just because they put more effort into the application.

[2] Theories of change in the AIS space are often not public, or not even written up internally. Eh. Here is one that’s really good, though.

[3] I’m not sure how widely this is read, but it gives a good summary of the early days of the rationalist and therefore AI Safety movements.

[4] This is not meant to be a judgment about people’s intrinsic worth. It’s also not to say that being further towards the top right always means having more impact. It’s possible to have a huge influence with lower levels of context and skills if you are in the right place at the right time. Having said that, the aim, both in building the field of AI Safety and in your own career journey, is to get further and further towards the top right, as this is what will help you have more expected impact.

[5] A friend told me that an established org she was applying to flew the top two candidates out to the org’s office so they could co-work and meet the rest of the team for a week. Aside from further evaluating their skills, this also served as an opportunity to see how they got along with other staff and fit the organisational culture.

[6] Someone wrote a great post about this, but I couldn’t find it. Please share if you do!

[7] There is a good post criticising the importance of value alignment in the broader movement, but I think most of the arguments apply less to value alignment within organisations.

3 comments

comment by Parv Mahajan (ParvMahajan) · 2025-04-11T02:54:46.521Z

Think of the x-axis as the skills needed for a given role. The y-axis refers to the context people have.

This might be a typo - I think the graph shows the opposite?

comment by Richard_Kennaway · 2025-04-10T14:43:44.247Z

Familiarity with he following people and how they influenced the movement. I’m probably forgetting some.

• Eliezer Yudkowski

...

• Stuart Russel

Especially if they know how to spell all their names!

↑ reply by gergogaspar (gergo-gaspar) · 2025-04-11T09:01:10.318Z

Haha, thanks for pointing that out, what an ironic mistake! One can only do so much proofreading and still leave in some mistakes x)