Report from a civilizational observer on Earth

post by owencb · 2022-07-09T17:26:09.223Z · LW · GW · 12 comments


[translated into English by the author]

I have arrived in [2022]. There is one species, humans, that is building civilization. (A few other species exhibit potential but have not crossed the threshold of runaway economic development or cultural accumulation; it is now unlikely that they ever will unless a deliberate choice is made to allow them to do so.)

There are around 12^9.2 humans. They are organized into loose and overlapping political structures, including firms, [countries with systems of law one cannot opt out of], and [religions and hobby groups]. There is a degree of [law, treaties, and custom] constraining these groups, but there is no strong political unification, and sometimes there is significant [violence causing destruction of persons or property].

Most of the physical activity of civilization is done by machines operated by humans, although a significant minority is still done directly by humans, and a significant-and-increasing minority is done by machines operated by [rule-governed thinking-machines].

In some domains a large majority of the intellectual labour of civilization is done by thinking-machines, but these tend to be [rule-governed thinking-machines] following precise instructions laid down by humans. Most key intellectual work is still done by humans (although a lot of the communication of this is mediated by [rule-governed thinking-machines]).

In the last econoyear[1], civilization has built nontrivial [patterning thinking-machines]. And in the last economonth[2], these have been applied to human language with significant success. [Patterning thinking-machines] are now capable of doing sophisticated tasks that humans might previously have been paid to do. And since the language they learned encoded a lot of civilizational knowledge, these [patterning-thinking-machines] now implicitly contain a large fraction of civilizational knowledge (at least at a [shallow/indexing] level).

Intellectual automation

The next several economonths will likely represent a period of acceleration for civilization. It is unclear whether they will contain an emergent [post-civilization], though it is most likely that that will occur within the next 24 econoyears, and maybe much sooner. It is currently unclear what the nature of any emergent [post-civilization] will be.

The reason for acceleration is:

- They can now build [patterning thinking-machines] which are strong at general pattern recognition and generation

- Most of the intellectual labour that humans do is just these two operations, specialized to particular domains

- (Indeed most human cognition is just these two operations, applied appropriately, e.g. to pattern recognition of valid reasoning steps)

It is therefore mostly the lack of major efforts, rather than technological inability, which prevents a majority of human intellectual work being automated.

(Some human intellectual work is automation-proof for now for economic reasons. Earth’s best [patterning-thinking-machines] are still significantly slower to learn and to generalize than humans are (in terms of the number of examples they need to see), so for specialized intellectual labour the training involved would currently be prohibitive.)

Over the next few economonths we can expect a few domains to start having large swathes of low-level work automated by [patterning thinking-machines]. This is most likely in areas that give human supervisors time to intervene in cases of errors (bemusingly, the humans have fixated on having thinking-machines take over the controls of dangerously-fast mobile machines, which have very little margin for error).

Humans working in these domains will increasingly devote time to training and overseeing these systems rather than doing the work themselves. (Eventually, oversight itself may be automated, but not in the first wave of applications.) In domains where this goes well, this will unlock much more labour than is currently provided by humans — usually until the point where it is no longer a significant bottleneck. The best humans will become wealthy training systems to excellence; many other humans will see demand for their services disappear or change significantly.

Typically humans will end up with access to much better teachers, expert advice, and (non-physical) assistants. Depending on how fast these various applications are developed, solar time might start to meaningfully slow[3].

All of this will be of limited importance compared to the automation of research.

Research is a relatively hard thing to automate, but the problem will attract many top human minds. They will work to:

- Atomize the research process into steps that are within-grasp for well-trained [patterning thinking-machines]

- Record high-grade performance on these steps, to give the [patterning thinking-machines] something to emulate

- Work to supervise the output of the machines, both on their individual steps and the larger outputs of the research process[4]

(Eventually, “end-to-end” systems aimed at good research will outperform these atomized versions. But such systems will be hungrier for training data, and take longer to train, so they won’t be the first high-performing research systems we see.)

Where will Earth civilization go from there? As research is significantly automated, solar time will slow, with a solar year taking more than an economonth and then likely several economonths.

Overall, the direction of the world will be determined in significant part by the sequence in which important insights are arrived at.[5] This in turn will be determined by which research areas are automated early, and well-resourced with expert supervision and [thinking-machines].

This is not simply a matter of economic incentives, although of course those matter. A relatively small number of decision-makers (the more far-sighted researchers/entrepreneurs/investors; very wealthy individuals or institutions; top researchers and entrepreneurs) could have significant influence on the paths unfolding.

The Omohundro-completeness threshold

Around this time it will become technologically feasible to build [Omohundro-complete thinking-machines], which pursue [fully general power over the future] in service of their goals. Automating strategic thinking is of comparable technical difficulty to automating research (the major difficulties for both being holistic coherence, and evaluating highly novel ideas), but there will be less economic incentive to build [Omohundro-complete thinking-machines], so they will not appear as early as the automation of research. However, absent strong coordination to avoid building them (which humans do not presently seem capable of achieving), they will likely follow the automation of research by somewhere between 6 economonths and 6 econoyears.

This matters. At the moment the strategic actors on Earth are all humans or political bodies composed of humans. The direction of civilization depends in real part on who is steering, and the advent of [Omohundro-complete thinking-machines] could greatly expand the set of possibilities. It is very possible that some [Omohundro-complete thinking-machines] created in the early days could — via their appointed successors — end up controlling much of that part of the universe which Earth-originating thinking beings can hope to claim.

The first powerful [Omohundro-complete thinking-machines] will most likely be cobbled together from more service-like systems, but could instead be constructed deliberately or emerge from some small constituent [reward-chasing-machine] seizing control of the larger system around it. Unfortunately it is too hard to predict at this stage: what systems might be created and how; what the priorities will be of those systems that acquire power; whether power transitions will be characterized by negotiation or expropriation.

Superhuman generalization

The next major foreseeable development is when the fundamental capacity of [thinking machines] to [learn/generalize] surpasses that of humans. Within an econoyear of this point, new [perspectives/paradigms] from direct observation will be more powerful than learning [perspectives/paradigms] from humans. Research automation using such [thinking-machines] will overtake research automation based on human paradigms. Solar time will slow further. Within the next few econoyears [thinking-machines] will advance until they vastly outperform human thinkers in all important intellectual tasks. If we take as a milestone the moment when humans represent less than a 1/144 part of at least 143/144 of important areas of intellectual work, this is likely to be passed between 2 and 12 econoyears after the start of significant research automation. (It is possible that it will occur before the emergence of [Omohundro-complete thinking-machines], although that would not necessarily be stabilizing.)

It is more likely than not that Earth will see the emergence of a coherently-coordinated [post-civilization] in the few econoyears immediately preceding or immediately following this milestone.

Anthropological note

These approaching developments have spurred relatively little interest among humans. Even those who do attend generally talk of “the time of transformative AI”, and mix up features of all of the above, as though there were one transition to come and not three.

It is hard to fully account for this, since humans are certainly capable of understanding three transitions, and have enough information to deduce their existence. It may be a combination of:

- Few humans paying attention to this at all, because it is incongruent with their direct experience;

- The expected slowdown of solar time, such that individual humans who observe one transition will typically observe subsequent transitions.[6]

[1] A period of time over which the economy grows by a factor of e. One econoyear ago on Earth was 1989 in the local reckoning; two econoyears ago was 1962. Although theorists are dissatisfied with some unresolved issues in measuring the size of economies, pragmatists have won the battle over the most useful ways to discuss timelines of rising civilizations.

[2] Approximately the time over which the economy grows by one twelfth. There are twelve economonths in an econoyear (which pleasing fact informed my translation into English; although economonths and econoyears are currently much longer than solar months and years, this is unlikely to remain true for too long).

[3] i.e. relative to econotime.

[4] Since it is easier to recognise good research than to produce it; while in the end Goodhart’s Law will bite here as everywhere, there is a lot of value to be extracted before that becomes a serious problem.

[5] For example, insight into how to construct [[redacted]] before understanding how to safely structure access to this knowledge could end civilization abruptly. Or the relative degree of advancement of [Omohundro-complete thinking-machines] and insights into [direction-setting-for-thinking-machines] could determine the [goals/nature] of any subsequent emergent [post-civilization].

[6] The lifespan of an individual human has risen over their recorded history from around 1 economonth to around 3 econoyears; 12 econoyears is generally reckoned to be enough to see most civilizational transitions, at which point solar time will speed up again (until civilization approaches technological maturity).
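The econoyear and economonth definitions in the footnotes can be checked with a little arithmetic. The following is a minimal sketch, not part of the original post: it assumes smooth continuous growth within each econoyear, and the function names are made up for illustration. Only the dates (1962, 1989, 2022) and the twelve-economonths convention come from the text.

```python
import math

ECONOMONTHS_PER_ECONOYEAR = 12  # from the footnote: twelve economonths per econoyear


def implied_growth_rate(solar_years: float) -> float:
    """Average continuous growth rate g with exp(g * solar_years) == e, i.e. g = 1 / solar_years."""
    return 1.0 / solar_years


def economonth_growth_factor() -> float:
    """Growth factor over one economonth, e ** (1/12), so that twelve of them compound to e."""
    return math.exp(1.0 / ECONOMONTHS_PER_ECONOYEAR)


# Dates taken from the footnote; 2022 is the observer's arrival year.
last_econoyear = 2022 - 1989      # 33 solar years
previous_econoyear = 1989 - 1962  # 27 solar years

for label, span in [("1962-1989", previous_econoyear), ("1989-2022", last_econoyear)]:
    rate = implied_growth_rate(span)
    print(f"{label}: econoyear = {span} solar years, "
          f"economonth = {span / ECONOMONTHS_PER_ECONOYEAR:.2f} solar years, "
          f"implied growth = {rate:.1%} per solar year")

print(f"growth per economonth: {economonth_growth_factor() - 1:.1%} "
      f"(one twelfth is {1 / 12:.1%})")
```

Under these assumptions an economonth corresponds to growth of about 8.7%, slightly more than one twelfth (about 8.3%), which is consistent with the footnote's "approximately".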

12 comments


comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-07-09T22:22:14.545Z · LW(p) · GW(p)

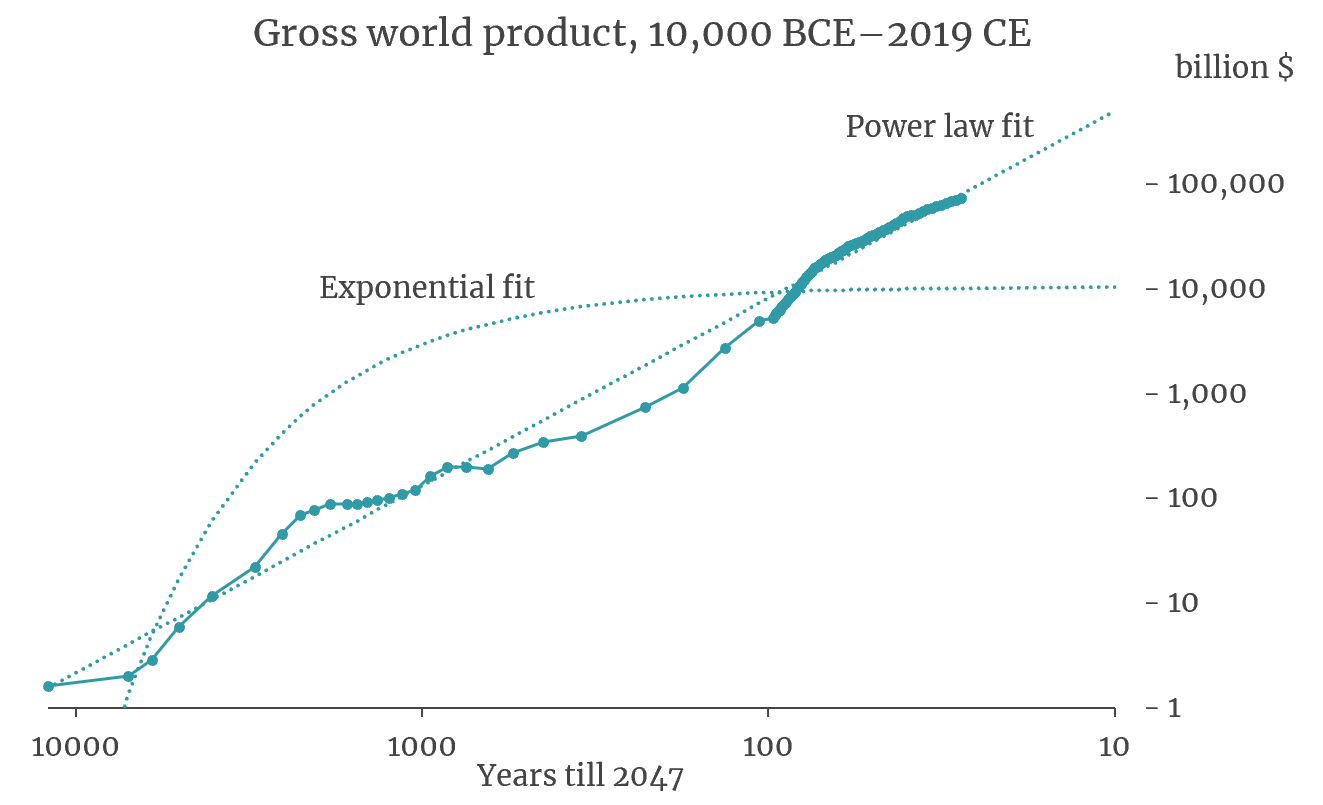

Nice post! You've probably seen these already, but for the benefit of other readers, check out this awesome graph from the highly relevant OpenPhil report by Roodman:

More discussion of it here, blue lines added by me:

The red line is real historic GWP data; the splay of grey shades that continues it is the splay of possible futures calculated by the model. The median trajectory is the black line.

I messed around with a ruler to make some rough calculations, marking up the image with blue lines as I went. The big blue line indicates the point on the median trajectory where GWP is 10x what it was in 2019. Eyeballing it, it looks like it happens around 2040, give or take a year. The small vertical blue line indicates the year 2037. The small horizontal blue line indicates GWP in 2037 on the median trajectory.

Thus, it seems that between 2037 and 2040 on the median trajectory, GWP doubles. Thus TAI arrives around 2037 on the median trajectory.
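To put the eyeballed figures in growth-rate terms, here is a rough sketch of the arithmetic. It takes the numbers read off the graph at face value (10x the 2019 level around 2040, a doubling between 2037 and 2040) and assumes steady growth within each interval; the helper names are hypothetical and nothing here comes from the Roodman report itself.

```python
import math


def annualized_growth(factor: float, years: float) -> float:
    """Constant yearly growth rate that multiplies output by `factor` over `years`."""
    return factor ** (1.0 / years) - 1.0


def continuous_rate(factor: float, years: float) -> float:
    """Equivalent continuously compounded rate, ln(factor) / years."""
    return math.log(factor) / years


# Figures eyeballed from the graph in the comment above.
doubling = annualized_growth(2.0, 2040 - 2037)   # GWP doubles in 3 years
tenfold = annualized_growth(10.0, 2040 - 2019)   # GWP grows 10x in 21 years

print(f"2037-2040 doubling: {doubling:.1%} per year "
      f"(continuous rate {continuous_rate(2.0, 3):.3f})")
print(f"2019-2040 tenfold:  {tenfold:.1%} per year on average")
```

A doubling in three years corresponds to roughly 26% annual growth, against an average of roughly 12% per year over the whole 2019 to 2040 stretch on the same trajectory.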

...

Also: You say:

> Earth’s best [patterning-thinking-machines] are still significantly slower to learn and to generalize than humans are (in terms of the number of examples they need to see), so for specialized intellectual labour the training involved would currently be prohibitive.

I'd be interested to dig into this claim more. What exactly is the claim, and what is the justification for it? If the claim is something like "For most tasks, the thinking machines seem to need 0 to 3 orders of magnitude more experience on the task before they equal human performance" then I tentatively agree. But if it's instead 6 to 9 OOMs, or even just a solid 3 OOMs, I'd say "citation needed!"

Also, note that the current models are 3+ OOMs smaller than the human brain, and it's been shown that bigger models are more data-efficient.

↑ comment by Vivek Hebbar (Vivek) · 2022-07-10T09:01:24.046Z · LW(p) · GW(p)

Some problems with the power law extrapolation for GDP:

1. The graph is for the whole world, not just the technological leading edge, which obscures the thing which is conceivably relevant (the endogenous trend in tech advancement at the leading edge)

2. The power law model is a bad fit for the GDP per capita of the first world in the last 50-100 years

3. Having built a toy endogenous model of economic growth, I see no gears-level reason to expect power law growth in our current regime. (Disclaimer: I'm not an economist, and haven't tested my model on anything.) The toy model presented in the OpenPhil report is much simpler and IMO less realistic.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-07-10T12:23:13.984Z · LW(p) · GW(p)

Agreed on points 1+2. On 3, depends on what you mean by current regime -- seems like AI tech could totally lead to much faster growth than today, and in particular faster than exponential growth. Are you modelling history as a series of different regimes, each one of which is exponential but taken together comprise power-law growth?
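One way to make "faster than exponential" concrete is to compare doubling times. The sketch below is illustrative only: it uses the textbook hyperbolic equation dy/dt = a·y² as a stand-in for super-exponential growth, which is an assumption and is neither the fitted model from the OpenPhil report nor the toy model discussed in this thread.

```python
import math


def exponential_doubling_time(g: float) -> float:
    """Doubling time under dy/dt = g * y: always ln(2) / g, whatever the current level."""
    return math.log(2.0) / g


def hyperbolic_doubling_time(a: float, y: float) -> float:
    """Doubling time under dy/dt = a * y**2, starting from level y.

    The closed form y(t) = y0 / (1 - a * y0 * t) reaches 2 * y0 at t = 1 / (2 * a * y0),
    so every doubling takes half as long as the one before it.
    """
    return 1.0 / (2.0 * a * y)


# Illustrative parameters (assumed, not taken from any dataset).
level = 1.0
for step in range(4):
    print(f"doubling {step + 1}: exponential {exponential_doubling_time(1.0):.3f}, "
          f"hyperbolic {hyperbolic_doubling_time(1.0, level):.3f}")
    level *= 2.0
```

A sequence of exponential regimes, each faster than the last, would show doubling times that drop in steps, whereas the hyperbolic curve shortens them continuously; that is the distinction the question above is probing.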

↑ comment by Vivek Hebbar (Vivek) · 2022-07-11T01:15:53.038Z · LW(p) · GW(p)

> seems like AI tech could totally lead to much faster growth than today, and in particular faster than exponential growth

Strongly agree.

> Are you modelling history as a series of different regimes, each one of which is exponential but taken together comprise power-law growth?

I am not. The model is fully continuous, and involves the variables {science, technology, population, capital}. When you run the model, it naturally gives rise to a series of "phase changes". The phase changes are smooth[1] but still quite distinct. Some of them are caused by changes in which inputs are bottlenecking a certain variable.

The phases predicted are:[2]

1. Super-exponential growth (Sci&Tech bottlenecked by labor surplus; BC to ~1700 AD (??))

2. Steady exponential growth

3. Fast exponential growth for a short period (population growth slows, causing less consumption)

4. Slow exponential growth for some time (less population growth --> less science --> less economic growth after a delay)

5. Super-exponential growth as AI replaces human researchers (population stops bottlenecking Sci&Tech as capital can be converted into intelligence)

My claim is that:

- We are in phase 4, and we don't have enough automation of research to see the beginnings of phase 5 in GDP data.

- Extrapolating GDP data tells us basically zero about when phase 5 will start. The timing can only be predicted with object-level reasoning about AI.

- Phase 4 doesn't fit the model of "growth always increases from one phase to the next". Indeed, if you look at real economic data, the first world has had lower growth in recent decades than it did previously. Hence, power law extrapolation across phases is inappropriate.

[1] I don't mean this in a mathematically rigorous way

[2] As I think about this more and compare to what actually happened in history, I'm starting to doubt my model a lot more, since I'm not sure if the timing and details of the postulated phases line up properly with real world data.

↑ comment by owencb · 2022-07-09T23:09:17.450Z · LW(p) · GW(p)

> I'd be interested to dig into this claim more. What exactly is the claim, and what is the justification for it? If the claim is something like "For most tasks, the thinking machines seem to need 0 to 3 orders of magnitude more experience on the task before they equal human performance" then I tentatively agree. But if it's instead 6 to 9 OOMs, or even just a solid 3 OOMs, I'd say "citation needed!"

No precise claim, I'm afraid! The whole post was written from a place of "OK but what are my independent impressions on this stuff?", and then setting down the things that felt most true in impression space. I guess I meant something like "IDK, seems like they maybe need 0 to 6 OOMs more", but I just don't think my impressions should be taken as strong evidence on this point.

The general point about the economic viability of automating specialized labour is about more than just data efficiency; there are other ~fixed costs for automating industries which mean small specialized industries will be later to be automated.

(It's maybe worth commenting that the scenarios I describe here are mostly not like "current architecture just scales all the way to human-level and beyond with more compute". If they actually do scale then maybe superhuman generalization happens significantly earlier in the process.)

comment by Stephen McAleese (stephen-mcaleese) · 2022-07-11T01:36:56.461Z · LW(p) · GW(p)

I think this post is really interesting because it describes what I think are the most important changes happening in the world today. I may be biased but rapid progress in the field of AI seems to be the signal among the noise today.

The difference between this post and reality (e.g. the news) is that this post seems to distill the signal without adding any distracting noise. It's not always easy to see which events happening in the world are most important.

But maybe when we look back in several decades everyone in the world will remember history the way this post describes the world today: the pre-AGI era when AGI was imminent. Maybe, looking back, this will be the clear signal without any noise.

Relevant quote from Superintelligence:

"Yet let us not lose track of what is globally significant. Through the fog of everyday trivialities, we can perceive—if but dimly—the essential task of our age."

comment by M. Y. Zuo · 2022-07-11T00:46:42.795Z · LW(p) · GW(p)

> A period of time over which the economy grows by a factor of e. One econoyear ago on Earth was 1989 in the local reckoning; two econoyears ago was 1962. Although theorists are dissatisfied with some unresolved issues in measuring the size of economies, pragmatists have won the battle over the most useful ways to discuss timelines of rising civilizations.

'Grow' in what dimension?

The world economy has certainly not grown by all measurable dimensions, or imaginable dimensions, by a factor of e since 1989.

↑ comment by owencb · 2022-07-11T08:04:46.875Z · LW(p) · GW(p)

Fair question. I just did the lazy move of looking up world GDP figures. In fact I don't think that my observers would measure GDP the same way we do. But it would be a measurement of some kind of fundamental sense of "capacity for output (of various important types)". And I'm not sure whether that has been growing faster or slower than real GDP, so the GDP figures seem a not-terrible proxy.

↑ comment by M. Y. Zuo · 2022-07-11T16:56:41.655Z · LW(p) · GW(p)

> of various important types

"Important" seems to be doing most of the work here, since even within any given society there is no broad agreement as to what falls into this category.

It's better to use a more agreed-upon measure, such as total energy production / consumption, as the Kardashev scale does.