Adversarial attacks and optimal control

post by Jan (jan-2) · 2022-05-22T18:22:49.975Z · LW · GW · 7 commentsThis is a link post for https://universalprior.substack.com/p/adversarial-attacks-and-optimal-control?s=w

Contents

TL;DR: Real talk and real estate A random environment Average surprise and optimal control An adversarial environment None 7 comments

Meta: After a fun little motivating section, this post goes deep into the mathematical weeds. This thing aims to explore the mathematical properties of adversarial attacks from first principles. Perhaps other people are not as confused about this point as I was, but hopefully, the arguments are still useful and/or interesting to some. I'd be curious to hear if I'm reinventing the wheel.

TL;DR:

- When we invest the appropriate effort, the probability of random catastrophic events tends to decrease exponentially with a rate given by the[1] rate function.

- One way of estimating the rate function is to solve an optimal control problem, where we have to determine the "least surprising" way that the catastrophic event comes about. The rate function equals the catastrophic event's surprise (in nats).

- Thus, intuitively, as we invest the effort to decrease the probability of random catastrophic events, the "difficulty" of performing an adversarial attack only increases linearly.

Real talk and real estate

Zillow is an American tech real-estate marketplace company that recently had (what the experts call) a small snafu. They decided they were done just being a marketplace and started buying up homes, completing light renovations, and then selling them with a profit. The whole thing went poorly; they bought houses too expensive and had to sell at a loss, costing the company $420 million and leading to large lay-offs.

This story is not very interesting[2] for anyone not directly involved. The reason I remember the whole affair is a Twitter thread that also caught the attention of Eliezer:

The thread proceeds to lay out how Zillow relied too much on their automatic estimates, which (ex-ante) looked good on average, but (ex-post) manifested in the worst possible way[3]. Or, in the words of the OP:

"[They mistook] an adversarial environment for a random one."

I have approximately zero knowledge about Zillow or the real estate business, so I can't comment on whether this actually is what went wrong (edit: see this comment [LW(p) · GW(p)] for a more informed take!). But the distinction between a random and an adversarial environment applies beyond the Zillow example and is relevant for research in AI Safety: from adversarial examples, via adversarial training [LW · GW], all the way to conceivable existential risk scenarios. It is the distinction between a world where it's fine to go outside because getting struck by lightning is sufficiently unlikely and a world where somebody is trying to smite you.

This post works towards achieving a mathematical understanding of what distinguishes these two worlds. Perhaps other people are not as confused about this point as I was, but hopefully, the arguments are still useful and/or interesting to some. I'll introduce a bit of extreme value theory. Then I'll demonstrate a neat connection to control theory via large deviation theory, which shows that an exponentially decreasing risk of failure translates into a linearly increasing difficulty of adversarial attacks.

A random environment

I'll go over the basics quickly: When you (i.e. Zillow) think of your environment as random, each house you buy is essentially a coin flip with a biased coin. If you make sure that

- the expected value of a deal is positive and

- that all the deals are (mostly) independent of each other,

then you're guaranteed to accrue profits eventually.

This guarantee is the law of large numbers from probability theory 101 and probably not too revolutionary for you, dear reader. But what is the probability of things going very badly? What is the probability of an extreme event or a large deviation? It should decrease (given the law of large numbers), but how fast does it decrease?

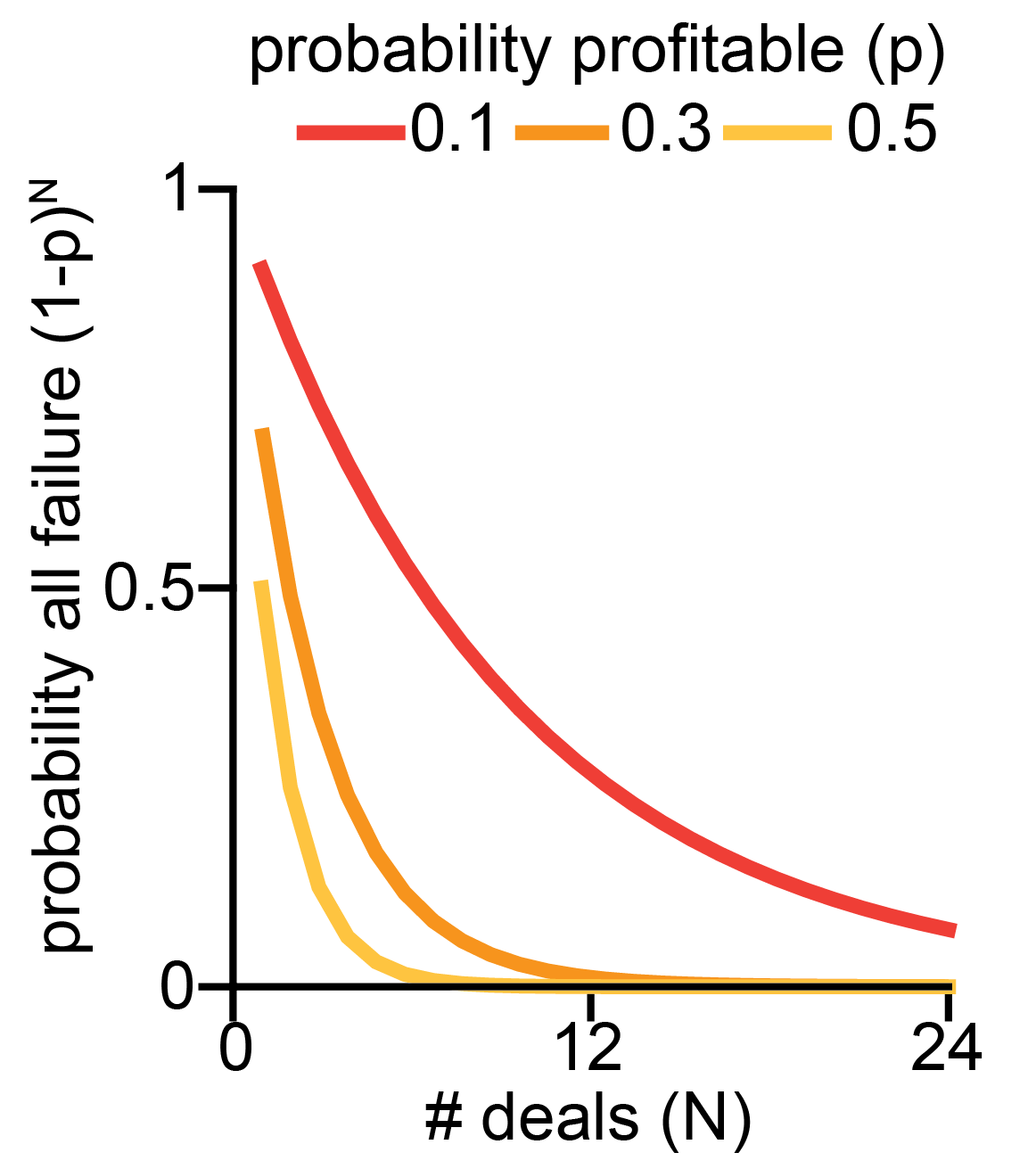

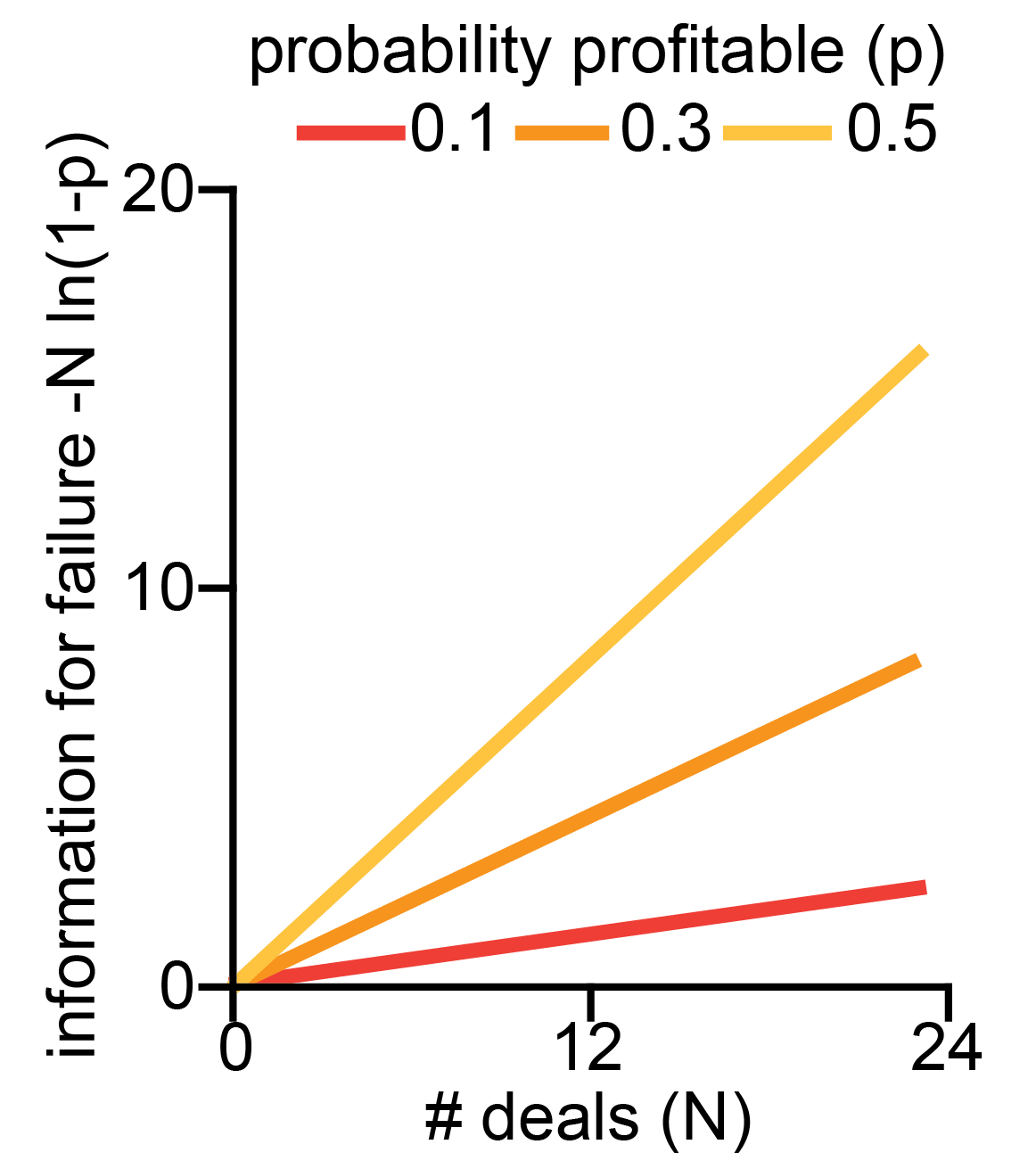

Let's continue the example of buying and selling houses and say that there is a probability that a given deal ends up profitable. The worst-case probability that all your deals go poorly is , which goes to exponentially fast as we increase the number of deals .

It turns out that this toy example generalizes a lot, and the probability that the sum of independent random variables is less than some fraction times always[4] goes down exponentially fast in :

The function is called the "rate function," and it determines the speed with which extreme events get unlikely.

How to compute the rate function[5]? That is what we'll explore in the next section. There is a "standard" way of calculating which is a bit involved and opaque[6] for my taste; I prefer a slightly non-standard derivation[7] of arriving at the rate function, which gives deeper insight into how adversarial attacks and extreme events relate.

Average surprise and optimal control

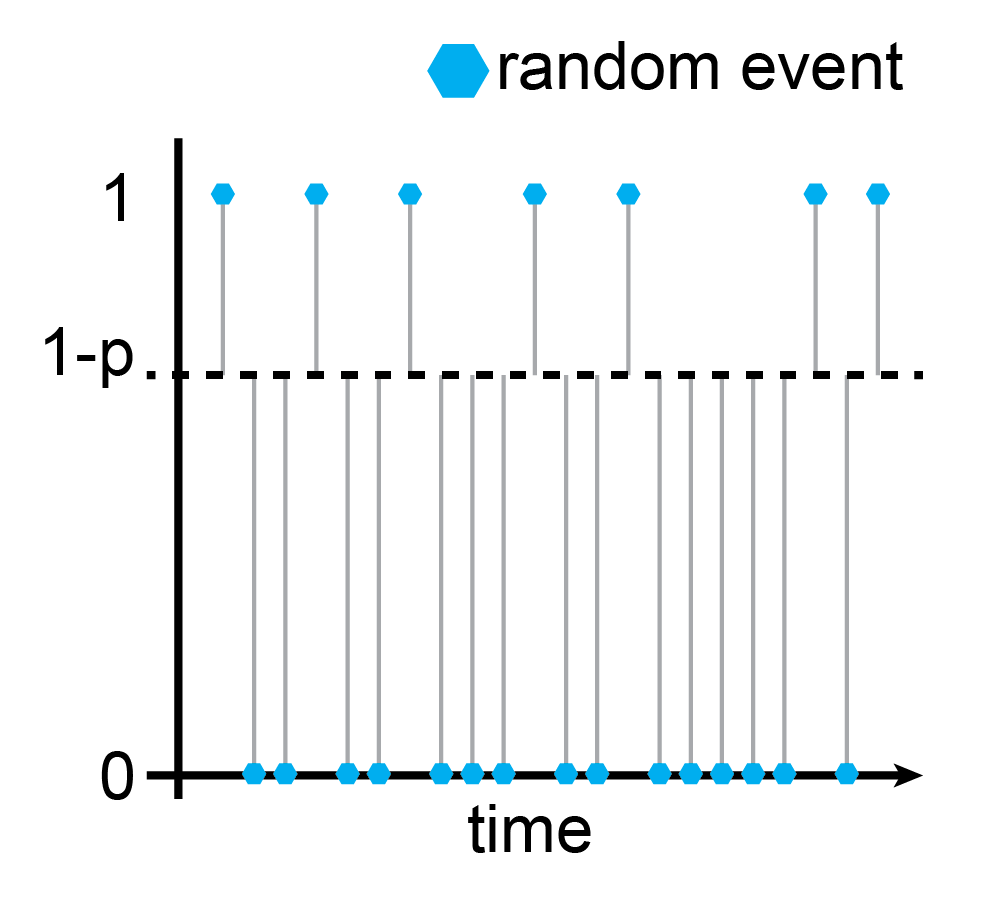

As I like to say: there is, of course, another way of looking at this. We can think of the sequence of house deals as a sequence of random events that fluctuate around the probability of failure .

For a truly random sequence of events, we can compute the average surprise by averaging the information content of all events:

where the information content of an individual event is given as

and the probability measure is a Bernoulli measure, . In the picture, the probability of a random Bernoulli event is the distance from the dashed line, . Consequently, the information content is and the average information content of the sequence is .[8]

Why am I bringing this up? It turns out that the rate function is the solution to an optimal control problem that involves the average excess surprise. In particular[9],

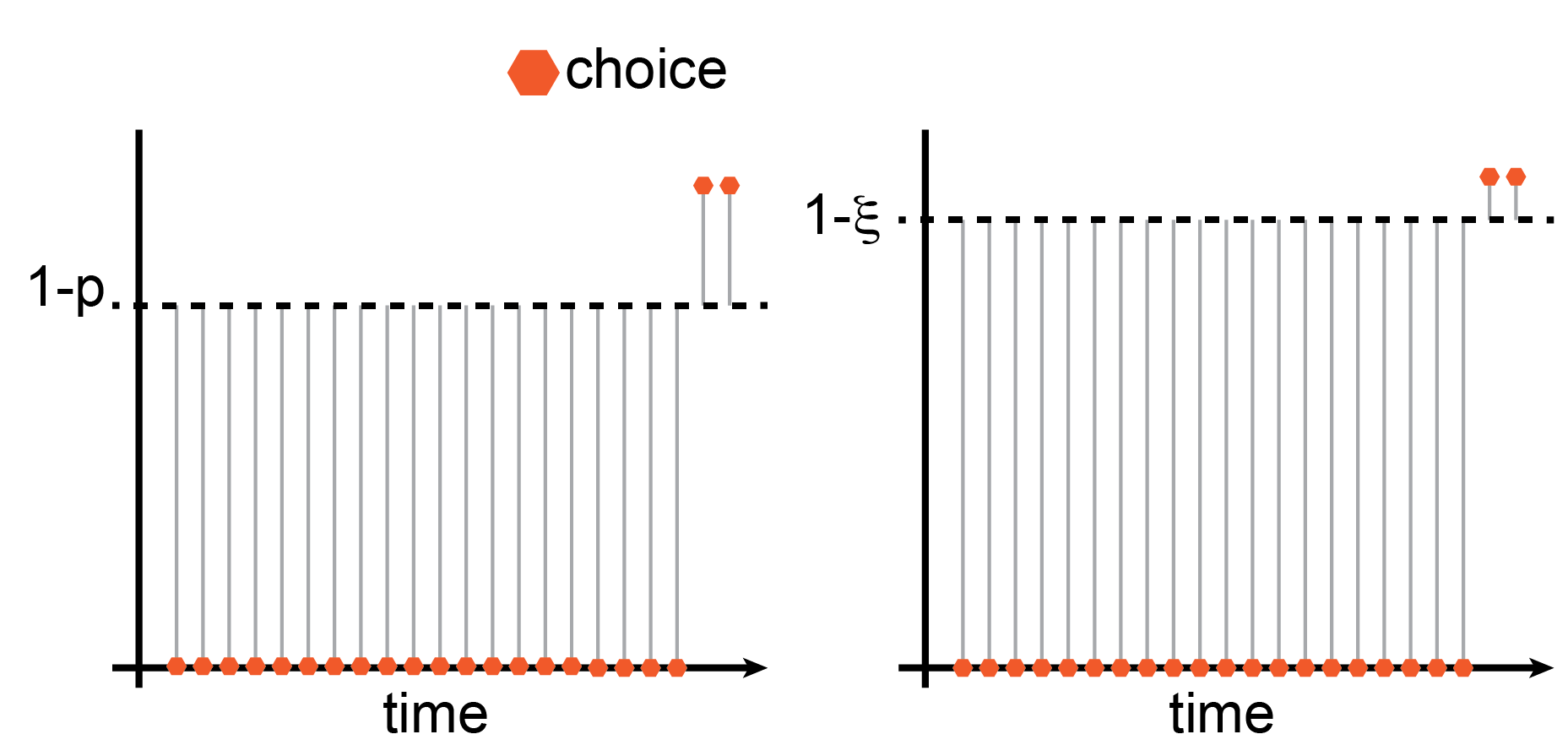

The intuition behind this equation is: If we want to know the rate function for an extreme event, we get to pick how the extreme event comes about (that's the ). The only thing we have to respect is that the extreme event does, in fact, come about (). And then we try to make it so that the average excess surprise, , is as small as possible.

Let's see how this plays out in our house deal example. We want to determine the probability that the sum of independent random variables is less than some success fraction times , , i.e. that a certain fraction of our house deals go badly.

Now we get to pick how the extreme event, , comes about. I went ahead and already did that in the illustration. I picked it so that the first deals go wrong, and only the remaining deals go fine. This configuration satisfies the constraint that and (thanks to commutativity[10]) the average excess surprise of this configuration is identical to that of all possible permutations. Therefore, this configuration's average excess surprise is already the infimum of all possible configurations that satisfy the constraint. Given the expression we wrote above for the average information content of a Bernoulli sequence, we can write the average excess surprise as

Since we know that are equal to and that are equal to , we can simplify the expression for by splitting up the sums and regrouping,

You might recognize this as the KL-divergence between two Bernoulli random variables, , one with success probability , the other with success probability . When we plug in the extreme event that all the deals go poorly, , the rate function reduces to , and the probability of this extreme event is

as it should be.

An adversarial environment

Quick recap:

- When we find ourselves in a random environment, then we can leverage the law of large numbers to push the probability of failure close to zero by trying sufficiently many times[11], .

- If we want to find out how fast the probability of failure goes to zero, we can find that out by solving an optimal control problem where we need to determine the least surprising way failure might come about, .

- The probability of failure then goes to zero exponentially fast, proportional to how surprised we would be and the number of tries, .

So far, so good, but... what is that sound in the distance[12]?

As general as the theorems mentioned above are in terms of the underlying distributions[13], they centrally rely on the assumption that the environment is random. This was not the case for Zillow, where homeowners and real estate agencies selling houses had additional information to select bad deals for Zillow systematically. Instead of pushing the risk of total failure strongly toward zero, Zillow only increasingly exposed itself to an adversary with more insight into the situation.

While the probability of complete failure, , falls exponentially, the amount of surprise required to bring it about, , scales linearly! A few things of note:

- The unit of "surprise" is nats, a measure of information. This interpretation maps only weakly onto the house buying example (where one bit of information might indicate whether a given deal is bad for Zillow). Still, it has a much more straightforward interpretation when we think about set-ups where information passes through a single channel and can be arbitrarily constrained.

- Similarly, we can interpret the expected excess surprise, , as a functional on a state space spanned by . The fact that we compute the infimum over this functional turns the problem into an instance of Hamilton's principle - the "true" evolution of the sequence of states is stationary w.r.t. the "action" given by the excess surprise. Through this lens, we're close to "intelligence as optimization power [? · GW]", which characterizes intelligence as the ability to steer reality into a small subset of possible states. But I can't quite put the pieces in the right place to make the connection click.

- Since we compute as the solution to an optimal control problem that minimizes excess surprise, it is the lower bound on the amount of surprise required. Catastrophe can also come about in more surprising ways, but highly improbable events tend to happen in the least improbable way. On the plus side, catastrophe cannot come about with less surprise, which might have applications for the set-ups with constrained communication mentioned in point 1.

- The team at Redwood Research recently released their report on their research on high-stakes alignment [AF · GW], where they trained a filter to reduce the probability of harmful output from 2.5% in the training set to 0.003% after applying the filter to 0.002% after adversarial training. While OOM reduction in failure probability feels comforting, this only helps when the adversary is not actively trying to trick the filter (from 3.6 nats to 10.8 nats of information). This is consistent with their finding that their tool-assisted search for adversarial examples was 1000x more effective than naive sampling.

These factors combined make me excited about exploring this direction further to find reliable estimates of the lower bound of input required to bring about catastrophe[14]. I'm also curious if there is more structure to the similarity between the information content of an adversarial attack and the characterization of intelligence in terms of optimization processes. If you know more about this or can point me to some literature on the topic, please let me know!

- ^

(appropriately named)

- ^

How much is $420 million again? My gut feeling is that this type of snafu happens somewhere in the world every other week.

- ^

In the same way that the maximum of a set of random variables can be overwhelmingly good in expectation, the minimum of the same set is terrible (in expectation). That's the power of choice.

- ^

Well, not always. That's the point of this post.

- ^

you ask, presumably on the edge of your seat.

- ^

(you need the logarithm of the moment generating function of your random variable, , and then you need to compute the Legendre-Fenchel transformation, .)

- ^

I don't have a reference that follows exactly the same path I choose, but I enjoy the texts by Touchette 2011 and Paninski 2006.

- ^

Absolute values are a bit icky, but since we have the we can be a bit sneaky and write instead. This now has an uncanny similarity to the Freidlin-Wentzell rate function, which can't be a coincidence.

- ^

(I recommend staring at that for a while.)

- ^

When the control problem gets more involved and the individual time points are not interchangeable this step gets a bit trickier and we have to recruit some mathematical machinery.

- ^

Given that the expected value is positive ofc.

- ^

- ^

and any additional structure you might want to slap on

- ^

Perhaps we can manage to reliably stay below that bound?

7 comments

Comments sorted by top scores.

comment by gwern · 2022-05-23T14:29:17.280Z · LW(p) · GW(p)

I have approximately zero knowledge about Zillow or the real estate business, so I can't comment on whether this actually is what went wrong.

FWIW, like the tank or Microsoft Tay or the Amazon hiring system stories, Zillow seems quite dubious as an AI-risk/bias story despite its rapid canonization into the narrative.

The current narrative seems to trace mostly to the Zillow CEO's initial analyst call where he blamed Zillow's losses on the models, saving his face although neglecting to explain how his competitors in the same markets using similar models & datasets mysteriously did so much better, which then got repeated in the online echo chamber (ever more encrusted with opinions and speculation).

But almost immediately, anonymous comments on Reddit/HN & Zillow insiders talking to Bloomberg journalists told a different story: Zillow had two sets of models, and was well-aware that the secondary price model necessarily would lead to the winner's curse & losses if they bought at the model's high bid because they'd only win the houses they were overpaying for; they bought all the houses anyway because of a failure of management, which wanted to hit sales & growth targets and used the secondary rather than primary model as an excuse to do all the buys. This risk-hungry growth-buying strategy looked good in the short-term but then failed long-term in the predictable way, as has happened to many market-makers and companies in the past, when a predictably unpredictable market fluctuation happened.

Replies from: jan-2↑ comment by Jan (jan-2) · 2022-05-23T20:36:48.015Z · LW(p) · GW(p)

Interesting, I added a note to the text highlighting this! I was not aware of that part of the story at all. That makes it more of a Moloch-example than a "mistaking adversarial for random"-example.

Replies from: gwern↑ comment by gwern · 2022-05-24T15:20:38.733Z · LW(p) · GW(p)

Yes, it is a cautionary lesson, just not the one people are taking it for; however, given the minimal documentation or transparency, there are a lot of better examples of short-term risk-heavy investments or blowing up (which are why Wall Street strictly segregates the risk-control departments from the trader and uses clawbacks etc) to tell, so the Zillow anecdote isn't really worth telling in any context (except perhaps, like the other stories, as an example of low epistemic standards and how leprechauns are born).

comment by Adam Jermyn (adam-jermyn) · 2022-05-22T22:58:26.402Z · LW(p) · GW(p)

I’m not sure I follow the concept of “the surprise required to bring about an event”. Is this saying “an optimizer must be able to target a part of phase space that is this much smaller than the overall space?”, where the amount is in units of surprise?

Replies from: jan-2↑ comment by Jan (jan-2) · 2022-05-23T09:29:27.015Z · LW(p) · GW(p)

Yes, that's a pretty fair interpretation! The macroscopic/folk psychology notion of "surprise" of course doesn't map super cleanly onto the information-theoretic notion. But I tend to think of it as: there is a certain "expected surprise" about what future possible states might look like if everything evolves "as usual", . And then there is the (usually larger) "additional surprise" about the states that the AI might steer us into, . The delta between those two is the "excess surprise" that the AI needs to be able to bring about.

It's tricky to come up with a straightforward setting where the actions of the AI can be measured in nats, but perhaps the following works as an intuition pump: "If we give the AI full, unrestricted access to a control panel that controls the universe, how many operations does it have to perform to bring about the catastrophic event?". That's clearly still not well defined (there is no obvious/privileged way that the panel should look like), but it shows that 1) the "excess surprise" is a lower bound (we wouldn't usually give the AI unrestricted access to that panel) and 2) that the minimum amount of operations required to bring about a catastrophic event is probably still larger than 1.

Replies from: adam-jermyn↑ comment by Adam Jermyn (adam-jermyn) · 2022-05-23T17:23:25.330Z · LW(p) · GW(p)

Thanks for clarifying!

Maybe the 'actions -> nats' mapping can be sharpened if it's not an AI but a very naive search process?

Say the controller can sample k outcomes at random before choosing one to actually achieve. I think that let's it get ~ln(k) extra nats of surprise, right? Then you can talk about the AI's ability to control things in terms of 'the number of random samples you'd need to draw to achieve this much improvement'.

Replies from: jan-2↑ comment by Jan (jan-2) · 2022-05-29T12:42:04.847Z · LW(p) · GW(p)

This sounds right to me! In particular, I just (re-)discovered this old post by Yudkowsky [LW · GW] and this newer post by Alex Flint [LW · GW] that both go a lot deeper on the topic. I think the optimal control perspective is a nice complement to those posts and if I find the time to look more into this then that work is probably the right direction.