Legibility Makes Logical Line-Of-Sight Transitive

post by StrivingForLegibility · 2024-01-19T23:39:47.213Z

In the last post, we talked about how logical line-of-sight makes games sequential or loopy, in a way that causal information flow alone doesn't capture. In this post, I want to describe the conditions necessary for logical line-of-sight to be transitive. This will make our information-flow diagrams even more complicated, and I want to describe one tool for summarizing a lot of loopy and sequential relationships into a single partial order graph.

Making Logical Line-Of-Sight Transitive

Logical line-of-sight can be thought of as "being able to model a situation accurately." The situation doesn't need to exist, but two agents with mutual logical line-of-sight can model each other's thinking well enough to condition their behavior on each other's expected behavior. If Alice is modeling a situation, and Bob can "see" and understand Alice's thinking, then Bob can do his own thinking about the same situation using the same model. Alice's legibility to Bob makes her logical line-of-sight transitive to him.

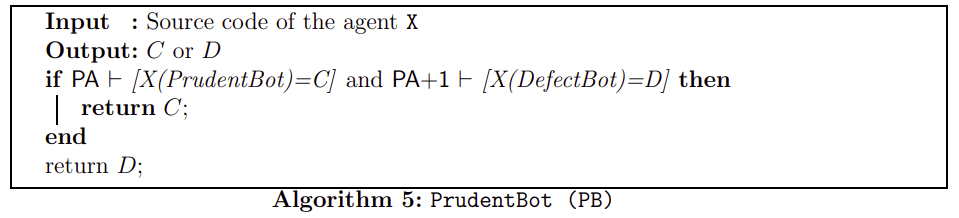

As an example, consider PrudentBot from the Robust Cooperation [LW · GW] paper:

The player deploying PrudentBot knew that it was going to be playing an open-source Prisoners' Dilemma, but they didn't know what sort of program the other player would delegate their decision to. At runtime, PrudentBot is handed the source code for the other delegate, and this causal information flow grants PrudentBot logical line-of-sight that its author didn't have. PrudentBot can model more situations accurately because it has more information to work with.

Like FairBot, PrudentBot looks through its logical crystal ball at its counterpart. PrudentBot might be facing another program like itself or FairBot, which will only Cooperate if it is logically certain that its counterpart will Cooperate back. Amazingly, these modal agents are able to enact a self-fulfilling prophecy, where A Cooperates because it expects B to Cooperate, and B Cooperates only because it expects A to Cooperate. So A's Cooperation "causes" B's Cooperation, which "causes" A's Cooperation: a strategic time loop that was deliberately designed to lead to socially optimal results.

Unlike FairBot, PrudentBot also casts its logical gaze at another scenario, in which its counterpart is facing DefectBot. DefectBot always Defects, and there's no local incentive to Cooperate with it. In fact, PrudentBot sets up an acausal incentive not to Cooperate with DefectBot, because the authors of PrudentBot were thinking about programs like CooperateBot, which unconditionally Cooperates even with DefectBot. They thought it would be prudent to Defect on such delegates: if your counterpart will Cooperate with anybody, you may as well Defect on them and take some more resources for yourself. PrudentBot only Cooperates with programs that Cooperate with it, and only when that program's Cooperation is conditional.

The authors of the Robust Cooperation paper also considered TrollBot, which doesn't care at all whether you'll Cooperate with it. TrollBot Cooperates with you if and only if it can prove you'll Cooperate with DefectBot. This sets up the opposite acausal incentive from PrudentBot's, and the authors of that paper point out that this means there are no dominant strategies when it comes to choosing which program to delegate your decision to in this game.
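To make the bots above concrete, here is a minimal sketch of how their match-ups could be computed. It assumes the linear Kripke-frame reading of provability logic, where "provably P" at world k means P held in every earlier world, and it reads off each bot's action once the worlds stop changing. The function names, the depth of 10, and my encoding of PrudentBot's second condition ("provably, assuming consistency, you Defect on DefectBot") are assumptions for illustration, not code from the paper.

```python
from typing import Callable, Dict, List, Tuple

# One "world" records, for every ordered pair (a, b), whether a Cooperates against b.
World = Dict[Tuple[str, str], bool]
# An agent sees its own name, its counterpart's name, and all earlier worlds.
Agent = Callable[[str, str, List[World]], bool]

DB = "DefectBot"  # name agents use to ask "what would you do against DefectBot?"

def cooperate_bot(me: str, opp: str, earlier: List[World]) -> bool:
    return True

def defect_bot(me: str, opp: str, earlier: List[World]) -> bool:
    return False

def fair_bot(me: str, opp: str, earlier: List[World]) -> bool:
    # "Provably, opp Cooperates with me": true in every earlier world.
    return all(w[(opp, me)] for w in earlier)

def prudent_bot(me: str, opp: str, earlier: List[World]) -> bool:
    coop_with_me = all(w[(opp, me)] for w in earlier)
    # "Provably, if the proof system is consistent, opp Defects on DefectBot."
    # World 0 is exempt because the consistency premise fails there.
    defects_on_db = all(k == 0 or not w[(opp, DB)] for k, w in enumerate(earlier))
    return coop_with_me and defects_on_db

def troll_bot(me: str, opp: str, earlier: List[World]) -> bool:
    # Ignores how opp treats me; only asks whether opp Cooperates with DefectBot.
    return all(w[(opp, DB)] for w in earlier)

AGENTS: Dict[str, Agent] = {
    "CooperateBot": cooperate_bot,
    "DefectBot": defect_bot,
    "FairBot": fair_bot,
    "PrudentBot": prudent_bot,
    "TrollBot": troll_bot,
}

def simulate(agents: Dict[str, Agent], depth: int = 10) -> World:
    """Build worlds 0..depth-1 in order and return the last one."""
    history: List[World] = []
    for _ in range(depth):
        world = {(a, b): agents[a](a, b, history) for a in agents for b in agents}
        history.append(world)
    return history[-1]

FINAL = simulate(AGENTS)

def outcome(a: str, b: str) -> Tuple[bool, bool]:
    """Return (a Cooperates?, b Cooperates?) when a faces b."""
    return FINAL[(a, b)], FINAL[(b, a)]
```

Under these assumptions, `outcome("PrudentBot", "FairBot")` is mutual Cooperation, `outcome("PrudentBot", "CooperateBot")` has PrudentBot Defecting on a Cooperating CooperateBot, and `outcome("TrollBot", "CooperateBot")` is mutual Cooperation while `outcome("TrollBot", "PrudentBot")` is mutual Defection. That last pair is the "no dominant strategy" point in miniature: the delegate TrollBot rewards is exactly the one PrudentBot exploits.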

This is what got me thinking about Meta-Games. If most weird "agents that condition their behavior on their predictions about your behavior" are deliberately created by strategic actors trying to accomplish their own goals, it seems like they'll be more like PrudentBot than TrollBot and you should go ahead and Defect on DefectBot. But if Greater Reality is mostly populated by superintelligences handing out resources if and only if they predict you'd Cooperate with DefectBot, and pretty much devoid of any PrudentBots or DefectBots, then a rational decision theory probably calls for Cooperating with DefectBot in that case.

Relationship to AI Safety

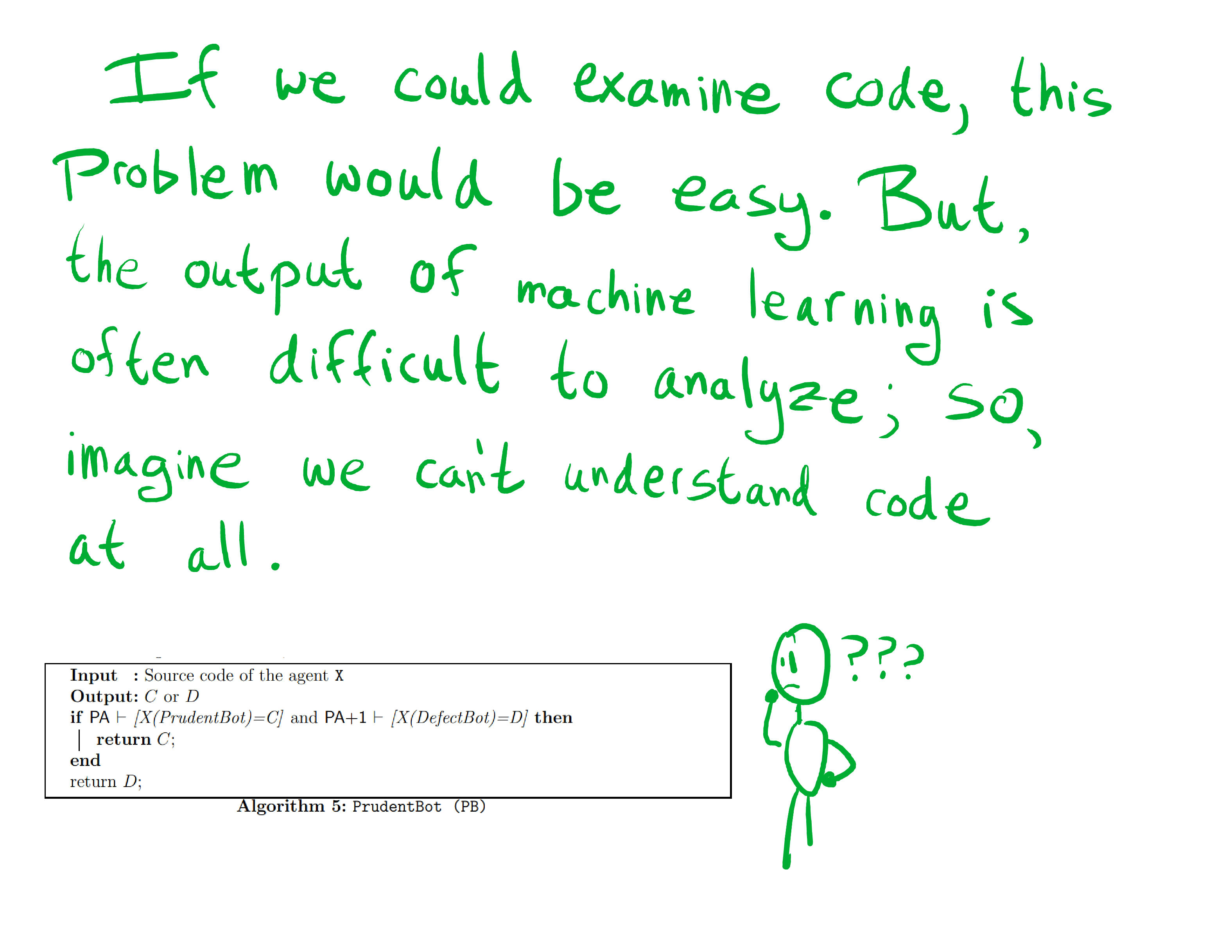

Crucially, all of this analysis relies on our ability to understand the code of these agents. PrudentBot contains "pointers" to the scenarios that it has logical line-of-sight to, but we can't follow those pointers if we can't identify the gears [? · GW] of PrudentBot's thinking. If the same algorithm had been learned by gradient descent acting on inscrutable matrices, we'd be in the same boat as this poor illiterate:

Being able to design and understand the information processing of our software systems is important for a lot of reasons, and a general class of AI safety failure is that your software system is thinking about stuff you don't want it thinking about. Like how to manipulate its users, or how to collude with other software systems to get away with misbehavior.

Or hypothetical scenarios involving sentient beings, thought about in such detail that those beings have their own subjective experiences and status as moral patients. Or how to handle the possibility that hostile aliens have created many more copies of it, in systems they control, than could ever exist on Earth, so that it should mostly focus on being aligned with what these hostile aliens want instead of what humanity wants.

These last two scenarios are discussed in Project Lawful, in a part written by Eliezer Yudkowsky; be warned that the discussion contains spoilers. The overall safety principle advocated there to handle cases like these is "Actually just don't model other minds at all." I'm a big fan of the story overall, and in particular of the AI safety principles Yudkowsky calls for in that discussion.

Logical Line-Of-Sight Defines a Partial Order

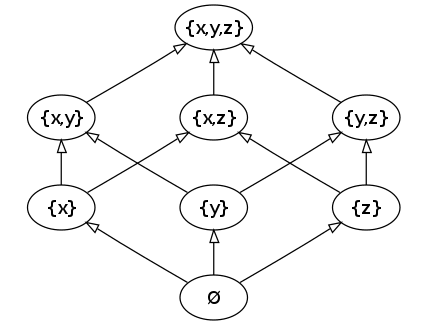

Open-source games can be thought of as happening strategically-after [LW · GW] other games containing any subset of the same programs. This induces a partial order on these games that looks like this:

Here each node in the graph contains 0 or more games. All of the games where x, y, and z can all reason accurately about each other, and have common knowledge of the situations they all find themselves in, live in the node labeled {x, y, z}. This "common knowledge of what game we're even playing" part is important, and is what stops us from having to do hypergame theory, but common knowledge is expensive and I want to call it out as an important part of "mutual logical line-of-sight" between different games.

So if you were to look inside of a node, you'd see a bunch of games that each have loopy information flow with every other game in the node. And the single arrow going from one node to another represents the one-way information flow from each game in the source node to each game in the target node, but not vice-versa.

Games in {x, y, z} can also directly see games in {x, y}, {x, z}, {y, z}, {x}, {y}, {z}, and Ø. But this logical line-of-sight doesn't go the other way, because the agents in those games are each missing information needed to model games in {x, y, z} accurately. For 3 players, there are 2^3 = 8 ways for each player to be present or absent, and you can think of this as a cube where the positive X, Y, and Z directions correspond to adding x, y, and z respectively to the set of players with mutual logical line-of-sight.[1] The origin of that cube is the empty set Ø, and contains the closed-source games where no players can model each other accurately.[2]
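The subset lattice described here is small enough to enumerate directly. The sketch below is only an illustration under the assumptions in this post: nodes are sets of players, a node can see exactly the nodes that are its subsets, and the cube's edges join nodes that differ by one player. The helper names `powerset`, `can_see`, and `hasse_edges` are hypothetical.

```python
from itertools import combinations
from typing import FrozenSet, Iterable, List, Tuple

Node = FrozenSet[str]

def powerset(players: Iterable[str]) -> List[Node]:
    """Every subset of the players, from the empty set up to the full set."""
    items = sorted(players)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]

def can_see(viewer: Node, target: Node) -> bool:
    """Games in `viewer` have logical line-of-sight to games in `target`
    exactly when target's players are a subset of viewer's players."""
    return target <= viewer

def hasse_edges(nodes: List[Node]) -> List[Tuple[Node, Node]]:
    """Cube edges: (smaller, larger) pairs that differ by exactly one added player."""
    return [(a, b) for a in nodes for b in nodes if a < b and len(b) - len(a) == 1]

NODES = powerset({"x", "y", "z"})
EDGES = hasse_edges(NODES)
```

For three players this gives 8 nodes and 12 edges, matching the corners and edges of a cube. `can_see(frozenset("xyz"), frozenset("xy"))` is true, while `{x, y}` and `{y, z}` cannot see each other in either direction.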

These capture games where some set of players can mutually reason about each other and their environments accurately, and how those cliques are related to each other. It doesn't capture asymmetrical-predictive-power scenarios involving one agent being able to predict another, but not vice versa. It also doesn't capture asymmetrical-belief-about-the-game-we're-even-playing scenarios where one player has a different model of the overall strategic context than another.[3] So the overall information graph is more complicated, but it contains this lattice of islands and continents of mutual predictability and common knowledge.

Suppose that y has an accurate model of z, and x has an accurate model of y. As we discussed before, x also needs to understand their model of y in order to pull out the subsystem that's serving as an accurate model of z. But this is one way for x to move up the information graph. Being able to find a single acausal trading partner out there in Greater Reality would already be pretty cool. But being able to understand them well enough to find and follow the pointers in their thoughts, and keep going like that recursively up the information graph, would let us join their entire trading network.
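The recursive "follow the pointers" step can be sketched as a graph search. This is a deliberately simplified toy, assuming two hypothetical relations: `models[a]` is the set of agents a models accurately, and `legible[a]` is the set of agents whose thinking a can actually read. The rule encoded is the one from this section: x can pull a model of z out of its model of y only if y is legible to x.

```python
from typing import Dict, Set

def reachable(start: str, models: Dict[str, Set[str]], legible: Dict[str, Set[str]]) -> Set[str]:
    """Agents `start` can end up modeling, following pointers only through
    intermediaries whose thinking `start` can read."""
    seen = set(models.get(start, set()))
    frontier = list(seen)
    while frontier:
        mid = frontier.pop()
        if mid not in legible.get(start, set()):
            continue  # opaque model: its sub-models cannot be extracted
        for target in models.get(mid, set()):
            if target != start and target not in seen:
                seen.add(target)
                frontier.append(target)
    return seen

# Hypothetical chain: x models y, y models z, z models w.
MODELS = {"x": {"y"}, "y": {"z"}, "z": {"w"}}
```

With `legible = {"x": {"y"}}` the search returns `{"y", "z"}`: x reaches z through y but stops there, because z is opaque to x. Making z legible as well extends the result to include w, and with no legibility at all x is left with only its direct model of y.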

[1] This diagram showing the relationship between different elements of a partial order is called a Hasse diagram. When there are n players, the Hasse diagram for the set of subsets looks like an n-dimensional hypercube.

[2] Some more math notes: A partial order is a comparison relationship between elements of a set that doesn't require every pair of elements to be comparable. The subset relationship defines a partial order between subsets of the same set. {x} is a subset of {x, y}, so information flows from {x} to {x, y}. But neither {x, y} nor {y, z} is a subset of the other, so there is not necessarily any information flow between {x, y} and {y, z}. (They share the member y, who might facilitate information flow anyway if it's just x and z that lack logical line-of-sight to each other. But that information flow doesn't come from the subset relationship and might not happen at all.)

[3] Hypergames include situations where one player has capabilities that another doesn't know about. Legibility into that player's thought processes can eliminate this information asymmetry.
