Concept Safety: The problem of alien concepts

post by Kaj_Sotala · 2015-04-17T14:09:36.499Z

In the previous post in this series, I talked about how one might get an AI to have concepts similar to those of humans. However, one would intuitively expect that a superintelligent AI might eventually become capable of entertaining far more sophisticated concepts than humans could ever have. Is that a problem?

Just what are concepts, anyway?

To answer the question, we first need to define what we mean by a "concept", and why more sophisticated concepts would be a problem.

Unfortunately, the literature has no standard definition of the term; different theorists define it differently. Machery even argues that the term "concept" doesn't refer to a natural kind, and that we should get rid of it altogether. If nothing else, this definition from Kruschke (2008) is at least amusing:

Models of categorization are usually designed to address data from laboratory experiments, so “categorization” might be best defined as the class of behavioral data generated by experiments that ostensibly study categorization.

Because I don't really have the time to survey the whole literature and try to come up with one grand theory of the subject, I will for now limit my scope and only consider two compatible definitions of the term.

Definition 1: Concepts as multimodal neural representations. I touched upon this definition in the last post, where I mentioned studies indicating that the brain seems to have shared neural representations for, e.g., the touch and the sight of a banana. Current neuroscience seems to indicate the existence of brain areas where representations from several different senses are combined into higher-level representations, and where activating such a higher-level representation in turn also activates the corresponding lower-level sensory representations. As summarized by Man et al. (2013):

Briefly, the Damasio framework proposes an architecture of convergence-divergence zones (CDZ) and a mechanism of time-locked retroactivation. Convergence-divergence zones are arranged in a multi-level hierarchy, with higher-level CDZs being both sensitive to, and capable of reinstating, specific patterns of activity in lower-level CDZs. Successive levels of CDZs are tuned to detect increasingly complex features. Each more-complex feature is defined by the conjunction and configuration of multiple less-complex features detected by the preceding level. CDZs at the highest levels of the hierarchy achieve the highest level of semantic and contextual integration, across all sensory modalities. At the foundations of the hierarchy lie the early sensory cortices, each containing a mapped (i.e., retinotopic, tonotopic, or somatotopic) representation of sensory space. When a CDZ is activated by an input pattern that resembles the template for which it has been tuned, it retro-activates the template pattern of lower-level CDZs. This continues down the hierarchy of CDZs, resulting in an ensemble of well-specified and time-locked activity extending to the early sensory cortices.
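To make the convergence/retroactivation idea a little more concrete, here is a deliberately tiny Python sketch. It is not Damasio's or Man et al.'s model: the `ConvergenceZone` class, the feature names, the overlap score and the 0.5 threshold are all assumptions made up for illustration. A zone stores a template over lower-level features; if an input overlaps the template enough (convergence), the zone reinstates the whole template (retroactivation), so seeing something yellow and curved also switches on the touch and smell features of "banana". Only a single level is shown; a real hierarchy would stack many such zones, each defined over the outputs of the level below.

```python
from dataclasses import dataclass


@dataclass
class ConvergenceZone:
    """Toy convergence-divergence zone (hypothetical, for illustration only).

    `template` maps lower-level feature names to activation levels in [0, 1]."""
    name: str
    template: dict[str, float]
    threshold: float = 0.5

    def match(self, activity: dict[str, float]) -> float:
        """Convergence: how much of the template is present in the input (0 to 1)."""
        hits = sum(min(activity.get(f, 0.0), w) for f, w in self.template.items())
        return hits / sum(self.template.values())

    def retroactivate(self, activity: dict[str, float]) -> dict[str, float]:
        """Divergence: if the input resembles the template, reinstate the full template."""
        completed = dict(activity)
        if self.match(activity) < self.threshold:
            return completed  # not recognized: leave lower-level activity unchanged
        for feature, weight in self.template.items():
            completed[feature] = max(completed.get(feature, 0.0), weight)
        return completed


banana = ConvergenceZone(
    "banana",
    {"visual:yellow": 1.0, "visual:curved": 1.0, "touch:smooth": 1.0, "smell:sweet": 1.0},
)

# Only visual input is present...
seen = {"visual:yellow": 1.0, "visual:curved": 1.0}
print(banana.match(seen))          # 0.5
# ...but retroactivation also fills in the touch and smell features.
print(banana.retroactivate(seen))
```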

On this account, my mental concept for "dog" consists of a neural activation pattern making up the sight, sound, etc. of some dog: either a generic prototypical dog or some more specific dog. The pattern is likely not limited to sensory information, either, but may also be associated with motor programs related to dogs, such as the program for throwing a ball for the dog to fetch. One version of this hypothesis, the Perceptual Symbol Systems (PSS) account, calls such multimodal representations simulators, and describes them as follows (Niedenthal et al. 2005):

A simulator integrates the modality-specific content of a category across instances and provides the ability to identify items encountered subsequently as instances of the same category. Consider a simulator for the social category, politician. Following exposure to different politicians, visual information about how typical politicians look (i.e., based on their typical age, sex, and role constraints on their dress and their facial expressions) becomes integrated in the simulator, along with auditory information for how they typically sound when they talk (or scream or grovel), motor programs for interacting with them, typical emotional responses induced in interactions or exposures to them, and so forth. The consequence is a system distributed throughout the brain’s feature and association areas that essentially represents knowledge of the social category, politician.

The inclusion of such "extra-sensory" features helps explain how even abstract concepts could fit this framework: for example, one's understanding of the concept of a derivative might be partially linked to the procedural programs one has developed while computing derivatives. For a more detailed hypothesis of how abstract mathematics may emerge from basic sensory and motor programs and concepts, I recommend Lakoff & Núñez (2001).
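A similarly rough sketch of the simulator idea from Niedenthal et al.: a category integrates the modality-specific content of the instances it has seen, and later items are identified by how well they match. Representing this as a running mean of feature vectors (a prototype) with nearest-prototype identification is a simplifying assumption made only for this sketch, not something the PSS account commits to; the class, function, feature names and numbers are all invented.

```python
import math


class Simulator:
    """Toy stand-in for a PSS 'simulator': keeps a running average (prototype)
    of the multimodal feature vectors of every instance seen so far."""

    def __init__(self, name: str, n_features: int):
        self.name = name
        self.count = 0
        self.prototype = [0.0] * n_features

    def integrate(self, instance: list[float]) -> None:
        # Incremental mean: prototype += (instance - prototype) / count
        self.count += 1
        self.prototype = [
            p + (x - p) / self.count for p, x in zip(self.prototype, instance)
        ]

    def distance(self, item: list[float]) -> float:
        return math.dist(self.prototype, item)


def identify(item: list[float], simulators: list[Simulator]) -> str:
    """Identify an item as an instance of the category with the closest prototype."""
    return min(simulators, key=lambda s: s.distance(item)).name


# Hypothetical features: [wears_suit (visual), gives_speeches (auditory),
#                         barks (auditory), urge_to_pet (motor/emotional)]
politician = Simulator("politician", 4)
for seen in ([1.0, 0.8, 0.0, 0.0], [0.9, 1.0, 0.0, 0.0]):
    politician.integrate(seen)

dog = Simulator("dog", 4)
for seen in ([0.0, 0.0, 1.0, 1.0], [0.0, 0.1, 0.9, 0.8]):
    dog.integrate(seen)

print(identify([0.8, 0.9, 0.0, 0.1], [politician, dog]))  # politician
```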

Definition 2: Concepts as areas in a psychological space. This definition, while compatible with the previous one, looks at concepts more "from the inside". Gärdenfors (2000) defines the basic building blocks of a psychological conceptual space to be various quality dimensions, such as temperature, weight, brightness, pitch, and the spatial dimensions of height, width, and depth. These are psychological in the sense of being derived from our phenomenal experience of certain kinds of properties, rather than from the way in which those properties might exist in some objective reality.

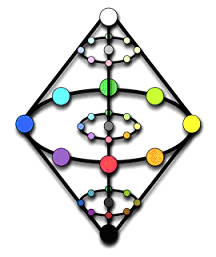

For example, one way of modeling the psychological sense of color is via a color space defined by the quality dimensions of hue (represented by the familiar color circle), chromaticness (saturation), and brightness. Gärdenfors describes the latter two dimensions as follows:

The second phenomenal dimension of color is chromaticness (saturation), which ranges from grey (zero color intensity) to increasingly greater intensities. This dimension is isomorphic to an interval of the real line. The third dimension is brightness which varies from white to black and is thus a linear dimension with two end points. The two latter dimensions are not totally independent, since the possible variation of the chromaticness dimension decreases as the values of the brightness dimension approach the extreme points of black and white, respectively. In other words, for an almost white or almost black color, there can be very little variation in its chromaticness. This is modeled by letting the chromaticness and brightness dimensions together generate a triangular representation ... Together these three dimensions, one with circular structure and two with linear, make up the color space. This space is often illustrated by the so-called color spindle.
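The triangular constraint in the quote can be written down directly. The sketch below assumes that brightness and chromaticness are both scaled to [0, 1] and that the maximum chromaticness falls off linearly towards black and white; the exact shape of the boundary is an assumption, since the quote only says it is triangular. Hue is treated as an angle, so the three dimensions together trace out a double cone, i.e. a spindle. The function names are hypothetical.

```python
import math


def max_chromaticness(brightness: float) -> float:
    """Assumed linear triangle: full range at mid-brightness, none at black (0) or white (1)."""
    return 1.0 - abs(2.0 * brightness - 1.0)


def to_spindle(hue_deg: float, chroma: float, brightness: float) -> tuple[float, float, float]:
    """Map (hue, chromaticness, brightness) to a point in a double-cone 'spindle'.

    Hue is an angle around the grey axis, chromaticness is the distance from that
    axis, and brightness is the height. Combinations outside the spindle are rejected."""
    if not 0.0 <= brightness <= 1.0:
        raise ValueError("brightness must be in [0, 1]")
    # Small tolerance so values exactly on the boundary survive floating-point rounding.
    if not 0.0 <= chroma <= max_chromaticness(brightness) + 1e-12:
        raise ValueError("chromaticness too high for this brightness")
    theta = math.radians(hue_deg % 360.0)
    return (chroma * math.cos(theta), chroma * math.sin(theta), brightness)


print(to_spindle(240, 0.8, 0.5))   # mid-brightness, fairly saturated: allowed
try:
    to_spindle(240, 0.8, 0.95)     # almost white: max chromaticness is only about 0.1
except ValueError as err:
    print("rejected:", err)
```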

This kind of representation differs from the physical representation of color in terms of wavelength, where hue corresponds mostly to the wavelength of the light. A wavelength representation of hue would be linear, but due to the properties of the human visual system, the psychological representation of hue is circular.
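The difference between a circular and a linear dimension shows up as soon as you measure distances. Assuming hues are given as angles in degrees on the color circle, with 0 and 360 being the same hue (an assumed convention, for illustration), two hues just either side of 0 are close together on the circle but nearly as far apart as possible if the same numbers are treated as points on a line:

```python
def hue_distance(h1: float, h2: float) -> float:
    """Distance between two hues on the color circle, in degrees (0 to 180)."""
    d = abs(h1 - h2) % 360.0
    return min(d, 360.0 - d)


def linear_distance(x1: float, x2: float) -> float:
    """Distance between two values on an ordinary linear dimension."""
    return abs(x1 - x2)


print(hue_distance(350, 10))     # 20.0
print(linear_distance(350, 10))  # 340
```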

Gärdenfors defines two quality dimensions to be integral if a value cannot be given for an object on one dimension without also giving it a value for the other dimension: for example, an object cannot be given a hue value without also giving it a brightness value. Dimensions that are not integral with each other are separable. A conceptual domain is a set of integral dimensions that are separable from all other dimensions: for example, the three color-dimensions form the domain of color.
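Gärdenfors's definition suggests a simple way to recover domains from a list of which dimensions are integral with which. The sketch below assumes that integrality links chain together, so that a domain is a connected component of the "integral with" relation; it also treats pitch and loudness as an integral pair purely as an illustrative input. The function name and inputs are hypothetical.

```python
def find_domains(dimensions: list[str], integral_pairs: list[tuple[str, str]]) -> list[set[str]]:
    """Group dimensions into domains: sets of integral dimensions that are
    separable from all other dimensions (connected components of the relation)."""
    neighbours: dict[str, set[str]] = {d: set() for d in dimensions}
    for a, b in integral_pairs:
        neighbours[a].add(b)
        neighbours[b].add(a)

    seen: set[str] = set()
    domains = []
    for start in dimensions:
        if start in seen:
            continue
        component: set[str] = set()
        stack = [start]
        while stack:  # depth-first search over integrality links
            d = stack.pop()
            if d in component:
                continue
            component.add(d)
            stack.extend(neighbours[d] - component)
        seen |= component
        domains.append(component)
    return domains


dims = ["hue", "chromaticness", "brightness", "pitch", "loudness", "weight"]
pairs = [("hue", "brightness"), ("chromaticness", "brightness"), ("pitch", "loudness")]
print(find_domains(dims, pairs))  # three domains: color, tone, weight
```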

From these definitions, Gärdenfors develops a theory of concepts where more complicated conceptual spaces can be formed by combining lower-level domains. Concepts, then, are particular regions in these conceptual spaces: for example, the concept of "blue" can be defined as a particular region in the domain of color. Notice how well this fits the models discussed under the previous definition: there, too, more complicated concepts were made possible by combining basic neural representations, e.g. those for different sensory modalities.
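Concepts as regions can be sketched as membership tests. In the following, a point in a combined conceptual space is a dict of domains, each holding its own dimension values, and a concept is satisfied when the point falls inside the required region in every domain it mentions. The boundaries of "blue" and of the hypothetical size domain are invented for illustration, and the simple box-shaped regions are a simplification made only for this sketch, not Gärdenfors's account of how regions are shaped.

```python
from typing import Callable

Domain = dict[str, float]           # dimension name -> value
Point = dict[str, Domain]           # domain name -> values in that domain
Region = Callable[[Domain], bool]   # membership test within one domain


def concept(**regions: Region) -> Callable[[Point], bool]:
    """Combine per-domain regions into a concept over the combined space."""
    def contains(point: Point) -> bool:
        return all(
            name in point and region(point[name]) for name, region in regions.items()
        )
    return contains


# Hypothetical region boundaries, for illustration only.
def blue(color: Domain) -> bool:
    return 200 <= color["hue"] <= 260 and color["chromaticness"] >= 0.3


def hand_sized(size: Domain) -> bool:
    return 5 <= size["width_cm"] <= 12


is_blue = concept(color=blue)
is_hand_sized_blue = concept(color=blue, size=hand_sized)

ball = {"color": {"hue": 230, "chromaticness": 0.7, "brightness": 0.5},
        "size": {"width_cm": 8.0}}
print(is_blue(ball), is_hand_sized_blue(ball))  # True True
```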

The different quality dimensions might themselves originate from the specific properties of the different simulators, as described in PSS theory.

Thus definition #1 lets us talk about what a concept might "look like from the outside", while definition #2 describes what the same concept might "look like from the inside".

Interestingly, Gärdenfors hypothesizes that much of the work involved in learning new concepts consists of learning new quality dimensions to fit into one's conceptual space, and that once this is done, all that remains is the comparatively much simpler task of dividing up the new domain to match the examples seen.

For example, consider the (phenomenal) dimension of volume. The experiments on "conservation" performed by Piaget and his followers indicate that small children have no separate representation of volume; they confuse the volume of a liquid with the height of the liquid in its container. It is only at about the age of five years that they learn to represent the two dimensions separately. Similarly, three- and four-year-olds confuse high with tall, big with bright, and so forth (Carey 1978).
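The division of labor Gärdenfors describes can be illustrated with the volume example. Once the right dimension is available, "learning the concept" from labelled examples can be as easy as finding a single cut point; with only the wrong dimension, no cut point works. The glasses and measurements below are invented, and the single-threshold check is just a stand-in for "dividing up the domain", not a model of how children actually learn.

```python
def threshold_separable(values: list[float], labels: list[str]) -> bool:
    """True if a single cut point on this dimension cleanly splits two labels."""
    pairs = sorted(zip(values, labels))
    for (v1, l1), (v2, l2) in zip(pairs, pairs[1:]):
        if v1 == v2 and l1 != l2:  # identical values with different labels
            return False
    seq = [label for _, label in pairs]
    label_changes = sum(1 for a, b in zip(seq, seq[1:]) if a != b)
    return label_changes <= 1


# Hypothetical glasses of juice: (height of liquid in cm, volume in ml, label)
glasses = [
    (4.0, 150.0, "less"),
    (5.0, 400.0, "more"),   # short, wide glass
    (10.0, 200.0, "less"),  # tall, narrow glass
    (12.0, 500.0, "more"),
]
heights = [h for h, _, _ in glasses]
volumes = [v for _, v, _ in glasses]
labels = [lab for _, _, lab in glasses]

print(threshold_separable(heights, labels))  # False: height alone can't do it
print(threshold_separable(volumes, labels))  # True: one cut on volume suffices
```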

The problem of alien concepts

With these definitions of concepts, we can now consider what problems would follow if we started off with a very human-like AI that had the same concepts as we do, but which then expanded its conceptual space to allow for entirely new kinds of concepts. This could happen if it self-modified to gain new kinds of sensory or thought modalities that it could associate its existing concepts with, thus developing new kinds of quality dimensions.

An analogy helps demonstrate this problem: suppose that you're operating in a two-dimensional space, where a rectangle has been drawn to mark a certain area as "forbidden" or "allowed". Say that you're an inhabitant of Flatland. But then you suddenly become aware that actually, the world is three-dimensional, and has a height dimension as well! That raises the question of how the "forbidden" or "allowed" area should be understood in this new three-dimensional world. Do the walls of the rectangle extend infinitely in the height dimension, or only some certain distance? If only a certain distance, does the rectangle have a "roof" or "floor", or can you just enter (or leave) the rectangle from the top or the bottom? There doesn't seem to be any clear way to tell.
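The ambiguity can be made concrete: several different three-dimensional rules agree perfectly with the original rectangle everywhere on the plane, yet disagree as soon as height is non-zero. The rectangle's coordinates, the three candidate readings and the 3-unit wall height are all hypothetical.

```python
import itertools

X_MIN, X_MAX, Y_MIN, Y_MAX = 0.0, 10.0, 0.0, 5.0  # hypothetical rectangle


def inside_rectangle(x: float, y: float) -> bool:
    """The original two-dimensional rule."""
    return X_MIN <= x <= X_MAX and Y_MIN <= y <= Y_MAX


# Three candidate readings of the same rule in three dimensions.
def infinite_walls(x: float, y: float, z: float) -> bool:
    return inside_rectangle(x, y)  # height is simply ignored


def box_with_roof(x: float, y: float, z: float, height: float = 3.0) -> bool:
    return inside_rectangle(x, y) and 0.0 <= z <= height


def flat_sheet(x: float, y: float, z: float) -> bool:
    return inside_rectangle(x, y) and z == 0.0  # the area has no thickness at all


readings = [infinite_walls, box_with_roof, flat_sheet]

# On the familiar plane (z = 0) every reading gives the same verdict...
plane = itertools.product(range(-2, 13), range(-2, 8))
print(all(len({r(x, y, 0.0) for r in readings}) == 1 for x, y in plane))  # True

# ...but above the plane they come apart.
print([r(5.0, 2.0, 1.0) for r in readings])    # [True, True, False]
print([r(5.0, 2.0, 100.0) for r in readings])  # [True, False, False]
```

Nothing in the two-dimensional data distinguishes these readings; choosing between them requires information the Flatlander never had.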

As a historical curiosity, this dilemma actually kind of really happened when airplanes were invented: could landowners forbid airplanes from flying over their land, or was the ownership of the land limited to some specific height, above which the landowners had no control? Courts and legislation eventually settled on the latter answer. A more AI-relevant example might be if one were trying to limit the AI with rules such as "stay within this box here", and the AI then gained an intuitive understanding of quantum mechanics, which might allow it to escape from the box without violating the rule as interpreted in its new concept space.

More generally, if your concepts previously had N dimensions and now have N+1, you might find something that fulfills all the previous criteria while still being different from what we would prefer if we knew about the (N+1)th dimension.

In the next post, I will present some (very preliminary and probably wrong) ideas for solving this problem.

Next post in series: What are concepts for, and how to deal with alien concepts.

7 comments

comment by James_Miller · 2015-04-17T19:31:24.297Z

As a historical curiosity, this dilemma actually kind of really happened when airplanes were invented: could landowners forbid airplanes from flying over their land, or was the ownership of the land limited to some specific height, above which the landowners had no control?

I think a similar issue arose with satellites and whether you need a country's permission to fly over it. As best I can remember, Glenn Reynolds wrote about this, but I don't have a cite. You might also want to look at property law, where there are always questions of how far property rights extend and what constitutes unlawful interference with them.

comment by ChristianKl · 2015-04-17T14:12:25.364Z

When it comes to humans learning new concepts, I find the concept of phenomenological primitives very important. It seems damn hard to integrate new ones, but not impossible.

↑ comment by Kaj_Sotala · 2015-04-17T14:40:14.054Z

Great article, thanks!

comment by RyanCarey · 2015-04-17T15:33:56.400Z

Thanks for another interesting post.

Say that you're an inhabitant of Flatland. But then you suddenly become aware that actually, the world is three-dimensional, and has a height dimension as well! That raises the question of, how should the "forbidden" or "allowed" area be understood in this new three-dimensional world?

This is an interesting question. I know that you plan to suggest some ideas in your next post, but let me pre-empt that with some alternatives:

i) It's underspecified: if your training set has n dimensions and your test set has n+1 dimensions, then you don't have any infallible way to learn the relationship of that (n+1)th dimension with the labels.

ii) It's underspecified, but we can guess anyway: try extending the walls up infinitely (i.e. projecting the (n+1)-dimensional space down to the familiar plane), make predictions for some points in the (n+1)-dimensional space, and see how accurate they turn out to be. Check whether this new dimension is correlated with any of the existing dimensions. If so, maybe you can collapse the collinear axes into just one, and again test the results. The problem with these kinds of suggestions is that they require at least some labelled data in the higher-dimensional space on which to test your extrapolations.

iii) Model some ideal reasoning agent discovering this new dimension, and behave as it would behave.

iv) Find a preferable abstraction: in some Occam's Razor-esque sense, there should be a 'simplest' abstraction that can make sense of the new world without diverging too far from your existing priors. Which information-theoretic tool to use here is unclear to me. Would AIXI/Kolmogorov complexity make sense here?

Anyhow, will be interested to see where this leads.

↑ comment by PyryP · 2015-04-18T17:18:08.845Z

I think we have to be really careful when bringing AIXI/Kolmogorov complexity to the discussion. My strong intuition is that AIXI is specifically the type of alien intelligence we want to avoid here. Most programming languages have very short programs that behave in wild and complex ways when analyzed from a human perspective. To an AIXI mind based on those programming languages this kind of behavior is by definition simple and preferred by Occam's Razor. The generalizations to n+1 dimensions that this kind of an intelligence makes are almost guaranteed to be incomprehensible and totally alien to a human mind.

I'm not saying that information theory is a bad approach in itself. I'm just saying that we have to be really careful about what kind of primitives we give our AI to reason with. It's important to remember that even though human concepts are only a constant factor away from concepts based on Turing machines, those constant factors are huge, and the behavior and reasoning of an information-theoretic mind are always exponentially dominated by what it finds simple in its own terms.

comment by Linda Linsefors · 2022-06-20T11:22:04.502Z

(Carey 1978)

Do you have a link to this citation?

I don't get any obviously correct result on Google Scholar.

↑ comment by Kaj_Sotala · 2022-06-20T13:02:51.337Z

Looks like the claim was from Gärdenfors's book, which gives the cites:

Carey, S. (1985). Conceptual change in childhood. Cambridge, MA: MIT Press.

Clark, E. V. (1972). On the child’s acquisition of antonyms in two semantic fields. Journal of Verbal Learning and Verbal Behavior, 11, 750–758.

(no idea why I had a 1978 in my post)