The next AI winter will be due to energy costs

post by hippke · 2020-11-24T16:53:49.923Z · LW · GW · 8 comments

Summary: We are 3 orders of magnitude from the Landauer limit (calculations per kWh). After that, progress in AI cannot come from throwing more compute at known algorithms. Instead, new methods must be developed. This may cause another AI winter, where the rate of progress decreases.

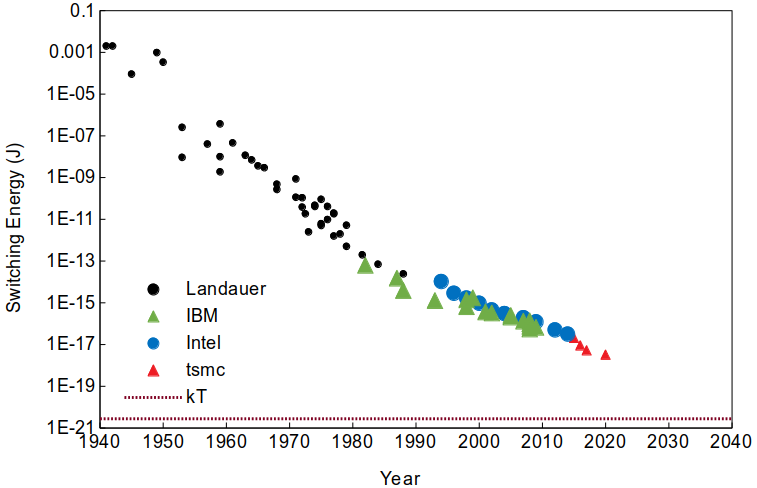

Over the last 8 decades, the energy efficiency of computers has improved by 15 orders of magnitude. Chips manufactured in 2020 feature 16 bn transistors on a 100 mm² area. The switching energy per transistor is only about $3\times10^{-18}$ J (see Figure). This remarkable progress brings us close to the theoretical limit of energy consumption for computations, the Landauer principle: "any logically irreversible manipulation of information, such as the erasure of a bit or the merging of two computation paths, must be accompanied by a corresponding entropy increase in non-information-bearing degrees of freedom of the information-processing apparatus or its environment".

Figure: Switching energy per transistor over time. Data points from Landauer (1988), Wong et al. (2020), own calculations.

The Landauer limit, $E = k_B T \ln 2$, is about $3\times10^{-21}$ J per operation at room temperature. Compared to this, 2020 chips (TSMC 5 nm node) consume 1,175 times as much energy. After improving by 15 orders of magnitude, we are getting close to the limit: only 3 orders of magnitude of improvement are left. A computation that costs 1,000 USD in energy today may cost as little as 1 USD in the future (assuming the same price in USD per kWh). However, further order-of-magnitude improvements of classical computers are forbidden by physics.
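As a rough check on these numbers, the sketch below evaluates $k_B T \ln 2$ and the switching energy implied by the 1,175x factor. The choice of 300 K for "room temperature" and the variable names are assumptions made for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def landauer_limit_joules(temperature_kelvin: float = 300.0) -> float:
    """Minimum energy to erase one bit: k_B * T * ln(2)."""
    return K_B * temperature_kelvin * math.log(2)

limit = landauer_limit_joules()        # assumption: room temperature = 300 K
ratio_2020 = 1175                      # factor quoted in the post for the 5 nm node
switching_energy = ratio_2020 * limit  # implied switching energy per transistor
orders_left = math.log10(ratio_2020)   # remaining headroom in orders of magnitude

print(f"Landauer limit at 300 K:       {limit:.2e} J")
print(f"Implied 2020 switching energy: {switching_energy:.2e} J")
print(f"Orders of magnitude left:      {orders_left:.2f}")
```

Under these assumptions the limit comes out near $2.9\times10^{-21}$ J and the remaining headroom is just over 3 orders of magnitude.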

At the moment, AI improves rapidly simply because current algorithms yield significant improvements when increasing compute. It is often better to double the compute than to work on improving the algorithm. However, compute prices will decrease less rapidly in the future. Then, AI will need better algorithms. If these cannot be found as rapidly as compute helped in the past, AI will not grow on the same trajectory anymore. Progress slows. Then, a second AI winter can happen.

As a practical example, consider the training of GPT-3, which required $3.14\times10^{23}$ FLOPs. Performed on V100 GPUs (12 nm node), this training would have cost about 5m USD (market price, not energy price). The pure energy price would have been about 350k USD (assuming V100 GPUs, 300 W for 7 TFLOPs, 10 ct/kWh). With simple scaling, at the Landauer limit, one gets roughly $10^{22}$ FLOPs per EUR (or $10^{28}$ FLOPs for 1m EUR, $10^{31}$ FLOPs for 1 bn USD in energy). With a Landauer-limit computer, one could easily imagine scaling by 1,000x to train a GPT-4, and perhaps even a GPT-5. But beyond that, new algorithms (and/or a Manhattan-Project-level effort) are required.
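The energy-price estimate can be reproduced with a few lines of arithmetic. This is a minimal sketch: it assumes the commonly reported $3.14\times10^{23}$ FLOPs for GPT-3 training, perfect GPU utilization, and the V100 figures from the paragraph above. The function and constant names are hypothetical.

```python
GPT3_TRAINING_FLOPS = 3.14e23  # assumption: commonly reported GPT-3 training compute
V100_FLOPS_PER_SECOND = 7e12   # 7 TFLOPs, as assumed in the post
V100_POWER_WATTS = 300.0       # as assumed in the post
PRICE_USD_PER_KWH = 0.10       # 10 ct/kWh, as assumed in the post
JOULES_PER_KWH = 3.6e6

def energy_cost_usd(total_flops, flops_per_second, power_watts, usd_per_kwh):
    """Energy bill for a workload, assuming the device runs at full throughput."""
    device_seconds = total_flops / flops_per_second
    energy_kwh = device_seconds * power_watts / JOULES_PER_KWH
    return energy_kwh * usd_per_kwh

cost = energy_cost_usd(
    GPT3_TRAINING_FLOPS, V100_FLOPS_PER_SECOND, V100_POWER_WATTS, PRICE_USD_PER_KWH
)
print(f"Energy cost: {cost:,.0f} USD")
```

With these inputs the result is a little above 370k USD, which agrees with the roughly 350k USD quoted above to within rounding of the inputs.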

Following the current trajectory of node shrinks in chip manufacturing, we may reach the limit in about 20 years.
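For comparison, a cruder extrapolation gives a similar timescale. The sketch below assumes that the historical average rate (15 orders of magnitude over 8 decades) simply continues. That is a simplification, not the node-shrink trajectory itself.

```python
import math

ORDERS_GAINED = 15       # improvement so far, from the post
YEARS_ELAPSED = 80       # "the last 8 decades"
REMAINING_FACTOR = 1175  # gap between 2020 chips and the Landauer limit

# Assumption: progress continues at the long-run average rate.
rate_orders_per_year = ORDERS_GAINED / YEARS_ELAPSED
years_left = math.log10(REMAINING_FACTOR) / rate_orders_per_year

print(f"Average rate: {rate_orders_per_year:.3f} orders of magnitude per year")
print(f"Years until the limit at that rate: {years_left:.1f}")
```

At the average historical rate the remaining gap closes in roughly 16 years, the same order as the 20-year estimate.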

Arguments that the numbers given above are optimistic:

- A Landauer-limit computer assumes that all energy goes into gate flips: no parasitic losses exist, and no interconnects are required. In practice, only part of the energy goes into gate flips, so the achievable lower limit is some multiple of the Landauer limit. In that case the winter will begin in about 10 years rather than 20.

Arguments that the numbers given are pessimistic:

- The waste heat of a classical computer is typically dissipated into the environment (eventually, into space), often at additional cooling costs. In principle, one could process the waste heat with a heat pump. This process is limited by the Carnot efficiency, so the gain is typically a factor of a few.

- Energy prices (in USD per kWh) may decrease in the future (solar? fusion?)

- If reversible computers could be made, the Landauer limit would not apply. From my limited understanding, it is presently unclear whether such devices could be made in practically useful form.

- I do not understand the impact of quantum computing on AI, and whether such a device can be made in practically useful form.

Other caveats:

- To improve speed, chips use more transistors than minimally required to perform calculations. For example, large die areas are filled with caches. A current estimate for the number of transistor switches per FLOP is $10^6$. This number can in principle be reduced in order to increase the number of FLOPs per unit energy, at the price of lower speed.
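To see how the number of switches per FLOP feeds into the cost at the limit, here is a minimal sketch. It assumes each transistor switch costs exactly one Landauer bit erasure at 300 K, an energy price of 0.10 USD/kWh, and about $10^6$ switches per FLOP (in line with the six orders of magnitude mentioned in the comments). The function name is hypothetical.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant in J/K
JOULES_PER_KWH = 3.6e6

def flops_per_usd_at_limit(switches_per_flop, usd_per_kwh=0.10, temperature_kelvin=300.0):
    """FLOPs bought per USD of energy if every switch costs k_B * T * ln(2)."""
    joules_per_switch = K_B * temperature_kelvin * math.log(2)
    joules_per_flop = switches_per_flop * joules_per_switch
    flops_per_kwh = JOULES_PER_KWH / joules_per_flop
    return flops_per_kwh / usd_per_kwh

baseline = flops_per_usd_at_limit(1e6)  # assumption: ~10^6 switches per FLOP
leaner = flops_per_usd_at_limit(1e5)    # hypothetical design with 10x fewer switches

print(f"Baseline: {baseline:.2e} FLOPs per USD")
print(f"Leaner:   {leaner:.2e} FLOPs per USD")
```

Because the cost is linear in the switch count, each tenfold reduction in switches per FLOP buys ten times as many FLOPs for the same energy.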

8 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2020-11-24T20:47:46.774Z · LW(p) · GW(p)

> At the moment, AI improves rapidly simply because current algorithms yield significant improvements when increasing compute. It is often better to double the compute than work on improving the algorithm. However, compute prices will decrease less rapidly in the future. Then, AI will need better algorithms. If these can not be found as rapidly as compute helped in the past, AI will not grow on the same trajectory any more. Progress slows. Then, a second AI winter can happen.

I kinda disagree with this, especially the first sentence. "Increasing compute" is indeed one thing that is happening in AI, and it's in the headlines a lot, but it's not the only thing happening in AI. Algorithmic innovations are happening now and have been happening all along. Like, 3 years ago, the Transformer had just been invented ... 5 years ago there was no BatchNorm or ResNets .... In the area I'm most interested in (neocortex-like models), the (IMO) most promising developments are in a very early research-project-ish stage, maybe like deep neural nets were in the 1990s, probably years away from progressing to parallelized, hardware-accelerated, turn-key code that can even begin to be massively scaled.

Replies from: Polytopos

comment by Tetraspace (tetraspace-grouping) · 2020-11-30T23:24:16.630Z · LW(p) · GW(p)

In The Age of Em, I was somewhat confused by the talk of reversible computing, since I assumed that the Landauer limit was some distant sci-fi thing, probably derived by doing all your computation on the event horizon of a black hole. That we're only three orders of magnitude away from it was surprising and definitely gives me something to give more consideration to. The future is reversible!

I did a back-of-the-envelope calculation about what a Landauer limit computer would look like to rejiggle my intuitions with respect to this, because "amazing sci-fi future" to "15 years at current rates of progress" is quite an update.

> Then, the lower limit is with or [...] A current estimate for the number of transistor switches per FLOP is .

The peak of human computational ingenuity is of course the games console. When doing something very intensive, the PS5 consumes 200 watts and does 10 teraFLOPs ($10^{13}$ FLOPs). At the Landauer limit, that power would do about $7\times10^{22}$ bit erasures per second. The difference is roughly $10^{10}$: 6 orders of magnitude from FLOPs to bit erasure conversion, 1 order of magnitude from inefficiency, 3 orders of magnitude from physical limits, perhaps.
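The PS5 comparison can be rechecked with a short sketch. It assumes room temperature is 300 K and takes the 200 W and 10 teraFLOPs figures from the paragraph above. The variable names are made up for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K
PS5_POWER_WATTS = 200.0
PS5_FLOPS_PER_SECOND = 10e12  # 10 teraFLOPs

# Assumption: room temperature = 300 K.
landauer_joules = K_B * 300.0 * math.log(2)

# Bit erasures per second that 200 W could pay for at the Landauer limit.
erasures_per_second = PS5_POWER_WATTS / landauer_joules

# Gap between ideal bit erasures and actual FLOPs, in orders of magnitude.
gap_orders = math.log10(erasures_per_second / PS5_FLOPS_PER_SECOND)

print(f"Bit erasures per second at the limit: {erasures_per_second:.2e}")
print(f"Gap to actual FLOPs: about 10^{gap_orders:.1f}")
```

The gap of just under 10 orders of magnitude is what the 6 + 1 + 3 breakdown above tries to account for.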

comment by ChristianKl · 2020-11-24T18:48:41.099Z · LW(p) · GW(p)

> Energy prices (in USD per kWh) may decrease in the future (solar? fusion?)

Solar costs do fall exponentially, if the last decades are any indication. If energy is your biggest cost and you are fine with not having power 24/7/365, solar will give you cheap power.

There might also be a time where you want to fly your solar cells nearer to the sun to pick up more sun. Computers in space could be powered that way.

comment by Roko · 2023-04-05T08:29:18.794Z · LW(p) · GW(p)

I think that AI will just consume a lot more power moving forward rather than solving reversible computation. Simple calculation: computers as a whole consume about 3 GW in the US, i.e. about 10^10 W (with a bit of wiggle room).

The total solar energy incident on the USA is about 10^15 W when you take into account day/night, clouds, etc.

So there's a factor of, say, 10^4 - 10^5 available just by capturing all that sunlight and using it for computation.

comment by [deleted] · 2020-12-17T10:55:22.297Z · LW(p) · GW(p)

Wait a minute - does this mean that microprocessors have already far surpassed the switching energy efficiency of the human brain? That came as a surprise to me.

Replies from: charbel-raphael-segerie

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2022-08-07T23:41:13.812Z · LW(p) · GW(p)

Where do you read that?