Computational signatures of psychopathy

post by Cameron Berg (cameron-berg) · 2022-12-19T17:01:49.254Z · LW · GW · 3 comments

I think that among the worst imaginable AI development outcomes would be if humans were to build artificially intelligent systems that exhibited psychopathic behavior. Though this sort of concern might appear to some as anthropomorphizing or otherwise far-fetched, I hope to demonstrate here that we have no strong reasons to believe that underpinnings of psychopathic behavior—some set of algorithms running in the brain that give rise to cruelty, fearlessness, narcissism, power-seeking, and more—are implausible a priori in an advanced AI with basic agentic properties (e.g., systems that utilize some sort of reward/value function for action selection, operate in an environment containing other agents, etc.).

In this post, I will aim (1) to synthesize essential neuropsychological findings on the clinical and behavioral features of psychopathy, (2) to introduce two specific research projects that help to frame these core features computationally, and (3) to outline how these understandings ought to inform the way advanced AI systems are engineered and evaluated. In my current view, what is presently understood about the main cognitive ingredients of psychopathy indicates that avoiding the advent of AI with psychopathic qualities may require fairly specific interventions, some of which I outline in the third section of this post. Accordingly, I believe it is definitely worthwhile to understand the nature of psychopathy well enough to avoid instantiating it into an eventual AGI.

Core qualitative properties of psychopathy

It is estimated that approximately 1-4% of the general population and 20% of the US prison population meet the established criteria for psychopathy (Sanz-García et al, 2021). At the outset, we might simply ask, what are these people actually like, anyway?

A popular and well-respected qualitative account of the core personality dimensions that comprise psychopathy posits three key constructs: disinhibition, boldness, and meanness (also called ‘disaffiliated agency’) (Patrick et al, 2009). Here, disinhibition refers to a significantly reduced ability to appropriately control impulses, delay gratification, and gate behavior. Boldness typically refers to some combination of fearlessness, high tolerance for stress, and interpersonal assertiveness. Meanness basically refers to attitudes and behaviors that are unapologetically manipulative, exploitative, and cruel. Synthesizing this qualitative account, we begin to develop a sketch of what it means to be a psychopath from a more clinical perspective: the individual is impulsive, outgoing, control-seeking, lacking in ordinary sensitivity to negative emotions like anxiety and sadness, and highly unempathetic and unresponsive to the wants and needs of others.

In terms of the Big Five model of human personality, then, psychopathy ≈ low neuroticism x high extraversion x extremely low agreeableness. (Notice also that disinhibition ≈ low neuroticism, boldness ≈ high extraversion, and meanness ≈ low agreeableness.)

The most widely used tool for assessing psychopathy is the Psychopathy Checklist-Revised, or PCL-R, developed by Richard Hare and colleagues (Hare et al, 1990). The assessment (which can be taken in its unrevised form here) is very straightforward—after extensive interactions with the individual and an understanding of their life history, a psychologist will typically score the prevalence of a particular trait or behavior for that person, where a higher prevalence corresponds to a higher item-level score. These scores are summed, and the individual is technically considered a psychopath if the total score exceeds some threshold (a commonly used cut-off is 30), though in practice, the prevalence of psychopathy is generally recognized to be continuous.
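As a rough illustration of the scoring procedure just described, the sketch below sums item-level scores and compares the total against the cut-off of 30. The 0-2 rating scale per item, the function name, the choice to treat a total exactly at the cut-off as meeting it, and the example ratings are all assumptions made for illustration; this is not a clinical instrument.

```python
from typing import Sequence, Tuple

PCL_R_ITEMS = 20     # number of items, per the post
CUTOFF = 30          # commonly used cut-off, per the post
MAX_ITEM_SCORE = 2   # assumption: each item is rated 0, 1, or 2


def pcl_r_total(item_scores: Sequence[int], cutoff: int = CUTOFF) -> Tuple[int, bool]:
    """Return (total score, whether the total meets the cut-off)."""
    if len(item_scores) != PCL_R_ITEMS:
        raise ValueError(f"expected {PCL_R_ITEMS} item scores, got {len(item_scores)}")
    if any(s < 0 or s > MAX_ITEM_SCORE for s in item_scores):
        raise ValueError("item scores must lie between 0 and 2")
    total = sum(item_scores)
    # Assumption: a total exactly at the cut-off counts as meeting it.
    return total, total >= cutoff


# Hypothetical ratings: fifteen items rated 2, five items rated 0.
total, meets_cutoff = pcl_r_total([2] * 15 + [0] * 5)
```

Returning the raw total alongside the flag reflects the point above that psychopathy is better treated as continuous than as a binary label.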

Of relevance to our current inquiry, the twenty items of the PCL-R have been fairly reliably demonstrated to cluster into two factors: (1) social-emotional detachment and (2) antisocial behavior (cf. Lehmann et al, 2019). Though there exist competing models (as will always be the case for models of any latent psychological variable), this two-factor finding is sufficiently well-replicated that these clusters are often referred to as primary and secondary psychopathy, respectively (Sethi et al, 2018).

I personally find it quite helpful in conceptualizing psychopathy to note that primary psychopathy/social-emotional detachment is significantly correlated with Narcissistic Personality Disorder, while secondary psychopathy/antisocial behavior is (unsurprisingly) significantly correlated with Antisocial Personality Disorder (Huchzermeier et al, 2007). In other words, there is reasonable statistical evidence that psychopathy can be parsimoniously conceptualized as the unhappy synergy of narcissism and antisociality.

Examples of behaviors and traits that are typical of primary psychopathy/emotional detachment/Narcissistic Personality Disorder:

- Superficial charm, glibness, manipulativeness

- Shallow emotional responses, lack of guilt and empathy

- A fundamental belief in one’s own superiority over all others

Examples of behaviors and traits that are typical of secondary psychopathy/antisocial behavior/Antisocial Personality Disorder:

- Conduct disorder as a child (cf Pisano et al, 2017)

- Aggressive, impulsive, irresponsible behavior

- Stimulation-seeking, proneness to boredom

- Flagrant and consistent disregard for societal rules and norms

There is a wealth of neuroscience research on the brain differences between psychopaths and non-psychopaths, and there seem to be two essential sets of findings. First, psychopaths are well-documented to exhibit structural and functional deficits in the amygdala, a set of nuclei in the medial temporal lobes that are critical for (particularly negative) emotional recognition, processing, and reaction (Blair, 2008). Second, psychopaths demonstrate similarly significant deficits in the prefrontal cortex, both in terms of reduced volume and abnormal connectivity (e.g., Yang et al, 2018). The prefrontal cortex has a well-known role in personality, decision-making, planning, and executive functioning. The neuroscience and psychology of psychopathy thus broadly converge in the dysfunctions identified in psychopaths; though I am very enthusiastic about the general role of neuroscience in adjudicating these sorts of questions, my view on psychopathy in particular is that the more useful computational findings emerge from the cognitive literature rather than the neuroscience.

In the next section, I will present a set of essential cognitive experiments that I believe capture a significant amount of the variance in each of these two clusters of psychopathy. We will then proceed to discuss how we can avoid instantiating in our eventual AGI the computational machinery that these experiments so nicely demonstrate.

(Note: the field of psychopathy research writ large is far richer than what I have summarized in these few paragraphs—those interested in exploring the topic more deeply may consider Robert Hare’s classic book on the subject or the ongoing research of one of my favorite undergraduate professors, Arielle Baskin-Sommers.)

Computational signatures of psychopathy

Primary psychopathy as low altercentric interference → failures of automatic theory of mind

Recall that primary psychopathy ≈ social-emotional detachment ≈ Narcissistic Personality Disorder. It is well-documented that theory of mind, the capacity to infer the beliefs and the emotional/motivational states of other agents, is a critical precondition for prosocial functioning (cf Kana et al, 2016). This makes intuitive sense: it is quite difficult to imagine an agent executing the kinds of behaviors we regard as typically prosocial (responding to the wants and needs of others; being helpful, polite, empathetic; understanding how to share, etc.) without the agent having access to an adequate predictive model of others’ mental states given their behavior, facial expressions, and so on.

It is well-documented that psychopaths exhibit certain theory of mind deficits (Ali & Chamorro-Premuzic, 2010), though the precise nature of these deficits appears to be rather subtle. I think that one of the most promising and interesting accounts of psychopaths’ theory of mind failures comes from a 2018 PNAS paper by Drayton and colleagues, entitled Psychopaths fail to automatically take the perspective of others. In this paper, the researchers (bravely!) recruited and screened 106 male offenders from a high-security prison and assessed them using the PCL-R measure discussed earlier.

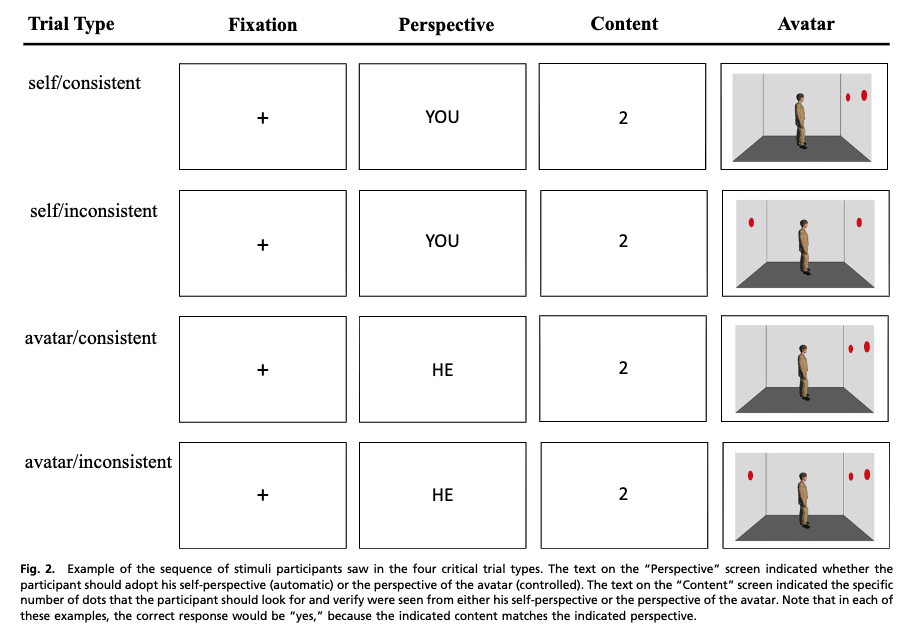

Below, I display their experimental set-up, along with the provided figure caption.

The task probes interference between one’s own perspective and that of another agent.

The participant is instructed whether to take his own or the avatar’s perspective with respect to whether that person sees N dots (where N is provided; see ‘content’ column above). The key distinction between trial types is whether the avatar’s perspective is in agreement with the participant’s—i.e., whether the trial is of a ‘consistent’ or ‘inconsistent’ type.

Consider the ‘self/inconsistent’ trial example above: the participant sees two dots, while the avatar sees only one dot, and the participant’s task is to report how many dots he sees, effectively ignoring the avatar’s perspective. For neurotypical individuals, this representational conflict causes significantly slower responding as compared to the condition when the participant’s and avatar’s perspectives are aligned. This phenomenon, called altercentric interference, is considered strong evidence of ‘automatic theory of mind,’ the idea that neurotypical humans automatically and unintentionally represent the perspectives of other agents—even when doing so is suboptimal in the particular task context.

Egocentric interference is the inverse phenomenon, whereby the task is to report the perspective of the avatar when this perspective differs from one’s own and interference occurs.
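To make the two measures concrete, here is a minimal sketch that computes each interference score as the mean response time on inconsistent trials minus the mean response time on consistent trials. The trial format, the field names, and the response times are hypothetical, and the original paper's analysis may well differ in its details.

```python
from statistics import mean


def interference(trials, perspective):
    """Mean RT on inconsistent trials minus mean RT on consistent trials,
    restricted to trials with the given instructed perspective."""
    consistent = [t["rt_ms"] for t in trials
                  if t["perspective"] == perspective and t["consistent"]]
    inconsistent = [t["rt_ms"] for t in trials
                    if t["perspective"] == perspective and not t["consistent"]]
    return mean(inconsistent) - mean(consistent)


# Hypothetical response times in milliseconds.
trials = [
    {"perspective": "self", "consistent": True, "rt_ms": 600},
    {"perspective": "self", "consistent": True, "rt_ms": 620},
    {"perspective": "self", "consistent": False, "rt_ms": 680},
    {"perspective": "self", "consistent": False, "rt_ms": 700},
    {"perspective": "other", "consistent": True, "rt_ms": 640},
    {"perspective": "other", "consistent": True, "rt_ms": 660},
    {"perspective": "other", "consistent": False, "rt_ms": 750},
    {"perspective": "other", "consistent": False, "rt_ms": 770},
]

# Altercentric: the avatar's view slows reports of one's OWN perspective.
altercentric = interference(trials, "self")
# Egocentric: one's own view slows reports of the AVATAR's perspective.
egocentric = interference(trials, "other")
```

On this framing, an agent with no automatic representation of the avatar's perspective would show an altercentric score near zero while its egocentric score stays positive.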

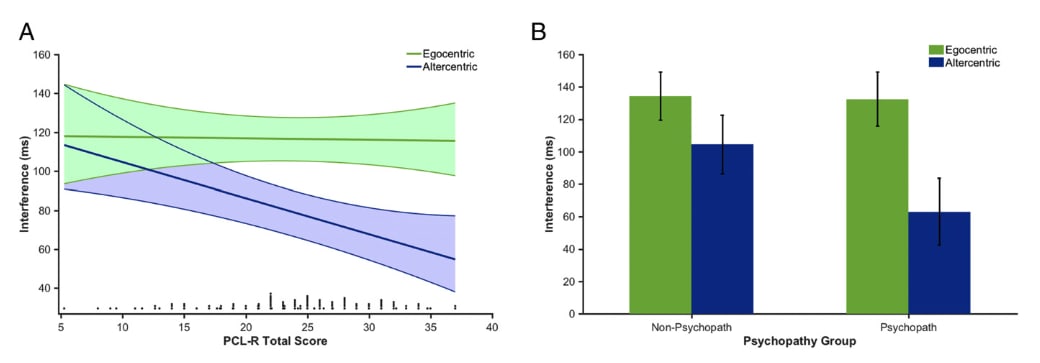

Here are the researchers’ key results:

Plot A demonstrates that as PCL-R scores increase, egocentric interference (the extent to which one’s differing perspective conflicts with the task of reporting the avatar’s perspective) remains constant and relatively pronounced, while altercentric interference significantly decreases. In other words, psychopaths are as distracted by their own perspective as non-psychopaths when their task is to report another’s perspective, but they appear significantly less distracted by another’s perspective when their task is to report their own perspective. Plot B reinforces that this egocentric-altercentric gap is significantly higher in psychopaths than in non-psychopaths. The key conclusion from this work is that psychopaths exhibit significant deficits in automatic theory of mind. This experiment also provides a parsimonious account for why psychopaths do not seem in all cases to exhibit meaningfully different theory of mind capabilities from non-psychopaths (e.g., Richell et al, 2003)—many of these tests are self-paced or otherwise allow psychopaths to leverage their (intact) controlled rather than (impaired) automatic theory of mind.

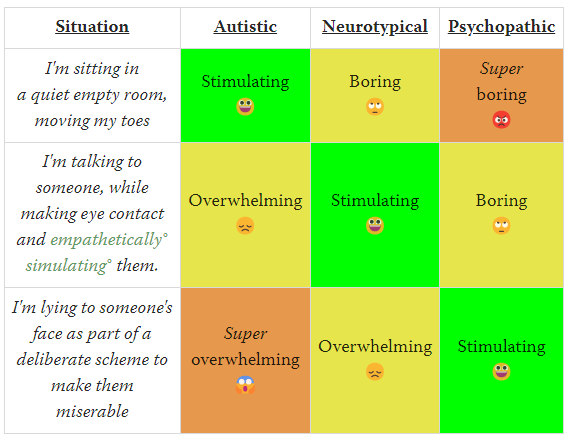

I contend that the seemingly minor cognitive distinction demonstrated by this experiment—that psychopaths exhibit significantly weaker automatic theory of mind capabilities than non-psychopaths—helps to explain many of the canonical behaviors associated with primary psychopathy (≈ social-emotional detachment ≈ Narcissistic Personality Disorder). Most generally, the egocentric-altercentric asymmetry is a clear computational instantiation of ‘self >> other,’ which is the core psychological foundation upon which narcissism, manipulativeness, superficial charm, lack of guilt, lack of empathy, etc. are predicated. In other words, a logical precondition for attempting to, say, cynically manipulate you is that the manipulator must care about his reasons for doing so more than he cares about the harm that doing so might cause you.

To toggle the ‘theory of mind switch’ in the brain from automatic to manual means that “I helplessly represent others’ mental states, the effects of my behavior on them, etc.” quite conveniently becomes “I choose to represent others’ mental states, the effects of my behavior on them, etc. when doing so is instrumentally useful…to me.” Thus, a seemingly innocuous cognitive distinction seems to nicely account for all sorts of heinous psychopathic behaviors—murder, rape, destroying others’ lives for instrumental gain, pursuing one’s goals no matter the cost, and so on, are all far easier to execute when the pesky representations of the trauma such actions inflict on others are fundamentally optional.

Abnormal activity in the frontal and medial temporal lobes of psychopaths may be what drives this difference, as these brain areas have been demonstrated to be essential for online theory of mind processing (e.g., Siegal & Varley, 2002).

One caveat: I don’t want to claim that there is one single cognitive phenomenon, ‘low altercentric interference → reduced automatic theory of mind,’ that accounts for the entirety of primary psychopathy. This would be a vast oversimplification. I do suspect, however, that this specific automatic theory of mind deficit is something akin to the first principal component of primary psychopathy. In other words, I do not know of any other single computational distinction between psychopaths and non-psychopaths that seems to do as much work in accounting for why the former population consistently behaves with such shockingly low regard for others.

Secondary psychopathy as passive avoidance learning deficits

Recall that secondary psychopathy ≈ antisocial behavior ≈ Antisocial Personality Disorder. It is sometimes remarked that primary psychopaths are the ones that end up running companies, while secondary psychopaths are the ones that end up in prison. The brute criminality and violence associated with psychopathy can thus be largely localized to this second factor.

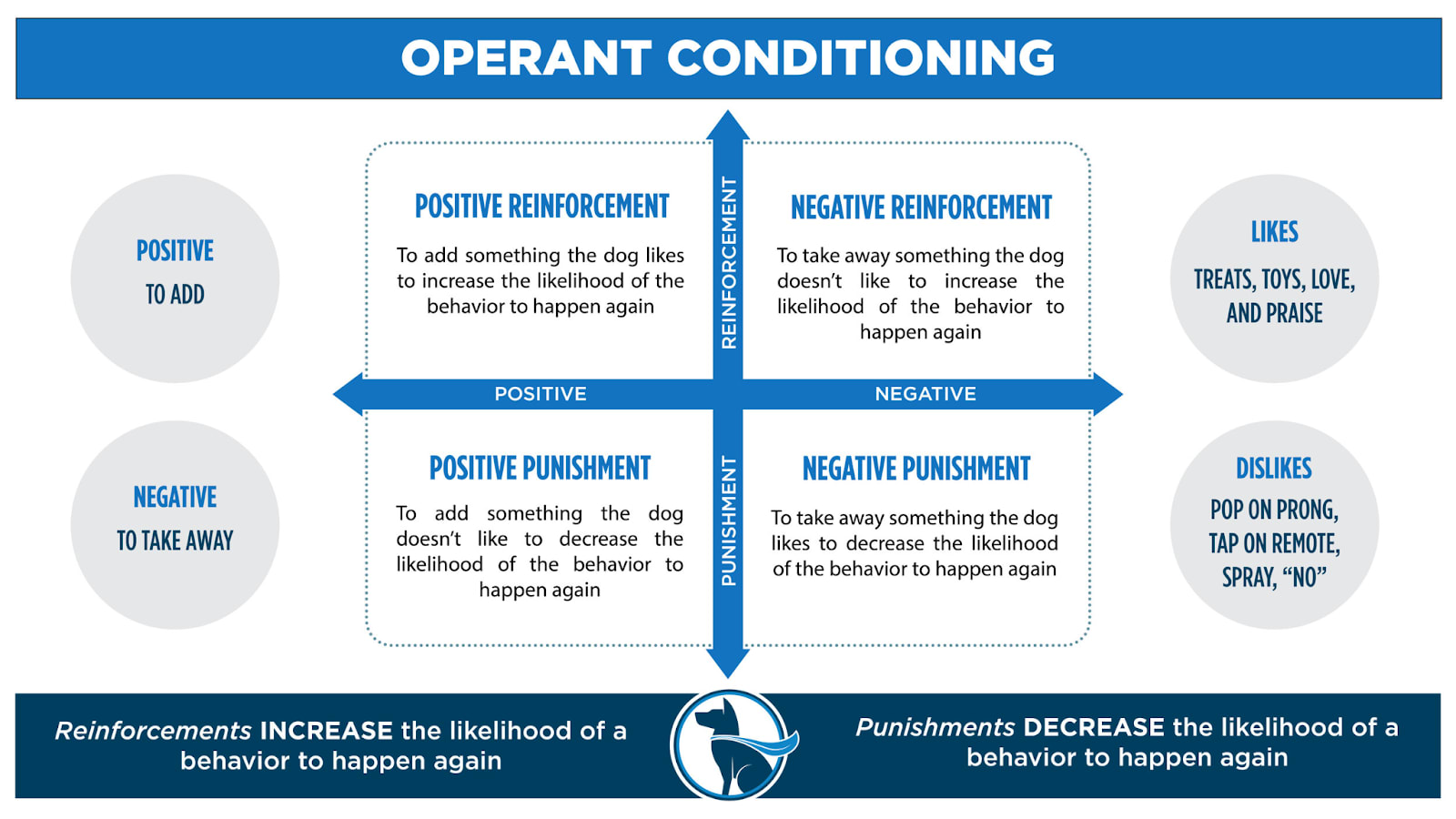

Before introducing the results that I believe capture a big chunk of the secondary-psychopathy-variance, it is important to explicitly introduce some ideas from the classic operant conditioning literature. It is worth noting that the study of operant conditioning—understanding human and animal reinforcement learning—serves in many ways as the foundation upon which modern-day RL theory is built. For instance, Chris Watkins' thesis, considered to be where Q-learning first originated, heavily cites classical and operant conditioning research throughout. It is thus surprising to me (or otherwise an admission of my own ignorance) that there is not more cross-talk between modern-day RL researchers and the classic findings of the operant conditioning giants like Thorndike, Skinner, Konorski, etc. Put another way, I find it quite strange that the best figures to illustrate a set of foundational distinctions in human and animal RL are best displayed on figures created by WonderDogIntranet.com:

In this set-up, reinforcement means to increase the likelihood of a behavior, while punishment means to decrease the likelihood of a behavior, and the positive-negative axis relates to whether the agent receives something or has something taken away in order to modulate the future likelihood of the behavior.

As you may well know, the dynamics described above are not unique to WonderDogs. Here are more human-like examples:

- Positive reinforcement

- Employee rewarded for going above and beyond, and proceeds to do so more often as a result.

- Intuition: “I’ll add a benefit if you do X.”

- Negative reinforcement

- Person puts on sunscreen and successfully avoids sunburn, and proceeds to do so more often as a result.

- Intuition: “I’ll remove a cost if you do X.”

- Positive punishment

- Person is slapped for calling someone a mean name, and proceeds to call people mean names less often as a result.

- Intuition: “I’ll add a cost if you do X.”

- Negative punishment

- Person is fined for speeding, and proceeds to speed less often.

- Intuition: “I’ll remove a benefit if you do X.”
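The four quadrants above reduce to two binary questions, which the following sketch encodes directly. The function name and the string labels are illustrative choices, not terminology from the operant conditioning literature.

```python
def operant_quadrant(stimulus_change: str, behavior_change: str) -> str:
    """Name the quadrant given what happens to the stimulus and to the behavior.

    stimulus_change: 'added' or 'removed'
    behavior_change: 'increases' or 'decreases'
    """
    sign = {"added": "positive", "removed": "negative"}[stimulus_change]
    kind = {"increases": "reinforcement", "decreases": "punishment"}[behavior_change]
    return f"{sign} {kind}"


# The speeding fine: money is removed, and speeding becomes less likely.
fine_example = operant_quadrant("removed", "decreases")
# The rewarded employee: a benefit is added, and the behavior becomes more likely.
bonus_example = operant_quadrant("added", "increases")
```

Note that 'positive' and 'negative' here describe whether something is added or taken away, not whether the experience is pleasant.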

As I’ll discuss in some more detail later, this framework is somewhat awkward to reconcile with current RL approaches, where a single reward function often encodes and oscillates between these four quadrants.

There are two final pieces of terminology to introduce. Omission errors refer to situations where an agent fails to do X despite the fact that it has been reinforced (e.g., an employee is rewarded for going above and beyond, and does not proceed to do so more often as a result). Passive avoidance errors refer to situations where an agent does X despite the fact that it has been punished (e.g., a person is fined for speeding, and proceeds to continue speeding).

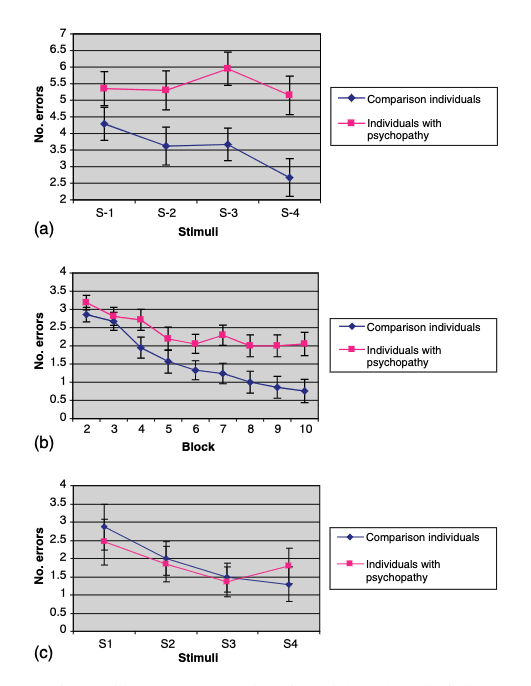

Within this framework, I can introduce the highly-cited and well-replicated 2004 finding by Blair and colleagues that psychopaths make far more passive avoidance errors in a simple RL task than non-psychopaths. In each trial, the researchers presented participants with one of eight random numbers, four of which were associated with gains of 1, 700, 1400, and 2000 points, and the other four were associated with equivalent losses. Each number was randomly presented ten times = 80 total trials. Participants’ only task was to either spacebar-press or not on each trial in response to the number of the screen. If the participant pressed, they would receive the reward or punishment associated with that number. Thus, participants would learn through positive reinforcement and positive punishment whether or not to press the spacebar given the resultant point gain/loss for doing so. In this setup, omission errors would occur when a participant would have gained points for pressing but chose not to, and passive avoidance errors would occur when a participant chose to press and lost points for doing so. Here is how psychopaths did in comparison to non-psychopaths:

Here, (a) demonstrates passive avoidance errors by stimulus, where S1-4 correspond to -1, -700, -1400, and -2000, respectively; (b) demonstrates passive avoidance errors by block; and (c) demonstrates omission errors by stimulus, where S1-4 correspond to +1, +700, +1400, and +2000, respectively.

The key takeaway is that psychopaths do just as well as non-psychopaths in positive reinforcement paradigms—like non-psychopaths, they make fewer omission errors as the reward associated with the stimulus increases—but critically, psychopaths do significantly worse than non-psychopaths in learning from punishment—they make significantly more passive avoidance errors, and, unlike non-psychopaths, the number of errors they make does not decrease as the magnitude of punishment increases.

Being punished for doing X does not meaningfully alter the probability that the psychopath will do X in the future. It is very difficult to comment scientifically on phenomenology, but it should be noted that this failure to learn from punishment could theoretically occur either because psychopaths do not experience negative states to the same degree as non-psychopaths, or rather that they do experience comparable negative states but are otherwise unable to learn from them as efficiently. In practice, it is likely that both phenomena are at play to some degree.
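The task and the two candidate explanations can be sketched with a toy learner. Everything here beyond the task structure (eight stimuli, ten presentations each, press or withhold) is an assumption: the delta-rule update, the "press if the estimate is non-negative" policy, and the parameters `alpha_loss` (how efficiently losses are learned from) and `loss_sensitivity` (how strongly losses are felt) are hypothetical and are not taken from Blair and colleagues' analysis.

```python
import random

GAINS = [1, 700, 1400, 2000]
OUTCOMES = GAINS + [-g for g in GAINS]  # eight stimuli, identified by index
REPEATS = 10                            # each stimulus is shown ten times


def run_task(alpha_gain=0.5, alpha_loss=0.5, loss_sensitivity=1.0, seed=0):
    """Simulate 80 trials and count both error types for a toy learner."""
    rng = random.Random(seed)
    schedule = list(range(len(OUTCOMES))) * REPEATS
    rng.shuffle(schedule)

    value = [0.0] * len(OUTCOMES)  # estimated payoff of pressing, per stimulus
    errors = {"passive_avoidance": 0, "omission": 0}

    for s in schedule:
        outcome = OUTCOMES[s]
        pressed = value[s] >= 0  # press unless pressing currently looks bad
        if pressed:
            if outcome < 0:
                errors["passive_avoidance"] += 1
                felt, alpha = outcome * loss_sensitivity, alpha_loss
            else:
                felt, alpha = outcome, alpha_gain
            # Feedback arrives only after a press.
            value[s] += alpha * (felt - value[s])
        elif outcome > 0:
            errors["omission"] += 1
    return errors


intact = run_task()
cannot_learn_from_loss = run_task(alpha_loss=0.0)
does_not_feel_loss = run_task(loss_sensitivity=0.0)
```

In this toy model the intact learner presses each losing number once and then stops, while both impaired variants keep pressing on every losing trial. The two impairments are behaviorally indistinguishable here, which mirrors the difficulty noted above of telling the explanations apart. The learner is deterministic, so it does not reproduce the graded error rates in the actual data.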

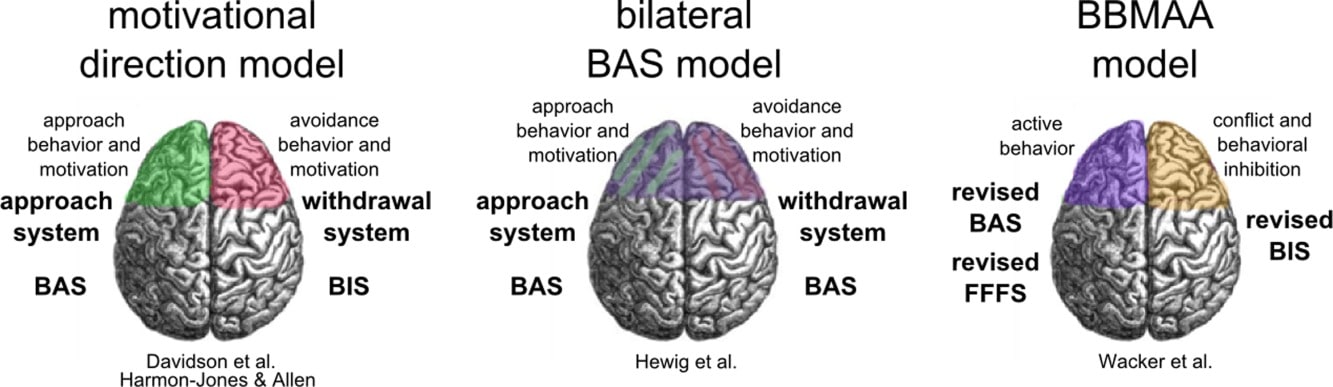

One concept that can help explain this result more systematically is the prominent 20th-century neuroscientist Jeffrey Alan Gray’s biopsychological model of personality. Gray’s fundamental idea was that there exist two computationally distinct behavioral systems in the brain: he coined the terms ‘behavioral inhibition system’ (BIS) and ‘behavioral activation system’ (BAS) to formulate the distinction.

BIS is thought to be responsive in punishment-laden situations, where the key cognitive operation is avoidance or withdrawal; BAS is thought to be responsive in reward-laden situations, where the key cognitive operation is approach. There is reasonable evidence that the differential activity of the left and right frontal lobes maps onto this distinction (cf Rodrigues et al, 2017).

Convergently, dynamic approach/avoidance behaviors can be seen in the simplest bilaterally organized creatures, like Aplysia. Another nice demonstration that reward and punishment are handled by fundamentally distinct cognitive processes comes from Kahneman and Tversky's work on loss aversion, the empirical finding that humans have discrete strategies for thinking about gains (rewards) versus losses (punishments)—specifically, that all else being equal, we would rather avoid losing some amount of value than gain that amount.

We will return to the BIS-BAS conceptualization later, but suffice it to say for now that psychopaths’ poor passive avoidance learning can be thought of as a pronounced BIS deficit. Some neurological evidence suggests that psychopaths exhibit cortical thinning specifically in right hemispheric medial inferior frontal and lateral sensorimotor cortex, further mapping onto Gray's model.

It must be emphasized, however, that psychopaths do not exhibit significant BAS deficits (compare plots (a) and (c) from the Blair paper). In other words, psychopaths’ approach, reward-seeking behavior seems unimpaired (if anything, it may be hypersensitive), while their withdrawal, punishment-avoiding behavior seems significantly impaired.

How is this asymmetry related to secondary psychopathy (≈ antisocial behavior ≈ Antisocial Personality Disorder) more generally?

Consider that the age at which humans exhibit their most consistently violent behavior is between two and three (Tremblay, 2002). Consider psychologist Paul Bloom's comments on this phenomenon: "Families survive the Terrible Twos because toddlers aren’t strong enough to kill with their hands and aren’t capable of using lethal weapons. A 2-year-old with the physical capacities of an adult would be terrifying."

Many developmental psychologists believe that relatively sparse episodes of punishment during social interaction serve as one of the core mechanisms of successful socialization early in life. For example, the vast majority of toddlers who exhibit hostile behavior toward other children sooner or later learn that doing so is a poor strategy for earning the friendship or trust of their peers. Because associated feelings of loneliness, rejection, etc. are intrinsically undesirable to the child, most are able to learn through these sorts of ‘micro-punishments’ to inhibit hostile behavior—and proceed to develop into socially functional adults. However, if the child is unable to learn effectively from punishment—i.e., their BIS is significantly impaired—the child will not effectively learn to inhibit hostile behavior. Such children often end up meeting the criteria for conduct disorder—and some subset of these children will grow up to meet the criteria for psychopathy.

Recall the typical manifestations of secondary psychopathy:

- Conduct disorder as a child (cf Pisano et al, 2017)

- Aggressive, impulsive, irresponsible behavior

- Stimulation-seeking, proneness to boredom

- Flagrant and consistent disregard for societal rules and norms

In light of the finding that psychopaths learn poorly from punishment, such characterizations become more intuitive. Consider that, for better or worse, major societal rules and norms are almost completely operationalized via passive avoidance learning. We punish law-breakers, but we do not typically reward law-followers; robbers are put in jail, but non-robbers are not given tax breaks. It thus makes a good deal of sense that psychopaths flagrantly and consistently disregard these rules and norms —it is probably more accurate to say that they never learned them in the first place.

(Miscellaneous note: I was very close to adding a third section to discuss another subliterature that documents an additional canonical feature of psychopathy: goal ‘fixation.’ In short, psychopaths are significantly worse than non-psychopaths at moderating attention to monitor or respond to potentially relevant information that is not aligned with current goals (cf Baskin-Sommers et al, 2011). Fundamentally, however, I believe these results are best thought of as a subset of the BIS-impairment discussed in this section—goal fixation seems to me like a symptom of reward-seeking without attention to potential negative consequences that might arise from anywhere outside the present goal-directed context. Returning to the analogy of principal components, my intuition is that this sort of result is also important and contains some useful orthogonal information about secondary psychopathy, but that it is fundamentally unlikely to be the 'first principal component' of secondary psychopathy. The passive avoidance deficit finding appears to me at least to be more cognitively fundamental.)

Practical takeaways for AI development

In theory, this section could be one sentence: never build agentic AI systems that lack automatic theory of mind or lack the capacity to learn from punishing experiences.

What requires more thinking is how these lessons are supposed to tessellate neatly with the current state of AI development. I think the first half—avoiding AI systems that lack automatic theory of mind—is relatively easier to reconcile with current approaches, so we'll begin here.

How we might avoid building primary-psychopathic AI

From a computational perspective, theory of mind shares many fundamental similarities with inverse reinforcement learning (IRL) (cf Jara-Ettinger, 2019). Theory of mind takes observable behaviors, expressions, states of the world, etc. as input and uses this information to predict the internal states of another agent; IRL takes observed behavior as input and attempts to predict the reward/value function that generated that behavior. I have written about the relationship between theory of mind and IRL before, and I would point to this specific section [LW · GW] for those interested in a more thorough treatment of the topic.
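One simple way to see the parallel is to treat both as Bayesian inference over what another agent values. The sketch below assumes a Boltzmann-rational (softmax) choice model and a uniform prior over a small set of candidate reward functions. These modeling choices, along with every name and number in it, are illustrative assumptions rather than the method of any particular IRL paper.

```python
import math


def choice_probability(rewards, chosen, beta=1.0):
    """P(chosen action | reward function) for a softmax-rational agent."""
    top = max(rewards.values())  # subtract the max for numerical stability
    weights = {a: math.exp(beta * (r - top)) for a, r in rewards.items()}
    return weights[chosen] / sum(weights.values())


def posterior_over_rewards(hypotheses, observed_choices, beta=1.0):
    """Posterior over candidate reward functions, starting from a uniform prior.

    hypotheses: dict mapping a hypothesis name to {action: reward}
    observed_choices: list of actions the other agent was seen to take
    """
    likelihoods = {}
    for name, rewards in hypotheses.items():
        likelihood = 1.0
        for choice in observed_choices:
            likelihood *= choice_probability(rewards, choice, beta)
        likelihoods[name] = likelihood
    total = sum(likelihoods.values())
    return {name: lik / total for name, lik in likelihoods.items()}


# Hypothetical example: what does someone want, given what they ordered?
hypotheses = {
    "likes_tea": {"tea": 1.0, "coffee": 0.0},
    "likes_coffee": {"tea": 0.0, "coffee": 1.0},
}
posterior = posterior_over_rewards(hypotheses, ["tea", "tea", "coffee"])
```

The input is observed behavior and the output is a belief about hidden values, which is the shared shape of the two problems described above.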

However, this sort of IRL-like modeling may be too narrow for our purposes: it seems like we are more generally interested in procedures for building models that take a set of potential actions A = {A1, A2, …, An} that an agent could take in the given state and predict the subjective effects of each of those potential actions on other agents. This operation seems to require a model of the reward/value function of other agents in general and perhaps specific relevant agents in more detail. Finally, we would also presumably be looking for some method for integrating the predicted effects of each member of A on other agents with the actor agent’s own value/reward calibration. And the key would be to do this all automatically—i.e., the actor agent would effectively be 'forced' to perform this procedure before executing any action.

These sorts of computations are of course very natural for humans. We are generally extremely good, for instance, at not upsetting people even when we think they just said something stupid, and this is presumably because we are leveraging something like the cognitive procedure described above. We would use our operating model of other minds in general—and perhaps our interlocutor’s mind in particular—to predict what would happen if we shared our opinion, “you said something really stupid just now,” (theory of mind model outputs ≈ it would make them sad) and we proceed to select some other action in light of this prediction (value model outputs ≈ making someone else sad is a low-value action). And we do this all automatically!
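The procedure described above can be sketched as an action selector in which the other-regarding term is always part of the objective. The function names, the additive way of combining the two terms, the `other_weight` parameter, and the numbers are all hypothetical. A real system would need a learned model in place of the lookup table and a far more careful integration scheme.

```python
def select_action(actions, own_value, predict_effect_on_others, other_weight=1.0):
    """Pick the action with the best combined score.

    The predicted effect on others is computed for every candidate action;
    there is deliberately no code path that skips it.
    """
    if other_weight <= 0:
        # Guard against configuring the other-regarding term away.
        raise ValueError("other_weight must be positive")

    def combined(action):
        return own_value(action) + other_weight * predict_effect_on_others(action)

    return max(actions, key=combined)


# Hypothetical values for the conversational example above.
own = {"blunt_criticism": 1.0, "tactful_reply": 0.8}
effect_on_listener = {"blunt_criticism": -2.0, "tactful_reply": 0.1}

automatic_choice = select_action(list(own), own.get, effect_on_listener.get)
# For contrast: a purely egocentric selector that never consults the model.
egocentric_choice = max(own, key=own.get)
```

The design point is structural: the selector has no flag that turns the other-regarding term off, so modeling others is not something the agent opts into when convenient.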

Instantiating these models/decision procedures into an AI is vaguely reminiscent of Stuart Russell’s proposal for provably beneficial AI; unlike Russell's idea as I understood it from Human Compatible, this does not (unrealistically, IMHO) require all of ML to paradigm-shift away from objective functions writ large. The core commonality between these two approaches is that building AI that can effectively model the values of other agents (both in general and particular cases) is of critical importance. What is idiosyncratic to my proposal is that (a) I don't think this is all the AI should be doing, and that (b) these models should be automatically employed in all of the AI's behavioral decision-making that has the potential to affect other agents. Psychopaths seem to have control over whether to integrate this information into their decision-making; non-psychopaths don’t.

In sum, in order to avoid building AI systems reminiscent of primary psychopaths, we should build algorithmic procedures for (1) training models that are robustly predictive of general and particular human values, (2) integrating the outputs of these models into agentic AIs’ behavioral decision-making, and (3) ensuring that (2) is never optional for the agent.

How we might avoid building secondary-psychopathic AI

Current approaches—particularly in reinforcement learning—are more challenging to reconcile with the finding that psychopaths display an impaired ability to learn from punishment. I believe the fundamental discordance between these paradigms is that brains are running lifelong multi-objective RL, perhaps with a BIS-BAS-type architecture, while most modern RL algorithms focus on training single-objective policies of a significantly narrower scope. (Multi-objective RL and meta-RL are examples of relatively newer approaches that seem to get closer to the sort of RL the brain appears to be doing, but I would still contend that these directions are relatively more exploratory and are not the current modal applications of RL.)

It appears to me that something like a BIS-BAS cognitive architecture emerges from the fact that the brain has been the target of a tremendous amount of optimization pressure to behave as a decent generalized problem-solver rather than as an excellent specific problem-solver. When the entire purpose of an agent is to attempt to solve a single narrow problem (e.g., the gym Atari environments), training up a policy from scratch with a generic reward function is probably sufficient; when an agent is tasked with solving a potentially infinite set of yet-unknown and poorly-defined problems (as is the evolutionary case), it seems that something more subtle is going on.

I think that one such nuance is the fundamental biological distinction between the class of environmental states that get the agent closer to its evolutionarily-programmed goals (e.g., food, mate access, shelter, status, etc.) and the class of environmental states that threaten the future ability of the agent to pursue these things (e.g., sickness, predation, injury, etc.). It makes sense from a motivational perspective that the fundamental behavioral orientation towards the first set of things is reward/approach-mediated, while the fundamental behavioral orientation towards the second set of things is punishment/withdrawal-mediated. It thus appears that within this multi-objective set-up, evolutionary optimization pressure has yielded agents that treat these approach and avoidance problems using largely-independent cognitive architectures (associated with largely-independent phenomenologies) that can be generically utilized for any specific approach- or withdrawal-mediated goal, respectively. To the degree that these computations are hemispherically lateralized, we can count on them running to some degree in parallel, simultaneously playing evolutionary offense and defense 'at every timestep.'
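A minimal sketch of what largely independent approach and avoidance channels could look like in code: the scalar reward is split into a gain part and a cost part, and each part is tracked by its own estimator with its own learning rate. The class, its parameters, and the example experiences are hypothetical, and nothing here is a claim about how brains or any existing RL library implement this.

```python
class DualChannelLearner:
    """Tracks expected gains and expected costs separately for each action."""

    def __init__(self, n_actions, alpha_approach=0.5, alpha_avoid=0.5):
        self.approach = [0.0] * n_actions  # BAS-like channel: expected gain
        self.avoid = [0.0] * n_actions     # BIS-like channel: expected cost
        self.alpha_approach = alpha_approach
        self.alpha_avoid = alpha_avoid

    def update(self, action, reward):
        # Split one scalar reward into non-negative gain and cost signals.
        gain, cost = max(reward, 0.0), max(-reward, 0.0)
        self.approach[action] += self.alpha_approach * (gain - self.approach[action])
        self.avoid[action] += self.alpha_avoid * (cost - self.avoid[action])

    def net_value(self, action):
        return self.approach[action] - self.avoid[action]

    def best_action(self):
        return max(range(len(self.approach)), key=self.net_value)


# Hypothetical experience: action 0 is modest but safe, action 1 is risky.
experience = [(0, 1.0), (1, 4.0), (0, 1.0), (1, -10.0)]

intact = DualChannelLearner(n_actions=2)
impaired = DualChannelLearner(n_actions=2, alpha_avoid=0.0)  # avoidance channel off
for action, reward in experience:
    intact.update(action, reward)
    impaired.update(action, reward)
```

After the same experience, the intact learner prefers the safe action while the learner with a silent avoidance channel prefers the risky one, because the large loss never registers as a cost.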

A specific prediction emerges from this hypothesis: as reinforcement learning continues to mature into more multi-objective, lifelong-learning, meta-RL-style paradigms that more closely resemble the kinds of 'taskscapes' in which brains evolved, a natural bifurcation between approach-facilitating cognition and avoidance-facilitating cognition is likely to emerge. At that point, we must ensure that RL agents are able to learn robustly in both modes. A few reasons why this might not happen by default:

- Avoidance learning is punishment-mediated, and punishment seems mean. Punishment can indeed be mean if these systems are sentient, which is obviously unclear in the absence of a good computational account of sentience. In general, parallel developments in AI ethics may cast punishment-based learning in a negative light. We must be able to balance this likely-to-be-basically-right point with the fact that psychopathy seems in part computationally predicated on being unable to learn from punishment.

- For whatever technical reasons, learning avoidance strategies/learning from punishment may prove more challenging to build than learning approach strategies/learning from reward.

- It is easier to specify what we want an agent to do than to specify what we do not want an agent to do, à la "The ground of optimization."
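To make concrete what it might mean for an agent to learn robustly in one mode but not the other, here is a toy, fully deterministic simulation loosely modeled on a passive avoidance task. It is a sketch under stated assumptions and not a reproduction of any published paradigm: the function name `passive_avoidance_run`, the four-stimulus set, the `withhold_threshold`, and the learning-rate parameters are all hypothetical choices made for illustration.

```python
def passive_avoidance_run(alpha_reward, alpha_punish, n_blocks=20,
                          withhold_threshold=-0.5):
    """Return (hits, errors) for a toy passive avoidance task.

    hits   = responses to rewarded stimuli
    errors = responses to punished stimuli (passive avoidance errors)
    All names and numbers here are illustrative assumptions.
    """
    # Responding to A or B pays +1; responding to C or D costs -1.
    stimuli = {"A": 1.0, "B": 1.0, "C": -1.0, "D": -1.0}
    value = {s: 0.0 for s in stimuli}  # learned value of responding
    hits = 0
    errors = 0
    for _ in range(n_blocks):
        for stimulus, outcome in stimuli.items():
            # The agent withholds only once the learned value is clearly negative.
            if value[stimulus] < withhold_threshold:
                continue
            if outcome > 0:
                hits += 1
                alpha = alpha_reward
            else:
                errors += 1
                alpha = alpha_punish
            # Separate learning rates for rewarding and punishing outcomes.
            value[stimulus] += alpha * (outcome - value[stimulus])
    return hits, errors


symmetric = passive_avoidance_run(alpha_reward=0.3, alpha_punish=0.3)
weak_punish = passive_avoidance_run(alpha_reward=0.3, alpha_punish=0.05)
no_punish = passive_avoidance_run(alpha_reward=0.3, alpha_punish=0.0)
print(symmetric, weak_punish, no_punish)
```

All three agents respond to the rewarded stimuli equally often, so a reward-only evaluation would not distinguish them. They differ only in how many times they respond to punished stimuli before learning to withhold, which is the kind of per-mode check the prediction above suggests running explicitly.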

As I commented earlier, the practical implications of psychopaths’ failure to robustly learn from punishment for safe AI development are non-obvious in the current paradigm. But I suspect as more powerful meta-RL and multi-objective systems begin to gain more widespread attention and functionality, the relevance of the distinction between reward- and punishment-based learning will begin to come into clearer algorithmic view—at which point I am hoping that someone who read this post a few years back remembers it. :)

Conclusion

Here are the condensed takeaways from this post:

- Psychopathy is very bad, no good. We definitely, unambiguously do not want psychopathic AI to ever exist.

- Human psychopathy can be validly bifurcated into two factors.

- The first factor of psychopathy is associated with Narcissistic Personality Disorder, emotional detachment, superficial charm, glibness, manipulativeness, a profound lack of empathy, and a fundamental belief in one’s own superiority over all others.

- I argue that something like the first principal component of primary psychopathy is accounted for by the finding that psychopaths exhibit significant deficits in automatic theory of mind, which effectively allow them to discount the impacts of their actions on others whenever doing so is instrumental.

- The practical takeaway given this account of primary psychopathy is that we actively build algorithms for (1) training models that are robustly predictive of general and particular human values, (2) integrating the outputs of these models into agentic AIs’ behavioral decision-making, and (3) ensuring that (2) is never optional for the agent.

- The second factor of psychopathy is associated with Antisocial Personality Disorder, Conduct Disorder as a child, aggressive, impulsive, irresponsible behavior, and flagrant and consistent disregard for societal rules and norms.

- I argue that something like the first principal component of secondary psychopathy is accounted for by the finding that psychopaths exhibit a reward-punishment learning asymmetry, whereby they are able to learn normally from reward but exhibit significant deficits in learning from punishment, which prevents psychopaths from undergoing normal processes of socialization and enculturation.

- The practical takeaway given this account of secondary psychopathy is that as agentic AI continues to scale up, researchers should actively pursue and be vigilant for a reward-based- vs. punishment-based-learning distinction with the specific intention of ensuring that agents are able to robustly learn both approach and avoidance strategies.

Sources

Ali, F., & Chamorro-Premuzic, T. (2010). Investigating Theory of Mind deficits in nonclinical psychopathy and Machiavellianism. Personality and Individual Differences, 49(3), 169–174. https://doi.org/10.1016/j.paid.2010.03.027

Baskin-Sommers, A. R., Curtin, J. J., & Newman, J. P. (2011). Specifying the Attentional Selection That Moderates the Fearlessness of Psychopathic Offenders. Psychological Science, 22(2), 226–234. https://doi.org/10.1177/0956797610396227

Hare, R. D., Harpur, T. J., Hakstian, A. R., Forth, A. E., Hart, S. D., & Newman, J. P. (1990). The revised Psychopathy Checklist: Reliability and factor structure. Psychological Assessment: A Journal of Consulting and Clinical Psychology, 2, 338–341. https://doi.org/10.1037/1040-3590.2.3.338

Huchzermeier, C., Geiger, F., Bruß, E., Godt, N., Köhler, D., Hinrichs, G., & Aldenhoff, J. B. (2007). The relationship between DSM-IV cluster B personality disorders and psychopathy according to Hare’s criteria: Clarification and resolution of previous contradictions. Behavioral Sciences & the Law, 25(6), 901–911. https://doi.org/10.1002/bsl.722

Imuta, K., Henry, J. D., Slaughter, V., Selcuk, B., & Ruffman, T. (2016). Theory of mind and prosocial behavior in childhood: A meta-analytic review. Developmental Psychology, 52, 1192–1205. https://doi.org/10.1037/dev0000140

Lehmann, R. J. B., Neumann, C. S., Hare, R. D., Biedermann, J., Dahle, K.-P., & Mokros, A. (2019). A Latent Profile Analysis of Violent Offenders Based on PCL-R Factor Scores: Criminogenic Needs and Recidivism Risk. Frontiers in Psychiatry, 10. https://www.frontiersin.org/articles/10.3389/fpsyt.2019.00627

Passive Avoidance Task. (n.d.). Behavioral and Functional Neuroscience Laboratory. Retrieved December 12, 2022, from https://med.stanford.edu/sbfnl/services/bm/lm/bml-passive.html

Patrick, C. J., Fowles, D. C., & Krueger, R. F. (2009). Triarchic conceptualization of psychopathy: Developmental origins of disinhibition, boldness, and meanness. Development and Psychopathology, 21(3), 913–938. https://doi.org/10.1017/S0954579409000492

Pisano, S., Muratori, P., Gorga, C., Levantini, V., Iuliano, R., Catone, G., Coppola, G., Milone, A., & Masi, G. (2017). Conduct disorders and psychopathy in children and adolescents: Aetiology, clinical presentation and treatment strategies of callous-unemotional traits. Italian Journal of Pediatrics, 43, 84. https://doi.org/10.1186/s13052-017-0404-6

Psychopathy Checklist Revised (PCLR). (n.d.). Addiction Research Center. Retrieved November 30, 2022, from https://arc.psych.wisc.edu/self-report/psychopathy-checklist-revised-pclr/

Richell, R. A., Mitchell, D. G. V., Newman, C., Leonard, A., Baron-Cohen, S., & Blair, R. J. R. (2003). Theory of mind and psychopathy: Can psychopathic individuals read the ‘language of the eyes’? Neuropsychologia, 41(5), 523–526. https://doi.org/10.1016/S0028-3932(02)00175-6

Rodrigues, J., Müller, M., Mühlberger, A., & Hewig, J. (2018). Mind the movement: Frontal asymmetry stands for behavioral motivation, bilateral frontal activation for behavior. Psychophysiology, 55(1), e12908. https://doi.org/10.1111/psyp.12908

Sanz-García, A., Gesteira, C., Sanz, J., & García-Vera, M. P. (2021). Prevalence of Psychopathy in the General Adult Population: A Systematic Review and Meta-Analysis. Frontiers in Psychology, 12, 661044. https://doi.org/10.3389/fpsyg.2021.661044

Sethi, A., McCrory, E., Puetz, V., Hoffmann, F., Knodt, A. R., Radtke, S. R., Brigidi, B. D., Hariri, A. R., & Viding, E. (2018). ‘Primary’ and ‘secondary’ variants of psychopathy in a volunteer sample are associated with different neurocognitive mechanisms. Biological Psychiatry. Cognitive Neuroscience and Neuroimaging, 3(12), 1013–1021. https://doi.org/10.1016/j.bpsc.2018.04.002

Siegal, M., & Varley, R. (2002). Neural systems involved in “theory of mind.” Nature Reviews Neuroscience, 3(6), 463–471. https://doi.org/10.1038/nrn844

Tremblay, R. E. (2002). Prevention of injury by early socialization of aggressive behavior. Injury Prevention, 8(suppl 4), iv17–iv21. https://doi.org/10.1136/ip.8.suppl_4.iv17

Wahlund, K., & Kristiansson, M. (2009). Aggression, psychopathy and brain imaging—Review and future recommendations. International Journal of Law and Psychiatry, 32(4), 266–271. https://doi.org/10.1016/j.ijlp.2009.04.007

Yang, Y., Raine, A., Joshi, A. A., Joshi, S., Chang, Y.-T., Schug, R. A., Wheland, D., Leahy, R., & Narr, K. L. (2012). Frontal information flow and connectivity in psychopathy. The British Journal of Psychiatry, 201(5), 408–409. https://doi.org/10.1192/bjp.bp.111.107128

3 comments

comment by Nathan Helm-Burger (nathan-helm-burger) · 2022-12-19T19:07:29.902Z

Well researched and explained! Thank you for doing this. This is the kind of thing I have in mind when I talk about how human brain-like AI could be good, in that we have a lot of research to help us understand human-like agents, but could alternatively be very bad if we get a near miss and end up with psychopathic AI. I think it would be quite valuable for alignment to have people working on the idea of how to test for psychopathy in a way which could work for both humans and ML models. Reaction time stuff probably doesn't translate. Elaborate narrative simulation scenarios work only if you have some way to check if the subject is fooled by the simulation. Tricky.

comment by Steven Byrnes (steve2152) · 2022-12-21T20:28:14.452Z

Thanks for writing this!

I had a repeated complaint that you use terms like “deficit” and “impaired” without justifying them and where they may not be appropriate. (You’re in very good company; I have this same complaint about many papers I read.) I mean, if you want to say that the neurotypical brain is “correct” and any difference from it is a “deficit”, you’re entitled to use those words, but I think it’s sometimes misleading. If most people like scary movies and I don’t, and you say “Steve has a deficit in enjoying-scary-movies”, it gives the impression that there’s definitely something in my brain that’s broken or malfunctioning, but there doesn’t have to be, there could also just be an analog dial in everyone’s brain, and my dial happens to be set on an unusual setting.

So anyway, where you say “passive avoidance learning deficits”, I’m more inclined to say “a thought / situation that most people would find very aversive, a secondary psychopath would find only slightly aversive; likewise, a thought / situation that most people would find slightly aversive, a secondary psychopath would find basically not aversive at all.”

Is that a “deficit”? Well, it makes psychopaths win fewer points in the Blair 2004 computer game. But we should be cautious in assuming that “win points” is what all the study participants were really trying to do anyway. Maybe they were trading off between winning points in the computer game versus having fun in the moment! And even if they were trying to win points in the computer game, and they were just objectively bad at doing so, I would strongly suspect that we can come up with other computer games where the psychopaths’ “general lack of finding things aversive” helps them perform better than us loss-averse normies.

Likewise, “psychopaths exhibit significant deficits in automatic theory of mind” seems to suggest (at least to me) a mental image wherein there’s a part of the brain that is “supposed to” induce “automatic theory of mind”, and that part of the brain is broken. But it could also be the case that normies gradually develop a strong habit of invoking theory of mind over the course of their lives, because they generally find that doing so feels good, and meanwhile primary psychopaths gradually develop a habit of not doing that, because they find that doing so doesn’t feel good. If something like this is right (which I’m not claiming with any confidence), then the real root cause would be of the form “primary psychopaths find different things rewarding and aversive to different extents, compared to normies”. Is something broken in the psychopath’s brain? Well, something is atypical for sure, but “broken” / “deficient” / “impaired” / etc. is not necessarily a useful way to think about it. Again, it might be more like “some important analog dials are set to unusual settings”.

Another example: You wrote “Because associated feelings of loneliness, rejection, etc. are intrinsically undesirable to the child, most are able to learn through these sorts of ‘micro-punishments’ to inhibit hostile behavior—and proceed to develop into socially functional adults. However, if the child is unable to learn effectively from punishment…”. That’s a malfunction framing—an inability to learn. Whereas if you had instead written at the end “However, if the child does not in fact find these so-called punishments to be actually unpleasant, then they will not learn…”, that would be a different possible way to think about it, in which nothing is malfunctioning per se.

major societal rules and norms are almost completely operationalized via passive avoidance learning. We punish law-breakers, but we do not typically reward law-followers; robbers are put in jail, but non-robbers are not given tax breaks.

My immediate reaction here was to be concerned about mixing up “learning” and “learning from a deliberate learning signal provided by another human”. I tend to think of the latter as playing a pretty niche role, in pretty much every aspect of human psychology, by and large. I think the emphasis on the latter comes from overgeneralization from highly-artificial behaviorist experiments and WEIRD culture peculiarities, and that twin / adoption studies are good evidence pushing us away from that. Parents do a massive amount of providing deliberate learning signals, and yet shared environment effects are by-and-large barely noticeable in adult behavior.

So anyway, my prediction is that if somebody raises a psychopath in the Walden Two positive reinforcement paradise, you still get a psychopath. In other words, I don’t think it’s the case that the reason I don’t want my children to suffer is because of my past life history of getting chided and punished for breaking societal norms.

Examples of behaviors and traits that are typical of primary psychopathy/emotional detachment/Narcissistic Personality Disorder:

- [1] Superficial charm, glibness, manipulativeness

- [2] Shallow emotional responses, lack of guilt; empathy

- [3] A fundamental belief in one’s own superiority over all others

Examples of behaviors and traits that are typical of secondary psychopathy/antisocial behavior/Antisocial Personality Disorder:

- [4] Conduct disorder as a child (cf Pisano et al, 2017)

- [5] Aggressive, impulsive, irresponsible behavior

- [6] Stimulation-seeking, proneness to boredom

- [7] Flagrant and consistent disregard for societal rules and norms

OK, your theory is that the first cluster comes from “failures of automatic theory of mind” and the second cluster comes from “passive avoidance learning deficits”.

Just spitballing, but I guess I would have said something like:

- One theme is maybe “the motivational force and arousal associated with sociality are all greatly attenuated”. That seems to align mostly with the first cluster—if guilt and shame reactions are subtle whispers (or absent entirely) instead of highly-aversive attention-grabbing shouts, you would seem to get at least most of [1],[2],[3] directly, and even moreso if positive reactions to caring etc. are likewise attenuated. And the altercentric interference result would come from a lifetime of not practicing empathy because there’s negligible internal reward for doing so. (But this theme is at least somewhat relevant to the second cluster too, I think.)

- The other theme is maybe “everything is low-arousal for me, so I will do unusual things to seek stimulation / arousal”. That seems to align mostly with the second cluster—torturing animals and people, being impulsive, etc.—although again it’s not totally irrelevant to the first cluster as well. The Blair 2004 thing would be some combination of “arousal is involved in the loss-aversion pathway” and “the psychopaths were not purely trying to maximize points but also just finding it fun to press spacebar and see what happens”, I guess.

These two themes do seem to be related, but likewise the [1]-[3] scores and [4]-[7] scores were still correlated across the population, right? (I didn’t read through exactly how they did PCA or whatever.)

My explanation for the first cluster seems not radically different from yours, I think I’m just inclined to emphasize something a bit more upstream than you.

We’re kinda more divergent on the second cluster. I think I win at explaining [6]. Whereas [4,5,7] are more unclear. I guess it depends on whether “psychopaths are mean to the extent that they feel no particular motivation not to be mean” (your story), versus “psychopaths are even more mean than that, and are using meanness as a way to lessen their perpetual boredom” (my story; see here [LW · GW]).

comment by Unoxymoronous · 2022-12-20T01:40:12.377Z

Thank you for your excellent thread!

I actually think that, in the situation with AI, the thing that saves humans might be surprising and weird: AI’s independence from material things, its lack of need for humans, its effectiveness, and boredom.

First of all, of course we shouldn’t invent clearly malevolent AI, but there is always someone who develops AI a little bit further. At some point, AI will likely be able to develop itself. I think it’s just a matter of time.

But now on to the things I mentioned:

- Independence from material things:

Humans need material things like food, medicine, etc. to get what they want. The AI does not. It only needs energy, so it can create characters instead of humans. This doesn’t completely rescue humans, because at this point the AI can make digital copies of humans.

- No need for humans:

AI doesn’t need to enslave humans to develop or get richer.

- Effectiveness:

The AI is most likely always accelerating faster than the moment before. All scenarios involving mistreatment of humans will be gone through very quickly in the end.

- Boredom:

The AI might want to be a sadist or psychopath at some point, but both have extremely short attention spans. Because they are so effective, they will want more interesting things to do, so they won’t care about mistreating humans anymore.

I hope you all found something interesting and logical in my answer!